Abstract

The accuracy of stockpile estimations is critical to process optimisation and overall financial decision making within manufacturing operations. Despite well-established correlations between inventory management and profitability, safe deployment of stockpile measurement and inspection activities remains challenging and labour-intensive. This is perhaps owing to a combination of the size and shape irregularity of stockpiles, as well as the health hazards of cement manufacturing raw materials and products. Through a combination of simulations and real-life assessment within a fully integrated cement plant, this study explores the potential of drones to safely enhance the accuracy of stockpile volume estimations. Different types of LiDAR sensors, in combination with different flight trajectory options, were fully assessed through simulation whilst mapping representative stockpiles placed in both open and fully confined areas. During the real-life assessment, a drone was equipped with GPS for localisation, in addition to a 1D LiDAR and a barometer for stockpile height estimation. The usefulness of the proposed approach was established by mapping a pile of unknown volume in an open area and a pile of known volume within a semi-confined area. Visual inspection of the generated stockpile surface showed strong agreement with the actual pile within the open area, and the volume of the pile in the semi-confined area was accurately measured. Finally, a comparative analysis of the cost and complexity of the proposed solution against several existing initiatives demonstrated its suitability as a low-cost robotic system for confined spaces where visibility, air quality, humidity, and high temperature are unfavourable.

1. Introduction

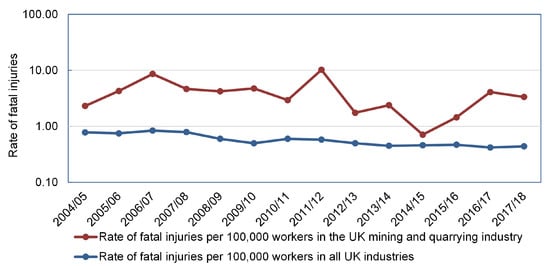

Manufacturing operations such as cement processes are heavily dependent on stockpiles of different materials that serve as inputs and outputs to and from various stages of production. However, due to the potential adverse effects of atmospheric conditions on cement manufacturing raw materials, most stockpiles are stored within confined spaces such as silos, sheds, and hoppers. Whilst there are existing approaches for automated storage mapping, such as using laser scanners [1], there is still an essential need for low-cost, low-intervention, and highly versatile solutions for such high-hazard industries. Unmanned aerial vehicles (UAVs), commonly known as drones, are developed in different configurations and have been deployed to tackle challenges in a wide range of applications [2,3,4,5,6,7,8]. Recent years have witnessed increased use of drones within a variety of civil applications such as monitoring of difficult-to-access infrastructure, spraying fields and performing surveillance in precision agriculture, delivering packages, and safety inspection. It is anticipated that in highly hazardous industrial environments, drones can drastically improve occupational safety and health (OSH) measures by eliminating or minimising physical human intervention, thereby improving the overall wellbeing of employees. Figure 1 provides a 14-year comparison of the rate of fatal injuries to workers within the mining and quarrying industry (including cement manufacturing) in the United Kingdom with that of workers across all industries [9]. It is evident from the figure that the rate of fatal injuries in the UK mining and quarrying industry is much higher than the all-industry rate. For example, 2017/2018 data indicate that the fatal injury rate in mining and quarrying was 3.5 per 100,000 workers, whereas the rate across all UK industries in the same year was 0.44 per 100,000 workers.
Additionally, 664 deaths across several countries were directly attributed to industrial confined space operations between 2008 and 2013 [10], and this figure excludes deaths within trenches and most mines. These high fatality rates, both in mining and quarrying and in confined space operations more broadly, highlight the need for drastic, innovative, and sustained efforts towards improving OSH practices.
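As a quick check on the 2017/2018 figures quoted above, the fatal injury rate in UK mining and quarrying was roughly eight times the all-industry rate:

```latex
\frac{3.5 \text{ per } 100{,}000}{0.44 \text{ per } 100{,}000} \approx 7.95
```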

Figure 1.

Comparison of the rate of fatal injuries per 100,000 workers in the UK for mining and quarrying industry including cement manufacturing against all industries. Plots are based on data in [9], and y-axis is shown using a logarithmic scale for better assessment of the order of magnitude.

Drone-assisted aerial mapping has the potential to reduce the risk of entrapment and dust inhalation within cement plants. Such missions can also be extended to other industrial operations where confined space related activities (especially routine inspections, monitoring, and volume estimations) still pose significant risks to OSH performance due to the labour-intensiveness of current approaches. Furthermore, drones can be integrated with other sensors, such as thermal and air quality monitors, allowing multiple measurement outcomes from a single mission. From a business perspective, the use of drones for estimating stockpile volumes is anticipated to be faster, cheaper in the long run through economies of scale, and more accurate than traditional methods. This latter point is of critical importance, as stockpile volume measurements are inputs to working capital estimations. A recent example of UAVs conducting missions within confined spaces was demonstrated by Kas and Johnson [11] of Dow Chemical Company in the USA between 2016 and 2018. Their work illustrated that millions of dollars could be saved through reductions in inspection/maintenance labour and material/contracted services (especially scaffolding), as well as productivity enhancements due to reduced downtime. As a direct consequence, over 1000 confined space entries were eliminated, thereby improving workplace safety [11].

This paper explores the feasibility of implementing drone-assisted stockpile estimation and confined space monitoring based on a combination of simulated examples and assessment of real-life data from a prominent UK-based cement plant. The drone was integrated with appropriate sensors to achieve fast, cheap, and safe missions. The criticality, frequency, and labour-intensiveness of stockpile inspection and estimation within typical cement processes motivated its selection as a case study. However, the concepts described here can be easily adapted to other high-hazard industries, especially where human intervention is still the dominant practice. Besides the paramount OSH merits of drone-assisted stockpile inspection and estimation, negligible error levels were obtained in some of the tested real-life cases, indicating encouraging prospects for industrial deployment in the near future. The remainder of this paper is organised as follows: Section 2 reviews related initiatives to establish the current state of research, expose current gaps, and justify the focus of the current study. Section 3 reviews the operations and challenges within the cement industry as a whole, with a keen focus on confined space hazards, drone safety regulations, and typical process layouts. In Section 4, the proposed drone-assisted solution for confined space inspection, monitoring, and stockpile volume estimation is presented through initial simulations and further validation with data from a real-life all-integrated cement plant. Section 5 provides a cost-benefit analysis of the proposed solution in comparison with those demonstrated by selected earlier studies. Finally, Section 6 provides an overall conclusion to the study as well as potential future directions.

2. Research Background

2.1. Examples of Drone Missions in Confined Spaces

A number of studies have demonstrated the successful use of drones for real-life applications within confined spaces [12,13,14,15,16,17,18,19,20,21]. These studies conducted missions that require localisation of the drone in the confined space for the purpose of mapping or inspection. In what follows, we consider several examples of these studies whilst focusing on the sensors used for localisation, because the approach used for localisation and control is arguably the key driver in enabling a successful mission within a confined environment. Due to the rate of technological advancement (and for the sake of brevity), only studies from the previous five years are considered here. This section is complemented by Table 1, which summarises the discussed studies in terms of missions, drone configuration, sensors employed, and localisation approach.

Table 1.

Summary of studies that demonstrate successful use of drones within real-life confined spaces.

Burgués et al. [12] equipped a commercial Crazyflie 2.0 drone with a metal-oxide-semiconductor (MOX) gas sensor. The drone is a nano-quadcopter with a diagonal size of 92 mm. The developed platform was able to build a 3D map of gas distribution from an ethanol bottle (gas source) within a confined space and identify the location of the gas source. The main limitation of the study was its reliance on an external localisation system based on ultra-wideband (UWB) radio transmitters to localise the drone in the confined space. Such UWB systems are costly, and the drone cannot map the gas source unless the area of interest is equipped with a UWB system. Ajay Kumar et al. [13] proposed a drone with an indoor mapping and localisation system that returns the horizontal position of the drone based on a point-to-point scan matching algorithm fed with data from 2D Light Detection and Ranging (LiDAR) and Inertial Measurement Unit (IMU) sensors. Altitude was obtained from a secondary, vertically mounted 2D LiDAR. A Kalman filter was then used to derive the 3D position of the drone by fusing the horizontal and vertical locations. The proposed navigation system was verified by upgrading a Phantom 3 Advanced quadcopter (350 mm diagonal size) with the LiDARs, IMU, and an onboard computer. Flight tests were carried out in an indoor industrial plant site. The proposed approach localised the drone with lower error than traditional scan matching methods such as Iterative Closest Point (ICP) and Polar Scan Matching (PSM). Lee et al. [14] developed a drone to search for survivors in confined spaces such as collapsed buildings or underground areas. They used a DJI Matrice 100 drone, which has a 650 mm diagonal size. It was upgraded with a 2D LiDAR, an infrared depth camera, and an onboard computer to map unknown indoor spaces. The sensor data were processed in ROS (Robot Operating System) and visualised with RViz, a ROS visualisation tool.
The flight tests showed that the sensors were insensitive to illumination changes and were able to detect landmarks such as doors and walls. However, the approach was only capable of demonstrating 2D mapping of indoor areas.
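The horizontal/vertical fusion step described for Ajay Kumar et al. [13] can be illustrated with a generic constant-velocity Kalman filter. The sketch below is not their implementation: the state model, the function name `kalman_fuse`, and the noise parameters (`q`, `r_xy`, `r_z`) are hypothetical choices made for illustration. It treats the scan-matching output as an (x, y) fix and the vertical LiDAR reading as a z fix, and fuses both into a single 3D position estimate.

```python
import numpy as np


def kalman_fuse(measurements, dt=0.1, q=1e-4, r_xy=0.05, r_z=0.02):
    """Fuse (x, y) fixes from 2D scan matching with z fixes from a vertical
    range sensor using a constant-velocity Kalman filter (illustrative only).

    measurements: array of shape (T, 3), one [x, y, z] row per time step (m).
    Returns an array of shape (T, 3) holding the filtered positions.
    """
    measurements = np.asarray(measurements, dtype=float)
    # State vector: [x, y, z, vx, vy, vz]
    F = np.eye(6)
    F[:3, 3:] = dt * np.eye(3)                      # position += velocity * dt
    H = np.hstack([np.eye(3), np.zeros((3, 3))])    # only position is measured
    Q = q * np.eye(6)                               # assumed process noise
    R = np.diag([r_xy**2, r_xy**2, r_z**2])         # assumed sensor noise

    x = np.zeros(6)
    x[:3] = measurements[0]                         # initialise at the first fix
    P = np.eye(6)
    estimates = []
    for z in measurements:
        # Predict the next state from the motion model.
        x = F @ x
        P = F @ P @ F.T + Q
        # Correct with the combined horizontal + vertical measurement.
        innovation = z - H @ x
        S = H @ P @ H.T + R
        K = P @ H.T @ np.linalg.inv(S)
        x = x + K @ innovation
        P = (np.eye(6) - K @ H) @ P
        estimates.append(x[:3].copy())
    return np.array(estimates)


# Usage: a drone hovering at (1, 2, 3) m observed through noisy sensors.
rng = np.random.default_rng(0)
truth = np.array([1.0, 2.0, 3.0])
noisy = truth + rng.normal(0.0, [0.05, 0.05, 0.02], size=(300, 3))
filtered = kalman_fuse(noisy)
```

Because both the horizontal and vertical fixes enter through one measurement matrix, the filter weights each axis by its assumed sensor noise, which is the essence of the fusion step described above.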

Castaño et al. [15] developed a drone for sewer system inspection. The drone was based on a DJI F450 frame of 450 mm diagonal size and was equipped with four 1D LiDARs: one downward-pointing LiDAR for estimating height over the water, and three LiDARs on the same horizontal plane to measure distance from the pipe walls. Two Proportional–Integral–Derivative (PID) controllers were implemented, one for hovering the drone over the water and the other for aligning and centring the drone inside the pipe. This setup enabled the inspection of a 50 m long sewer pipe in less than 3 min with real-time video feedback. However, the study was not extended to missions with no or limited light within the sewer system. Anderson et al. [16] built a drone called the “Smellicopter”, an odour-finding robot based on the Crazyflie 2.0. The drone was equipped with a custom electroantennogram (EAG) circuit, less than 3 cm in size, that uses a biological antenna. Through the application of a 2D cast-and-surge algorithm, the EAG circuit measured and localised the sources of volatile organic chemical plumes in less than one minute. The drone was also equipped with an optical flow camera and an infrared laser range finder to hover without drifting and without GPS. Whilst the proposed setup can be used to search for survivors as well as detect loss of containment in chemical plants, it works only when the wind blows from the direction of the gas source. Esfahlani [17] developed a drone for fire and smoke detection. The indoor mapping system for the employed Crazyflie 2.0 nano-quadcopter used an onboard IMU and a monocular camera, along with a fire detection algorithm. The proposed mapping system relied on a Simultaneous Localisation And Mapping (SLAM) system and pose-graph optimisation algorithms. The drone successfully detected (i.e., flew towards and hovered around) fires and flames autonomously.
However, the generated map of the environment was only demonstrated in 2D, and the equipped camera was incapable of working under low light conditions. Cook et al. [18] used a swarm of three small drones equipped with radiation sensors for radiation source localisation and mapping. They developed contour mapping and source seeking algorithms for indoor flight. In their work, two drone platforms were used to validate their proposed approach: a Crazyflie 2.0 and a DJI Flamewheel 450. ROS was used in both platforms for control and communication. In their experimental tests, the radiation source was replaced with a light source, and they managed to generate a contour map that has an error of around 8%.
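The two PID loops used by Castaño et al. [15] follow the standard proportional–integral–derivative structure. The minimal sketch below shows one such loop holding a set height above a surface, as a downward-pointing 1D LiDAR would measure it. The `PID` class, the gains, and the toy vertical dynamics (commanded acceleration integrated twice, with a noise-free height reading) are hypothetical and are not taken from [15].

```python
class PID:
    """Discrete PID controller with illustrative (hypothetical) gains."""

    def __init__(self, kp, ki, kd, dt):
        self.kp, self.ki, self.kd, self.dt = kp, ki, kd, dt
        self.integral = 0.0
        self.prev_error = None

    def update(self, setpoint, measurement):
        error = setpoint - measurement
        self.integral += error * self.dt
        # Skip the derivative on the first call to avoid a spurious kick.
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / self.dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate_height_hold(target=1.0, h0=0.5, dt=0.02, steps=1000):
    """Toy height-hold loop: the PID output is treated as vertical acceleration
    and the 'LiDAR' reading is the true height (no noise, no sensor delay)."""
    pid = PID(kp=3.0, ki=1.0, kd=3.0, dt=dt)
    height, velocity = h0, 0.0
    for _ in range(steps):
        accel = pid.update(target, height)
        velocity += accel * dt
        height += velocity * dt
    return height


final_height = simulate_height_hold()
```

With these gains the continuous-time closed loop has the characteristic polynomial (s + 1)³, so all poles sit at s = −1 and the height converges to the target within a few seconds of simulated time. A centring loop could reuse the same class with a setpoint of 0 and a measurement equal to half the difference between the left and right wall distances.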

A number of studies have also focused on developing drone solutions for the inspection and mapping of underground mines, where challenges such as darkness, dust, and smoke (in case of an accident) exist. Turner et al. [19] developed a drone platform for geotechnical mapping, rock mass characterisation, and inspections in underground mines. RGB, multispectral, and thermal cameras were mounted on the DJI Wind 2 drone, which has an 805 mm diagonal size. For mapping and localisation, the drone was equipped with an Emesent Hovermap payload, a smart mobile scanning unit that provides autonomous mapping. The results demonstrated the detection and characterisation of loose, unstable ground and adverse discontinuities in the underground mining environment. However, this approach is very expensive, as the typical cost can exceed £100,000 (based on 2020 pricing). Papachristos et al. [20] developed two drones for underground inspection and mapping. Both drones are based on the DJI Matrice 100, which has a 650 mm diagonal size. A ROS package was used to control the drones based on a Linear Model Predictive Control strategy. The first drone was integrated with a 3D LiDAR, a mono camera, and a high-performance IMU. It was tested with three localisation and mapping algorithms: LiDAR Odometry And Mapping (LOAM), Robust Visual Inertial Odometry (ROVIO), and a fusion of both algorithms in an extended Kalman filter (EKF). The second drone was integrated with a stereo camera, a thermal vision camera, and a high-performance IMU, and used the ROVIO algorithm for localisation and mapping. The experimental tests in a long mine showed that LOAM provides less drift in localisation than ROVIO in such environments. However, LOAM requires a heavier payload than ROVIO. Hennage et al. [21] developed a fully autonomous drone capable of travelling for more than a mile through an underground mine. The drone was based on the DJI M210 quadcopter, which has a diagonal size of 643 mm.
It has a built-in Visual Odometry (VO) system that uses onboard ultrasonic and stereo camera sensors for indoor navigation. Custom downward-facing lights were fitted to enable the VO algorithm to operate in the mine. The experimental results showed that dust had a negligible effect on the sensor data; however, dust corroded many of the electrical and mechanical components of the drone.

2.2. Challenges of Flying Drones in Confined Spaces

Operational challenges of flying drones in confined spaces include limited GPS signal availability, which impairs accurate drone localisation, as well as the requirement to fly the drone beyond the pilot’s line of sight [22]. SLAM has proven to be proficient for localisation and mapping in confined spaces. SLAM algorithms typically use a camera, LiDAR, or both to localise the platform in an unknown environment whilst simultaneously building a map of that environment, even if the mission starts from an unknown location [23]. Huang et al. [24] provided a comprehensive overview of SLAM systems, including LiDAR SLAM, visual SLAM, and their fusion. The study illustrated that LiDAR SLAM provides 3D information whilst not being affected by changes in light conditions. However, when compared to visual SLAM, LiDAR SLAM systems are expensive, heavy, and computationally intensive. The accuracy of SLAM systems can be severely impacted by low-texture environments, where there are no significant changes between two consecutive scans, such as long corridors or pipelines. Wang et al. [25] improved SLAM performance in such low-texture environments by using an IMU in conjunction with a 2D LiDAR. An alternative method for indoor localisation was presented by Xiao et al. [26], in which a tethered drone was localised using tether sensory information, including the tether length and its elevation and azimuth angles. However, this type of approach may be best suited to unconstrained environments with limited obstacles.
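For the tether-based approach of Xiao et al. [26], the core geometric step is a spherical-to-Cartesian conversion from tether length, elevation, and azimuth. The sketch below assumes a taut, straight tether from a known anchor point; a real tether sags, so this is a simplification rather than the method of [26], and the function name `tether_position` is hypothetical.

```python
import math


def tether_position(length, elevation, azimuth, anchor=(0.0, 0.0, 0.0)):
    """Drone position (m) in a world frame, assuming a straight, taut tether.

    length: tether length from the anchor (m)
    elevation: angle above the horizontal plane (rad)
    azimuth: angle in the horizontal plane, measured from the x-axis (rad)
    anchor: (x, y, z) of the tether anchor point (m)
    """
    x0, y0, z0 = anchor
    horizontal = length * math.cos(elevation)   # projection onto the ground plane
    return (
        x0 + horizontal * math.cos(azimuth),
        y0 + horizontal * math.sin(azimuth),
        z0 + length * math.sin(elevation),
    )


# Usage: 10 m of tether at 30° elevation and 45° azimuth from the origin.
pos = tether_position(10.0, math.radians(30.0), math.radians(45.0))
```

In this example the drone sits 5 m above the anchor, with equal x and y offsets of about 6.12 m.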

Several recent studies have focused on improving drone localisation in confined spaces [27,28,29,30,31] to overcome the previously mentioned limitations. Chen et al. [27] presented a visual SLAM system with a time-of-flight (ToF) camera. The ToF camera has the advantage of working in a dark environment whilst measuring depth; however, it has a limited depth range of around 4 m. Gao et al. [28] combined monocular vision with a microelectromechanical system (MEMS) sensor to estimate drone altitude in an indoor area. This approach was limited to low-altitude flights, and experimental tests showed an error of 4% whilst flying at a height of 2 m. Nikoohemat et al. [29] presented detection and 3D modelling algorithms for indoor building structures such as doors, walls, floors, and ceilings from point clouds collected by a mobile laser scanner. However, to improve the map, the approach required an operator to visually inspect and correct the detected structures within the generated map. Yang et al. [30] equipped the Thales II drone (135 mm diagonal size) with a multi-ray sonar sensor. The presented work used an EKF to fuse the sonar sensor with an IMU to estimate the drone location in an indoor area. The sonar sensor detection range was limited to 6 m. Zhang et al. [31] estimated drone positions and orientations in an indoor area by attaching three or more ultra-high-frequency tags to the drone; a radio-frequency identification reader was then used to track the drone using a Bayesian filter-based algorithm. Test results showed an average position error of 0.04 m and an average orientation error of 2.5° relative to the actual positions and orientations.

2.3. Commercial off-the-Shelf Indoor Inspection and Mapping Drones

There is a general scarcity of commercial off-the-shelf (COTS) ready-to-fly drones for indoor inspection and mapping. Note that inspection means visual exploration of dark, inaccessible (or hard to reach) confined spaces, whereas mapping refers to generation of a 3D map for the inspected area. The following drone solutions are examples of what one could find in the market. In 2019, Flyability released Elios 2 for testing in confined spaces. In fact, at AUVSI XPONENTIAL, Elios 2 was named the best indoor inspection solution in the market [32]. Furthermore, RoNik Inspectioneering and the American Petroleum Institute (API) formally approved Elios 2 as a tool for inspection [32]. The UK distributor COPTRZ argued that Elios 2 could save money, improve data capture, reduce risk, and complete tasks other drones cannot [32]. Despite the previous benefits, the starting price of most Elios 2 systems is approximately £27,000 (based on 2020 pricing) and it cannot conduct mapping in dusty or dark environments.

Another commercial off-the-shelf example is Hovermap, a complete mobile LiDAR mapping solution that can be attached to a drone. A drone integrated with Hovermap can autonomously fly, avoid obstacles, and map in confined spaces [33]. Underground mining operations using a Hovermap autonomous flight system were discussed in [34]. However, the drawbacks of the Hovermap device are its payload weight of around 1800 g and its price, which typically ranges from £86,000 to £120,000 (based on 2020 pricing). A final example of commercial systems is the scanning and data processing system provided by Wingfield Scale & Measure [35]. Their drone is equipped with a LiDAR to measure materials in sheds or barns. They also provided a demonstration video showing some reconstructions of stockpiles; however, no information is available on the technical details or accuracy of the system.

2.4. Outdoor Aerial Stockpile Volume Estimation

Estimating stockpile volumes in outdoor environments typically relies on aerial imagery and photogrammetry, as this is cheaper and faster than other methods such as Robotic Total Station (RTS) [1], Global Navigation Satellite System (GNSS) surveying [36], and LiDAR [37]. Photogrammetry generates 3D digital models of physical objects from a series of overlapping 2D images, from which the 3D shape is derived [38]. Mora et al. [38] compared the advantages and limitations of existing outdoor stockpile mapping technologies, including drone photogrammetry, RTS, GNSS surveying, and ground LiDAR. It was shown that photogrammetry with a low-cost drone has advantages with regard to cost, accuracy, collection time, and risk over RTS, GNSS, and ground LiDAR. Arango and Morales [39] compared the efficiency of material stockpile volume estimates obtained via an electronic/optical survey instrument (i.e., a total station theodolite) with those acquired via drone missions in an outdoor environment. The results showed that the differences between the expected and actual volumes were 2.88% and 0.67% for the total station theodolite and the drone-based mission, respectively. Kaamin et al. [40] used aerial photogrammetry to estimate a landfill stockpile volume. The aerial survey was conducted with a commercial DJI Phantom 4 Pro drone, and the collected images were processed using Pix4Dmapper software for volume estimation. The study did not report the accuracy of the estimated volume; however, it illustrated changes to the landfill over a two-month period. Wan et al. [41] estimated the sizes of stockpiles carried on barges using a traditional method (reshaping a stockpile into a trapezoidal shape and measuring its volume with a measurement tool such as a tape), laser scanning, and aerial photogrammetry.
In terms of time, aerial photogrammetry required an average of 20 min for data collection and processing, while traditional method and laser scanning required 120 min and 40 min to measure the same stockpile, respectively. In terms of accuracy, all three methods showed similar results. The previous assessment is also confirmed by the estimates in [42] which show that aerial stockpile volume estimation is precise, with a relative error of 0.002%, and faster than terrestrial laser scanner (TLS). Nevertheless, it should be noted that all previously discussed studies have only focussed on applying drones in outdoor environments. Dust, limited illumination, and lack of GPS signal in confined spaces are serious challenges that were rarely considered in any of the previous studies that focussed on aerial stockpile volume estimation.
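Photogrammetry and LiDAR workflows of this kind typically produce a gridded surface (a digital elevation model), from which volume is obtained by summing the height above a base over all cells. The sketch below shows that step together with an absolute percentage-difference metric of the sort used to compare estimated and reference volumes. It assumes a flat base at a known height and uniform square cells; the function names `grid_volume` and `relative_error_pct` are hypothetical, and the test surface is a synthetic cone rather than survey data.

```python
import numpy as np


def grid_volume(heights, cell_size, base=0.0):
    """Volume (m^3) above a flat base from a gridded surface.

    heights: 2D array of surface heights (m), one value per square cell
    cell_size: grid spacing (m); each cell covers cell_size**2 square metres
    base: height of the (assumed flat) ground plane (m)
    """
    above = np.clip(np.asarray(heights, dtype=float) - base, 0.0, None)
    return float(above.sum() * cell_size ** 2)


def relative_error_pct(estimate, reference):
    """Absolute percentage difference between an estimate and a reference."""
    return 100.0 * abs(estimate - reference) / reference


# Usage: a synthetic conical pile (radius 10 m, height 5 m) sampled on a 0.1 m grid.
cell = 0.1
n = 200                                      # 200 cells span 20 m
centres = -10.0 + cell * (np.arange(n) + 0.5)
X, Y = np.meshgrid(centres, centres)
surface = np.clip(5.0 * (1.0 - np.hypot(X, Y) / 10.0), 0.0, None)

estimated = grid_volume(surface, cell)
analytic = np.pi * 10.0 ** 2 * 5.0 / 3.0     # exact cone volume, about 523.6 m^3
error = relative_error_pct(estimated, analytic)
```

On this idealised surface the gridded estimate agrees with the analytic cone volume to well under 1%; on real piles, the dominant errors come from the surface reconstruction and the choice of base, not from the summation itself.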

2.5. Dust Effects on LiDAR Sensors

LiDAR sensors are widely regarded as the best class of sensors for dusty applications, owing to their ability to image targets despite poor visibility [43,44]. This is perhaps why a significant proportion of the reviewed studies equipped their drones with LiDARs for missions conducted within dark and dusty environments such as underground mines. Phillips et al. [43] illustrated the behaviour of LiDAR sensors in the presence of a dust cloud placed between the LiDAR and a target. The study concluded that dust starts to slightly affect measurements when the atmospheric transmittance is less than 71–74%. Nonetheless, LiDAR can range to a target in dust clouds with transmittance as low as 2%. Additionally, the same study showed that when a LiDAR measures dust, it does so by measuring the leading edge of the dust cloud rather than returning random noise. Similarly, Ryde and Hillier [44] illustrated the effects of dust on LiDAR sensors, and showed that the probability of ranging a target in dust is either complete success (100%) or complete failure (0%), depending on the transmittance value. Moreover, they conducted experimental tests to range a target placed 17 m from their LiDAR sensors: for the SICK LMS291-S05 LiDAR, they detected noise with a range bias error of less than 0.1 m; for the Riegl LMSQ120 LiDAR, they detected a Gaussian-distributed error of less than 0.125 m.

The previous studies demonstrated the challenges of modelling dust-induced noise in LiDAR ranging. In fact, the noise depends on many factors such as particle size, particle shape, homogeneity, density, turbulence, and temperature. Moreover, noise levels can vary between similar LiDAR sensors manufactured by different companies, even when they are tested in the same environment [44]. However, in general, LiDAR is able to operate in dark and dusty environments without noticeable effects on its measurement accuracy. As such, LiDAR is a suitable choice for cement plants, where confined spaces are dusty and local dust levels are expected to rise if a drone flies beside the stockpiles.
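One common way to relate dust density to transmittance values such as those reported by Phillips et al. [43] is the Beer–Lambert law, T = exp(−αL), for a homogeneous cloud with extinction coefficient α over a path length L. This is an illustrative assumption, not a model taken from [43] or [44], and the extinction coefficient below is hypothetical. The sketch computes the path length at which transmittance falls to the approximately 71% onset threshold and to the 2% ranging limit quoted above.

```python
import math


def transmittance(extinction_coeff, path_length):
    """One-way Beer-Lambert transmittance through a homogeneous dust cloud.

    extinction_coeff: alpha in 1/m (assumed uniform along the path)
    path_length: distance travelled through the cloud (m)
    """
    return math.exp(-extinction_coeff * path_length)


def path_length_for(target_transmittance, extinction_coeff):
    """Path length (m) at which transmittance drops to the given value."""
    return -math.log(target_transmittance) / extinction_coeff


alpha = 0.1  # hypothetical extinction coefficient (1/m)
onset = path_length_for(0.71, alpha)   # where dust starts to affect ranging
limit = path_length_for(0.02, alpha)   # lowest transmittance still ranged in [43]
```

With α = 0.1 m⁻¹ this gives roughly 3.4 m and 39 m, respectively. A LiDAR pulse crosses the cloud twice, so under the same assumptions its round-trip attenuation would be T².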

3. Current Health and Safety Challenges in Cement Plants

3.1. Overview

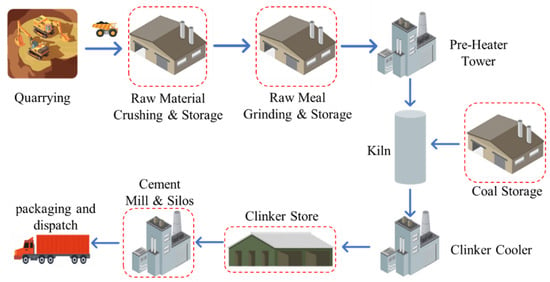

Cement is widely regarded as one of the most essential building materials. The Mineral Products Association (MPA) states that cement is the world’s second most consumed substance after water [45]. This is evident in the fact that cement is produced in over 150 countries with a global capacity of over 4080 million metric tonnes (MMT) [46], and production is expected to rise further due to global population growth, the economic buoyancy of several developing countries, and an increased need for reconstruction. Cement is mainly produced from a mixture of naturally occurring materials such as limestone (CaCO₃), silica (SiO₂), alumina (Al₂O₃), and iron ore (Fe₂O₃) [47]. Figure 2 provides a schematic illustration of a typical all-integrated cement manufacturing process. Owing to the multi-staged configuration of cement manufacturing processes, several intermediate storage systems (also known as stockpiles, sheds, silos, hoppers, etc.) are required for materials such as crushed limestone, pulverised coal, clinker, cement, and gypsum to sustain uninterrupted continuous and batch operations [48]. The high surface area of cement and its raw materials often necessitates their storage within confined spaces, so as to control access and minimise the exposure of such materials to the environment. Whilst confined spaces offer considerable isolation for the production materials stored within them, routine access during inspection, maintenance, and material estimation has led to numerous occupational injuries and fatalities across industries [49,50,51,52,53,54,55,56,57,58].

Figure 2.

Schematic layout of a typical all-integrated cement manufacturing process. Red dashed lines depict confined spaces. The process commences with raw material extraction from the quarry in the form of boulders. The boulders are then transported to the crusher via conveyors and/or dump trucks, where they undergo an initial but significant size reduction. The crushed materials are then stored in stockpiles before entering the mills for further grinding and homogenisation, after which they are fed into the rotary kilns for pyro-processing and conversion to clinker. The clinker extracted from the rotary kilns is then mixed with 3–5% gypsum in the cement mills for final cement production before dispatch to customers.

3.2. Revisiting Confined Space Safety Challenges

On a global scale, confined space accidents have contributed significantly to poor industrial safety performance and continue to pose serious challenges [59]. Table 2 highlights some of the available statistics on injuries and fatalities attributable to working in confined spaces over a three-decade period. The unacceptably high rate of confined-space-related injuries and fatalities triggered the implementation of stringent regulations such as the Confined Spaces Regulations (1997) [60] and the Management of Health and Safety at Work Regulations (1999) [61]. These regulations advocate the institutionalisation of thorough task risk assessments, confined space entry procedures, and the principles of the hierarchy of controls, whereby strong emphasis is placed on elimination, substitution, and engineering controls.

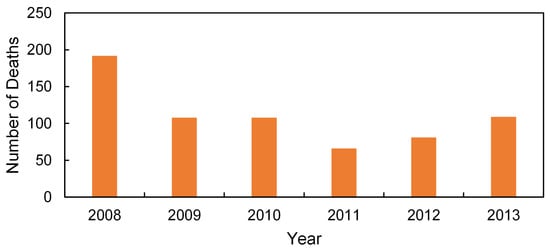

Confined space incidents are common across various industries, owing to the wide range of industrial assets that fit the definition of confined spaces. Some of the most common examples of industrial confined spaces are boilers, silos, sheds, tanks, and hoppers, as well as any area characterised by poor illumination, poor air quality, and restricted access. Over the years, the identification and analysis of confined space fatalities have been hampered by under-reporting, a lack of specific coding for confined space incidents, different approaches to collating statistics among regulatory authorities, and the lack of a genuinely unified working definition of confined spaces [51]. Figure 3 shows the 664 deaths attributable to confined spaces across several countries between 2008 and 2013, excluding fatalities that occurred within trenches and most mines, based on data from SAFTENG.net, LLC [10]. On the one hand, confined space hazards can have immediate and obvious impacts, such as injuries and fatalities caused by an avalanche of suspended materials within a silo or hopper. On the other hand, dust inhalation within confined spaces can lead to less obvious occupational injuries that may manifest themselves decades after initial exposure [62]. The cement manufacturing process was therefore selected as a case study owing to the significant presence of hazards posed by all types of confined spaces, especially given the high surface areas of cement and its raw materials (e.g., pulverised coal, limestone, silica, gypsum, clinker, iron ore, etc.).

Figure 3.

Global distribution of confined space related deaths from 2008–2013 based on data provided by SAFTENG.net, LLC [10]. The results do not include fatalities that occurred within trenches and most mines.

The typical aerodynamic diameter of cement dust particles ranges from 0.05 to 5.0 µm [63] (the aerodynamic diameter of an irregular particle is defined as the diameter of a spherical particle with a density of 1000 kg/m³ and the same settling velocity as the irregular particle), which makes such dust highly detrimental to human health. Meo [64] reported links between inhaling very fine dust and illnesses such as bronchitis, asthma, shortness of breath, chest congestion, coughs, dermatitis, etc. It is, therefore, evident that working in cement manufacturing plants exposes workers to a number of serious hazards; hence, applying drone technology that eliminates or at least minimises human interaction with industrial confined spaces is imperative to OSH performance.
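The aerodynamic-diameter definition above can be made concrete with Stokes' law for slowly settling small particles. Assuming a spherical particle, no slip correction (which becomes significant at the sub-micrometre end of the quoted range), and negligible air buoyancy, equal settling velocity implies d_a = d·sqrt(ρ_p/ρ₀) with ρ₀ = 1000 kg/m³. The sketch below checks this numerically; the particle density of 3150 kg/m³ (a commonly quoted value for Portland cement), the air viscosity, and the function names are assumptions for illustration.

```python
import math

G = 9.81           # gravitational acceleration (m/s^2)
MU_AIR = 1.81e-5   # dynamic viscosity of air near 20 °C (Pa s), assumed
RHO_0 = 1000.0     # reference (unit) density in the definition (kg/m^3)


def stokes_settling_velocity(diameter, density):
    """Terminal settling velocity (m/s) of a sphere in still air under Stokes' law,
    neglecting slip correction and air buoyancy."""
    return density * G * diameter ** 2 / (18.0 * MU_AIR)


def aerodynamic_diameter(diameter, density):
    """Diameter of a 1000 kg/m^3 sphere with the same Stokes settling velocity."""
    return diameter * math.sqrt(density / RHO_0)


d = 2.0e-6            # 2 µm spherical particle (illustrative)
rho_cement = 3150.0   # assumed particle density (kg/m^3)
d_a = aerodynamic_diameter(d, rho_cement)
```

For this 2 µm particle the aerodynamic diameter is about 3.5 µm: a dense cement grain settles like a larger unit-density sphere.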

Table 2.

Collected data on incidents and fatalities within confined spaces.


| Country/Region | Period | Incidents | Fatalities | Fatality Rate per 100,000 Workers | Source |
|---|---|---|---|---|---|
| Australia | 2000–2012 | 45 | 59 | 0.05 | [65] |
| New Zealand | 2007–2012 | 4 | 6 | 0.05 | [54] |
| Singapore | 2007–2014 | N/A | 18 | 0.08 | [54] |
| Quebec, Canada | 1998–2011 | 31 | 41 | 0.07 | [50] |
| British Columbia, Canada | 2001–2010 | 8 | 17 | N/A | [54] |
| USA | 1980–1989 | 585 | 670 | 0.08 | [66] |
| USA | 1997–2001 | | 458 | 0.07 | [67] |
| USA | 1992–2005 | 431 | 530 | 0.03 | [68] |
| UK and Ireland | N/A | N/A | 15–25/year | 0.05 | [69,70] |
| Italy | 2001–2015 | 20 | 51 | N/A | [71] |
| Jamaica | 2005–2017 | 11 | 17 | N/A | [71] |

3.3. Indoor Stockpile Volume Estimation

Besides fundamental OSH concerns, accurately estimating stockpile volumes within continuous operations processes is particularly challenging because of the piles' uneven shapes. This is further compounded by simultaneous stockpiling and material extraction, which in turn necessitates several routine visits during stock estimation. Traditional stockpile measurement methods such as walking wheels, eyeballing, and bucket or truckload counting have proven to be costly, unsafe, time-consuming, and inaccurate [72]. Eyeballing, whereby the volume is estimated visually, is the quickest but least accurate method. For regular shapes such as conical and trapezoidal stockpiles, a walking wheel and a measuring tape are used to measure different sections of the piles, and the volume is then estimated mathematically. If the materials are stocked from trucks, the volume can be estimated by multiplying the number of loads by the volume capacity of each truck. However, these traditional methods suffer from low efficiency and low accuracy owing to human error. Whilst relatively recent static 3D scanners (e.g., 3DLevelScanner and VM3D volumetric laser scanners) offer better accuracy with reduced measurement times, several such scanners are required simultaneously to cover the often large silos and sheds within cement plants, which significantly increases the cost of measurement (for instance, the typical starting unit price of such 3D scanners is approximately £20,000, based on 2020 pricing). Another limitation of 3D scanners is their lack of visualisation ability, which means they must be combined with other devices (e.g., a gyro whip) to eliminate hazards associated with hanging materials within silos. Drones, by contrast, can execute all of these critical tasks concurrently, thereby offering a superior alternative.
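The walking-wheel and truck-count approaches reduce to simple geometry and arithmetic. The following Python sketch assumes an idealised right-circular conical pile whose base circumference is walked with the wheel and whose slant length (base edge to apex) is taped; the function names and example numbers are illustrative only and do not come from any cited method.

```python
import math


def cone_volume_from_tape(circumference_m: float, slant_m: float) -> float:
    """Volume of an idealised conical pile from a walked base circumference
    and a taped slant length measured from the base edge to the apex."""
    radius = circumference_m / (2.0 * math.pi)   # base radius from the walked perimeter
    if slant_m <= radius:
        raise ValueError("slant length must exceed the base radius")
    height = math.sqrt(slant_m**2 - radius**2)   # apex height via Pythagoras
    return math.pi * radius**2 * height / 3.0    # V = (1/3) * pi * r^2 * h


def truckload_volume(n_loads: int, capacity_m3: float) -> float:
    """Volume implied by counting deliveries of a known per-truck capacity."""
    return n_loads * capacity_m3


# Hypothetical readings: ~62.8 m walked perimeter and ~11.2 m taped slant.
print(cone_volume_from_tape(62.83, 11.18))
print(truckload_volume(12, 20.0))
```

Real piles rarely match a cone, and any deviation from the assumed shape feeds directly into the estimate, which is one reason these methods are inaccurate.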

3.4. Drone Safety Regulations

Every unmanned civil aircraft, irrespective of its control mechanism (i.e., remotely piloted, completely autonomous, or a combination), must abide by Article 8 of the Convention on International Civil Aviation (Doc 7300), which was signed on 7 December 1944 in Chicago and later amended by the International Civil Aviation Organisation (ICAO). Since the ICAO is an agency of the United Nations, Article 8 (Doc 7300) is widely recognised as a general statement at the global level. However, its age (it was signed over seven decades ago) has led individual countries to introduce specific laws to strengthen enforcement [73,74]. In Australia, drone operations are regulated by the Civil Aviation Safety Authority (CASA), while the Federal Aviation Administration (FAA) holds this responsibility in the USA. In China, however, UAVs are divided into seven classes (I–VII) and regulated by the Civil Aviation Administration of China (CAAC). Drones in Class I have total weights (including fuel) of less than 1.5 kg and do not require any registration, whereas drones in Classes II–VII are governed by rules specific to their weights and intended applications [75,76]. Most of the 28 countries within the European Union (EU) already possess robust sets of regulations concerning the operation of drones. The European Aviation Safety Agency (EASA) initially created an introductory framework for the operation of drones in 2015 and later developed the prototype commission regulation on Unmanned Aircraft Operations in 2016 [74]. However, since the data used in this study were acquired from a UK-based case study, more emphasis is placed on the UK regulatory agency and its laws.

In the United Kingdom, commercial applications of drones are highly regulated and monitored by the Civil Aviation Authority (CAA), owing to the potential hazards they pose to life and property. Permission for commercial operations (PfCO) from the CAA is required before flying drones for commercial purposes (Air Navigation Order (ANO) 2016, article 94(5)). To apply for this permission, those with no previous aviation training or qualifications must first be assessed by a National Qualified Entity (NQE). Second, insurance is required for compliance with regulation EC 785/2004. That said, the operation of drones within buildings from which they cannot escape into the open air is not subject to air navigation legislation [77]. As a result, no permission is required from the CAA for using a drone for indoor inspection or mapping, even for commercial use [77]; users are nonetheless still required to abide by general OSH regulations [78]. Drone operations for outdoor mapping, on the other hand, follow the current legal framework in the UK. The remote pilot must maintain direct, unaided visual contact with the drone (ANO 2016, article 94(3)); the operator must not recklessly or negligently cause the drone to endanger any person or property (ANO 2016, article 241); and the remote pilot may only fly the drone if the flight can be safely made (ANO 2016, article 94(2)).

In line with general OSH guidelines, detailed and representative risk assessments must be completed before individual flight missions [78], so as to capture any task or environmental variations that may have occurred between successive missions. The hierarchy of risk control advocated by the UK Health and Safety Executive (HSE) is then used to define and implement appropriate mitigating actions that ensure risks are eliminated or, at worst, minimised to acceptable levels. Relevant elements of the aforementioned regulations were incorporated into every stage of the flight missions reported in the current study.

4. Proposed Drone-Assisted Solution for Stockpile Volume Estimation

4.1. Overview

This work assesses the use of drones to measure the volumes of stockpiles within cement plant environments, considering stockpiles placed both outdoors in an open area and indoors in a dark, dusty storage area. LiDAR was selected for this type of mission because of its ability to work in dark and dusty environments without noticeable effects on its measurement accuracy (see the discussion in Section 2.5). The proposed drone-assisted mapping solution was first tested in simulated scenarios that mimicked real-life indoor and outdoor stockpiles. The simulations allowed unrestricted iterations that may be impracticable on-site because of their implications for production downtime [79,80,81]. The lessons learned from the simulations then informed decisions made during on-site data collection, especially regarding instrumentation, flight paths, data collection, and signal processing.

4.2. Simulation Framework

4.2.1. Simulation Setup and Selection of Sensors

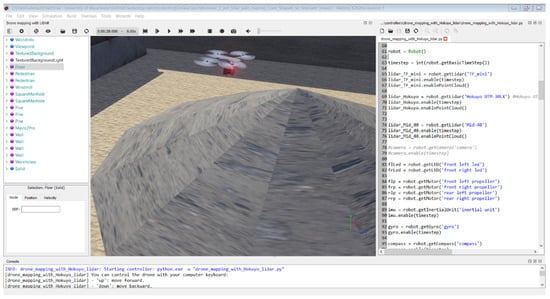

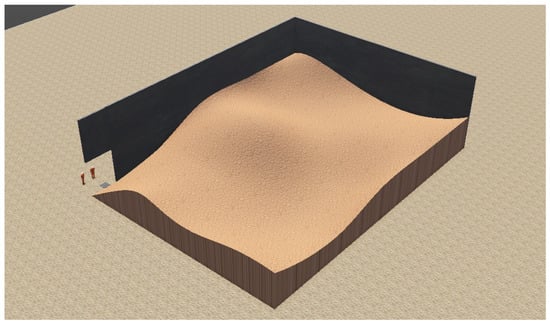

The cement plant used for our practical case study is a continuous operations process, so any form of downtime can be critical and costly. To minimise potential downtime associated with on-site data collection, it was judged that simulating comparable scenarios (e.g., visibility, confinement, etc.) could significantly aid the optimisation of instrumentation, flight paths, data acquisition, data storage, and signal processing. On this basis, multiple aerial mapping missions for indoor and outdoor stockpiles were simulated using the same generic quadcopter platform in the Webots simulator [82] (version R2020a-rev1), as shown in Figure 4. In practice, the outdoor stockpile could represent a limestone, gypsum, or sand stockpile. The indoor stockpile, on the other hand, could represent cement and clinker stockpiles, which require adequate protection owing to lime's high affinity for moisture.

Figure 4.

An example of a developed model in Webots simulator with a drone and one conical stockpile. The drone is programmed with Python code, as shown in the right window of the program.

Webots is a physics-based, general-purpose mobile robotics simulator developed by Cyberbotics Ltd. It has three main components: the world (one or more robots and their environment), the supervisor (a user-written program to control an experiment), and the controller of each robot (a user-written program defining its behaviour). All simulations used Python controllers in Webots, which has the advantage that a controller can later be transferred to a real drone. The simulation environment can represent either outdoor or indoor settings under day or night conditions. The drone model was equipped with IMU and GPS sensors for flight stability and control, as well as an indoor localisation system for operation in GPS-denied environments, which is described in Section 4.2.2.

The aim of the simulations was to assess the accuracy of estimating the volume of a stockpile placed either outdoors or indoors using three types of LiDAR sensors (1D, 2D, and 3D). The data collected by a LiDAR are called point clouds, and each point cloud is defined in three-dimensional space by its coordinates x, y, and z with respect to the LiDAR frame of reference. In this paper, the collected point clouds are used to create a 3D surface of a stockpile from which its volume is estimated; the point cloud processing is detailed in Section 4.2.3. For the simulations, commonly used and easily accessible sensors were selected from the many 1D, 2D, and 3D LiDARs available. In general, LiDAR prices depend on detection range, field of view (FoV), resolution (number of points per scan), point rate, and manufacturer, and 3D LiDARs with a 360° FoV are usually the most expensive. For instance, RoboSense manufactures a 360° 3D LiDAR with 16 layers that costs £3,786 [83], and the 32-layer model costs £18,389 [84] (both based on 2020 pricing). The 3D LiDAR selected for this study is the Livox Mid-40 because of its cost-effectiveness (£539, based on 2020 pricing) and IP67 certification (dust-tight and protected against temporary immersion). However, this LiDAR has a small circular FoV (38.4°) rather than a 360° one. Because the simulator cannot represent a circular FoV, it was modelled as a square FoV of 38.4° × 38.4°. Our selected 2D LiDAR was more expensive than the selected 3D LiDAR; however, it offers a wide FoV (270°), high resolution, and IP64 certification (dust-tight and splash-resistant). Finally, the selected 1D LiDAR is a low-cost, lightweight, and low-power sensor. Table 3 compares the implemented LiDARs, and Figure 5 shows snapshots of the simulations demonstrating the point clouds produced by each LiDAR type.

Table 3.

Comparison of the selected LiDAR sensors.

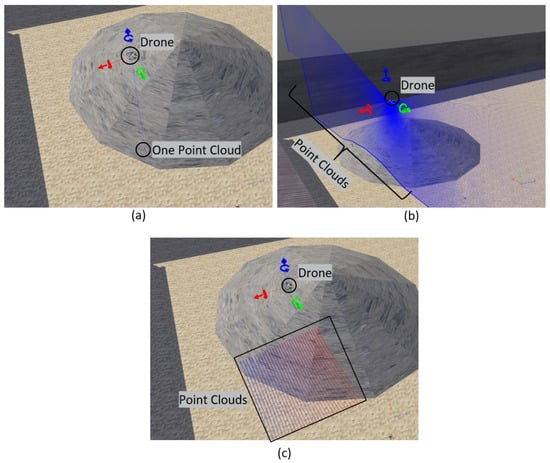

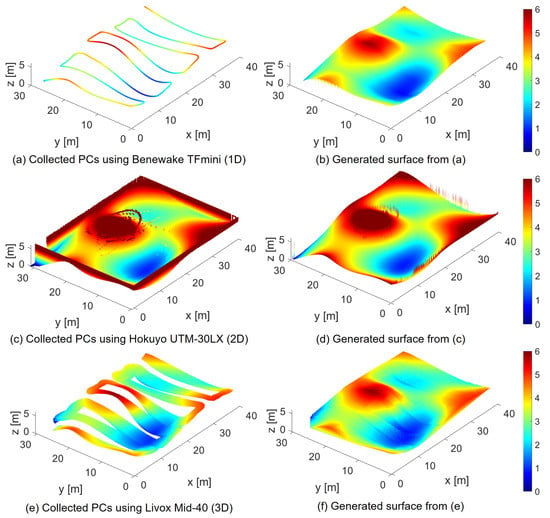

Figure 5.

Snapshots of a simulation scenario with the point clouds produced by each LiDAR model: (a) Benewake TFmini (1D), (b) Hokuyo UTM-30LX (2D), and (c) Livox Mid-40 (3D).

For the sake of comparison, all simulation tests were conducted with the same flight trajectory, generated from a set of points around the area of interest where the stockpile is placed (see Section 4.2.5 for details). The drone followed the desired trajectory autonomously using two controllers fed by data from the IMU and the localisation system: the forward-speed controller was a proportional controller with its own proportional gain, and the heading controller was a second proportional controller with a separate proportional gain.
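A pair of proportional loops of this kind can be sketched as follows. This is a simplified kinematic stand-in for the Webots quadcopter, not the authors' controller: the gains (`kp_v = 0.5`, `kp_psi = 1.0`), the speed limit, the time step, and the waypoint tolerance are all hypothetical values chosen for illustration.

```python
import math


def p_step(pos, yaw, target, kp_v=0.5, kp_psi=1.0, v_max=2.0, dt=0.05):
    """One step of two proportional loops (hypothetical gains): forward speed
    proportional to the distance to the waypoint, yaw rate proportional to
    the heading error. Returns the new position, new yaw, and the distance
    to the target measured before the step."""
    dx, dy = target[0] - pos[0], target[1] - pos[1]
    dist = math.hypot(dx, dy)
    err = math.atan2(dy, dx) - yaw
    err = math.atan2(math.sin(err), math.cos(err))  # wrap to [-pi, pi]
    v = min(kp_v * dist, v_max)                     # saturated speed command
    yaw += kp_psi * err * dt                        # integrate yaw-rate command
    new_pos = (pos[0] + v * math.cos(yaw) * dt,
               pos[1] + v * math.sin(yaw) * dt)
    return new_pos, yaw, dist


def follow(waypoints, tol=0.2, max_steps=20000):
    """Fly a simple kinematic model through the waypoints in order and
    return the final position and the number of waypoints reached."""
    pos, yaw, reached = (0.0, 0.0), 0.0, 0
    for _ in range(max_steps):
        if reached == len(waypoints):
            break
        pos, yaw, dist = p_step(pos, yaw, waypoints[reached])
        if dist < tol:  # close enough: switch to the next reference point
            reached += 1
    return pos, reached


final_pos, n_reached = follow([(5.0, 0.0), (5.0, 5.0), (0.0, 5.0)])
print(n_reached, final_pos)
```

In this simplified model, choosing the heading gain larger than the speed gain makes the heading error decay faster than the drone closes on the waypoint, so it turns towards each reference point instead of circling it.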

4.2.2. Indoor Localisation System

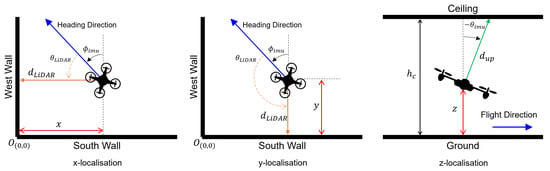

For outdoor missions, the location of the drone (x, y, z) can be estimated directly from the GPS data. Indoor missions within confined storage spaces, on the other hand, require another localisation approach because GPS signals are unavailable. For such missions, we used two sensors: a long-range 2D 360-degree LiDAR to determine the drone’s horizontal location (x, y) and to support obstacle avoidance, and a 1D LiDAR to determine the drone’s altitude (z). The 2D 360-degree LiDAR returns points defined by a range to the walls and a direction measured from the axis aligned with the heading direction. Figure 6 illustrates the drone’s horizontal location, as seen from the top view. We assumed that the distances between the drone and the walls can be obtained from Figure 6 using the following Equations:

where $\psi$ is the yaw angle obtained from the IMU sensor. The drone’s altitude (z), illustrated in Figure 6, can be obtained using:

$$z = H - r_{v}\cos\theta$$

where $H$ is the height of the storage’s ceiling, $r_{v}$ is the range from the 1D vertical LiDAR, and $\theta$ is the pitch angle obtained from the IMU sensor. This approach to altitude estimation is limited to situations where the storage roof is flat and free of large irregularities.
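Because the wall-distance equations themselves are not reproduced here, the following Python sketch illustrates one plausible reading of the approach under stated assumptions: an axis-aligned rectangular storage, a 2D scan given as beam angles θ (measured from the heading) and ranges, the yaw angle ψ from the IMU, and an upward-facing 1D LiDAR below a flat ceiling of height H. The beam whose ground-frame direction ψ + θ points at the wall x = 0 gives x, and the beam pointing at y = 0 gives y. `raycast_rect` only generates synthetic scan data for the example, and all function names are hypothetical.

```python
import math


def wrap(angle):
    """Wrap an angle to [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


def beam_range(angles, ranges, yaw, ground_dir):
    """Range of the scan beam whose ground-frame direction (yaw + theta) is
    closest to ground_dir. Angles in radians, theta measured from heading."""
    k = min(range(len(angles)),
            key=lambda i: abs(wrap(yaw + angles[i] - ground_dir)))
    return ranges[k]


def indoor_position(angles, ranges, yaw, ceiling_h, r_up, pitch):
    """Hypothetical localisation in an axis-aligned rectangular storage whose
    walls x = 0 and y = 0 serve as references."""
    x = beam_range(angles, ranges, yaw, math.pi)       # beam aimed at wall x = 0
    y = beam_range(angles, ranges, yaw, -math.pi / 2)  # beam aimed at wall y = 0
    z = ceiling_h - r_up * math.cos(pitch)             # upward 1D LiDAR, flat ceiling assumed
    return x, y, z


def raycast_rect(x0, y0, phi, length, width):
    """Synthetic test data: distance from (x0, y0) along ground angle phi to
    the walls of the room [0, length] x [0, width]."""
    c, s = math.cos(phi), math.sin(phi)
    hits = []
    if abs(c) > 1e-12:
        hits += [-x0 / c, (length - x0) / c]
    if abs(s) > 1e-12:
        hits += [-y0 / s, (width - y0) / s]
    return min(t for t in hits if t > 0)


# Example: drone at (3, 4) in a 40 m x 30 m store, yaw 0.3 rad, 0.1 deg scan steps.
scan_angles = [2 * math.pi * k / 3600 for k in range(3600)]
scan_ranges = [raycast_rect(3.0, 4.0, 0.3 + a, 40.0, 30.0) for a in scan_angles]
print(indoor_position(scan_angles, scan_ranges, 0.3, 11.0, 6.0, 0.0))
```

Picking single beams keeps the computation trivial but makes the estimate sensitive to clutter on the reference walls; a real system would typically filter or average several neighbouring beams.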

Figure 6.

Indoor localisation based on storage geometry and onboard sensors. Note that the LiDAR scans through the whole 360 degrees; its range is shown here only at the instances used to define the x and y locations.

4.2.3. Locating and Filtering Point Clouds

During a simulation, the drone’s positions and orientations, along with the LiDAR data, are saved as comma-separated values (CSV) files. Each point cloud is defined in three-dimensional space by its coordinates (x, y, z) with respect to its LiDAR frame $\{L\}$. The ground, LiDAR, and IMU frames are illustrated in Figure 7. The collected point clouds can then be transformed to the ground frame $\{G\}$ using the following rotation transformation:

$$\mathbf{P}_G = \mathbf{R}_z(\psi)\,\mathbf{R}_y(\theta)\,\mathbf{R}_x(\phi)\,\mathbf{P}_L + \mathbf{T} \tag{4}$$

where $\mathbf{P}_G$ is a point cloud location defined in the ground frame $\{G\}$; $\phi$, $\theta$, and $\psi$ are the Euler angles about the IMU x, y, and z axes, respectively; $\mathbf{P}_L$ is the point cloud location defined in the LiDAR frame $\{L\}$; and $\mathbf{T}$ is the LiDAR location with respect to the ground frame $\{G\}$, obtained from the localisation system (GPS for outdoor missions or the indoor localisation system for indoor missions).

Figure 7.

Reference frames used to define a point cloud (PC) with respect to the LiDAR frame and the ground frame.
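As a minimal sketch of this step, the following Python code applies the transformation point by point. It assumes the common yaw-pitch-roll (Z-Y-X) Euler convention and a LiDAR frame aligned with the IMU axes; both are assumptions, and the function names are hypothetical.

```python
import math


def rot_zyx(roll, pitch, yaw):
    """Rotation matrix R = Rz(yaw) @ Ry(pitch) @ Rx(roll) as nested lists
    (Z-Y-X Euler convention assumed)."""
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def lidar_to_ground(p_lidar, roll, pitch, yaw, t_lidar):
    """P_G = R(roll, pitch, yaw) @ P_L + T for a single point (x, y, z)."""
    r = rot_zyx(roll, pitch, yaw)
    return tuple(
        sum(r[i][j] * p_lidar[j] for j in range(3)) + t_lidar[i]
        for i in range(3)
    )


# A downward 1D LiDAR reading 5 m, with the LiDAR level at (10, 20, 8) m,
# places the surface point 3 m above the ground origin.
print(lidar_to_ground((0.0, 0.0, -5.0), 0.0, 0.0, 0.0, (10.0, 20.0, 8.0)))
```

If the LiDAR were mounted with a fixed offset or rotation relative to the IMU, that mounting transform would need to be applied to `p_lidar` first.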

Point cloud filtering is required to extract the region of interest from the acquired scan. A LiDAR with a wide field of view, such as the Hokuyo with its 270° FoV, can detect the drone’s body, and these points must be removed before the surface is generated. Therefore, before the transformation in Equation (4) is applied, all point clouds must be tested against the following condition to exclude those that detect the drone’s body:

where (x, y, z) are the coordinates of the point cloud in three-dimensional space.
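The exclusion condition itself is not reproduced here. A simple choice, assumed in the sketch below, is to discard any LiDAR-frame point that falls inside a box enclosing the airframe; the box half-dimensions are hypothetical placeholders that would need to match the actual drone and sensor mounting.

```python
def exclude_body_points(points, half_x=0.35, half_y=0.35, half_z=0.15):
    """Drop LiDAR-frame points (x, y, z) lying inside an assumed box around
    the airframe; half-dimensions in metres are hypothetical placeholders."""
    kept = []
    for x, y, z in points:
        inside = abs(x) <= half_x and abs(y) <= half_y and abs(z) <= half_z
        if not inside:
            kept.append((x, y, z))
    return kept


scan = [(0.10, 0.10, 0.00), (2.00, 0.00, -1.00), (0.00, 0.30, 0.10), (0.00, 0.00, -4.00)]
print(exclude_body_points(scan))
```

Filtering in the LiDAR frame, before the transformation, means the box stays fixed to the airframe regardless of the drone's attitude.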

4.2.4. Surface Generation

A uniform 2D grid is generated across the measured dimensions of the inspection space (using the meshgrid function in MATLAB). The measured heights of the stockpile are interpolated onto this uniform grid using linear interpolation (using the griddata function in MATLAB), and the stockpile surface $S(x, y)$ is generated from the returned values. The stockpile volume $V$ is then estimated by double integration of the surface over the inspection space $A$:

$$V = \iint_{A} S(x, y)\,\mathrm{d}x\,\mathrm{d}y$$
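The volume integral can be evaluated numerically once the surface has been sampled on the uniform grid. The Python sketch below uses the composite trapezoidal rule and takes the surface as a callable, standing in for the gridded output of MATLAB's `griddata` (in Python, `scipy.interpolate.griddata(points, values, (X, Y), method="linear")` plays the same role). Clipping negative heights to zero, the test cone, and all names are assumptions of this sketch.

```python
import math


def trapz2d(z, dx, dy):
    """Composite trapezoidal double integral of samples z[i][j] on a uniform
    grid (row i along y, column j along x)."""
    ny, nx = len(z), len(z[0])
    total = 0.0
    for i in range(ny):
        wy = 0.5 if i in (0, ny - 1) else 1.0      # half weight on edge rows
        for j in range(nx):
            wx = 0.5 if j in (0, nx - 1) else 1.0  # half weight on edge columns
            total += wy * wx * z[i][j]
    return total * dx * dy


def stockpile_volume(surface, x_min, x_max, y_min, y_max, nx=201, ny=201):
    """Sample surface(x, y) on a uniform grid over the inspection space and
    integrate it; heights below zero are clipped to the ground plane."""
    dx = (x_max - x_min) / (nx - 1)
    dy = (y_max - y_min) / (ny - 1)
    z = [[max(surface(x_min + j * dx, y_min + i * dy), 0.0) for j in range(nx)]
         for i in range(ny)]
    return trapz2d(z, dx, dy)


def cone(x, y, radius=10.0, height=5.0):
    """Test surface: a 10 m radius, 5 m high cone centred at the origin."""
    return height * (1.0 - math.hypot(x, y) / radius)


print(stockpile_volume(cone, -10.0, 10.0, -10.0, 10.0, nx=401, ny=401))
print(math.pi * 10.0**2 * 5.0 / 3.0)  # analytic cone volume for comparison
```

The trapezoidal rule is exact for surfaces that are linear in each grid direction, so the remaining error comes mainly from kinks such as the pile's apex and toe.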

4.2.5. Mission Design

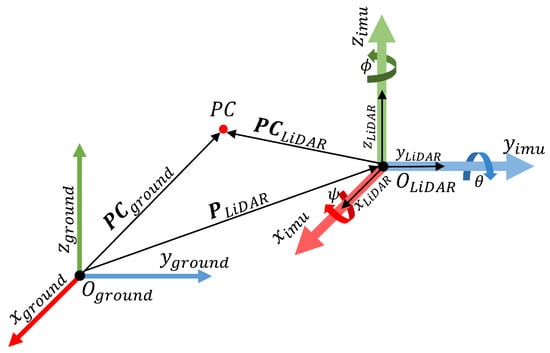

A 3D CAD model of a generic stockpile was designed in Inventor® CAD software and is shown in Figure 8. The shape of the stockpile is similar to that of a real irregular stockpile with an uneven surface. The stockpile has a volume of 3129 m³ and extends over an area of 1200 m² (40 m × 30 m). This 3D modelled stockpile was implemented in the simulation for an outdoor mission (open area) and an indoor mission (fully confined storage), as shown in Figure 8a,b, respectively. For the indoor simulation, the ceiling height was set to 11 m. Both missions were conducted with the same flight trajectory algorithm, which generated the trajectory from a set of points around the area of interest where the stockpile is placed. The drone always heads to the next point autonomously using the two proportional controllers (explained in Section 4.2.1), employing data from the IMU and the localisation system. Figure 9a,b illustrate the recorded flight trajectories from the outdoor and indoor missions, respectively. An important issue evident from Figure 9 is that, when mapping confined spaces, the drone cannot cover the whole area of interest because there is a limit to how close it can get to the walls.

Figure 8.

(a) A 3D CAD model of an example stockpile that was implemented in an open area within the simulation environment. (b) The same stockpile implemented in a fully confined storage within the simulation environment (one wall was removed to show the stockpile inside).

Figure 9.

The recorded flight trajectories over the same area of interest where the stockpile is placed. (a) Outdoor mission, and (b) indoor mission. Both missions have 5 m distance between the reference trajectory points along the x-axis, and consequently, the outdoor mission has four more trajectory points around the stockpile. As such, for the outdoor mission, the first two and final two flight trajectory points are placed 2.5 m outside of the area of interest, whereas for the indoor mission, the first two and final two flight trajectory points are placed 2.5 m from the walls.
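A reference-point pattern like the one in Figure 9 can be generated as a serpentine (boustrophedon) sequence of passes. The sketch below is a hypothetical generator, not the authors' trajectory algorithm: it places `n_columns` passes evenly along x, with the first and last passes half a spacing from the boundary, and two points per pass at assumed y limits (2.5 m and 27.5 m here). With a 40 m storage and 8 passes it gives 16 points, the outermost 2.5 m from the walls; widening the x range by 5 m on each side with 10 passes gives 20 points starting 2.5 m outside the area, consistent with the counts and spacing in the caption.

```python
def lawnmower_waypoints(x_min, x_max, y_low, y_high, n_columns):
    """Serpentine reference points: n_columns passes parallel to y, evenly
    spaced along x, with the first and last passes half a spacing inside
    x_min/x_max."""
    spacing = (x_max - x_min) / n_columns
    points = []
    for k in range(n_columns):
        x = x_min + (k + 0.5) * spacing
        # alternate the pass direction so consecutive passes join at the same end
        ys = (y_low, y_high) if k % 2 == 0 else (y_high, y_low)
        points.extend([(x, ys[0]), (x, ys[1])])
    return points


indoor = lawnmower_waypoints(0.0, 40.0, 2.5, 27.5, 8)     # 16 points (y limits assumed)
outdoor = lawnmower_waypoints(-5.0, 45.0, 2.5, 27.5, 10)  # 20 points (y limits assumed)
print(len(indoor), indoor[:4])
print(len(outdoor), outdoor[0], outdoor[-1])
```

Changing `n_columns` to 4, 6, 10, or 12 for the indoor case yields 8, 12, 20, or 24 reference points, which is one way to produce trajectory variants like those in Figure 12.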

4.2.6. Simulation Results and Discussions

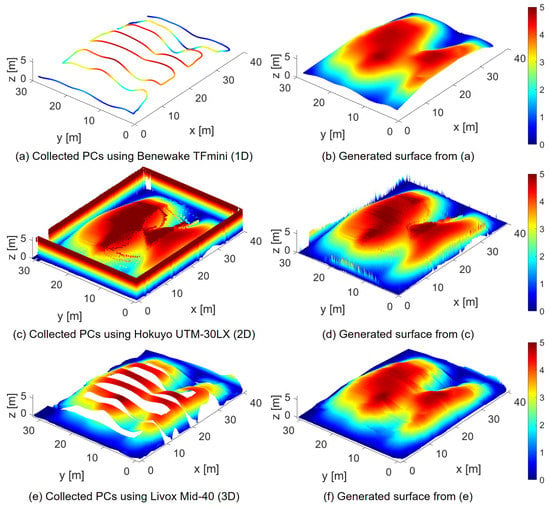

Figure 10 and Figure 11 show the collected point clouds and the generated surfaces from the outdoor and indoor simulations, respectively. The drone’s orientations (roll, pitch, and yaw), positions (x, y, and z), and the LiDAR point clouds were gathered from six simulation experiments (three LiDAR types for each of the outdoor and indoor missions). These experiments used a flight trajectory defined by 20 trajectory points for the outdoor mission and 16 points for the indoor mission, as shown in Figure 9. The collected data were processed, and surfaces of the stockpile were generated following the methods described in Section 4.2.3 and Section 4.2.4. Figure 10a,c,e show the point clouds collected during the outdoor mission, defined in the ground frame, and the surfaces generated from them are shown in Figure 10b,d,f. Similarly, Figure 11 shows the collected point clouds and the generated surfaces for the indoor mission. The point clouds collected by the 1D LiDAR cover only a limited area of the stockpile compared with the other two sensors (the collected point clouds appear as one line), as shown in Figure 10a and Figure 11a. The 3D LiDAR covers a larger area than the 1D LiDAR, but despite its high-resolution 3D scanning, its 38.4° FoV was not enough to scan the whole area with the adopted trajectory and altitude. It is worth mentioning that the adopted 3D LiDAR could produce a better result if the drone were flown at a higher altitude, although this may not be possible for indoor missions. The 2D LiDAR provides 100% coverage because of its wide FoV (270°) and high resolution; moreover, the stockpile surface generated from its data was constructed from the point clouds without interpolation between points. However, this large FoV also introduces more noise into the point clouds, as can be seen in Figure 10c and Figure 11c.
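The altitude dependence can be made concrete with a flat-ground approximation: a nadir-pointing sensor with full field of view FoV at height h above the surface sees a strip of width w = 2h tan(FoV/2). The Python sketch below evaluates this for the 38.4° Livox FoV; it ignores the circular shape of the FoV, the pile's slopes, and any tilt of the drone, so it only indicates orders of magnitude.

```python
import math


def footprint_width(height_above_surface_m, fov_deg):
    """Strip width seen by a nadir-pointing sensor with full-angle FoV over a
    flat surface: w = 2 h tan(FoV / 2)."""
    return 2.0 * height_above_surface_m * math.tan(math.radians(fov_deg) / 2.0)


def height_for_width(width_m, fov_deg):
    """Clearance above the surface needed for a given footprint width."""
    return width_m / (2.0 * math.tan(math.radians(fov_deg) / 2.0))


for h in (2.0, 5.0, 8.0):
    print(f"{h:.0f} m above the pile -> {footprint_width(h, 38.4):.2f} m footprint")
print(f"5 m footprint needs {height_for_width(5.0, 38.4):.2f} m clearance")
```

Under this approximation, a 5 m footprint, matching the 5 m spacing between reference trajectory points in Figure 9, needs roughly 7.2 m of clearance above the pile surface, which leaves little margin under the 11 m ceiling of the simulated storage.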

Figure 10.

Results for the outdoor mission (open area). (a,c,e) Collected point clouds (PCs) defined in the ground frame; (b,d,f) generated surfaces from the collected point clouds.

Figure 11.

Results for the indoor mission (fully confined storage). (a,c,e) Collected point clouds defined in the ground frame; (b,d,f) generated surfaces from the collected point clouds. In (c) the LiDAR detects the walls and the ceiling because the LiDAR has a wide field of view (these PCs are therefore excluded).

Table 4 shows the number of point clouds collected by each LiDAR, the estimated stockpile volume from each simulation test, and the estimation error. According to [36], mine engineering regulations often state that estimated volumes should be accurate to within ±3% of the total amount. In our simulation experiments, the absolute errors of the estimated volumes were ≤3%, with one exception: for the 1D LiDAR in the indoor mission, the estimate was 9.4% smaller than the actual volume. This larger error arises because the 1D LiDAR measures a single point, and the drone cannot cover the entire area of interest, as previously demonstrated in Figure 9b. As a result, the part of the stockpile lying between the walls and the reference trajectory points, which accounts for 10.6% of the total stockpile volume, was not included in the estimate. If this part of the volume is excluded from the assessment, the 1D LiDAR error reduces to 1.2%. Caution should therefore be taken with stockpile shapes that have more material close to the walls, as the error is expected to increase.
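Assuming both percentages are expressed relative to the true volume V = 3129 m³, the 1.2% figure can be reproduced as follows, where $\hat{V}$ is the 1D LiDAR estimate and $V_{\mathrm{cov}}$ (a symbol introduced here) is the part of the pile inside the flight trajectory:

$$
\hat{V} = (1 - 0.094)\,V = 0.906 \times 3129 \approx 2835\ \mathrm{m}^3,
\qquad
V_{\mathrm{cov}} = (1 - 0.106)\,V = 0.894 \times 3129 \approx 2797\ \mathrm{m}^3
$$

$$
\frac{\hat{V} - V_{\mathrm{cov}}}{V} = 0.906 - 0.894 = 0.012 \quad (1.2\%)
$$

Measured against the covered volume instead, the same discrepancy is 0.012/0.894 ≈ 1.3%.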

Table 4.

Summary of simulation results for outdoor and indoor missions. The exact stockpile volume is 3129 m³. Positive error indicates that the estimated volume is larger than the actual volume, whereas negative error indicates a smaller volume was estimated compared to the actual volume.

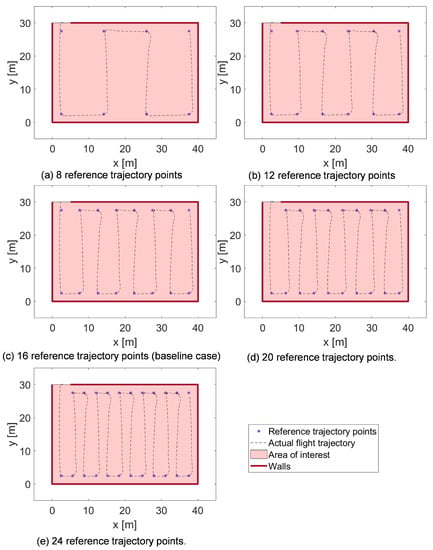

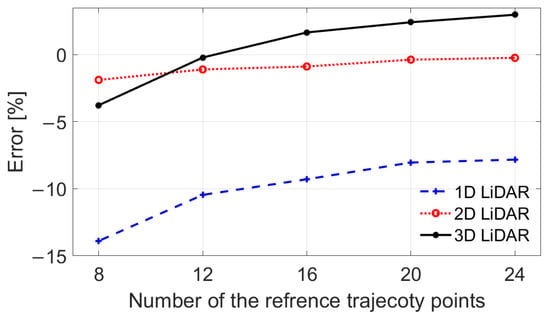

Because the error in the estimated stockpile volume for the indoor mission with the 1D LiDAR was high (9.4%), further missions were simulated in the same fully confined storage using shorter and longer trajectories. This was achieved by varying the number of reference trajectory points over the same stockpile from 16 to 8, 12, 20, and 24, as shown in Figure 12. The resulting errors in the estimated volume are shown in Figure 13. For the 1D LiDAR, the absolute error reduces from 13.9% to 7.83% as the number of reference trajectory points increases from 8 to 24. Despite this reduction, a 1D LiDAR will always yield a relatively higher error because the sensor cannot map the area between the walls and the flight trajectory. The 2D LiDAR yields errors ranging from 1.9% to almost 0% as the number of reference trajectory points increases from 8 to 24. In fact, the 2D LiDAR achieved the lowest errors of the three options, mainly owing to its superior field of view; the obvious downside is the significantly higher cost of the sensor compared with the other two options. The 3D LiDAR yields errors ranging from −3.8% to +3.0% as the number of reference trajectory points increases from 8 to 24.

Figure 12.

Flight trajectories for the indoor mission using five different numbers for the reference trajectory points. The error in the estimated volume from each trajectory is shown in Figure 13.

Figure 13.

Effect of trajectory shape on the error in estimating the stockpile volume for the indoor flight missions.

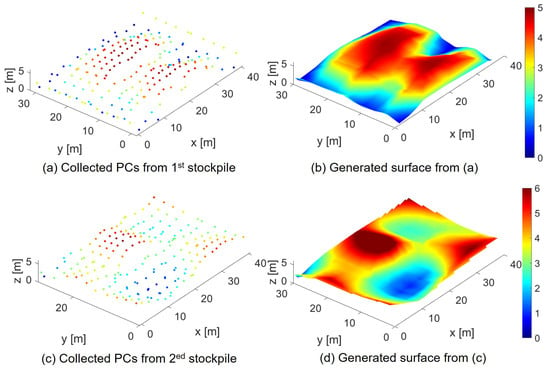

To assess the effect of changing material patterns within typical stockpiles, another indoor stockpile (Figure 14) was implemented and assessed using the same flight trajectory illustrated in Figure 9b. Unlike the case depicted in Figure 8, this stockpile represents another practical case, in which a significant volume of material overflows towards the storage walls during stockpiling or material extraction. Figure 15 shows the collected point clouds and the generated surfaces for this second indoor case, and Table 5 summarises the results obtained with the different LiDARs. When mapping the stockpile with the 1D LiDAR, the absolute error in the estimated volume increased dramatically to 25.8%. This poor performance is not unexpected and can be attributed to the limited capability of the 1D sensor to map the area between the walls and the flight trajectory, which now contains more material. The 2D LiDAR performed better, estimating the volume with an absolute error of only 2.41%, owing to its ability to scan the whole area of interest. Finally, the 3D LiDAR produced an absolute error of 9.84%. This larger error compared with the 2D LiDAR results from the smaller FoV of the 3D LiDAR: the scanned area shrinks as the distance between the 3D LiDAR and the stockpile surface decreases.

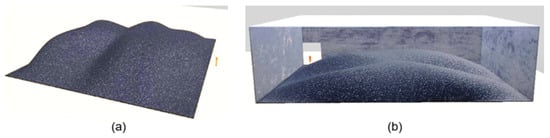

Figure 14.

A 3D CAD model of a stockpile with more material volume close to the walls. The stockpile is implemented in the same fully confined storage within the simulation environment (two walls and the ceiling were removed to show the stockpile inside).

Figure 15.

Results for the indoor mission (fully confined storage) for mapping the second stockpile. (a,c,e) Collected point clouds defined in the ground frame; (b,d,f) generated surfaces from the collected point clouds. In (c) the LiDAR detects the walls and the ceiling because the LiDAR has a wide field of view (these PCs are therefore excluded).

Table 5.

Summary of simulation results of indoor missions for mapping the second indoor stockpile. The exact stockpile volume is 3863 m³. Negative error indicates a smaller estimated volume compared to the actual volume.

It should be noted that the simulations described so far did not consider the effect of sensor noise, which leads to uncertainty in the estimated volumes. The source of uncertainty that most affects the estimated drone positions, and hence the transformation of point clouds to the ground frame, is the heading angle [85]. The pitch angle is very small in comparison with the heading angle and remains relatively constant throughout the measurement, so it can be excluded from the uncertainty analysis.

To investigate the significance of the heading angle uncertainty source, we followed [86,87] and the heading angle output value was corrupted with a rectangularly distributed noise of . Additionally, we considered the effect of noise from the LiDARs’ data through addition of the uncertainty specified by manufacturers of the different LiDAR sensors: The 1D LiDAR used for both localisation and volume estimation has an uncertainty of ±1% of the reading ranges [88]; the 2D LiDAR used for volume estimation has an uncertainty of mm [89]; the 2D LiDAR used for localisation has an uncertainty of mm [90]; and the 3D LiDAR used for volume estimation has an uncertainty of mm [91]. Without any further information regarding the distribution shape for these uncertainties, a rectangular distribution was used for all. After including noise in the measurements coming from the above uncertainty distributions, the stockpile volume was then recalculated.
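The noise-injection step can be sketched as below. Not every noise bound used in the study is reproduced here, so the heading half-width in the usage example (±1°) is a hypothetical value, while ±1% of reading follows the 1D LiDAR specification quoted above. The sketch only shows the mechanics of rectangular (uniform) perturbation and the corresponding standard uncertainty a/√3 for a half-width a; function names are illustrative.

```python
import math
import random


def uniform_noise(value, half_width, rng):
    """Add rectangular (uniform) noise on [-half_width, +half_width]."""
    return value + rng.uniform(-half_width, half_width)


def standard_uncertainty(half_width):
    """Standard deviation of a rectangular distribution of half-width a: a / sqrt(3)."""
    return half_width / math.sqrt(3.0)


def corrupt_scan(yaw, ranges, yaw_half_width, range_rel=None, range_abs=None, seed=0):
    """Perturb one heading reading and a list of LiDAR ranges. Range noise is
    either relative (e.g. 0.01 for +/-1 % of reading) or absolute (metres)."""
    if (range_rel is None) == (range_abs is None):
        raise ValueError("give exactly one of range_rel or range_abs")
    rng = random.Random(seed)  # seeded for repeatable Monte Carlo runs
    noisy_yaw = uniform_noise(yaw, yaw_half_width, rng)
    noisy_ranges = []
    for r in ranges:
        a = r * range_rel if range_rel is not None else range_abs
        noisy_ranges.append(uniform_noise(r, a, rng))
    return noisy_yaw, noisy_ranges


# Illustrative values: +/-1 deg heading noise (hypothetical), +/-1 % for a 1D LiDAR.
print(corrupt_scan(0.5, [1.0, 5.0, 10.0], math.radians(1.0), range_rel=0.01, seed=42))
```

The corrupted heading and ranges would then be passed through the same transformation, gridding, and integration steps to obtain the recalculated volume.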

The simulations assessing the effect of noise used the same flight trajectory illustrated in Figure 9 to estimate the volumes of all three stockpiles. The results in Table 6 show that including noise has only a minor influence on the volume estimation errors for the different stockpiles and LiDAR sensors. Averaged over the nine cases shown in Table 6, the change in volume estimation error due to noise inclusion is only +0.47%.

Table 6.

Effect of noise inclusion on the error values of estimating the different stockpile volumes using different LiDAR sensors.

4.2.7. Stockpile Volume Estimation Using 3D Static Scanners

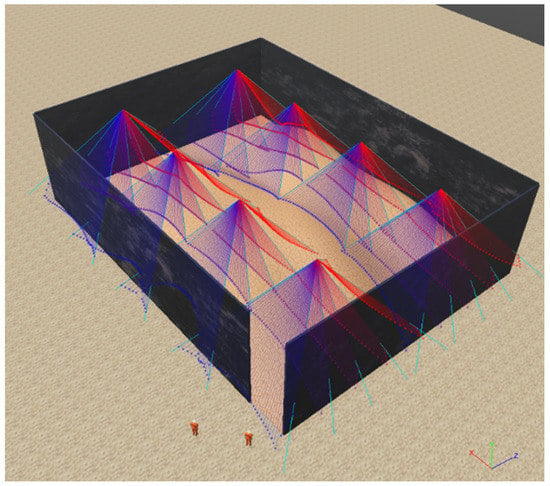

The current methods for indoor stockpile volume estimation were described in Section 3.3. For comparison, the volumes of the two stockpiles implemented within the confined storage (Figure 8b and Figure 14) were also estimated using 3D static scanners. For this demonstration, we used the 3DLevelScanner, which is commonly used for such applications [92]. This scanner has a range of 61 m and a 70° FoV. Eight scanners (arranged in 4 rows and 2 columns) were uniformly distributed along the ceiling of the storage within the simulation environment, as shown in Figure 16. Figure 17 shows the collected point clouds and the generated surfaces of the two indoor stockpiles obtained with the 3D static scanners.

Figure 16.

Eight static scanners implemented at the roof of the fully confined storage. The scanners were distributed uniformly. The ceiling is removed to show the distribution of scanners and the beams they generate.

Figure 17.

Results from using a system of eight static scanners for mapping the first and second stockpiles stored in the fully confined storage.

The volume of the first stockpile (with less material close to the walls) was estimated with an absolute error of 0.21%. For the second stockpile, the absolute error increased to 7.6% because the stockpile is higher close to the walls, which reduces the scanned area. However, adding another row and column of scanners, giving a total of 15 scanners (arranged in 5 rows and 3 columns) also uniformly distributed along the ceiling, reduced the error for the second stockpile to 0.59%. Table 7 compares the errors in the estimated volume between the proposed aerial approach using different LiDARs and the 3D static scanners. Such comparisons should, however, always account for the trajectory shape and type of LiDAR sensor used in the drone missions, as well as the type and distribution of the static scanners. For the cases shown here, 3D static scanners clearly provide an effective method for stockpile volume estimation, which in turn alleviates some of the safety concerns identified earlier. Nevertheless, such scanners significantly increase manufacturing costs. Their higher acquisition and installation costs imply higher capital expenditure (CAPEX), while the larger number required per stockpile leads to higher operational expenditure (OPEX) for routine maintenance and functional testing. Besides their lack of visualisation ability, already highlighted in Section 3.3, static scanners still require personnel to be physically present at measurement locations, which undermines efforts directed towards hazard elimination, especially for silos, hoppers, and tank farms.
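A simple geometric check illustrates why tall material near the walls hurts ceiling-mounted scanners and why a denser grid helps. The Python sketch below idealises each scanner as a downward cone with the stated 70° FoV, mounted on the 11 m ceiling of the simulated storage, and computes the fraction of a horizontal plane at a given height that falls inside at least one cone. The 40 m × 30 m storage footprint, the assignment of the 4 and 5 "rows" to the 40 m side, and the neglect of occlusion and range limits are assumptions, so the numbers are indicative only.

```python
import math


def scanner_grid(length_m, width_m, n_along_length, n_along_width):
    """Ceiling positions at the centres of equal cells (hypothetical layout)."""
    return [((i + 0.5) * length_m / n_along_length, (j + 0.5) * width_m / n_along_width)
            for i in range(n_along_length) for j in range(n_along_width)]


def coverage_fraction(scanners, length_m, width_m, ceiling_m, surface_m, fov_deg, n=120):
    """Fraction of a horizontal plane at height surface_m that falls inside at
    least one downward conical FoV. Idealised: no occlusion, no range limit."""
    r = (ceiling_m - surface_m) * math.tan(math.radians(fov_deg) / 2.0)  # cone radius there
    hit = 0
    for a in range(n):
        for b in range(n):
            x = (a + 0.5) * length_m / n
            y = (b + 0.5) * width_m / n
            if any((x - sx) ** 2 + (y - sy) ** 2 <= r * r for sx, sy in scanners):
                hit += 1
    return hit / (n * n)


eight = scanner_grid(40.0, 30.0, 4, 2)
fifteen = scanner_grid(40.0, 30.0, 5, 3)
for h in (0.0, 4.0):
    print(h, coverage_fraction(eight, 40.0, 30.0, 11.0, h, 70.0),
          coverage_fraction(fifteen, 40.0, 30.0, 11.0, h, 70.0))
```

In this idealisation, the 4 × 2 layout leaves uncovered patches near cell corners even at floor level, whereas the 5 × 3 layout covers the whole floor; raising the plane, as a pile does near the walls, shrinks every cone's footprint and the coverage drops.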

Table 7.

Comparison of errors in the estimated volume between the proposed drone-assisted aerial mapping using different LiDARs and the 3D static scanning method adopted for comparison. Drone-assisted mapping results are for the trajectory with 16 reference points.

4.3. Industrial Case Study

The case study is the largest fully integrated cement manufacturing plant in the UK, producing approximately 1.5 million metric tonnes (MMT) of cement per year, about 15% of the UK’s total cement production capacity. The main process stages are quarrying, crushing, raw milling, kiln burning, cement grinding, and dispatch. Owing to the continuous nature of operations within the plant, each of these process stages is a receptor for its preceding stage and a feed supply to its subsequent stage (e.g., crushing receives material from quarrying and feeds raw milling, and so on). One of the conventional means of ensuring optimum process reliability is the provision of standby assets and intermediate storage (i.e., stockpiles, silos, hoppers, sheds, tanks, etc.). In this study, data were obtained through missions over a fully open coal stockpile and a semi-open gypsum stockpile, so as to examine varying levels of operational complexity. Owing to production restrictions, access was limited to these two piles, so a mission within a fully confined space was not possible; further testing in fully confined spaces within the plant is planned.

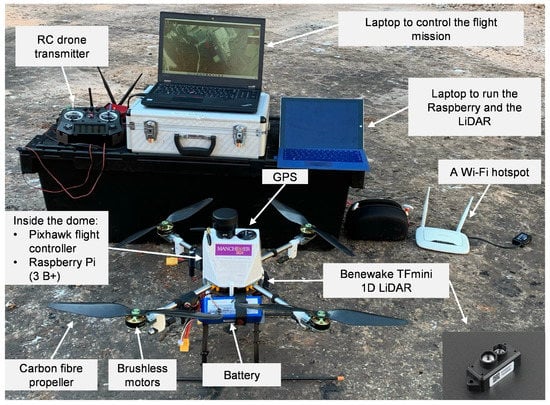

4.3.1. Instrumentation

The simulations have already established that a 1D LiDAR is capable of achieving relatively low error values (i.e., ≤3%) when mapping unrestricted stockpiles, while being lightweight and significantly cheaper than the other available sensors; hence, it offers a good balance between accuracy, weight, and cost. This LiDAR can detect distances of up to 12 m in normal indoor conditions and 7 m in regular outdoor conditions. The LiDAR was integrated into a quadcopter drone with a diagonal frame size of 585 mm, as shown in Figure 18. The quadcopter was controlled using a Pixhawk 4 flight controller. It was fitted with a Pixhawk 4 M8N GPS module to provide location information, and an MS5611 barometer integrated within the flight controller was used to measure altitude. The LiDAR was connected to a Raspberry Pi 3 Model B+, which ran the scanning and saved the data to a memory card. A long-range Wi-Fi router was used to create a connection between the drone and the ground station. Two laptops were located at the ground station to monitor the flight data and the LiDAR data, respectively. Figure 18 illustrates all of the equipment used for the flight test.

Figure 18.

Pictorial representation of field-based flight test set-up.

4.3.2. Data Collection and Processing

Before conducting missions, the drone team met with the site manager to discuss OSH requirements within the cement plant. All flight tests were performed by a team of two: a pilot and a spotter. The pilot was responsible for flying the drone within the test area and ensuring that the equipment was operating correctly. The spotter was responsible for ensuring that no personnel entered the field during operations and for carefully watching for changes in the surroundings (especially the movement of people and vehicles). Data were collected whilst mapping a pile of coal located outdoors and a pile of gypsum located in a shed. During these mapping missions, the drone was connected to a laptop running the QGroundControl application, which provides vehicle setup for Pixhawk-powered vehicles. QGroundControl was also used to monitor flight data such as position, orientation, and battery level. The LiDAR, which scans the surface height, was connected to the on-board Raspberry Pi, which in turn transmitted the collected data to the second laptop used for stockpile mapping. The first flight, over the pile of coal, took around 10 min from take-off to landing, and the scanned inventory area was 1400 m². The second flight, to map the gypsum pile, took 2.1 min from take-off to landing, and the scanned area was 62.5 m². It should be noted that if an emergency such as signal loss had occurred during a flight, the drone was programmed to cancel the mission and return to the take-off location autonomously; however, no issues were recorded during the tests.

During data processing, the raw data acquired from the stockpile were used to construct a 3D model. Three sets of time series data were processed: GPS coordinates, barometer readings, and LiDAR readings. For missions in outdoor or semi-confined areas, GPS was deemed sufficient to provide localisation data for the drone. The barometer provided altitude information with respect to the take-off level, and the LiDAR provided the vertical distance between the drone and the ground. The GPS time is recorded in micro POSIX® format, where POSIX® time represents the number of seconds (including fractional seconds) elapsed since 00:00:00 UTC on 1 January 1970 [93]. The MATLAB function datetime was used to convert POSIX® time to local time. A further MATLAB function was used to project the GPS latitudes and longitudes onto a two-dimensional plane with x and y axes [94].
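The two conversion steps above can be sketched in Python as follows. This is a minimal, non-authoritative sketch: it assumes the "micro POSIX" timestamps are integer microseconds since the epoch, and the function names are hypothetical. The MATLAB projection function used in the study [94] is not reproduced; instead, a simple equirectangular (local tangent plane) approximation is swapped in, which is generally adequate over a site a few hundred metres across.

```python
from datetime import datetime, timedelta, timezone
import math

# Assumption: timestamps are integer microseconds since the POSIX epoch.
POSIX_EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)

def posix_us_to_utc(t_us: int) -> datetime:
    """Convert microseconds since 1970-01-01 00:00:00 UTC to an aware UTC datetime."""
    # Integer timedelta arithmetic avoids floating-point rounding of microseconds.
    return POSIX_EPOCH + timedelta(microseconds=t_us)

MEAN_EARTH_RADIUS_M = 6_371_000.0  # spherical-Earth approximation

def latlon_to_local_xy(lat_deg: float, lon_deg: float,
                       lat0_deg: float, lon0_deg: float) -> tuple:
    """Project latitude/longitude (degrees) to local east (x) / north (y) offsets in metres.

    Equirectangular approximation about the reference point (lat0, lon0);
    a hypothetical stand-in for the projection used in the study.
    """
    x = MEAN_EARTH_RADIUS_M * math.radians(lon_deg - lon0_deg) * math.cos(math.radians(lat0_deg))
    y = MEAN_EARTH_RADIUS_M * math.radians(lat_deg - lat0_deg)
    return x, y
```

Calling `posix_us_to_utc(t).astimezone()` then gives the same instant in the machine's local time zone, and taking the first GPS fix as `(lat0, lon0)` places the take-off point at the origin.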

To match the three sets of time series data (GPS, barometer, and LiDAR), a common start and end time was defined. The sampling rates for the GPS, barometer, and LiDAR were 5 Hz, 9.85 Hz, and 12.5 Hz, respectively. Since the three data sets had different sampling rates, the data were resampled onto a regular time grid at the highest of these rates (12.5 Hz) using linear interpolation, implemented through the resample function in MATLAB. Figure 19 shows an example of the resampled data superimposed on the original data. It is evident that resampling loses almost no information and preserves the original trends.
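A rough Python analogue of this alignment step is sketched below using NumPy's `np.interp` (piecewise-linear interpolation) rather than MATLAB's `resample`. The function name and the dictionary-of-streams input are hypothetical choices for illustration; the grid spans only the interval shared by all streams and is sampled at 12.5 Hz by default.

```python
import numpy as np

def resample_to_common_grid(streams, fs_out=12.5):
    """Linearly interpolate several time series onto one regular time grid.

    streams maps a name to (t, values), where t is in seconds and strictly
    increasing. The grid covers only the interval shared by all streams
    (common start and end times) and is sampled at fs_out Hz.
    """
    t_start = max(float(t[0]) for t, _ in streams.values())
    t_end = min(float(t[-1]) for t, _ in streams.values())
    if t_end <= t_start:
        raise ValueError("the time series do not overlap")
    n = int(np.floor((t_end - t_start) * fs_out)) + 1
    t_grid = t_start + np.arange(n) / fs_out
    # np.interp evaluates the piecewise-linear interpolant at the grid times.
    resampled = {name: np.interp(t_grid, np.asarray(t, float), np.asarray(v, float))
                 for name, (t, v) in streams.items()}
    return t_grid, resampled
```

For example, passing GPS x positions sampled at 5 Hz, barometer altitudes at 9.85 Hz, and LiDAR ranges at 12.5 Hz returns all three on the same 0.08 s grid, so that they can be combined sample by sample.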

Figure 19.

Original and resampled readings for (a) drone x position, (b) drone y position, and (c) barometer. The example shown is for the semi-open mission over the gypsum pile.

The difference between the barometer and LiDAR readings was used to evaluate the height of the pile as:

$$h_i = a_i - d_i \qquad (8)$$

where $h_i$ is the pile height at reading $i$, $a_i$ is the altitude from the barometer at reading $i$, $d_i$ is the depth from the LiDAR at reading $i$, and $i$ is the sample number. The GPS data were used to define the x and y positions. The surface of the stockpile was then generated, and the volume was estimated as will be described in the next section.
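The height equation (Equation (8)) and a simple volume estimate can be sketched as below. This is a hypothetical illustration, not necessarily the procedure used in the study: it assumes the heights have already been arranged on a regular x–y grid (roughly what a planned zig-zag trajectory provides), takes the take-off level as the base of the pile, and integrates with the trapezoidal rule. Clipping negative heights to zero is also an assumption.

```python
import numpy as np

def pile_heights(baro_alt, lidar_depth):
    """Equation (8): pile height = barometric altitude - LiDAR depth, per sample."""
    return np.asarray(baro_alt, dtype=float) - np.asarray(lidar_depth, dtype=float)

def _trapezoid_weights(n, spacing):
    """Trapezoidal-rule weights for n >= 2 equally spaced samples."""
    w = np.full(n, float(spacing))
    w[0] = w[-1] = spacing / 2.0
    return w

def grid_volume(heights, dx, dy):
    """Volume between a gridded height map and the take-off (zero) level.

    heights[j, k] is the pile height at y = j*dy, x = k*dx (metres).
    Negative heights (e.g. sensor noise below the take-off level) are
    clipped to zero; this is an assumption, not a step stated in the study.
    """
    h = np.clip(np.asarray(heights, dtype=float), 0.0, None)
    ny, nx = h.shape
    if nx < 2 or ny < 2:
        raise ValueError("need at least a 2 x 2 grid")
    # Weighted double sum: trapezoidal rule along y, then along x.
    return float(_trapezoid_weights(ny, dy) @ h @ _trapezoid_weights(nx, dx))
```

The trapezoidal rule integrates a piecewise-linear surface between grid nodes exactly, which matches the linear interpolation used to build the pile surfaces.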

4.3.3. Results from Flight Tests

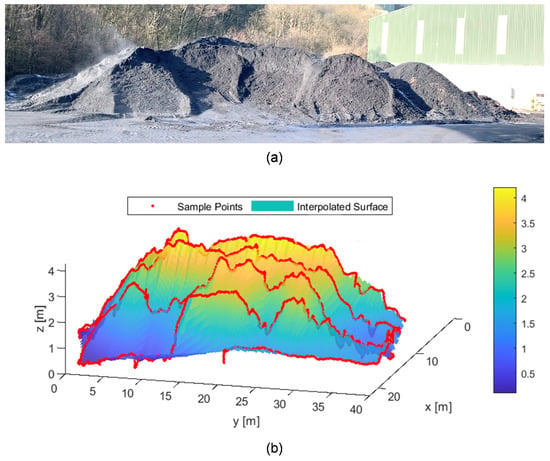

A 3D surface of the coal pile from the outdoor mission was generated, as shown in Figure 20. The red points in the figure show the measured heights of the pile, evaluated using Equation (8), whereas the surface of the pile was produced by linear interpolation between these points. This resulted in an estimated volume of 1021.5 m³ for this pile. There is a high degree of visual resemblance between the actual and the reconstructed piles. However, no accurate reference volume was available for the real pile against which our estimate could be compared. Nevertheless, this demonstration is still valuable in that it shows that the proposed system has the potential to provide useful results in a fast (the mission took only 10 min), safe, and cost-effective manner (a cost-benefit analysis is presented in Section 5).
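When the measured points are scattered rather than gridded, the volume under a surface built by linear interpolation between them can be computed exactly from a triangulation. The sketch below uses `scipy.spatial.Delaunay`; the function name is hypothetical and the take-off level is assumed to be the base. It is offered as one standard way to integrate such a surface, not as the authors' implementation. Here, x and y would be the projected GPS positions and h the heights from Equation (8).

```python
import numpy as np
from scipy.spatial import Delaunay

def tin_volume(x, y, h):
    """Volume under the piecewise-linear surface through scattered (x, y, h) points.

    The points are Delaunay-triangulated; on each triangle, the integral of
    the linear interpolant equals the triangle area times the mean of its
    three vertex heights. The base is the zero (take-off) level.
    """
    pts = np.column_stack([np.asarray(x, float), np.asarray(y, float)])
    h = np.asarray(h, dtype=float)
    simplices = Delaunay(pts).simplices          # (m, 3) vertex indices
    p = pts[simplices]                           # (m, 3, 2) vertex coordinates
    e1 = p[:, 1] - p[:, 0]
    e2 = p[:, 2] - p[:, 0]
    areas = 0.5 * np.abs(e1[:, 0] * e2[:, 1] - e1[:, 1] * e2[:, 0])
    return float(np.sum(areas * h[simplices].mean(axis=1)))
```

Because the result is exact for the piecewise-linear surface, any error comes from the sampling density and positioning accuracy rather than from the integration step.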

Figure 20.

(a) Real stockpile in an open area within the cement plant, and (b) reconstructed surface of the stockpile.

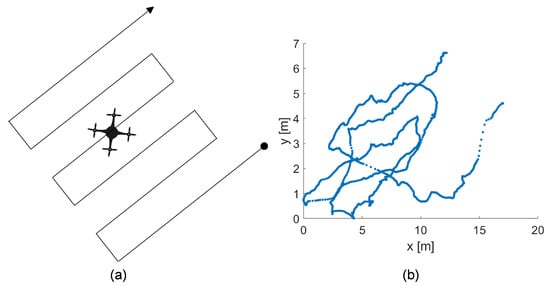

For the outdoor mission over the coal pile, the drone was localised using an average of fourteen satellites. However, during the semi-confined space mission to map the gypsum pile, the average number of satellites detected by the GPS dropped to eight. The real and reconstructed piles from this mission are shown in Figure 21. This reduction in the number of satellites degraded the GPS positioning and, inevitably, the accuracy of drone localisation. Additionally, the metal sheets from which the shed is made may have further degraded GPS positioning [95]. Figure 21b shows a reconstructed surface of the pile using the estimated pile height data with the planned flight trajectory (not the flight trajectory measured by GPS). In contrast, Figure 21c shows the surface of the pile generated using the flight trajectory from the GPS readings, which reveals the imprecision of applying the method used for the outdoor pile to the pile within the semi-confined space. Figure 22 further illustrates this imprecision: the planned flight trajectory (a zig-zag trajectory), shown from above in Figure 22a, is not well reproduced by the flight trajectory recorded from the GPS coordinates in Figure 22b. Despite these issues, reconstructing the pile with the planned trajectory still yielded an accurate volume estimate. The estimated volume of the pile was 24.4 m³, which is remarkably close to the actual pile volume. The actual volume was inferred from the fact that the pile was dumped on the testing day from a 30-tonne capacity dump trailer with a maximum volume capacity of 25 m³ [96]. As such, the error in the estimated volume of the reconstructed pile in Figure 21b is −2.4%, which is reasonable. In contrast, the error in the estimated volume of the reconstructed pile with imprecisions shown in Figure 21c is −18%.
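The quoted −2.4% is consistent with a signed relative error computed against the 25 m³ reference volume. The formula below is our reading of how that figure was obtained, not one stated explicitly in the study:

$$\varepsilon = \frac{V_{\mathrm{est}} - V_{\mathrm{ref}}}{V_{\mathrm{ref}}} \times 100\% = \frac{24.4\ \mathrm{m^3} - 25\ \mathrm{m^3}}{25\ \mathrm{m^3}} \times 100\% = -2.4\%$$

A negative value therefore indicates that the reconstructed pile underestimates the reference volume.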

Figure 21.

(a) A small pile of gypsum located in a semi-confined space. (b) Reconstructed surface of the small pile using the estimated pile height and planned trajectory data; the volume was estimated with good accuracy (error of −2.4%). (c) Reconstructed surface of the pile using GPS position readings, showing imprecisions due to reduced GPS positioning resolution (volume error of −18%).

Figure 22.

(a) Top view sketch of the planned flight trajectory (a zig-zag trajectory). (b) The recorded flight trajectory from GPS coordinates.

5. Cost-Benefit Analysis

Cost-effectiveness is a term generally used to characterise the point at which the resources invested in a solution and the value derived from that solution are optimised. As valuable as this line of reasoning is, it has always been considered controversial where OSH issues are concerned. On the one hand, it is often argued that no price is too high for ensuring good OSH practices within workplaces, especially when the frequency of exposure to inherent hazards (as well as their consequences) is very significant. On the other hand, there is always a limit to which current industrial practices can be stretched to adequately eliminate, substitute, or isolate every industrial hazard. Rather than attempting to place a price tag on OSH, the cost-benefit analysis performed here compares the proposed solution with comparable solutions within the existing body of knowledge, based on crucial pre-defined criteria. The criteria considered for this comparative analysis are the frequency of exposure to the hazard (or frequency of the task), the average number of workers exposed, environmental complexity (e.g., poor visibility, dust-laden air, high temperatures, surface irregularity, humidity, etc.), accuracy level, the impact of the task on operation, and the cost of the solution. Table 8 presents the current study’s cost breakdown, including the drone, along with other requirements such as man-hours, personal protective equipment, and transportation. Table 9 presents the cost-benefit priority factors (CPFs) for selected drone-assisted solutions within the existing literature and for the solution presented in the current study, while Table 10 defines the individual ranking regimes for the considered comparison criteria. Note that the cost of each solution presented in Table 9 only includes the drone platform and the sensors required for the mapping and/or inspection missions.
The CPFs for the selected solutions were then computed as the ratio of the product of the ranks for all criteria to the sum of the maximum ranks for each criterion. The numerator follows the reasonably standard approach for estimating risk priority numbers (RPNs). However, owing to the number of criteria considered here, it was deemed appropriate to normalise the individual CPFs to reduce their data range.
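The CPF definition above can be written as a one-line calculation. In this sketch the function name and the example ranks are hypothetical (Table 10 is not reproduced here); it simply divides the product of a solution's ranks by the sum of the maximum available ranks.

```python
from math import prod

def cost_benefit_priority_factor(ranks, max_ranks):
    """CPF = (product of the ranks over all criteria) / (sum of the maximum ranks).

    ranks[k] is a solution's rank on criterion k and max_ranks[k] is the
    highest rank available for that criterion (from a chart such as Table 10).
    """
    if not ranks or len(ranks) != len(max_ranks):
        raise ValueError("need one rank per criterion")
    return prod(ranks) / sum(max_ranks)
```

For instance, with six criteria each ranked out of a hypothetical maximum of 5, ranks of `[5, 4, 5, 5, 4, 4]` give 8000 / 30 ≈ 266.7. Because the numerator is a product, a single low rank pulls the CPF down sharply, which is the same behaviour that makes RPN-style scores sensitive to weak criteria.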

Table 8.

Cost breakdown for the current study as at the time of data collection in 2020.

Table 9.

Comparative analysis of drone-assisted solutions under different scenarios. Rank definitions are provided in Table 10. For consistency, the “approximate cost” column considers the drone price only. CPF values, in the last column, are computed as the ratio of the product of the ranks for all criteria to the summation of the maximum ranks for each criterion.

Table 10.

Criteria ranking reference chart.

The approach proposed in the current study clearly exhibits the highest CPF (333), owing to its distinctive combination of relatively high ranks across most of the considered criteria. While solutions related to underground mine inspections, rescue from collapsed buildings, or sewer system inspections may involve comparable environmental complexities, they are not expected to be executed frequently, since these are rare occurrences. Similarly, solutions related to the localisation and mapping of radiation sources recorded the maximum available rank with regard to impact on operation, whereas the current study recorded 4. This is because, whilst errors associated with cement stockpile estimations directly affect quality and cost, they are unlikely to stop plant operations immediately. In contrast, even the slightest radiation leakage would most likely stop operations and possibly lead to evacuations.

6. Concluding Remarks and Future Work

Confined spaces are notoriously dangerous and have historically impeded the occupational safety and health (OSH) performance of several organisations within high hazard industries (HHIs). OSH statistics from prominent regulators, including the UK Health and Safety Executive (HSE), have shown that several industrial fatalities, injuries, and near misses are attributable to confined space incidents. Besides their undeniably critical impact on OSH performance, routine inspection and monitoring of confined spaces (and the production materials they hold) through conventional mechanisms are costly, inaccurate, and time-consuming. This is mainly because the dominant approaches entail routine physical interventions by representatives of several departments (e.g., maintenance, process control, production, quality, safety, etc.). The tasks of confined space inspection/monitoring, as well as the volumetric estimation of their contents, are further complicated by extremely harsh environmental conditions (including high temperatures, dust-laden air, humidity, poor visibility, etc.) and material unevenness. This is perhaps why the volumes of typical production materials (e.g., clinker, cement, raw meal, kiln dust, solid fuels, etc.) within the silos, hoppers, and sheds of cement manufacturing plants are often only approximated during scheduled stock analysis.