1. Introduction

Power networks are critical infrastructure that transport electricity from power suppliers to consumers. The management and maintenance of power transmission lines (PTLs) are essential for a stable power supply [1]. PTL inspection has two main goals. The first is to check regularly for component defects, which prevents malfunctions such as power failures caused by component breakage [2]. The second is to detect potential threats in the surroundings, especially vegetation encroachment, which can cause power outages or even forest fires if vegetation comes into contact with PTLs. However, traditional inspection methods rely heavily on visual observation or manual analysis of aerial photos and videos, which is inefficient and depends on the inspector's experience [3]. Moreover, PTLs often run through harsh environments or high mountainous areas that are dangerous and difficult for inspectors to reach [4,5]. Therefore, inspecting PTLs over large areas is a challenging task. Recently, light detection and ranging (LiDAR) technology has become an efficient and accurate inspection solution, because it rapidly acquires spatial geometric data in the form of 3D point clouds and is not affected by lighting conditions [6,7]. Aerial images captured by traditional methods lack this information. Point clouds, by contrast, record multiple echoes, intensity and coordinates for each point, so the 3D surface information of objects (e.g., geometric structure and semantic information) can be described directly. PTL corridor inspection can then be accomplished through a series of processes: extraction or classification of PTLs, modelling, and risk assessment.

Depending on the platform, LiDAR systems are mainly divided into two types: airborne and terrestrial [2]. For PTL inspection, each platform has its own advantages and limitations. Airborne LiDAR can obtain point clouds with relatively uniform density over large transmission line corridors regardless of terrain conditions, reaching areas that are hard for vehicles or workers to access [8,9]. Airborne LiDAR systems (ALS) usually integrate a LiDAR sensor, a GPS (Global Positioning System) unit and an INS (inertial navigation system) unit. They can be carried on a manned aircraft (e.g., a helicopter), a fixed-wing unmanned aerial vehicle (UAV) or a rotary-wing UAV. Manned aircraft LiDAR is restricted by expensive flight costs and strict airspace authorization requirements. UAV LiDAR can cover a wide area, and its spatial resolution is relatively high owing to its lower flight altitude and speed. Rotary-wing UAV LiDAR can acquire high-resolution point clouds at close range. It also offers flexibility and has less strict take-off and landing requirements. These properties make it an economical PTL inspection solution and the best choice for small- and medium-sized enterprises and ordinary consumers. Terrestrial LiDAR systems, such as mobile laser scanning (MLS) systems, integrate LiDAR, GPS, charge-coupled device (CCD) and DMI units. MLS is typically used to acquire PTL point clouds only when PTL corridors are located in no-fly zones or urban areas. MLS point density decreases with distance from the scanner, which can leave the acquired PTL point clouds broken and incomplete [10,11].

Research on PTL inspection has mainly focused on power element detection [9,10,12,13,14,15,16,17,18,19], PTL and pylon reconstruction [20,21,22,23,24,25], and safety analysis and simulation [1,7,26,27,28,29]. Extraction is the basis of reconstruction and analysis, so the accuracy of PTL extraction determines how effectively PTLs can be reconstructed and safety hazards recognized. Thus, PTL classification has received much attention. In recent years, PTL extraction methods have been widely studied using optical images [4,7,30,31,32], thermal images [33] and point clouds [9,15,28,34] acquired from different platforms. In high-voltage electric utilities, thermal images are used to detect electrical faults but not for 3D reconstruction [35]. Owing to their high resolution and low cost, optical images are widely applied in PTL extraction. The Hough Transform is a popular method for extracting PTLs from images. Nasseri et al. detected PTLs by using the Hough Transform and a particle filter [36]. Song et al. proposed a sequential local-to-global algorithm based on morphological filtering [30]. Fryskowska presented a wavelet-based method for processing data acquired with a low-cost UAV camera [37]. However, the accuracy of these methods is unstable and depends on the quality of the acquired images, which is susceptible to weather conditions.
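The Hough Transform mentioned above can be illustrated with a minimal Python sketch. It applies to edge pixels in an image and, as in [43], to PTL candidate points projected onto the XOY plane. Each 2D point votes into a (rho, theta) accumulator using the normal form rho = x cos(theta) + y sin(theta), and the sketch returns the most-voted line. The function name `hough_best_line`, the 1° angle step and the unit rho resolution are illustrative assumptions. This is not the implementation used in [36] or [43].

```python
import math
from collections import Counter


def hough_best_line(points, theta_steps=180, rho_res=1.0):
    """Return (rho, theta_deg, votes) of the strongest line in a 2D point set.

    Each point (x, y) votes for every line x*cos(theta) + y*sin(theta) = rho
    passing through it, with theta sampled in [0, 180) degrees and rho
    quantized to multiples of rho_res.
    """
    acc = Counter()
    for x, y in points:
        for t in range(theta_steps):
            theta = math.pi * t / theta_steps
            rho = x * math.cos(theta) + y * math.sin(theta)
            acc[(round(rho / rho_res), t)] += 1
    (rho_bin, t), votes = acc.most_common(1)[0]
    return rho_bin * rho_res, 180.0 * t / theta_steps, votes


# A vertical "wire" at x = 5 plus two scattered noise points.
pts = [(5.0, float(y)) for y in range(100)] + [(30.0, 2.0), (12.0, 70.0)]
print(hough_best_line(pts))  # (5.0, 0.0, 100)
```

In practice the accumulator is searched for several peaks rather than one, since a corridor usually contains multiple parallel conductors.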

Many point-cloud algorithms for power-line extraction have been developed, and most of them can be divided into three steps: pre-processing, power-line extraction and refinement. Pre-processing optimizes the captured data to reduce interference from non-PTL points and to improve the efficiency of subsequent steps. Because PTLs are suspended at a certain height above the ground, ground points are first separated by ground filtering techniques, such as cloth simulation [38] and TIN densification filtering [39]. Candidate points are then selected from the non-ground points by height difference filtering [9,12,15,21]. However, ground filtering is time-consuming, and in most of these methods the pre-processing results are affected by the terrain. Chen et al. [26] and Awrangjeb et al. [13] determined the extent of PTLs using pylon location information. However, pylons come in many structural types, so complex parameters are required to detect them, and these algorithms are therefore highly complex. To eliminate the influence of point density differences between collection platforms, Zhang et al. [12] and Jung et al. [40] used voxel-based subsampling to balance point cloud density. However, such data sampling may cause a loss of accuracy.
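Two of the pre-processing ideas discussed above can be sketched in a few lines of Python: height-difference filtering of candidate points and voxel-based subsampling. The sketch relies on a deliberate simplification. The ground height of each horizontal grid cell is approximated by the cell's lowest point, which stands in for a proper ground filter such as cloth simulation [38] or TIN densification [39]. The cell size, the voxel size and the 10 m height threshold are hypothetical values. The voxel step keeps the centroid of each occupied voxel, which is only one of several possible subsampling rules.

```python
import math
from collections import defaultdict


def height_filter(points, cell=5.0, min_height=10.0):
    """Keep points at least min_height above the lowest point of their XY cell.

    The per-cell minimum is a crude ground estimate used here only for
    illustration; real pipelines use a dedicated ground filter.
    """
    ground = {}
    for x, y, z in points:
        key = (math.floor(x / cell), math.floor(y / cell))
        ground[key] = min(z, ground.get(key, z))
    return [
        (x, y, z)
        for x, y, z in points
        if z - ground[(math.floor(x / cell), math.floor(y / cell))] >= min_height
    ]


def voxel_subsample(points, voxel=0.5):
    """Replace all points falling in the same cubic voxel by their centroid."""
    buckets = defaultdict(list)
    for p in points:
        buckets[tuple(math.floor(c / voxel) for c in p)].append(p)
    return [
        tuple(sum(c) / len(pts) for c in zip(*pts)) for pts in buckets.values()
    ]


# Ground points near z = 0 and a densely sampled conductor at z = 20 m.
ground_pts = [(float(x), float(y), 0.1 * (x % 3)) for x in range(10) for y in range(10)]
wire_pts = [(0.1 * i, 4.0, 20.0) for i in range(100)]
candidates = height_filter(ground_pts + wire_pts)
print(len(candidates))                   # 100 (only conductor points survive)
print(len(voxel_subsample(candidates)))  # 20 voxels along the 10 m wire
```

The per-cell minimum fails on steep slopes and under dense canopy. This is one reason dedicated ground filters are preferred despite their cost.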

Feature determination and optimization is critical to PTL extraction accuracy. Feature-based filters are frequently applied, using eigenvalue features [9,10,12], elevation difference features [13,15] and density features [10,21]. Guan et al. [19] detected PTLs from MLS data by combining a height filter, a density filter and a shape filter. However, low point density and occlusion by other objects degraded the performance of Guan's method. To address the accuracy loss caused by unevenly distributed or occluded PTL points, Fan used a hierarchical clustering method to extract PTLs across various gap situations [41]. Point-based features are widely applied to extract PTLs. They are computed by analyzing the properties of each point within a searched neighborhood. However, differences in corridor terrain and in data from different platforms can affect feature stability. Zhang et al. [12] clustered point clouds using a voxel data structure and extracted PTLs using eigenvalue and distribution features. This combination of multiple features improves classification robustness to some extent, which motivated our work. However, Zhang's study did not analyze feature selection and weighting in depth. Some methods project point clouds into 2D image structures and extract PTLs efficiently with existing computer vision techniques [42]. Jaehoon et al. [36] combined 2D image features and 3D features to extract power lines. They also compared various algorithms to show that their method achieved better accuracy and efficiency. In Axelsson's study [43], power lines were detected in the horizontal XOY plane by using the Hough Transform and RANSAC algorithms. Munir et al. combined 2D grid and 3D point-based structures to extract individual conductors [44]. However, 2D projection methods cannot handle interference points in the vertical direction, and conversion between 2D images and 3D points may reduce extraction accuracy. Meanwhile, existing algorithms have not studied in depth how to select features or how to quantify the importance of different features for optimal extraction.
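Eigenvalue features such as those cited above are usually derived from the covariance matrix of a point's neighborhood. The minimal Python sketch below computes the sorted eigenvalues l1 >= l2 >= l3 of that 3x3 symmetric matrix in closed form, using the standard trigonometric solution. It then returns two widely used descriptors: linearity (l1 - l2)/l1 and surface variation l3/(l1 + l2 + l3). These textbook definitions and the function names are illustrative assumptions. The sketch also assumes the neighborhood has already been found (e.g., by a k-nearest-neighbor or radius search). The feature definitions used later in this paper may differ.

```python
import math


def covariance(points):
    """Population covariance matrix (3x3, as nested lists) of 3D points."""
    n = len(points)
    mean = [sum(p[i] for p in points) / n for i in range(3)]
    return [
        [sum((p[i] - mean[i]) * (p[j] - mean[j]) for p in points) / n for j in range(3)]
        for i in range(3)
    ]


def sym3_eigenvalues(a):
    """Eigenvalues of a symmetric 3x3 matrix, sorted descending (closed form)."""
    p1 = a[0][1] ** 2 + a[0][2] ** 2 + a[1][2] ** 2
    if p1 == 0.0:  # matrix is already diagonal
        return sorted((a[0][0], a[1][1], a[2][2]), reverse=True)
    q = (a[0][0] + a[1][1] + a[2][2]) / 3.0
    p2 = sum((a[i][i] - q) ** 2 for i in range(3)) + 2.0 * p1
    p = math.sqrt(p2 / 6.0)
    # B = (A - q*I) / p; its determinant gives the angle of the solution.
    b = [[(a[i][j] - (q if i == j else 0.0)) / p for j in range(3)] for i in range(3)]
    det_b = (
        b[0][0] * (b[1][1] * b[2][2] - b[1][2] * b[2][1])
        - b[0][1] * (b[1][0] * b[2][2] - b[1][2] * b[2][0])
        + b[0][2] * (b[1][0] * b[2][1] - b[1][1] * b[2][0])
    )
    r = max(-1.0, min(1.0, det_b / 2.0))  # clamp against rounding error
    phi = math.acos(r) / 3.0
    l1 = q + 2.0 * p * math.cos(phi)
    l3 = q + 2.0 * p * math.cos(phi + 2.0 * math.pi / 3.0)
    l2 = 3.0 * q - l1 - l3  # trace equals the sum of the eigenvalues
    return [l1, l2, l3]


def eigen_features(neighborhood):
    """Return (linearity, surface_variation) of a point's neighborhood."""
    l1, l2, l3 = (max(v, 0.0) for v in sym3_eigenvalues(covariance(neighborhood)))
    total = l1 + l2 + l3
    if total == 0.0:  # degenerate: all points coincide
        return 0.0, 0.0
    return (l1 - l2) / l1, l3 / total


wire = [(float(i), 2.0 * i, 0.0) for i in range(5)]                     # collinear
roof = [(float(x), float(y), 0.0) for x in range(3) for y in range(3)]  # planar
print(eigen_features(wire))  # linearity close to 1, surface variation close to 0
print(eigen_features(roof))  # (0.0, 0.0)
```

Conductor points give linearity near 1, whereas planar patches such as roofs give low linearity. This contrast is why linearity-type features are common in PTL filters.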

Machine learning is another strategy for PTL extraction. Popular supervised classifiers include JointBoost [45], support vector machine (SVM) [16,46], random forest (RF) [6,37,47] and adaptive boosting (AdaBoost) [16]. Classification precision depends closely on the choice of classifier. Guo et al. [45] used the JointBoost classifier and a graph-cut segmentation algorithm to classify PTLs. Lodha et al. compared the SVM classifier with the AdaBoost classifier and concluded that their extraction performances are similar [14]. Wang et al. [46] compared six classifiers and found random forest the most suitable for extracting PTLs, and Peng et al. [8] reached the same conclusion. Wang et al. also proposed a multi-scale slant cylindrical neighborhood-searching algorithm to extract spatial structural features. They then extracted PTLs from these multi-scale features with a trained SVM classifier [46]. Yang et al. classified PTLs using a random forest optimized by a Markov Random Field [48]. Machine learning methods can achieve excellent extraction accuracy, but unbalanced samples and data gaps can reduce the extraction success rate. Meanwhile, time-consuming sample training makes PTL classification expensive. Performance is also limited by differences in the data (point density, terrain) across platforms. Computing power continues to improve, and deep learning performs well in object recognition, so researchers have become interested in using it to classify transmission line scenes. At present, two deep learning strategies are commonly used for power line scenes. In the first, 3D point clouds are converted into 2D feature images, which are then fed to 2D CNNs for classification [49]. However, transforming unstructured 3D point sets into regular representations can cause a loss of spatial information. The second strategy uses networks such as PointNet [50], PointNet++ [51] and PointCNN [52] to classify transmission line scenes directly. These models depend heavily on training samples and cannot achieve stable classification accuracy across different datasets.

A post-processing refinement step is employed to improve PTL extraction accuracy. Jaehoon et al. [36] rasterized candidate power-line points onto 2D binary images and removed erroneous points using image-based filtering. Zhang et al. [12] and Awrangjeb [13] analyzed the positional relationship between pylons and PTLs in 3D space to filter out false positives. PTLs can also be refined by modelling. For example, PTLs can be fitted with a 3D mathematical model consisting of two parts: an XOY projection plane model and a vertical projection plane (XOZ or YOZ plane) model [21]. Alternatively, a catenary curve model can be applied to reconstruct the PTLs [22]. However, post-processing may cause some power-line points to be lost and also increases processing time.

Overall, much advanced work has been done on PTL extraction. However, limitations remain in generality and efficiency. For most unsupervised methods, extraction accuracy depends heavily on the performance of the pre-processing step. Many algorithms also require prior information, such as vehicle trajectories, sample data, classified pylon point clouds or pylon coordinates, which limits their generality. Some algorithms depend heavily on the stability of their features. In complex environments (e.g., mountains, cities), extraction accuracy decreases because PTLs run close to vegetation or buildings. Popular algorithms extract PTLs through hierarchical multi-rule evaluation of many geometric features, which requires strict input parameters. As a result, manual intervention is necessary to achieve stable results when extracting PTLs from different scenes. To achieve efficient PTL extraction across different platforms and complex scenes, this paper proposes a method based on entropy-weighting feature evaluation (EWFE). The method extracts PTLs efficiently using as few salient geometric features as possible and achieves robust extraction with few parameter adjustments.

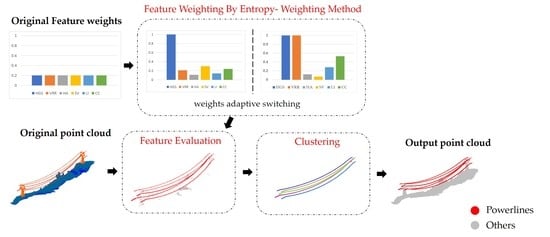

A feature vector is constructed from six salient features (SSFs): Height above Ground Surface (HGS), Vertical Range Ratio (VRR), Horizontal Angle (HA), Surface Variation (SV), Linearity (LI) and Curvature Change (CC). After the features are normalized, their weights are calculated and assigned by the entropy-weighting method. The point clouds are first filtered by the HGS feature, which improves processing efficiency by removing most non-PTL points while losing very few PTL points. Then, PTLs are extracted using the remaining five features, which are evaluated by an adaptive feature-weighting algorithm. Finally, noise is removed by clustering. The overall workflow of the proposed EWFE algorithm is illustrated in Figure 1.
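The entropy-weighting step summarized above can be illustrated with the standard entropy weight method. The minimal Python sketch below works in four steps:

1. Each feature column is min-max normalized.
2. Each normalized column is converted to proportions p_ij.
3. Each feature receives the entropy e_j = -(1/ln n) * sum_i p_ij ln p_ij.
4. Each feature receives the weight w_j = (1 - e_j) / sum_k (1 - e_k).

Features whose values are more concentrated (lower entropy) therefore receive larger weights. A point's score is then the weighted sum of its normalized features. This generic formulation, the function names and the toy data are assumptions. The exact normalization, feature orientation (e.g., whether a feature is inverted so that larger values indicate PTLs) and thresholding used in EWFE may differ from this sketch.

```python
import math


def minmax_columns(rows):
    """Min-max normalize each column of a list of equal-length rows to [0, 1]."""
    cols = list(zip(*rows))
    lo = [min(c) for c in cols]
    hi = [max(c) for c in cols]
    return [
        [(v - l) / (h - l) if h > l else 0.0 for v, l, h in zip(row, lo, hi)]
        for row in rows
    ]


def entropy_weights(norm_rows):
    """Entropy weight of each column of an already normalized matrix."""
    n = len(norm_rows)
    if n < 2:
        raise ValueError("need at least two samples")
    divergences = []
    for col in zip(*norm_rows):
        total = sum(col)
        if total == 0.0:  # constant feature carries no information
            divergences.append(0.0)
            continue
        # 0 * ln(0) is treated as 0 by skipping zero entries.
        e = -sum((v / total) * math.log(v / total) for v in col if v > 0) / math.log(n)
        divergences.append(1.0 - e)
    s = sum(divergences)
    m = len(divergences)
    return [d / s for d in divergences] if s > 0 else [1.0 / m] * m


def weighted_scores(norm_rows, weights):
    """Composite score of each point: weighted sum of its normalized features."""
    return [sum(w * v for w, v in zip(weights, row)) for row in norm_rows]


# Hypothetical feature values for four points: columns = (feature A, feature B).
features = [[0.9, 5.0], [0.8, 1.0], [0.85, 1.0], [0.1, 1.0]]
norm = minmax_columns(features)
w = entropy_weights(norm)
scores = weighted_scores(norm, w)
print(w)       # feature B, dominated by one point, gets the larger weight
print(scores)  # the first point scores highest
```

Because the weights are derived from the data themselves, they adapt to each scene without manual tuning. This is the property that motivates entropy weighting for cross-platform extraction.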

This paper is structured as follows. Section 2 describes the datasets and the proposed method in detail. Section 3 presents experiments that demonstrate the applicability of the proposed detection method. Section 4 discusses the influence of the feature weight distribution and analyzes real-time detection. Finally, Section 5 concludes this work and outlines plans for future work.