A Spatial Variant Motion Compensation Algorithm for High-Monofrequency Motion Error in Mini-UAV-Based BiSAR Systems

Abstract

1. Introduction

2. Method

2.1. High-Frequency Motion Error Signal Model in BiSAR Systems

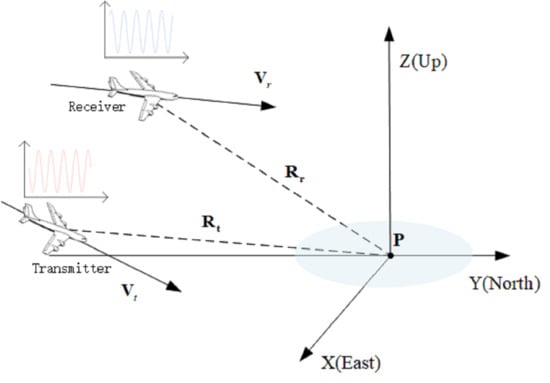

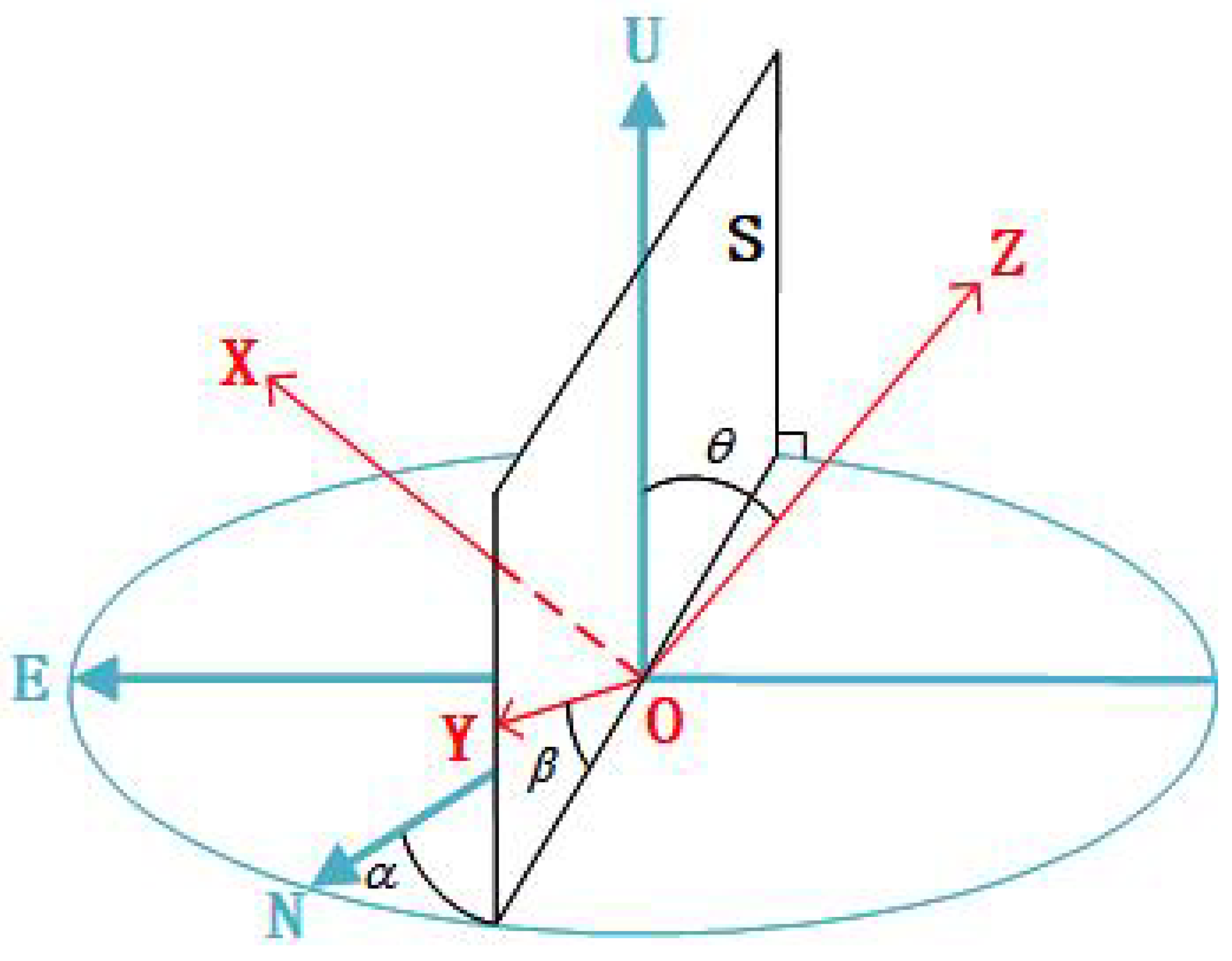

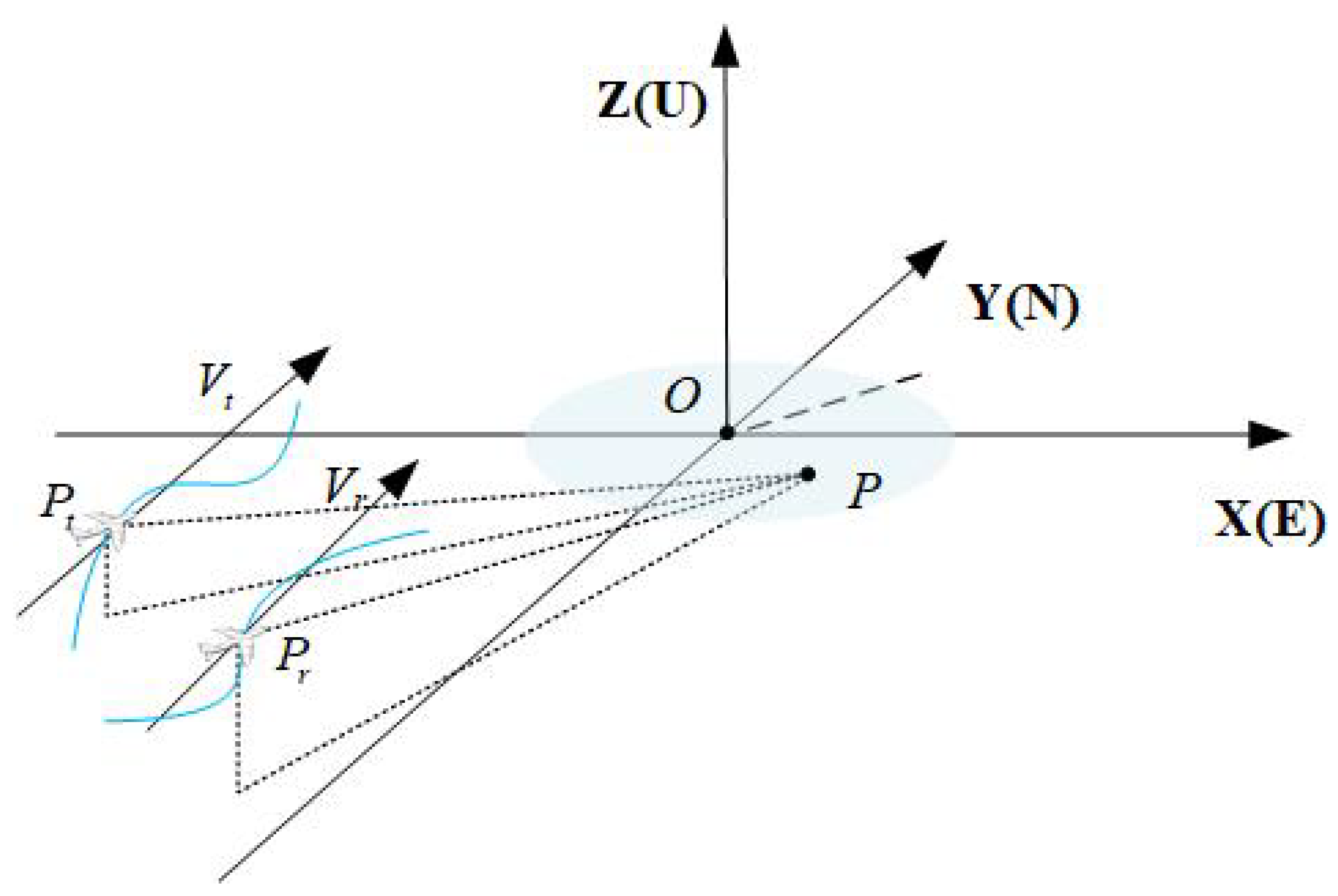

2.1.1. High-Frequency Motion Error Model

2.1.2. High-Frequency Motion Error in BiSAR

2.1.3. System Signal Model

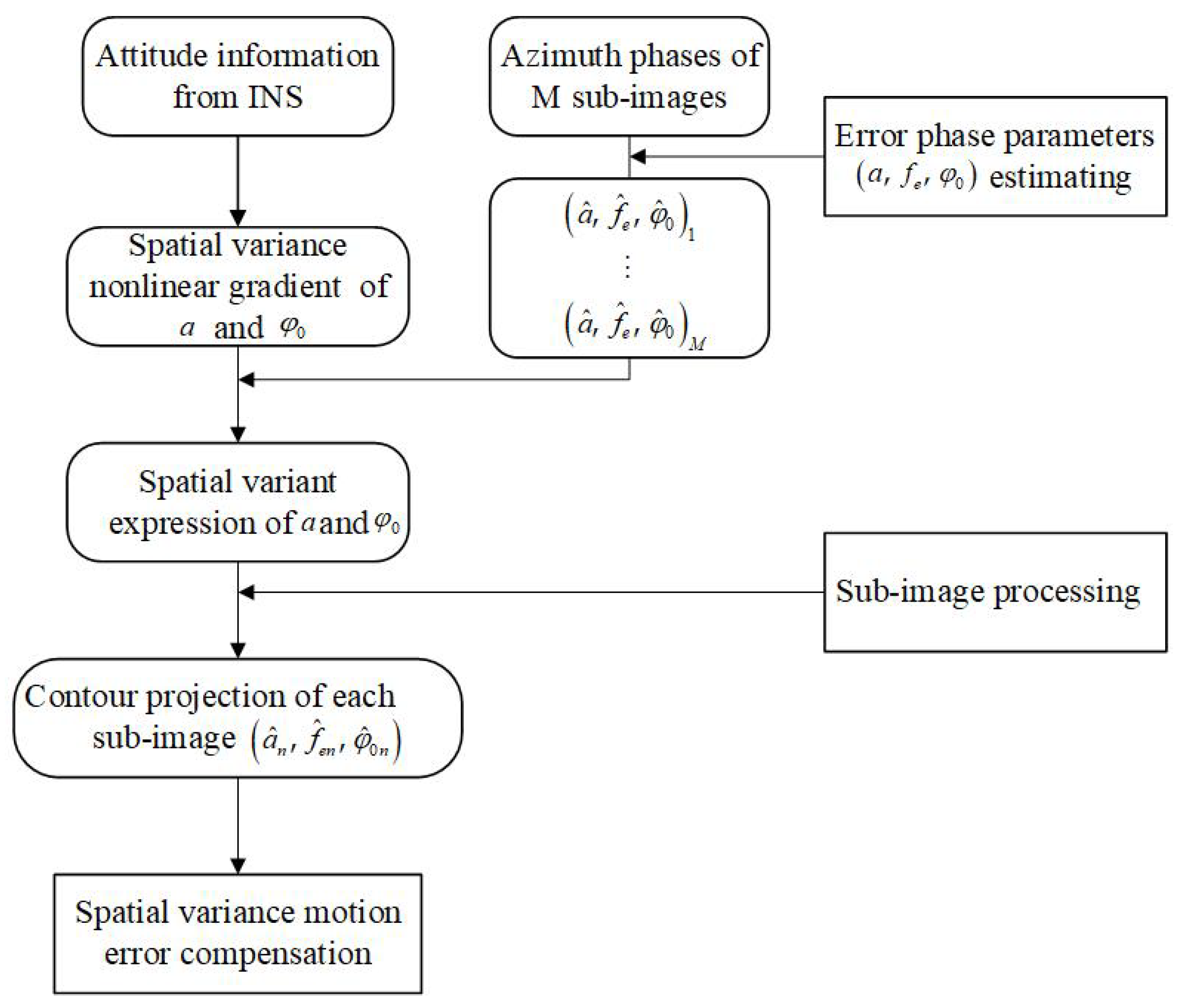

2.2. High-Frequency MOCO for BiSAR

2.2.1. High-Frequency Phase Error Parameters Estimation

2.2.2. Spatial Variant Model Establishment


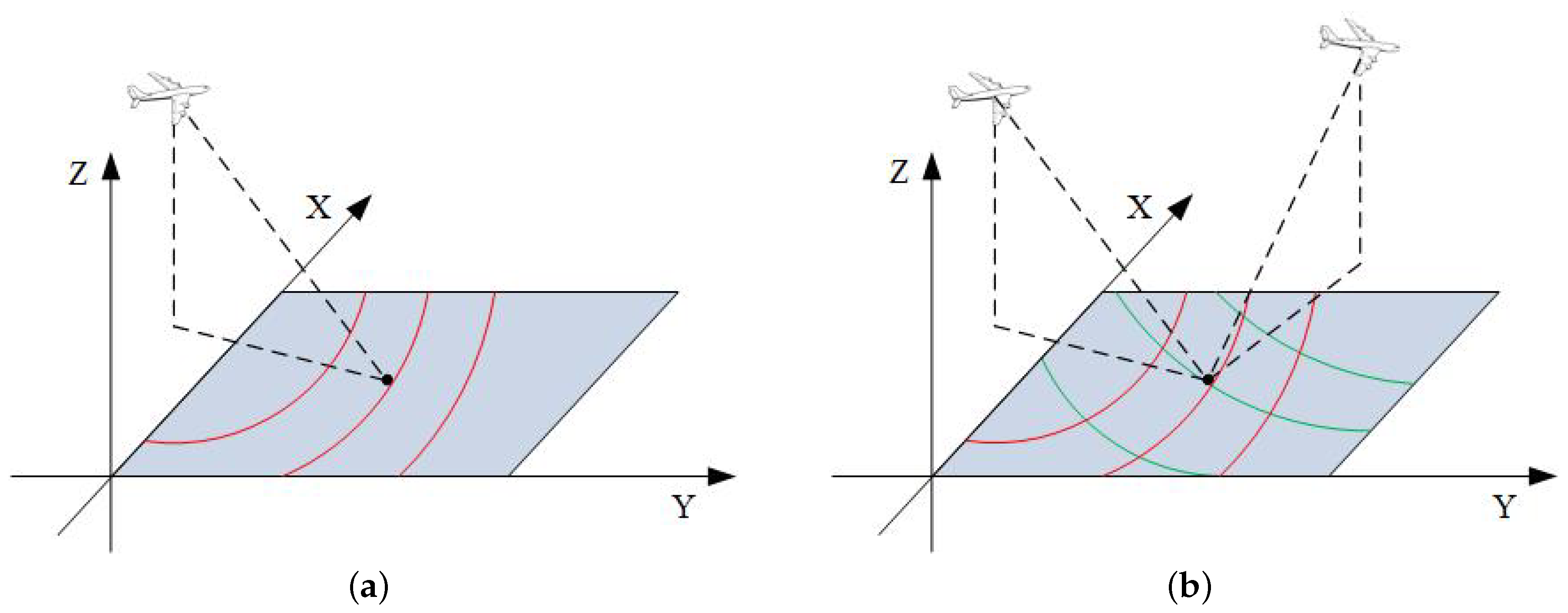

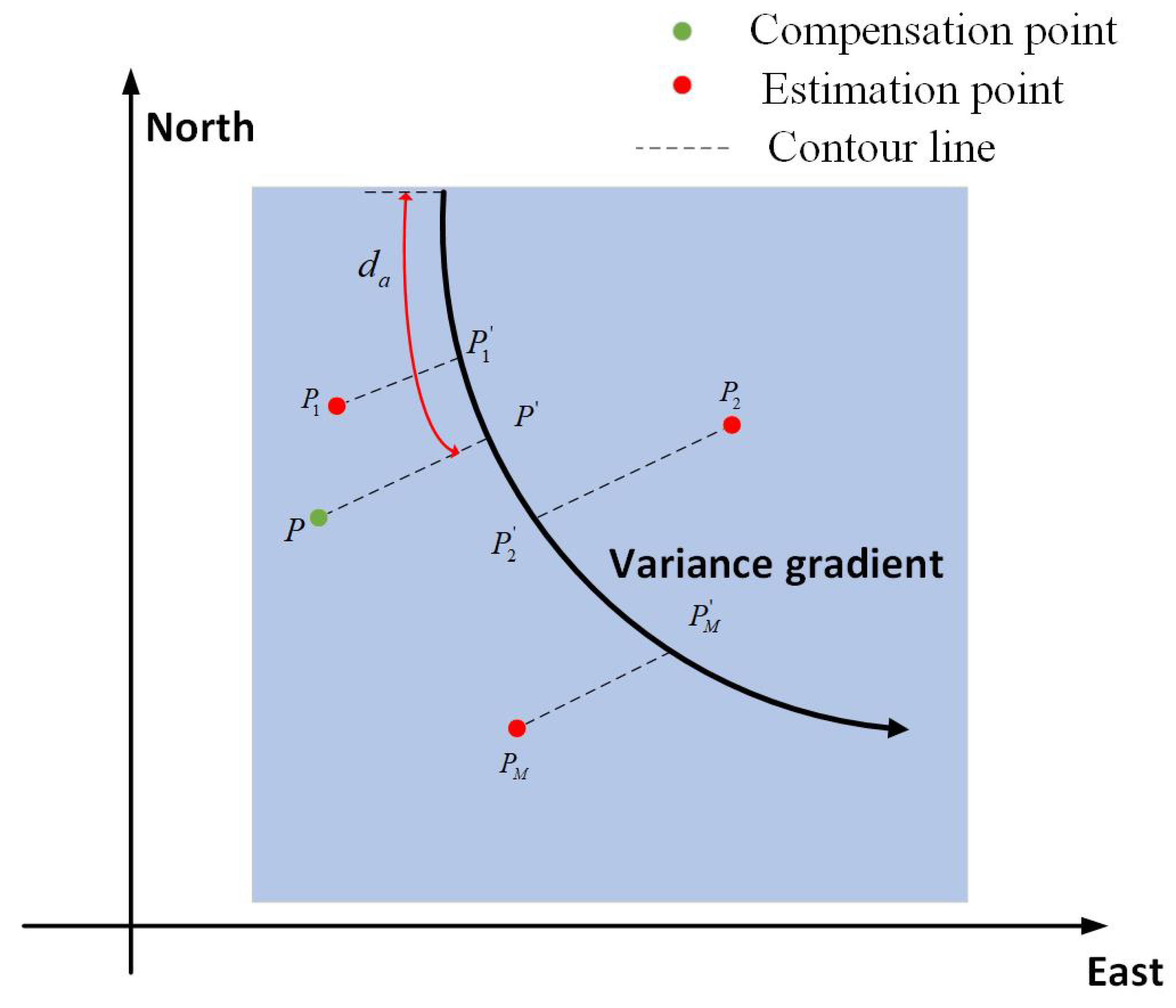

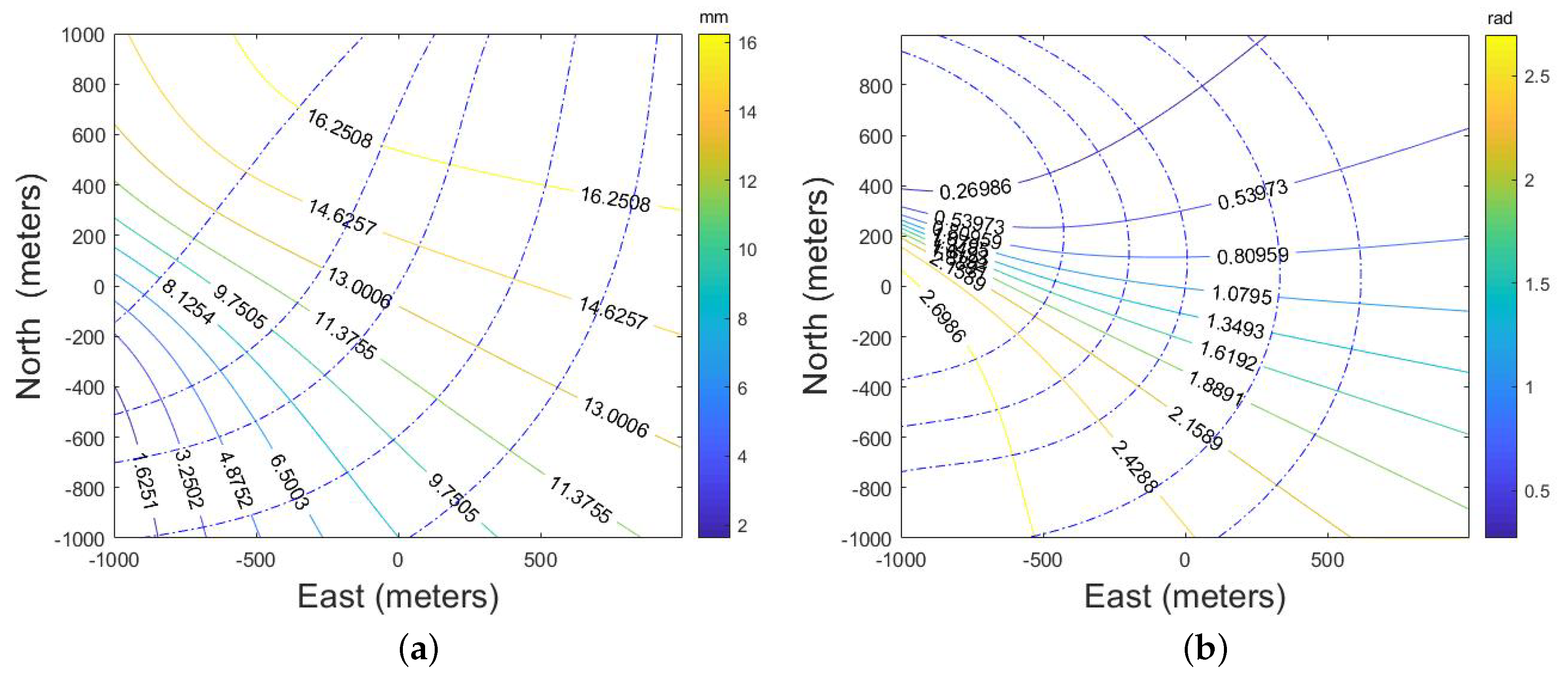

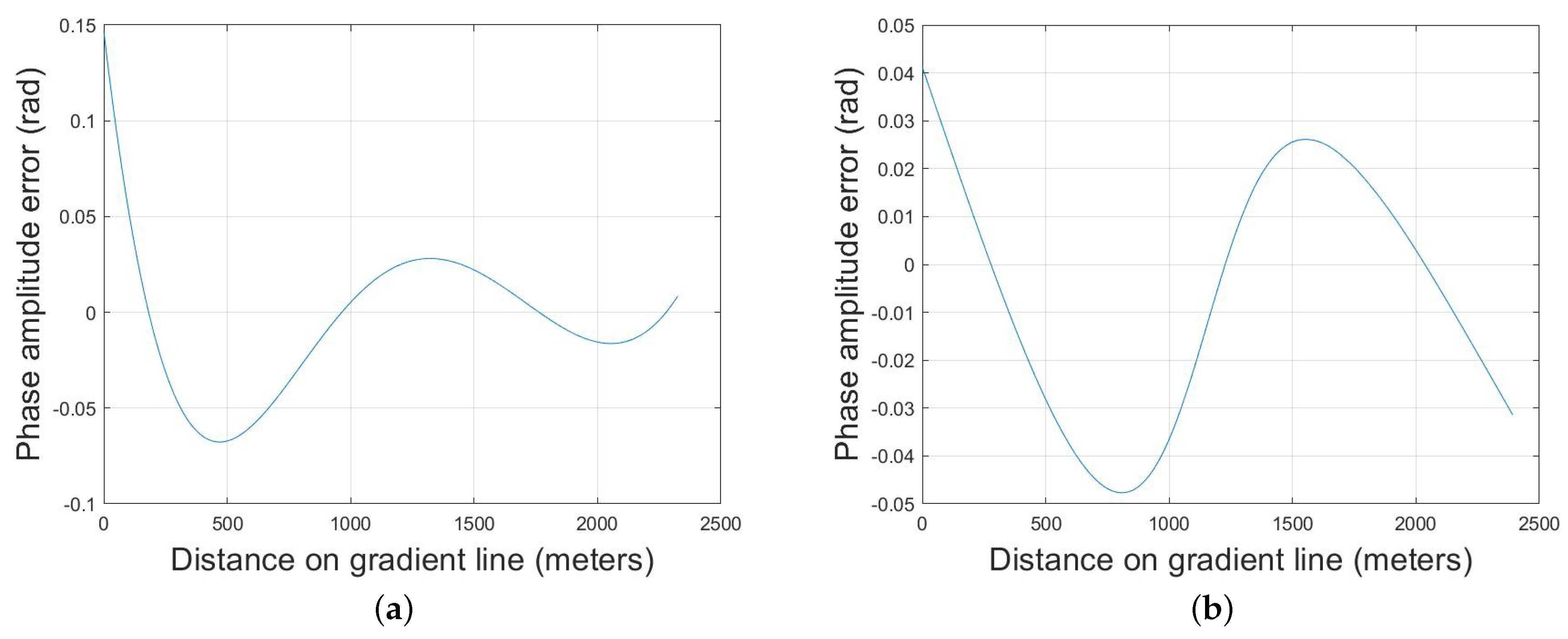

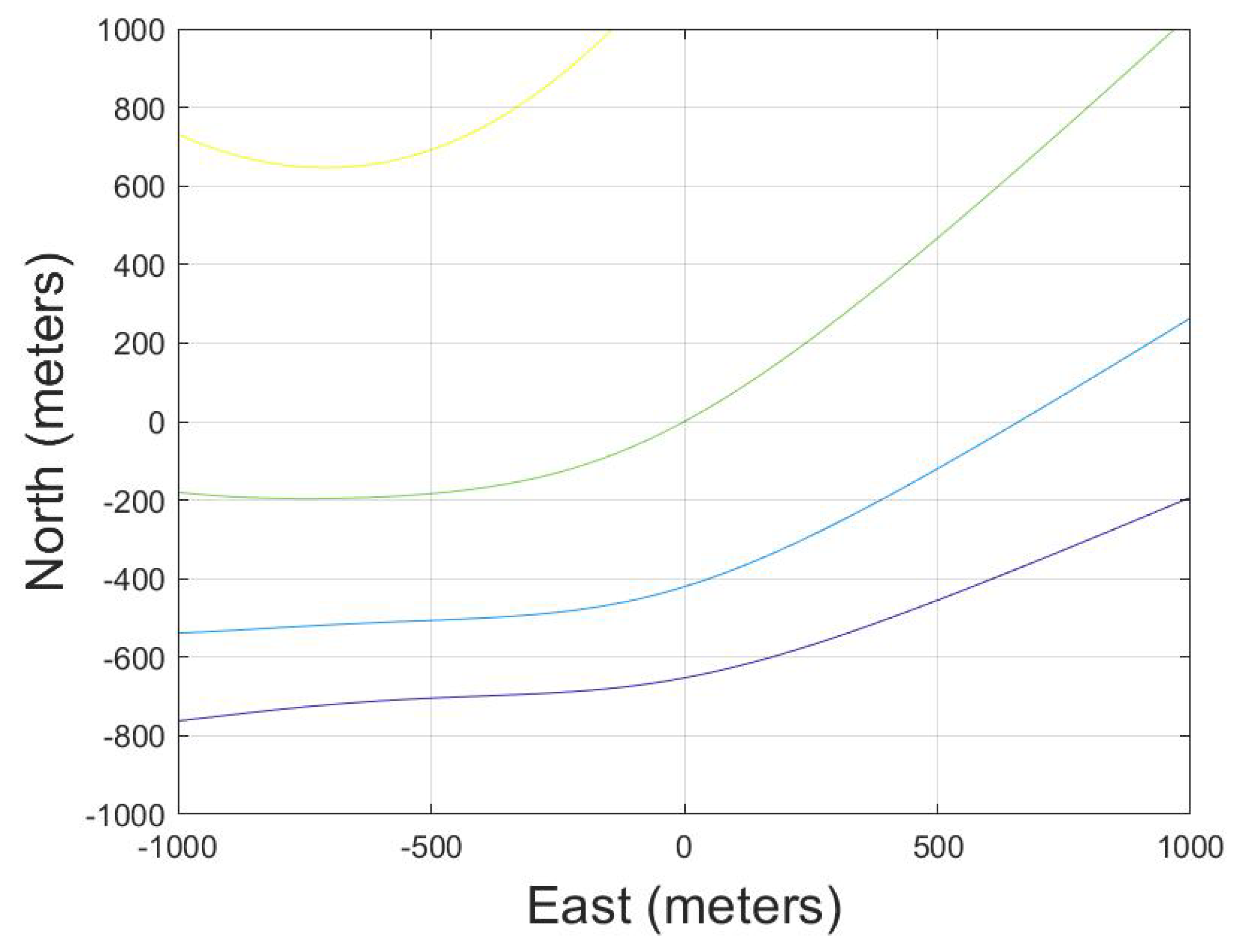

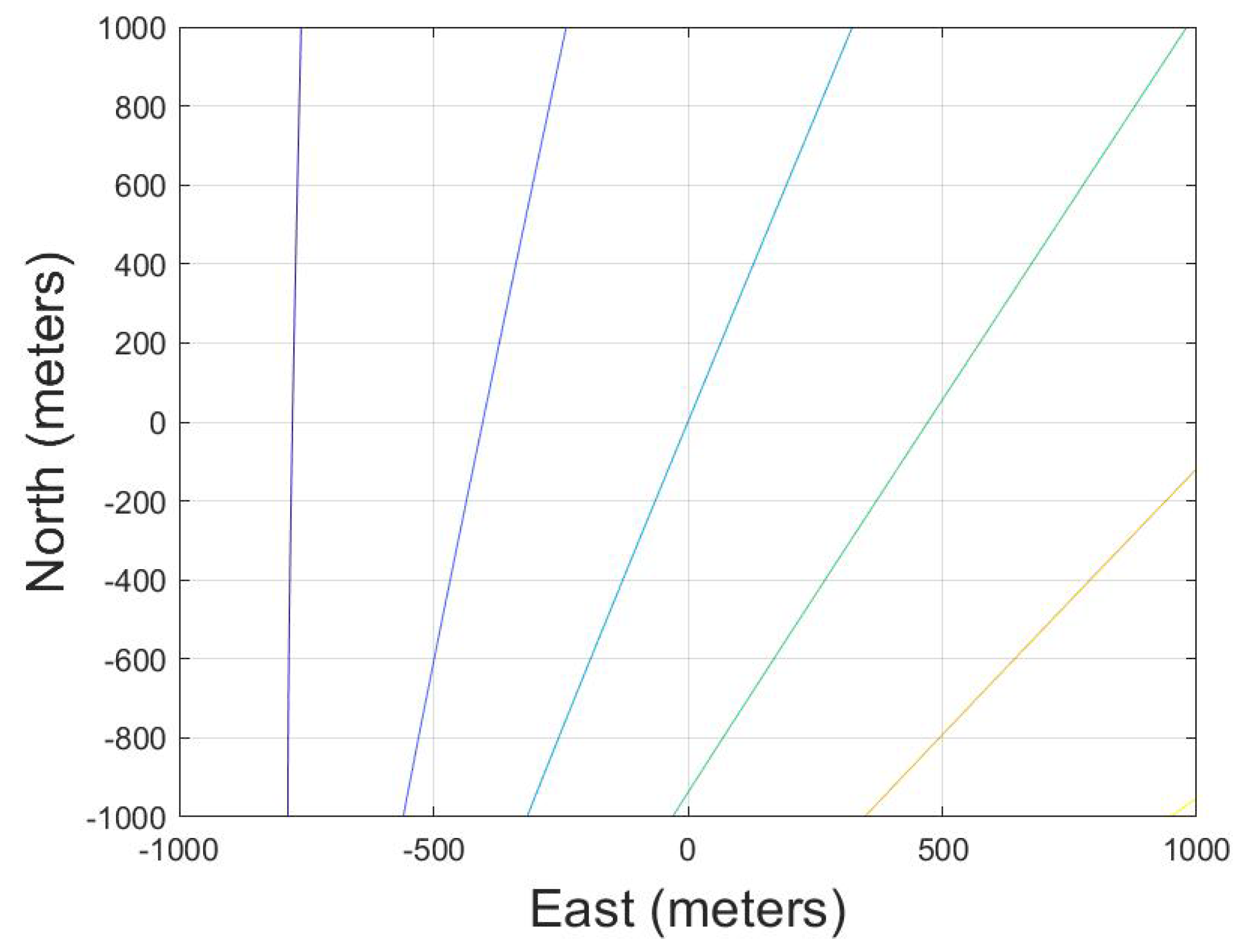

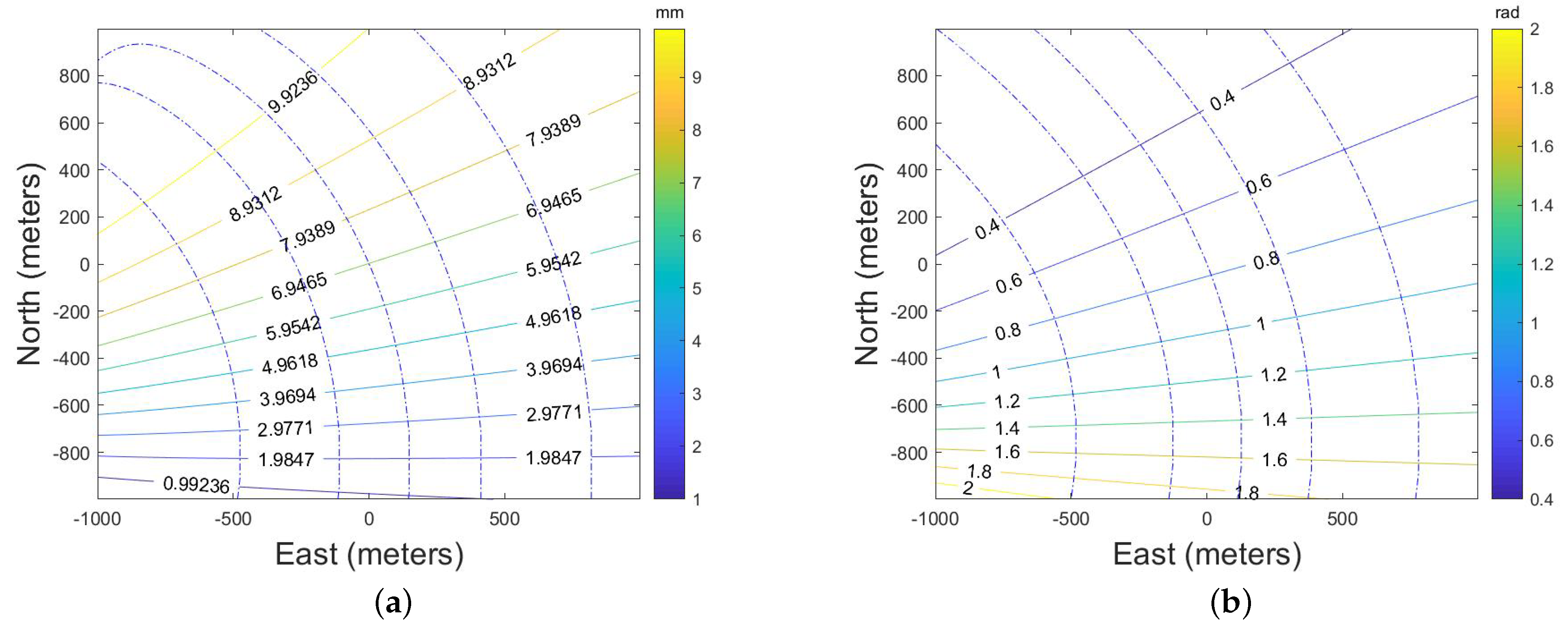

- First, approximate attitude information for the UAV is obtained from the INS. Limited by the accuracy of the INS, the estimated value cannot be used directly for motion error compensation, but it can be used to obtain the approximate direction of the spatial variance of the high-frequency motion error parameters. Based on the system configuration, the quantities needed alongside (7) can be calculated; combining them with (7) gives the approximate amplitude a and initial phase at each position. The diagram is shown in Figure 5: the red line in Figure 5a is a contour of a, and the green line in Figure 5b is a contour of the initial phase. The contour lines change with the system configuration and the error parameters.

- Second, calculate the nonlinear gradient of the amplitude a and the initial phase over the whole scene. At each position, the gradient direction is calculated from the local amplitude and initial phase. The blue line in Figure 5 indicates the nonlinear gradient of the spatial variance. The error parameters vary most rapidly along the direction of the spatial variance gradient. Therefore, a model based on the gradient information describes the spatial variance of the scene well and is more accurate than modeling along the range or azimuth direction. Since the bistatic spatial variance is more complex than that of the monostatic case, the proposed variance model is necessary under the BiSAR condition.

- Third, estimate the parameters of the high-frequency phase error in several subimages. The error parameters are assumed to be the same everywhere on one contour; thus, each target can be projected onto the gradient along its contour line. A diagram of this is shown in Figure 6, which marks the estimation positions and their respective projection points. In this way, M sets of estimation results yield M sets of error parameters along the nonlinear spatial variance gradient.

- Fourth, based on the M sets of estimation results, a and the initial phase can be obtained at each point on the gradient. Here, a second-order fit is selected. For the amplitude, the fit variable is the distance from the projection point to the origin point O of the amplitude gradient; for the initial phase, it is the distance from the projection point to the origin point O of the initial phase gradient. One such distance is shown as the red line in Figure 6 as an example. Once the fit coefficients are calculated, a and the initial phase at each point on the nonlinear gradient are obtained.

- Fifth, divide the whole image into subimages such that the greatest difference in phase error within each subimage is less than the specified threshold. Then, project the center of each subimage onto the nonlinear spatial variant gradient. The high-frequency error parameters of each subimage are the same as those at its projection point on the spatial variant gradient. In Figure 6, the center of one subimage to be compensated is marked, and its error parameters are obtained through its projection point.
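The third to fifth steps above can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions, not the authors' implementation: the gradient is assumed to be available as a sampled polyline whose first vertex is the origin point O, the projection along contour lines is approximated by snapping each point to its nearest polyline vertex, and the function names `project_to_path`, `fit_along_gradient`, and `evaluate_on_gradient` are hypothetical.

```python
import numpy as np


def arc_length(path):
    """Cumulative distance along the polyline, measured from its first vertex (O)."""
    segments = np.linalg.norm(np.diff(path, axis=0), axis=1)
    return np.concatenate(([0.0], np.cumsum(segments)))


def project_to_path(points, path):
    """Distance from O, along the path, of the path vertex nearest to each point.

    Assumption: nearest-vertex snapping stands in for the projection
    along contour lines described in the text.
    """
    s = arc_length(path)
    # Squared distance between every point and every path vertex.
    d2 = ((points[:, None, :] - path[None, :, :]) ** 2).sum(axis=2)
    return s[d2.argmin(axis=1)]


def fit_along_gradient(est_positions, est_values, path):
    """Second-order fit of one error parameter against distance from O.

    Needs at least three estimation positions (M >= 3).
    Returns coefficients ordered from the quadratic term down.
    """
    d = project_to_path(est_positions, path)
    return np.polyfit(d, est_values, 2)


def evaluate_on_gradient(centers, path, coefficients):
    """Error parameter assigned to each subimage center via its projection."""
    d = project_to_path(centers, path)
    return np.polyval(coefficients, d)
```

Because the text describes separate gradients for the amplitude and the initial phase, the sketch would be called once per parameter, each time with its own `path`. A finer polyline sampling reduces the snapping error of the nearest-vertex approximation.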

2.2.3. High-Frequency Motion Error Compensation

3. Experiment and Results

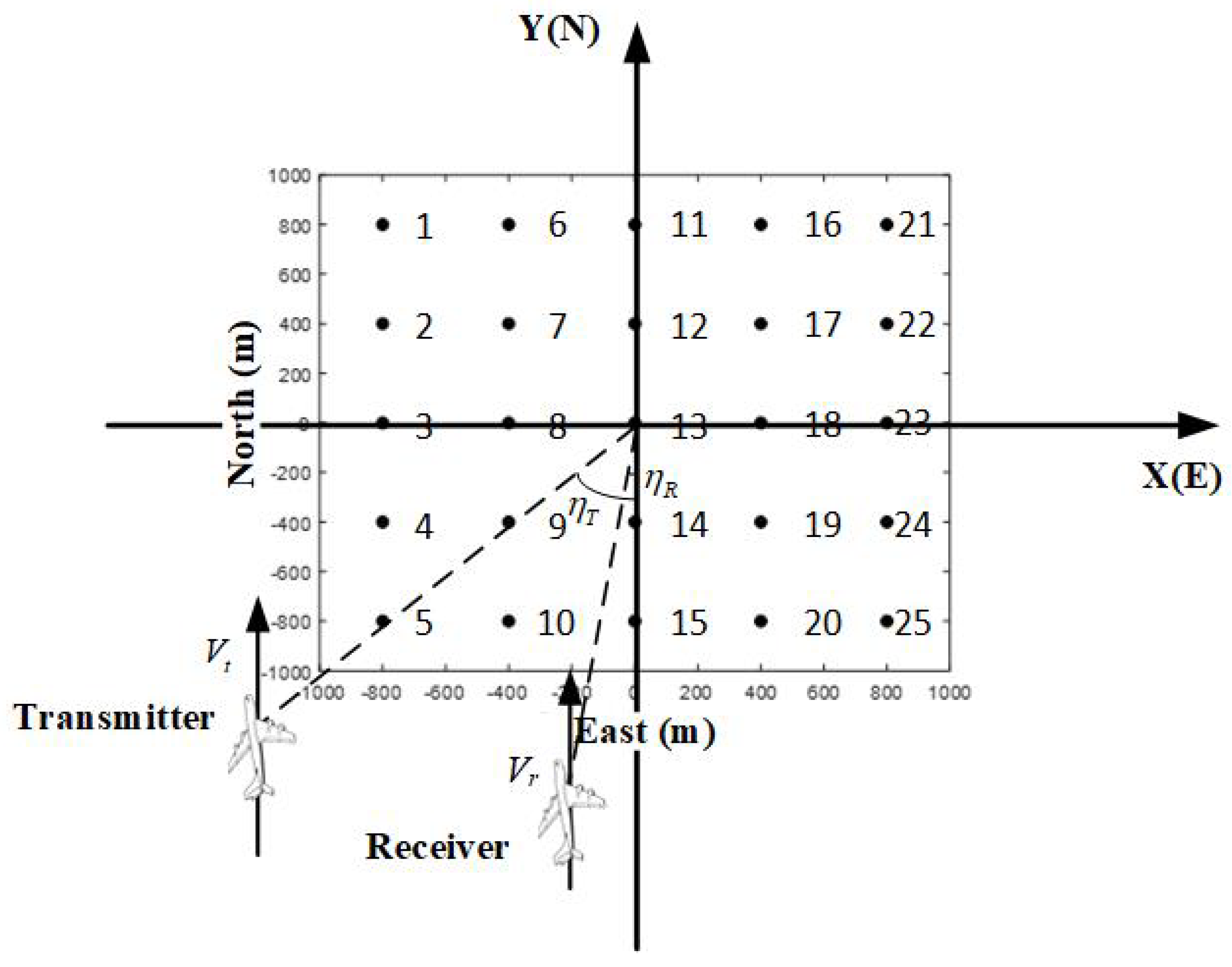

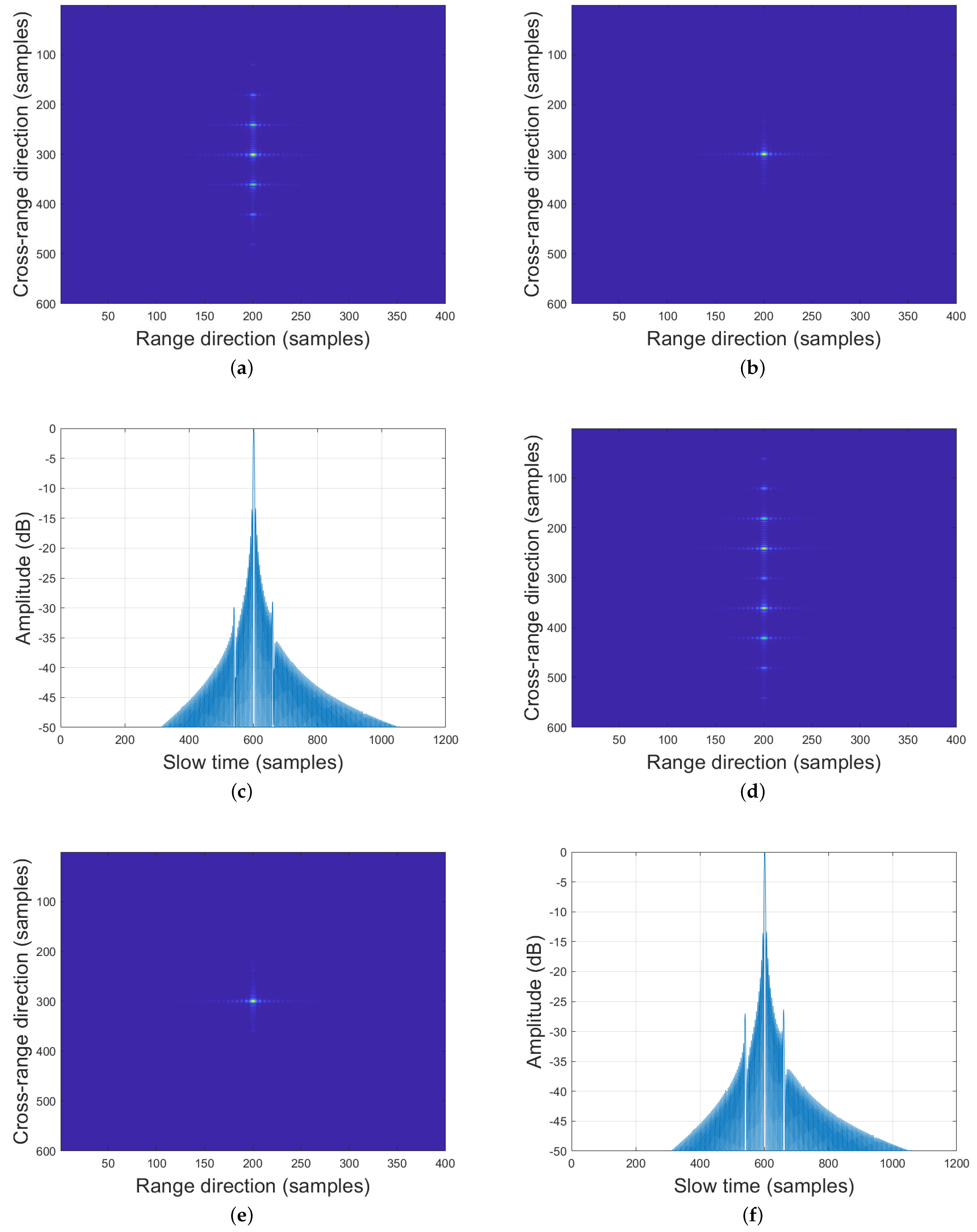

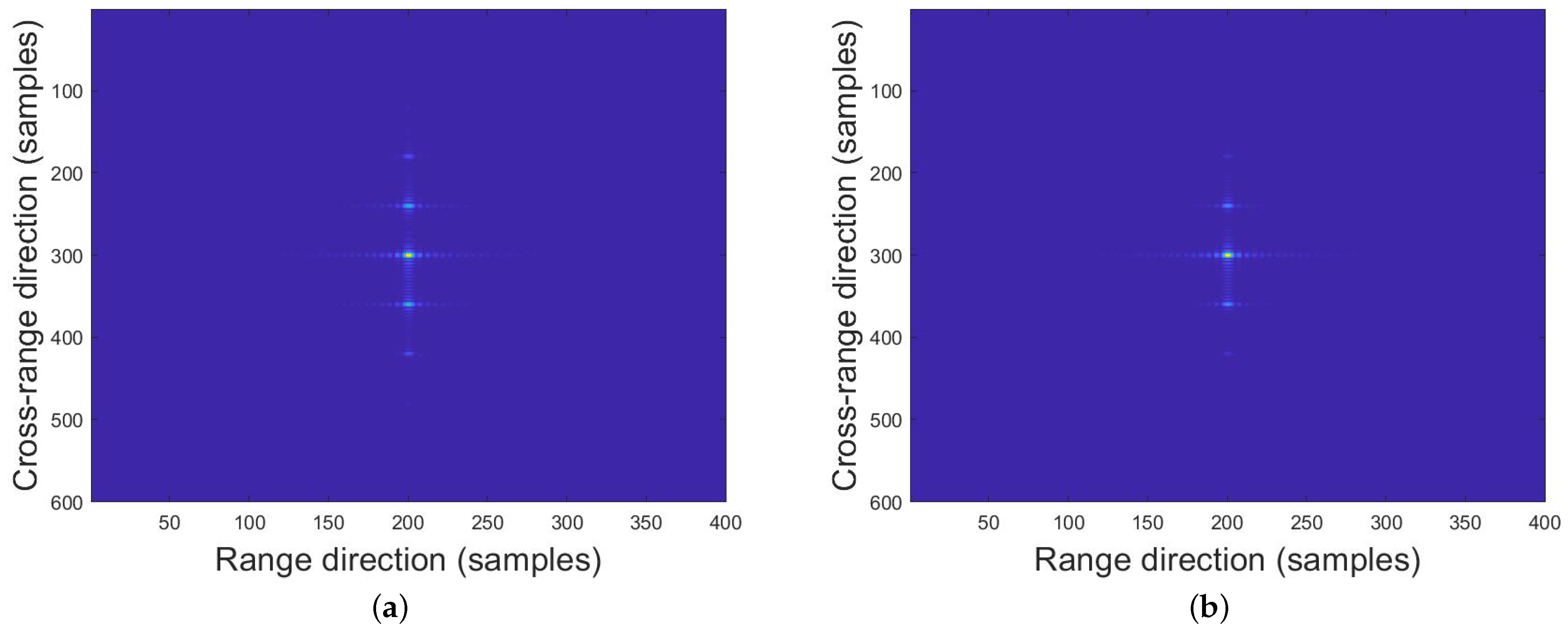

3.1. Simulation and Analysis

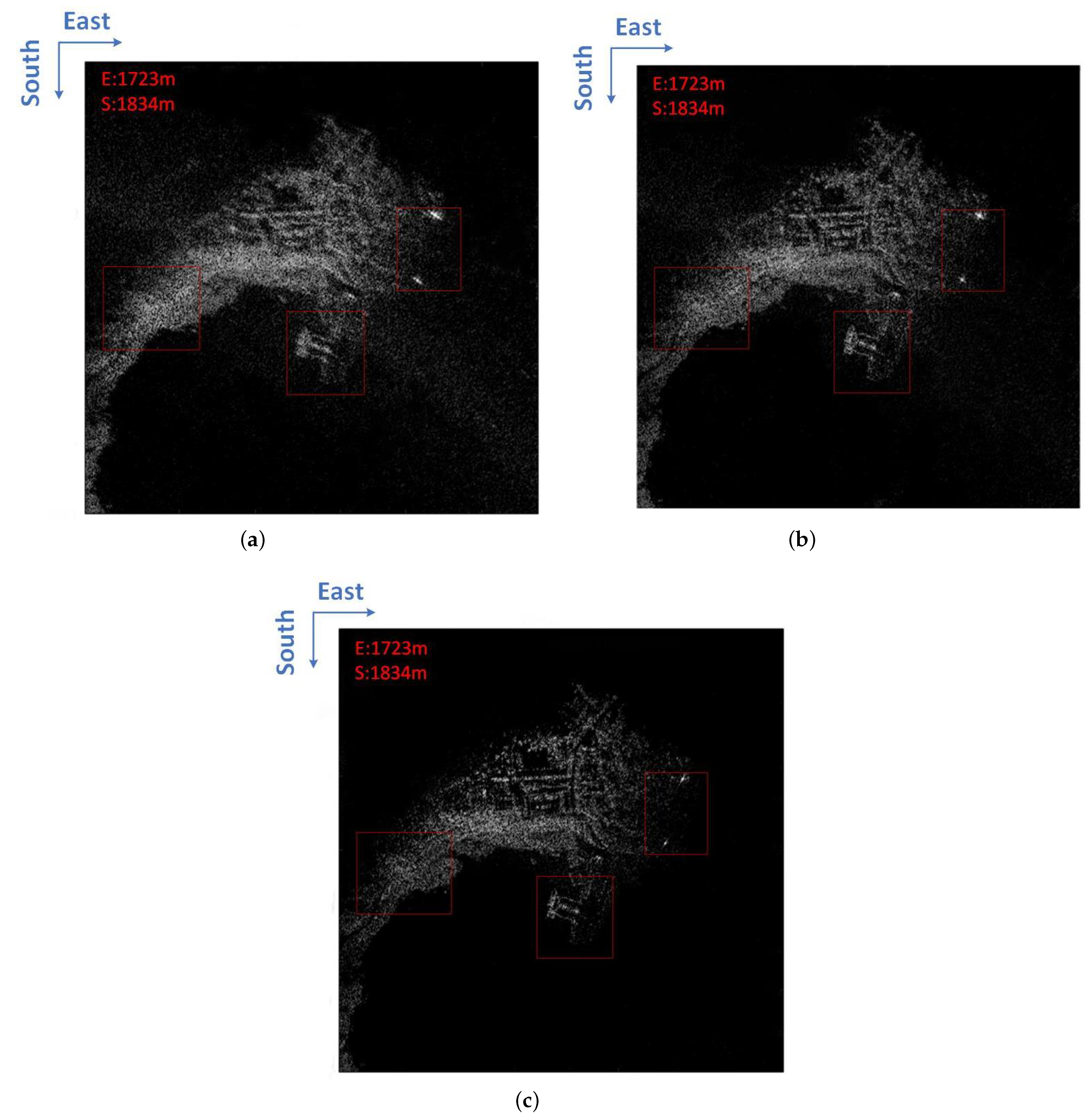

3.2. Raw Data Processing

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Parameters | Values |
|---|---|
| Transmitter position | m |
| Transmitter velocity | m/s |
| Transmitter acceleration | m/s² |
| Transmitter squinted angle | |
| Receiver position | m |
| Receiver velocity | m/s |
| Receiver acceleration | m/s² |
| Receiver squinted angle | |
| Radar wavelength | 0.019 m |
| Bandwidth | 120 MHz |
| Synthetic aperture time | 1.5 s |
| PRF | 1250 Hz |
| Center of the antenna beam pointing | m |

| High-Frequency Error | Amplitude H (mm) | Frequency (Hz) | Initial Phase |
|---|---|---|---|
| Transmitter | 5 | 20 | |
| Receiver | 5 | 140 | |

| Target | Phase Amplitude (rad) | Frequency (Hz) | Initial Phase |
|---|---|---|---|
| 3 | 0.24 | 4.99 | 5.22 |
| 8 | 0.90 | 4.99 | 15.93 |
| 14 | 1.12 | 4.99 | 51.89 |
| 17 | 2.53 | 4.99 | 98.48 |

| Target | Target 1 | Target 5 | Target 21 | Target 25 |
|---|---|---|---|---|
| Proposed algorithm | −28.03 dB | −27.33 dB | −27.63 dB | −26.58 dB |
| Traditional algorithm | −8.34 dB | −15.88 dB | −14.24 dB | −12.33 dB |
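The gap between the two algorithms in the table above is easier to read as a per-target difference. The short sketch below only subtracts the tabulated values; it assumes the metric is one for which a lower dB value is better (for example a sidelobe-type measure), since the table itself does not name the metric.

```python
import numpy as np

# Values copied from the table above, in dB.
targets = ["Target 1", "Target 5", "Target 21", "Target 25"]
proposed_db = np.array([-28.03, -27.33, -27.63, -26.58])
traditional_db = np.array([-8.34, -15.88, -14.24, -12.33])

# Positive numbers mean the proposed algorithm reports the lower value.
improvement_db = traditional_db - proposed_db

for name, gain in zip(targets, improvement_db):
    print(f"{name}: proposed algorithm is {gain:.2f} dB lower")
```

Under that assumption, the difference ranges from 11.45 dB (Target 5) to 19.69 dB (Target 1).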

| Parameters | Values |
|---|---|
| Transmitter position | m |
| Transmitter velocity | m/s |
| Transmitter acceleration | m/s² |
| Transmitter squinted angle | |
| Receiver position | m |
| Receiver velocity | m/s |
| Receiver acceleration | m/s² |
| Receiver squinted angle | |
| Radar wavelength | 0.019 m |
| Bandwidth | 120 MHz |
| Synthetic aperture time | 1.5 s |
| PRF | 1250 Hz |
| Center of the antenna beam pointing | m |
| APC position | mm |

| Platform | Yaw Angle | Pitch Angle | Roll Angle | Initial Phase |
|---|---|---|---|---|
| Transmitter | | | | |
| Receiver | | | | |

| Platform | Amplitude H (mm) from the INS | Initial Phase from the INS |
|---|---|---|
| Transmitter | −23.4 | |
| Receiver | 70.4 | |


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, Z.; Liu, F.; He, S.; Xu, Z. A Spatial Variant Motion Compensation Algorithm for High-Monofrequency Motion Error in Mini-UAV-Based BiSAR Systems. Remote Sens. 2021, 13, 3544. https://doi.org/10.3390/rs13173544
