Abstract

Visual Simultaneous Localization and Mapping (SLAM) technologies based on point features achieve high positioning accuracy and complete map construction. However, despite their time efficiency and accuracy, such SLAM systems are prone to instability and even failure in poor texture environments. In this paper, line features are integrated with point features to enhance the robustness and reliability of stereo SLAM systems in poor texture environments. Firstly, method Edge Drawing lines (EDlines) is applied to reduce the line feature detection time. Meanwhile, the proposed method improves the reliability of features by eliminating outliers of line features based on the entropy scale and geometric constraints. Furthermore, this paper proposes a novel Bags of Word (BoW) model combining the point and line features to improve the accuracy and robustness of loop detection used in SLAM. The proposed PL-BoW technique achieves this by taking into account the co-occurrence information and spatial proximity of visual words. Experiments using the KITTI and EuRoC datasets demonstrate that the proposed stereo Point and EDlines SLAM (PEL-SLAM) achieves high accuracy consistently, including in challenging environments difficult to sense accurately. The processing time of the proposed method is reduced by 9.9% and 4.5% when compared to the Point and Line SLAM (PL-SLAM) and Point and stereo Point and Line based Visual Odometry (sPLVO) methods, respectively.

1. Introduction

Simultaneous Localization and Mapping (SLAM) was initially proposed by Smith in 1987 [1]. Since then, diverse methods systems that can simultaneously estimate the position of the onboard sensors and construct the surrounding environment map via the captured scene information have been extensively developed. This has been especially important in the field of robot navigation using diverse types of camera systems (e.g., monocular, stereo and panoramic) [2,3,4,5].

In recent years, most of the research has focused on improving the accuracy of point-feature SLAM, and many encouraging breakthroughs have been made [6,7]. Such research includes the development of the ORB-SLAM2 system based on Parallel Tracking and Mapping (PTAM) ideas that improve the place recognition and the loop closure modules [8]. The invariant of ORB features to different viewpoints and illuminations has been employed to improve tracking, mapping, and loop closure. As a result, ORB-SLAM2 has become one of the most popular systems in rich-textured scenes. Despite the success of the ORB-SLAM2 framework, the expanding applications of mobile robots, among many other applications, such as augmented reality and autonomous driving, impose new challenges, which still remain to be resolved, related to low-textured or structured engineered environments [9,10]. Traditional point-based strategies are unstable and even fail in some scenarios where the point features are uneven or not well distributed. Fortunately, Cadena et al. pointed out that a way to solve this problem is to find an alternative feature that is abundant in the environment [3]. The low sensitivity of line features to light changes and motion blurring is also a prevalent topic in SLAM research. Lu et al. proposed an RGB-D SLAM system that combines line features and depth information to solve the problem of indoor illumination changes [11]. Scaramuzza et al. focused on extracting the vertical lines in the wider field image information captured by the omnidirectional camera to improve the accuracy and robustness of the mobile robot visual odometer system [12,13]. Pumarola et al. improved the initial accuracy of the system by synchronously calculating point and line features in the monocular SLAM initialization thread [14]. Ma et al. utilized vanishing points (VP) to constrain line features, which greatly reduced the mismatching of line features [15]. Despite the improvements, the above line segment detection and matching methods are time-consuming, making the line-based SLAM methods difficult to be processed in real-time.

To enhance the effectiveness of line detection methods, Gomez-Ojeda et al. combined a Line Segment Detector (LSD) with a Line Band Descriptor (LBD) algorithm to develop an enhanced stereo version of Point and Line SLAM (PL-SLAM). Such a method has shown higher translation accuracy than that of ORB-SLAM2 in rich-feature scenes, which proved that stereo systems are more accurate and resistant to interference than other monocular systems in line detection [16]. Berenguer et al. proposed a method to estimate the relative attitude by using Holistic descriptors of the omnidirectional image. This method solves the problem that the omnidirectional image can not deal with the height change of mobile robots, but it requires further research to apply in estimating movements with six degrees of freedom [17]. Inspired by the Scale Invariant Feature Transform (SIFT) algorithm, Li et al. proposed the scale-invariant mean-standard deviation LSD (SMLSD) to extract line features faster without sacrificing detection accuracy [18]. However, Zuo et al. proved that the performance of LSD line detection is still unsatisfactory for real-time applications [19]. There is an urgent need to develop line detection methods that can accurately extract line features at a fast rate regardless of the environment geometrical complexity. To realize the real-time SLAM for point and line features, Zhang et al. proposed the line detector based on Canny edges to obtain line features iteratively [20]. In contrast to [20], Vakhitov et al. focused on improving the accuracy and robustness of line feature extraction by training a deep yet lightweight full-convolutional neural network [21]. Gomez-Ojeda et al. [22] and Luo et al. [23] introduced the Fast Line Detector (FLD) algorithm to reduce the detecting time of the LSD method. The fast detection speed and straightforward logic composition of the FLD approach makes it suitable in the field of point-line SLAM. Although effective, it requires prior information about the scene to determine the needed parameters, which limits its usefulness in prior unknown environments, such as those encountered inside collapsed buildings.

Another hindrance that prevents the line feature from being extensively used is the complex outlier culling technique that makes their implementation nontrivial. Line features have a number of characteristics that change with the angle of view and occlusions, so the method of removing inappropriate features from the detected lines has been a focus of constant research. Shao et al. utilized a coplanar constraint of line features to filter mismatched line features, but the method lacks processing real-time data [24]. Ma et al. trained a general classifier to judge the correctness of any hypothetical matching [25]. This method converts the mismatch elimination into a two-class classification problem, but the performance of the algorithm in unknown scenes still requires further experimental verification. Lim et al. proposed a structural model based on epipolar and vanishing points to remove degenerate LSD line features intended to improve the reliability of line features [26].

Although the above-mentioned approaches present many practical solutions to issues existing in line feature detection and outliers elimination, they are still unable to effectively address the SLAM problem of point-line loop detection. Pumarola et al. proposed that only point features were detected in the PL-SLAM loop closure to reduce the computational complexity [14]. Gomez-Ojeda et al. built a line Bag of Words (BoW) model that had a parallel relationship with the point BoW to achieve the independent line loop detection [22]. However, the point-line detection method without considering the geometric proximity between point and line features does not significantly improve the accuracy of loop detection. Ma et al. modeled a BoW containing VPs and their connecting lines to improve the accuracy of the SLAM system in the corridor [15]. For the problem of false loop detection caused by the existence of a similar point map, researchers have pointed out that the accuracy and robustness of loop detection can be improved by building a Bag of Words (BoW) model that considers the spatial proximity and co-occurrence information of visual vocabulary [27,28]. Under this context, an effective Point and EDlines SLAM framework, named PEL-SLAM, is proposed to solve the above-mentioned issues, including the line feature detection, line outliers elimination, and loop detection.

Specifically, a point-line stereo SLAM system based on Edge Drawing lines (EDlines) [29] and the improved BoW model is proposed to realize localization and mapping in human-made environments. The proposed approach takes advantage of line features in low-textured environments and PL-BoW for loop detection. Point and line features of the input stereo image are detected by ORB and EDlines, followed by calculating the uncertainty entropy that describes the accuracy of the detected line features. The PL-BoW is then obtained using a PL pair selection technique following the ORB point and LBD line descriptors. Finally, a loop detection mechanism based on PL-BoW, similarity score, and space consistency detection of the keyframe is employed to determine the best matching keyframe. The three main contributions of this paper are:

- (1)

- A stereo SLAM system based on the integration of point and line features. Such a method employs an EDlines algorithm to improve the speed of line feature detector in the front-end of the system. In addition, the comprehensive representation and transformation of line features are also derived.

- (2)

- A method using entropy scale and geometric constraints is proposed to eliminate the outliers of line features. The strategy of removing the mismatched features in the front-end ensures the reliability of the extracted lines without increasing the additional algorithm complexity. The application of this method improves the accuracy of camera pose estimation and map construction.

- (3)

- A novel Point and Line Bags of Word (PL-BoW) model combining the point and line features is proposed to improve the accuracy and robustness of loop detection. Unlike popular methods of evaluating the BoW of point and line features independently, the proposed PL-BoW model takes into account the constraints of the extracted point and line features. Such a model improves the reliability of the loop detection process under the interference of weak texture and light changes, which typically exist in structured engineered environments.

The remainder part of this paper is as follows. Section 2 provides the geometric expression, detection, and matching methods of line features. The graph optimization for line features and the improved loop detection mechanism are presented in Section 3. Section 4 compares and analyzes the experimental results of the proposed algorithm with existing methods. The conclusions of the paper are presented in Section 5.

2. Representation and Detection of Line Features

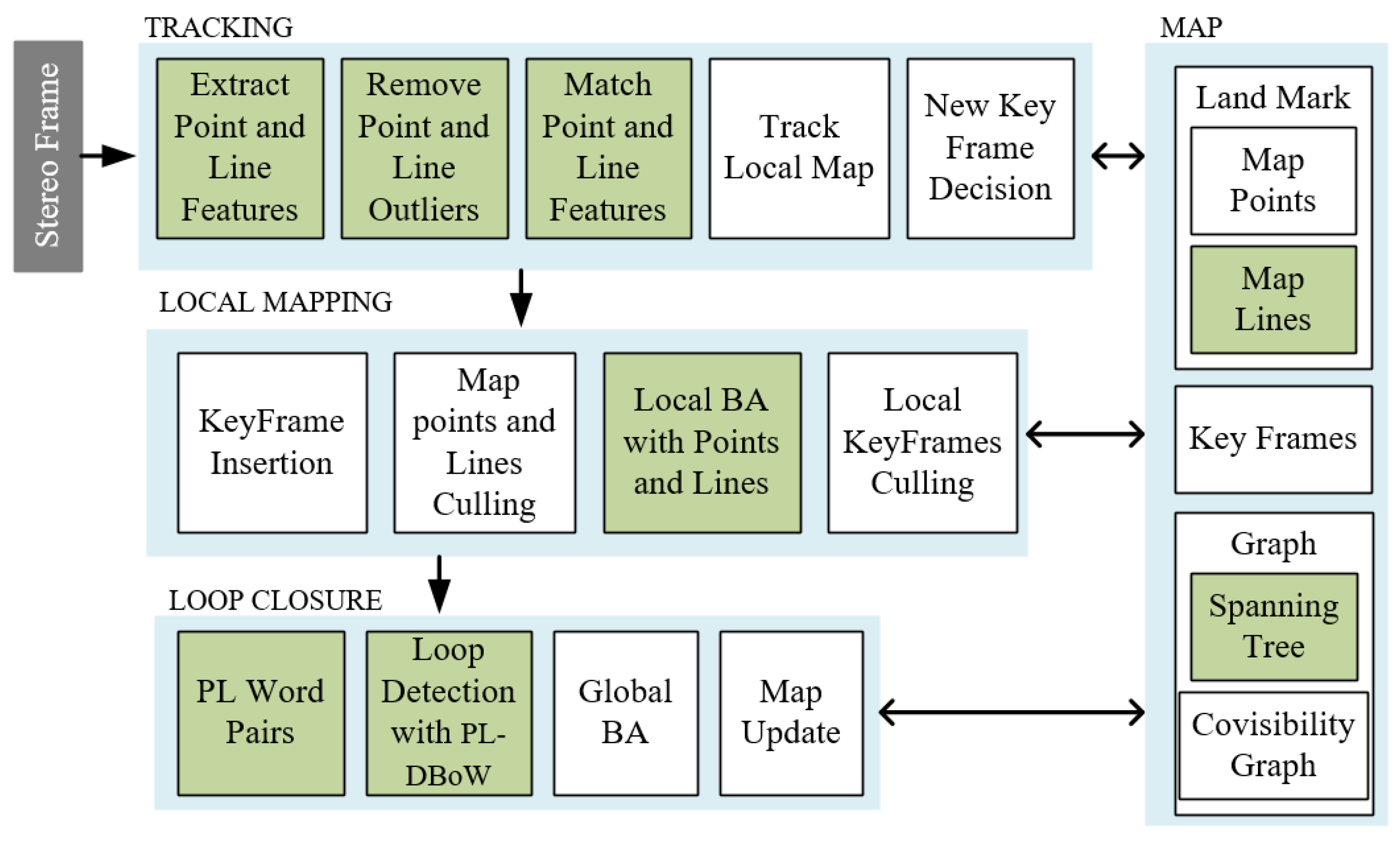

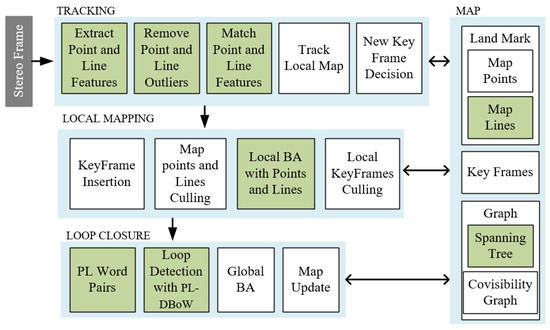

The schematic diagram of the PEL-SLAM system proposed here is illustrated in Figure 1. Since the PEL-SLAM system is improved on the basis of ORB-SLAM2, most modules of the system are the same as the ORB-SLAM2 system except for the green part in Figure 1.

Figure 1.

The schematic diagram of the proposed PEL-SLAM.

Improvements of the line representation, line feature detection, and line feature matching in the front-end of the proposed method are presented in this section, while improvements of Bundle Adjustment (BA) optimization and loop closure in the back-end of the system are described in the following sections.

2.1. Geometric Representation of Lines

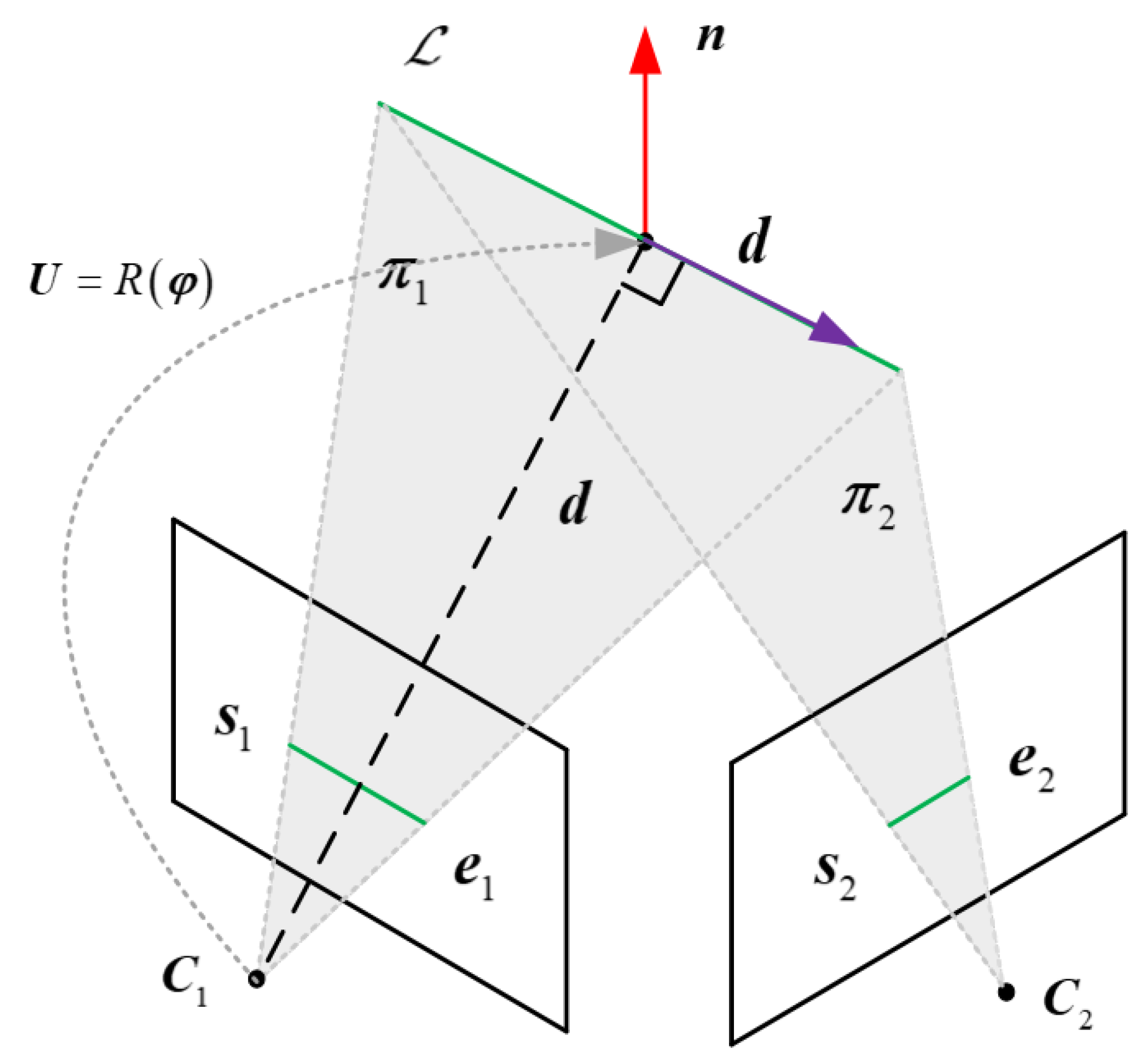

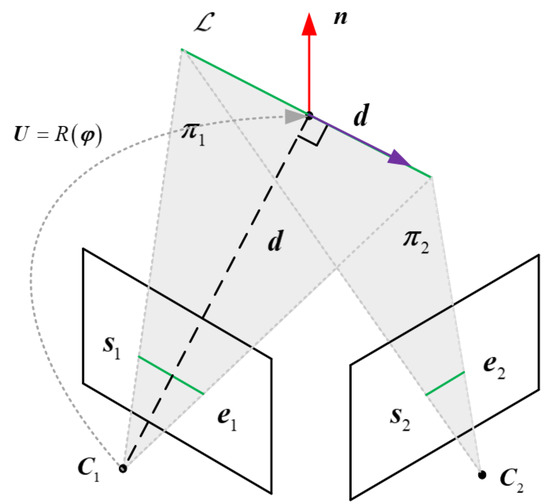

A spatial line can be expressed via its two endpoints in the image plane, and (see Figure 2). This is an essential operation of the visual odometer needed to transform 3D lines in the world reference to the image plane. The Plcker parameter has the characteristic of observability and computational simplicity, and it is used for the transformation and projection of line features. However, the over parameterization of the Plcker parameter hinders the performance of the system in back-end optimization. To address this, an orthonormal representation is introduced for optimization. The spatial line in the Plcker coordinate is expressed by , where is the normal vector, and represents the line direction vector of the plane . The plane is composed of the line and the camera coordinate origin .

Figure 2.

Plcker parameters and triangulation of a spatial line.

As shown in Figure 2, the Plcker line coordinate is usually constructed by triangulation of two different camera frames. The parameters of the plane can be determined by , , and the camera origin in the world reference as follows:

where the [] is the skew-symmetric matrix of a vector in . Other planes (e.g., ) can be obtained following the same calculation.

Given planes and , the coordinate of the line feature in the world reference is determined via the following dual Plcker matrix :

With the Plcker coordinates known, the transformation of the line becomes convenient in 3D Euclidean space. In what follows, we represent a 3D rigid body motion by a rotation matrix and a translation vector . Given the transformation matrix from the world reference to the camera reference, the corresponding Plcker transformation matrix is defined by:

Then the Plcker representation of the given line feature can be transformed by:

After calculating the representation of the line feature in the camera reference, the spatial line can be projected to the image plane through:

where denotes the projection matrix of a given line, and represent the intrinsic parameters of the calibrated camera. It should be noted that when a line feature is projected onto a normalized image plane, is an identity matrix.

Since a spatial line in 3D space only has four degrees of freedom, using the Plcker representation increases the computational complexity of the optimization process. The de-coupled orthonormal representation employed in the unconstrained optimization problem is obtained from the decomposition of the Plcker coordinates.

Furthermore, and are expressed by:

where denotes the angle of rotation from the camera reference to the line reference (see Figure 2). Since implies one-dimensional scale information, the minimum parameterization of a spatial line can be defined by a four-dimensional vector . Once the orthogonal representation of the optimized feature is obtained, the corresponding Plcker coordinates are calculated by:

in which denotes the column of .

2.2. Extraction and Description of Line Features

The line segment detector LSD, which gives an accurate line detection and requires no parameter tuning (widely applied in state-of-the-art PL-SLAM systems), is designed to extract line segments on noisy images with sub-pixel detection accuracy [30]. However, the computational complexity of LSD causes extra time spending on the system in the front-end feature extraction. Since the limitation of LSD directly affects the tracking and matching of line features, this aspect leads to the failure of the visual odometer.

To improve the real-time performance of the line feature extraction, the EDlines algorithm is used instead of the LSD to detect line features from images captured by the stereo camera. The EDlines algorithm has been proven to run faster than the LSD, while its output tends to contain irrelevant lines [15]. The experiment in [31] shows that EDlines can achieve a similar performance when compared with LSD without sacrificing the detection accuracy by using an appropriate line outlier elimination method.

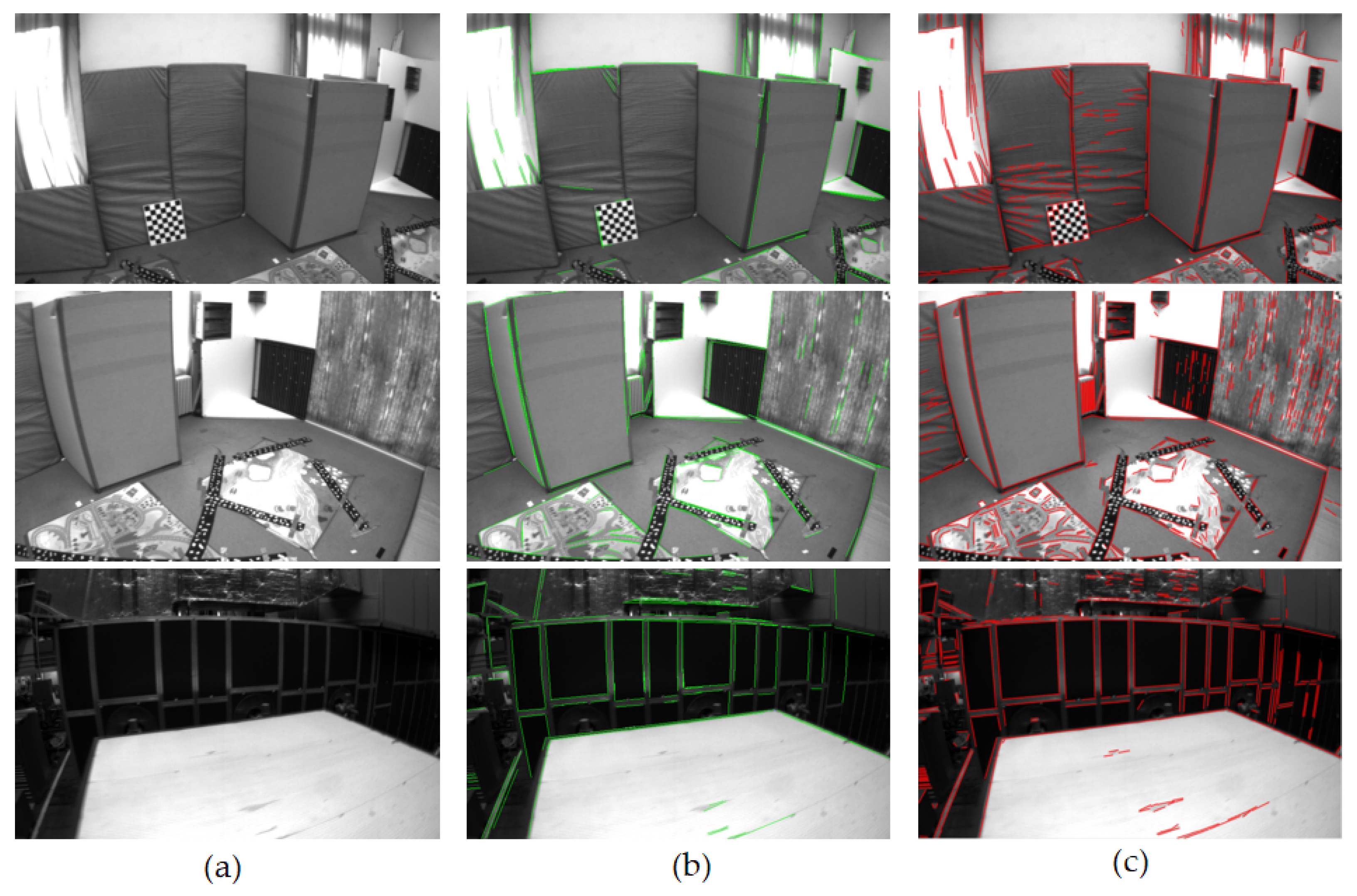

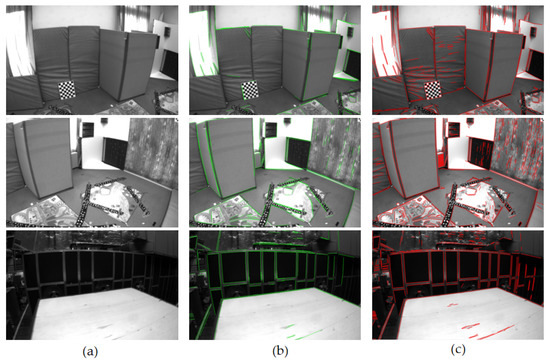

In this work, the individual performance of the LSD and EDlines mechanisms was validated on three different scenes. Referring to Figure 3, the median three figures show the line features detected by LSD, whereas the three figures on the right side of the figure show the detected results of EDlines. The results show that both detecting algorithms are able to detect the line features of interest that correspond to real straight lines in the given environments. However, it is noted that EDlines is more likely to detect curves that are near straight lines. The detected curves may degrade the performance of the system. However, these curves usually possess bad spatial positions and thus can be easily recognized from different camera views. Thus, from the testing performed, it was identified that it is possible to use EDlines to achieve effective line detection without corrupting the systems by leveraging some line renting strategies.

Figure 3.

The extracted lines of LSD and EDlines in the EuRoC dataset [33]. (a)The original images. (b) The green lines in the left column are the line features detected by LSD. (c) The red lines in the right column are the line features detected by EDlines.

To make the line features detected by EDlines more recognizable, the LBD descriptors [32] are computed to represent each line feature. Similar to the ORB descriptor for point feature, the LBD descriptor contains geometric attributes and appearance descriptions of the corresponding line features. For two consecutive stereo frames, the similarity of line features is measured by calculating the consistency of LBD descriptors between line pairs.

2.3. Extraction and Description of Line Features

After exacting line features, LBD descriptors are generated to describe the corresponding line features in two consecutive captured adjacent frames. The similarity of descriptors is calculated to find the matching line features. The extracted line feature is considered to be a good match only if such feature is the best match in both images of a stereo frame. Before eliminating outliers, the preprocessing of matched pairs is required to improve the accuracy of the system matching. Given the occlusion and perspective changes that might exist in real-world environments, in this work, the line pair is not considered a match if their lengths are more than twice as different. At the same time, the line pair is considered to be mismatched if the distance between the midpoints of the two lines on the image is greater than a given threshold.

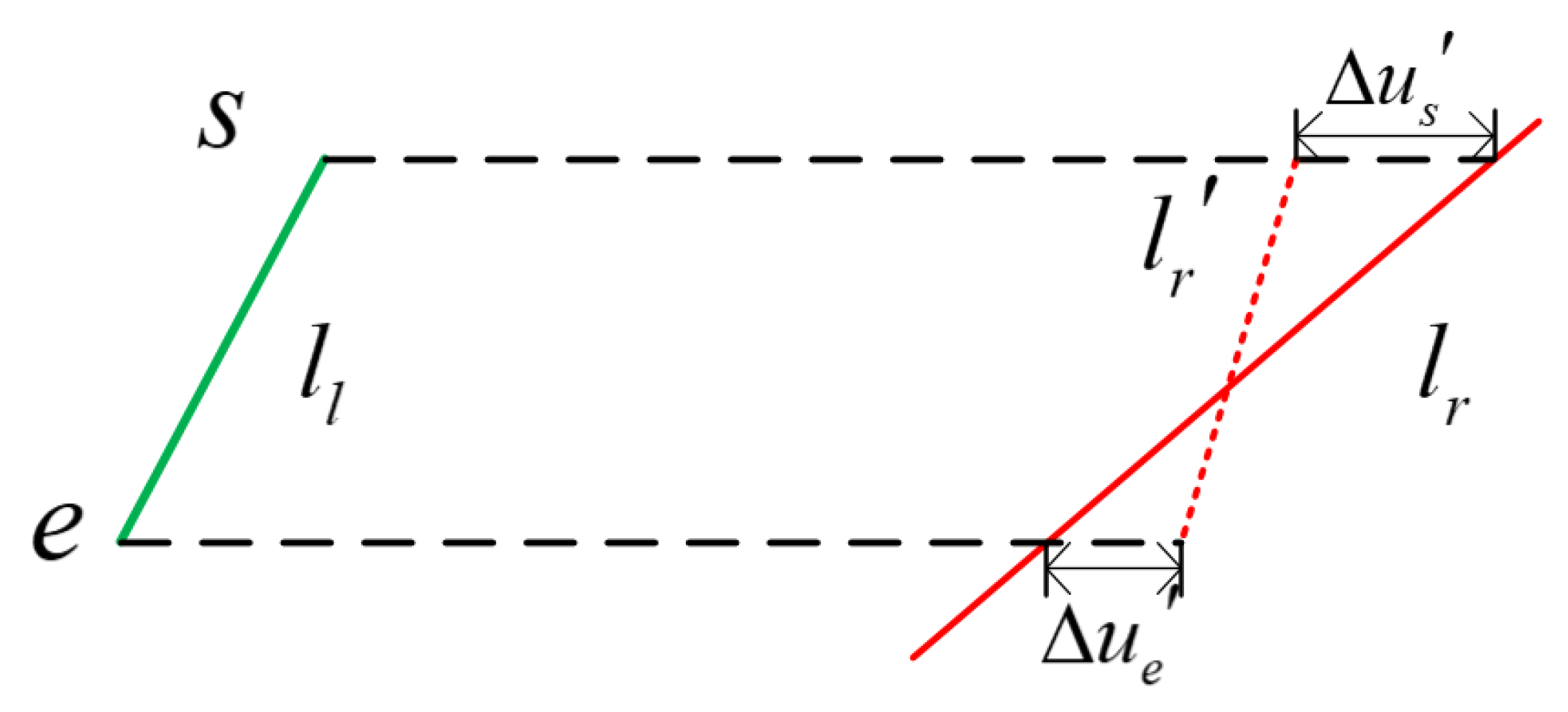

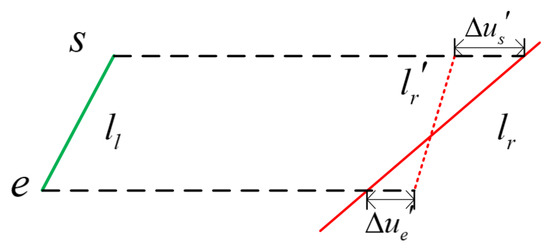

For a stereo frame, a spatial line is represented as and in the left and right images, respectively, where can be represented as by the homogeneous coordinates of its endpoints. Given a stereo frame captured by a stereo camera, the matching points in the two rectified images have the same horizontal position. Therefore, the endpoints in the right image can be obtained through and line , as illustrated in Figure 4.

Figure 4.

The line model in a stereo frame.

The endpoints of the line can be denoted by the endpoints and of the left line.

The depth parameters of and are calculated by the disparities as follows:

where , , and b denotes the baseline of the stereo camera. Then the 3D position of is obtained by the back-projection of the endpoint as follows:

Since line features are more sensitive to image noise and mismatching than point features, the uncertainty of line features is modeled to quantify the reliability of line features. Considering the spatial properties of line features, the covariance propagation method is used to construct the uncertainty matrix of the endpoint, which can be expressed as follows:

in which represents the Jacobian matrix of , and is the uncertainty matrix of . Based on the properties of in the image plane, is modeled as a bi-dimensional Gaussian set with standard deviation pixel. The matrix can be derived from Equation (13) as follows:

Due to the fact that the scale of uncertainty between image pairs varies, the values are not directly comparable. The entropy of multivariate normal distribution is thus introduced to abstract the uncertainty in the covariance matrix into a scalar value, which is defined as:

where m is the dimension of . The uncertainty entropy of can be calculated in the same way.

In the methods of [20,24], the processing of outliers is to remove matching pairs that do not meet a set constant threshold. However, we found that a constant threshold is not applicable because the uncertainty depends on the changes of motion and scene. A threshold determination method based on the uncertainty entropy of all line features of the current frame is thus applied to the outliers eliminating process. After calculating the average entropy for all line features of the current frame, the threshold is set to 0.85 according to experiments. If the uncertainty entropy and of a spatial line reconstructed from the stereo frame are greater than , the line can be regarded as an accurate line feature, and vice versa.

3. Bundle Adjustment and Loop Closure with Points and Lines

The BA module in the back-end optimization consists of two main aspects: local BA of local mapping thread and global BA of loop closure thread. The original BA module of ORB-SLAM2 includes variables of camera poses and point landmarks. However, the addition of line features complicates the optimization process as a minimization process of the cost function in the co-visibility graph needs to be performed. Therefore, a graph BA optimization strategy considering line and point features is adopted in this paper. In addition, the accuracy of the loop closure depends on the calculation of the global BA and the loop detection. A novel point and line BoW is thus proposed to improve the stability and accuracy of the loop detection.

3.1. Graph Optimization with Point and Line Features

Due to the fact that the length and angle of the same line in the two images are different, projection errors cannot be obtained directly from the corresponding two frames. In this work, the projection errors are computed by re-projecting the matched lines from the world reference back to the current image reference. Given the spatial line in the world reference and its orthogonal expression , the corresponding line feature is transformed to the camera frame of reference by . Then the projected line is obtained by projecting the line features onto the normalized plane of the current frame. The reprojection error is then defined as the distance between the projected line and the endpoints of the detected line , which is computed via Equation (17).

where and are the endpoints of the detected line . With the reprojection error defined, a global loss function C containing point and line features is formulated as:

where denotes the robust Huber cost functions, and , denote the inverse information matrices of the reprojection error of points and lines, respectively. Compared to the original loss function of the ORB-SLAM2 system, the reprojection error function about the line feature is added to Equation (18). The camera pose and landmark are calculated by minimizing the loss function C. The Jacobians of are already derived in ORB-SLAM2, and the Jacobians concerning camera pose and line landmark can be expressed by the following matrix chain multiplication:

The Jacobian matrix of the line reprojection error relative to is expressed as:

According to the 3D line projection, Equation (21) is obtained.

The term represents the Jacobian matrix of line features concerning translation and rotation errors in camera reference in which . The line features only optimize the translation and the rotation in the state variable, and the Jacobian matrix is calculated as follows:

The Jacobian matrix of the line in the camera reference concerning the line in the world reference is the inverse of the transformation matrix represented as Equation (24).

According to the orthogonal representation of a spatial line, the last term of Equation (19) can be defined as:

With the analysis of the Jacobians completed, iterative algorithms, such as Gaussian–Newton, can be employed to solve the local graph optimization of local mapping and global graph optimization of loop closure.

3.2. Loop Closure with Points and Lines

In human-made environments, the existence of weak textures (i.e., white wall) and frequent light changes leads to the false detection of the traditional BoW-based loop closure. The insufficient recognition of point features leads to the mismatch results between frames. To address this aspect, PL-BoW, a BoW that combines point and line features, is proposed, which utilizes the co-occurrence information and spatial proximity of visual words.

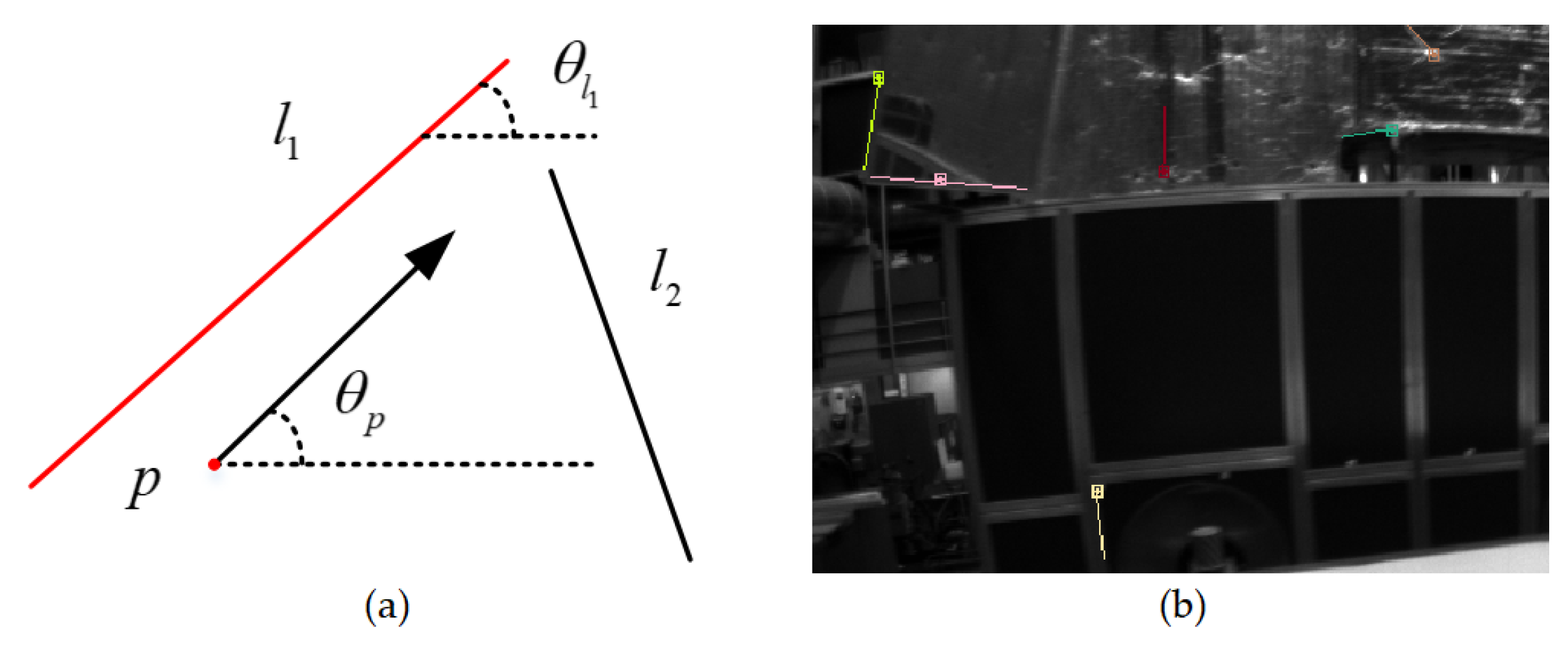

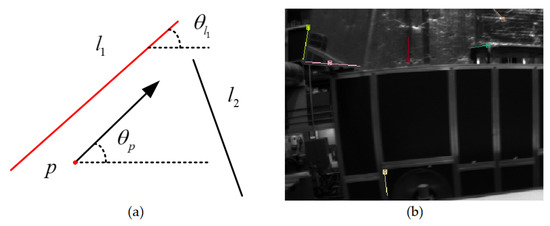

The visual words of point features are generated from the ORB descriptor, including the position and direction of point features. At the same time, the direction and position information of the corresponding line feature is obtained from its endpoints. As shown in Figure 5, the combination of a point and a line is defined as a PL pair only when the direction and distance of the line and the point are close enough. To improve the search speed of PL pairs, a K-D tree is constructed by using the position of each point feature. Then the line features satisfying the PL pair are selected to build the point and line K-D tree.

Figure 5.

Word pairs of points and lines. (a) Relative spatial co-occurrence of words in a pair. (b) Word pairs of point and line in a given image.

Given a current keyframe and a candidate keyframe , the corresponding BoW vectors are defined as and , respectively. In loop closure detection, the similarity of two keyframes is calculated through the BoW vectors as follows:

where and represent the total number of point and line features in the image, respectively. The mismatched keyframes that have the lower score are removed from the set of candidate keyframes. The procedure of the improved PL-BoW based loop detection is given in Algorithm 1.

| Algorithm 1: PL-BoW Based Loop Detection. | |

| Input: | The keyframes set , the KD-tree associated with and the current keyframe ; |

| Output: | A revisit matching keyframe ; |

| 1 | Select candidate keyframes through retrieving the words of points and lines in the |

| image using Term Frequency-Inverse | |

| Document Frequency (TF-IDF); | |

| 2 | NumberOfCommonView Words ; |

| 3 | NumberOfCommonViewPLpairs ; |

| 4 | for each do |

| 5 | if |

| 6 | ; |

| 7 | Calculate the similarity ; |

| 8 | ; |

| 9 | end |

| 10 | end |

| 11 | for each do |

| 12 | Remove with ; |

| 13 | end |

| 14 | Perform space consistency detection on to obtain . |

4. Experimental Verification

To test and analyze the proposed PEL-SLAM, a set of experimental tests were performed with an Intel CPU i5-10060 KF@4.1GHz, 32GB RAM, without a dedicated GPU. OpenCV and g2o were mainly used as the environment running on an Ubuntu16.04 desktop. The proposed PL visual stereo SLAM algorithm was compared with popular methods, including ORB-SLAM2 [8], PL-SLAM [16], and stereo Point and Line based Visual Odometry (sPLVO) [23]. The above algorithms were tested on the KITTI stereo dataset [34] and EuRoC micro aerial vehicle (MAV) dataset [33], which provide several challenging sequences of images in indoor and outdoor environments. The absolute Root Mean Square Errors (RMSEs) between the estimated translation and rotation of the SLAM system and the groundtruth given in the dataset were computed by the EVO [35] evaluation tool.

4.1. Stereo SLAM on KITTI Dataset

The test results in terms of the RMES of ORB-SLAM2, PL-SLAM, and the proposed algorithm on the KITTI dataset are shown in Table 1. Since the strategy applied in the sPLVO is not appropriate for outdoor environments, sPLVO was not tested for comparison on KITTI dataset.

Table 1.

Absolute RMSE errors of three methods on KITTI.

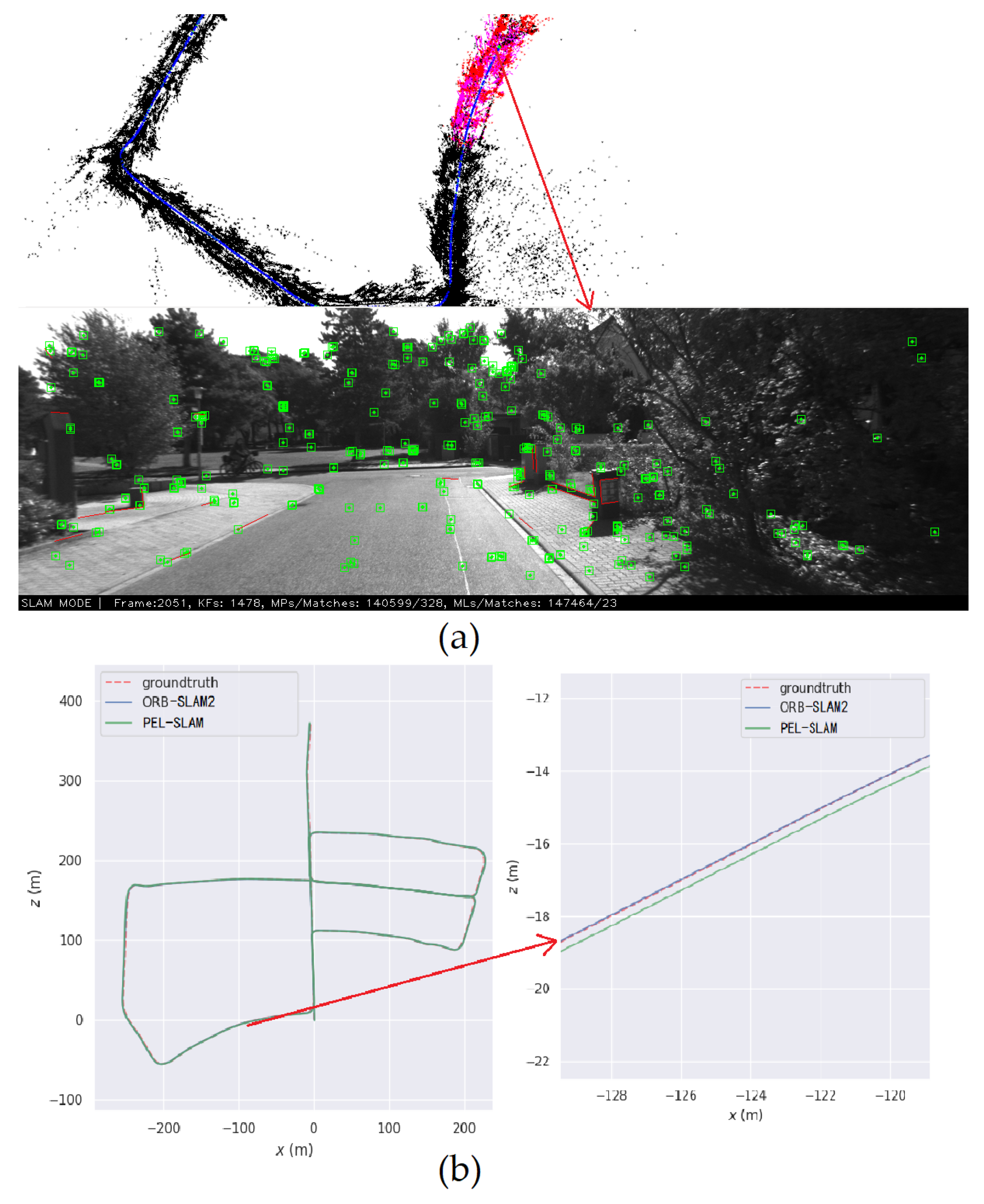

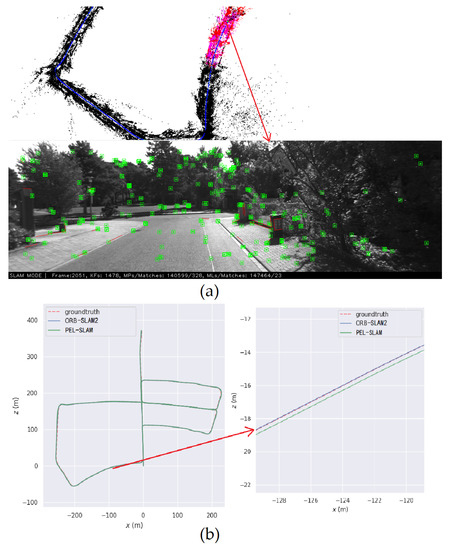

The lowest absolute translation and rotation errors for each test are marked in bold. Overall, the proposed method performs better than the other two methods. The translation and rotation errors of the proposed method are about 24.8% and 29.6% lower than that of ORB-SLAM2, respectively. However, it can be seen that the application of line features in the environment with insufficient line features hinders the accuracy of the SLAM process. As shown in Figure 6, the condition of the KITTI 05 dataset is mostly foresting with fewer line features, and the translation and rotation accuracy of the proposed method are reduced by 17.1% and 8.8%, respectively. Table 1 also shows that the proposed method outperforms PL-SLAM, which also employs both point and line features. One reason for this is that the use of PL-BoW suppresses the long-term positioning drift of the system in large outdoor scenes. Another reason for this result is that the proposed line feature outliers elimination method reduces the front-end mismatching and further improves the accuracy of the constraints constructed in the back-end optimization of the system.

Figure 6.

An example of the PEL-SLAM on KITTI 05. (a) Feature extraction and mapping of PEL-SLAM. (b) Trajectory comparison between ORB-SLAM2 and PEL-SLAM.

4.2. Stereo SLAM on EuRoC Dataset

The accuracy of the four algorithms (PEL-SLAM, PL-SLAM, sPLVO, and ORB-SLAM2) was also compared on the EuRoC dataset, which includes indoor environments images of machine halls and rooms. Table 2 shows the absolute translation and rotation errors of these four algorithms.

Table 2.

Absolute RMSE errors of four methods on EuRoC.

It is clear from Table 2 that the strategy used by sPLVO for indoor line features makes it competitive in indoor environments. Nevertheless, the performance of the proposed method on the EuRoC dataset was generally superior to PL-SLAM, ORB-SLAM2, and sPLVO. Compared with the ORB-SLAM2, the translation and rotation accuracy of the proposed method are improved by nearly 5.7% and 7.3%, respectively. These improvements demonstrate that the incorporation of line features increases the accuracy of pose estimation and map construction. Although both sPLVO and the proposed method introduce line feature outliers elimination, the proposed method shows better accuracy in most testing cases. Compared with the state-of-the-art sPLVO, the maximum translation and rotation accuracy of the proposed method improves the results by 17.2% and 24.8%, respectively. These results indicate that using the entropy scale to measure the uncertainty of line features and applying PL-BoW to loop detection are beneficial to the accuracy of the SLAM process.

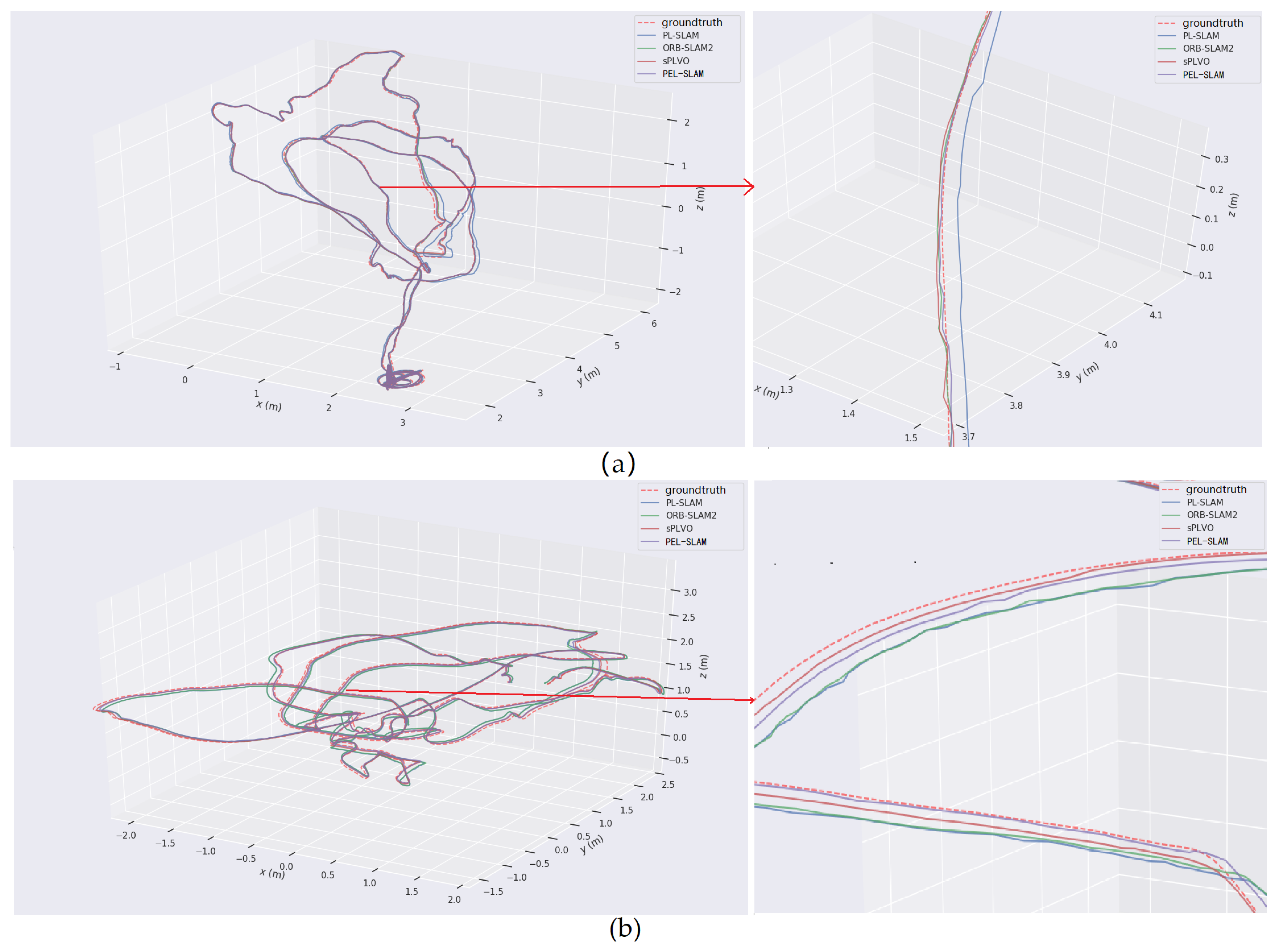

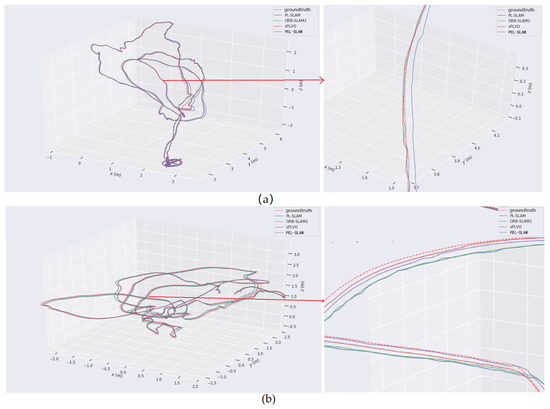

The tests also demonstrate that the proposed method environments with drastic changes and rapid motion of carriers. Figure 7 illustrates the estimated trajectory comparisons of MH-02 and V1-01, which contains the results with typical rapid motion and changing light. The accuracy of the two methods based on point and line features (i.e., sPLVO and PEL-SLAM) is higher than the methods that use only-point-features, ORB-SLAM2. Meanwhile, the average rotation accuracy of the proposed method is nearly 14.3% higher than that of sPLVO.

Figure 7.

Trajectory comparison between PL-SLAM, ORB-SLAM2, sPLVO, and the proposed PEL-SLAM. (a) The 3D view of the estimated trajectories on EuRoC MH-02. (b) The 3D view of the estimated trajectories on EuRoC V1-01.

4.3. Comparison of Processing Time

Due to the fact that the real-time performance is one of the important indicators of the SLAM process, a comparison between the average processing time per frame of different methods was performed (Table 3). Table 3 shows that the operating time of the SLAM process is directly affected by the image resolution, which means that the process needs more time to process the KITTI images. At the same time, the application of line features increases the running time of the system, especially the detection of line features. The PEL-SLAM reduces the time consumption during the line features detection. Compared with PL-SLAM, the proposed method saves approximately 7.7% and 12.2% of the average running time in KITTI and EuRoC datasets, respectively. The running time of the proposed method is 4.5% faster than that of sPLVO without sacrificing the accuracy of the pose estimation and map construction.

Table 3.

Average processing time per frame on KITTI and EuRoC.

5. Conclusions

In this paper, a method that can extract point and line features with the purpose of using them to improve the positioning, mapping, and loop detection is proposed. The PEL-SLAM system solves the problem of point-based failure in poorly textured scenes. The proposed method uses the faster EDlines instead of the widely used LSD and leverages the entropy scale to measure the uncertainty of line features. Meanwhile, the proposed PL-BoW is constructed and applied to the loop detection, which improves the accuracy of loop keyframe matching. Such mechanisms system enables SLAM to be performed in real-time without loss of accuracy while producing a complete point-linemap. Finally, the detailed experiments on KITTI and EuRoC datasets show that the proposed method outperforms many well-known methods with respect to translation and rotation accuracy in challenging environments, with the additional benefit of using less time consumption compared with the state-of-the-art PL SLAM systems. In the proposed algorithm, the accuracy of the system depends on the performance of the detected visual features. The way of combining other sensors information to improve the robustness of the point-line SLAM will be the focus of future research.

Author Contributions

H.R., A.R.-S. and Y.G. conceived the idea; H.R., X.X. and Y.Z. designed the software, collected the data, and analyzed the experimental data; L.G. collected the related resources and supervised the experiment; A.R.-S. proposed the comment for the paper and experiment. All authors have read and agreed to the published version of the manuscript.

Funding

This work is sponsored by the National Natural Science Foundation of China (NSFC. 61803118), the Special Project of Chongqing Technology Innovation and Application Development (cstc2019jscx-msxmX0423), the Science and Technology Research Program of Chongqing Municipal Education Commission (KJZD-K201804701), and the Post Doc. Foundation of Heilongjiang Province (LBH-Z17053).

Data Availability Statement

The data that support the findings of this study are available from the corresponding author upon request.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Smith, R.C.; Cheeseman, P. On the representation and estimation of spatial uncertainty. Int. J. Robot. Res. 1986, 5, 56–68. [Google Scholar] [CrossRef]

- Bescos, B.; Fácil, J.M.; Civera, J.; Neira, J. DynaSLAM: Tracking, mapping, and inpainting in dynamic scenes. IEEE Robot. Autom. Lett. 2018, 3, 4076–4083. [Google Scholar] [CrossRef] [Green Version]

- Cadena, C.; Carlone, L.; Carrillo, H.; Latif, Y.; Scaramuzza, D.; Neira, J.; Reid, I.; Leonard, J.J. Past, present, and future of simultaneous localization and mapping: Toward the robust-perception age. IEEE Trans. Robot. 2016, 32, 1309–1332. [Google Scholar] [CrossRef] [Green Version]

- Poulose, A.; Han, D.S. Hybrid indoor localization using IMU sensors and smartphone camera. Sensors 2019, 19, 5084. [Google Scholar] [CrossRef] [Green Version]

- Zhang, Z.; Rebecq, H.; Forster, C.; Scaramuzza, D. Benefit of large field-of-view cameras for visual odometry. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 801–808. [Google Scholar]

- Li, H.; Yao, J.; Lu, X.; Wu, J. Combining points and lines for camera pose estimation and optimization in monocular visual odometry. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 1289–1296. [Google Scholar]

- Palmarini, R.; Erkoyuncu, J.A.; Roy, R.; Torabmostaedi, H. A systematic review of augmented reality applications in maintenance. Robot.-Comput.-Integr. Manuf. 2018, 49, 215–228. [Google Scholar] [CrossRef] [Green Version]

- Mur-Artal, R.; Tardós, J.D. Orb-slam2: An open-source slam system for monocular, stereo, and rgb-d cameras. IEEE Trans. Robot. 2017, 33, 1255–1262. [Google Scholar] [CrossRef] [Green Version]

- Li, Y.; Brasch, N.; Wang, Y.; Navab, N.; Tombari, F. Structure-slam: Low-drift monocular slam in indoor environments. IEEE Robot. Autom. Lett. 2020, 5, 6583–6590. [Google Scholar] [CrossRef]

- Zhang, Y.; Hsiao, M.; Zhao, Y.; Dong, J.; Enge, J.J. Distributed Client-Server Optimization for SLAM with Limited On-Device Resources. arXiv 2021, arXiv:2103.14303. [Google Scholar]

- Lu, Y.; Song, D. Robust RGB-D odometry using point and line features. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 3934–3942. [Google Scholar]

- Scaramuzza, D.; Pradalier, C.; Siegwart, R. Performance evaluation of a vertical line descriptor for omnidirectional images. In Proceedings of the 2008 IEEE/RSJ International Conference on Intelligent Robots and Systems, Nice, France, 22–26 September 2008; pp. 3127–3132. [Google Scholar]

- Scaramuzza, D.; Criblez, N.; Martinelli, A.; Siegwart, R. A Robust Descriptor for Tracking Vertical Lines in Omnidirectional Images and its Use in Robot Self-Calibration. Int. J. Robot. Res. 2009, 28, 149–171. [Google Scholar] [CrossRef] [Green Version]

- Pumarola, A.; Vakhitov, A.; Agudo, A.; Sanfeliu, A.; Moreno-Noguer, F. PL-SLAM: Real-time monocular visual SLAM with points and lines. In Proceedings of the 2017 IEEE international conference on robotics and automation (ICRA), Singapore, 29 May–3 June 2017; pp. 4503–4508. [Google Scholar]

- Ma, J.; Wang, X.; He, Y.; Mei, X.; Zhao, J. Line-based stereo slam by junction matching and vanishing point alignment. IEEE Access 2019, 7, 181800–181811. [Google Scholar] [CrossRef]

- Gomez-Ojeda, R.; Moreno, F.A.; Zuniga-Noël, D.; Scaramuzza, D.; Gonzalez-Jimenez, J. PL-SLAM: A stereo SLAM system through the combination of points and line segments. IEEE Trans. Robot. 2019, 35, 734–746. [Google Scholar] [CrossRef] [Green Version]

- Berenguer, Y.; Payá, L.; Valiente, D.; Peidró, A.; Reinoso, O. Relative altitude estimation using omnidirectional imaging and holistic descriptors. Remote Sens. 2019, 11, 323. [Google Scholar] [CrossRef] [Green Version]

- Li, K.; Yao, J. Line segment matching and reconstruction via exploiting coplanar cues. ISPRS J. Photogramm. Remote Sens. 2017, 125, 33–49. [Google Scholar] [CrossRef]

- Zuo, X.; Xie, X.; Liu, Y.; Huang, G. Robust visual SLAM with point and line features. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 1775–1782. [Google Scholar]

- Zhang, G.; Lee, J.H.; Lim, J.; Suh, I.H. Building a 3-D line-based map using stereo SLAM. IEEE Trans. Robot. 2015, 31, 1364–1377. [Google Scholar] [CrossRef]

- Vakhitov, A.; Lempitsky, V. Learnable line segment descriptor for visual slam. IEEE Access 2019, 7, 39923–39934. [Google Scholar] [CrossRef]

- Gomez-Ojeda, R.; Gonzalez-Jimenez, J. Geometric-based line segment tracking for HDR stereo sequences. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 69–74. [Google Scholar]

- Luo, X.; Tan, Z.; Ding, Y. Accurate line reconstruction for point and line-based stereo visual odometry. IEEE Access 2019, 7, 185108–185120. [Google Scholar] [CrossRef]

- Shao, Z.; Chen, M.; Liu, C. Feature matching for illumination variation images. J. Electron. Imaging 2015, 24, 033011. [Google Scholar] [CrossRef]

- Ma, J.; Jiang, X.; Jiang, J.; Zhao, J.; Guo, X. LMR: Learning a two-class classifier for mismatch removal. IEEE Trans. Image Process. 2019, 28, 4045–4059. [Google Scholar] [CrossRef]

- Lim, H.; Kim, Y.; Jung, K.; Hu, S.; Myung, H. Avoiding Degeneracy for Monocular Visual SLAM with Point and Line Features. arXiv 2021, arXiv:2103.01501. [Google Scholar]

- Qian, K.; Zhao, W.; Li, K.; Ma, X.; Yu, H. Visual SLAM With BoPLW Pairs Using Egocentric Stereo Camera for Wearable-Assisted Substation Inspection. IEEE Sensors J. 2019, 20, 1630–1641. [Google Scholar] [CrossRef]

- Zhao, W.; Qian, K.; Ma, Z.; Ma, X.; Yu, H. Stereo visual SLAM using bag of point and line word pairs. In Proceedings of the International Conference on Intelligent Robotics and Applications, Shenyang, China, 8–11 August 2019; pp. 651–661. [Google Scholar]

- Akinlar, C.; Topal, C. EDLines: A real-time line segment detector with a false detection control. Pattern Recognit. Lett. 2011, 32, 1633–1642. [Google Scholar] [CrossRef]

- Von Gioi, R.G.; Jakubowicz, J.; Morel, J.M.; Randall, G. LSD: A fast line segment detector with a false detection control. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 32, 722–732. [Google Scholar] [CrossRef] [PubMed]

- Ma, X.; Ning, S. Real-Time Visual-Inertial SLAM with Point-Line Feature using Improved EDLines Algorithm. In Proceedings of the 2020 IEEE 5th Information Technology and Mechatronics Engineering Conference (ITOEC), Chongqing, China, 12–14 June 2020; pp. 1323–1327. [Google Scholar]

- Zhang, L.; Koch, R. An efficient and robust line segment matching approach based on LBD descriptor and pairwise geometric consistency. J. Vis. Commun. Image Represent. 2013, 24, 794–805. [Google Scholar] [CrossRef]

- Burri, M.; Nikolic, J.; Gohl, P.; Schneider, T.; Rehder, J.; Omari, S.; Achtelik, M.W.; Siegwart, R. The EuRoC micro aerial vehicle datasets. Int. J. Robot. Res. 2016, 35, 1157–1163. [Google Scholar] [CrossRef]

- Geiger, A.; Lenz, P.; Urtasun, R. Are we ready for autonomous driving? the kitti vision benchmark suite. In Proceedings of the 2012 IEEE conference on computer vision and pattern recognition, Providence, RI, USA, 16–21 June 2012; pp. 3354–3361. [Google Scholar]

- Grupp, M. Python Package for the Evaluation of Odometry and SLAM; GitHub: San Francisco, CA, USA, 2017. [Google Scholar]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).