Thermal Imagery Feature Extraction Techniques and the Effects on Machine Learning Models for Smart HVAC Efficiency in Building Energy

Abstract

1. Introduction

2. Related Work

- Collect thermal image data for occupancy detection;

- Study and identify the best textural image features that work for occupancy detection;

- Inference learning based on the feature map output from layers of pretrained CNNs such as ResNet-50 and VGG-16;

- Comparative analysis of different CNN architectures and different feature extraction techniques for thermal-image-based occupancy detection;

- Performance of VGG-16 and ResNet-50 in an end-to-end manner using the transfer learning approach for occupancy detection.

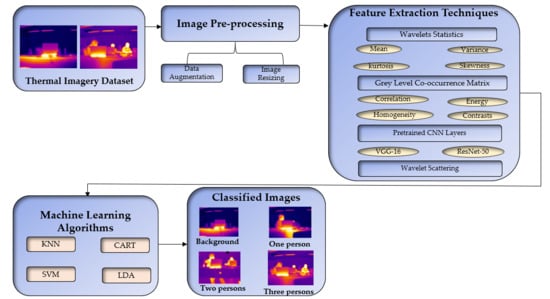

3. Materials and Methods

3.1. Thermal Images Dataset

3.2. Wavelet Statistics Feature Extraction
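As a rough illustration of what wavelet statistical features can look like, the sketch below applies a single-level 2-D Haar transform (the simplest member of the Daubechies family) to a small patch and summarises each sub-band by its mean and standard deviation. The choice of Haar, the single decomposition level, the two statistics, and the names `haar_dwt2`, `wavelet_statistics`, and `patch` are all assumptions made for the example, not details taken from this section.

```python
from statistics import mean, pstdev


def haar_dwt2(image):
    """Single-level 2-D orthonormal Haar transform.

    `image` is a list of equal-length rows with even height and width.
    Returns four sub-bands, each half the size of the input.
    """
    bands = {"approx": [], "horizontal": [], "vertical": [], "diagonal": []}
    for r in range(0, len(image), 2):
        rows = {name: [] for name in bands}
        for c in range(0, len(image[0]), 2):
            a, b = image[r][c], image[r][c + 1]          # top pair of the 2x2 block
            d, e = image[r + 1][c], image[r + 1][c + 1]  # bottom pair
            rows["approx"].append((a + b + d + e) / 2)      # local average (scaled)
            rows["horizontal"].append((a + b - d - e) / 2)  # top minus bottom
            rows["vertical"].append((a - b + d - e) / 2)    # left minus right
            rows["diagonal"].append((a - b - d + e) / 2)    # diagonal difference
        for name in bands:
            bands[name].append(rows[name])
    return bands


def wavelet_statistics(image):
    """Mean and population standard deviation of every sub-band (8 features)."""
    features = []
    for band in haar_dwt2(image).values():
        values = [v for row in band for v in row]
        features += [mean(values), pstdev(values)]
    return features


# Hypothetical 4x4 "thermal" patch: a warm 2x2 region on a cool background.
patch = [
    [20, 20, 20, 20],
    [20, 20, 20, 20],
    [20, 20, 30, 30],
    [20, 20, 30, 30],
]
features = wavelet_statistics(patch)
```

Because the warm region in `patch` is aligned with the 2 × 2 blocks, all detail sub-bands are zero and only the approximation statistics carry information; a real thermal frame would populate all eight values.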

3.3. Gray-Level Co-Occurrence Matrix

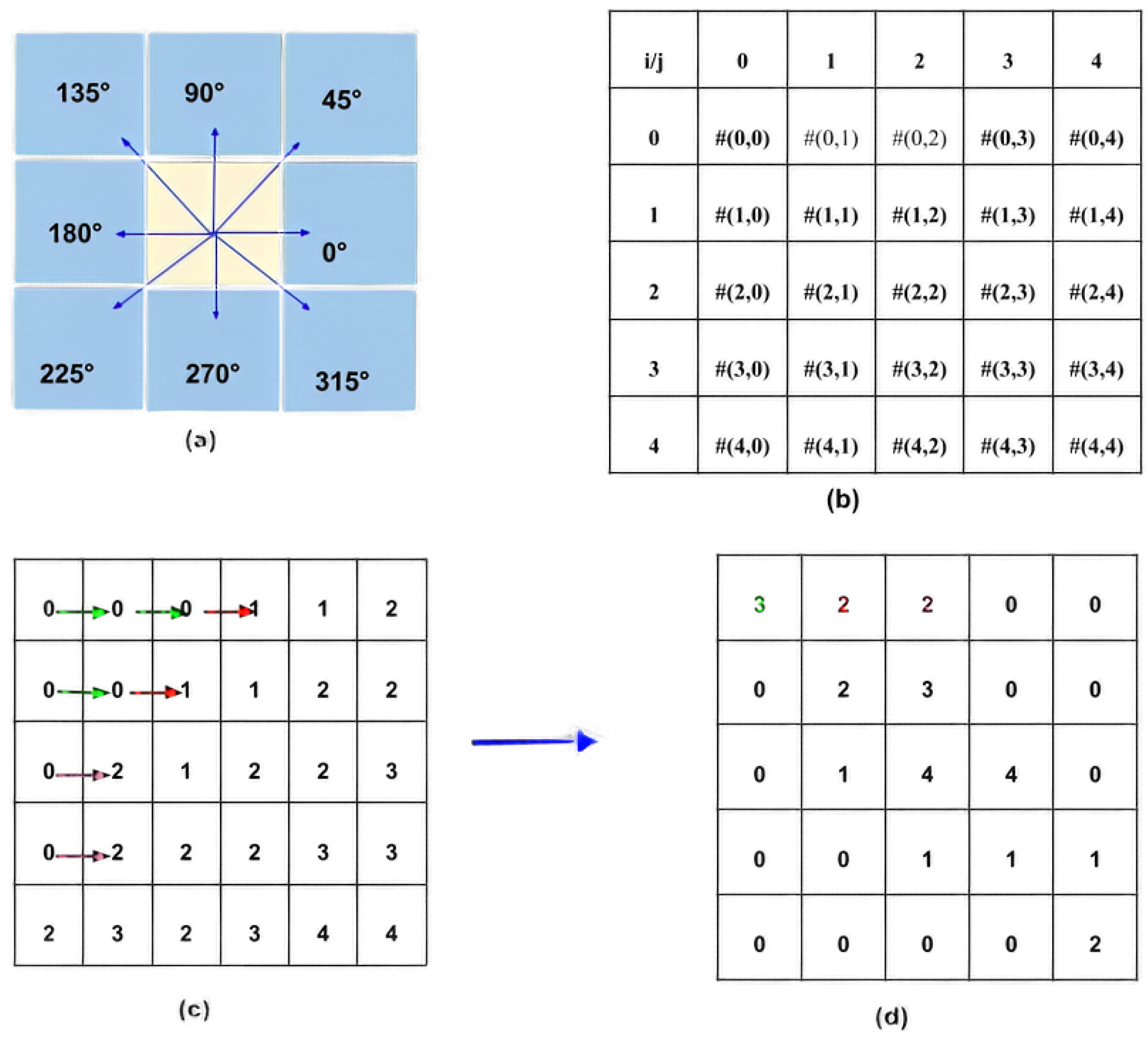
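To make the co-occurrence idea concrete, here is a minimal sketch that builds a symmetric, normalised GLCM for one pixel offset and derives three common texture descriptors from it. It assumes the image has already been quantised to integer gray levels in `range(levels)`; the function names `glcm` and `glcm_features`, the default offset, and the particular descriptors are illustrative choices rather than the configuration used in this work. Note that libraries differ on the exact definitions (for example, some use `1 + |i - j|` in the homogeneity denominator, and some report the square root of the angular second moment as "energy").

```python
def glcm(image, levels, dr=0, dc=1):
    """Symmetric, normalised gray-level co-occurrence matrix.

    `image` holds integers in range(levels); (dr, dc) is the pixel offset,
    so the default counts each pixel together with its right-hand neighbour.
    """
    counts = [[0] * levels for _ in range(levels)]
    n_rows, n_cols = len(image), len(image[0])
    for r in range(n_rows):
        for c in range(n_cols):
            r2, c2 = r + dr, c + dc
            if 0 <= r2 < n_rows and 0 <= c2 < n_cols:
                i, j = image[r][c], image[r2][c2]
                counts[i][j] += 1
                counts[j][i] += 1  # count the pair in both directions
    total = sum(map(sum, counts))
    return [[v / total for v in row] for row in counts]


def glcm_features(p):
    """Contrast, angular second moment and homogeneity of a normalised GLCM."""
    n = len(p)
    cells = [(i, j, p[i][j]) for i in range(n) for j in range(n)]
    return {
        "contrast": sum(v * (i - j) ** 2 for i, j, v in cells),
        "asm": sum(v * v for _, _, v in cells),
        "homogeneity": sum(v / (1 + (i - j) ** 2) for i, j, v in cells),
    }


# Hypothetical 4x4 image already quantised to four gray levels.
quantised = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 2, 2, 2],
    [2, 2, 3, 3],
]
texture = glcm_features(glcm(quantised, levels=4))
```

In practice, features from several offsets (for example horizontal, vertical, and the two diagonals) are usually concatenated so that the descriptor is less sensitive to the orientation of the texture.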

3.4. Wavelet Scattering Transform

3.5. ResNet-50 and SVM

3.6. VGG-16 and SVM

3.7. VGG-16 and ResNet-50 Transfer Learning Frameworks

4. Results and Discussions

4.1. Wavelet Statistical Features and GLCM Modelling Accuracy

4.2. Performance Results Based on Wavelet Scattering Feature Extraction

4.3. Pretrained CNN Deep Features: ResNet-50 with SVM and VGG-16 with SVM

4.4. Analysis of Results of VGG-16 and ResNet-50 Transfer Learning Frameworks

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| HVAC | Heating, Ventilation and Air-conditioning |
| CNN | Convolutional Neural Network |
| ANN | Artificial Neural Network |
| FNN | Feedforward Neural Network |
| PSO | Particle Swarm Optimization |
| HMM | Hidden Markov Model |
| ResNet | Residual Neural Network |
| VGG | Visual Geometry Group |
| CO2 | Carbon Dioxide |
| PIR | Passive Infrared |
| CART | Classification and Regression Trees |
| KNN | K-Nearest Neighbors |
| GLCM | Gray-Level Co-Occurrence Matrix |
| SVM | Support Vector Machine |
| LDA | Linear Discriminant Analysis |
| PCA | Principal Component Analysis |
| CV | Cross Validation |
| AUC | Area Under the Curve |
| ROC | Receiver Operating Characteristic |
| STD | Standard Deviation |
| ACC | Accuracy |
| DWT | Discrete Wavelet Transform |
| CWT | Continuous Wavelet Transform |

Appendix A

Appendix B

Appendix C

Appendix D

Appendix E

Appendix F

References

1. Acquaah, Y.; Steele, J.B.; Gokaraju, B.; Tesiero, R.; Monty, G.H. Occupancy Detection for Smart HVAC Efficiency in Building Energy: A Deep Learning Neural Network Framework using Thermal Imagery. In Proceedings of the 2020 IEEE Applied Imagery Pattern Recognition Workshop (AIPR), Washington DC, USA, 13–15 October 2020; pp. 1–6.

2. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

3. Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2014, arXiv:1409.1556.

4. Kumar, S.; Singh, J.; Singh, O. Ensemble-based extreme learning machine model for occupancy detection with ambient attributes. Int. J. Syst. Assur. Eng. Manag. 2020, 11, 173–183.

5. Vela, A.; Alvarado-Uribe, J.; Davila, M.; Hernandez-Gress, N.; Ceballos, H.G. Estimating Occupancy Levels in Enclosed Spaces Using Environmental Variables: A Fitness Gym and Living Room as Evaluation Scenarios. Sensors 2020, 20, 6579.

6. Weber, M.; Doblander, C.; Mandl, P. Towards the Detection of Building Occupancy with Synthetic Environmental Data. arXiv 2020, arXiv:2010.04209.

7. Wang, C.; Jiang, J.; Roth, T.; Nguyen, C.; Liu, Y.; Lee, H. Integrated sensor data processing for occupancy detection in residential buildings. Energy Build. 2021, 237, 110810.

8. Ivasic-Kos, M.; Kristo, M.; Pobar, M. Person Detection in Thermal Videos Using YOLO. In Proceedings of the Intelligent Systems and Applications, Cham, Switzerland, 24 August 2019; pp. 254–267.

9. Yang, D.B.; Gonzalez-Banos, H.H.; Guibas, L.J. Counting people in crowds with a real-time network of simple image sensors. In Proceedings of the Ninth IEEE International Conference on Computer Vision, Washington, DC, USA, 13–16 October 2003; Volume 121, pp. 122–129.

10. Lu, S.; Hameen, E.; Aziz, A. Dynamic HVAC Operations with Real-Time Vision-Based Occupant Recognition System. In Proceedings of the 2018 ASHRAE Winter Conference, Chicago, IL, USA, 20–24 January 2018.

11. Gomez, A.; Conti, F.; Benini, L. Thermal image-based CNN’s for ultra-low power people recognition. In Proceedings of the 15th ACM International Conference on Computing Frontiers, Ischia, Italy, 8–10 May 2018; pp. 326–331.

12. Yuan, Y.; Li, X.; Liu, Z.; Guan, X. Occupancy Estimation in Buildings Based on Infrared Array Sensors Detection. IEEE Sens. J. 2019, 20, 1043–1053.

13. Gokaraju, B.; Durbha, S.S.; King, R.L.; Younan, N.H. A Machine Learning Based Spatio-Temporal Data Mining Approach for Detection of Harmful Algal Blooms in the Gulf of Mexico. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2011, 4, 710–720.

14. Palacharla, P.K.; Durbha, S.S.; King, R.L.; Gokaraju, B.; Lawrence, G.W. A hyperspectral reflectance data based model inversion methodology to detect reniform nematodes in cotton. In Proceedings of the 2011 6th International Workshop on the Analysis of Multi-temporal Remote Sensing Images (Multi-Temp), Trento, Italy, 12–14 July 2011; pp. 249–252.

15. Gokaraju, B.; Nobrega, R.A.A.; Doss, D.A.; Turlapaty, A.C.; Tesiero, R.C. Data fusion of multi-source satellite data sets for cost-effective disaster management studies. In Proceedings of the SoutheastCon 2017, Concord, NC, USA, 30 March–2 April 2017; pp. 1–5.

16. Gokaraju, B.; Durbha, S.S.; King, R.L.; Younan, N.H. Sensor web and data mining approaches for Harmful algal bloom detection and monitoring in the Gulf of Mexico region. In Proceedings of the 2009 IEEE International Geoscience and Remote Sensing Symposium, Rondebosch, South Africa, 12 July 2009.

17. Gokaraju, B.; Agrawal, R.; Doss, D.A.; Bhattacharya, S. Identification of Spatio-Temporal Patterns in Cyber Security for Detecting the Signature Identity of Hacker. In Proceedings of the SoutheastCon 2018, St. Petersburg, FL, USA, 19–22 April 2018; pp. 1–5.

18. Van Oosterhout, T.; Bakkes, S.; Krose, B. Head detection in stereo data for people counting and segmentation. In Proceedings of the Sixth International Conference on Computer Vision Theory and Applications, Vilamoura, Portugal, 5–7 March 2011; pp. 620–625.

19. Parise, A.; Manso-Callejo, M.A.; Cao, H.; Mendonca, M.; Kohli, H.; Wachowicz, M. Indoor Occupancy Prediction using an IoT Platform. In Proceedings of the 2019 Sixth International Conference on Internet of Things: Systems, Management and Security (IOTSMS), Granada, Spain, 22–25 October 2019; pp. 26–31.

20. Beltran, A.; Erickson, V.; Cerpa, A. ThermoSense: Occupancy Thermal Based Sensing for HVAC Control. In Proceedings of the 5th ACM Workshop on Embedded Systems for Energy-Efficient Buildings, Roma, Italy, 11–15 November 2013; pp. 1–8.

21. Zappi, P.; Farella, E.; Benini, L. Enhancing the spatial resolution of presence detection in a PIR based wireless surveillance network. In Proceedings of the 2007 IEEE Conference on Advanced Video and Signal Based Surveillance, London, UK, 5–7 September 2007; pp. 295–300.

22. Tyndall, A.; Cardell-Oliver, R.; Keating, A. Occupancy Estimation Using a Low-Pixel Count Thermal Imager. IEEE Sens. J. 2016, 16, 3784–3791.

23. Sirmacek, B.; Riveiro, M. Occupancy Prediction Using Low-Cost and Low-Resolution Heat Sensors for Smart Offices. Sensors 2020, 20, 5497.

24. Zou, H.; Zhou, Y.; Yang, J.; Spanos, C.J. Device-free occupancy detection and crowd counting in smart buildings with WiFi-enabled IoT. Energy Build. 2018, 174, 309–322.

25. Wang, J.; Tse, N.C.F.; Chan, J.Y.C. Wi-Fi based occupancy detection in a complex indoor space under discontinuous wireless communication: A robust filtering based on event-triggered updating. Build. Environ. 2019, 151, 228–239.

26. Nivetha, V.; Subathra, B.; Srinivasan, S. Wi-Fi based Occupancy Detection in a Building with Indoor Localization. In Proceedings of the 2019 IEEE International Conference on Intelligent Techniques in Control, Optimization and Signal Processing (INCOS), Virudhunagar, India, 11–13 April 2019; pp. 1–4.

27. Simma, K.C.J.; Mammoli, A.; Bogus, S.M. Real-Time Occupancy Estimation Using WiFi Network to Optimize HVAC Operation. Procedia Comput. Sci. 2019, 155, 495–502.

28. Fatema, N.; Malik, H. Data-Driven Occupancy Detection Hybrid Model Using Particle Swarm Optimization Based Artificial Neural Network. In Metaheuristic and Evolutionary Computation: Algorithms and Applications; Springer: Singapore, 2020; pp. 283–297.

29. Tien, P.W.; Wei, S.; Calautit, J.K.; Darkwa, J.; Wood, C. A vision-based deep learning approach for the detection and prediction of occupancy heat emissions for demand-driven control solutions. Energy Build. 2020, 226, 110386.

30. Sharma, P.; Xu, G.; Hui, X.; Hysell, D.L.; Kan, E.C. Deep-Learning-Based Occupant Counting by Ambient RF Sensing. IEEE Sens. J. 2020, 21, 8564–8574.

31. Rueda, L.; Agbossou, K.; Cardenas, A.; Henao, N.; Kelouwani, S. A comprehensive review of approaches to building occupancy detection. Build. Environ. 2020, 180, 106966.

32. Metwaly, A.; Peña Queralta, J.; Sarker, V.; Nguyen gia, T.; Nasir, O.; Westerlund, T. Edge Computing with Embedded AI: Thermal Image Analysis for Occupancy Estimation in Intelligent Buildings. In Proceedings of the INTelligent Embedded Systems Architectures and Applications, INTESA@ESWEEK 2019, New York, NY, USA, 13–18 October 2019.

33. Feng, C.; Mehmani, A.; Zhang, J. Deep Learning-Based Real-Time Building Occupancy Detection Using AMI Data. IEEE Trans. Smart Grid 2020, 11, 4490–4501.

34. Khalil, M.; McGough, S.; Pourmirza, Z.; Pazhoohesh, M.; Walker, S. Transfer Learning Approach for Occupancy Prediction in Smart Buildings. In Proceedings of the 12th International Renewable Engineering Conference (IREC), Amman, Jordan, 14–15 April 2021; pp. 1–6.

35. Chen, Z.; Jiang, C.; Masood, M.K.; Soh, Y.C.; Wu, M.; Li, X. Deep Learning for Building Occupancy Estimation Using Environmental Sensors. In Deep Learning: Algorithms and Applications; Springer International Publishing: New York, NY, USA, 2019; pp. 335–357.

36. Bruna, J.; Mallat, S. Invariant Scattering Convolution Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 1872–1886.

37. Anden, J.; Mallat, S. Deep Scattering Spectrum. IEEE Trans. Signal Process. 2014, 62, 4114–4128.

38. Sepúlveda, A.; Castillo, F.; Palma, C.; Rodriguez-Fernandez, M. Emotion Recognition from ECG Signals Using Wavelet Scattering and Machine Learning. Appl. Sci. 2021, 11, 4945.

39. Ma, S.; Cheng, B.; Shang, Z.; Liu, G. Scattering transform and LSPTSVM based fault diagnosis of rotating machinery. Mech. Syst. Signal Process. 2017, 104, 155–170.

40. Minaee, S.; Abdolrashidi, A.; Wang, Y. Iris recognition using scattering transform and textural features. In Proceedings of the 2015 IEEE Signal Processing and Signal Processing Education Workshop (SP/SPE), Salt Lake City, UT, USA, 9–12 August 2015; pp. 37–42.

41. Li, J.; Ke, L.; Du, Q.; Ding, X.; Chen, X.; Wang, D. Heart Sound Signal Classification Algorithm: A Combination of Wavelet Scattering Transform and Twin Support Vector Machine. IEEE Access 2019, 7, 179339–179348.

42. Saydjari, A.K.; Portillo, S.K.N.; Slepian, Z.; Kahraman, S.; Burkhart, B.; Finkbeiner, D.P. Classification of Magnetohydrodynamic Simulations Using Wavelet Scattering Transforms. Astrophys. J. 2021, 910, 122.

43. Bishop, C.M. Pattern Recognition and Machine Learning; Springer: New York, NY, USA, 2006.

| Models | GLCM Features: ACC | GLCM Features: STD | GLCM Features: AUC | Wavelet Statistical Features: ACC | Wavelet Statistical Features: STD | Wavelet Statistical Features: AUC | GLCM and Wavelet Statistical Features: ACC | GLCM and Wavelet Statistical Features: STD | GLCM and Wavelet Statistical Features: AUC |
|---|---|---|---|---|---|---|---|---|---|
| LDA | 0.80 | 0.09 | 0.86 | 0.61 | 0.10 | 0.86 | 0.84 | 0.06 | 0.86 |
| KNN | 0.75 | 0.06 | 0.88 | 0.53 | 0.06 | 0.80 | 0.86 | 0.05 | 0.87 |
| CART | 0.8 | 0.065 | 0.84 | 0.84 | 0.06 | 0.80 | 0.85 | 0.06 | 0.83 |
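The ACC and STD columns read naturally as the mean and spread of accuracy over cross-validation folds, although the table itself does not say so. Under that assumption, the sketch below shows how such a pair of numbers could be produced with k-fold cross-validation and a 1-nearest-neighbour classifier. The helper names (`k_fold_indices`, `predict_1nn`, `cross_validate`), the choice of `k`, the use of the population standard deviation, and the toy data are all hypothetical.

```python
from statistics import mean, pstdev


def k_fold_indices(n_samples, k):
    """Split range(n_samples) into k contiguous folds of near-equal size."""
    folds, start = [], 0
    for f in range(k):
        size = n_samples // k + (1 if f < n_samples % k else 0)
        folds.append(list(range(start, start + size)))
        start += size
    return folds


def predict_1nn(train_x, train_y, query):
    """Label of the training sample closest to `query` (squared Euclidean)."""
    dists = [sum((a - b) ** 2 for a, b in zip(x, query)) for x in train_x]
    return train_y[dists.index(min(dists))]


def cross_validate(features, labels, k=5):
    """Return (mean accuracy, standard deviation of accuracy) over k folds.

    Assumes the samples are already shuffled, since folds are contiguous.
    """
    fold_acc = []
    for test_idx in k_fold_indices(len(features), k):
        held_out = set(test_idx)
        train_idx = [i for i in range(len(features)) if i not in held_out]
        train_x = [features[i] for i in train_idx]
        train_y = [labels[i] for i in train_idx]
        correct = sum(
            predict_1nn(train_x, train_y, features[i]) == labels[i]
            for i in test_idx
        )
        fold_acc.append(correct / len(test_idx))
    return mean(fold_acc), pstdev(fold_acc)


# Toy one-dimensional features: class 0 near 0, class 1 near 10, interleaved.
toy_x = [[0.0], [10.0], [0.1], [10.1], [0.2], [10.2], [0.3], [10.3], [0.4], [10.4]]
toy_y = [0, 1, 0, 1, 0, 1, 0, 1, 0, 1]
acc, std = cross_validate(toy_x, toy_y, k=5)
```

A small STD alongside a high ACC indicates that the classifier performs consistently across folds, which is the comparison the table invites between feature sets.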

| Models | GLCM and Daubechies Features: ACC | GLCM and Daubechies Features: STD | GLCM and Daubechies Features: AUC | GLCM and Symlets Features: ACC | GLCM and Symlets Features: STD | GLCM and Symlets Features: AUC |
|---|---|---|---|---|---|---|
| LDA | 0.75 | 0.07 | 0.84 | 0.75 | 0.07 | 0.84 |
| CART | 0.80 | 0.06 | 0.84 | 0.80 | 0.06 | 0.83 |

| Number of Rotations | Feature Matrices (Rows × Columns) | Accuracy (%) | Execution Time (s) |
|---|---|---|---|
| [1 1] | 341 × 16 | 89.55 | 14.78 |
| [2 2] | 341 × 51 | 98.51 | 21.92 |
| [3 3] | 341 × 106 | 98.52 | 3024 |
| [4 4] | 341 × 181 | 98.52 | 57.57 |
| [5 5] | 341 × 276 | 100.00 | 68.12 |

| Number of Principal Components | Polynomial SVM (%) | Quadratic SVM (%) | Cubic SVM (%) | LDA (%) | KNN (%) |
|---|---|---|---|---|---|
| 2 (341 × 2) | 73.13 | 77.40 | 77.10 | 69.50 | 78.00 |
| 5 (341 × 5) | 94.03 | 92.70 | 93.30 | 80.60 | 94.40 |
| 10 (341 × 10) | 98.50 | 94.40 | 93.80 | 88.30 | 92.10 |
| 20 (341 × 20) | 100.00 | 95.90 | 95.90 | 92.40 | 94.40 |
| All features (341 × 276) | 100.00 | 95.60 | 96.20 | 70.70 | 95.90 |

| Pretrained CNN | Accuracy |
|---|---|
| ResNet-50 + SVM | 96.30% |
| VGG-16 + SVM | 97.12% |

| Transfer Learning Framework | Accuracy (%) | Training Time (s) |
|---|---|---|
| ResNet-50 | 98.04 | 2015 |
| VGG-16 | 89.42 | 2954 |

| Reference | Sensor | Output | Processing | Accuracy |
|---|---|---|---|---|
| [1] | Thermal | Numbered | AlexNet CNN | 98.80% |
| [11] | Thermal | Numbered | CNN | 53.70% |
| [22] | PIR+Thermal | Numbered | K* algorithm | 82.56% |
| [21] | PIRs | Numbered | Custom | 89.00% |
| [32] | Thermal | Numbered | FNN | 98.9% |
| This paper | Thermal | Numbered | Wavelet scattering and SVM | 100% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Acquaah, Y.T.; Gokaraju, B.; Tesiero, R.C., III; Monty, G.H. Thermal Imagery Feature Extraction Techniques and the Effects on Machine Learning Models for Smart HVAC Efficiency in Building Energy. Remote Sens. 2021, 13, 3847. https://doi.org/10.3390/rs13193847
