Review on Active and Passive Remote Sensing Techniques for Road Extraction

Abstract

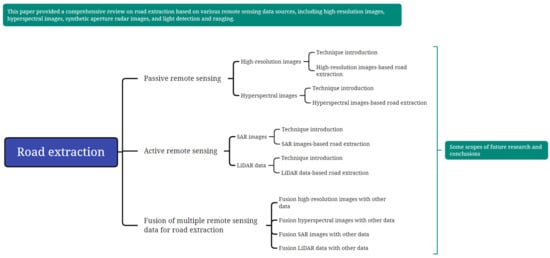

:1. Introduction

2. Overview of the Existing Data Acquisition Techniques for Road Extraction

2.1. High-Resolution Imaging Technology

2.1.1. Data Acquisition Methods and Characteristics

2.1.2. Typical Sensors

2.1.3. Application Status and Prospects

2.2. Hyperspectral Imaging Technology

2.2.1. Data Acquisition Methods and Characteristics

2.2.2. Typical Sensors

2.2.3. Application Status and Prospects

2.3. SAR Imaging Technology

2.3.1. Data Acquisition Methods and Characteristics

2.3.2. Typical Sensors

2.3.3. Application Status and Prospects

2.4. Airborne Laser Scanning (ALS)

2.4.1. Data Acquisition Methods and Characteristics

2.4.2. Typical Sensors

2.4.3. Application Status and Prospects

3. Road Extraction Based on Different Data Sources

3.1. Road Extraction Based on High-Spatial Resolution Images

3.1.1. Main Methods

3.1.2. Status and Prospects

3.2. Road Extraction Based on Hyperspectral Images

3.2.1. Main Methods

3.2.2. Status and Prospects

3.3. Road Extraction Based on SAR Images

3.3.1. Main Methods

3.3.2. Status and Prospects

3.4. Road Extraction Based on LiDAR Data

3.4.1. Main Methods

3.4.2. Status and Prospects

4. Combination of Multisource Data for Road Extraction

4.1. Combination of High-Resolution Images with Other Data for Road Extraction

4.2. Combination of Hyperspectral Images with Other Data for Road Extraction

4.3. Combination of SAR Images with Other Data for Road Extraction

4.4. Combination of LiDAR with Other Data for Road Extraction

4.5. Some Scopes of Future Research in Road Extraction

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Huang, B.; Zhao, B.; Song, Y. Urban Land-Use Mapping Using a Deep Convolutional Neural Network with High Spatial Resolution Multispectral Remote Sensing Imagery. Remote Sens. Environ. 2018, 214, 73–86. [Google Scholar] [CrossRef]

- Wang, J.; Treitz, P.M.; Howarth, P.J. Road Network Detection from SPOT Imagery for Updating Geographical Information Systems in the Rural–Urban Fringe. Int. J. Geogr. Inf. Syst. 1992, 6, 141–157. [Google Scholar] [CrossRef]

- Mena, J.B. State of the Art on Automatic Road Extraction for GIS Update: A Novel Classification. Pattern Recognit. Lett. 2003, 24, 3037–3058. [Google Scholar] [CrossRef]

- Coulibaly, I.; Spiric, N.; Sghaier, M.O.; Manzo-Vargas, W.; Lepage, R.; St-Jacques, M. Road Extraction from High Resolution Remote Sensing Image Using Multiresolution in Case of Major Disaster. In Proceedings of the 2014 IEEE Geoscience and Remote Sensing Symposium, Quebec City, QC, Canada, 13–18 July 2014; pp. 2712–2715. [Google Scholar]

- Cheng, G.; Zhu, F.; Xiang, S.; Pan, C. Road Centerline Extraction via Semisupervised Segmentation and Multidirection Nonmaximum Suppression. IEEE Geosci. Remote Sens. Lett. 2016, 13, 545–549. [Google Scholar] [CrossRef]

- McKeown, D.M. Toward automatic cartographic feature extraction. In Mapping and Spatial Modelling for Navigation; Pau, L.F., Ed.; Springer: Berlin, Heidelberg, 1990; pp. 149–180. [Google Scholar]

- Robinson, A.H.; Morrison, J.L.; Muehrcke, P.C. Cartography 1950–2000. Trans. Inst. Br. Geogr. 1977, 2, 3–18. [Google Scholar] [CrossRef]

- Ulmke, M.; Koch, W. Road Map Extraction Using GMTI Tracking. In Proceedings of the 2006 9th International Conference on Information Fusion, Florence, Italy, 10–13 July 2006; pp. 1–7. [Google Scholar]

- Koch, W.; Koller, J.; Ulmke, M. Ground Target Tracking and Road Map Extraction. ISPRS J. Photogramm. Remote Sens. 2006, 61, 197–208. [Google Scholar] [CrossRef]

- Niu, Z.; Li, S.; Pousaeid, N. Road Extraction Using Smart Phones GPS. In Proceedings of the 2nd International Conference on Computing for Geospatial Research & Applications, Washington, DC, USA, 23–25 May 2011; Association for Computing Machinery: New York, NY, USA, 2011; pp. 1–6. [Google Scholar]

- Bhoraskar, R.; Vankadhara, N.; Raman, B.; Kulkarni, P. Wolverine: Traffic and Road Condition Estimation Using Smartphone Sensors. In Proceedings of the 2012 Fourth International Conference on Communication Systems and Networks (COMSNETS 2012), Bangalore, India, 3–7 January 2012; pp. 1–6. [Google Scholar]

- Balali, V.; Ashouri Rad, A.; Golparvar-Fard, M. Detection, Classification, and Mapping of U.S. Traffic Signs Using Google Street View Images for Roadway Inventory Management. Vis. Eng. 2015, 3, 15. [Google Scholar] [CrossRef] [Green Version]

- Zhang, M.; Liu, Y.; Luo, S.; Gao, S. Research on Baidu Street View Road Crack Information Extraction Based on Deep Learning Method. J. Phys. Conf. Ser. 2020, 1616, 012086. [Google Scholar] [CrossRef]

- Li, D.; Ke, Y.; Gong, H.; Li, X. Object-Based Urban Tree Species Classification Using Bi-Temporal WorldView-2 and WorldView-3 Images. Remote Sens. 2015, 7, 16917–16937. [Google Scholar] [CrossRef] [Green Version]

- Pan, Y.; Zhang, X.; Cervone, G.; Yang, L. Detection of Asphalt Pavement Potholes and Cracks Based on the Unmanned Aerial Vehicle Multispectral Imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 3701–3712. [Google Scholar] [CrossRef]

- Irwin, K.; Beaulne, D.; Braun, A.; Fotopoulos, G. Fusion of SAR, Optical Imagery and Airborne LiDAR for Surface Water Detection. Remote Sens. 2017, 9, 890. [Google Scholar] [CrossRef] [Green Version]

- Wang, W.; Yang, N.; Zhang, Y.; Wang, F.; Cao, T.; Eklund, P. A Review of Road Extraction from Remote Sensing Images. J. Traffic Transp. Eng. 2016, 3, 271–282. [Google Scholar] [CrossRef] [Green Version]

- Hu, J.; Razdan, A.; Femiani, J.C.; Cui, M.; Wonka, P. Road Network Extraction and Intersection Detection From Aerial Images by Tracking Road Footprints. IEEE Trans. Geosci. Remote Sens. 2007, 45, 4144–4157. [Google Scholar] [CrossRef]

- Shen, J.; Lin, X.; Shi, Y.; Wong, C. Knowledge-Based Road Extraction from High Resolution Remotely Sensed Imagery. In Proceedings of the 2008 Congress on Image and Signal Processing, Sanya, China, 27–30 May 2008; IEEE: New York, NY, USA, 2008; Volume 4, pp. 608–612. [Google Scholar]

- George, J.; Mary, L.; Riyas, K.S. Vehicle Detection and Classification from Acoustic Signal Using ANN and KNN. In Proceedings of the 2013 International Conference on Control Communication and Computing (ICCC), Thiruvananthapuram, India, 13–15 December 2013; IEEE: New York, NY, USA, 2013; pp. 436–439. [Google Scholar]

- Li, J.; Chen, M. On-Road Multiple Obstacles Detection in Dynamical Background. In Proceedings of the 2014 Sixth International Conference on Intelligent Human-Machine Systems and Cybernetics, Hangzhou, China, 26–27 August 2014; IEEE: New York, NY, USA, 2014; Volume 1, pp. 102–105. [Google Scholar]

- Simler, C. An Improved Road and Building Detector on VHR Images. In Proceedings of the 2011 IEEE International Geoscience and Remote Sensing Symposium, Vancouver, BC, Canada, 24–29 July 2011; IEEE: New York, NY, USA, 2011; pp. 507–510. [Google Scholar]

- Zhu, D.-M.; Wen, X.; Ling, C.-L. Road Extraction Based on the Algorithms of MRF and Hybrid Model of SVM and FCM. In Proceedings of the 2011 International Symposium on Image and Data Fusion, Tengchong, China, 9–11 August 2011; IEEE: New York, NY, USA, 2011; pp. 1–4. [Google Scholar]

- Zhou, J.; Bischof, W.F.; Caelli, T. Road Tracking in Aerial Images Based on Human–Computer Interaction and Bayesian Filtering. ISPRS J. Photogramm. Remote Sens. 2006, 61, 108–124. [Google Scholar] [CrossRef] [Green Version]

- Miao, Z.; Wang, B.; Shi, W.; Zhang, H. A Semi-Automatic Method for Road Centerline Extraction From VHR Images. IEEE Geosci. Remote Sens. Lett. 2014, 11, 1856–1860. [Google Scholar] [CrossRef]

- Pawar, V.; Zaveri, M. Graph Based K-Nearest Neighbor Minutiae Clustering for Fingerprint Recognition. In Proceedings of the 2014 10th International Conference on Natural Computation (ICNC), Xiamen, China, 19–21 August 2014; IEEE: New York, NY, USA, 2014; pp. 675–680. [Google Scholar]

- Anil, P.N.; Natarajan, S. A Novel Approach Using Active Contour Model for Semi-Automatic Road Extraction from High Resolution Satellite Imagery. In Proceedings of the 2010 Second International Conference on Machine Learning and Computing, Bangalore, India, 9–11 February 2010; IEEE: New York, NY, USA, 2010; pp. 263–266. [Google Scholar]

- Abraham, L.; Sasikumar, M. A Fuzzy Based Road Network Extraction from Degraded Satellite Images. In Proceedings of the 2013 International Conference on Advances in Computing, Communications and Informatics (ICACCI), Mysore, India, 22–25 August 2013; IEEE: New York, NY, USA, 2013; pp. 2032–2036. [Google Scholar]

- Awrangjeb, M. Road Traffic Island Extraction from High Resolution Aerial Imagery Using Active Contours. In Proceedings of the Australian Remote Sensing & Photogrammetry Conference (ARSPC 2010), Alice Springs, Australia, 13–17 September 2010. [Google Scholar]

- Valero, S.; Chanussot, J.; Benediktsson, J.A.; Talbot, H.; Waske, B. Advanced Directional Mathematical Morphology for the Detection of the Road Network in Very High Resolution Remote Sensing Images. Pattern Recognit. Lett. 2010, 31, 1120–1127. [Google Scholar] [CrossRef] [Green Version]

- Ma, R.; Wang, W.; Liu, S. Extracting Roads Based on Retinex and Improved Canny Operator with Shape Criteria in Vague and Unevenly Illuminated Aerial Images. J. Appl. Remote Sens. 2012, 6, 063610. [Google Scholar]

- Movaghati, S.; Moghaddamjoo, A.; Tavakoli, A. Road Extraction from Satellite Images Using Particle Filtering and Extended Kalman Filtering. IEEE Trans. Geosci. Remote Sens. 2010, 48, 2807–2817. [Google Scholar] [CrossRef]

- Barzohar, M.; Cooper, D.B. Automatic Finding of Main Roads in Aerial Images by Using Geometric-Stochastic Models and Estimation. IEEE Trans. Pattern Anal. Mach. Intell. 1996, 18, 707–721. [Google Scholar] [CrossRef]

- Abdollahi, A.; Pradhan, B.; Shukla, N.; Chakraborty, S.; Alamri, A. Deep Learning Approaches Applied to Remote Sensing Datasets for Road Extraction: A State-Of-The-Art Review. Remote Sens. 2020, 12, 1444. [Google Scholar] [CrossRef]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Identity Mappings in Deep Residual Networks, Proceedings of the Computer Vision—ECCV 2016, Amsterdam, The Netherlands, 8–16 October 2016; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Springer International Publishing: Berlin/Heidelberg, Germany, 2016; pp. 630–645. [Google Scholar]

- Zhong, Z.; Li, J.; Cui, W.; Jiang, H. Fully Convolutional Networks for Building and Road Extraction: Preliminary Results. In Proceedings of the 2016 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Beijing, China, 10–15 July 2016; pp. 1591–1594. [Google Scholar]

- Wei, Y.; Wang, Z.; Xu, M. Road Structure Refined CNN for Road Extraction in Aerial Image. IEEE Geosci. Remote Sens. Lett. 2017, 14, 709–713. [Google Scholar] [CrossRef]

- Alshehhi, R.; Marpu, P.R.; Woon, W.L.; Dalla Mura, M. Simultaneous Extraction of Roads and Buildings in Remote Sensing Imagery with Convolutional Neural Networks. ISPRS J. Photogramm. Remote Sens. 2017, 130, 139–149. [Google Scholar] [CrossRef]

- Liu, R.; Miao, Q.; Song, J.; Quan, Y.; Li, Y.; Xu, P.; Dai, J. Multiscale Road Centerlines Extraction from High-Resolution Aerial Imagery. Neurocomputing 2019, 329, 384–396. [Google Scholar] [CrossRef]

- Li, P.; Zang, Y.; Wang, C.; Li, J.; Cheng, M.; Luo, L.; Yu, Y. Road Network Extraction via Deep Learning and Line Integral Convolution. In Proceedings of the 2016 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Beijing, China, 10–15 July 2016; pp. 1599–1602. [Google Scholar]

- Varia, N.; Dokania, A.; Senthilnath, J. DeepExt: A Convolution Neural Network for Road Extraction Using RGB Images Captured by UAV. In Proceedings of the 2018 IEEE Symposium Series on Computational Intelligence (SSCI), Bangalore, India, 18–21 November 2018; pp. 1890–1895. [Google Scholar]

- Abdollahi, A.; Pradhan, B.; Shukla, N. Extraction of Road Features from UAV Images Using a Novel Level Set Segmentation Approach. Int. J. Urban Sci. 2019, 23, 391–405. [Google Scholar] [CrossRef]

- Moranduzzo, T.; Melgani, F. Detecting Cars in UAV Images With a Catalog-Based Approach. IEEE Trans. Geosci. Remote Sens. 2014, 52, 6356–6367. [Google Scholar] [CrossRef]

- Yang, B.; Chen, C. Automatic Registration of UAV-Borne Sequent Images and LiDAR Data. ISPRS J. Photogramm. Remote Sens. 2015, 101, 262–274. [Google Scholar] [CrossRef]

- Kestur, R.; Farooq, S.; Abdal, R.; Mehraj, E.; Narasipura, O.S.; Mudigere, M. UFCN: A Fully Convolutional Neural Network for Road Extraction in RGB Imagery Acquired by Remote Sensing from an Unmanned Aerial Vehicle. J. Appl. Remote Sens. 2018, 12, 016020. [Google Scholar] [CrossRef]

- Henry, C.; Azimi, S.M.; Merkle, N. Road Segmentation in SAR Satellite Images With Deep Fully Convolutional Neural Networks. IEEE Geosci. Remote Sens. Lett. 2018, 15, 1867–1871. [Google Scholar] [CrossRef] [Green Version]

- Panboonyuen, T.; Vateekul, P.; Jitkajornwanich, K.; Lawawirojwong, S. An Enhanced Deep Convolutional Encoder-Decoder Network for Road Segmentation on Aerial Imagery, Proceedings of the Recent Advances in Information and Communication Technology, Bangkok, Thailand, 5–6 July 2017; Meesad, P., Sodsee, S., Unger, H., Eds.; Springer International Publishing: Berlin/Heidelberg, Germany, 2018; pp. 191–201. [Google Scholar]

- Wang, J.; Song, J.; Chen, M.; Yang, Z. Road Network Extraction: A Neural-Dynamic Framework Based on Deep Learning and a Finite State Machine. Int. J. Remote Sens. 2015, 36, 3144–3169. [Google Scholar] [CrossRef]

- Panboonyuen, T.; Jitkajornwanich, K.; Lawawirojwong, S.; Srestasathiern, P.; Vateekul, P. Road Segmentation of Remotely-Sensed Images Using Deep Convolutional Neural Networks with Landscape Metrics and Conditional Random Fields. Remote Sens. 2017, 9, 680. [Google Scholar] [CrossRef] [Green Version]

- Constantin, A.; Ding, J.-J.; Lee, Y.-C. Accurate Road Detection from Satellite Images Using Modified U-Net. In Proceedings of the 2018 IEEE Asia Pacific Conference on Circuits and Systems (APCCAS), Chengdu, China, 26–30 October 2018; pp. 423–426. [Google Scholar]

- Zhang, Z.; Liu, Q.; Wang, Y. Road Extraction by Deep Residual U-Net. IEEE Geosci. Remote Sens. Lett. 2018, 15, 749–753. [Google Scholar] [CrossRef] [Green Version]

- Hong, Z.; Ming, D.; Zhou, K.; Guo, Y.; Lu, T. Road Extraction From a High Spatial Resolution Remote Sensing Image Based on Richer Convolutional Features. IEEE Access 2018, 6, 46988–47000. [Google Scholar] [CrossRef]

- Xin, J.; Zhang, X.; Zhang, Z.; Fang, W. Road Extraction of High-Resolution Remote Sensing Images Derived from DenseUNet. Remote Sens. 2019, 11, 2499. [Google Scholar] [CrossRef] [Green Version]

- Li, Y.; Xu, L.; Rao, J.; Guo, L.; Yan, Z.; Jin, S. A Y-Net Deep Learning Method for Road Segmentation Using High-Resolution Visible Remote Sensing Images. Remote Sens. Lett. 2019, 10, 381–390. [Google Scholar] [CrossRef]

- Cheng, G.; Wang, Y.; Xu, S.; Wang, H.; Xiang, S.; Pan, C. Automatic Road Detection and Centerline Extraction via Cascaded End-to-End Convolutional Neural Network. IEEE Trans. Geosci. Remote Sens. 2017, 55, 3322–3337. [Google Scholar] [CrossRef]

- Xu, Y.; Xie, Z.; Feng, Y.; Chen, Z. Road Extraction from High-Resolution Remote Sensing Imagery Using Deep Learning. Remote Sens. 2018, 10, 1461. [Google Scholar] [CrossRef] [Green Version]

- Buslaev, A.; Seferbekov, S.; Iglovikov, V.; Shvets, A. Fully Convolutional Network for Automatic Road Extraction from Satellite Imagery. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA, 18–22 June 2018; pp. 197–1973. [Google Scholar]

- Zhou, L.; Zhang, C.; Wu, M. D-LinkNet: LinkNet with Pretrained Encoder and Dilated Convolution for High Resolution Satellite Imagery Road Extraction. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA, 18–22 June 2018; pp. 192–1924. [Google Scholar]

- Doshi, J. Residual Inception Skip Network for Binary Segmentation. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA, 18–22 June 2018; pp. 206–2063. [Google Scholar]

- Xu, Y.; Feng, Y.; Xie, Z.; Hu, A.; Zhang, X. A Research on Extracting Road Network from High Resolution Remote Sensing Imagery. In Proceedings of the 2018 26th International Conference on Geoinformatics, Kunming, China, 18–30 June 2018; pp. 1–4. [Google Scholar]

- He, H.; Yang, D.; Wang, S.; Wang, S.; Liu, X. Road Segmentation of Cross-Modal Remote Sensing Images Using Deep Segmentation Network and Transfer Learning. Ind. Robot Int. J. Robot. Res. Appl. 2019, 46, 384–390. [Google Scholar] [CrossRef]

- Xia, W.; Zhang, Y.-Z.; Liu, J.; Luo, L.; Yang, K. Road Extraction from High Resolution Image with Deep Convolution Network—A Case Study of GF-2 Image. Proceedings 2018, 2, 325. [Google Scholar] [CrossRef] [Green Version]

- Gao, L.; Song, W.; Dai, J.; Chen, Y. Road Extraction from High-Resolution Remote Sensing Imagery Using Refined Deep Residual Convolutional Neural Network. Remote Sens. 2019, 11, 552. [Google Scholar] [CrossRef] [Green Version]

- Xie, Y.; Miao, F.; Zhou, K.; Peng, J. HsgNet: A Road Extraction Network Based on Global Perception of High-Order Spatial Information. ISPRS Int. J. Geo-Inf. 2019, 8, 571. [Google Scholar] [CrossRef] [Green Version]

- Costea, D.; Marcu, A.; Slusanschi, E.; Leordeanu, M. Creating Roadmaps in Aerial Images with Generative Adversarial Networks and Smoothing-Based Optimization. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Venice, Italy, 22–29 October 2017; pp. 2100–2109. [Google Scholar]

- Shi, Q.; Liu, X.; Li, X. Road Detection from Remote Sensing Images by Generative Adversarial Networks. IEEE Access 2017, 6, 25486–25494. [Google Scholar] [CrossRef]

- Belli, D.; Kipf, T. Image-Conditioned Graph Generation for Road Network Extraction. arXiv 2019, arXiv:1910.14388, 4388. [Google Scholar]

- Castrejon, L.; Kundu, K.; Urtasun, R.; Fidler, S. Annotating Object Instances With a Polygon-RNN. arXiv 2017, arXiv:1704.05548, 5230–5238. [Google Scholar]

- Acuna, D.; Ling, H.; Kar, A.; Fidler, S. Efficient Interactive Annotation of Segmentation Datasets With Polygon-RNN++. arXiv 2018, arXiv:1803.09693, 859–868. [Google Scholar]

- Li, Z.; Wegner, J.D.; Lucchi, A. Topological Map Extraction From Overhead Images. arXiv 2019, arXiv:1812.01497, 1715–1724. [Google Scholar]

- Lian, R.; Wang, W.; Mustafa, N.; Huang, L. Road Extraction Methods in High-Resolution Remote Sensing Images: A Comprehensive Review. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 5489–5507. [Google Scholar] [CrossRef]

- Sun, N.; Zhang, J.X.; Huang, G.M.; Zhao, Z.; Lu, L.J. Review of Road Extraction Methods from SAR Image. IOP Conf. Ser. Earth Environ. Sci. 2014, 17, 012245. [Google Scholar] [CrossRef] [Green Version]

- Sun, Z.; Geng, H.; Lu, Z.; Scherer, R.; Woźniak, M. Review of Road Segmentation for SAR Images. Remote Sens. 2021, 13, 1011. [Google Scholar] [CrossRef]

- Wang, G.; Weng, Q. Remote Sensing of Natural Resources; CRC Press: Boca Raton, FL, USA, 2013; ISBN 978-1-4665-5692-8. [Google Scholar]

- Gargoum, S.; El-Basyouny, K. Automated Extraction of Road Features Using LiDAR Data: A Review of LiDAR Applications in Transportation. In Proceedings of the 2017 4th International Conference on Transportation Information and Safety (ICTIS), Banff, AB, Canada, 8–10 August 2017; pp. 563–574. [Google Scholar]

- Ma, L.; Li, Y.; Li, J.; Wang, C.; Wang, R.; Chapman, M.A. Mobile Laser Scanned Point-Clouds for Road Object Detection and Extraction: A Review. Remote Sens. 2018, 10, 1531. [Google Scholar] [CrossRef] [Green Version]

- Wang, R.; Peethambaran, J.; Chen, D. LiDAR Point Clouds to 3-D Urban Models: A Review. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 606–627. [Google Scholar] [CrossRef]

- Alparone, L.; Baronti, S.; Garzelli, A.; Nencini, F. A Global Quality Measurement of Pan-Sharpened Multispectral Imagery. IEEE Geosci. Remote Sens. Lett. 2004, 1, 313–317. [Google Scholar] [CrossRef]

- French, A.N.; Norman, J.M.; Anderson, M.C. A Simple and Fast Atmospheric Correction for Spaceborne Remote Sensing of Surface Temperature. Remote Sens. Environ. 2003, 87, 326–333. [Google Scholar] [CrossRef]

- Jia, J.; Wang, Y.; Chen, J.; Guo, R.; Shu, R.; Wang, J. Status and Application of Advanced Airborne Hyperspectral Imaging Technology: A Review. Infrared Phys. Technol. 2020, 104, 103115. [Google Scholar] [CrossRef]

- Turner, D.; Lucieer, A.; Watson, C. An Automated Technique for Generating Georectified Mosaics from Ultra-High Resolution Unmanned Aerial Vehicle (UAV) Imagery, Based on Structure from Motion (SfM) Point Clouds. Remote Sens. 2012, 4, 1392–1410. [Google Scholar] [CrossRef] [Green Version]

- Ozesmi, S.L.; Bauer, M.E. Satellite Remote Sensing of Wetlands. Wetl. Ecol. Manag. Vol. 2002, 10, 381–402. [Google Scholar] [CrossRef]

- Sato, H.P.; Hasegawa, H.; Fujiwara, S.; Tobita, M.; Koarai, M.; Une, H.; Iwahashi, J. Interpretation of Landslide Distribution Triggered by the 2005 Northern Pakistan Earthquake Using SPOT 5 Imagery. Landslides 2007, 4, 113–122. [Google Scholar] [CrossRef]

- Yadav, S.K.; Singh, S.K.; Gupta, M.; Srivastava, P.K. Morphometric Analysis of Upper Tons Basin from Northern Foreland of Peninsular India Using CARTOSAT Satellite and GIS. Geocarto Int. 2014, 29, 895–914. [Google Scholar] [CrossRef]

- Dial, G.; Bowen, H.; Gerlach, F.; Grodecki, J.; Oleszczuk, R. IKONOS Satellite, Imagery, and Products. Remote Sens. Environ. 2003, 88, 23–36. [Google Scholar] [CrossRef]

- Li, D.; Wang, M.; Jiang, J. China’s High-Resolution Optical Remote Sensing Satellites and Their Mapping Applications. Geo-Spat. Inf. Sci. 2021, 24, 85–94. [Google Scholar] [CrossRef]

- Hao, P.; Wang, L.; Niu, Z. Potential of Multitemporal Gaofen-1 Panchromatic/Multispectral Images for Crop Classification: Case Study in Xinjiang Uygur Autonomous Region, China. J. Appl. Remote Sens. 2015, 9, 096035. [Google Scholar] [CrossRef]

- Zheng, Y.; Dai, Q.; Tu, Z.; Wang, L. Guided Image Filtering-Based Pan-Sharpening Method: A Case Study of GaoFen-2 Imagery. ISPRS Int. J. Geo-Inf. 2017, 6, 404. [Google Scholar] [CrossRef] [Green Version]

- Yang, A.; Zhong, B.; Hu, L.; Wu, S.; Xu, Z.; Wu, H.; Wu, J.; Gong, X.; Wang, H.; Liu, Q. Radiometric Cross-Calibration of the Wide Field View Camera Onboard GaoFen-6 in Multispectral Bands. Remote Sens. 2020, 12, 1037. [Google Scholar] [CrossRef] [Green Version]

- Liu, Y.-K.; Liu, Y.-K.; Ma, L.-L.; Ma, L.-L.; Wang, N.; Qian, Y.-G.; Qian, Y.-G.; Zhao, Y.-G.; Qiu, S.; Gao, C.-X.; et al. On-Orbit Radiometric Calibration of the Optical Sensors on-Board SuperView-1 Satellite Using Three Independent Methods. Opt. Express 2020, 28, 11085–11105. [Google Scholar] [CrossRef]

- Aguilar, M.A.; Saldaña, M.M.; Aguilar, F.J. GeoEye-1 and WorldView-2 Pan-Sharpened Imagery for Object-Based Classification in Urban Environments. Int. J. Remote Sens. 2013, 34, 2583–2606. [Google Scholar] [CrossRef]

- Shi, Y.; Huang, W.; Ye, H.; Ruan, C.; Xing, N.; Geng, Y.; Dong, Y.; Peng, D. Partial Least Square Discriminant Analysis Based on Normalized Two-Stage Vegetation Indices for Mapping Damage from Rice Diseases Using PlanetScope Datasets. Sensors 2018, 18, 1901. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Meusburger, K.; Bänninger, D.; Alewell, C. Estimating Vegetation Parameter for Soil Erosion Assessment in an Alpine Catchment by Means of QuickBird Imagery. Int. J. Appl. Earth Obs. Geoinf. 2010, 12, 201–207. [Google Scholar] [CrossRef]

- Alkan, M.; Buyuksalih, G.; Sefercik, U.G.; Jacobsen, K. Geometric Accuracy and Information Content of WorldView-1 Images. Opt. Eng. 2013, 52, 026201. [Google Scholar] [CrossRef]

- Ye, B.; Tian, S.; Ge, J.; Sun, Y. Assessment of WorldView-3 Data for Lithological Mapping. Remote Sens. 2017, 9, 1132. [Google Scholar] [CrossRef]

- Akumu, C.E.; Amadi, E.O.; Dennis, S. Application of Drone and WorldView-4 Satellite Data in Mapping and Monitoring Grazing Land Cover and Pasture Quality: Pre- and Post-Flooding. Land 2021, 10, 321. [Google Scholar] [CrossRef]

- Mulawa, D. On-Orbit Geometric Calibration of the OrbView-3 High Resolution Imaging Satellite. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2004, 35, 1–6. [Google Scholar]

- Tyc, G.; Tulip, J.; Schulten, D.; Krischke, M.; Oxfort, M. The RapidEye Mission Design. Acta Astronaut. 2005, 56, 213–219. [Google Scholar] [CrossRef]

- Oh, K.-Y.; Jung, H.-S. Automated Bias-Compensation Approach for Pushbroom Sensor Modeling Using Digital Elevation Model. IEEE Trans. Geosci. Remote Sens. 2016, 54, 3400–3409. [Google Scholar] [CrossRef]

- Kim, J.; Jin, C.; Choi, C.; Ahn, H. Radiometric Characterization and Validation for the KOMPSAT-3 Sensor. Remote Sens. Lett. 2015, 6, 529–538. [Google Scholar] [CrossRef]

- Seo, D.; Oh, J.; Lee, C.; Lee, D.; Choi, H. Geometric Calibration and Validation of Kompsat-3A AEISS-A Camera. Sensors 2016, 16, 1776. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Kubik, P.; Lebègue, L.; Fourest, S.; Delvit, J.-M.; de Lussy, F.; Greslou, D.; Blanchet, G. First In-Flight Results of Pleiades 1A Innovative Methods for Optical Calibration. In Proceedings of the International Conference on Space Optics—ICSO 2012; International Society for Optics and Photonics, Ajaccio, France, 9–12 October 2012; Volume 10564, p. 1056407. [Google Scholar]

- Panagiotakis, E.; Chrysoulakis, N.; Charalampopoulou, V.; Poursanidis, D. Validation of Pleiades Tri-Stereo DSM in Urban Areas. ISPRS Int. J. Geo-Inf. 2018, 7, 118. [Google Scholar] [CrossRef] [Green Version]

- Yang, G.D.; Zhu, X. Ortho-Rectification of SPOT 6 Satellite Images Based on RPC Models. Appl. Mech. Mater. 2013, 392, 808–814. [Google Scholar] [CrossRef]

- Wilson, K.L.; Skinner, M.A.; Lotze, H.K. Eelgrass (Zostera Marina) and Benthic Habitat Mapping in Atlantic Canada Using High-Resolution SPOT 6/7 Satellite Imagery. Estuar. Coast. Shelf Sci. 2019, 226, 106292. [Google Scholar] [CrossRef]

- Rais, A.A.; Suwaidi, A.A.; Ghedira, H. DubaiSat-1: Mission Overview, Development Status and Future Applications. In Proceedings of the 2009 IEEE International Geoscience and Remote Sensing Symposium, Cape Town, South Africa, 12–17 July 2009; Volume 5, pp. V-196–V–199. [Google Scholar]

- Suwaidi, A.A. DubaiSat-2 Mission Overview. In Sensors, Systems, and Next-Generation Satellites XVI; International Society for Optics and Photonics: Edinburgh, UK, 2012; Volume 8533, p. 85330W. [Google Scholar]

- Immitzer, M.; Böck, S.; Einzmann, K.; Vuolo, F.; Pinnel, N.; Wallner, A.; Atzberger, C. Fractional Cover Mapping of Spruce and Pine at 1ha Resolution Combining Very High and Medium Spatial Resolution Satellite Imagery. Remote Sens. Environ. 2018, 204, 690–703. [Google Scholar] [CrossRef] [Green Version]

- Hamedianfar, A.; Shafri, H.Z.M. Detailed Intra-Urban Mapping through Transferable OBIA Rule Sets Using WorldView-2 Very-High-Resolution Satellite Images. Int. J. Remote Sens. 2015, 36, 3380–3396. [Google Scholar] [CrossRef]

- Diaz-Varela, R.A.; Zarco-Tejada, P.J.; Angileri, V.; Loudjani, P. Automatic Identification of Agricultural Terraces through Object-Oriented Analysis of Very High Resolution DSMs and Multispectral Imagery Obtained from an Unmanned Aerial Vehicle. J. Environ. Manag. 2014, 134, 117–126. [Google Scholar] [CrossRef]

- Goetz, A.F.H.; Vane, G.; Solomon, J.E.; Rock, B.N. Imaging Spectrometry for Earth Remote Sensing. Science 1985, 228, 1147–1153. [Google Scholar] [CrossRef] [PubMed]

- Plaza, A.; Benediktsson, J.A.; Boardman, J.W.; Brazile, J.; Bruzzone, L.; Camps-Valls, G.; Chanussot, J.; Fauvel, M.; Gamba, P.; Gualtieri, A.; et al. Recent Advances in Techniques for Hyperspectral Image Processing. Remote Sens. Environ. 2009, 113, S110–S122. [Google Scholar] [CrossRef]

- Green, R.O.; Eastwood, M.L.; Sarture, C.M.; Chrien, T.G.; Aronsson, M.; Chippendale, B.J.; Faust, J.A.; Pavri, B.E.; Chovit, C.J.; Solis, M.; et al. Imaging Spectroscopy and the Airborne Visible/Infrared Imaging Spectrometer (AVIRIS). Remote Sens. Environ. 1998, 65, 227–248. [Google Scholar] [CrossRef]

- Goetz, A.F.H.; Srivastava, V. Mineralogical Mapping in the Cuprite Mining District, Nevada. In Proceedings of the Airborne Imaging Spectrometer Data Analysis Workshop, JPL Publication 85-41, Jet Propulsion Laboratory. Pasadena, CA, USA, 8–10 April 1985; pp. 22–29. [Google Scholar]

- Zarco-Tejada, P.J.; Guillén-Climent, M.L.; Hernández-Clemente, R.; Catalina, A.; González, M.R.; Martín, P. Estimating Leaf Carotenoid Content in Vineyards Using High Resolution Hyperspectral Imagery Acquired from an Unmanned Aerial Vehicle (UAV). Agric. For. Meteorol. 2013, 171–172, 281–294. [Google Scholar] [CrossRef] [Green Version]

- Hruska, R.; Mitchell, J.; Anderson, M.; Glenn, N.F. Radiometric and Geometric Analysis of Hyperspectral Imagery Acquired from an Unmanned Aerial Vehicle. Remote Sens. 2012, 4, 2736–2752. [Google Scholar] [CrossRef] [Green Version]

- Yue, J.; Yang, G.; Li, C.; Li, Z.; Wang, Y.; Feng, H.; Xu, B. Estimation of Winter Wheat Above-Ground Biomass Using Unmanned Aerial Vehicle-Based Snapshot Hyperspectral Sensor and Crop Height Improved Models. Remote Sens. 2017, 9, 708. [Google Scholar] [CrossRef] [Green Version]

- Lu, J.; Liu, H.; Yao, Y.; Tao, S.; Tang, Z.; Lu, J. Hsi Road: A Hyper Spectral Image Dataset For Road Segmentation. In Proceedings of the 2020 IEEE International Conference on Multimedia and Expo (ICME), London, UK, 6–10 July 2020; pp. 1–6. [Google Scholar]

- Wendel, A.; Underwood, J. Illumination Compensation in Ground Based Hyperspectral Imaging. ISPRS J. Photogramm. Remote Sens. 2017, 129, 162–178. [Google Scholar] [CrossRef]

- Van der Meer, F.D.; van der Werff, H.M.A.; van Ruitenbeek, F.J.A.; Hecker, C.A.; Bakker, W.H.; Noomen, M.F.; van der Meijde, M.; Carranza, E.J.M.; de Smeth, J.B.; Woldai, T. Multi- and Hyperspectral Geologic Remote Sensing: A Review. Int. J. Appl. Earth Obs. Geoinf. 2012, 14, 112–128. [Google Scholar] [CrossRef]

- Stuffler, T.; Kaufmann, C.; Hofer, S.; Förster, K.P.; Schreier, G.; Mueller, A.; Eckardt, A.; Bach, H.; Penné, B.; Benz, U.; et al. The EnMAP Hyperspectral Imager—An Advanced Optical Payload for Future Applications in Earth Observation Programmes. Acta Astronaut. 2007, 61, 115–120. [Google Scholar] [CrossRef]

- Carmon, N.; Ben-Dor, E. Mapping Asphaltic Roads’ Skid Resistance Using Imaging Spectroscopy. Remote Sens. 2018, 10, 430. [Google Scholar] [CrossRef] [Green Version]

- Schaepman, M.E.; Jehle, M.; Hueni, A.; D’Odorico, P.; Damm, A.; Weyermann, J.; Schneider, F.D.; Laurent, V.; Popp, C.; Seidel, F.C.; et al. Advanced Radiometry Measurements and Earth Science Applications with the Airborne Prism Experiment (APEX). Remote Sens. Environ. 2015, 158, 207–219. [Google Scholar] [CrossRef] [Green Version]

- Edberg, S.J.; Evans, D.L.; Graf, J.E.; Hyon, J.J.; Rosen, P.A.; Waliser, D.E. Studying Earth in the New Millennium: NASA Jet Propulsion Laboratory’s Contributions to Earth Science and Applications Space Agencies. IEEE Geosci. Remote Sens. Mag. 2016, 4, 26–39. [Google Scholar] [CrossRef]

- Green, R.O.; Team, C. New Measurements of the Earth’s Spectroscopic Diversity Acquired during the AVIRIS-NG Campaign to India. In Proceedings of the 2017 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Fort Worth, TX, USA, 23–28 July 2017; pp. 3066–3069. [Google Scholar]

- Jie-lin, Z.; Jun-hu, W.; Mi, Z.; Yan-ju, H.; Ding, W. Aerial Visible-Thermal Infrared Hyperspectral Feature Extraction Technology and Its Application to Object Identification. IOP Conf. Ser. Earth Environ. Sci. 2014, 17, 012184. [Google Scholar] [CrossRef] [Green Version]

- Jia, J.; Wang, Y.; Cheng, X.; Yuan, L.; Zhao, D.; Ye, Q.; Zhuang, X.; Shu, R.; Wang, J. Destriping Algorithms Based on Statistics and Spatial Filtering for Visible-to-Thermal Infrared Pushbroom Hyperspectral Imagery. IEEE Trans. Geosci. Remote Sens. 2019, 57, 4077–4091. [Google Scholar] [CrossRef]

- Jia, J.; Zheng, X.; Guo, S.; Wang, Y.; Chen, J. Removing Stripe Noise Based on Improved Statistics for Hyperspectral Images. IEEE Geosci. Remote Sens. Lett. 2020, 1–5. [Google Scholar] [CrossRef]

- Rouvière, L.R.; Sisakoun, I.; Skauli, T.; Coudrain, C.; Ferrec, Y.; Fabre, S.; Poutier, L.; Boucher, Y.; Løke, T.; Blaaberg, S. Sysiphe, an Airborne Hyperspectral System from Visible to Thermal Infrared. In Proceedings of the 2016 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Beijing, China, 10–15 July 2016; pp. 1947–1949. [Google Scholar]

- Marmo, J.; Folkman, M.A.; Kuwahara, C.Y.; Willoughby, C.T. Lewis Hyperspectral Imager Payload Development. Proc. SPIE 1996, 2819, 80–90. [Google Scholar]

- Pearlman, J.S.; Barry, P.S.; Segal, C.C.; Shepanski, J.; Beiso, D.; Carman, S.L. Hyperion, a Space-Based Imaging Spectrometer. IEEE Trans. Geosci. Remote Sens. 2003, 41, 1160–1173. [Google Scholar] [CrossRef]

- Barnsley, M.J.; Settle, J.J.; Cutter, M.A.; Lobb, D.R.; Teston, F. The PROBA/CHRIS Mission: A Low-Cost Smallsat for Hyperspectral Multiangle Observations of the Earth Surface and Atmosphere. IEEE Trans. Geosci. Remote Sens. 2004, 42, 1512–1520. [Google Scholar] [CrossRef]

- Murchie, S.; Arvidson, R.; Bedini, P.; Beisser, K.; Bibring, J.-P.; Bishop, J.; Boldt, J.; Cavender, P.; Choo, T.; Clancy, R.T.; et al. Compact Reconnaissance Imaging Spectrometer for Mars (CRISM) on Mars Reconnaissance Orbiter (MRO). J. Geophys. Res. Planets 2007, 112. [Google Scholar] [CrossRef]

- Liu, Y.; Sun, D.; Hu, X.; Ye, X.; Li, Y.; Liu, S.; Cao, K.; Chai, M.; Zhou, W.; Zhang, J.; et al. The Advanced Hyperspectral Imager: Aboard China’s GaoFen-5 Satellite. IEEE Geosci. Remote Sens. Mag. 2019, 7, 23–32. [Google Scholar] [CrossRef]

- Kimuli, D.; Wang, W.; Wang, W.; Jiang, H.; Zhao, X.; Chu, X. Application of SWIR Hyperspectral Imaging and Chemometrics for Identification of Aflatoxin B1 Contaminated Maize Kernels. Infrared Phys. Technol. 2018, 89, 351–362. [Google Scholar] [CrossRef]

- Ambrose, A.; Kandpal, L.M.; Kim, M.S.; Lee, W.H.; Cho, B.K. High Speed Measurement of Corn Seed Viability Using Hyperspectral Imaging. Infrared Phys. Technol. 2016, 75, 173–179. [Google Scholar] [CrossRef]

- He, H.; Sun, D. Hyperspectral Imaging Technology for Rapid Detection of Various Microbial Contaminants in Agricultural and Food Products. Trends Food Sci. Technol. 2015, 46, 99–109. [Google Scholar] [CrossRef]

- Randolph, K.; Wilson, J.; Tedesco, L.; Li, L.; Pascual, D.L.; Soyeux, E. Hyperspectral Remote Sensing of Cyanobacteria in Turbid Productive Water Using Optically Active Pigments, Chlorophyll a and Phycocyanin. Remote Sens. Environ. 2008, 112, 4009–4019. [Google Scholar] [CrossRef]

- Huang, H.; Liu, L.; Ngadi, M.O. Recent Developments in Hyperspectral Imaging for Assessment of Food Quality and Safety. Sensors 2014, 14, 7248–7276. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Brando, V.E.; Dekker, A.G. Satellite Hyperspectral Remote Sensing for Estimating Estuarine and Coastal Water Quality. IEEE Trans. Geosci. Remote Sens. 2003, 41, 1378–1387. [Google Scholar] [CrossRef]

- Shimoni, M.; Haelterman, R.; Perneel, C. Hypersectral Imaging for Military and Security Applications: Combining Myriad Processing and Sensing Techniques. IEEE Geosci. Remote Sens. Mag. 2019, 7, 101–117. [Google Scholar] [CrossRef]

- Kruse, F.A. Comparative Analysis of Airborne Visible/Infrared Imaging Spectrometer (AVIRIS), and Hyperspectral Thermal Emission Spectrometer (HyTES) Longwave Infrared (LWIR) Hyperspectral Data for Geologic Mapping. In Proceedings of the Algorithms and Technologies for Multispectral, Hyperspectral, and Ultraspectral Imagery XXI; International Society for Optics and Photonics, Baltimore, MD, USA, 21 May 2015; Volume 9472, p. 94721F. [Google Scholar]

- Duren, R.M.; Thorpe, A.K.; Foster, K.T.; Rafiq, T.; Hopkins, F.M.; Yadav, V.; Bue, B.D.; Thompson, D.R.; Conley, S.; Colombi, N.K.; et al. California’s Methane Super-Emitters. Nature 2019, 575, 180–184. [Google Scholar] [CrossRef] [Green Version]

- Gelautz, M.; Frick, H.; Raggam, J.; Burgstaller, J.; Leberl, F. SAR Image Simulation and Analysis of Alpine Terrain. ISPRS J. Photogramm. Remote Sens. 1998, 53, 17–38. [Google Scholar] [CrossRef]

- Haldar, D.; Das, A.; Mohan, S.; Pal, O.; Hooda, R.S.; Chakraborty, M. Assessment of L-Band SAR Data at Different Polarization Combinations for Crop and Other Landuse Classification. Prog. Electromagn. Res. B 2012, 36, 303–321. [Google Scholar] [CrossRef] [Green Version]

- Raney, R.K. Hybrid-Polarity SAR Architecture. IEEE Trans. Geosci. Remote Sens. 2007, 45, 3397–3404. [Google Scholar] [CrossRef] [Green Version]

- McNairn, H.; Brisco, B. The Application of C-Band Polarimetric SAR for Agriculture: A Review. Can. J. Remote Sens. 2004, 30, 525–542. [Google Scholar] [CrossRef]

- Jung, J.; Kim, D.; Lavalle, M.; Yun, S.-H. Coherent Change Detection Using InSAR Temporal Decorrelation Model: A Case Study for Volcanic Ash Detection. IEEE Trans. Geosci. Remote Sens. 2016, 54, 5765–5775. [Google Scholar] [CrossRef]

- Liu, J.G.; Black, A.; Lee, H.; Hanaizumi, H.; Moore, J.M.c.M. Land Surface Change Detection in a Desert Area in Algeria Using Multi-Temporal ERS SAR Coherence Images. Int. J. Remote Sens. 2001, 22, 2463–2477. [Google Scholar] [CrossRef]

- Monti-Guarnieri, A.V.; Brovelli, M.A.; Manzoni, M.; Mariotti d’Alessandro, M.; Molinari, M.E.; Oxoli, D. Coherent Change Detection for Multipass SAR. IEEE Trans. Geosci. Remote Sens. 2018, 56, 6811–6822. [Google Scholar] [CrossRef]

- Wahl, D.E.; Yocky, D.A.; Jakowatz, C.V.; Simonson, K.M. A New Maximum-Likelihood Change Estimator for Two-Pass SAR Coherent Change Detection. IEEE Trans. Geosci. Remote Sens. 2016, 54, 2460–2469. [Google Scholar] [CrossRef]

- Vosselman, G.; Maas, H.G. Airborne and Terrestrial Laser Scanning; CRC Press: Boca Raton, FL, USA, 2010; ISBN 978-1-904445-87-6. [Google Scholar]

- Garestier, F.; Dubois-Fernandez, P.C.; Papathanassiou, K.P. Pine Forest Height Inversion Using Single-Pass X-Band PolInSAR Data. IEEE Trans. Geosci. Remote Sens. 2007, 46, 59–68. [Google Scholar] [CrossRef]

- Rizzoli, P.; Martone, M.; Gonzalez, C.; Wecklich, C.; Borla Tridon, D.; Bräutigam, B.; Bachmann, M.; Schulze, D.; Fritz, T.; Huber, M.; et al. Generation and Performance Assessment of the Global TanDEM-X Digital Elevation Model. ISPRS J. Photogramm. Remote Sens. 2017, 132, 119–139. [Google Scholar] [CrossRef] [Green Version]

- Horn, R.; Nottensteiner, A.; Reigber, A.; Fischer, J.; Scheiber, R. F-SAR—DLR’s New Multifrequency Polarimetric Airborne SAR. In Proceedings of the 2009 IEEE International Geoscience and Remote Sensing Symposium, Cape Town, South Africa, 12–17 July 2009; Volume 2, pp. II-902–II-905. [Google Scholar]

- Dell’Acqua, F.; Gamba, P. Rapid Mapping Using Airborne and Satellite SAR Images. In Radar Remote Sensing of Urban Areas; Soergel, U., Ed.; Remote Sensing and Digital Image Processing; Springer Netherlands: Dordrecht, The Netherlands, 2010; pp. 49–68. ISBN 978-90-481-3751-0. [Google Scholar]

- Xiao, F.; Tong, L.; Luo, S. A Method for Road Network Extraction from High-Resolution SAR Imagery Using Direction Grouping and Curve Fitting. Remote Sens. 2019, 11, 2733. [Google Scholar] [CrossRef] [Green Version]

- Zhang, Q.; Kong, Q.; Zhang, C.; You, S.; Wei, H.; Sun, R.; Li, L. A New Road Extraction Method Using Sentinel-1 SAR Images Based on the Deep Fully Convolutional Neural Network. Eur. J. Remote Sens. 2019, 52, 572–582. [Google Scholar] [CrossRef] [Green Version]

- Harvey, W.A.; McKeown, D.M., Jr. Automatic Compilation of 3D Road Features Using LIDAR and Multi-Spectral Source Data. In Proceedings of the ASPRS Annual Conference, Portland, OR, USA, 28 April–2 May 2008; p. 11. [Google Scholar]

- Cheng, L.; Wu, Y.; Wang, Y.; Zhong, L.; Chen, Y.; Li, M. Three-Dimensional Reconstruction of Large Multilayer Interchange Bridge Using Airborne LiDAR Data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 691–708. [Google Scholar] [CrossRef]

- How to Plan for a Leica CityMapper-2 Project. Available online: https://blog.hexagongeosystems.com/how-to-plan-for-a-leica-citymapper-2-project/ (accessed on 21 July 2021).

- Leica SPL100 Single Photon LiDAR Sensor. Available online: https://leica-geosystems.com/products/airborne-systems/topographic-lidar-sensors/leica-spl100 (accessed on 21 July 2021).

- Communicatie, F.M. ALTM Galaxy PRIME. Available online: https://geo-matching.com/airborne-laser-scanning/altm-galaxy-prime (accessed on 21 July 2021).

- Wichmann, V.; Bremer, M.; Lindenberger, J.; Rutzinger, M.; Georges, C.; Petrini-Monteferri, F. Evaluating the Potential of Multispectral Airborne LiDAR for Topographic Mapping and Land Cover Classification. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, 2. [Google Scholar] [CrossRef] [Green Version]

- Pilarska, M.; Ostrowski, W. Evaluating the Possibility of Tree Species Calssification with Dual-Wavelength ALS Data. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019. [Google Scholar]

- RIEGL—RIEGL VUX-240. Available online: http://www.riegl.com/products/unmanned-scanning/riegl-vux-240/ (accessed on 21 July 2021).

- Magnoni, A.; Stanton, T.W.; Barth, N.; Fernandez-Diaz, J.C.; León, J.F.O.; Ruíz, F.P.; Wheeler, J.A. Detection Thresholds of Archaeological Features in Airborne LiDAR Data from Central Yucatán. Adv. Archaeol. Pract. 2016, 4, 232–248. [Google Scholar] [CrossRef]

- Saito, S.; Yamashita, T.; Aoki, Y. Multiple Object Extraction from Aerial Imagery with Convolutional Neural Networks. Electron. Imaging 2016, 2016, 1–9. [Google Scholar] [CrossRef]

- Ventura, C.; Pont-Tuset, J.; Caelles, S.; Maninis, K.-K.; Van Gool, L. Iterative Deep Learning for Road Topology Extraction. arXiv 2018, arXiv:1808.09814. [Google Scholar]

- Lian, R.; Huang, L. DeepWindow: Sliding Window Based on Deep Learning for Road Extraction from Remote Sensing Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 1905–1916. [Google Scholar] [CrossRef]

- Kass, M.; Witkin, A.; Terzopoulos, D. Snakes: Active Contour Models. Int. J. Comput. Vis. 1988, 1, 321–331. [Google Scholar] [CrossRef]

- Gruen, A.; Li, H. Semi-Automatic Linear Feature Extraction by Dynamic Programming and LSB-Snakes. Photogramm. Eng. Remote Sens. 1997, 63, 985–994. [Google Scholar]

- Jagalingam, P.; Vittal, V.H.; Vittal, A. Hegde Review of Quality Metrics for Fused Image. Aquat. Procedia 2015.

- Song, M.; Civco, D. Road Extraction Using SVM and Image Segmentation. Photogramm. Eng. Remote Sens. 2004, 70, 1365–1371. [Google Scholar] [CrossRef] [Green Version]

- Mayer, H. Object Extraction in Photogrammetric Computer Vision. ISPRS J. Photogramm. Remote Sens. 2008, 63, 213–222. [Google Scholar] [CrossRef]

- Kirthika, A.; Mookambiga, A. Automated Road Network Extraction Using Artificial Neural Network. In Proceedings of the 2011 International Conference on Recent Trends in Information Technology (ICRTIT), Chennai, India, 3–5 June 2011; pp. 1061–1065. [Google Scholar]

- Li, M.; Zang, S.; Zhang, B.; Li, S.; Wu, C. A Review of Remote Sensing Image Classification Techniques: The Role of Spatio-Contextual Information. Eur. J. Remote Sens. 2014, 47, 389–411. [Google Scholar] [CrossRef]

- Yang, X.-S.; Cui, Z.; Xiao, R.; Gandomi, A.H.; Karamanoglu, M. Swarm Intelligence and Bio-Inspired Computation: Theory and Applications; Elsevier: Waltham, MA, USA, 2013; ISBN 0-12-405177-4. [Google Scholar]

- Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Networks. arXiv 2014, arXiv:1406.2661. [Google Scholar]

- Zhang, Q.; Couloigner, I. Benefit of the Angular Texture Signature for the Separation of Parking Lots and Roads on High Resolution Multi-Spectral Imagery. Pattern Recognit. Lett. 2006, 27, 937–946. [Google Scholar] [CrossRef]

- Zhang, Q.; Couloigner, I. Automated Road Network Extraction from High Resolution Multi-Spectral Imagery. In Proceedings of the ASPRS 2006 Annual Conference, Reno, NV, USA, 1–5 May 2006. [Google Scholar]

- Manandhar, P.; Marpu, P.R.; Aung, Z. Segmentation Based Traversing-Agent Approach for Road Width Extraction from Satellite Images Using Volunteered Geographic Information. Appl. Comput. Inform. 2018, 17, 131–152. [Google Scholar] [CrossRef]

- Boggess, J.E. Identification of Roads in Satellite Imagery Using Artificial Neural Networks: A Contextual Approach; Mississippi State University: Starkville, MS, USA, 1993. [Google Scholar]

- Doucette, P.; Agouris, P.; Stefanidis, A.; Musavi, M. Self-Organised Clustering for Road Extraction in Classified Imagery. ISPRS J. Photogramm. Remote Sens. 2001, 55, 347–358. [Google Scholar] [CrossRef]

- Shackelford, A.K.; Davis, C.H. A Hierarchical Fuzzy Classification Approach for High-Resolution Multispectral Data over Urban Areas. IEEE Trans. Geosci. Remote Sens. 2003, 41, 1920–1932. [Google Scholar] [CrossRef] [Green Version]

- Doucette, P.; Agouris, P.; Stefanidis, A. Automated Road Extraction from High Resolution Multispectral Imagery. Photogramm. Eng. Remote Sens. 2004, 70, 1405–1416. [Google Scholar] [CrossRef]

- Jin, X.; Davis, C.H. An Integrated System for Automatic Road Mapping from High-Resolution Multi-Spectral Satellite Imagery by Information Fusion. Inf. Fusion 2005, 6, 257–273. [Google Scholar] [CrossRef]

- Shi, W.; Miao, Z.; Debayle, J. An Integrated Method for Urban Main-Road Centerline Extraction From Optical Remotely Sensed Imagery. IEEE Trans. Geosci. Remote Sens. 2014, 52, 3359–3372. [Google Scholar] [CrossRef]

- Liu, W.; Zhang, Z.; Chen, X.; Li, S.; Zhou, Y. Dictionary Learning-Based Hough Transform for Road Detection in Multispectral Image. IEEE Geosci. Remote Sens. Lett. 2017, 14, 2330–2334. [Google Scholar] [CrossRef]

- Sun, T.-L. A Detection Algorithm for Road Feature Extraction Using EO-1 Hyperspectral Images. In Proceedings of the IEEE 37th Annual 2003 International Carnahan Conference onSecurity Technology, Taipei, Taiwan, 14–16 October 2003; pp. 87–95. [Google Scholar]

- Gardner, M.E.; Roberts, D.A.; Funk, C. Road Extraction from AVIRIS Using Spectral Mixture and Q-Tree Filter Techniques. In Proceedings of the AVIRIS Airborne Geoscience Workshop, Santa Barbara, CA, USA, 1 December 2001; Volume 27, p. 6. [Google Scholar]

- Noronha, V.; Herold, M.; Roberts, D.; Gardner, M. Spectrometry and Hyperspectral Remote Sensing for Road Centerline Extraction and Evaluation of Pavement Condition. In Proceedings of the Pecora Conference, San Diego, CA, USA, 11–13 March 2002. [Google Scholar]

- Huang, X.; Zhang, L. An Adaptive Mean-Shift Analysis Approach for Object Extraction and Classification From Urban Hyperspectral Imagery. IEEE Trans. Geosci. Remote Sens. 2008, 46, 4173–4185. [Google Scholar] [CrossRef]

- Resende, M.; Jorge, S.; Longhitano, G.; Quintanilha, J.A. Use of Hyperspectral and High Spatial Resolution Image Data in an Asphalted Urban Road Extraction. In Proceedings of the IGARSS 2008—2008 IEEE International Geoscience and Remote Sensing Symposium, Boston, MA, USA, 8–11 July 2008; IEEE: New York, NY, USA, 2008; pp. III-1323–III-1325. [Google Scholar]

- Mohammadi, M. Road Classification and Condition Determination Using Hyperspectral Imagery. ISPRS—Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2012, XXXIX-B7, 141–146. [Google Scholar] [CrossRef] [Green Version]

- Liao, W.; Bellens, R.; Pizurica, A.; Philips, W.; Pi, Y. Classification of Hyperspectral Data Over Urban Areas Using Directional Morphological Profiles and Semi-Supervised Feature Extraction. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2012, 5, 1177–1190. [Google Scholar] [CrossRef]

- Miao, Z.; Shi, W.; Zhang, H.; Wang, X. Road Centerline Extraction From High-Resolution Imagery Based on Shape Features and Multivariate Adaptive Regression Splines. IEEE Geosci. Remote Sens. Lett. 2013, 10, 583–587. [Google Scholar] [CrossRef]

- Abdellatif, M.; Peel, H.; Cohn, A.G.; Fuentes, R. Hyperspectral Imaging for Autonomous Inspection of Road Pavement Defects. In Proceedings of the 36th International Symposium on Automation and Robotics in Construction (ISARC), Banff, AB, Canada, 24 May 2019. [Google Scholar]

- Tupin, F.; Maitre, H.; Mangin, J.-F.; Nicolas, J.-M.; Pechersky, E. Detection of Linear Features in SAR Images: Application to Road Network Extraction. IEEE Trans. Geosci. Remote Sens. 1998, 36, 434–453. [Google Scholar] [CrossRef] [Green Version]

- Tupin, F.; Houshmand, B.; Datcu, M. Road Detection in Dense Urban Areas Using SAR Imagery and the Usefulness of Multiple Views. IEEE Trans. Geosci. Remote Sens. 2002, 40, 2405–2414. [Google Scholar] [CrossRef] [Green Version]

- Wang, Y.; Zheng, Q. Recognition of Roads and Bridges in SAR Images. Pattern Recognit. 1998, 31, 953–962. [Google Scholar] [CrossRef]

- Dell’Acqua, F.; Gamba, P.; Lisini, G. Road Map Extraction by Multiple Detectors in Fine Spatial Resolution SAR Data. Can. J. Remote Sens. 2003, 29, 481–490. [Google Scholar] [CrossRef]

- Lisini, G.; Tison, C.; Tupin, F.; Gamba, P. Feature Fusion to Improve Road Network Extraction in High-Resolution SAR Images. IEEE Geosci. Remote Sens. Lett. 2006, 3, 217–221. [Google Scholar] [CrossRef]

- Hedman, K.; Stilla, U.; Lisini, G.; Gamba, P. Road Network Extraction in VHR SAR Images of Urban and Suburban Areas by Means of Class-Aided Feature-Level Fusion. IEEE Trans. Geosci. Remote Sens. 2010, 48, 1294–1296. [Google Scholar] [CrossRef]

- He, C.; Liao, Z.; Yang, F.; Deng, X.; Liao, M. Road Extraction From SAR Imagery Based on Multiscale Geometric Analysis of Detector Responses. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2012, 5, 1373–1382. [Google Scholar] [CrossRef]

- Lu, P.; Du, K.; Yu, W.; Wang, R.; Deng, Y.; Balz, T. A New Region Growing-Based Method for Road Network Extraction and Its Application on Different Resolution SAR Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 4772–4783. [Google Scholar] [CrossRef]

- Saati, M.; Amini, J. Road Network Extraction from High-Resolution SAR Imagery Based on the Network Snake Model. Photogramm. Eng. Remote Sens. 2017, 83, 207–215. [Google Scholar] [CrossRef]

- Xu, R.; He, C.; Liu, X.; Chen, D.; Qin, Q. Bayesian Fusion of Multi-Scale Detectors for Road Extraction from SAR Images. ISPRS Int. J. Geo-Inf. 2017, 6, 26. [Google Scholar] [CrossRef] [Green Version]

- Xiong, X.; Jin, G.; Xu, Q.; Zhang, H.; Xu, J. Robust Line Detection of Synthetic Aperture Radar Images Based on Vector Radon Transformation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 5310–5320. [Google Scholar] [CrossRef]

- Jiang, M.; Miao, Z.; Gamba, P.; Yong, B. Application of Multitemporal InSAR Covariance and Information Fusion to Robust Road Extraction. IEEE Trans. Geosci. Remote Sens. 2017, 55, 3611–3622. [Google Scholar] [CrossRef]

- Jin, R.; Zhou, W.; Yin, J.; Yang, J. CFAR Line Detector for Polarimetric SAR Images Using Wilks’ Test Statistic. IEEE Geosci. Remote Sens. Lett. 2016, 13, 711–715. [Google Scholar] [CrossRef]

- Scharf, D.P. Analytic Yaw–Pitch Steering for Side-Looking SAR With Numerical Roll Algorithm for Incidence Angle. IEEE Trans. Geosci. Remote Sens. 2012, 50, 3587–3594. [Google Scholar] [CrossRef]

- Clode, S.; Kootsookos, P.J.; Rottensteiner, F. The Automatic Extraction of Roads from LIDAR Data; ISPRS: Istanbul, Turkey, 2004. [Google Scholar]

- Clode, S.; Rottensteiner, F.; Kootsookos, P.; Zelniker, E. Detection and Vectorization of Roads from Lidar Data. Photogramm. Eng. Remote Sens. 2007, 73, 517–535. [Google Scholar] [CrossRef] [Green Version]

- Hu, X.; Li, Y.; Shan, J.; Zhang, J.; Zhang, Y. Road Centerline Extraction in Complex Urban Scenes From LiDAR Data Based on Multiple Features. IEEE Trans. Geosci. Remote Sens. 2014, 52, 7448–7456. [Google Scholar] [CrossRef]

- Li, Y.; Yong, B.; Wu, H.; An, R.; Xu, H. Road Detection from Airborne LiDAR Point Clouds Adaptive for Variability of Intensity Data. Optik 2015, 126, 4292–4298. [Google Scholar] [CrossRef]

- Hui, Z.; Hu, Y.; Jin, S.; Yevenyo, Y.Z. Road Centerline Extraction from Airborne LiDAR Point Cloud Based on Hierarchical Fusion and Optimization. ISPRS J. Photogramm. Remote Sens. 2016, 118, 22–36. [Google Scholar] [CrossRef]

- Zhao, J.; You, S.; Huang, J. Rapid Extraction and Updating of Road Network from Airborne LiDAR Data. In Proceedings of the 2011 IEEE Applied Imagery Pattern Recognition Workshop (AIPR), Washington, DC, USA, 11–13 October 2011; pp. 1–7. [Google Scholar]

- Chen, Z.; Liu, C.; Wu, H. A Higher-Order Tensor Voting-Based Approach for Road Junction Detection and Delineation from Airborne LiDAR Data. ISPRS J. Photogramm. Remote Sens. 2019, 150, 91–114. [Google Scholar] [CrossRef]

- Sithole, G.; Vosselman, G. Bridge Detection in Airborne Laser Scanner Data. ISPRS J. Photogramm. Remote Sens. 2006, 61, 33–46. [Google Scholar] [CrossRef]

- Boyko, A.; Funkhouser, T. Extracting Roads from Dense Point Clouds in Large Scale Urban Environment. ISPRS J. Photogramm. Remote Sens. 2011, 66, S2–S12. [Google Scholar] [CrossRef] [Green Version]

- Lin, Y.; Hyyppä, J.; Jaakkola, A. Mini-UAV-Borne LIDAR for Fine-Scale Mapping. IEEE Geosci. Remote Sens. Lett. 2011, 8, 426–430. [Google Scholar] [CrossRef]

- Soilán, M.; Truong-Hong, L.; Riveiro, B.; Laefer, D. Automatic Extraction of Road Features in Urban Environments Using Dense ALS Data. Int. J. Appl. Earth Obs. Geoinformation 2018, 64, 226–236. [Google Scholar] [CrossRef]

- Zhou, W. An Object-Based Approach for Urban Land Cover Classification: Integrating LiDAR Height and Intensity Data. IEEE Geosci. Remote Sens. Lett. 2013, 10, 928–931. [Google Scholar] [CrossRef]

- Matkan, A.A.; Hajeb, M.; Sadeghian, S. Road Extraction from Lidar Data Using Support Vector Machine Classification. Photogramm. Eng. Remote Sens. 2014, 80, 409–422. [Google Scholar] [CrossRef] [Green Version]

- Morsy, S.; Shaker, A.; El-Rabbany, A. Multispectral LiDAR Data for Land Cover Classification of Urban Areas. Sensors 2017, 17, 958. [Google Scholar] [CrossRef] [Green Version]

- Karila, K.; Matikainen, L.; Puttonen, E.; Hyyppä, J. Feasibility of Multispectral Airborne Laser Scanning Data for Road Mapping. IEEE Geosci. Remote Sens. Lett. 2017, 14, 294–298. [Google Scholar] [CrossRef]

- Ekhtari, N.; Glennie, C.; Fernandez-Diaz, J.C. Classification of Airborne Multispectral Lidar Point Clouds for Land Cover Mapping. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 2068–2078. [Google Scholar] [CrossRef]

- Pan, S.; Guan, H.; Yu, Y.; Li, J.; Peng, D. A Comparative Land-Cover Classification Feature Study of Learning Algorithms: DBM, PCA, and RF Using Multispectral LiDAR Data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 1314–1326. [Google Scholar] [CrossRef]

- Pan, S.; Guan, H.; Chen, Y.; Yu, Y.; Nunes Gonçalves, W.; Marcato Junior, J.; Li, J. Land-Cover Classification of Multispectral LiDAR Data Using CNN with Optimized Hyper-Parameters. ISPRS J. Photogramm. Remote Sens. 2020, 166, 241–254. [Google Scholar] [CrossRef]

- Yu, Y.; Guan, H.; Li, D.; Gu, T.; Wang, L.; Ma, L.; Li, J. A Hybrid Capsule Network for Land Cover Classification Using Multispectral LiDAR Data. IEEE Geosci. Remote Sens. Lett. 2020, 17, 1263–1267. [Google Scholar] [CrossRef]

- Matikainen, L.; Karila, K.; Litkey, P.; Ahokas, E.; Hyyppä, J. Combining Single Photon and Multispectral Airborne Laser Scanning for Land Cover Classification. ISPRS J. Photogramm. Remote Sens. 2020, 164, 200–216. [Google Scholar] [CrossRef]

- Tiwari, P.S.; Pande, H.; Pandey, A.K. Automatic Urban Road Extraction Using Airborne Laser Scanning/Altimetry and High Resolution Satellite Data. J. Indian Soc. Remote Sens. 2009, 37, 223. [Google Scholar] [CrossRef]

- Hu, X.; Tao, C.V.; Hu, Y. Automatic Road Extraction from Dense Urban Area by Integrated Processing of High Resolution Imagery and Lidar Data. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2004, 35, 288–292. [Google Scholar]

- Zhang, Z.; Zhang, X.; Sun, Y.; Zhang, P. Road Centerline Extraction from Very-High-Resolution Aerial Image and LiDAR Data Based on Road Connectivity. Remote Sens. 2018, 10, 1284. [Google Scholar] [CrossRef] [Green Version]

- Feng, Q.; Zhu, D.; Yang, J.; Li, B. Multisource Hyperspectral and LiDAR Data Fusion for Urban Land-Use Mapping Based on a Modified Two-Branch Convolutional Neural Network. ISPRS Int. J. Geo-Inf. 2019, 8, 28. [Google Scholar] [CrossRef] [Green Version]

- Elaksher, A.F. Fusion of Hyperspectral Images and Lidar-Based Dems for Coastal Mapping. Opt. Lasers Eng. 2008, 46, 493–498. [Google Scholar] [CrossRef] [Green Version]

- Hsu, S.M.; Burke, H. Multisensor fusion with hyperspectral imaging data: Detection and classification. In Handbook of Pattern Recognition and Computer Vision; WORLD SCIENTIFIC: Singapore, 2005; pp. 347–364. ISBN 978-981-256-105-3. [Google Scholar]

- Cao, G.; Jin, Y.Q. A Hybrid Algorithm of the BP-ANN/GA for Classification of Urban Terrain Surfaces with Fused Data of Landsat ETM+ and ERS-2 SAR. Int. J. Remote Sens. 2007, 28, 293–305. [Google Scholar] [CrossRef]

- Lin, X.; Liu, Z.; Zhang, J.; Shen, J. Combining Multiple Algorithms for Road Network Tracking from Multiple Source Remotely Sensed Imagery: A Practical System and Performance Evaluation. Sensors 2009, 9, 1237–1258. [Google Scholar] [CrossRef] [Green Version]

- Perciano, T.; Tupin, F.; Jr, R.H.; Jr, R.M.C. A Two-Level Markov Random Field for Road Network Extraction and Its Application with Optical, SAR, and Multitemporal Data. Int. J. Remote Sens. 2016, 37, 3584–3610. [Google Scholar] [CrossRef]

- Bartsch, A.; Pointner, G.; Ingeman-Nielsen, T.; Lu, W. Towards Circumpolar Mapping of Arctic Settlements and Infrastructure Based on Sentinel-1 and Sentinel-2. Remote Sens. 2020, 12, 2368. [Google Scholar] [CrossRef]

- Liu, S.; Qi, Z.; Li, X.; Yeh, A.G.-O. Integration of Convolutional Neural Networks and Object-Based Post-Classification Refinement for Land Use and Land Cover Mapping with Optical and SAR Data. Remote Sens. 2019, 11, 690. [Google Scholar] [CrossRef] [Green Version]

- Lin, Y.; Zhang, H.; Li, G.; Wang, T.; Wan, L.; Lin, H. Improving Impervious Surface Extraction With Shadow-Based Sparse Representation From Optical, SAR, and LiDAR Data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 2417–2428. [Google Scholar] [CrossRef]

- Kim, Y.; Kim, Y. Improved Classification Accuracy Based on the Output-Level Fusion of High-Resolution Satellite Images and Airborne LiDAR Data in Urban Area. IEEE Geosci. Remote Sens. Lett. 2014, 11, 636–640. [Google Scholar] [CrossRef]

- Liu, L.; Lim, S. A Framework of Road Extraction from Airborne Lidar Data and Aerial Imagery. J. Spat. Sci. 2016, 61, 263–281. [Google Scholar] [CrossRef]

- Chen, Z.; Fan, W.; Zhong, B.; Li, J.; Du, J.; Wang, C. Corse-to-Fine Road Extraction Based on Local Dirichlet Mixture Models and Multiscale-High-Order Deep Learning. IEEE Trans. Intell. Transp. Syst. 2019, 21, 4283–4293. [Google Scholar] [CrossRef]

- Bruzzone, L.; Carlin, L. A Multilevel Context-Based System for Classification of Very High Spatial Resolution Images. IEEE Trans. Geosci. Remote Sens. 2006, 44, 2587–2600. [Google Scholar] [CrossRef] [Green Version]

- Wang, J.; Qin, Q.; Yang, X.; Wang, J.; Ye, X.; Qin, X. Automated Road Extraction from Multi-Resolution Images Using Spectral Information and Texture. In Proceedings of the 2014 IEEE Geoscience and Remote Sensing Symposium, Quebec City, QC, Canada, 13–18 July 2014; pp. 533–536. [Google Scholar]

- Zhao, W.; Du, S. Spectral–Spatial Feature Extraction for Hyperspectral Image Classification: A Dimension Reduction and Deep Learning Approach. IEEE Trans. Geosci. Remote Sens. 2016, 54, 4544–4554. [Google Scholar] [CrossRef]

- Hamraz, H.; Jacobs, N.B.; Contreras, M.A.; Clark, C.H. Deep Learning for Conifer/Deciduous Classification of Airborne LiDAR 3D Point Clouds Representing Individual Trees. ISPRS J. Photogramm. Remote Sens. 2019, 158, 219–230. [Google Scholar]

- Jia, J.; Chen, J.; Zheng, X.; Wang, Y.; Guo, S.; Sun, H.; Jiang, C.; Karjalainen, M.; Karila, K.; Duan, Z.; et al. Tradeoffs in the Spatial and Spectral Resolution of Airborne Hyperspectral Imaging Systems: A Crop Identification Case Study. IEEE Trans. Geosci. Remote Sens. 2021, 1–18. [Google Scholar] [CrossRef]

| Satellite | Launch (Year) | Swath (km) | PAN (m) | R (m) | G (m) | B (m) | NIR (m) |

|---|---|---|---|---|---|---|---|

| Gaofen 1 (CN) | 2013 [87] | 70 | 2 | 8 | 8 | 8 | 8 |

| Gaofen 2 (CN) | 2014 [88] | 0.8 | 3.2 | 3.2 | 3.2 | 3.2 | |

| Gaofen 6 (CN) | 2015 [89] | 2 | 8 | 8 | 8 | 8 | |

| SuperView (CN) | 2016 [90] | 12 | 0.5 | 2 | 2 | 2 | 2 |

| GeoEye 1 (US) | 2008 [91] | 15.2 | 0.41 | 1.65 | 1.65 | 1.65 | 1.65 |

| IKONOS (US) | 1999 [85] | 11.3 | 1 | 4 | 4 | 4 | 4 |

| PlanetScope (US) | 2018 [92] | 24.6 | / | 3 | 3 | 3 | 3 |

| QuickBirds (US) | 2001 [93] | 16.5 | 0.6 | 2.4 | 2.4 | 2.4 | 2.4 |

| WorldView 1 (US) | 2007 [94] | 17 | 0.5 | / | / | / | / |

| WorldView 2 (US) | 2009 [91] | 17 | 0.5 | 2 | 2 | 2 | 2 |

| WorldView 3 (US) | 2014 [95] | 13.1 | 0.31 | 1.24 | 1.24 | 1.24 | 1.24 |

| WorldView 4 (US) | 2016 [96] | 13.1 | 0.31 | 1.24 | 1.24 | 1.24 | 1.24 |

| OrbView 3 (US) | 2003 [97] | 8 | 1 | 4 | 4 | 4 | 4 |

| RapidEye (DE) | 2008 [98] | 77 | / | 6.5 | 6.5 | 6.5 | 6.5 |

| KOMPSAT 2 (KR) | 2006 [99] | 15 | 1 | 4 | 4 | 4 | 4 |

| KOMPSAT 3 (KR) | 2012 [100] | 16 | 0.7 | 2.8 | 2.8 | 2.8 | 2.8 |

| KOMPSAT 3A (KR) | 2015 [101] | 12 | 0.55 | 2.2 | 2.2 | 2.2 | 2.2 |

| Pléiades 1A (FR) | 2011 [102] | 20 | 0.7 | 2.8 | 2.8 | 2.8 | 2.8 |

| Pléiades 1B (FR) | 2012 [103] | 20 | 0.7 | 2.8 | 2.8 | 2.8 | 2.8 |

| SPOT 6 (FR) | 2012 [104] | 60 | 1.5 | 6 | 6 | 6 | 6 |

| SPOT 7 (FR) | 2014 [105] | 60 | 1.5 | 6 | 6 | 6 | 6 |

| DubaiSat 1 (AE) | 2009 [106] | 12 | 2.5 | 5 | 5 | 5 | 5 |

| DubaiSat 2 (AE) | 2013 [107] | 12 | 1 | 4 | 4 | 4 | 4 |

| Name | References | Platform | Wavelength Range (μm) | Channel | Spectral Resolution (nm) | IFOV (mrad) | FOV/Swath |

|---|---|---|---|---|---|---|---|

| AISA-FENIX 1K | [122], 2018 | Airborne | 0.38–0.97, 0.97–2.5 | 348, 246 | ≤4.5, ≤12 | 0.68 | 40° |

| APEX | [123], 2015 | Airborne | 0.372–1.015 0.94–2.54 | 114, 198 | 0.45–0.75, 5–10 | 0.489 | 28.1° |

| AVIRIS-NG | [124,125], 2016, 2017 | Airborne | 0.38–2.52 | 430 | 5 | 1 | 34° |

| CASI-1500 SASI-1000A TASI-600A | [126], 2014 | Airborne | 0.38–1.05, 0.95–2.45, 8–11.5 | 288, 100,32 | 2.3, 15, 110 | 0.49, 1.22, 1.19 | 40° |

| AMMIS | [127,128], 2019, 2020 | Airborne | 0.4–0.95, 0.95–2.5, 8–12.5 | 256, 512, 128 | 2.34, 3, 32 | 0.25, 0.5, 1 | 40° |

| SYSIPHE | [129], 2016 | Airborne | 0.4–1, 0.95–2.5, 3–5.4, 8.1–11.8 | 560 (total) | 5, 6.1, 11 cm−1, 5 cm−1 | 0.25 | 15° |

| HSI | [130], 1996 | LEWIS Satellite | 0.4–1, 1–2.5 | 128, 256 | 5, 5.8 | 0.057 | 7.68 km |

| Hyperion | [131], 2003 | EO-1 Satellite | 0.4–1, 0.9–2.5 | 242 (total) | 10 | 0.043 | 7.7 km |

| CHRIS | [132], 2004 | PROBA-1 Satellite | 0.4–1.05 | 18/62 | 1.25–11 | 0.03 | 18.6 km |

| CRISM | [133], 2007 | MRO Satellite | 0.362–1.053, 1.002–3.92 | 544 (total) | 6.55 | 0.061 | >7.5 km |

| AHSI | [134], 2019 | Gaofen-5 Satellite | 0.39–2.51 | 330 (total) | 5, 10 | 0.043 | 60 km |

| Special Characteristics | WaveLength | Horizontal and Elevation Accuracy | Altitude | Pulse Repetition Frequency | Point Density | |

|---|---|---|---|---|---|---|

| Leica Hyperion2+ [161], 2021 | Multiple pulses in the air measured | 1064 nm | <13 cm, <5 cm | 300–5500 m | −2000 kHz | 2 pts/m2/4000 m, 40 pts/m2/600 m |

| Leica SPL [162], 2021 | Single photon | 532 nm | <15 cm, <10 cm | 2000–4500 m | 20–60 kHz | 6 million points per second, 20 pts/m2 (4000 m AGL) |

| Optech Galaxy Prime [163], 2020 | Wide-area mapping | 1064 nm | 1/10,000 × altitude, <0.03–0.25 m | 150–6000 m | 10–1000 kHz | 1 million point per s, 60 pts/m2 (500 m AGL), 2 pts/m2 (3000 m) |

| Optech Titan [164], 2015 | 3 wavelength | 1550 nm, 1064 nm, 532 nm | 1/7500 × altitude, <5–10 cm | 300–2000 m | 3 × 50–300 kHz | 45 pts/m2 (400 AGL) |

| Riegl VQ-1560i-DW [165], 2019 | Dual-wavelength, multiple pulses in the air measured. | 532 nm, 1064 nm | / | 900–2500 m | 2 × 700–1000 kHz | 2 × 666,000 pts/s, 20 pts/m2 (1000 m AGL) |

| Riegl Vux-240 [166], 2021 | UAV | 1550 nm | <0.05 m <0.1 m | 250–1400 m | 150–1800 kHz | 60 pts/m2 (300 m) |

| Method | Advantages | Disadvantages | References | Precision |

|---|---|---|---|---|

| Patch-based DCNN | Weight sharing, less parameter | Inefficiency, large-scale training samples | [168], 2016 [38], 2017 | 0.905 0.917 |

| FCN-based | Arbitrary image size, end to end training | Low fitness, low position accuracy, lack of spatial consistency | [36], 2016 | 0.710 |

| DeconvNet-based | Arbitrary image size, end to end training, better fitness | High cost of computing and storage | [49], 2017 [51], 2018 | 0.858 0.919 |

| GAN-based | More consistent | Non-convergence, gradient vanishing, and model collapse | [65], 2017 [66], 2017 | 0.841 0.883 |

| Graph-based | High connectivity | Complex graph reconstruction and optimisation | [169], 2018 [170], 2020 | 0.835 0.823 |

| Method | Platform | Characteristic | References |

|---|---|---|---|

| Traditional process includes the spectral information | Spaceborne | Extract the main roads | [190], 2003 |

| Spectral mixture and Q-tree filter | Airborne | Assess road quality | [191], 2001 |

| Pixel to pixel classification | Airborne | Extract asphalted urban roads | [194], 2008 |

| Spectral angle mapper | Airborne | Road classification and condition determination | [196], 2012 |

| Computing the angle from spectral response | UAV | Detect pavement roads | [198], 2019 |

| Method | Category | Characteristic | References | Precision |

|---|---|---|---|---|

| Multiple Detectors | Heuristic | Fusion of different pre-processing algorithms, road extractors | [202], 2003 | 0.580 correctness |

| Line based on vector Radon transform | Heuristic | Suitable for different platform SAR images | [209], 2019 | 0.700–0.940 correctness |

| Multitemporal InSAR covariance and information fusion | Heuristic | Use interferometric information | [210], 2017 | 0.816 correctness |

| FCN-based | Data-driven | Automatic road extraction | [158], 2019 | 0.921 |

| FCN-8s | Data-driven | Lack efficiency | [46], 2018 | 0.717 |

| Method | Category | Characteristic | References | Correctness |

|---|---|---|---|---|

| Hierarchical fusion and optimisation | ALS | Extract road centreline | [217], 2015 | 0.914 |

| Point-based classification Raster-based classification | MS-ALS | Land cover classification | [226], 2017 | 0.920 0.860 |

| Object-based image analysis and random forest | MS-ALS | road detection and road surface classification | [227], 2017 | 0.805 |

| Support vector machine | MS-ALS | Three types of asphalt and a concrete class | [228], 2018 | 0.947 (Overall accuracy) |

| Hybrid capsule network | MS-ALS | Land cover classification | [231], 2020 | 0.979 (Overall accuracy) |

| Data | Resolution/ Mapping Unit | Extent | Advantages | Roads Extracted Mostly by |

|---|---|---|---|---|

| High spatial resolution [71], 2020 | 0.5–10 m | Local/regional/global | Most tools available, “basic” software | Colour, texture |

| Hyperspectral [198], 2019 | 0.25–30 m/ (>100 channels) | Local/regional | Spectral information | Colour, texture and spectral features |

| SAR [72], 2014 | 1–10 m | Local/regional/global | See through clouds, rapid mapping | Linear features/edge |

| ALS [75], 2017 | 0.25–2 m | Local (nationwide) | Height information | 3D geometry (intensity) |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Jia, J.; Sun, H.; Jiang, C.; Karila, K.; Karjalainen, M.; Ahokas, E.; Khoramshahi, E.; Hu, P.; Chen, C.; Xue, T.; et al. Review on Active and Passive Remote Sensing Techniques for Road Extraction. Remote Sens. 2021, 13, 4235. https://doi.org/10.3390/rs13214235

Jia J, Sun H, Jiang C, Karila K, Karjalainen M, Ahokas E, Khoramshahi E, Hu P, Chen C, Xue T, et al. Review on Active and Passive Remote Sensing Techniques for Road Extraction. Remote Sensing. 2021; 13(21):4235. https://doi.org/10.3390/rs13214235

Chicago/Turabian StyleJia, Jianxin, Haibin Sun, Changhui Jiang, Kirsi Karila, Mika Karjalainen, Eero Ahokas, Ehsan Khoramshahi, Peilun Hu, Chen Chen, Tianru Xue, and et al. 2021. "Review on Active and Passive Remote Sensing Techniques for Road Extraction" Remote Sensing 13, no. 21: 4235. https://doi.org/10.3390/rs13214235

APA StyleJia, J., Sun, H., Jiang, C., Karila, K., Karjalainen, M., Ahokas, E., Khoramshahi, E., Hu, P., Chen, C., Xue, T., Wang, T., Chen, Y., & Hyyppä, J. (2021). Review on Active and Passive Remote Sensing Techniques for Road Extraction. Remote Sensing, 13(21), 4235. https://doi.org/10.3390/rs13214235