Robust Damage Estimation of Typhoon Goni on Coconut Crops with Sentinel-2 Imagery

Abstract

1. Introduction

2. Related Work

2.1. Disaster Response

2.2. Vegetation Mapping

2.3. Change Detection

3. Area of Interest

Data

4. Methods

4.1. Active Learning for Density Estimation

4.2. Robust Density Change Detection

4.3. Baselines

5. Results

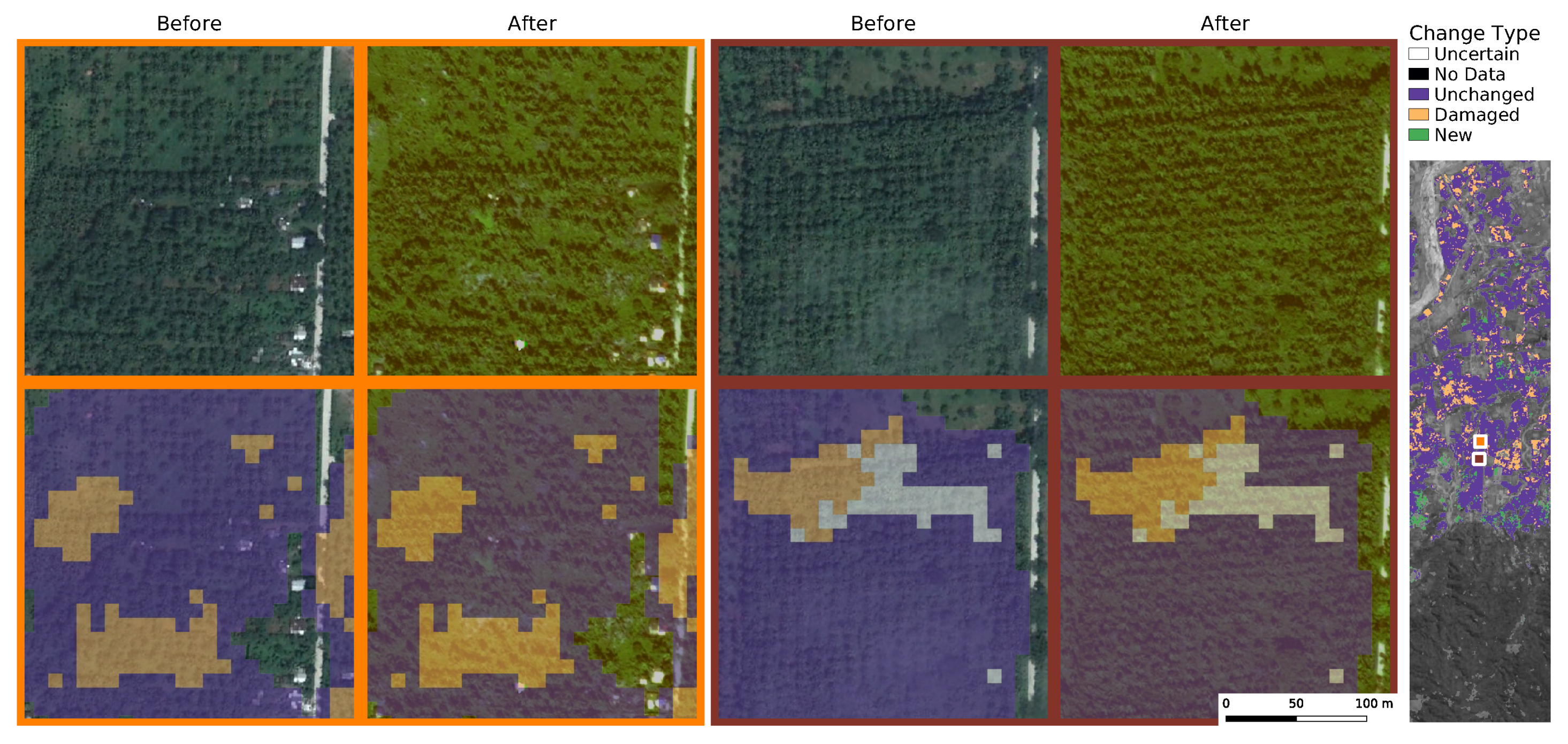

5.1. Estimated Coconut Damages

5.2. Coconut Damage Evaluation

5.3. Baseline Comparisons

6. Discussion

6.1. Sentinel-2 for Damage Detection

6.2. SAR Sensors for Damage Estimation

6.3. Limitations of Our Study

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| | Recall (%) | MAE |
|---|---|---|
| 0.10 | 46.68 | 1.08 |
| 0.20 | 64.92 | 2.16 |
| 0.40 | 95.50 | 5.33 |
| 0.45 | 98.20 | 5.91 |
| 0.50 | 99.39 | 6.26 |
| 2.00 | 100.00 | 6.50 |

| State | Before [M] | After [M] | Relative Damage [%] | Total [%] | Recall [%] |
|---|---|---|---|---|---|
| Quezon | 12.2 | 9.3 | 23.7 | 20.9 | 76.8 |
| Northern Samar | 6.2 | 4.2 | 32.9 | 10.7 | 84.0 |
| Camarines Sur | 4.6 | 1.1 | 77.1 | 7.9 | 70.5 |
| Sorsogon | 4.5 | 4.8 | −5.5 | 7.8 | 73.1 |
| Eastern Samar | 4.4 | 2.9 | 33.0 | 7.5 | 84.0 |
| Masbate | 3.9 | 4.6 | −18.4 | 6.6 | 96.0 |
| Samar | 3.3 | 2.3 | 30.3 | 5.7 | 92.3 |
| Leyte | 3.3 | 2.0 | 40.2 | 5.7 | 87.0 |
| Albay | 2.4 | 1.1 | 52.8 | 4.1 | 89.3 |
| Camarines Norte | 2.4 | 1.3 | 44.1 | 4.1 | 52.3 |
| Aurora | 1.8 | 1.4 | 21.7 | 3.1 | 82.7 |
| Oriental Mindoro | 1.3 | 1.3 | 2.1 | 2.2 | 62.4 |
| Cebu | 1.1 | 1.1 | −7.7 | 1.8 | 97.5 |
| Biliran | 1.0 | 1.1 | −6.0 | 1.7 | 90.5 |
| Romblon | 1.0 | 1.1 | −12.3 | 1.6 | 89.2 |
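
The relative damage column in the table above looks consistent with the percentage drop from the "Before" to the "After" count. The following minimal sketch illustrates that reading; the formula and the function name `relative_damage` are assumptions inferred from the numbers, not a documented definition. Since the tabulated counts are rounded to 0.1 million, recomputed values can differ from the reported ones in the last digits.

```python
def relative_damage(before_m: float, after_m: float) -> float:
    """Percentage drop from the pre-event to the post-event count.

    Assumed formula: 100 * (before - after) / before.
    A negative result means the estimated count went up.
    """
    if before_m <= 0:
        raise ValueError("before_m must be positive")
    return 100.0 * (before_m - after_m) / before_m


# A few rows copied from the table above: (before [M], after [M], reported damage [%]).
rows = {
    "Quezon": (12.2, 9.3, 23.7),
    "Eastern Samar": (4.4, 2.9, 33.0),
    "Masbate": (3.9, 4.6, -18.4),
}

for name, (before_m, after_m, reported) in rows.items():
    recomputed = relative_damage(before_m, after_m)
    print(f"{name}: recomputed {recomputed:.1f} %, reported {reported:.1f} %")
```

Under this reading, the negative entries (for example Masbate) correspond to rows where the "After" estimate exceeds the "Before" estimate.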

| | Ours C | Ours N | Ours U | GAN C | GAN N | k-Means C | k-Means N | DSFA C | DSFA N | DCVA C | DCVA N | CVA C | CVA N | KPCA C | KPCA N |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Coconut, C (11) | 9 | 1 | 1 | 0 | 11 | 3 | 8 | 0 | 11 | 0 | 11 | 3 | 8 | 3 | 8 |
| Coconut, N (30) | 3 | 22 | 5 | 7 | 23 | 18 | 12 | 0 | 30 | 7 | 23 | 8 | 22 | 0 | 30 |
| Background (46) | 0 | 43 | 3 | 0 | 46 | 29 | 17 | 0 | 46 | 10 | 36 | 14 | 32 | 4 | 42 |

| | Ours | GAN | k-Means | DSFA | DCVA | CVA | KPCA |
|---|---|---|---|---|---|---|---|
| Accuracy | 88.6 | 56.1 | 36.6 | 73.2 | 56.1 | 61.0 | 80.5 |
| Recall | 90.0 | 0.0 | 27.3 | 0.0 | 0.0 | 27.3 | 27.3 |
| Precision | 75.0 | 0.0 | 14.3 | 0.0 | 0.0 | 27.3 | 100.0 |
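
The scores in the table above can be recomputed from the coconut rows of the confusion counts. The sketch below assumes that "C" is the positive class, that background samples are left out, and that samples our method assigns to "U" are excluded from all three scores. These are inferences from the numbers, not a description of the evaluation protocol; the names `COUNTS` and `metrics` are hypothetical.

```python
# Coconut reference samples only, copied from the confusion counts above.
# Outer key: method; middle key: reference class; inner key: predicted class.
# Treating "U" as "no decision" and dropping it is an assumption.
COUNTS = {
    "Ours":    {"C": {"C": 9, "N": 1, "U": 1}, "N": {"C": 3, "N": 22, "U": 5}},
    "GAN":     {"C": {"C": 0, "N": 11}, "N": {"C": 7, "N": 23}},
    "k-Means": {"C": {"C": 3, "N": 8}, "N": {"C": 18, "N": 12}},
    "DSFA":    {"C": {"C": 0, "N": 11}, "N": {"C": 0, "N": 30}},
    "DCVA":    {"C": {"C": 0, "N": 11}, "N": {"C": 7, "N": 23}},
    "CVA":     {"C": {"C": 3, "N": 8}, "N": {"C": 8, "N": 22}},
    "KPCA":    {"C": {"C": 3, "N": 8}, "N": {"C": 0, "N": 30}},
}


def metrics(rows):
    """Return (accuracy, recall, precision) in percent, rounded to one decimal."""
    tp = rows["C"]["C"]  # reference C, predicted C
    fn = rows["C"]["N"]  # reference C, predicted N
    fp = rows["N"]["C"]  # reference N, predicted C
    tn = rows["N"]["N"]  # reference N, predicted N
    decided = tp + fn + fp + tn  # "U" predictions are not counted
    accuracy = 100.0 * (tp + tn) / decided
    recall = 100.0 * tp / (tp + fn) if (tp + fn) else 0.0
    # Precision is reported as 0 when a method predicts no "C" at all.
    precision = 100.0 * tp / (tp + fp) if (tp + fp) else 0.0
    return round(accuracy, 1), round(recall, 1), round(precision, 1)


for method, rows in COUNTS.items():
    acc, rec, prec = metrics(rows)
    print(f"{method:8s} accuracy={acc:5.1f} recall={rec:5.1f} precision={prec:5.1f}")
```

Under these assumptions the recomputed values agree with the table for all seven methods, which suggests that the scores of our method are taken over the 35 coconut samples that did not receive a "U" prediction.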

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Rodríguez, A.C.; Daudt, R.C.; D’Aronco, S.; Schindler, K.; Wegner, J.D. Robust Damage Estimation of Typhoon Goni on Coconut Crops with Sentinel-2 Imagery. Remote Sens. 2021, 13, 4302. https://doi.org/10.3390/rs13214302