Boost Precision Agriculture with Unmanned Aerial Vehicle Remote Sensing and Edge Intelligence: A Survey

Abstract

1. Introduction

2. UAV Remote Sensing in Precision Agriculture

2.1. UAV Systems and Sensors for Precision Agriculture

2.2. Application of UAV Remote Sensing in Precision Agriculture

- Weed detection and mapping: Since weeds account for a large proportion of agricultural yield losses, herbicides are important for crop growth, but their unreasonable use causes a series of environmental problems. To achieve precision weed management [19,74], UAV RS can help accurately locate weed areas and analyze weed types, weed density, etc., so that herbicides can be applied quantitatively at fixed points or improved, targeted mechanical soil tillage can be used [27]. Weed detection and mapping aims to find and map the locations of weeds in the acquired UAV RS images, and is generally based on differences between weeds and crops in spatial distribution [27,75], shape [76], spectral signatures [53,77,78,79], or their combinations [80]. Accordingly, the most important UAV payload sensors are RGB sensors [27,76,77,80], multispectral sensors [53,78] and hyperspectral sensors [79].

- Crop pest and disease detection: Field crops are subject to attack by various pests and diseases at stages from sowing to harvest, which affects crop yield and quality and has become one of the main constraints on agricultural production in China. As a key part of pest and disease management, early detection of pests and diseases from UAV RS images allows efficient application of pesticides and an early response that reduces production costs and environmental impact. Crop pest and disease detection aims to locate possibly infected areas on leaves in the observed UAV RS images, and detection is mainly based on their spectral differences [81]. To capture more details of pests and diseases on leaves, UAVs usually fly at low altitude to obtain observations with high spatial or spectral resolution [82,83,84]. Commonly mounted sensors are RGB sensors [83,85,86,87], multispectral sensors [88], infrared sensors [89] and hyperspectral sensors [82,84].

- Crop growth monitoring: RS can be used to monitor group and individual characteristics of crop growth, e.g., seedling condition, growth status and changes. Crop growth monitoring is fundamental for regulating crop growth, diagnosing crop nutrient deficiencies, and analyzing and predicting crop yield, and can provide a basis for decision-making in agricultural policy formulation and food trade. Crop growth monitoring builds a multitemporal crop model that allows comparison of different phenological stages [90], and UAVs provide a good platform for obtaining crop information [91]. Crop growth is generally quantified by indices such as leaf area index (LAI), leaf dry weight and leaf nitrogen accumulation, whose estimation usually requires multiple spectral bands. As this is a relatively comprehensive task, the sensors onboard UAVs are usually multispectral/hyperspectral [91,92], a combination of RGB and infrared sensors [93], or LiDAR [63].

- Crop yield estimation: Accurate yield estimates are essential for predicting the volume of stock needed and organizing harvesting operations. RS information can be used, alone or in combination with other information, as input variables or parameters that directly or indirectly reflect the factors influencing crop growth and yield formation. Crop yield estimation aims to estimate yield non-destructively by observing the morphological characteristics of crops [16]. Similar to crop growth monitoring, crop yield estimation relies on multiple spectral bands for richer information. Therefore, UAVs are usually equipped with multimodal sensors, for example, hyperspectral/multispectral [15,16,94,95,96,97] and thermal infrared [95] sensors, combined with RGB [15,16,94,95,96,97] or SAR [66].

- Crop type classification: Crop type maps are essential inputs for agricultural tasks such as crop yield estimation, and accurate crop type identification is important for subsidy control, biodiversity monitoring, soil protection, sustainable fertilizer application, etc. Previous studies have explored discriminating different crop types from RS images in complex and heterogeneous environments. Crop type classification aims to produce a map that discriminates different crop types based on the information captured by RS data, and is similar to land cover/land use classification [98]. Depending on the demands of different tasks, it can be implemented at different spatial scales: for larger-scale classification, SAR sensors are used [64,99,100], while for smaller scales, RGB images from UAVs can be used [101], alone or fused with SAR data [102].
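Several of the tasks above (weed mapping, growth monitoring, yield estimation) rely on the spectral contrast between vegetation and soil. As a minimal sketch under stated assumptions, the code below computes two widely used vegetation indices: NDVI = (NIR - Red) / (NIR + Red) from a multispectral band pair, and the Excess Green index ExG = 2g - r - b on sum-normalized RGB values, which is then thresholded into a vegetation mask. The function names, the (H, W, 3) RGB array layout and the 0.1 threshold are hypothetical illustration choices, not the methods of the cited studies; separating weeds from crops would additionally need cues such as row position or shape.

```python
import numpy as np


def ndvi(nir: np.ndarray, red: np.ndarray, eps: float = 1e-9) -> np.ndarray:
    """Normalized Difference Vegetation Index, values in [-1, 1]."""
    nir = nir.astype(np.float64)
    red = red.astype(np.float64)
    return (nir - red) / (nir + red + eps)  # eps avoids division by zero


def excess_green(rgb: np.ndarray, eps: float = 1e-9) -> np.ndarray:
    """ExG = 2g - r - b on chromatic (sum-normalized) coordinates.

    Assumes `rgb` has shape (H, W, 3) in R, G, B channel order.
    """
    rgb = rgb.astype(np.float64)
    total = rgb.sum(axis=-1, keepdims=True) + eps
    r, g, b = np.moveaxis(rgb / total, -1, 0)  # split channels
    return 2.0 * g - r - b


def vegetation_mask(rgb: np.ndarray, threshold: float = 0.1) -> np.ndarray:
    """Boolean mask of likely vegetation pixels (hypothetical threshold)."""
    return excess_green(rgb) > threshold


if __name__ == "__main__":
    # Toy 1x2 image: a plant-like green pixel and a soil-like brown pixel.
    rgb = np.array([[[40, 160, 40], [120, 90, 60]]], dtype=np.uint8)
    print(vegetation_mask(rgb))  # [[ True False]]
    print(np.round(ndvi(np.array([0.5, 0.3]), np.array([0.1, 0.25])), 3))
```

In practice the threshold is usually chosen per scene (for example with Otsu's method) rather than fixed, because illumination and soil color vary between flights.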

3. Deep Learning in Precision Agriculture with UAV Remote Sensing

3.1. Deep Learning Methods in Precision Agriculture

- Classification aims to predict the presence or absence of at least one object of a particular class in the image, and DL algorithms are required to provide a real-valued confidence of the object's presence. Classification methods are mainly used to recognize crop diseases [86,113,114], weed types [27,115,116], or crop types [117,118].

- Detection aims to predict the bounding box of each object of a particular class in the image with an associated confidence, i.e., to answer the question “where are the instances in the image, if any?” The extracted object information is therefore more precise than that of classification. Typical applications are finding crops with pests [119] or other diseases [120], locating weeds in images [121,122], and counting crops for yield estimation [123,124,125] or disaster evaluation [126].

- Segmentation predicts the object label (for semantic segmentation) or instance label (for instance segmentation) of each pixel in the test image, and can be viewed as a more precise, per-pixel classification. It can not only locate objects, but also delineate their pixels at a finer-grained level. Therefore, segmentation methods are usually used to accurately locate features of interest in images. Semantic segmentation can help locate crop leaf diseases [127,128], generate weed maps [76,78,129], or assess crop growth [130,131] and yields [132], while instance segmentation can detect individual crop and weed plants [133,134] or support crop seed phenotyping [135] at a finer level.
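The difference in output granularity among the three task types can be illustrated by starting from a semantic segmentation map and deriving coarser outputs from it. The sketch below assumes hypothetical class IDs (0 = soil, 1 = crop, 2 = weed) and reduces a per-pixel label map to a detection-style output (one bounding box per 4-connected region of a class) and a classification-style output (which classes are present). It only illustrates the information hierarchy; actual DL detectors and classifiers predict these outputs directly, together with learned confidence scores.

```python
from collections import deque

import numpy as np


def instance_boxes(label_map: np.ndarray, class_id: int) -> list[tuple[int, int, int, int]]:
    """Boxes (row_min, col_min, row_max, col_max) of each 4-connected region of class_id."""
    target = label_map == class_id
    seen = np.zeros_like(target, dtype=bool)
    h, w = target.shape
    boxes = []
    for r0 in range(h):
        for c0 in range(w):
            if not target[r0, c0] or seen[r0, c0]:
                continue
            # Breadth-first flood fill over one connected region.
            queue = deque([(r0, c0)])
            seen[r0, c0] = True
            rmin, cmin, rmax, cmax = r0, c0, r0, c0
            while queue:
                r, c = queue.popleft()
                rmin, rmax = min(rmin, r), max(rmax, r)
                cmin, cmax = min(cmin, c), max(cmax, c)
                for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    nr, nc = r + dr, c + dc
                    if 0 <= nr < h and 0 <= nc < w and target[nr, nc] and not seen[nr, nc]:
                        seen[nr, nc] = True
                        queue.append((nr, nc))
            boxes.append((rmin, cmin, rmax, cmax))
    return boxes


def present_classes(label_map: np.ndarray, background: int = 0) -> list[int]:
    """Image-level (classification-style) output: classes that appear at all."""
    return sorted(int(c) for c in np.unique(label_map) if c != background)


if __name__ == "__main__":
    seg = np.array([
        [0, 1, 1, 0, 0],
        [0, 1, 1, 0, 2],
        [0, 0, 0, 0, 2],
        [2, 0, 0, 0, 0],
    ])
    print(present_classes(seg))    # [1, 2]
    print(instance_boxes(seg, 2))  # [(1, 4, 2, 4), (3, 0, 3, 0)]
```

Going the other way is not possible: a presence label or a box does not recover which pixels belong to the object, which is why segmentation is the most informative (and usually most annotation-intensive) of the three.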

3.2. Dataset for Intelligent Precision Agriculture

4. Edge Intelligence for UAV RS in Precision Agriculture

4.1. Cloud and Edge Computing for UAVs

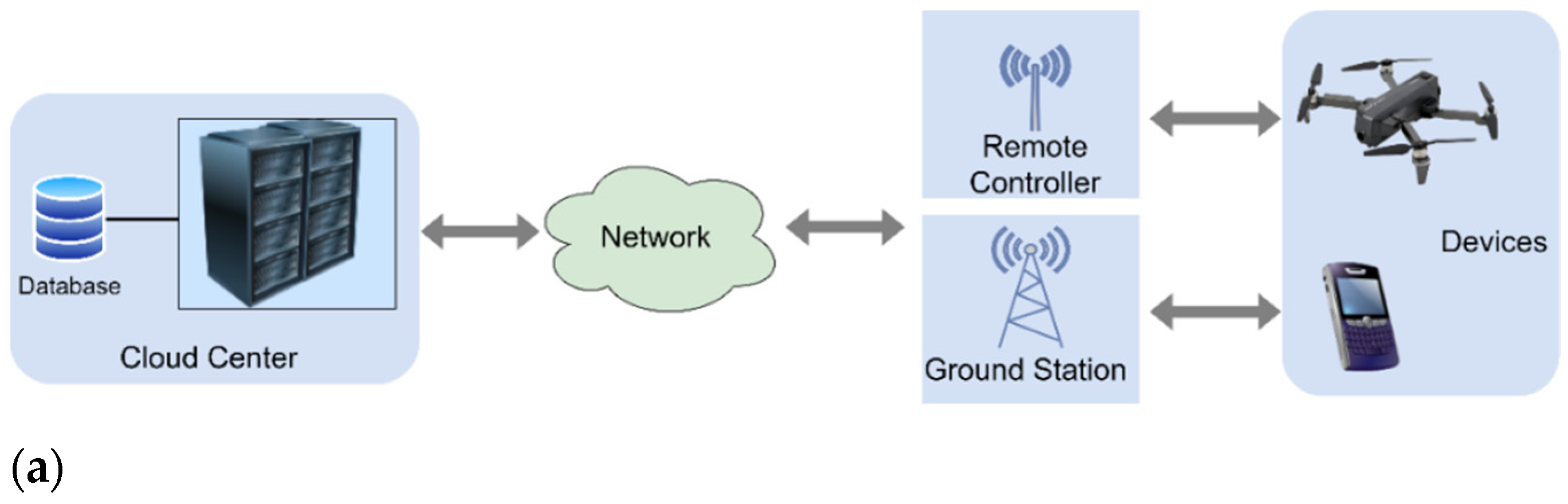

4.1.1. Cloud Computing Paradigm for UAVs

- Cloud data centers host a huge number of servers, providing users with powerful computing and storage resources for processing massive UAV RS data.

- Through virtualization, cloud computing allows users to obtain services at any location from various terminals such as laptops or phones.

- Cloud computing is a distributed computing architecture in which issues such as single-point failures are inevitable. Fault-tolerant mechanisms such as replication (copy) strategies ensure high reliability for various processing and analysis services.

- Benefiting from the scalability of cloud computing, cloud centers can dynamically allocate or release resources according to the needs of specific users, meeting the dynamically growing scale requirements of applications and users.
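The reliability benefit of replication can be quantified with a simple back-of-the-envelope model. Assuming each of k replicas is independently available with probability p, a service stays available as long as at least one replica is up, so its availability is A = 1 - (1 - p)^k; for example, with p = 0.99, three replicas give A = 0.999999. The independence assumption is optimistic (correlated failures such as a shared power outage reduce A), and the function below is a hypothetical illustration rather than any provider's actual guarantee.

```python
def availability_with_replicas(p_single: float, k: int) -> float:
    """Probability that at least one of k replicas is available.

    Assumes replica failures are statistically independent, which is an
    idealization; correlated failures make the real value lower.
    """
    if not 0.0 <= p_single <= 1.0:
        raise ValueError("p_single must be a probability")
    if k < 1:
        raise ValueError("k must be at least 1")
    # Service fails only if all k replicas fail simultaneously.
    return 1.0 - (1.0 - p_single) ** k


if __name__ == "__main__":
    for k in (1, 2, 3):
        print(k, availability_with_replicas(0.99, k))
```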

4.1.2. Edge Computing Paradigm for UAVs

- With the rapid development of the IoT, devices around the world generate massive amounts of data, of which only a small portion is critical; most is temporary and does not require long-term storage. Processing this large amount of temporary data at the edge of the network reduces the pressure on network bandwidth and data centers.

- Although cloud computing can provide services that make up for mobile devices' lack of computing, storage and power resources, network transmission speed is limited by the development of communication technology, and complex network environments suffer from issues such as unstable links and routing. These factors can cause high latency, excessive jitter and slow data transmission, thus reducing the responsiveness of cloud services. Edge computing provides services near users, which can enhance the responsiveness of services.

- Edge computing provides infrastructure for storing and using critical data close to its source, which reduces the need to transmit it over the network and thus improves data security.
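The latency argument above can be made concrete with a simple additive model: offloading to the cloud pays for uploading raw images, whereas onboard (edge) processing pays for slower local computation but sends only a compact result. The sketch below assumes a fixed effective uplink bandwidth and round-trip time and ignores queuing, jitter and retransmissions; the class, the function names and the example numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Link:
    """Hypothetical UAV-to-ground/cloud link parameters."""
    bandwidth_mbps: float  # effective uplink throughput in megabits per second
    rtt_s: float           # round-trip time in seconds


def transfer_time_s(payload_mb: float, link: Link) -> float:
    """Serialization time: megabytes -> megabits, divided by bandwidth."""
    return payload_mb * 8.0 / link.bandwidth_mbps


def cloud_latency_s(image_mb: float, link: Link, cloud_compute_s: float) -> float:
    """Upload the raw image, process it in the cloud, receive a small answer."""
    return transfer_time_s(image_mb, link) + link.rtt_s + cloud_compute_s


def edge_latency_s(edge_compute_s: float, result_mb: float, link: Link) -> float:
    """Process onboard, then send only a compact result (e.g., weed coordinates).

    A full RTT is charged as a conservative simplification.
    """
    return edge_compute_s + transfer_time_s(result_mb, link) + link.rtt_s


if __name__ == "__main__":
    link = Link(bandwidth_mbps=10.0, rtt_s=0.1)
    print("cloud:", cloud_latency_s(image_mb=20.0, link=link, cloud_compute_s=0.05))
    print("edge: ", edge_latency_s(edge_compute_s=0.5, result_mb=0.002, link=link))
```

With these hypothetical values (a 20 MB image over a 10 Mbit/s link), the upload alone takes 16 s, so even a much slower onboard processor gives a far shorter end-to-end delay; with a fast, stable link the balance can shift back toward the cloud.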

4.2. Edge Intelligence: Convergence of AI and Edge Computing

4.3. Lightweight Network Model Design

4.3.1. Lightweight Convolution Design

4.3.2. Parameter Pruning

4.3.3. Low-rank Factorization

4.3.4. Parameter Quantization

4.3.5. Knowledge Distillation

4.4. Edge Resources for UAV RS

- General-purpose CPU-based solutions: Multi-core CPUs are latency-oriented architectures with more computational power per core but fewer cores, and are more suitable for task-level parallelism [215,216]. Among general-purpose software-programmable platforms, the Raspberry Pi has been widely adopted as a ready-to-use solution for a variety of UAV applications due to its light weight, small size and low power consumption.

- GPU solutions: GPUs are designed as throughput-oriented architectures; their cores are less powerful than those of CPUs, but they have hundreds or thousands of cores and significantly larger memory bandwidth, which makes them suitable for data-level parallelism [215]. In recent years, embedded GPUs, especially NVIDIA's Jetson boards, have stood out among the products of other manufacturers and have been widely used as flexible alternatives to FPGAs.

- FPGA solutions: The advent of FPGA-based embedded platforms allows combining the high-level management capabilities of processors with the flexible operation of programmable hardware [217]. With the advantages of a) relatively smaller size and weight compared with clusters, multi-core and many-core processors, b) significantly lower power consumption compared with GPUs, and c) the ability to be reprogrammed during flight, unlike application-specific integrated circuits (ASICs), FPGA-based platforms such as the Xilinx Zynq-7000 family provide many solutions for real-time processing onboard UAVs [218].
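Whether an onboard platform is fast enough for real-time processing can be estimated from flight parameters. Assuming a nadir-pointing frame camera over flat terrain at constant ground speed, the along-track ground footprint of one image is 2h tan(FOV/2), consecutive images are spaced by the footprint multiplied by (1 - forward overlap), and the capture interval is that spacing divided by the ground speed. A CPU, GPU or FPGA pipeline keeps up only if its per-frame latency does not exceed this interval. The function names and example values below are hypothetical.

```python
import math


def capture_interval_s(altitude_m: float, fov_along_track_deg: float,
                       forward_overlap: float, speed_mps: float) -> float:
    """Seconds between consecutive captures for a nadir camera in level flight."""
    if not 0.0 <= forward_overlap < 1.0:
        raise ValueError("forward_overlap must be in [0, 1)")
    # Along-track ground footprint of one image (flat-terrain assumption).
    footprint_m = 2.0 * altitude_m * math.tan(math.radians(fov_along_track_deg) / 2.0)
    # Distance flown between captures so that images overlap by forward_overlap.
    spacing_m = footprint_m * (1.0 - forward_overlap)
    return spacing_m / speed_mps


def keeps_up(per_frame_latency_s: float, interval_s: float) -> bool:
    """True if each frame is processed before the next one is captured."""
    return per_frame_latency_s <= interval_s


if __name__ == "__main__":
    interval = capture_interval_s(altitude_m=50.0, fov_along_track_deg=60.0,
                                  forward_overlap=0.8, speed_mps=5.0)
    print(f"capture interval: {interval:.2f} s")
    print("0.4 s/frame keeps up:", keeps_up(0.4, interval))
```

For instance, at 50 m altitude with a 60° along-track field of view, 80% forward overlap and 5 m/s ground speed, the interval is about 2.3 s, which bounds the per-frame processing time available onboard; lower altitudes or higher speeds shrink this budget proportionally.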

5. Future Directions

- Lightweight intelligent model design in PA for edge inference. As mentioned in Section 4, most DL-based models for UAV RS data processing and analytics in PA are highly resource-intensive, and hardware with powerful computing capability is needed to support the training and inference of these large AI models. So far, only a few studies have applied common parameter pruning and quantization methods in PA with UAV RS. Model size and efficiency can be further improved by considering the characteristics of the data and algorithms and by exploiting other sophisticated model compression techniques, such as knowledge distillation and combinations of multiple compression methods [183]. In addition, instead of using existing AI models, neural architecture search (NAS) [243] can be used to derive models tailored to the hardware resource constraints of the underlying edge devices, optimizing performance metrics such as latency and energy efficiency [34].

- Transfer learning and incremental learning for intelligent PA models on UAV edge devices. The performance of many DL models relies heavily on the quantity and quality of datasets. However, collecting a large amount of labeled data is difficult or expensive. Therefore, edge devices can exploit transfer learning to obtain a competitive model, in which a model pre-trained on a large-scale dataset is fine-tuned on domain-specific data [244,245]. In addition, edge devices such as UAVs may collect data during flight whose distribution differs from that of the original training data, or that even belongs to unknown classes. The model on the edge devices can then be updated by incremental learning to improve prediction performance [246].

- Collaboration of RS cloud centers, ground control stations and UAV edge devices. To bridge the gap between the low computing and storage capabilities of edge devices and the high resource requirements of DL training, collaborative computing among end devices, the edge and the cloud is a possible solution, and it has become a trend in edge intelligence architectures and application scenarios. A good cloud-edge-end collaboration architecture should take into account heterogeneous devices, asynchronous communication and diverse computing and storage resources, thus achieving collaborative model training and inference [247]. In the conventional mode, model training is performed in the cloud and the trained model is deployed on edge devices. This mode is simple but cannot fully utilize the available resources. For edge intelligence for UAV RS in PA, decentralized edge devices and data centers can cooperate to train or improve a model by using federated learning [248].
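Two of the compression techniques named in the first direction above, parameter pruning and quantization, can be sketched on a single weight tensor. The example below uses unstructured magnitude pruning (zeroing the smallest-magnitude weights) followed by symmetric per-tensor int8 post-training quantization; these are only two simple variants among many, the function names and the 50% sparsity are hypothetical, and in practice a pruned model is usually fine-tuned and exported through a framework's own toolchain. Storing int8 instead of float32 reduces weight memory by a factor of 4, whereas the zeros created by pruning save memory only when stored in a sparse format or exploited by the hardware.

```python
import numpy as np


def magnitude_prune(weights: np.ndarray, sparsity: float) -> np.ndarray:
    """Zero out (at least) the `sparsity` fraction of weights with smallest |w|.

    Ties at the threshold magnitude may prune slightly more than requested.
    """
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    k = int(sparsity * weights.size)
    if k == 0:
        return weights.copy()
    magnitudes = np.abs(weights)
    # The k-th smallest magnitude acts as the pruning threshold.
    threshold = np.partition(magnitudes.ravel(), k - 1)[k - 1]
    return weights * (magnitudes > threshold)


def quantize_int8(weights: np.ndarray) -> tuple[np.ndarray, float]:
    """Symmetric per-tensor quantization: w ~= q * scale with q in [-127, 127]."""
    max_abs = float(np.max(np.abs(weights)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(weights / scale), -127, 127).astype(np.int8)
    return q, scale


def dequantize(q: np.ndarray, scale: float) -> np.ndarray:
    """Map int8 codes back to approximate float32 weights."""
    return q.astype(np.float32) * np.float32(scale)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    w = rng.normal(size=(64, 64)).astype(np.float32)  # stand-in for one layer
    w_pruned = magnitude_prune(w, sparsity=0.5)
    q, scale = quantize_int8(w_pruned)
    max_err = float(np.max(np.abs(dequantize(q, scale) - w_pruned)))
    print("fraction of zero weights:", float(np.mean(q == 0)))
    print("bytes float32 -> int8:", w.nbytes, "->", q.nbytes)
    print("max abs quantization error:", max_err, "(scale/2 =", scale / 2, ")")
```

The rounding step bounds the per-weight quantization error by half the scale, which is why a single large outlier weight (which inflates the scale) degrades accuracy; per-channel scales are a common refinement.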

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- ISPA. Precision Ag Definition. Available online: https://www.ispag.org/about/definition (accessed on 17 October 2021).

- Messina, G.; Modica, G. Applications of UAV Thermal Imagery in Precision Agriculture: State of the Art and Future Research Outlook. Remote Sens. 2020, 12, 1491.

- Schimmelpfennig, D. Farm profits and adoption of precision agriculture; U.S. Department of Agriculture, Economic Research Service: Washington, DC, USA, 2016.

- Maes, W.H.; Steppe, K. Perspectives for Remote Sensing with Unmanned Aerial Vehicles in Precision Agriculture. Trends Plant Sci. 2019, 24, 152–164.

- Lillesand, T.; Kiefer, R.W.; Chipman, J. Remote Sensing and Image Interpretation; John Wiley & Sons: Hoboken, NJ, USA, 2015.

- Mulla, D.J. Twenty five years of remote sensing in precision agriculture: Key advances and remaining knowledge gaps. Biosyst. Eng. 2013, 114, 358–371.

- Eskandari, R.; Mahdianpari, M.; Mohammadimanesh, F.; Salehi, B.; Brisco, B.; Homayouni, S. Meta-Analysis of Unmanned Aerial Vehicle (UAV) Imagery for Agro-Environmental Monitoring Using Machine Learning and Statistical Models. Remote Sens. 2020, 12, 3511.

- Tsouros, D.C.; Bibi, S.; Sarigiannidis, P.G. A Review on UAV-Based Applications for Precision Agriculture. Information 2019, 10, 349.

- Zhang, H.; Wang, L.; Tian, T.; Yin, J. A Review of Unmanned Aerial Vehicle Low-Altitude Remote Sensing (UAV-LARS) Use in Agricultural Monitoring in China. Remote Sens. 2021, 13, 1221.

- Jang, G.; Kim, J.; Yu, J.-K.; Kim, H.-J.; Kim, Y.; Kim, D.-W.; Kim, K.-H.; Lee, C.W.; Chung, Y.S. Review: Cost-Effective Unmanned Aerial Vehicle (UAV) Platform for Field Plant Breeding Application. Remote Sens. 2020, 12, 998.

- US Department of Defense. Unmanned Aerial Vehicle. Available online: https://www.thefreedictionary.com/Unmanned+Aerial+Vehicle (accessed on 19 October 2021).

- Deng, L.; Mao, Z.; Li, X.; Hu, Z.; Duan, F.; Yan, Y. UAV-based multispectral remote sensing for precision agriculture: A comparison between different cameras. ISPRS J. Photogramm. Remote Sens. 2018, 146, 124–136.

- Christiansen, M.P.; Laursen, M.S.; Jørgensen, R.N.; Skovsen, S.; Gislum, R. Designing and Testing a UAV Mapping System for Agricultural Field Surveying. Sensors 2017, 17, 2703.

- Popescu, D.; Stoican, F.; Stamatescu, G.; Ichim, L.; Dragana, C. Advanced UAV–WSN System for Intelligent Monitoring in Precision Agriculture. Sensors 2020, 20, 817.

- Zhou, X.; Zheng, H.; Xu, X.; He, J.; Ge, X.; Yao, X.; Cheng, T.; Zhu, Y.; Cao, W.; Tian, Y. Predicting grain yield in rice using multi-temporal vegetation indices from UAV-based multispectral and digital imagery. ISPRS J. Photogramm. Remote Sens. 2017, 130, 246–255.

- Yang, Q.; Shi, L.; Han, J.; Zha, Y.; Zhu, P. Deep convolutional neural networks for rice grain yield estimation at the ripening stage using UAV-based remotely sensed images. Field Crops Res. 2019, 235, 142–153.

- Su, J.; Liu, C.; Coombes, M.; Hu, X.; Wang, C.; Xu, X.; Li, Q.; Guo, L.; Chen, W.-H. Wheat yellow rust monitoring by learning from multispectral UAV aerial imagery. Comput. Electron. Agric. 2018, 155, 157–166.

- Guo, A.; Huang, W.; Dong, Y.; Ye, H.; Ma, H.; Liu, B.; Wu, W.; Ren, Y.; Ruan, C.; Geng, Y. Wheat Yellow Rust Detection Using UAV-Based Hyperspectral Technology. Remote Sens. 2021, 13, 123.

- Bajwa, A.; Mahajan, G.; Chauhan, B. Nonconventional Weed Management Strategies for Modern Agriculture. Weed Sci. 2015, 63, 723–747.

- Huang, Y.; Reddy, K.N.; Fletcher, R.S.; Pennington, D. UAV Low-Altitude Remote Sensing for Precision Weed Management. Weed Technol. 2018, 32, 2–6.

- Van Klompenburg, T.; Kassahun, A.; Catal, C. Crop yield prediction using machine learning: A systematic literature review. Comput. Electron. Agric. 2020, 177, 105709.

- Su, Y.-X.; Xu, H.; Yan, L.-J. Support vector machine-based open crop model (SBOCM): Case of rice production in China. Saudi J. Biol. Sci. 2017, 24, 537–547.

- Everingham, Y.; Sexton, J.; Skocaj, D.; Inman-Bamber, G. Accurate prediction of sugarcane yield using a random forest algorithm. Agron. Sustain. Dev. 2016, 36, 27.

- Chandra, A.L.; Desai, S.V.; Guo, W.; Balasubramanian, V.N. Computer vision with deep learning for plant phenotyping in agriculture: A survey. arXiv Prepr. 2020, arXiv:2006.11391.

- Zhou, L.; Zhang, C.; Liu, F.; Qiu, Z.; He, Y. Application of Deep Learning in Food: A Review. Compr. Rev. Food Sci. Food Saf. 2019, 18, 1793–1811.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Bah, M.D.; Hafiane, A.; Canals, R. Deep Learning with Unsupervised Data Labeling for Weed Detection in Line Crops in UAV Images. Remote Sens. 2018, 10, 1690.

- Kitano, B.T.; Mendes, C.C.T.; Geus, A.R.; Oliveira, H.C.; Souza, J.R. Corn plant counting using deep learning and UAV images. IEEE Geosci. Remote Sens. Lett. 2019, 1–5.

- Nowakowski, A.; Mrziglod, J.; Spiller, D.; Bonifacio, R.; Ferrari, I.; Mathieu, P.P.; Garcia-Herranz, M.; Kim, D.-H. Crop type mapping by using transfer learning. Int. J. Appl. Earth Obs. Geoinf. 2021, 98, 102313.

- Ma, L.; Liu, Y.; Zhang, X.; Ye, Y.; Yin, G.; Johnson, B.A. Deep learning in remote sensing applications: A meta-analysis and review. ISPRS J. Photogramm. Remote Sens. 2019, 152, 166–177.

- Chen, J.; Ran, X. Deep Learning with Edge Computing: A Review. Proc. IEEE 2019, 107, 1655–1674.

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. In Proceedings of the International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015.

- Liu, J.; Liu, R.; Ren, K.; Li, X.; Xiang, J.; Qiu, S. High-Performance Object Detection for Optical Remote Sensing Images with Lightweight Convolutional Neural Networks. In Proceedings of the 2020 IEEE 22nd International Conference on High Performance Computing and Communications; IEEE 18th International Conference on Smart City; IEEE 6th International Conference on Data Science and Systems (HPCC/SmartCity/DSS), Yanuca Island, Cuvu, Fiji, 14–16 December 2020; pp. 585–592.

- Zhou, Z.; Chen, X.; Li, E.; Zeng, L.; Luo, K.; Zhang, J. Edge Intelligence: Paving the Last Mile of Artificial Intelligence with Edge Computing. Proc. IEEE 2019, 107, 1738–1762.

- Pu, Q.; Ananthanarayanan, G.; Bodik, P.; Kandula, S.; Akella, A.; Bahl, P.; Stoica, I. Low latency geo-distributed data analytics. ACM SIGCOMM Comp. Com. Rev. 2015, 45, 421–434.

- Sittón-Candanedo, I.; Alonso, R.S.; Rodríguez-González, S.; Coria, J.A.G.; De La Prieta, F. Edge Computing Architectures in Industry 4.0: A General Survey and Comparison. International Workshop on Soft Computing Models in Industrial and Environmental Applications; Springer: Berlin/Heidelberg, Germany, 2019; pp. 121–131.

- Plastiras, G.; Terzi, M.; Kyrkou, C.; Theocharides, T. Edge intelligence: Challenges and opportunities of near-sensor machine learning applications. In Proceedings of the 2018 IEEE 29th International Conference on Application-Specific Systems, Architectures and Processors (ASAP), Milano, Italy, 10–12 July 2018; pp. 1–7.

- Deng, S.; Zhao, H.; Fang, W.; Yin, J.; Dustdar, S.; Zomaya, A.Y. Edge Intelligence: The Confluence of Edge Computing and Artificial Intelligence. IEEE Internet Things J. 2020, 7, 7457–7469.

- Boursianis, A.D.; Papadopoulou, M.S.; Diamantoulakis, P.; Liopa-Tsakalidi, A.; Barouchas, P.; Salahas, G.; Karagiannidis, G.; Wan, S.; Goudos, S.K. Internet of Things (IoT) and Agricultural Unmanned Aerial Vehicles (UAVs) in smart farming: A comprehensive review. Internet Things 2020, 100187, in press.

- Kim, J.; Kim, S.; Ju, C.; Son, H.I. Unmanned Aerial Vehicles in Agriculture: A Review of Perspective of Platform, Control, and Applications. IEEE Access 2019, 7, 105100–105115.

- Mogili, U.R.; Deepak, B.B.V.L. Review on Application of Drone Systems in Precision Agriculture. Procedia Comput. Sci. 2018, 133, 502–509.

- Kamilaris, A.; Prenafeta-Boldú, F.X. A review of the use of convolutional neural networks in agriculture. J. Agric. Sci. 2018, 156, 312–322.

- Kamilaris, A.; Prenafeta-Boldú, F.X. Deep learning in agriculture: A survey. Comput. Electron. Agric. 2018, 147, 70–90.

- Santos, L.; Santos, F.N.; Oliveira, P.M.; Shinde, P. Deep Learning Applications in Agriculture: A Short Review. Iberian Robotics Conference; Springer: Berlin/Heidelberg, Germany, 2019; pp. 139–151.

- Civil Aviation Administration of China. Interim Regulations on Flight Management of Unmanned Aerial Vehicles. 2018; Volume 2021. Available online: http://www.caac.gov.cn/HDJL/YJZJ/201801/t20180126_48853.html (accessed on 17 October 2021).

- Park, M.; Lee, S.; Lee, S. Dynamic topology reconstruction protocol for UAV swarm networking. Symmetry 2020, 12, 1111.

- Radoglou-Grammatikis, P.; Sarigiannidis, P.; Lagkas, T.; Moscholios, I. A compilation of UAV applications for precision agriculture. Comput. Netw. 2020, 172, 107148.

- Hayat, S.; Yanmaz, E.; Muzaffar, R. Survey on Unmanned Aerial Vehicle Networks for Civil Applications: A Communications Viewpoint. IEEE Commun. Surv. Tutor. 2016, 18, 2624–2661.

- Xie, C.; Yang, C. A review on plant high-throughput phenotyping traits using UAV-based sensors. Comput. Electron. Agric. 2020, 178, 105731.

- Delavarpour, N.; Koparan, C.; Nowatzki, J.; Bajwa, S.; Sun, X. A Technical Study on UAV Characteristics for Precision Agriculture Applications and Associated Practical Challenges. Remote Sens. 2021, 13, 1204.

- Tsouros, D.C.; Triantafyllou, A.; Bibi, S.; Sarigiannidis, P.G. Data acquisition and analysis methods in UAV-based applications for Precision Agriculture. In Proceedings of the 2019 15th International Conference on Distributed Computing in Sensor Systems (DCOSS), Santorini Island, Greece, 29–31 May 2019; pp. 377–384.

- Tahir, M.N.; Lan, Y.; Zhang, Y.; Wang, Y.; Nawaz, F.; Shah, M.A.A.; Gulzar, A.; Qureshi, W.S.; Naqvi, S.M.; Naqvi, S.Z.A. Real time estimation of leaf area index and groundnut yield using multispectral UAV. Int. J. Precis. Agric. Aviat. 2020, 3.

- Stroppiana, D.; Villa, P.; Sona, G.; Ronchetti, G.; Candiani, G.; Pepe, M.; Busetto, L.; Migliazzi, M.; Boschetti, M. Early season weed mapping in rice crops using multi-spectral UAV data. Int. J. Remote Sens. 2018, 39, 5432–5452.

- Wang, H.; Mortensen, A.K.; Mao, P.; Boelt, B.; Gislum, R. Estimating the nitrogen nutrition index in grass seed crops using a UAV-mounted multispectral camera. Int. J. Remote Sens. 2019, 40, 2467–2482.

- Ishida, T.; Kurihara, J.; Viray, F.A.; Namuco, S.B.; Paringit, E.C.; Perez, G.J.; Takahashi, Y.; Marciano, J.J., Jr. A novel approach for vegetation classification using UAV-based hyperspectral imaging. Comput. Electron. Agric. 2018, 144, 80–85.

- Ge, X.; Wang, J.; Ding, J.; Cao, X.; Zhang, Z.; Liu, J.; Li, X. Combining UAV-based hyperspectral imagery and machine learning algorithms for soil moisture content monitoring. PeerJ 2019, 7, e6926.

- Zhao, X.; Yang, G.; Liu, J.; Zhang, X.; Xu, B.; Wang, Y.; Zhao, C.; Gai, J. Estimation of soybean breeding yield based on optimization of spatial scale of UAV hyperspectral image. Trans. Chin. Soc. Agric. Eng. 2017, 33, 110–116.

- Prakash, A. Thermal remote sensing: Concepts, issues and applications. Int. Arch. Photogramm. Remote Sens. 2000, 33, 239–243.

- Weng, Q. Thermal infrared remote sensing for urban climate and environmental studies: Methods, applications, and trends. ISPRS J. Photogramm. Remote Sens. 2009, 64, 335–344.

- Khanal, S.; Fulton, J.; Shearer, S. An overview of current and potential applications of thermal remote sensing in precision agriculture. Comput. Electron. Agric. 2017, 139, 22–32.

- Dong, P.; Chen, Q. LiDAR Remote Sensing and Applications; CRC Press: Boca Raton, FL, USA, 2017.

- Zhou, L.; Gu, X.; Cheng, S.; Yang, G.; Shu, M.; Sun, Q. Analysis of plant height changes of lodged maize using UAV-LiDAR data. Agriculture 2020, 10, 146.

- Shendryk, Y.; Sofonia, J.; Garrard, R.; Rist, Y.; Skocaj, D.; Thorburn, P. Fine-scale prediction of biomass and leaf nitrogen content in sugarcane using UAV LiDAR and multispectral imaging. Int. J. Appl. Earth Obs. Geoinf. 2020, 92, 102177.

- Ndikumana, E.; Minh, D.H.T.; Baghdadi, N.; Courault, D.; Hossard, L. Deep Recurrent Neural Network for Agricultural Classification using multitemporal SAR Sentinel-1 for Camargue, France. Remote Sens. 2018, 10, 1217.

- Lyalin, K.S.; Biryuk, A.A.; Sheremet, A.Y.; Tsvetkov, V.K.; Prikhodko, D.V. UAV synthetic aperture radar system for control of vegetation and soil moisture. In Proceedings of the 2018 IEEE Conference of Russian Young Researchers in Electrical and Electronic Engineering (EIConRus), St. Petersburg and Moscow, Russia, 29 January–1 February 2018; pp. 1673–1675.

- Liu, C.-A.; Chen, Z.-X.; Shao, Y.; Chen, J.-S.; Hasi, T.; Pan, H.-Z. Research advances of SAR remote sensing for agriculture applications: A review. J. Integr. Agric. 2019, 18, 506–525.

- Pádua, L.; Vanko, J.; Hruška, J.; Adão, T.; Sousa, J.J.; Peres, E.; Morais, R. UAS, sensors, and data processing in agroforestry: A review towards practical applications. Int. J. Remote Sens. 2017, 38, 2349–2391.

- Allred, B.; Eash, N.; Freeland, R.; Martinez, L.; Wishart, D. Effective and efficient agricultural drainage pipe mapping with UAS thermal infrared imagery: A case study. Agric. Water Manag. 2018, 197, 132–137.

- Santesteban, L.G.; Di Gennaro, S.F.; Herrero-Langreo, A.; Miranda, C.; Royo, J.; Matese, A. High-resolution UAV-based thermal imaging to estimate the instantaneous and seasonal variability of plant water status within a vineyard. Agric. Water Manag. 2017, 183, 49–59.

- Xue, J.; Su, B. Significant Remote Sensing Vegetation Indices: A Review of Developments and Applications. J. Sensors 2017, 2017, 1–17.

- Dai, B.; He, Y.; Gu, F.; Yang, L.; Han, J.; Xu, W. A vision-based autonomous aerial spray system for precision agriculture. In Proceedings of the 2017 IEEE International Conference on Robotics and Biomimetics (ROBIO), Macau, China, 5–8 December 2017; pp. 507–513. [Google Scholar]

- Faiçal, B.S.; Freitas, H.; Gomes, P.H.; Mano, L.; Pessin, G.; de Carvalho, A.; Krishnamachari, B.; Ueyama, J. An adaptive approach for UAV-based pesticide spraying in dynamic environments. Comput. Electron. Agric. 2017, 138, 210–223. [Google Scholar] [CrossRef]

- Faiçal, B.S.; Pessin, G.; Filho, G.P.R.; Carvalho, A.C.P.L.F.; Gomes, P.H.; Ueyama, J. Fine-Tuning of UAV Control Rules for Spraying Pesticides on Crop Fields: An Approach for Dynamic Environments. Int. J. Artif. Intell. Tools 2016, 25, 1660003. [Google Scholar] [CrossRef] [Green Version]

- Esposito, M.; Crimaldi, M.; Cirillo, V.; Sarghini, F.; Maggio, A. Drone and sensor technology for sustainable weed management: A review. Chem. Biol. Technol. Agric. 2021, 8, 18. [Google Scholar] [CrossRef]

- Bah, M.D.; Dericquebourg, E.; Hafiane, A.; Canals, R. Deep Learning based Classification System for Identifying Weeds using High-Resolution UAV Imagery. Science and Information Conference; Springer: Berlin/Heidelberg, Germany, 2018; pp. 176–187.

- Huang, H.; Deng, J.; Lan, Y.; Yang, A.; Deng, X.; Zhang, L. A fully convolutional network for weed mapping of unmanned aerial vehicle (UAV) imagery. PLoS ONE 2018, 13, e0196302.

- Olsen, A.; Konovalov, D.A.; Philippa, B.; Ridd, P.; Wood, J.C.; Johns, J.; Banks, W.; Girgenti, B.; Kenny, O.; Whinney, J.; et al. DeepWeeds: A Multiclass Weed Species Image Dataset for Deep Learning. Sci. Rep. UK 2019, 9, 1–12.

- Sa, I.; Popović, M.; Khanna, R.; Chen, Z.; Lottes, P.; Liebisch, F.; Nieto, J.; Stachniss, C.; Walter, A.; Siegwart, R. WeedMap: A Large-Scale Semantic Weed Mapping Framework Using Aerial Multispectral Imaging and Deep Neural Network for Precision Farming. Remote Sens. 2018, 10, 1423.

- Scherrer, B.; Sheppard, J.; Jha, P.; Shaw, J.A. Hyperspectral imaging and neural networks to classify herbicide-resistant weeds. J. Appl. Remote Sens. 2019, 13, 044516.

- Huang, H.; Lan, Y.; Yang, A.; Zhang, Y.; Wen, S.; Deng, J. Deep learning versus Object-based Image Analysis (OBIA) in weed mapping of UAV imagery. Int. J. Remote Sens. 2020, 41, 3446–3479.

- Hasan, R.I.; Yusuf, S.M.; Alzubaidi, L. Review of the State of the Art of Deep Learning for Plant Diseases: A Broad Analysis and Discussion. Plants 2020, 9, 1302.

- Abdulridha, J.; Batuman, O.; Ampatzidis, Y. UAV-Based Remote Sensing Technique to Detect Citrus Canker Disease Utilizing Hyperspectral Imaging and Machine Learning. Remote Sens. 2019, 11, 1373.

- Tetila, E.C.; Machado, B.B.; Astolfi, G.; Belete, N.A.D.S.; Amorim, W.P.; Roel, A.R.; Pistori, H. Detection and classification of soybean pests using deep learning with UAV images. Comput. Electron. Agric. 2020, 179, 105836.

- Zhang, X.; Han, L.; Dong, Y.; Shi, Y.; Huang, W.; Han, L.; González-Moreno, P.; Ma, H.; Ye, H.; Sobeih, T. A Deep Learning-Based Approach for Automated Yellow Rust Disease Detection from High-Resolution Hyperspectral UAV Images. Remote Sens. 2019, 11, 1554.

- Hu, G.; Yin, C.; Wan, M.; Zhang, Y.; Fang, Y. Recognition of diseased Pinus trees in UAV images using deep learning and AdaBoost classifier. Biosyst. Eng. 2020, 194, 138–151.

- Tetila, E.C.; Machado, B.B.; Menezes, G.K.; Oliveira, A.D.S.; Alvarez, M.; Amorim, W.P.; Belete, N.A.D.S.; Da Silva, G.G.; Pistori, H. Automatic Recognition of Soybean Leaf Diseases Using UAV Images and Deep Convolutional Neural Networks. IEEE Geosci. Remote Sens. Lett. 2019, 17, 903–907.

- Wiesner-Hanks, T.; Wu, H.; Stewart, E.; DeChant, C.; Kaczmar, N.; Lipson, H.; Gore, M.A.; Nelson, R.J. Millimeter-Level Plant Disease Detection from Aerial Photographs via Deep Learning and Crowdsourced Data. Front. Plant Sci. 2019, 10, 1550.

- Albetis, J.; Jacquin, A.; Goulard, M.; Poilvé, H.; Rousseau, J.; Clenet, H.; Dedieu, G.; Duthoit, S. On the Potentiality of UAV Multispectral Imagery to Detect Flavescence dorée and Grapevine Trunk Diseases. Remote Sens. 2018, 11, 23.

- Kerkech, M.; Hafiane, A.; Canals, R. Vine disease detection in UAV multispectral images using optimized image registration and deep learning segmentation approach. Comput. Electron. Agric. 2020, 174, 105446.

- Bendig, J.; Willkomm, M.; Tilly, N.; Gnyp, M.L.; Bennertz, S.; Qiang, C.; Miao, Y.; Lenz-Wiedemann, V.I.S.; Bareth, G. Very high resolution crop surface models (CSMs) from UAV-based stereo images for rice growth monitoring in Northeast China. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2013, 40, 45–50.

- Ni, J.; Yao, L.; Zhang, J.; Cao, W.; Zhu, Y.; Tai, X. Development of an Unmanned Aerial Vehicle-Borne Crop-Growth Monitoring System. Sensors 2017, 17, 502.

- Fu, Z.; Jiang, J.; Gao, Y.; Krienke, B.; Wang, M.; Zhong, K.; Cao, Q.; Tian, Y.; Zhu, Y.; Cao, W.; et al. Wheat Growth Monitoring and Yield Estimation based on Multi-Rotor Unmanned Aerial Vehicle. Remote Sens. 2020, 12, 508.

- Zhao, J.; Zhang, X.; Gao, C.; Qiu, X.; Tian, Y.; Zhu, Y.; Cao, W. Rapid Mosaicking of Unmanned Aerial Vehicle (UAV) Images for Crop Growth Monitoring Using the SIFT Algorithm. Remote Sens. 2019, 11, 1226.

- Li, B.; Xu, X.; Zhang, L.; Han, J.; Bian, C.; Li, G.; Liu, J.; Jin, L. Above-ground biomass estimation and yield prediction in potato by using UAV-based RGB and hyperspectral imaging. ISPRS J. Photogramm. Remote Sens. 2020, 162, 161–172.

- Maimaitijiang, M.; Sagan, V.; Sidike, P.; Hartling, S.; Esposito, F.; Fritschi, F.B. Soybean yield prediction from UAV using multimodal data fusion and deep learning. Remote Sens. Environ. 2020, 237, 111599.

- Nebiker, S.; Lack, N.; Abächerli, M.; Läderach, S. Light-weight multispectral UAV sensors and their capabilities for predicting grain yield and detecting plant diseases. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, 41.

- Stroppiana, D.; Migliazzi, M.; Chiarabini, V.; Crema, A.; Musanti, M.; Franchino, C.; Villa, P. Rice yield estimation using multispectral data from UAV: A preliminary experiment in northern Italy. In Proceedings of the 2015 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Milan, Italy, 26–31 July 2015; pp. 4664–4667.

- Kussul, N.; Lavreniuk, M.; Skakun, S.; Shelestov, A. Deep Learning Classification of Land Cover and Crop Types Using Remote Sensing Data. IEEE Geosci. Remote Sens. Lett. 2017, 14, 778–782.

- Teimouri, N.; Dyrmann, M.; Jørgensen, R.N. A Novel Spatio-Temporal FCN-LSTM Network for Recognizing Various Crop Types Using Multi-Temporal Radar Images. Remote Sens. 2019, 11, 990.

- Wang, S.; Di Tommaso, S.; Faulkner, J.; Friedel, T.; Kennepohl, A.; Strey, R.; Lobell, D. Mapping Crop Types in Southeast India with Smartphone Crowdsourcing and Deep Learning. Remote Sens. 2020, 12, 2957.

- Rebetez, J.; Satizábal, H.F.; Mota, M.; Noll, D.; Büchi, L.; Wendling, M.; Cannelle, B.; Perez-Uribe, A.; Burgos, S. Augmenting a Convolutional Neural Network with Local Histograms-A Case Study in Crop Classification from High-Resolution UAV Imagery; ESANN: Bruges, Belgium, 2016.

- Zhao, L.; Shi, Y.; Liu, B.; Hovis, C.; Duan, Y.; Shi, Z. Finer Classification of Crops by Fusing UAV Images and Sentinel-2A Data. Remote Sens. 2019, 11, 3012.

- Hinton, G.E.; Salakhutdinov, R.R. Reducing the Dimensionality of Data with Neural Networks. Science 2006, 313, 504–507.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1097–1105.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. Adv. Neural Inf. Process. Syst. 2014, 27.

- Reyes, M.F.; Auer, S.; Merkle, N.M.; Henry, C.; Schmitt, M. SAR-to-Optical Image Translation Based on Conditional Generative Adversarial Networks - Optimization, Opportunities and Limits. Remote Sens. 2019, 11, 2067.

- Wang, X.; Yan, H.; Huo, C.; Yu, J.; Pant, C. Enhancing Pix2Pix for Remote Sensing Image Classification. In Proceedings of the International Conference on Pattern Recognition, Beijing, China, 20–24 August 2018; pp. 2332–2336.

- Lv, N.; Ma, H.; Chen, C.; Pei, Q.; Zhou, Y.; Xiao, F.; Li, J. Remote Sensing Data Augmentation Through Adversarial Training. Int. Geosci. Remote Sens. Symp. 2020, 2511–2514.

- Ren, C.X.; Ziemann, A.; Theiler, J.; Durieux, A.M.S. Deep snow: Synthesizing remote sensing imagery with generative adversarial nets. In Proceedings of the 2020 Algorithms, Technologies, and Applications for Multispectral and Hyperspectral Imagery XXVI, Online, 19 May 2020; pp. 196–205.

- Everingham, M.; Eslami, S.M.A.; Van Gool, L.; Williams, C.K.I.; Winn, J.; Zisserman, A. The Pascal Visual Object Classes Challenge: A Retrospective. Int. J. Comput. Vis. 2015, 111, 98–136.

- Ha, J.G.; Moon, H.; Kwak, J.T.; Hassan, S.I.; Dang, M.; Lee, O.N.; Park, H.Y. Deep convolutional neural network for classifying Fusarium wilt of radish from unmanned aerial vehicles. J. Appl. Remote Sens. 2017, 11.

- Huang, H.; Deng, J.; Lan, Y.; Yang, A.; Zhang, L.; Wen, S.; Zhang, H.; Zhang, Y.; Deng, Y. Detection of Helminthosporium Leaf Blotch Disease Based on UAV Imagery. Appl. Sci. 2019, 9, 558.

- De Camargo, T.; Schirrmann, M.; Landwehr, N.; Dammer, K.-H.; Pflanz, M. Optimized Deep Learning Model as a Basis for Fast UAV Mapping of Weed Species in Winter Wheat Crops. Remote Sens. 2021, 13, 1704.

- Ukaegbu, U.; Tartibu, L.; Okwu, M.; Olayode, I. Development of a Light-Weight Unmanned Aerial Vehicle for Precision Agriculture. Sensors 2021, 21, 4417.

- Onishi, M.; Ise, T. Automatic classification of trees using a UAV onboard camera and deep learning. arXiv Prepr. 2018, arXiv:1804.10390.

- Zhao, J.; Zhong, Y.; Hu, X.; Wei, L.; Zhang, L. A robust spectral-spatial approach to identifying heterogeneous crops using remote sensing imagery with high spectral and spatial resolutions. Remote Sens. Environ. 2020, 239, 111605.

- Chen, C.-J.; Huang, Y.-Y.; Li, Y.-S.; Chen, Y.-C.; Chang, C.-Y.; Huang, Y.-M. Identification of Fruit Tree Pests with Deep Learning on Embedded Drone to Achieve Accurate Pesticide Spraying. IEEE Access 2021, 9, 21986–21997.

- Li, F.; Liu, Z.; Shen, W.; Wang, Y.; Wang, Y.; Ge, C.; Sun, F.; Lan, P. A Remote Sensing and Airborne Edge-Computing Based Detection System for Pine Wilt Disease. IEEE Access 2021, 9, 66346–66360.

- Valente, J.; Doldersum, M.; Roers, C.; Kooistra, L. Detecting Rumex obtusifolius weed plants in grasslands from UAV RGB imagery using deep learning. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, 4, 179–185.

- Veeranampalayam Sivakumar, A.N.; Li, J.; Scott, S.; Psota, E.; Jhala, A.J.; Luck, J.D.; Shi, Y. Comparison of object detection and patch-based classification deep learning models on mid- to late-season weed detection in UAV imagery. Remote Sens. 2020, 12, 2136.

- Apolo-Apolo, O.; Martínez-Guanter, J.; Egea, G.; Raja, P.; Pérez-Ruiz, M. Deep learning techniques for estimation of the yield and size of citrus fruits using a UAV. Eur. J. Agron. 2020, 115, 126030.

- Chen, Y.; Lee, W.S.; Gan, H.; Peres, N.; Fraisse, C.; Zhang, Y.; He, Y. Strawberry Yield Prediction Based on a Deep Neural Network Using High-Resolution Aerial Orthoimages. Remote Sens. 2019, 11, 1584.

- Csillik, O.; Cherbini, J.; Johnson, R.; Lyons, A.; Kelly, M. Identification of Citrus Trees from Unmanned Aerial Vehicle Imagery Using Convolutional Neural Networks. Drones 2018, 2, 39.

- Zhang, Z.; Flores, P.; Igathinathane, C.; Naik, D.L.; Kiran, R.; Ransom, J.K. Wheat Lodging Detection from UAS Imagery Using Machine Learning Algorithms. Remote Sens. 2020, 12, 1838.

- Stewart, E.L.; Wiesner-Hanks, T.; Kaczmar, N.; DeChant, C.; Wu, H.; Lipson, H.; Nelson, R.J.; Gore, M.A. Quantitative Phenotyping of Northern Leaf Blight in UAV Images Using Deep Learning. Remote Sens. 2019, 11, 2209.

- Kerkech, M.; Hafiane, A.; Canals, R. VddNet: Vine Disease Detection Network Based on Multispectral Images and Depth Map. Remote Sens. 2020, 12, 3305.

- Zou, K.; Chen, X.; Zhang, F.; Zhou, H.; Zhang, C. A Field Weed Density Evaluation Method Based on UAV Imaging and Modified U-Net. Remote Sens. 2021, 13, 310.

- Osco, L.P.; Nogueira, K.; Ramos, A.P.M.; Pinheiro, M.M.F.; Furuya, D.E.G.; Gonçalves, W.N.; Jorge, L.A.D.C.; Junior, J.M.; dos Santos, J.A. Semantic segmentation of citrus-orchard using deep neural networks and multispectral UAV-based imagery. Precis. Agric. 2021, 22, 1–18.

- Zhang, J.; Xie, T.; Yang, C.; Song, H.; Jiang, Z.; Zhou, G.; Zhang, D.; Feng, H.; Xie, J. Segmenting Purple Rapeseed Leaves in the Field from UAV RGB Imagery Using Deep Learning as an Auxiliary Means for Nitrogen Stress Detection. Remote Sens. 2020, 12, 1403.

- Xu, W.; Yang, W.; Chen, S.; Wu, C.; Chen, P.; Lan, Y. Establishing a model to predict the single boll weight of cotton in northern Xinjiang by using high resolution UAV remote sensing data. Comput. Electron. Agric. 2020, 179, 105762.

- Champ, J.; Mora-Fallas, A.; Goëau, H.; Mata-Montero, E.; Bonnet, P.; Joly, A. Instance segmentation for the fine detection of crop and weed plants by precision agricultural robots. Appl. Plant Sci. 2020, 8, e11373.

- Mora-Fallas, A.; Goëau, H.; Joly, A.; Bonnet, P.; Mata-Montero, E. Instance segmentation for automated weeds and crops detection in farmlands. 2020.

- Toda, Y.; Okura, F.; Ito, J.; Okada, S.; Kinoshita, T.; Tsuji, H.; Saisho, D. Training instance segmentation neural network with synthetic datasets for crop seed phenotyping. Commun. Biol. 2020, 3, 173.

- Khan, S.; Tufail, M.; Khan, M.T.; Khan, Z.A.; Iqbal, J.; Wasim, A. Real-time recognition of spraying area for UAV sprayers using a deep learning approach. PLoS ONE 2021, 16, e0249436.

- Deng, J.; Zhong, Z.; Huang, H.; Lan, Y.; Han, Y.; Zhang, Y. Lightweight Semantic Segmentation Network for Real-Time Weed Mapping Using Unmanned Aerial Vehicles. Appl. Sci. 2020, 10, 7132.

- Liu, C.; Li, H.; Su, A.; Chen, S.; Li, W. Identification and Grading of Maize Drought on RGB Images of UAV Based on Improved U-Net. IEEE Geosci. Remote Sens. Lett. 2020, 18, 198–202.

- Tri, N.C.; Duong, H.N.; Van Hoai, T.; Van Hoa, T.; Nguyen, V.H.; Toan, N.T.; Snasel, V. A novel approach based on deep learning techniques and UAVs to yield assessment of paddy fields. In Proceedings of the 2017 9th International Conference on Knowledge and Systems Engineering (KSE), Hue, Vietnam, 19–21 October 2017; pp. 257–262.

- Osco, L.P.; Arruda, M.D.S.D.; Gonçalves, D.N.; Dias, A.; Batistoti, J.; de Souza, M.; Gomes, F.D.G.; Ramos, A.P.M.; Jorge, L.A.D.C.; Liesenberg, V.; et al. A CNN approach to simultaneously count plants and detect plantation-rows from UAV imagery. ISPRS J. Photogramm. Remote Sens. 2021, 174, 1–17.

- Osco, L.P.; Arruda, M.D.S.D.; Junior, J.M.; da Silva, N.B.; Ramos, A.P.M.; Moriya, É.A.S.; Imai, N.N.; Pereira, D.R.; Creste, J.E.; Matsubara, E.; et al. A convolutional neural network approach for counting and geolocating citrus-trees in UAV multispectral imagery. ISPRS J. Photogramm. Remote Sens. 2020, 160, 97–106.

- Zheng, J.; Fu, H.; Li, W.; Wu, W.; Yu, L.; Yuan, S.; Tao, W.Y.W.; Pang, T.K.; Kanniah, K.D. Growing status observation for oil palm trees using Unmanned Aerial Vehicle (UAV) images. ISPRS J. Photogramm. Remote Sens. 2021, 173, 95–121.

- Ampatzidis, Y.; Partel, V.; Costa, L. Agroview: Cloud-based application to process, analyze and visualize UAV-collected data for precision agriculture applications utilizing artificial intelligence. Comput. Electron. Agric. 2020, 174, 105457.

- Pang, Y.; Shi, Y.; Gao, S.; Jiang, F.; Veeranampalayam-Sivakumar, A.-N.; Thompson, L.; Luck, J.; Liu, C. Improved crop row detection with deep neural network for early-season maize stand count in UAV imagery. Comput. Electron. Agric. 2020, 178, 105766.

- Wu, J.; Yang, G.; Yang, X.; Xu, B.; Han, L.; Zhu, Y. Automatic Counting of in situ Rice Seedlings from UAV Images Based on a Deep Fully Convolutional Neural Network. Remote Sens. 2019, 11, 691.

- Yang, M.-D.; Tseng, H.-H.; Hsu, Y.-C.; Yang, C.-Y.; Lai, M.-H.; Wu, D.-H. A UAV Open Dataset of Rice Paddies for Deep Learning Practice. Remote Sens. 2021, 13, 1358.

- Zhao, W.; Yamada, W.; Li, T.; Digman, M.; Runge, T. Augmenting Crop Detection for Precision Agriculture with Deep Visual Transfer Learning—A Case Study of Bale Detection. Remote Sens. 2020, 13, 23.

- Ampatzidis, Y.; Partel, V. UAV-Based High Throughput Phenotyping in Citrus Utilizing Multispectral Imaging and Artificial Intelligence. Remote Sens. 2019, 11, 410.

- Aeberli, A.; Johansen, K.; Robson, A.; Lamb, D.; Phinn, S. Detection of Banana Plants Using Multi-Temporal Multispectral UAV Imagery. Remote Sens. 2021, 13, 2123.

- Fan, Z.; Lu, J.; Gong, M.; Xie, H.; Goodman, E.D. Automatic Tobacco Plant Detection in UAV Images via Deep Neural Networks. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 876–887.

- Zan, X.; Zhang, X.; Xing, Z.; Liu, W.; Zhang, X.; Su, W.; Liu, Z.; Zhao, Y.; Li, S. Automatic Detection of Maize Tassels from UAV Images by Combining Random Forest Classifier and VGG16. Remote Sens. 2020, 12, 3049.

- Liu, Y.; Cen, C.; Che, Y.; Ke, R.; Ma, Y.; Ma, Y. Detection of Maize Tassels from UAV RGB Imagery with Faster R-CNN. Remote Sens. 2020, 12, 338.

- Yuan, W.; Choi, D. UAV-Based Heating Requirement Determination for Frost Management in Apple Orchard. Remote Sens. 2021, 13, 273.

- Dyson, J.; Mancini, A.; Frontoni, E.; Zingaretti, P. Deep Learning for Soil and Crop Segmentation from Remotely Sensed Data. Remote Sens. 2019, 11, 1859.

- Feng, Q.; Yang, J.; Liu, Y.; Ou, C.; Zhu, D.; Niu, B.; Liu, J.; Li, B. Multi-Temporal Unmanned Aerial Vehicle Remote Sensing for Vegetable Mapping Using an Attention-Based Recurrent Convolutional Neural Network. Remote Sens. 2020, 12, 1668.

- Yang, M.-D.; Tseng, H.H.; Hsu, Y.C.; Tseng, W.C. Real-time Crop Classification Using Edge Computing and Deep Learning. In Proceedings of the 2020 IEEE 17th Annual Consumer Communications & Networking Conference, Las Vegas, NV, USA, 10–13 January 2020; pp. 1–4.

- Yang, M.-D.; Boubin, J.G.; Tsai, H.P.; Tseng, H.-H.; Hsu, Y.-C.; Stewart, C.C. Adaptive autonomous UAV scouting for rice lodging assessment using edge computing with deep learning EDANet. Comput. Electron. Agric. 2020, 179, 105817.

- Zhang, Q.; Liu, Y.; Gong, C.; Chen, Y.; Yu, H. Applications of Deep Learning for Dense Scenes Analysis in Agriculture: A Review. Sensors 2020, 20, 1520.

- Zhong, Y.; Hu, X.; Luo, C.; Wang, X.; Zhao, J.; Zhang, L. WHU-Hi: UAV-borne hyperspectral with high spatial resolution (H2) benchmark datasets and classifier for precise crop identification based on deep convolutional neural network with CRF. Remote Sens. Environ. 2020, 250, 112012.

- Wiesner-Hanks, T.; Stewart, E.L.; Kaczmar, N.; DeChant, C.; Wu, H.; Nelson, R.J.; Lipson, H.; Gore, M.A. Image set for deep learning: Field images of maize annotated with disease symptoms. BMC Res. Notes 2018, 11, 440.

- Daudt, R.C.; Le Saux, B.; Boulch, A.; Gousseau, Y. Multitask learning for large-scale semantic change detection. Comput. Vis. Image Underst. 2019, 187, 102783.

- Zhang, Y. CSIF. figshare. Dataset. 2018.

- Oldoni, L.V.; Sanches, I.D.; Picoli, M.C.A.; Covre, R.M.; Fronza, J.G. LEM+ dataset: For agricultural remote sensing applications. Data Brief 2020, 33, 106553.

- Ferreira, A.; Felipussi, S.C.; Pires, R.; Avila, S.; Santos, G.; Lambert, J.; Huang, J.; Rocha, A. Eyes in the Skies: A Data-Driven Fusion Approach to Identifying Drug Crops from Remote Sensing Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 4773–4786.

- Rußwurm, M.; Pelletier, C.; Zollner, M.; Lefèvre, S.; Körner, M. BreizhCrops: A time series dataset for crop type mapping. arXiv Prepr. 2019, arXiv:1905.11893.

- Rustowicz, R.; Cheong, R.; Wang, L.; Ermon, S.; Burke, M.; Lobell, D. Semantic Segmentation of Crop Type in Ghana Dataset. Available online: https://doi.org/10.34911/rdnt.ry138p (accessed on 17 October 2021).

- Rustowicz, R.; Cheong, R.; Wang, L.; Ermon, S.; Burke, M.; Lobell, D. Semantic Segmentation of Crop Type in South Sudan Dataset. Available online: https://doi.org/10.34911/rdnt.v6kx6n (accessed on 17 October 2021).

- Torre, M.; Remeseiro, B.; Radeva, P.; Martinez, F. DeepNEM: Deep Network Energy-Minimization for Agricultural Field Segmentation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 726–737.

- United States Geological Survey. EarthExplorer. Available online: https://earthexplorer.usgs.gov/ (accessed on 17 October 2021).

- European Space Agency. Copernicus Open Access Hub. Available online: https://scihub.copernicus.eu/dhus/#/home (accessed on 17 October 2021).

- Weikmann, G.; Paris, C.; Bruzzone, L. TimeSen2Crop: A Million Labeled Samples Dataset of Sentinel 2 Image Time Series for Crop-Type Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 4699–4708.

- Khan, W.Z.; Ahmed, E.; Hakak, S.; Yaqoob, I.; Ahmed, A. Edge computing: A survey. Future Gener. Comput. Syst. 2019, 97, 219–235.

- Liu, J.; Xue, Y.; Ren, K.; Song, J.; Windmill, C.; Merritt, P. High-Performance Time-Series Quantitative Retrieval from Satellite Images on a GPU Cluster. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 2810–2821.

- Hakak, S.; A Latif, S.; Amin, G. A Review on Mobile Cloud Computing and Issues in it. Int. J. Comput. Appl. 2013, 75, 1–4.

- Jeong, H.-J.; Choi, J.D.; Ha, Y.-G. Vision Based Displacement Detection for Stabilized UAV Control on Cloud Server. Mob. Inf. Syst. 2016, 2016, 1–11.

- Apolo-Apolo, O.E.; Pérez-Ruiz, M.; Guanter, J.M.; Valente, J. A Cloud-Based Environment for Generating Yield Estimation Maps from Apple Orchards Using UAV Imagery and a Deep Learning Technique. Front. Plant Sci. 2020, 11, 1086.

- Shi, W.; Cao, J.; Zhang, Q.; Li, Y.; Xu, L. Edge Computing: Vision and Challenges. IEEE Internet Things J. 2016, 3, 637–646.

- Ahmed, E.; Akhunzada, A.; Whaiduzzaman, M.; Gani, A.; Ab Hamid, S.H.; Buyya, R. Network-centric performance analysis of runtime application migration in mobile cloud computing. Simul. Model. Pract. Theory 2015, 50, 42–56.

- Horstrand, P.; Guerra, R.; Rodriguez, A.; Diaz, M.; Lopez, S.; Lopez, J.F. A UAV Platform Based on a Hyperspectral Sensor for Image Capturing and On-Board Processing. IEEE Access 2019, 7, 66919–66938.

- Da Silva, J.F.; Brito, A.V.; De Lima, J.A.G.; De Moura, H.N. An embedded system for aerial image processing from unmanned aerial vehicles. In Proceedings of the 2015 Brazilian Symposium on Computing Systems Engineering (SBESC), Foz do Iguacu, Brazil, 3–6 November 2015; pp. 154–157.

- Xu, D.; Li, T.; Li, Y.; Su, X.; Tarkoma, S.; Jiang, T.; Crowcroft, J.; Hui, P. Edge Intelligence: Architectures, Challenges, and Applications. arXiv Prepr. 2020, arXiv:2003.12172.

- Sze, V.; Chen, Y.-H.; Yang, T.-J.; Emer, J.S. Efficient Processing of Deep Neural Networks: A Tutorial and Survey. Proc. IEEE 2017, 105, 2295–2329.

- Han, S.; Mao, H.; Dally, W.J. Deep Compression: Compressing Deep Neural Networks with Pruning, Trained Quantization and Huffman Coding. In Proceedings of the International Conference on Learning Representations, San Juan, Puerto Rico, 2–4 May 2016.

- Egli, S.; Höpke, M. CNN-Based Tree Species Classification Using High Resolution RGB Image Data from Automated UAV Observations. Remote Sens. 2020, 12, 3892.

- Fountsop, A.N.; Fendji, J.L.E.K.; Atemkeng, M. Deep Learning Models Compression for Agricultural Plants. Appl. Sci. 2020, 10, 6866.

- Blekos, K.; Nousias, S.; Lalos, A.S. Efficient automated U-Net based tree crown delineation using UAV multi-spectral imagery on embedded devices. In Proceedings of the 2020 IEEE 18th International Conference on Industrial Informatics (INDIN), Warwick, UK, 20–23 July 2020; Volume 1, pp. 541–546.

- Iandola, F.N.; Han, S.; Moskewicz, M.W.; Ashraf, K.; Dally, W.J.; Keutzer, K. SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and <0.5 MB model size. arXiv Prepr. 2016, arXiv:1602.07360.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.-C. MobileNetV2: Inverted Residuals and Linear Bottlenecks. In Proceedings of the 2018 IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520.

- Ma, N.; Zhang, X.; Zheng, H.; Sun, J. ShuffleNet V2: Practical Guidelines for Efficient CNN Architecture Design. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 122–138.

- Wang, S.; Zhao, J.; Ta, N.; Zhao, X.; Xiao, M.; Wei, H. A real-time deep learning forest fire monitoring algorithm based on an improved Pruned + KD model. J. Real-Time Image Process. 2021, 1–11.

- Hua, X.; Wang, X.; Rui, T.; Shao, F.; Wang, D. Light-weight UAV object tracking network based on strategy gradient and attention mechanism. Knowledge-Based Syst. 2021, 224, 107071.

- Han, S.; Pool, J.; Tran, J.; Dally, W.J. Learning both weights and connections for efficient neural networks. Neural Inf. Process. Syst. 2015, 28, 1135–1143.

- Srinivas, S.; Babu, R.V. Data-free Parameter Pruning for Deep Neural Networks. In Proceedings of the British Machine Vision Conference 2015 (BMVC), Swansea, UK, 7–10 September 2015.

- Li, H.; Kadav, A.; Durdanovic, I.; Samet, H.; Graf, H.P. Pruning Filters for Efficient ConvNets. In Proceedings of the International Conference on Learning Representations, Toulon, France, 24–26 April 2017.

- Fan, W.; Xu, Z.; Liu, H.; Zongwei, Z. Machine Learning Agricultural Application Based on the Secure Edge Computing Platform. In Proceedings of the International Conference on Machine Learning, Online, 13–18 July 2020; pp. 206–220.

- Lebedev, V.; Ganin, Y.; Rakhuba, M.; Oseledets, I.; Lempitsky, V. Speeding-up Convolutional Neural Networks Using Fine-tuned CP-Decomposition. In Proceedings of the International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015.

- Kim, Y.; Park, E.; Yoo, S.; Choi, T.; Yang, L.; Shin, D. Compression of deep convolutional neural networks for fast and low power mobile applications. arXiv Prepr. 2015, arXiv:1511.06530.

- Jaderberg, M.; Vedaldi, A.; Zisserman, A. Speeding up Convolutional Neural Networks with Low Rank Expansions. In Proceedings of the British Machine Vision Conference, Nottingham, UK, 1–5 September 2014.

- Zhang, X.; Zou, J.; He, K.; Sun, J. Accelerating Very Deep Convolutional Networks for Classification and Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 1943–1955.

- Falaschetti, L.; Manoni, L.; Rivera, R.C.F.; Pau, D.; Romanazzi, G.; Silvestroni, O.; Tomaselli, V.; Turchetti, C. A Low-Cost, Low-Power and Real-Time Image Detector for Grape Leaf Esca Disease Based on a Compressed CNN. IEEE J. Emerg. Sel. Top. Circuits Syst. 2021, 11, 468–481.

- Chen, W.; Wilson, J.; Tyree, S.; Weinberger, K.; Chen, Y. Compressing Neural Networks with the Hashing Trick. Int. Conf. Mach. Learn. 2015, 3, 2285–2294.

- Dettmers, T. 8-Bit Approximations for Parallelism in Deep Learning. In Proceedings of the International Conference on Learning Representations, San Juan, Puerto Rico, 2–4 May 2016.

- Zhou, A.; Yao, A.; Guo, Y.; Xu, L.; Chen, Y. Incremental Network Quantization: Towards Lossless CNNs with Low-precision Weights. In Proceedings of the International Conference on Learning Representations, Toulon, France, 24–26 April 2017.

- Choudhary, T.; Mishra, V.; Goswami, A.; Sarangapani, J. A comprehensive survey on model compression and acceleration. Artif. Intell. Rev. 2020, 53, 5113–5155.

- Romero, A.; Ballas, N.; Kahou, S.E.; Chassang, A.; Gatta, C.; Bengio, Y. FitNets: Hints for Thin Deep Nets. In Proceedings of the International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015.

- Korattikara, A.; Rathod, V.; Murphy, K.; Welling, M. Bayesian dark knowledge. Neural Inf. Process. Syst. 2015, 28, 3438–3446.

- Kim, J.; Park, S.; Kwak, N. Paraphrasing Complex Network: Network Compression via Factor Transfer. Neural Inf. Process. Syst. 2018, 31, 2760–2769.

- Gou, J.; Yu, B.; Maybank, S.J.; Tao, D. Knowledge Distillation: A Survey. Int. J. Comput. Vis. 2021, 129, 1789–1819.

- La Rosa, L.E.C.; Oliveira, D.A.B.; Zortea, M.; Gemignani, B.H.; Feitosa, R.Q. Learning Geometric Features for Improving the Automatic Detection of Citrus Plantation Rows in UAV Images. IEEE Geosci. Remote Sens. Lett. 2020, 1–5.

- Qiu, W.; Ye, J.; Hu, L.; Yang, J.; Li, Q.; Mo, J.; Yi, W. Distilled-MobileNet Model of Convolutional Neural Network Simplified Structure for Plant Disease Recognition. Smart Agric. 2021, 3, 109.

- Ding, M.; Li, N.; Song, Z.; Zhang, R.; Zhang, X.; Zhou, H. A Lightweight Action Recognition Method for Unmanned-Aerial-Vehicle Video. In Proceedings of the 2020 IEEE 3rd International Conference on Electronics and Communication Engineering (ICECE), Xi’an, China, 14–16 December 2020; pp. 181–185.

- Dong, J.; Ota, K.; Dong, M. Real-Time Survivor Detection in UAV Thermal Imagery Based on Deep Learning. In Proceedings of the 2020 16th International Conference on Mobility, Sensing and Networking (MSN), Tokyo, Japan, 17–19 December 2020; pp. 352–359.

- Sherstjuk, V.; Zharikova, M.; Sokol, I. Forest fire monitoring system based on UAV team, remote sensing, and image processing. In Proceedings of the 2018 IEEE Second International Conference on Data Stream Mining & Processing (DSMP), Lviv, Ukraine, 21–25 August 2018; pp. 590–594.

- Sandino, J.; Vanegas, F.; Maire, F.; Caccetta, P.; Sanderson, C.; Gonzalez, F. UAV Framework for Autonomous Onboard Navigation and People/Object Detection in Cluttered Indoor Environments. Remote Sens. 2020, 12, 3386.

- Jaiswal, D.; Kumar, P. Real-time implementation of moving object detection in UAV videos using GPUs. J. Real-Time Image Process. 2020, 17, 1301–1317.

- Saifullah, A.; Agrawal, K.; Lu, C.; Gill, C. Multi-Core Real-Time Scheduling for Generalized Parallel Task Models. Real-Time Syst. 2013, 49, 404–435.

- Madroñal, D.; Palumbo, F.; Capotondi, A.; Marongiu, A. Unmanned Vehicles in Smart Farming: A Survey and a Glance at Future Horizons. In Proceedings of the 2021 Drone Systems Engineering (DroneSE) and Rapid Simulation and Performance Evaluation: Methods and Tools Proceedings (RAPIDO’21), Budapest, Hungary, 18–20 January 2021; pp. 1–8.

- Li, W.; He, C.; Fu, H.; Zheng, J.; Dong, R.; Xia, M.; Yu, L.; Luk, W. A Real-Time Tree Crown Detection Approach for Large-Scale Remote Sensing Images on FPGAs. Remote Sens. 2019, 11, 1025.

- Ma, Y.; Li, Q.; Chu, L.; Zhou, Y.; Xu, C. Real-Time Detection and Spatial Localization of Insulators for UAV Inspection Based on Binocular Stereo Vision. Remote Sens. 2021, 13, 230.

- Rodríguez-Canosa, G.R.; Thomas, S.; Del Cerro, J.; Barrientos, A.; MacDonald, B. A Real-Time Method to Detect and Track Moving Objects (DATMO) from Unmanned Aerial Vehicles (UAVs) Using a Single Camera. Remote Sens. 2012, 4, 1090–1111.

- Opromolla, R.; Fasano, G.; Accardo, D. A Vision-Based Approach to UAV Detection and Tracking in Cooperative Applications. Sensors 2018, 18, 3391.

- Li, B.; Zhu, Y.; Wang, Z.; Li, C.; Peng, Z.-R.; Ge, L. Use of Multi-Rotor Unmanned Aerial Vehicles for Radioactive Source Search. Remote Sens. 2018, 10, 728.

- Rebouças, R.A.; Da Cruz Eller, Q.; Habermann, M.; Shiguemori, E.H. Embedded system for visual odometry and localization of moving objects in images acquired by unmanned aerial vehicles. In Proceedings of the 2013 III Brazilian Symposium on Computing Systems Engineering, Rio de Janeiro, Brazil, 4–8 December 2013; pp. 35–40.

- Kizar, S.N.; Satyanarayana, G. Object detection and location estimation using SVS for UAVs. In Proceedings of the International Conference on Automatic Control and Dynamic Optimization Techniques, Pune, India, 9–10 September 2016; pp. 920–924.

- Abughalieh, K.M.; Sababha, B.H.; Rawashdeh, N.A. A video-based object detection and tracking system for weight sensitive UAVs. Multimedia Tools Appl. 2019, 78, 9149–9167.

- Choi, H.; Geeves, M.; Alsalam, B.; Gonzalez, F. Open source computer-vision based guidance system for UAVs on-board decision making. In Proceedings of the 2016 IEEE Aerospace Conference, Big Sky, MT, USA, 5–12 March 2016; pp. 1–5.

- De Oliveira, D.C.; Wehrmeister, M.A. Using Deep Learning and Low-Cost RGB and Thermal Cameras to Detect Pedestrians in Aerial Images Captured by Multirotor UAV. Sensors 2018, 18, 2244.

- Kersnovski, T.; Gonzalez, F.; Morton, K. A UAV system for autonomous target detection and gas sensing. In Proceedings of the 2017 IEEE Aerospace Conference, Big Sky, MT, USA, 4–11 March 2017; pp. 1–12.

- Basso, M.; Stocchero, D.; Henriques, R.V.B.; Vian, A.L.; Bredemeier, C.; Konzen, A.A.; De Freitas, E.P. Proposal for an Embedded System Architecture Using a GNDVI Algorithm to Support UAV-Based Agrochemical Spraying. Sensors 2019, 19, 5397.

- Daryanavard, H.; Harifi, A. Implementing face detection system on UAV using Raspberry Pi platform. In Proceedings of the Iranian Conference on Electrical Engineering, Mashhad, Iran, 8–10 May 2018; pp. 1720–1723.

- Safadinho, D.; Ramos, J.; Ribeiro, R.; Filipe, V.; Barroso, J.; Pereira, A. UAV Landing Using Computer Vision Techniques for Human Detection. Sensors 2020, 20, 613.

- Natesan, S.; Armenakis, C.; Benari, G.; Lee, R. Use of UAV-Borne Spectrometer for Land Cover Classification. Drones 2018, 2, 16.

- Benhadhria, S.; Mansouri, M.; Benkhlifa, A.; Gharbi, I.; Jlili, N. VAGADRONE: Intelligent and Fully Automatic Drone Based on Raspberry Pi and Android. Appl. Sci. 2021, 11, 3153.

- Ayoub, N.; Schneider-Kamp, P. Real-Time On-Board Deep Learning Fault Detection for Autonomous UAV Inspections. Electronics 2021, 10, 1091.

- Xu, L.; Luo, H. Towards autonomous tracking and landing on moving target. In Proceedings of the 2016 IEEE International Conference on Real-time Computing and Robotics (RCAR), Angkor Wat, Cambodia, 6–9 June 2016; pp. 620–628.

- Genc, H.; Zu, Y.; Chin, T.-W.; Halpern, M.; Reddi, V.J. Flying IoT: Toward Low-Power Vision in the Sky. IEEE Micro 2017, 37, 40–51.

- Meng, L.; Peng, Z.; Zhou, J.; Zhang, J.; Lu, Z.; Baumann, A.; Du, Y. Real-Time Detection of Ground Objects Based on Unmanned Aerial Vehicle Remote Sensing with Deep Learning: Application in Excavator Detection for Pipeline Safety. Remote Sens. 2020, 12, 182.

- Tijtgat, N.; Van Ranst, W.; Goedeme, T.; Volckaert, B.; De Turck, F. Embedded real-time object detection for a UAV warning system. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Venice, Italy, 22–29 October 2017; pp. 2110–2118.

- Melián, J.; Jiménez, A.; Díaz, M.; Morales, A.; Horstrand, P.; Guerra, R.; López, S.; López, J. Real-Time Hyperspectral Data Transmission for UAV-Based Acquisition Platforms. Remote Sens. 2021, 13, 850.

- Balamuralidhar, N.; Tilon, S.; Nex, F. MultEYE: Monitoring System for Real-Time Vehicle Detection, Tracking and Speed Estimation from UAV Imagery on Edge-Computing Platforms. Remote Sens. 2021, 13, 573.

- Lammie, C.; Olsen, A.; Carrick, T.; Azghadi, M.R. Low-Power and High-Speed Deep FPGA Inference Engines for Weed Classification at the Edge. IEEE Access 2019, 7, 51171–51184.

- Caba, J.; Díaz, M.; Barba, J.; Guerra, R.; López, J. FPGA-Based On-Board Hyperspectral Imaging Compression: Benchmarking Performance and Energy Efficiency against GPU Implementations. Remote Sens. 2020, 12, 3741.

- Zoph, B.; Le, Q.V. Neural architecture search with reinforcement learning. arXiv Prepr. 2016, arXiv:1611.01578.

- Liu, D.; Kong, H.; Luo, X.; Liu, W.; Subramaniam, R. Bringing AI to Edge: From Deep Learning’s Perspective. arXiv Prepr. 2020, arXiv:2011.14808.

- Pan, S.J.; Yang, Q. A Survey on Transfer Learning. IEEE Trans. Knowl. Data Eng. 2009, 22, 1345–1359.

- Xiao, T.; Zhang, J.; Yang, K.; Peng, Y.; Zhang, Z. Error-driven incremental learning in deep convolutional neural network for large-scale image classification. In Proceedings of the 22nd ACM International Conference on Multimedia, Orlando, FL, USA, 3–7 November 2014; pp. 177–186.

- Kai, C.; Zhou, H.; Yi, Y.; Huang, W. Collaborative Cloud-Edge-End Task Offloading in Mobile-Edge Computing Networks With Limited Communication Capability. IEEE Trans. Cogn. Commun. Netw. 2020, 7, 624–634.

- Bonawitz, K.; Eichner, H.; Grieskamp, W.; Huba, D.; Ingerman, A.; Ivanov, V.; Kiddon, C.; Konečný, J.; Mazzocchi, S.; McMahan, H.B. Towards federated learning at scale: System design. arXiv Prepr. 2019, arXiv:1902.01046.

| Category | Major Metrics |
|---|---|
| mini UAV | empty weight < 0.25 kg, flight altitude ≤ 50 m, max speed ≤ 40 km/h |
| light UAV | empty weight ≤ 4 kg, max take-off weight ≤ 7 kg, max speed ≤ 100 km/h |
| small UAV | empty weight ≤ 15 kg, or max take-off weight ≤ 25 kg |
| medium UAV | empty weight > 15 kg, 25 kg < max take-off weight ≤ 150 kg |
| large UAV | max take-off weight > 150 kg |
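
The weight classes in the table above can be read as an ordered set of threshold checks. The Python sketch below encodes them under two assumptions made here, not stated in the table: categories are tested from smallest to largest and the first match wins, and the "or" in the small-UAV row is taken literally. The `UAVSpec` container and the `classify_uav` function are hypothetical names used only for illustration.

```python
from dataclasses import dataclass


@dataclass
class UAVSpec:
    """Hypothetical container for the metrics listed in the category table."""
    empty_weight_kg: float
    max_takeoff_weight_kg: float
    max_altitude_m: float
    max_speed_kmh: float


def classify_uav(s: UAVSpec) -> str:
    """Return the first category whose thresholds the spec satisfies (smallest first)."""
    # mini: all three limits must hold at once
    if s.empty_weight_kg < 0.25 and s.max_altitude_m <= 50 and s.max_speed_kmh <= 40:
        return "mini"
    # light: empty weight, take-off weight and speed limits must all hold
    if s.empty_weight_kg <= 4 and s.max_takeoff_weight_kg <= 7 and s.max_speed_kmh <= 100:
        return "light"
    # small: either weight limit suffices, following the table's "or" (an assumption)
    if s.empty_weight_kg <= 15 or s.max_takeoff_weight_kg <= 25:
        return "small"
    # Reaching this point implies empty weight > 15 kg and take-off weight > 25 kg.
    if s.max_takeoff_weight_kg <= 150:
        return "medium"
    return "large"


print(classify_uav(UAVSpec(0.2, 0.3, 30, 30)))
print(classify_uav(UAVSpec(40, 100, 1000, 150)))
```

Because the rows overlap (a craft can meet the light and small limits simultaneously), the check order matters; testing from the most restrictive class down is one reasonable convention, not a rule taken from the table.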

| Category | Subcategory | Advantages | Drawbacks |
|---|---|---|---|
| fixed wing | | long range and endurance, large load, fast flight speed | high requirements for take-off and landing, poor mobility, no hovering capability |
| rotary wing | unmanned helicopter | long range, large load, hovering capability, low requirements for take-off and landing | slow speed, difficult to operate, high maintenance cost |
| | multi-rotor UAV | small size, flexible, hovering capability, almost no requirements for take-off and landing | short range and endurance, slow speed, small load |
| flapping wing | | flexible, small size | slow speed, single drive mode |
| hybrid | | flexible vertical take-off and landing, fast speed, long range | complex structure, high maintenance cost |

| Sensors | Major Characteristics | Typical Applications |
|---|---|---|
| RGB imaging | captures images in the visible spectrum; high resolution, lightweight, low cost, easy to use | crop recognition, plant defect and greenness monitoring [8,49,50,51] |
| Multispectral imager | RS data at centimeter-level spatial resolution with multiple bands from visible to near infrared | leaf area index (LAI) estimation [52], crop disease and weed monitoring and mapping [18,53], nutrient deficiency diagnosis [54] |
| Hyperspectral imager | provides a large number of contiguous narrow wavebands covering the ultraviolet to longwave infrared spectrum | classification of crop species with similar spectral features [55], soil moisture content monitoring [56], crop yield estimation [57] |
| Thermal sensors | use radiation emitted in the thermal infrared range of the electromagnetic spectrum [58] to measure energy fluxes and temperatures of the earth’s surface [59] | monitoring of water stress, crop diseases and plant phenotyping; crop yield estimation [2]; decision support for irrigation scheduling and harvesting operations [60] |
| LiDAR | uses a laser as the radiation source, generally operating at wavelengths from the infrared to the ultraviolet; advantages include narrow beams, a wide speed measurement range, and strong resistance to electromagnetic and clutter interference [61] | individual crop detection [13], canopy structure and height measurement [62], biomass and leaf nitrogen content prediction [63] |
| SAR | provides high-resolution, multi-polarization, multi-frequency images in all weather conditions, day and night | crop identification and land cover mapping [64], extraction of crop and cropland parameters such as soil salinity and moisture [65], crop yield estimation [66] |
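
Multispectral bands spanning the visible to near infrared are often reduced to per-pixel normalized-difference indices before monitoring or diagnosis. As an illustration only, the NumPy sketch below computes NDVI = (NIR - Red) / (NIR + Red) and GNDVI = (NIR - Green) / (NIR + Green), the latter being the index named in the Basso et al. spraying reference above. It assumes co-registered, radiometrically calibrated reflectance arrays for each band; the function names and sample values are hypothetical.

```python
import numpy as np


def normalized_difference(a, b, eps=1e-9):
    """Per-pixel (a - b) / (a + b); pixels where a + b is ~0 are set to 0."""
    a = np.asarray(a, dtype=np.float64)
    b = np.asarray(b, dtype=np.float64)
    denom = a + b
    valid = np.abs(denom) > eps
    # Substitute 1 for near-zero denominators so the division never warns,
    # then overwrite those pixels with 0.
    safe = np.where(valid, denom, 1.0)
    return np.where(valid, (a - b) / safe, 0.0)


def ndvi(nir, red):
    """Normalized Difference Vegetation Index."""
    return normalized_difference(nir, red)


def gndvi(nir, green):
    """Green NDVI: the red band is replaced by the green band."""
    return normalized_difference(nir, green)


# Hypothetical 2 x 2 reflectance tiles for three bands.
nir = np.array([[0.50, 0.45], [0.05, 0.0]])
red = np.array([[0.10, 0.08], [0.04, 0.0]])
green = np.array([[0.30, 0.12], [0.06, 0.0]])
print(np.round(ndvi(nir, red), 3))
print(np.round(gndvi(nir, green), 3))
```

Both indices share one helper because they differ only in which visible band is paired with NIR; the zero-denominator guard matters for masked or no-data pixels.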

| Area | Task | Specific Application | Type | Model | Reference |
|---|---|---|---|---|---|
| Crop pest and disease detection | Classification | Soybean leaf disease recognition | CNN | Inception, VGG19, Xception, ResNet-50 | [86] |
| | | Classification of fusarium wilt of radish | CNN | VGG-A | [113] |
| | | Detection of helminthosporium leaf blotch disease | CNN | Customized CNN | [114] |
| | Object detection | Detection of pine wilt disease | CNN | YOLOv4 | [120] |
| | | Identification of fruit tree pests | CNN | YOLOv3-tiny | [119] |
| | | Recognition of spraying areas | CNN | Customized CNN | [136] |
| | Semantic segmentation | Quantitative phenotyping of northern leaf blight | CNN | Mask R-CNN | [127] |
| | | Vine disease detection | CNN | VddNet | [128] |
| | | Field weed density evaluation | CNN | Modified U-Net | [129] |
| Weed detection and mapping | Classification | Mapping of weed species in winter wheat crops | CNN | Modified ResNet18 | [115] |
| | | Weed detection in line crops | CNN | ResNet18 | [27] |
| | | Mid- to late-season weed detection | CNN | MobileNetV2 | [122] |
| | | Weed classification | CNN | ResNet50 | [116] |
| | | Detection of Rumex obtusifolius weed plants | CNN | AlexNet | [121] |
| | Object detection | Mid- to late-season weed detection | CNN | SSD, Faster R-CNN | [122] |
| | Semantic segmentation | Large-scale semantic weed mapping | CNN | Modified SegNet | [78] |
| | | Weed mapping | CNN | FCN | [76] |
| | | Real-time weed mapping | CNN | FCN | [137] |
| | | Identification and grading of maize drought | CNN | Modified U-Net | [138] |
| Crop growth monitoring and crop yield estimation | Classification | Yield assessment of paddy fields | CNN | Inception | [139] |
| | | Rice grain yield estimation at the ripening stage | CNN | AlexNet | [16] |
| | | Identification of citrus trees | CNN | Customized CNN | [134] |
| | | Plant counting and plantation-row detection | CNN | VGG19 | [140] |
| | | Counting and geolocation of citrus trees | CNN | VGG16 | [141] |
| | Object detection | Strawberry yield prediction | CNN | Faster R-CNN | [124] |
| | | Yield estimation of citrus fruits | CNN, RNN | Faster R-CNN | [123] |
| | | Growth status observation of oil palm trees | CNN | Faster R-CNN | [142] |
| | | Plant identification and counting | CNN | Faster R-CNN, YOLO | [143] |
| | | Crop detection for early-season maize stand count | CNN | Mask Scoring R-CNN | [144] |
| | Semantic segmentation | Prediction of single boll weight of cotton | CNN | FCN | [132] |
| | | Segmentation of purple rapeseed leaves for nitrogen stress detection | CNN | U-Net | [131] |
| | | Counting of in situ rice seedlings | CNN | Modified VGG16 + customized segmentation network | [145] |
| Crop type classification | Classification | Automatic classification of trees | CNN | GoogLeNet | [117] |
| | | Identification of heterogeneous crops | CRF | SCRF | [118] |
| | | Rice seedling detection | CNN | VGG16 | [146] |
| | Object detection | Augmenting bale detection | CNN, GAN | CycleGAN, Modified YOLOv3 | [147] |
| | | Phenotyping in citrus | CNN | YOLOv3 | [148] |
| | | Detection of banana plants | CNN | Customized CNN | [149] |
| | | Automatic tobacco plant detection | CNN | Customized CNN | [150] |
| | | Detection of maize tassels | CNN | Tassel region proposals based on morphological processing + VGG16 | [151] |
| | | Detection of maize tassels | CNN | Faster R-CNN | [152] |
| | | Frost management in apple orchards | CNN | YOLOv4 | [153] |
| | Semantic segmentation | Soil and crop segmentation | CNN | Customized CNN | [154] |
| | | Vegetable mapping | RNN | Attention-based RNN | [155] |
| | | Crop classification | CNN | SegNet | [156] |
| | | Semantic segmentation of citrus orchards | CNN | FCN, U-Net, SegNet, DDCN, DeepLabV3+ | [130] |
| | | UAV scouting for rice lodging assessment | CNN | EDANet | [157] |
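
The semantic segmentation entries in the table above output a class label for every pixel. For site-specific weed management, such a mask can be aggregated into a coarser map, for example the share of weed pixels in each grid cell. The sketch below shows one such aggregation under assumed conventions: an integer class mask, a hypothetical label scheme (0 = soil, 1 = weed, 2 = crop), and square cells measured in pixels. It is not the method of any cited work, and `coverage_grid` is an illustrative name.

```python
import numpy as np


def coverage_grid(mask, cell, label=1):
    """Fraction of pixels equal to `label` in each cell x cell block of a 2-D class mask.

    Blocks on the right and bottom edges may be smaller than `cell`.
    """
    mask = np.asarray(mask)
    if mask.ndim != 2 or cell < 1:
        raise ValueError("mask must be 2-D and cell must be >= 1")
    h, w = mask.shape
    rows, cols = -(-h // cell), -(-w // cell)  # ceiling division
    grid = np.zeros((rows, cols), dtype=np.float64)
    for i in range(rows):
        for j in range(cols):
            block = mask[i * cell:(i + 1) * cell, j * cell:(j + 1) * cell]
            grid[i, j] = np.mean(block == label)
    return grid


# Hypothetical segmentation output using the label scheme above.
mask = np.array([
    [1, 1, 2, 2],
    [1, 0, 2, 0],
    [0, 0, 0, 1],
    [0, 2, 0, 0],
])
weed_map = coverage_grid(mask, cell=2, label=1)
print(weed_map)
```

Expressing the cell size as a ground distance would additionally require the ground sampling distance of the flight, which this sketch does not model.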

| Source | Dataset | Platform | Data Types | Applications | Dataset Links |
|---|---|---|---|---|---|