Shallow-Water Benthic Habitat Mapping Using Drone with Object Based Image Analyses

Abstract

1. Introduction

2. Materials and Methods

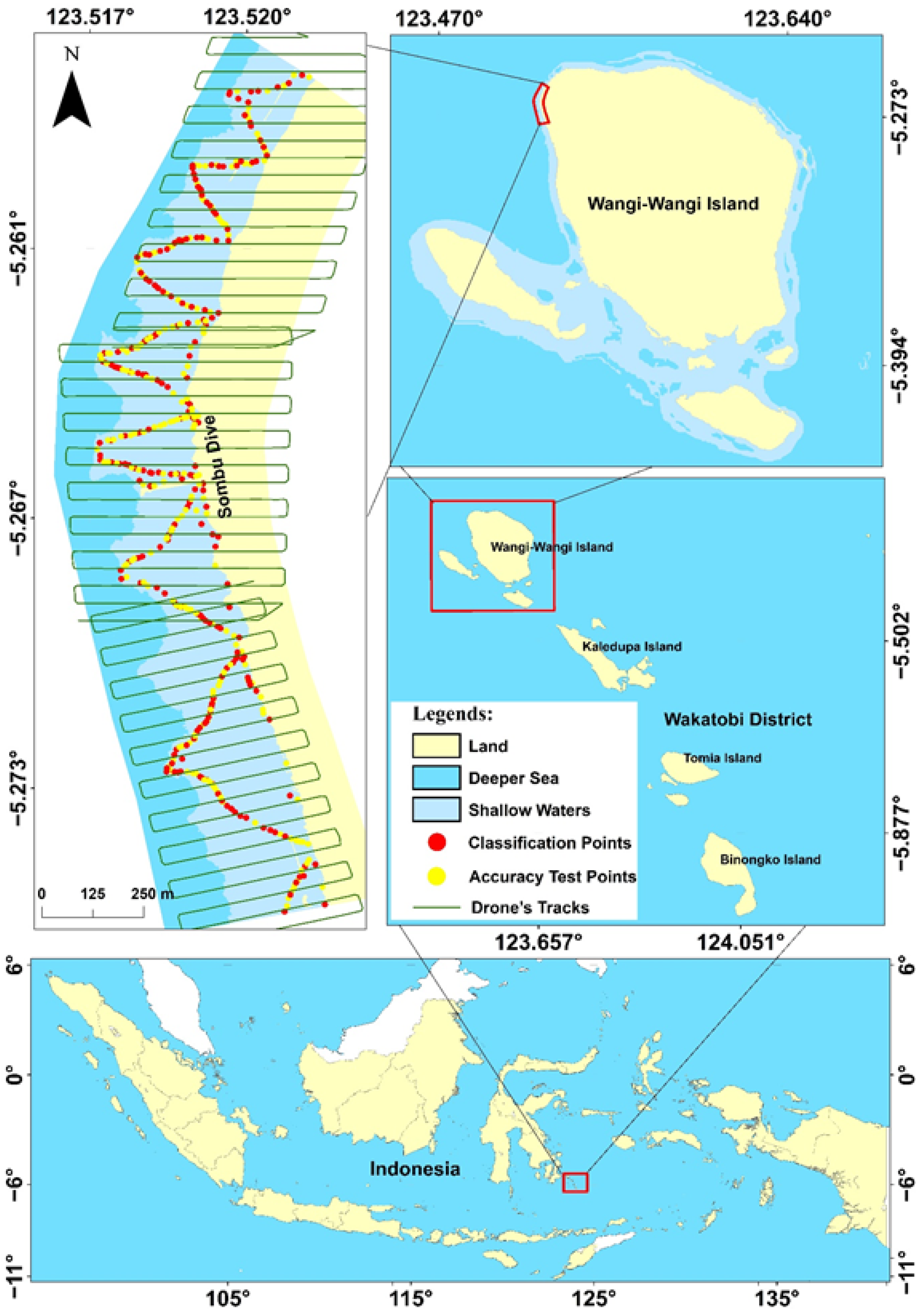

2.1. Study Area

2.2. Tools and Materials

2.3. Benthic Habitat Data Collection

2.4. Drone Image Acquisition

2.5. Orthophoto Digital Processes

2.6. Geometric Correction

2.7. Image Classification

2.8. Benthic Habitat Classification Scheme

2.9. Accuracy Test

3. Results and Discussion

3.1. Digital Orthophoto

3.2. Image Classification Level 1 (Reef Level)

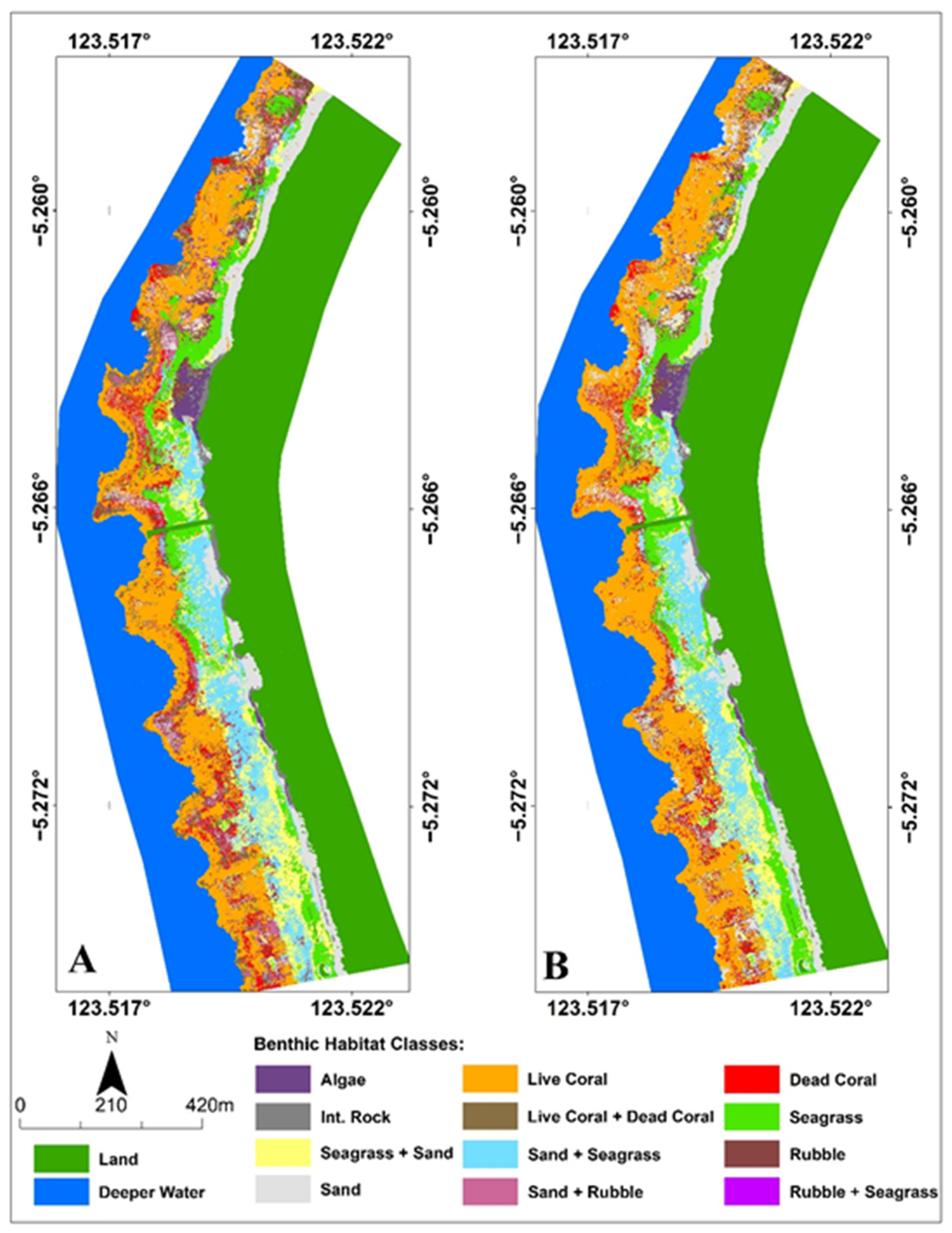

3.3. Image Classification Level 2 (Benthic Habitat Classification)

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Aircraft | |
|---|---|
| Weight | 1280 g |
| Max. speed | 16 m/s (ATTI mode, no wind) |
| Max. service ceiling above sea level | 6000 m (default altitude limit: 120 m above takeoff point) |
| Max. flight time | Approximately 23 min |
| GPS mode | GPS/GLONASS |
| Camera | |
| Sensor | Sony EXMOR 1/2.3”; effective pixels: 12.4 M (total pixels: 12.76 M); sensor width 6.16 mm; sensor height 4.62 mm |
| Lens | FOV 94°, 20 mm (35 mm format equivalent), f/2.8 (focal length 3.61 mm) |
| Image max. size (width × height) | 4000 × 3000 pixels |
| Pixel size | 1.56 × 1.56 µm |
| Supported file formats | |
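The camera parameters above determine the ground sampling distance (GSD), i.e. the ground size of one image pixel: GSD = sensor width × flying height / (focal length × image width in pixels). The following is a minimal Python sketch, assuming a nadir-looking camera over a flat surface. The flying heights in the example loop are hypothetical, since this section does not state the survey altitude. The sketch also does not model refraction at the water surface or seabed relief.

```python
from typing import Tuple

# Camera values taken from the specification table.
SENSOR_WIDTH_MM = 6.16
SENSOR_HEIGHT_MM = 4.62
FOCAL_LENGTH_MM = 3.61
IMAGE_WIDTH_PX = 4000


def gsd_m(altitude_m: float) -> float:
    """Ground size of one pixel (metres) at the given height above the surface."""
    return (SENSOR_WIDTH_MM * altitude_m) / (FOCAL_LENGTH_MM * IMAGE_WIDTH_PX)


def footprint_m(altitude_m: float) -> Tuple[float, float]:
    """Ground width and height (metres) covered by a single nadir image."""
    scale = altitude_m / FOCAL_LENGTH_MM
    return SENSOR_WIDTH_MM * scale, SENSOR_HEIGHT_MM * scale


if __name__ == "__main__":
    for h in (50.0, 100.0, 120.0):  # hypothetical flying heights in metres
        w, d = footprint_m(h)
        print(f"{h:5.0f} m: GSD {gsd_m(h) * 100:.2f} cm/px, "
              f"footprint {w:.1f} m x {d:.1f} m")
```

Deriving the pixel pitch from sensor width / image width gives 6.16 mm / 4000 = 1.54 µm, slightly smaller than the 1.56 µm listed in the table. Using the listed pixel size instead would shift the GSD by roughly 1%.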

| Benthic habitat | Description | Code |
|---|---|---|
| algae | algae dominant | A |
| intertidal rock | intertidal rock dominant | IR |
| live coral | live coral dominant | LC |
| live coral + dead coral * | live coral dominant, mixed with dead coral | LCDC |
| dead coral | dead coral dominant | DC |
| seagrass | seagrass dominant | S |
| seagrass + sand | seagrass dominant, mixed with sand | SSd |
| sand | sand dominant | Sd |
| sand + seagrass | sand dominant, mixed with seagrass | SdS |
| sand + rubble ** | sand dominant, mixed with rubble | SdR |
| rubble | rubble dominant | R |
| rubble + seagrass *** | rubble dominant, mixed with seagrass | RS |
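The habitat codes in the scheme are compositional. The dominant component comes first, and any mixed-in component follows (e.g. SdR = sand dominant, mixed with rubble). Below is a small Python sketch of that naming convention. It splits a code into its base components with a longest-match rule. The function names `split_code` and `describe` are illustrative only and are not part of the authors' workflow.

```python
from typing import List, Tuple

# Single-component codes from the classification scheme table.
BASE_CLASSES = {
    "A": "algae",
    "IR": "intertidal rock",
    "LC": "live coral",
    "DC": "dead coral",
    "S": "seagrass",
    "Sd": "sand",
    "R": "rubble",
}


def split_code(code: str) -> List[str]:
    """Split a habitat code into base components, trying two-character codes first."""
    parts: List[str] = []
    i = 0
    while i < len(code):
        for size in (2, 1):  # base codes are one or two characters long
            token = code[i:i + size]
            if len(token) == size and token in BASE_CLASSES:
                parts.append(token)
                i += size
                break
        else:  # no base code matched at position i
            raise ValueError(f"unrecognised habitat code: {code!r}")
    return parts


def describe(code: str) -> Tuple[str, List[str]]:
    """Return the dominant component and the list of mixed-in components."""
    dominant, *mixed = [BASE_CLASSES[p] for p in split_code(code)]
    return dominant, mixed


if __name__ == "__main__":
    for c in ("LCDC", "SSd", "SdS", "SdR", "RS"):
        print(c, describe(c))
```

Trying two-character codes first matters because "S" (seagrass) is a prefix of "Sd" (sand). With this order, "SSd" resolves to seagrass + sand, while "SdS" resolves to sand + seagrass.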

| Sat. (rows) / Field (columns) | A | IR | LC | LCDC | DC | S | SSd | Sd | SdS | SdR | R | RS | Total | UA (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| A | 7 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 7 | 100 |
| IR | 1 | 13 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 14 | 93 |
| LC | 0 | 0 | 43 | 4 | 2 | 1 | 0 | 0 | 0 | 0 | 2 | 2 | 54 | 80 |
| LCDC | 0 | 0 | 1 | 7 | 2 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 11 | 64 |
| DC | 0 | 0 | 0 | 1 | 9 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 11 | 82 |
| S | 0 | 0 | 1 | 0 | 1 | 24 | 0 | 0 | 0 | 0 | 1 | 1 | 28 | 86 |
| SSd | 0 | 0 | 0 | 1 | 0 | 3 | 12 | 0 | 1 | 0 | 0 | 0 | 17 | 71 |
| Sd | 0 | 0 | 0 | 1 | 1 | 0 | 0 | 21 | 1 | 2 | 0 | 0 | 26 | 81 |
| SdS | 0 | 0 | 0 | 0 | 2 | 0 | 2 | 0 | 12 | 1 | 2 | 0 | 19 | 63 |
| SdR | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 2 | 0 | 9 | 2 | 1 | 16 | 56 |
| R | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 1 | 10 | 0 | 12 | 83 |
| RS | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 1 | 2 | 50 |
| Total | 8 | 13 | 46 | 14 | 18 | 29 | 14 | 25 | 14 | 13 | 18 | 5 | 217 | |
| PA (%) | 88 | 100 | 93 | 50 | 50 | 83 | 86 | 84 | 86 | 69 | 56 | 20 | OA (%) | 77.4 |

| Sat. (rows) / Field (columns) | A | IR | LC | DC | S | SSd | Sd | SdS | R | Total | UA (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| A | 7 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 7 | 100 |
| IR | 1 | 13 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 14 | 93 |
| LC | 0 | 0 | 55 | 4 | 1 | 0 | 0 | 0 | 5 | 65 | 85 |
| DC | 0 | 0 | 1 | 9 | 0 | 0 | 1 | 0 | 0 | 11 | 82 |
| S | 0 | 0 | 1 | 1 | 24 | 0 | 0 | 0 | 2 | 28 | 86 |
| SSd | 0 | 0 | 1 | 0 | 3 | 12 | 0 | 1 | 0 | 17 | 71 |
| Sd | 0 | 0 | 2 | 2 | 0 | 0 | 33 | 1 | 3 | 41 | 80 |
| SdS | 0 | 0 | 0 | 2 | 0 | 2 | 1 | 12 | 2 | 19 | 63 |
| R | 0 | 0 | 0 | 0 | 1 | 0 | 3 | 0 | 11 | 15 | 73 |
| Total | 8 | 13 | 60 | 18 | 29 | 14 | 38 | 14 | 23 | 217 | |
| PA (%) | 88 | 100 | 92 | 50 | 83 | 86 | 87 | 86 | 48 | OA (%) | 81.1 |
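The accuracy figures in the two confusion matrices above follow the usual definitions. User's accuracy (UA) is the diagonal count divided by the row total. Producer's accuracy (PA) is the diagonal count divided by the column total. Overall accuracy (OA) is the sum of the diagonal divided by all 217 samples. The tabulated UA values match the row-based definition, so rows are taken here as the classified map and columns as the field reference. The minimal Python sketch below recomputes the metrics from the tabulated counts as a consistency check. The variable names are illustrative.

```python
from typing import List, Tuple

Matrix = List[List[int]]

# Rows: classified (map) class; columns: field reference class.
LABELS_12 = ["A", "IR", "LC", "LCDC", "DC", "S", "SSd", "Sd", "SdS", "SdR", "R", "RS"]
COUNTS_12: Matrix = [
    [7, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0],
    [1, 13, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0],
    [0, 0, 43, 4, 2, 1, 0, 0, 0, 0, 2, 2],
    [0, 0, 1, 7, 2, 0, 0, 0, 0, 0, 1, 0],
    [0, 0, 0, 1, 9, 0, 0, 1, 0, 0, 0, 0],
    [0, 0, 1, 0, 1, 24, 0, 0, 0, 0, 1, 1],
    [0, 0, 0, 1, 0, 3, 12, 0, 1, 0, 0, 0],
    [0, 0, 0, 1, 1, 0, 0, 21, 1, 2, 0, 0],
    [0, 0, 0, 0, 2, 0, 2, 0, 12, 1, 2, 0],
    [0, 0, 1, 0, 1, 0, 0, 2, 0, 9, 2, 1],
    [0, 0, 0, 0, 0, 0, 0, 1, 0, 1, 10, 0],
    [0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1],
]
LABELS_9 = ["A", "IR", "LC", "DC", "S", "SSd", "Sd", "SdS", "R"]
COUNTS_9: Matrix = [
    [7, 0, 0, 0, 0, 0, 0, 0, 0],
    [1, 13, 0, 0, 0, 0, 0, 0, 0],
    [0, 0, 55, 4, 1, 0, 0, 0, 5],
    [0, 0, 1, 9, 0, 0, 1, 0, 0],
    [0, 0, 1, 1, 24, 0, 0, 0, 2],
    [0, 0, 1, 0, 3, 12, 0, 1, 0],
    [0, 0, 2, 2, 0, 0, 33, 1, 3],
    [0, 0, 0, 2, 0, 2, 1, 12, 2],
    [0, 0, 0, 0, 1, 0, 3, 0, 11],
]


def accuracy(matrix: Matrix) -> Tuple[List[float], List[float], float]:
    """Return per-class UA (%), per-class PA (%) and overall accuracy (%)."""
    n = len(matrix)
    diag = [matrix[i][i] for i in range(n)]
    row_totals = [sum(row) for row in matrix]                              # map totals
    col_totals = [sum(matrix[i][j] for i in range(n)) for j in range(n)]  # field totals
    ua = [100.0 * d / t if t else float("nan") for d, t in zip(diag, row_totals)]
    pa = [100.0 * d / t if t else float("nan") for d, t in zip(diag, col_totals)]
    oa = 100.0 * sum(diag) / sum(row_totals)
    return ua, pa, oa


if __name__ == "__main__":
    for labels, counts in ((LABELS_12, COUNTS_12), (LABELS_9, COUNTS_9)):
        ua, pa, oa = accuracy(counts)
        print(f"{len(labels)} classes: OA = {oa:.1f}%")
        for label, u, p in zip(labels, ua, pa):
            print(f"  {label:5s} UA {u:5.1f}%  PA {p:5.1f}%")
```

Rounded to whole percentages, the recomputed UA and PA values agree with the tables. The OA values round to 77.4% for the 12-class matrix and 81.1% for the 9-class matrix.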

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Nababan, B.; Mastu, L.O.K.; Idris, N.H.; Panjaitan, J.P. Shallow-Water Benthic Habitat Mapping Using Drone with Object Based Image Analyses. Remote Sens. 2021, 13, 4452. https://doi.org/10.3390/rs13214452
