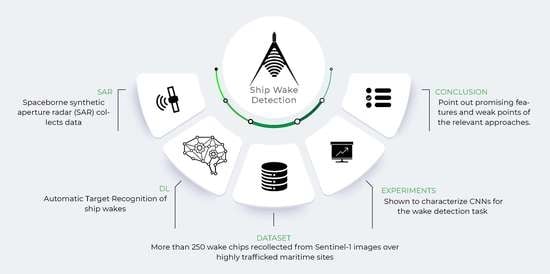

First Results on Wake Detection in SAR Images by Deep Learning

Abstract

1. Introduction

2. Selected Object Detectors

2.1. Two-Stage Detectors

2.1.1. Faster R-CNN

2.1.2. Mask R-CNN

2.1.3. Cascade Mask R-CNN

2.2. One-Stage Detectors

2.3. Backbone Network

3. Wake Imaging Mechanism

4. Dataset Description

5. Setup and Metrics

5.1. Setup

5.2. Evaluation Metrics

6. Results

6.1. Results on SSWD

6.2. Results on a Sentinel-1 Product

6.3. Results on X-Band Products

7. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References


| Parameter | Value |
|---|---|
| BATCH_SIZE_PER_IMAGE | 256 |
| BBOX_REG_LOSS_TYPE | smooth_l1 |
| BBOX_REG_LOSS_WEIGHT | 1.0 |
| BBOX_REG_WEIGHTS | (1.0, 1.0, 1.0, 1.0) |
| BOUNDARY_THRESH | -1 |
| HEAD_NAME | StandardRPNHead |
| IN_FEATURES | ['res4'] |
| IOU_LABELS | [0, -1, 1] |
| IOU_THRESHOLDS | [0.3, 0.7] |
| LOSS_WEIGHT | 1.0 |
| NMS_THRESH | 0.7 |
| POSITIVE_FRACTION | 0.5 |
| POST_NMS_TOPK_TEST | 1000 |
| POST_NMS_TOPK_TRAIN | 2000 |
| PRE_NMS_TOPK_TEST | 6000 |
| PRE_NMS_TOPK_TRAIN | 12000 |
| SMOOTH_L1_BETA | 0.0 |
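To make the `IOU_THRESHOLDS`, `IOU_LABELS`, `BATCH_SIZE_PER_IMAGE` and `POSITIVE_FRACTION` entries concrete, here is a minimal plain-Python sketch of how such settings are typically used to label and sample anchors. It follows the interval convention of Detectron2's `Matcher` (IoU below 0.3 is background, from 0.3 up to 0.7 is ignored, 0.7 and above is foreground) but it is not the library code: the helpers `iou`, `label_anchor` and `subsample` are hypothetical, boxes are assumed to be `(x1, y1, x2, y2)` tuples, and the low-quality-match rule that Detectron2's RPN also enables (promoting the best-overlapping anchor of each ground-truth box) is deliberately left out.

```python
import math
import random

# Settings copied from the RPN table above.
IOU_THRESHOLDS = [0.3, 0.7]
IOU_LABELS = [0, -1, 1]  # background, ignored, foreground
BATCH_SIZE_PER_IMAGE = 256
POSITIVE_FRACTION = 0.5


def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def label_anchor(max_iou, thresholds=IOU_THRESHOLDS, labels=IOU_LABELS):
    """Return the label of the half-open interval [low, high) containing max_iou."""
    bounds = [-math.inf] + list(thresholds) + [math.inf]
    for low, high, label in zip(bounds[:-1], bounds[1:], labels):
        if low <= max_iou < high:
            return label
    raise ValueError(f"cannot label IoU {max_iou}")


def subsample(labels, batch_size=BATCH_SIZE_PER_IMAGE,
              positive_fraction=POSITIVE_FRACTION, seed=0):
    """Keep at most batch_size * positive_fraction positives; negatives fill the rest."""
    rng = random.Random(seed)
    pos = [i for i, lab in enumerate(labels) if lab == 1]
    neg = [i for i, lab in enumerate(labels) if lab == 0]
    num_pos = min(len(pos), int(batch_size * positive_fraction))
    num_neg = min(len(neg), batch_size - num_pos)
    # Ignored anchors (label -1) are never sampled, so they never enter the loss.
    return rng.sample(pos, num_pos), rng.sample(neg, num_neg)


gt_box = (10, 10, 50, 50)
anchors = [(10, 10, 50, 50), (20, 10, 60, 50), (100, 100, 140, 140)]
labels = [label_anchor(iou(a, gt_box)) for a in anchors]
print(labels)  # [1, -1, 0]
print(subsample(labels))  # ([0], [2])
```

With only a handful of positive anchors, as is common for a thin wake in a large scene, the negatives make up the rest of the 256-anchor batch, so the 0.5 positive fraction acts as an upper bound rather than a guaranteed balance.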

| Parameter | Value |
|---|---|
| BBOX_REG_LOSS_TYPE | smooth_l1 |
| BBOX_REG_WEIGHTS | (1.0, 1.0, 1.0, 1.0) |
| FOCAL_LOSS_ALPHA | 0.25 |
| FOCAL_LOSS_GAMMA | 2.0 |
| IN_FEATURES | ['p3', 'p4', 'p5', 'p6', 'p7'] |
| IOU_LABELS | [0, -1, 1] |
| IOU_THRESHOLDS | [0.4, 0.5] |
| NMS_THRESH_TEST | 0.5 |
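The `FOCAL_LOSS_ALPHA` and `FOCAL_LOSS_GAMMA` values parameterize the focal loss of Lin et al., FL(p_t) = -α_t (1 - p_t)^γ log(p_t), which down-weights anchors that are already classified confidently. A minimal per-anchor sketch in plain Python, assuming a single logit and a binary target; library implementations work on whole tensors, so `sigmoid_focal_loss` here is an illustrative helper, not a library function.

```python
import math

# Settings copied from the RetinaNet table above.
FOCAL_LOSS_ALPHA = 0.25
FOCAL_LOSS_GAMMA = 2.0


def _sigmoid(x):
    """Logistic function, written to avoid overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def sigmoid_focal_loss(logit, target, alpha=FOCAL_LOSS_ALPHA, gamma=FOCAL_LOSS_GAMMA):
    """Focal loss for one anchor and one class; target is 0 or 1."""
    p = _sigmoid(logit)
    # Numerically stable binary cross-entropy computed directly from the logit.
    ce = max(logit, 0.0) - logit * target + math.log1p(math.exp(-abs(logit)))
    p_t = p * target + (1.0 - p) * (1.0 - target)
    alpha_t = alpha * target + (1.0 - alpha) * (1.0 - target)
    # (1 - p_t) ** gamma shrinks the loss of confidently correct predictions.
    return alpha_t * (1.0 - p_t) ** gamma * ce


for logit in (0.0, 2.0, 4.0):
    ce = math.log1p(math.exp(-logit))  # plain cross-entropy for a positive anchor
    fl = sigmoid_focal_loss(logit, 1)
    print(f"logit={logit}: CE={ce:.4f}, FL={fl:.6f}")
```

Setting `gamma=0` and `alpha=0.5` reduces the expression to half the ordinary cross-entropy, which is a quick way to check that the two terms behave as intended.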

| Model | B1 | B2 | UT | | | |
|---|---|---|---|---|---|---|
| Faster R-CNN | ResNet50 | C4 | ✕ | 61.21 | 95.01 | 64.26 |
| | | DC5 | ✕ | 60.92 | 96.92 | 69.80 |
| | | DC5 | ✓ | 59.97 | 96.19 | 71.01 |
| | | FPN | ✕ | 61.50 | 98.62 | 63.44 |
| | ResNet101 | C4 | ✕ | 62.39 | 98.51 | 64.88 |
| | | DC5 | ✕ | 61.50 | 94.28 | 66.30 |
| | | FPN | ✕ | 63.91 | 95.07 | 75.75 |
| RetinaNet | ResNet50 | FPN | ✓ | 60.54 | 94.98 | 69.59 |
| | | FPN | ✕ | 62.24 | 96.59 | 69.48 |
| | ResNet101 | FPN | ✕ | 65.27 | 97.99 | 71.41 |
| Mask R-CNN | ResNet50 | C4 | ✓ | 61.17 | 94.09 | 61.26 |
| | | C4 | ✕ | 60.88 | 94.59 | 71.35 |
| | | DC5 | ✓ | 61.10 | 96.64 | 63.29 |
| | | DC5 | ✕ | 61.89 | 96.90 | 66.49 |
| | | FPN | ✓ | 60.51 | 94.98 | 69.59 |
| | | FPN | ✕ | 60.95 | 94.42 | 65.03 |
| | ResNet101 | C4 | ✕ | 65.91 | 96.24 | 78.34 |
| | | DC5 | ✕ | 65.68 | 96.66 | 64.60 |
| | | FPN | ✕ | 65.49 | 99.06 | 81.18 |
| Cascade Mask R-CNN | ResNet50 | FPN | ✓ | 64.07 | 95.94 | 70.26 |
| | | FPN | ✕ | 67.63 | 95.99 | 73.15 |

| Date | Image ID (1) | Image ID (2) | Wind at Genoa (speed, direction) | Wind at La Spezia (speed, direction) |
|---|---|---|---|---|
| 6 July 2020 | S1A_IW_GRDH_1SDV_20200706T171436_20200706T171501_033337_03DCC6_1122 | S1A_IW_GRDH_1SDV_20200706T171411_20200706T171436_033337_03DCC6_3302 | 2 m/s, 204°N | 5 m/s, 134°N |
| 18 July 2020 | S1A_IW_GRDH_1SDV_20200718T171436_20200718T171501_033512_03E220_DEFC | S1A_IW_GRDH_1SDV_20200718T171411_20200718T171436_033512_03E220_1765 | 2.2 m/s, 259°N | 2.9 m/s, 149°N |
| 23 August 2020 | S1A_IW_GRDH_1SDV_20200823T171414_20200823T171439_034037_03F36B_5DA2 | S1A_IW_GRDH_1SDV_20200823T171439_20200823T171504_034037_03F36B_447E | 2 m/s, 188°N | 2.5 m/s, 150°N |
| 29 August 2020 | S1B_IW_GRDH_1SDV_20200829T171409_20200829T171434_023141_02BF02_2FD4 | S1B_IW_GRDH_1SDV_20200829T171344_20200829T171409_023141_02BF02_36A0 | 1.1 m/s, 194°N | 9.4 m/s, 210°N |
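The image IDs in the table follow the Sentinel-1 product naming pattern: mission, beam mode, product type plus resolution class, processing level/class/polarization, start and stop times, absolute orbit, data-take ID and a product identifier. A small parser makes those fields explicit. The regular expression is a sketch written against the IDs listed here, not an exhaustive validator, and the relative-orbit conversion uses the commonly quoted offsets of 73 (S1A) and 27 (S1B) over a 175-orbit repeat cycle; treat both as assumptions, along with the hypothetical helper names `parse_s1_name` and `relative_orbit`.

```python
import re
from datetime import datetime

# MMM_BB_TTTR_LFPP_<start>_<stop>_<absolute orbit>_<data take>_<product id>
S1_NAME = re.compile(
    r"^(?P<mission>S1[AB])_(?P<mode>[A-Z0-9]{2})_"
    r"(?P<product>[A-Z]{3})(?P<resolution>[FHM_])_"
    r"(?P<level>\d)(?P<product_class>[SA])(?P<polarisation>[A-Z]{2})_"
    r"(?P<start>\d{8}T\d{6})_(?P<stop>\d{8}T\d{6})_"
    r"(?P<abs_orbit>\d{6})_(?P<datatake>[0-9A-F]{6})_(?P<uid>[0-9A-F]{4})$"
)
TIME_FMT = "%Y%m%dT%H%M%S"


def relative_orbit(mission, abs_orbit):
    """Relative orbit in the 175-orbit repeat cycle (offsets are an assumption)."""
    offset = 73 if mission == "S1A" else 27
    return (abs_orbit - offset) % 175 + 1


def parse_s1_name(name):
    """Split a Sentinel-1 product name into its fields."""
    m = S1_NAME.match(name)
    if m is None:
        raise ValueError(f"not a Sentinel-1 product name: {name}")
    info = m.groupdict()
    info["start"] = datetime.strptime(info["start"], TIME_FMT)
    info["stop"] = datetime.strptime(info["stop"], TIME_FMT)
    info["abs_orbit"] = int(info["abs_orbit"])
    info["rel_orbit"] = relative_orbit(info["mission"], info["abs_orbit"])
    return info


info = parse_s1_name(
    "S1A_IW_GRDH_1SDV_20200706T171436_20200706T171501_033337_03DCC6_1122")
print(info["mode"], info["product"], info["polarisation"],
      info["abs_orbit"], info["rel_orbit"])  # IW GRD DV 33337 15
print(info["stop"] - info["start"])  # 0:00:25
```

Applied to all eight IDs, the conversion returns the same relative orbit for both satellites, which is consistent with the near-identical acquisition times (about 17:14 UTC) on every date.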

| Parameter | CSK Image | TSX Image |
|---|---|---|
| Acquisition Date | 30 June 2008 | 9 June 2011 |
| Acquisition Mode | Stripmap | Stripmap |
| Product Type | SLC | SLC |
| Incidence Angle (local) | ~24° | ~28° |
| Polarization | HH | VV |
| Resolution (slant range × azimuth) | 3 m × 3 m | 1.2 m × 6.6 m |
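The range resolutions above are stated in slant range. Under a flat-earth approximation, the ground-range resolution is the slant-range resolution divided by the sine of the incidence angle, which shows how the two X-band products compare on the sea surface. A minimal sketch using the approximate local incidence angles from the table; the helper name `ground_range_resolution` is hypothetical and Earth curvature is ignored.

```python
import math


def ground_range_resolution(slant_range_res_m, incidence_deg):
    """Project a slant-range resolution onto a flat sea surface."""
    return slant_range_res_m / math.sin(math.radians(incidence_deg))


# (slant-range resolution [m], azimuth resolution [m], local incidence angle [deg])
products = {"CSK": (3.0, 3.0, 24.0), "TSX": (1.2, 6.6, 28.0)}
for name, (rg, az, theta) in products.items():
    gr = ground_range_resolution(rg, theta)
    print(f"{name}: ground range {gr:.2f} m x azimuth {az:.1f} m")
```

Azimuth resolution is unaffected by this projection, so the ground cells come out at roughly 7.4 m × 3 m for CSK and 2.6 m × 6.6 m for TSX.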


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Del Prete, R.; Graziano, M.D.; Renga, A. First Results on Wake Detection in SAR Images by Deep Learning. Remote Sens. 2021, 13, 4573. https://doi.org/10.3390/rs13224573
