Detection and Classification of Rice Infestation with Rice Leaf Folder (Cnaphalocrocis medinalis) Using Hyperspectral Imaging Techniques

Abstract

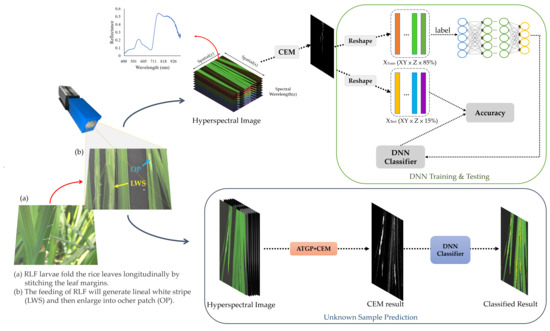

1. Introduction

2. Materials and Methods

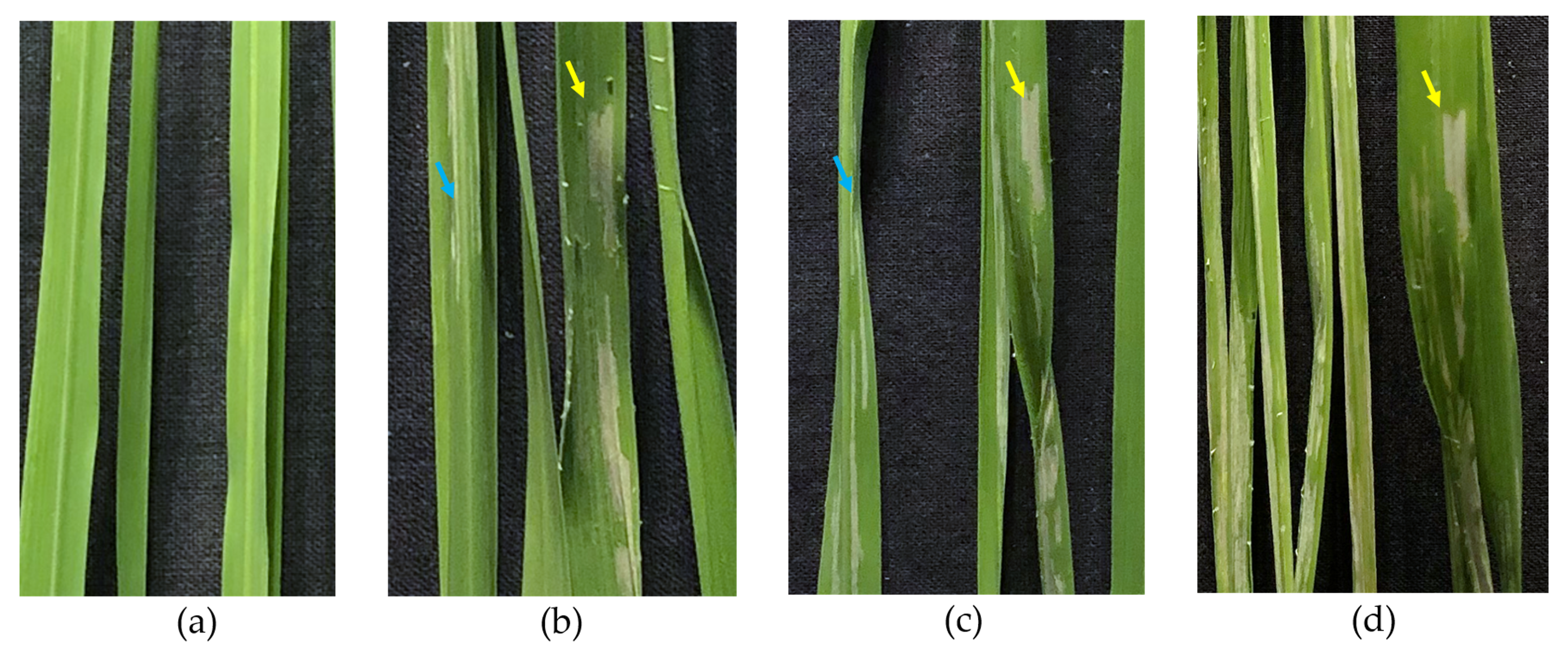

2.1. Insect Breeding

2.2. Preparation of Rice Samples

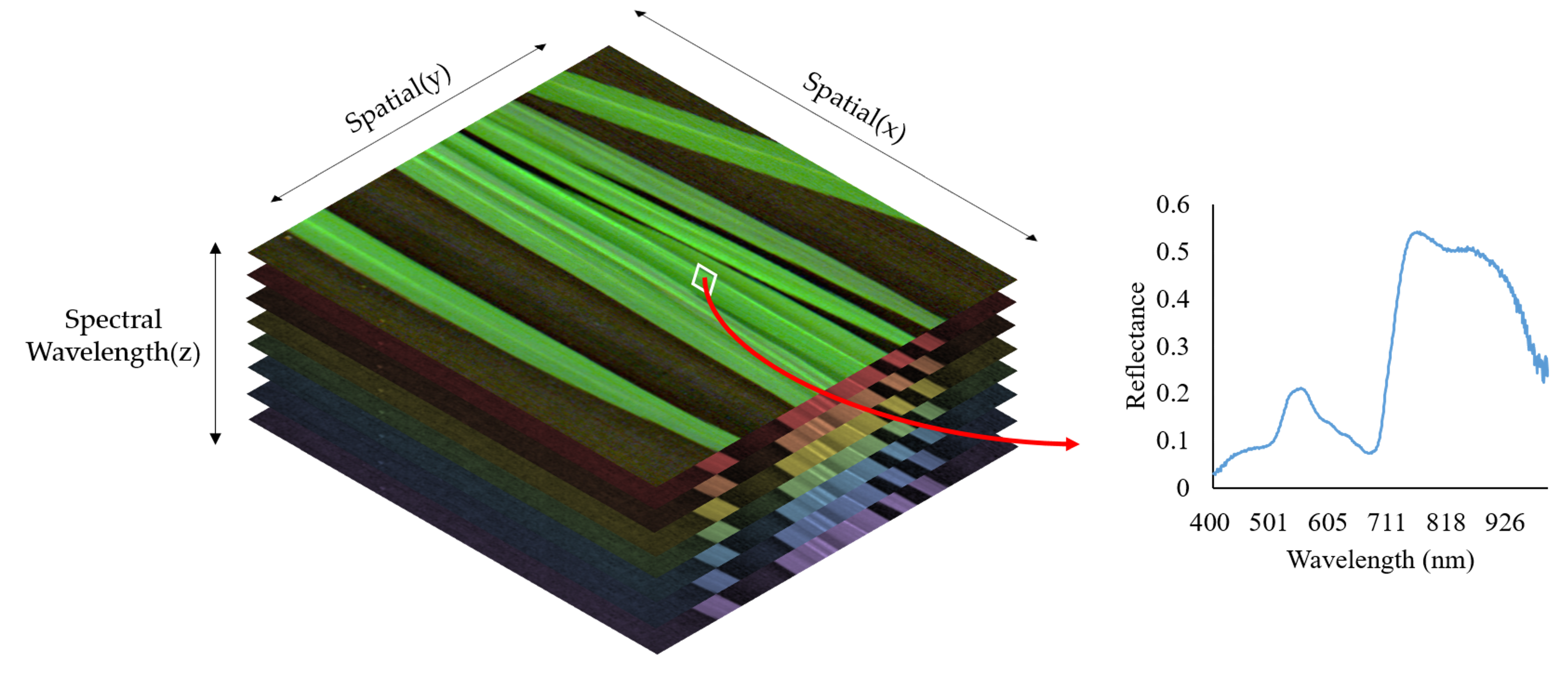

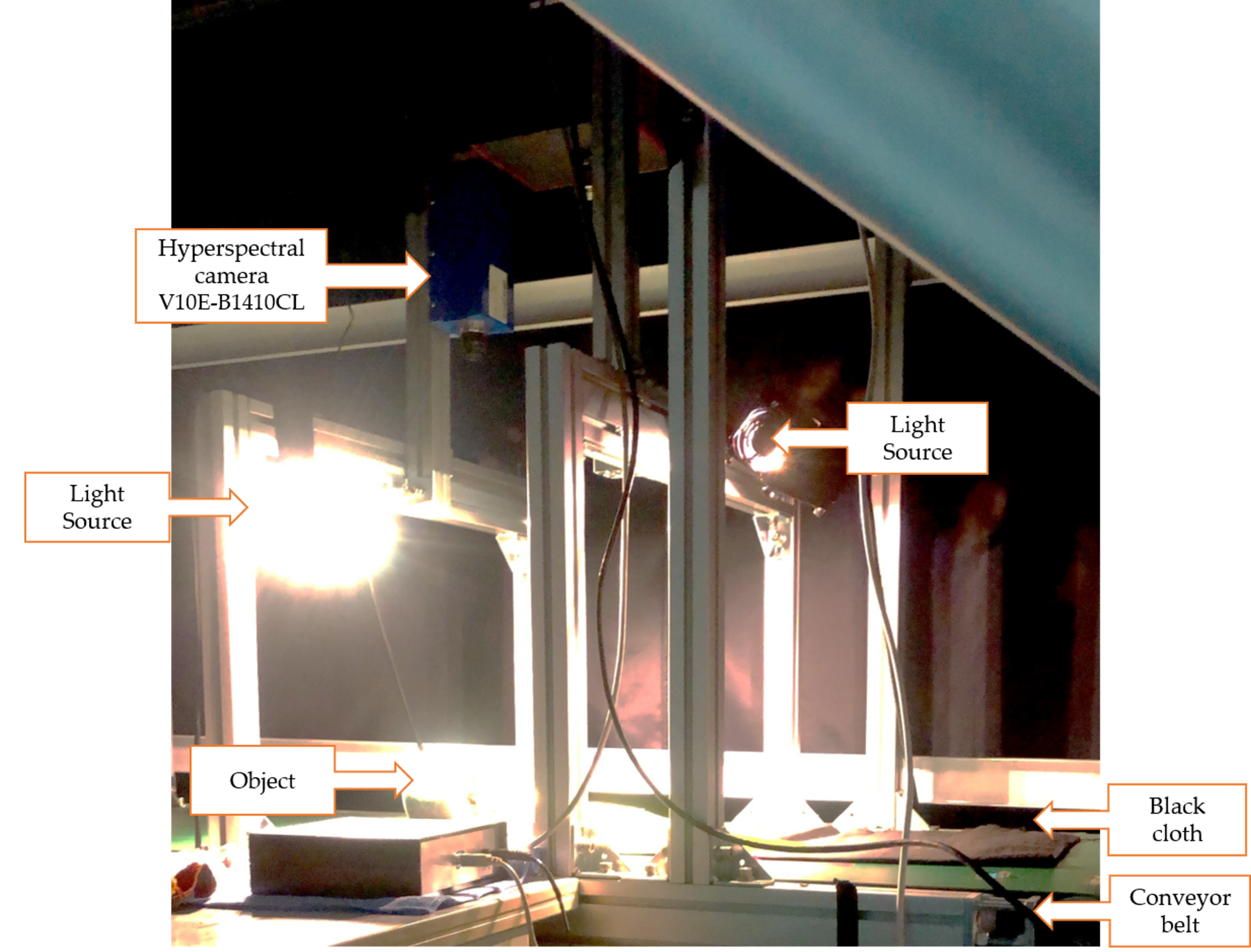

2.3. Hyperspectral Imaging System and Image Acquisition

2.3.1. Hyperspectral Sensor

2.3.2. Image Acquisition

2.3.3. Calibration

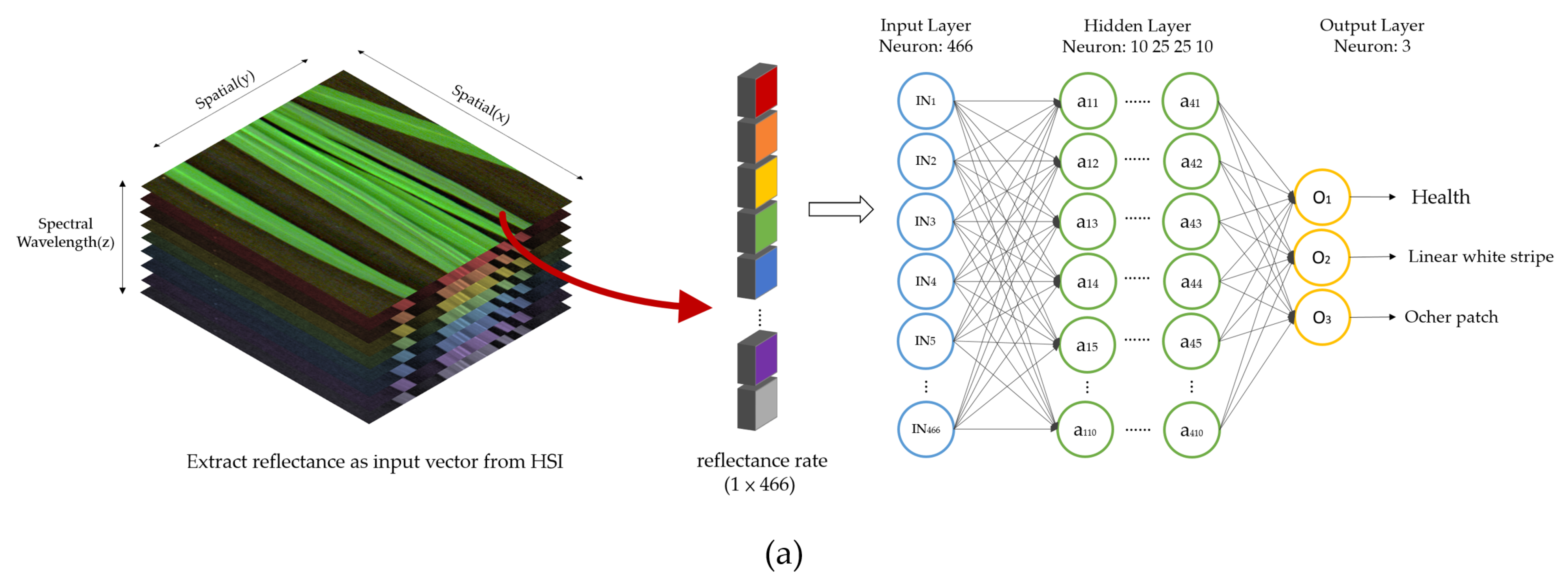

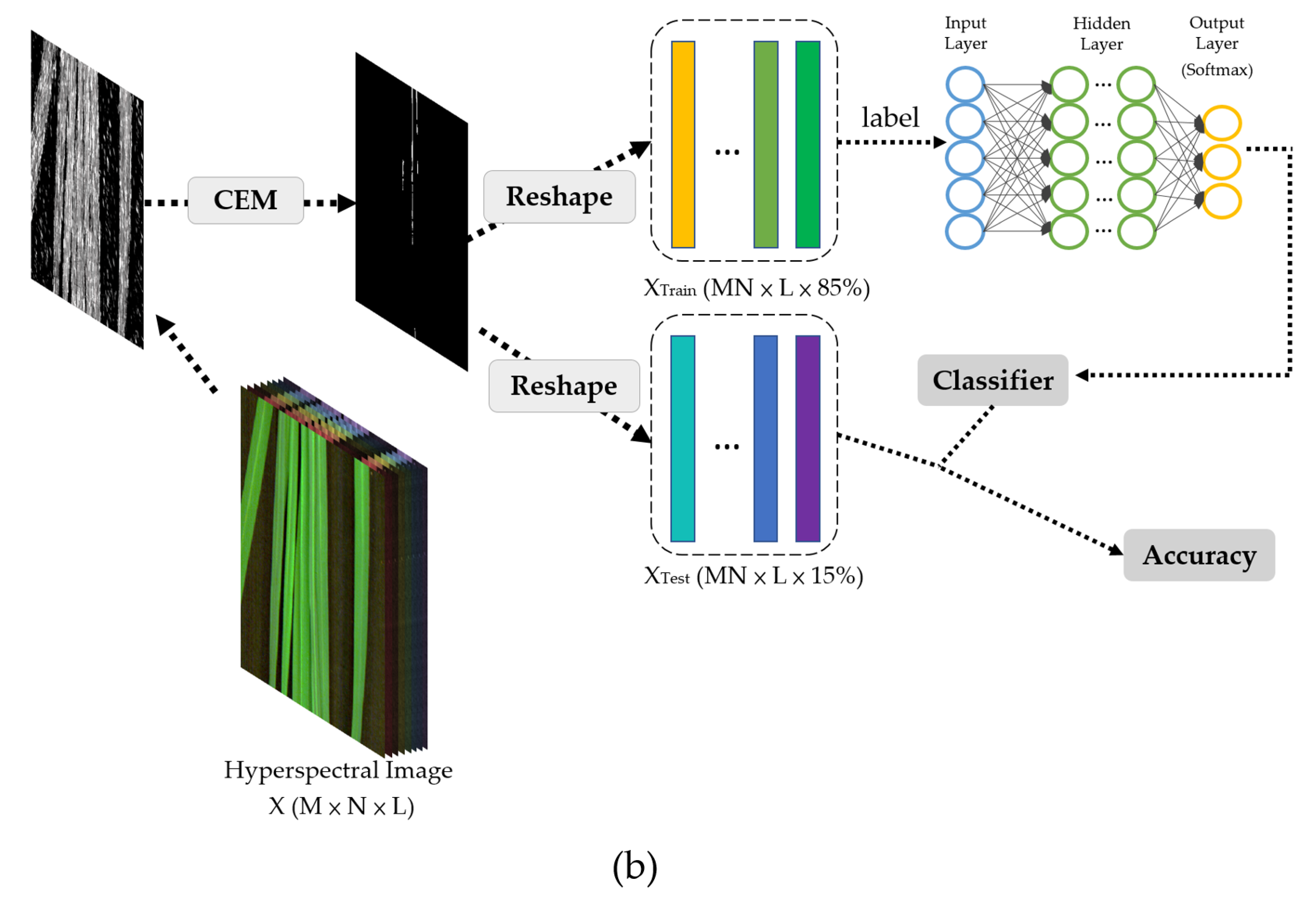

2.4. Spectral Information Extraction

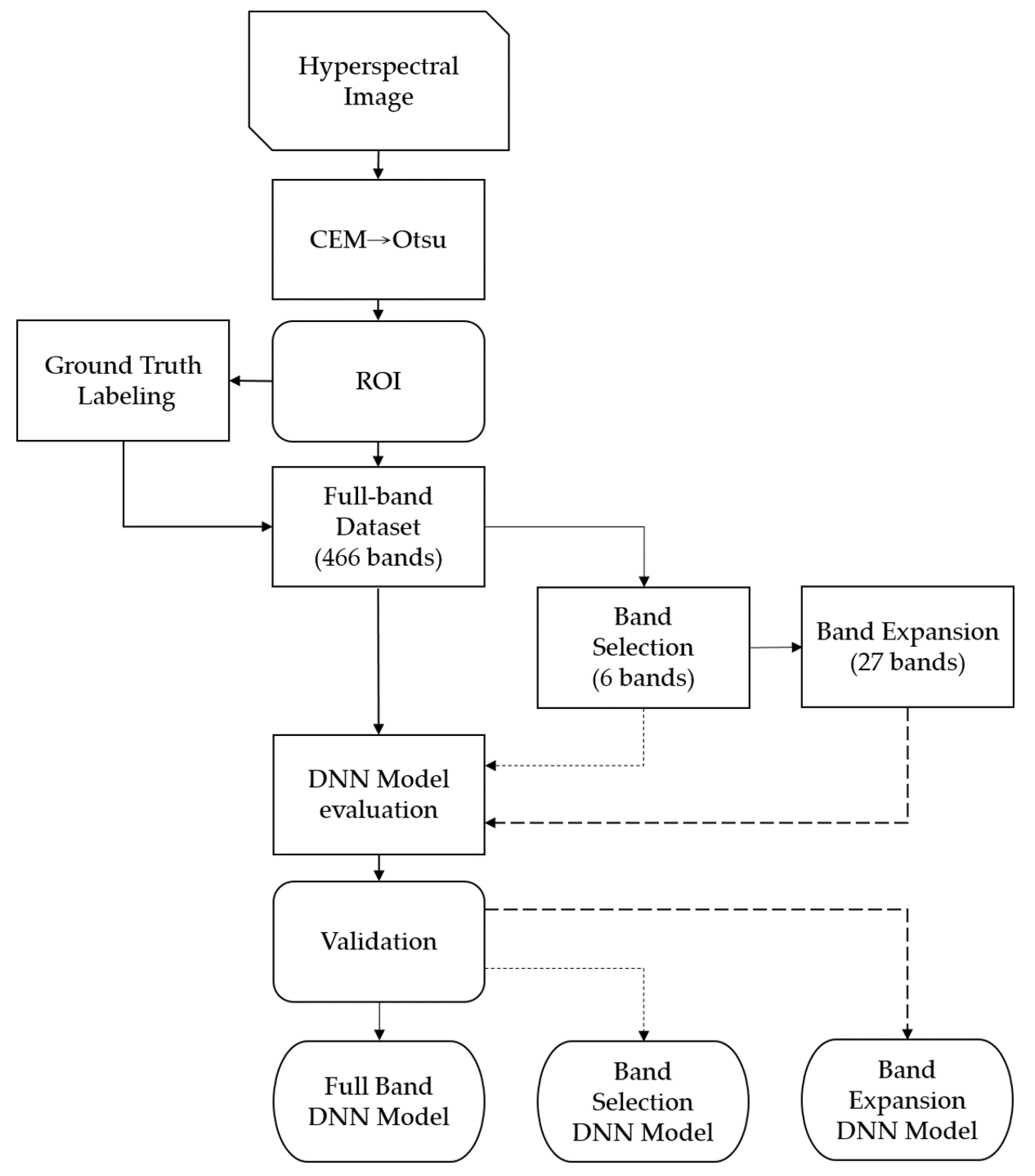

2.5. Band Selection

2.5.1. Band Prioritization

2.5.2. Band Decorrelation

2.6. Band Expansion Process

2.7. Data Training Models

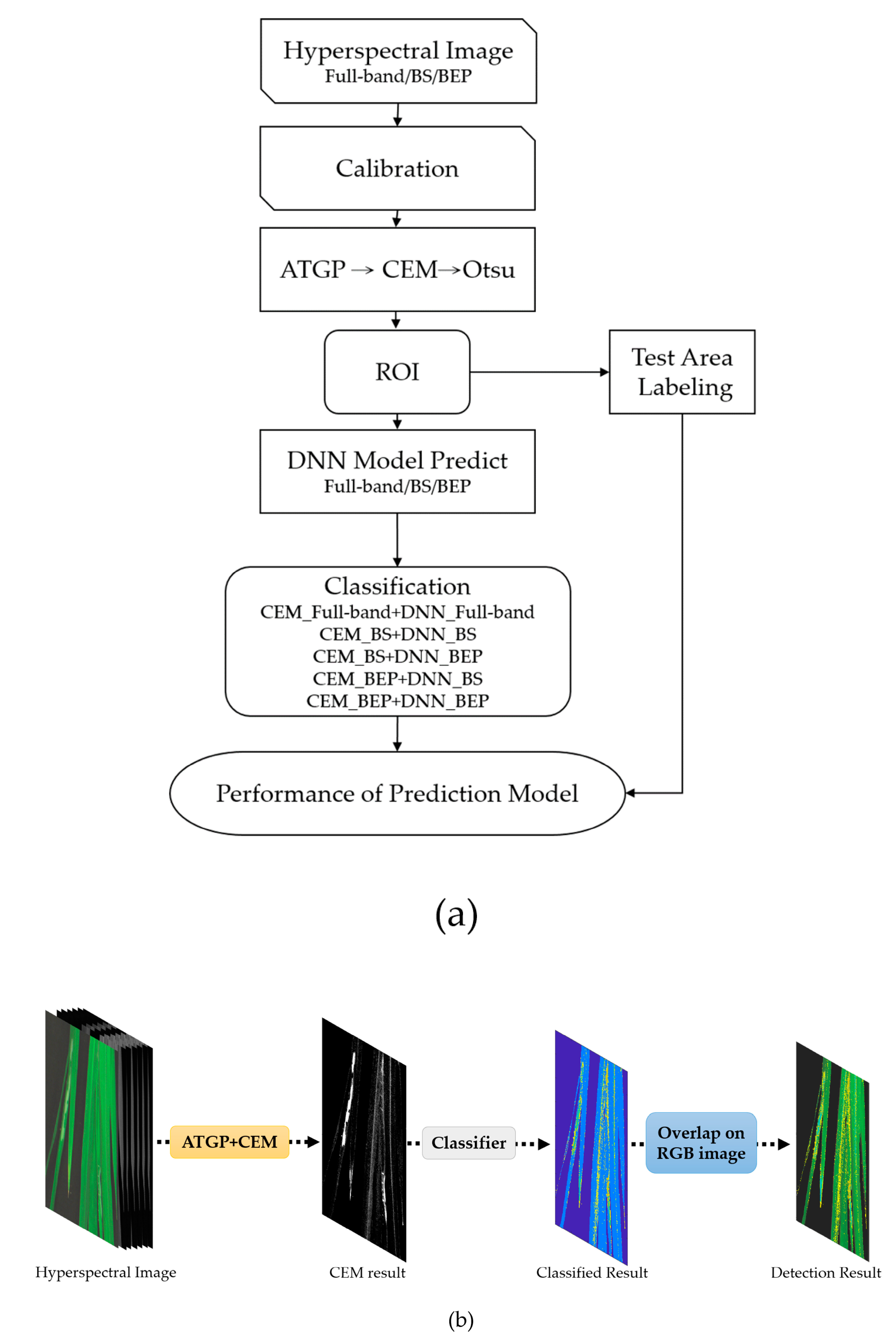

2.8. Model Test for Unknown Samples

2.9. Prediction of Unknown Samples

- (i) recall

- (ii) precision

- (iii) Dice similarity coefficient
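The three metrics listed above can be sketched from pixel-level confusion counts. This is a minimal illustration, not the authors' code: it assumes the standard definitions (recall = TP/(TP+FN), precision = TP/(TP+FP), Dice = 2TP/(2TP+FP+FN)), and the class name `ConfusionCounts` is hypothetical. The example counts are the ones reported for the CEM_band selection→DNN_band selection pipeline (TP 497, FP 138, TN 11,984, FN 109).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    """Pixel-level confusion counts (hypothetical helper, not from the paper)."""
    tp: int  # infested pixels predicted as infested
    fp: int  # healthy pixels predicted as infested
    tn: int  # healthy pixels predicted as healthy
    fn: int  # infested pixels predicted as healthy

    @property
    def recall(self) -> float:
        # Fraction of ground-truth positive pixels that were detected.
        return self.tp / (self.tp + self.fn)

    @property
    def precision(self) -> float:
        # Fraction of predicted positive pixels that are truly positive.
        return self.tp / (self.tp + self.fp)

    @property
    def dice(self) -> float:
        # Dice similarity coefficient: overlap between prediction and ground truth.
        return 2 * self.tp / (2 * self.tp + self.fp + self.fn)

    @property
    def accuracy(self) -> float:
        # Fraction of all pixels classified correctly.
        return (self.tp + self.tn) / (self.tp + self.fp + self.tn + self.fn)


# Counts reported for CEM_band selection -> DNN_band selection.
counts = ConfusionCounts(tp=497, fp=138, tn=11_984, fn=109)
print(f"recall={counts.recall:.3f}, precision={counts.precision:.3f}, "
      f"dice={counts.dice:.3f}, accuracy={counts.accuracy:.3f}")
```

Because healthy pixels greatly outnumber infested ones in this setting, accuracy stays high even when recall is low, which is one reason to report recall, precision, and the Dice coefficient alongside it.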

3. Results and Discussion

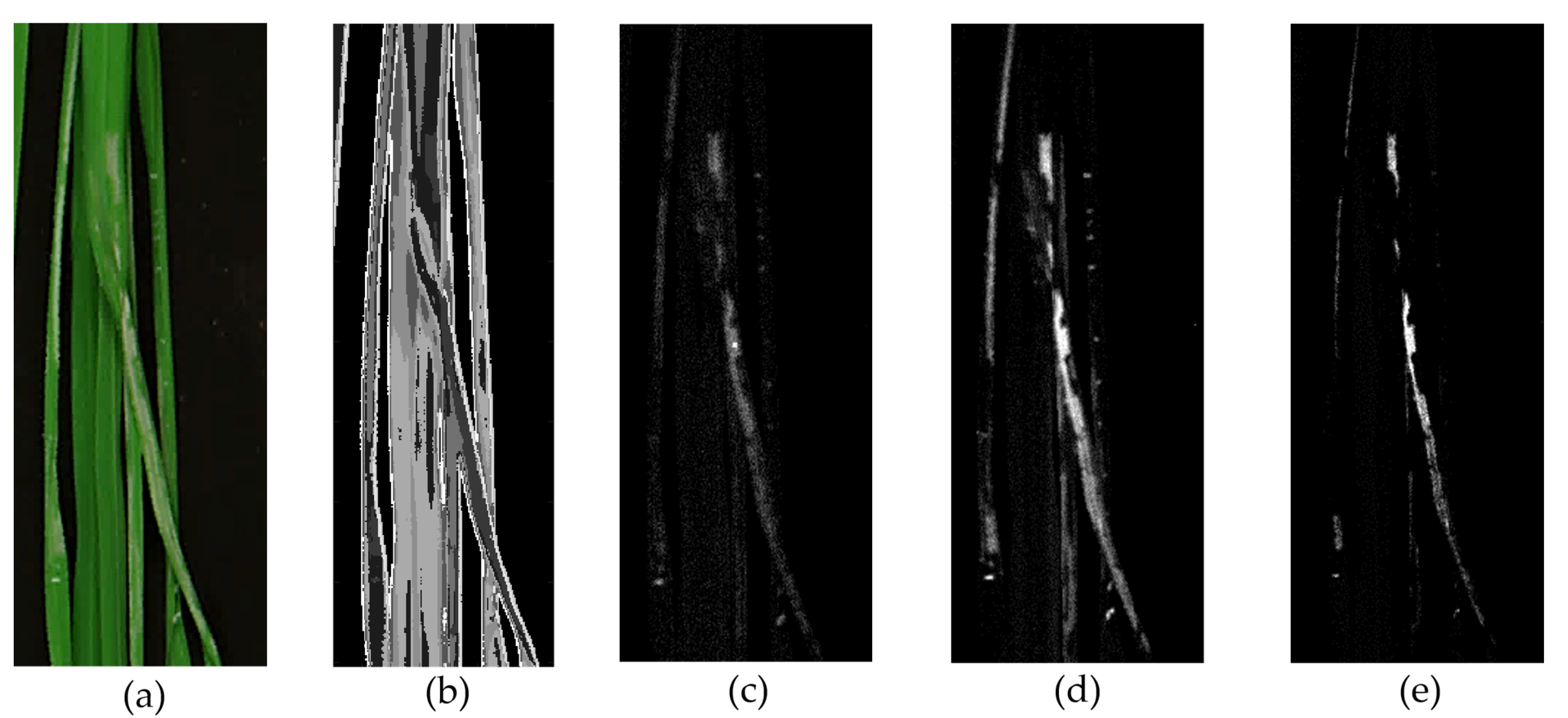

3.1. Images and Spectral Signatures of Healthy and RLF-Infested Rice Leaves

3.2. Band Selection and Band Expansion Process

3.3. ROI Detection with CEM in Full Bands, Band Selection, and Band Expansion Process

3.4. DNN Model for Classification of Testing Dataset

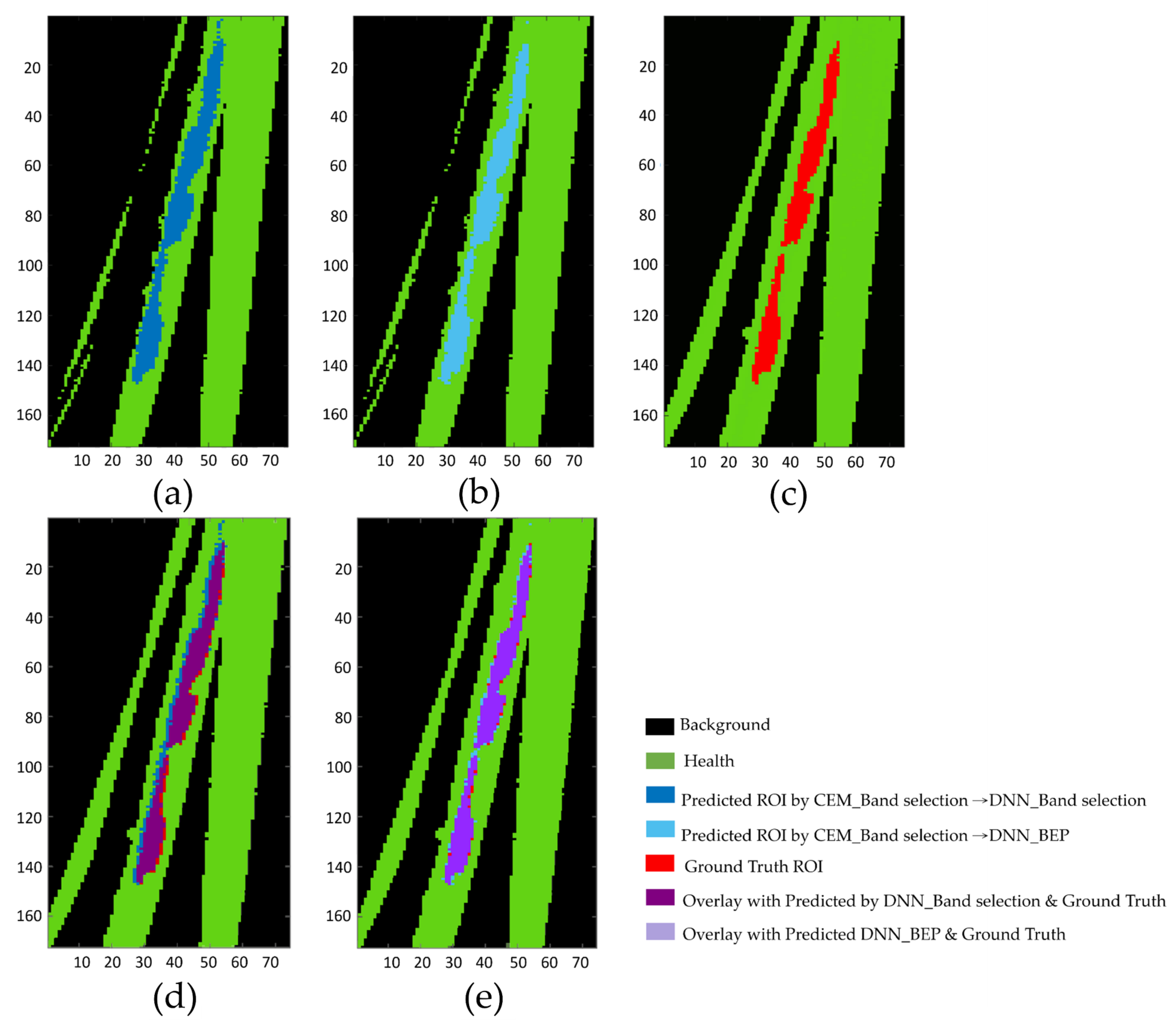

3.5. Prediction of Unknown Samples

3.6. Discussion

4. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Sample Types | HL | D1 OP | D6 OP |
|---|---|---|---|
| Band selection ¹ | 297 | 301 | |
| Pixel numbers used for DNN training | 5936 | 6015 | 6962 |
| Pixel numbers used for DNN testing | 1000 | 1000 | 1000 |

| Model | Criteria | Accuracy (%), HL | Accuracy (%), Early ¹ OP | Accuracy (%), Late ² OP | OA ³ (%) | Time (s) |
|---|---|---|---|---|---|---|
| Full-band | - | 97.3 | 93.6 | 94.0 | 95.0 | 14.88 |
| Band selection (6 bands) | Variance | 96.0 | 84.4 | 85.1 | 88.5 | 7.18 |
| Band selection (6 bands) | Entropy | 97.2 | 87.1 | 86.5 | 90.3 | 5.79 |
| Band selection (6 bands) | Skewness | 95.7 | 82.5 | 81.6 | 86.6 | 4.96 |
| Band selection (6 bands) | Kurtosis | 97.4 | 78.7 | 86.5 | 87.5 | 6.32 |
| Band selection (6 bands) | SNR | 97.8 | 78.4 | 78.9 | 85.0 | 6.98 |
| Band expansion process (27 bands) | Variance | 97.0 | 83.3 | 84.1 | 88.1 | 7.88 |
| Band expansion process (27 bands) | Entropy | 97.1 | 82.8 | 83.5 | 87.8 | 6.83 |
| Band expansion process (27 bands) | Skewness | 96.6 | 76.2 | 81.7 | 84.8 | 5.85 |
| Band expansion process (27 bands) | Kurtosis | 96.3 | 78.2 | 86.8 | 87.1 | 6.79 |
| Band expansion process (27 bands) | SNR | 96.9 | 78.0 | 81.3 | 85.4 | 7.43 |
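The OA column in the table above matches the unweighted mean of the three per-class accuracies. The sketch below shows that arithmetic. It assumes OA was computed this way, which is plausible because each class contributes the same number of test pixels (1000), but the source does not state the formula. The function name `overall_accuracy` is hypothetical.

```python
def overall_accuracy(per_class_accuracy: list[float]) -> float:
    """Unweighted mean of per-class accuracies (in percent).

    Assumption: every class has the same number of test samples, so the
    unweighted mean equals the fraction of all test pixels classified correctly.
    """
    if not per_class_accuracy:
        raise ValueError("at least one class accuracy is required")
    return sum(per_class_accuracy) / len(per_class_accuracy)


# Per-class accuracies (HL, early OP, late OP) taken from the table rows.
full_band = [97.3, 93.6, 94.0]
band_selection_entropy = [97.2, 87.1, 86.5]

print(round(overall_accuracy(full_band), 1))
print(round(overall_accuracy(band_selection_entropy), 1))
```

If the classes had unequal test sizes, a sample-weighted mean would be needed instead.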

| Analysis Method | TP ² (pixels) | FP ³ (pixels) | TN ⁴ (pixels) | FN ⁵ (pixels) | OA (%) |
|---|---|---|---|---|---|
| CEM_Full-band→DNN_Full-band | 317 | 341 | 11,781 | 289 | 95.05 |
| CEM_band selection→DNN_band selection ¹ | 497 | 138 | 11,984 | 109 | 98.05 |
| CEM_band selection→DNN_BEP | 488 | 138 | 11,984 | 178 | 97.98 |
| CEM_BEP→DNN_band selection | 318 | 17 | 12,105 | 288 | 97.60 |
| CEM_BEP→DNN_BEP | 302 | 18 | 12,104 | 304 | 97.47 |

| Ground Truth | Positive ⁶ | Negative ⁷ |
|---|---|---|
| Pixel number | 606 | 12,122 |

| Analysis Method | Recall | Precision | Accuracy | Dice Similarity Coefficient | Time (s) |
|---|---|---|---|---|---|
| CEM_Full-band→DNN_Full-band | 0.523 | 0.482 | 0.951 | 0.670 | 3.672 |
| CEM_band selection→DNN_band selection | 0.820 | 0.783 | 0.981 | 0.801 | 0.336 |
| CEM_band selection→DNN_BEP | 0.805 | 0.780 | 0.980 | 0.755 | 0.381 |
| CEM_BEP→DNN_band selection | 0.525 | 0.949 | 0.976 | 0.676 | 0.559 |
| CEM_BEP→DNN_BEP | 0.498 | 0.915 | 0.974 | 0.652 | 0.604 |


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Liang, G.-C.; Ouyang, Y.-C.; Dai, S.-M. Detection and Classification of Rice Infestation with Rice Leaf Folder (Cnaphalocrocis medinalis) Using Hyperspectral Imaging Techniques. Remote Sens. 2021, 13, 4587. https://doi.org/10.3390/rs13224587
