An Adaptive-Parameter Pixel Unmixing Method for Mapping Evergreen Forest Fractions Based on Time-Series NDVI: A Case Study of Southern China

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Area

2.2. Data Preprocessing

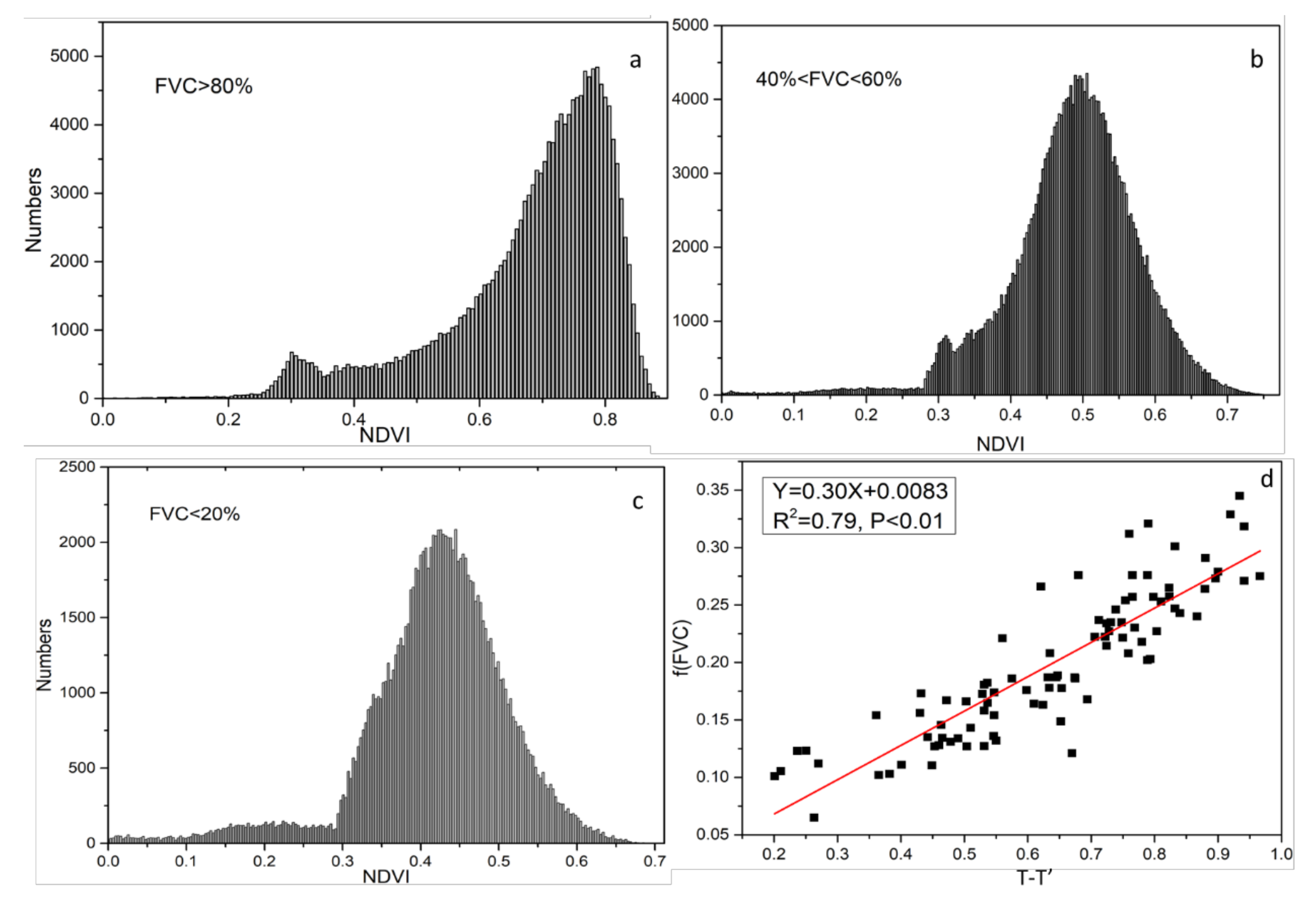

2.3. Otsu Threshold Selection Method

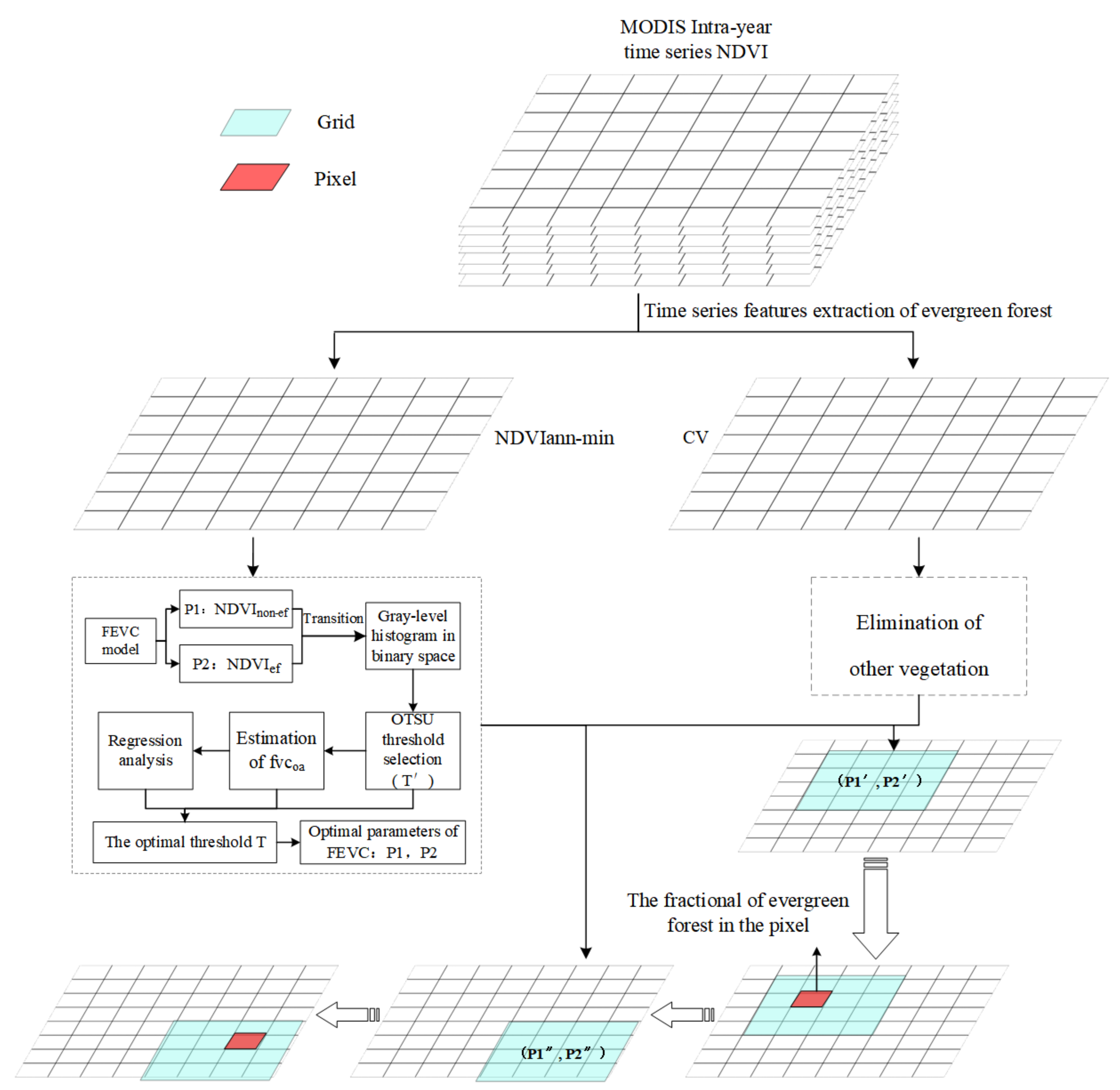
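Otsu's method picks the histogram cut that maximizes the between-class variance of the two resulting groups. The following is a generic NumPy sketch of the textbook algorithm applied to an NDVI array, not the authors' implementation; the function name `otsu_threshold` and the 256-bin histogram are assumptions.

```python
import numpy as np

def otsu_threshold(values, bins=256):
    """Return the cut value that maximizes Otsu's between-class variance.

    values: array of scalars such as NDVI; non-finite entries are ignored.
    Pixels with value >= the returned threshold form the upper class.
    (Generic sketch; bins=256 is an assumed default.)
    """
    v = np.asarray(values, dtype=float).ravel()
    v = v[np.isfinite(v)]
    hist, edges = np.histogram(v, bins=bins)
    centers = 0.5 * (edges[:-1] + edges[1:])
    p = hist / hist.sum()              # normalized histogram
    omega = np.cumsum(p)               # weight of the lower class for each cut
    mu = np.cumsum(p * centers)        # cumulative first moment of the lower class
    mu_total = mu[-1]                  # global mean
    denom = omega * (1.0 - omega)
    with np.errstate(divide="ignore", invalid="ignore"):
        sigma_b2 = np.where(denom > 0, (mu_total * omega - mu) ** 2 / denom, 0.0)
    k = int(np.argmax(sigma_b2))       # lower class = bins 0..k
    return float(edges[k + 1])         # boundary between bin k and bin k + 1

# Example: synthetic bimodal NDVI values
rng = np.random.default_rng(0)
ndvi = np.concatenate([rng.normal(0.3, 0.05, 500), rng.normal(0.8, 0.05, 500)])
t = otsu_threshold(ndvi)
upper_class = ndvi >= t
```

Returning a bin edge rather than a bin center makes the split exact: every value in the lower class is strictly below the threshold.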

2.4. FEVC Model Based on the Linear Unmixing Model and NDVIann-min Image
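A two-endmember linear unmixing model treats a pixel's value as a fraction-weighted mix of a pure evergreen-forest signal and a pure "other" signal. The sketch below assumes that NDVIann-min denotes the per-pixel annual minimum of the NDVI time series and that the model is NDVIann-min = f · NDVI_ev + (1 − f) · NDVI_other; the function names and the endmember values in the example (`ndvi_ev`, `ndvi_other`) are hypothetical, not taken from the paper.

```python
import numpy as np

def annual_min_ndvi(ndvi_stack):
    """Per-pixel minimum over the time axis (axis 0), ignoring NaN gaps (e.g. cloud)."""
    return np.nanmin(ndvi_stack, axis=0)

def fevc_linear(ndvi_min, ndvi_ev, ndvi_other):
    """Two-endmember linear unmixing of the annual-minimum NDVI (assumed model).

    Solves ndvi_min = f * ndvi_ev + (1 - f) * ndvi_other for f, the
    evergreen-forest fraction, and clips it to the physical range [0, 1].
    """
    ndvi_min = np.asarray(ndvi_min, dtype=float)
    f = (ndvi_min - ndvi_other) / (ndvi_ev - ndvi_other)
    return np.clip(f, 0.0, 1.0)

# Three composites shaped (time, rows, cols); NaN marks a cloud-masked observation
stack = np.array([[[0.82, 0.55]], [[np.nan, 0.21]], [[0.78, 0.30]]])
ndvi_min = annual_min_ndvi(stack)
fraction = fevc_linear(ndvi_min, ndvi_ev=0.80, ndvi_other=0.20)  # hypothetical endmembers
```

The endmember arguments may be scalars or arrays broadcastable to the image, so the same function works with one global pair of parameters or with per-pixel parameter maps.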

2.5. Automatic Acquisition of FEVC Model Parameters

2.6. Gridded Adaptive Parameters Estimation Model
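One way to make unmixing parameters adapt to regional conditions is to estimate them separately inside each cell of a regular grid. The sketch below is a hypothetical illustration of that idea, not the authors' estimator: it takes low and high percentiles of the annual-minimum NDVI in each cell (5th and 95th here, an illustrative choice) as the non-evergreen and evergreen endmembers, then expands the per-cell values back to pixel resolution.

```python
import numpy as np

def gridded_endmembers(ndvi_min, cell, low_pct=5.0, high_pct=95.0):
    """Per-grid-cell endmember estimates, expanded back to pixel resolution.

    ndvi_min : 2-D array of annual-minimum NDVI (NaN = no data).
    cell     : grid-cell edge length in pixels (hypothetical parameter).
    low_pct / high_pct : illustrative percentiles taken as the non-evergreen
        and evergreen endmembers inside each cell.
    Returns (ndvi_other_map, ndvi_ev_map), both shaped like ndvi_min.
    """
    ndvi_min = np.asarray(ndvi_min, dtype=float)
    other = np.full(ndvi_min.shape, np.nan)
    ev = np.full(ndvi_min.shape, np.nan)
    rows, cols = ndvi_min.shape
    for r0 in range(0, rows, cell):
        for c0 in range(0, cols, cell):
            window = (slice(r0, r0 + cell), slice(c0, c0 + cell))
            block = ndvi_min[window]
            valid = block[np.isfinite(block)]
            if valid.size == 0:
                continue  # cell has no usable pixels: leave parameters as NaN
            lo, hi = np.percentile(valid, [low_pct, high_pct])
            other[window] = lo
            ev[window] = hi
    return other, ev

# Example on a synthetic 100 x 120 image; edge cells are simply smaller
rng = np.random.default_rng(1)
ndvi_min = rng.uniform(0.1, 0.9, size=(100, 120))
other_map, ev_map = gridded_endmembers(ndvi_min, cell=40)
```

The cell size is the key trade-off here: smaller cells follow local conditions more closely but leave fewer pixels for each percentile estimate, which is the kind of choice the grid-size analysis in Section 3.1 addresses.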

2.7. Accuracy Assessment

3. Results

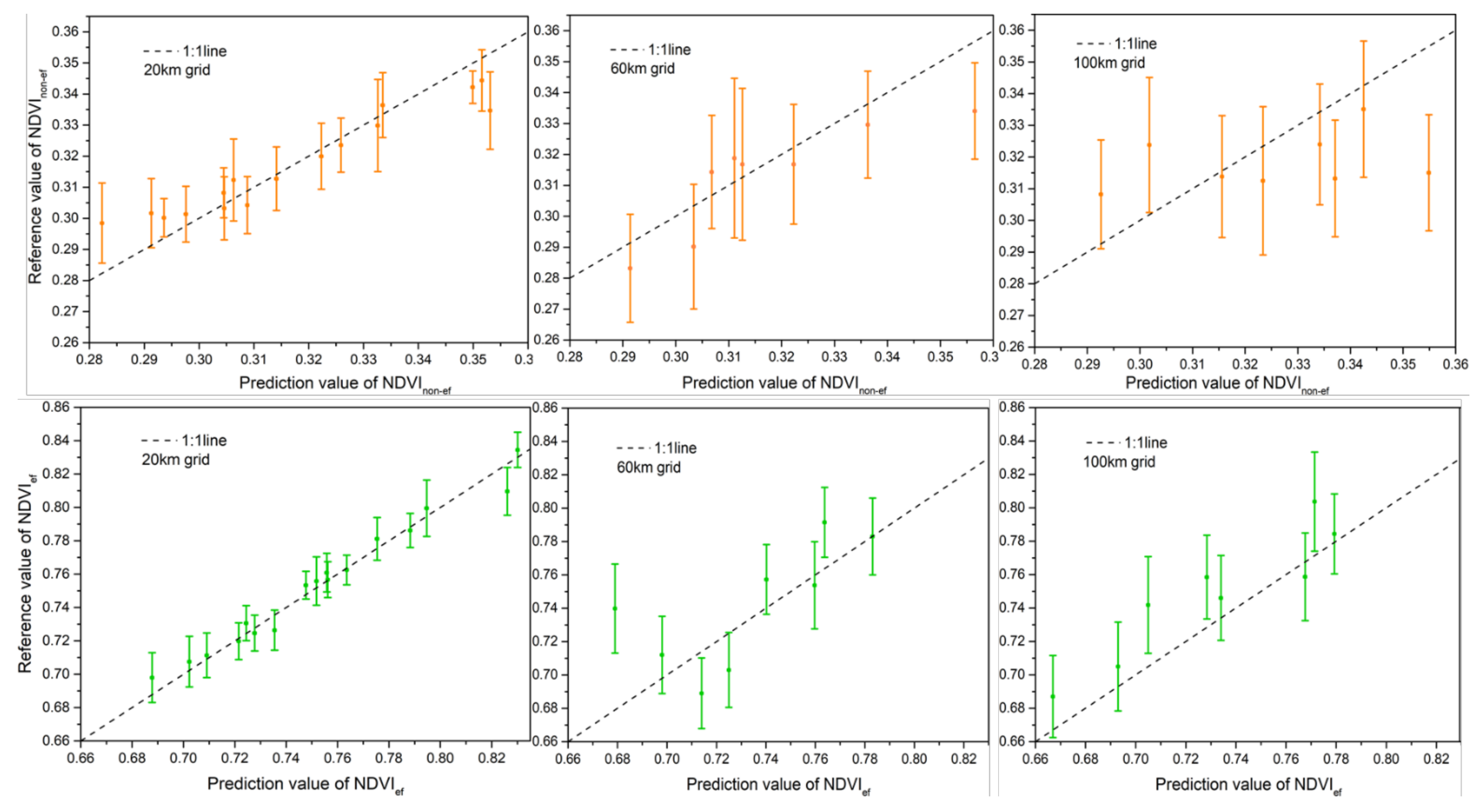

3.1. Determination of Grid Size

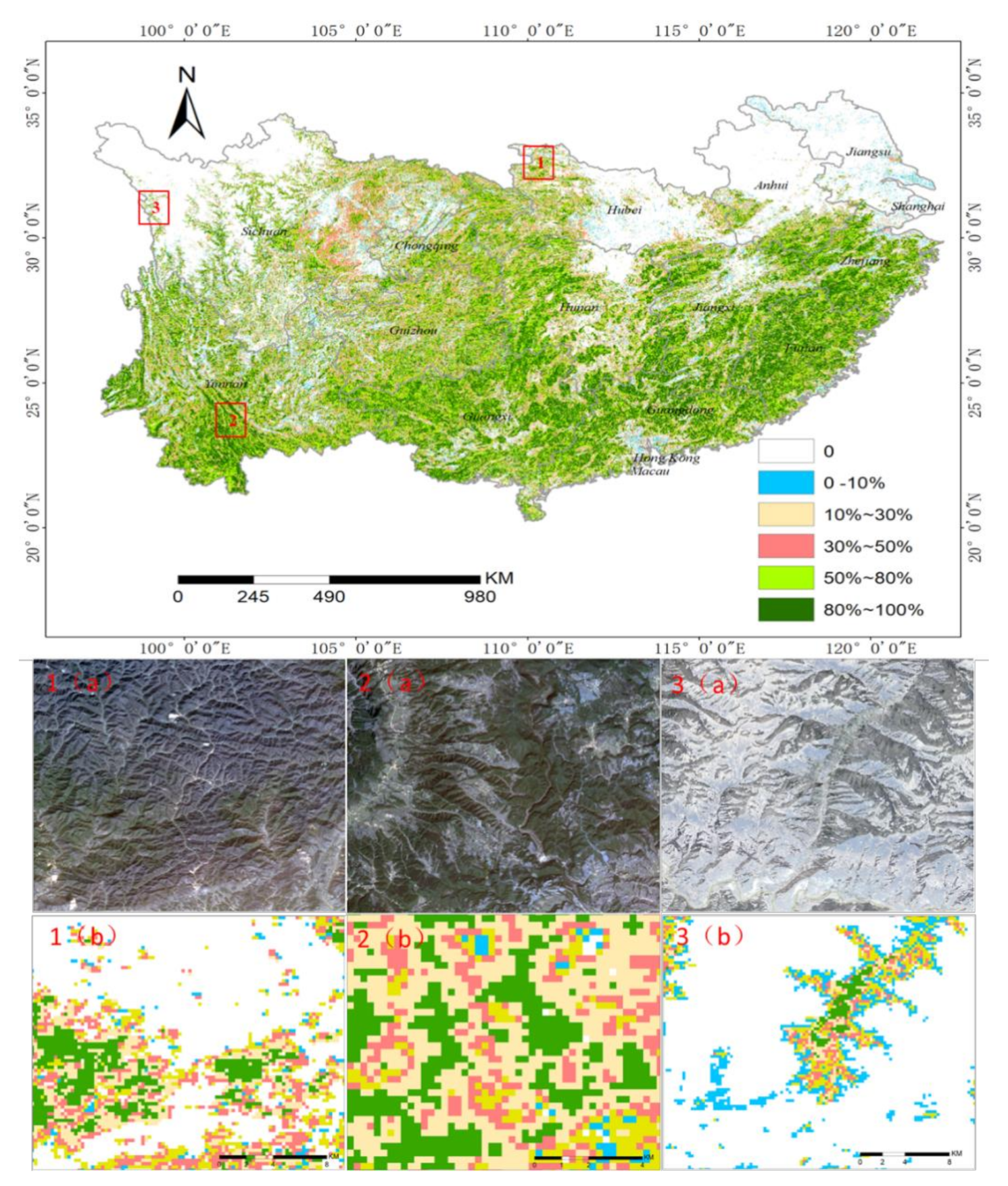

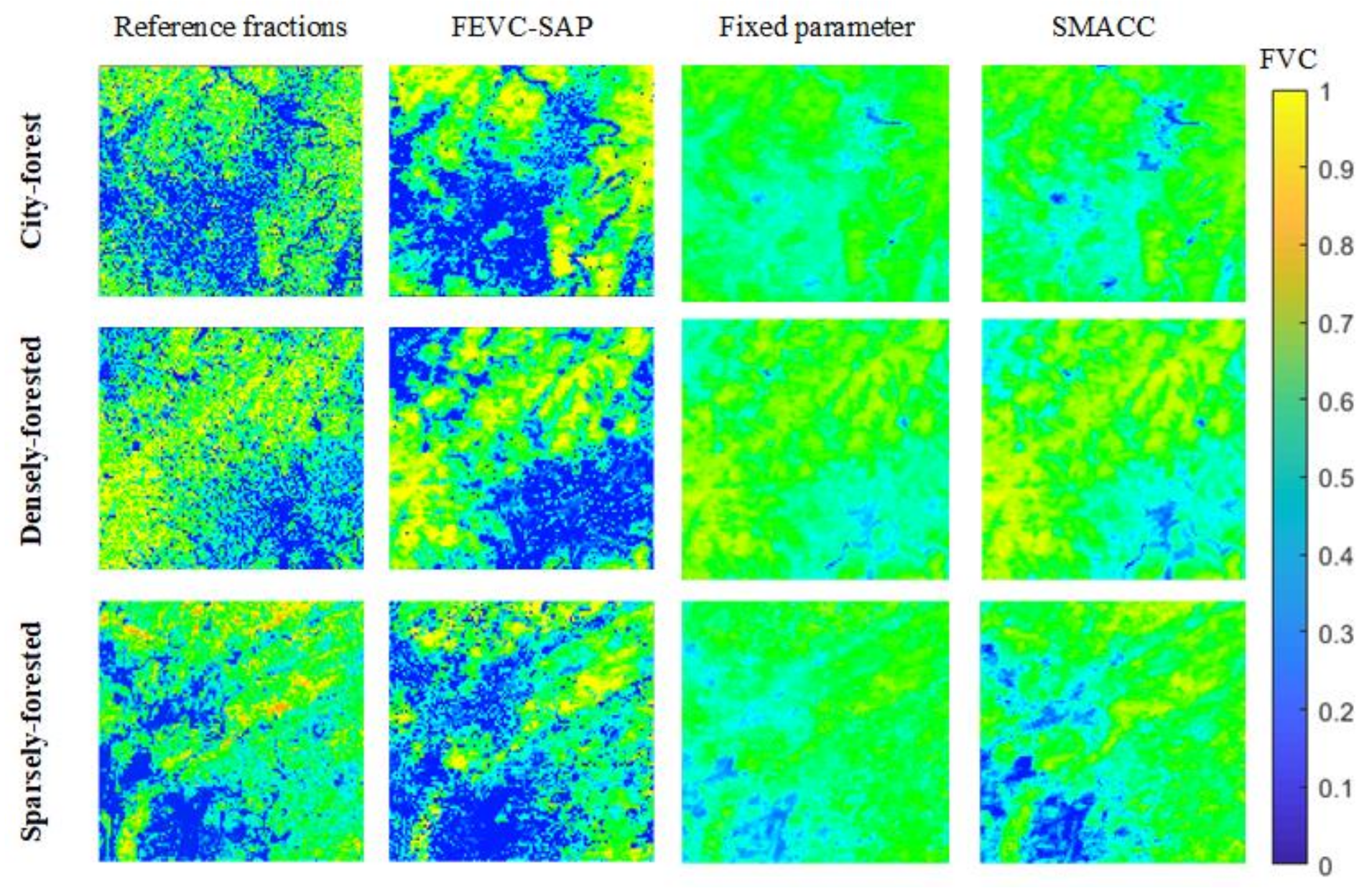

3.2. Mapping of Fractional Evergreen Forest Cover

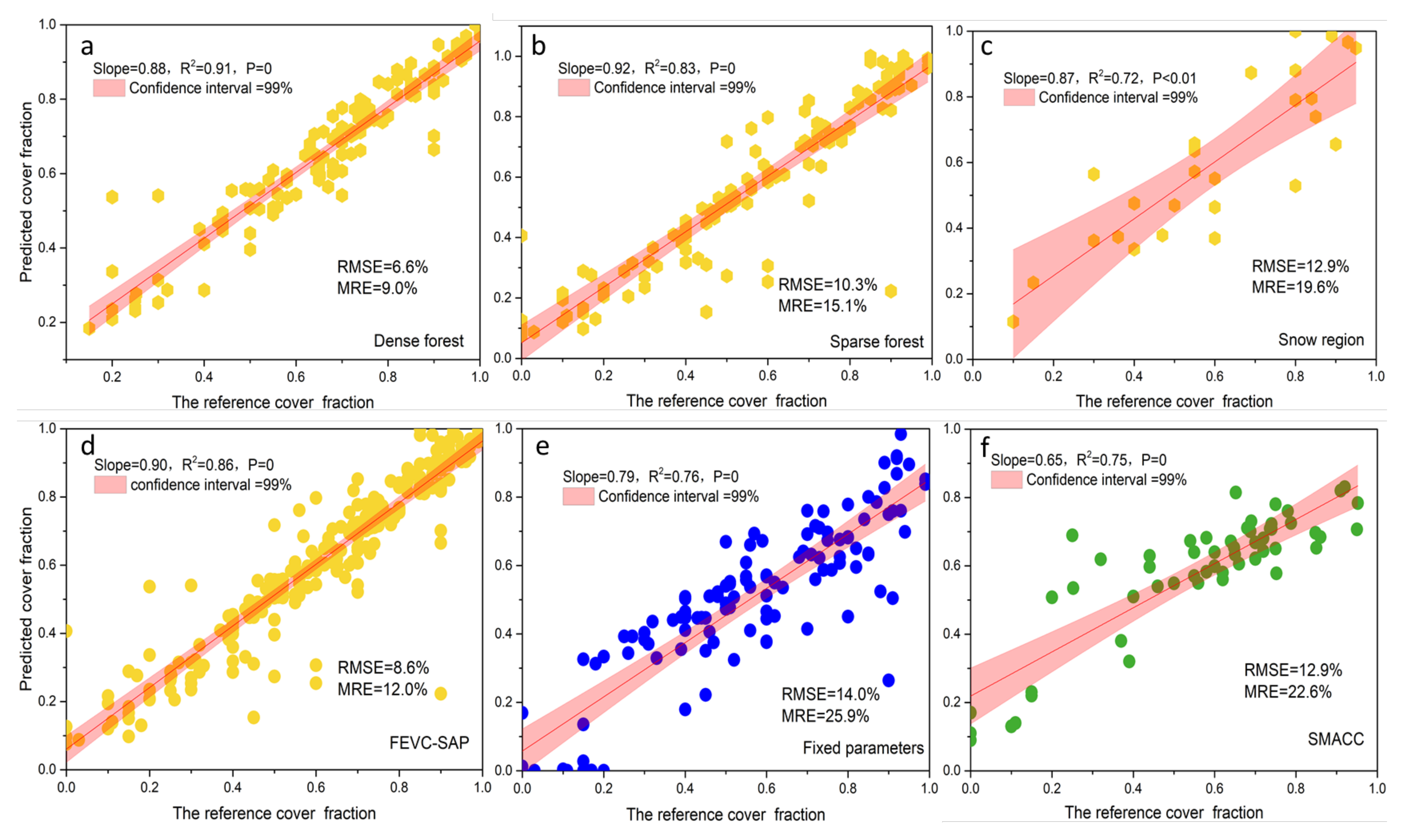

3.3. Model Accuracy Validation

4. Discussion

4.1. Elimination of Large-Scale Spatial Heterogeneity Based on Grids

4.2. Applicability and Sensitivity of the FEVC-SAP Method

4.3. Uncertainty and Future Research

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Number of Scenes | Acquisition Date | Coverage | Cloud Cover |
|---|---|---|---|
| 8 scenes | 1 January 2017~25 February 2017 | E 99.3°~E 119.7°, N 21.6°~N 36.8° | <4% |
| 13 scenes | 1 December 2017~27 February 2018 | | |
| 11 scenes | 1 December 2018~15 February 2019 | | |

| Predicted results \ Actual results | Class 1 | Class 2 | … | Class n |
|---|---|---|---|---|
| Class 1 | P11 | P12 | … | P1n |
| Class 2 | P21 | P22 | … | P2n |
| … | … | … | … | … |
| Class n | Pn1 | Pn2 | … | Pnn |
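With rows as predicted classes and columns as actual classes, as in the matrix above, the user's accuracy (UA), producer's accuracy (PA) and overall accuracy (OA) reported in the accuracy tables are conventionally defined as below. These are the standard definitions, assumed here to match the paper's usage:

```latex
\mathrm{UA}_i = \frac{P_{ii}}{\sum_{j=1}^{n} P_{ij}}, \qquad
\mathrm{PA}_j = \frac{P_{jj}}{\sum_{i=1}^{n} P_{ij}}, \qquad
\mathrm{OA} = \frac{\sum_{i=1}^{n} P_{ii}}{\sum_{i=1}^{n}\sum_{j=1}^{n} P_{ij}}
```

UA is therefore a row-wise ratio (how often a mapped class is correct), PA a column-wise ratio (how often a reference class is captured), and OA the share of all samples on the diagonal.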

| Product | Mapped Class | Validation: Evergreen Forest (n) | Validation: Other (n) | UA |
|---|---|---|---|---|
| FEVC-SAP | Evergreen forest | 828 | 86 | 90.7% |
| | Other | 170 | 960 | 84.9% |
| | PA | 83.3% | 91.7% | OA = 87.5% |
| MCD12 | Evergreen forest | 457 | 750 | 37.9% |
| | Other | 161 | 1104 | 87.2% |
| | PA | 73.9% | 59.5% | OA = 63.1% |
| GLC30 | Evergreen forest | 1016 | 135 | 88.3% |
| | Other | 350 | 1055 | 75.1% |
| | PA | 74.3% | 88.6% | OA = 81.0% |
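The accuracy figures in the table above can be recomputed from the confusion-matrix counts. A minimal NumPy sketch, assuming rows are the product's mapped classes and columns the validation classes, as laid out in the table; the function name `accuracy_from_confusion` is hypothetical.

```python
import numpy as np

def accuracy_from_confusion(cm):
    """UA, PA and OA from a confusion matrix (rows = predicted, columns = reference)."""
    cm = np.asarray(cm, dtype=float)
    diag = np.diag(cm)
    ua = diag / cm.sum(axis=1)   # user's accuracy: correct / all samples mapped as the class
    pa = diag / cm.sum(axis=0)   # producer's accuracy: correct / all reference samples of the class
    oa = diag.sum() / cm.sum()   # overall accuracy: diagonal share of all samples
    return ua, pa, oa

# FEVC-SAP counts from the table: [evergreen forest, other] x [evergreen forest, other]
ua, pa, oa = accuracy_from_confusion([[828, 86], [170, 960]])
print(f"OA = {oa:.1%}")  # prints "OA = 87.5%"
```

Passing the MCD12 or GLC30 counts the same way gives their overall accuracies of 63.1% and 81.0%.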

| Region (FEVC-SAP) | Mapped Class | Validation: Evergreen Forest (n) | Validation: Other (n) | UA |
|---|---|---|---|---|
| Dense forest | Evergreen forest | 456 | 48 | 90.4% |
| | Other | 40 | 204 | 83.6% |
| | PA | 91.9% | 80.9% | OA = 88.2% |
| Sparse forest | Evergreen forest | 214 | 30 | 87.7% |
| | Other | 48 | 358 | 88.1% |
| | PA | 81.6% | 92.2% | OA = 88.0% |
| Snow region | Evergreen forest | 178 | 18 | 90.8% |
| | Other | 82 | 398 | 82.9% |
| | PA | 68.4% | 95.6% | OA = 85.2% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yang, Y.; Wu, T.; Zeng, Y.; Wang, S. An Adaptive-Parameter Pixel Unmixing Method for Mapping Evergreen Forest Fractions Based on Time-Series NDVI: A Case Study of Southern China. Remote Sens. 2021, 13, 4678. https://doi.org/10.3390/rs13224678


Chicago/Turabian Style: Yang, Yingying, Taixia Wu, Yuhui Zeng, and Shudong Wang. 2021. "An Adaptive-Parameter Pixel Unmixing Method for Mapping Evergreen Forest Fractions Based on Time-Series NDVI: A Case Study of Southern China" Remote Sensing 13, no. 22: 4678. https://doi.org/10.3390/rs13224678