A Spatial-Spectral Feature Descriptor for Hyperspectral Image Matching

Abstract

1. Introduction

2. Related Work

2.1. Spatial Descriptors

2.2. Multidimensional Descriptors

2.3. Hyperspectral Descriptors

3. Method

- (1) Spatial descriptor construction. Spatial descriptors are constructed in two steps. First, spatial interest points are extracted from the HSI in a new space produced by dimensionality reduction. Second, descriptors are generated to describe the spatial features of the image.

- (2) Spectral descriptor construction. After obtaining the spatial interest points, we construct the spectral descriptors from the spectral gradients of the surrounding neighbors, using HOSG. In addition, normalization is applied to eliminate the influence of changes in incident illumination in the environment.

- (3) Combination of the spatial and spectral features. We obtain a spatial-spectral descriptor of 256 elements by concatenating the spatial descriptor of 128 elements and the spectral descriptor of 128 elements. The spatial and spectral descriptors have the same weight.
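Step (3) above can be illustrated with a minimal sketch. The function name `combine_descriptors` and the random placeholder descriptors are hypothetical and only serve to show the equal-weight concatenation of two 128-element vectors into one 256-element vector.

```python
import numpy as np

def combine_descriptors(spatial, spectral):
    """Concatenate per-keypoint spatial and spectral descriptors with equal weight."""
    spatial = np.asarray(spatial, dtype=np.float64)
    spectral = np.asarray(spectral, dtype=np.float64)
    if spatial.shape != spectral.shape or spatial.shape[-1] != 128:
        raise ValueError("expected two arrays of shape (num_keypoints, 128)")
    # Equal weight: neither half is rescaled before concatenation.
    return np.concatenate([spatial, spectral], axis=-1)

rng = np.random.default_rng(0)
spatial = rng.random((5, 128))    # placeholder spatial descriptors
spectral = rng.random((5, 128))   # placeholder spectral descriptors
combined = combine_descriptors(spatial, spectral)
print(combined.shape)  # (5, 256)
```

Because both halves have the same length and no scaling factor is applied, each contributes equally to a Euclidean distance computed on the combined vector, provided the two halves are normalized to comparable ranges.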

3.1. Spatial Descriptor

3.1.1. Dimensional Reduction by PCA
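As a hedged illustration of the dimensionality reduction step, the sketch below projects an HSI cube onto its first principal component, which is the image later used for spatial descriptor extraction. The function name `first_principal_component`, the `(rows, cols, bands)` array layout, and the random test cube are assumptions of this sketch, not details taken from the method description.

```python
import numpy as np

def first_principal_component(cube):
    """Project an HSI cube of shape (rows, cols, bands) onto its first principal component."""
    rows, cols, bands = cube.shape
    pixels = cube.reshape(-1, bands).astype(np.float64)
    centered = pixels - pixels.mean(axis=0)
    # Band-by-band covariance matrix of shape (bands, bands).
    cov = np.cov(centered, rowvar=False)
    # eigh returns eigenvalues in ascending order, so the last eigenvector
    # is the direction of largest variance.
    _, eigvecs = np.linalg.eigh(cov)
    pc1 = centered @ eigvecs[:, -1]
    return pc1.reshape(rows, cols)

rng = np.random.default_rng(0)
cube = rng.random((32, 32, 31))          # placeholder cube, not real data
pc1_image = first_principal_component(cube)
print(pc1_image.shape)  # (32, 32)
```

The sign of a principal component is arbitrary, so the resulting image may appear inverted between runs or libraries; this should not affect gradient-based keypoint detection beyond flipping gradient directions consistently.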

3.1.2. Spatial Descriptor Construction

- (1) Local extremum detection. Local extrema are identified by constructing a Gaussian pyramid and searching for local peaks in a series of difference-of-Gaussian (DOG) images. A Taylor expansion is then applied to obtain an interpolated, more accurate estimate of each extremum, and candidate interest points found to be unstable are eliminated.

- (2) Dominant orientation assignment. To achieve invariance to image rotation, a dominant orientation is assigned to each keypoint based on local image properties. An orientation histogram is formed from the gradient orientations of sample points within a region around the keypoint.

- (3) Keypoint descriptors. A keypoint descriptor, which should be highly distinctive and invariant to some environmental variations, is then created by first computing the gradient magnitude and orientation at each image sample point in a region around the keypoint location. Figure 3 illustrates an example of constructing a spatial descriptor.

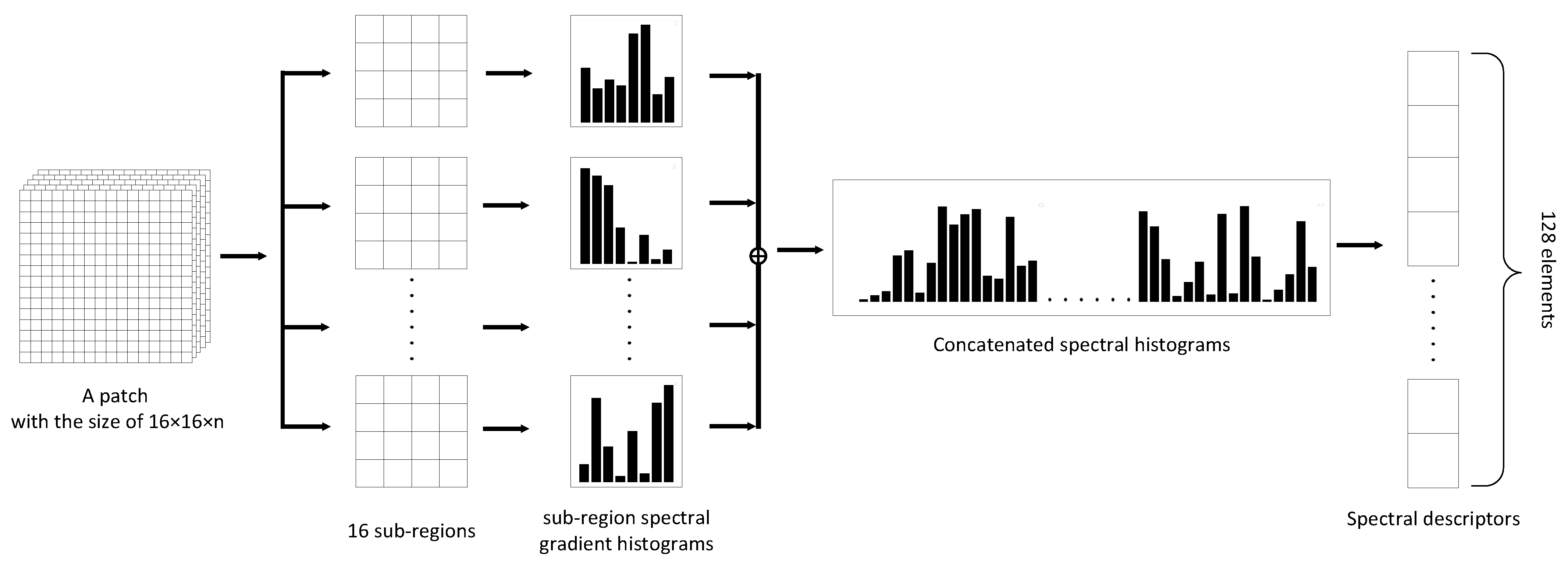
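The dominant orientation assignment in step (2) can be sketched as a magnitude-weighted histogram of gradient orientations. This is a simplified illustration only: the 36-bin histogram follows common SIFT practice and is an assumption here, the function name `dominant_orientation` is hypothetical, and Gaussian weighting and peak interpolation are omitted.

```python
import numpy as np

def dominant_orientation(patch, num_bins=36):
    """Return the centre (in degrees) of the strongest gradient-orientation bin."""
    patch = np.asarray(patch, dtype=np.float64)
    # np.gradient returns the derivative along rows (y) first, then columns (x).
    dy, dx = np.gradient(patch)
    magnitude = np.hypot(dx, dy)
    orientation = np.degrees(np.arctan2(dy, dx)) % 360.0
    # Each sample votes for its orientation bin with its gradient magnitude.
    hist, edges = np.histogram(
        orientation, bins=num_bins, range=(0.0, 360.0), weights=magnitude
    )
    peak = int(np.argmax(hist))
    return 0.5 * (edges[peak] + edges[peak + 1])

# A patch whose intensity increases from left to right has gradients along +x.
ramp_x = np.tile(np.arange(16.0), (16, 1))
angle = dominant_orientation(ramp_x)
print(angle)  # 5.0, the centre of the [0, 10) degree bin
```

Rotating the sampling region by the returned angle before building the descriptor is what makes the descriptor invariant to in-plane image rotation.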

3.2. Spectral Descriptor

- (1) Keypoint assignment. As in SIFT, the potential keypoints, which should be invariant to scale and orientation, are identified using a DOG function. The coordinates and orientation of each spatial keypoint are thereby determined and used to construct the spectral descriptors.

- (2)

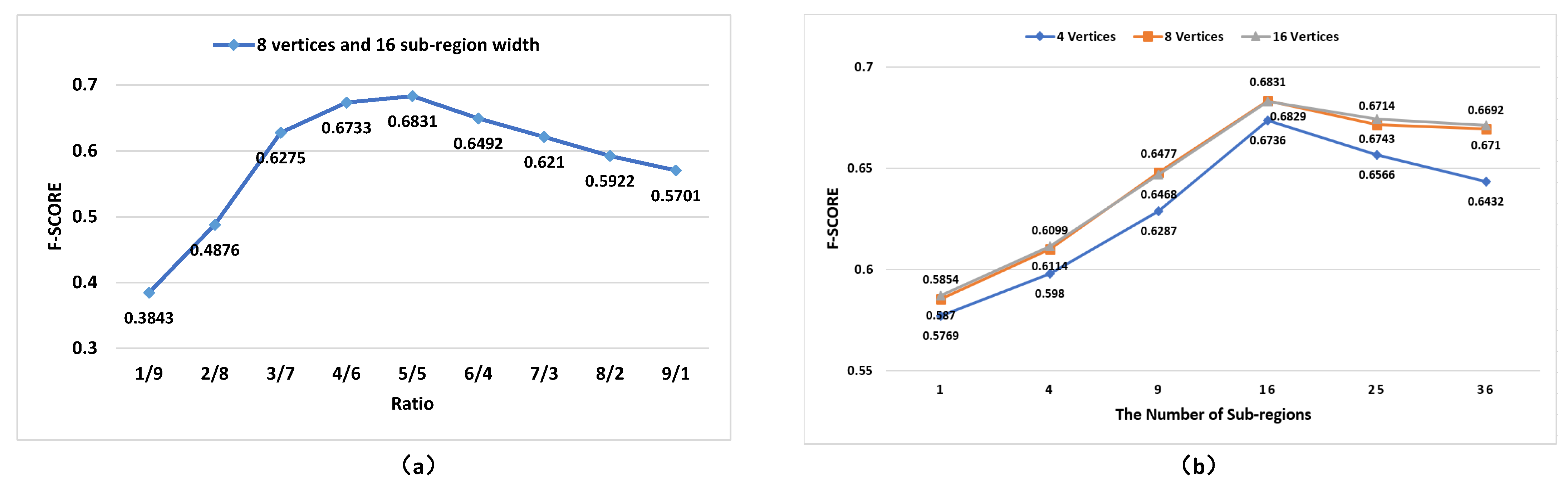

- Sub-region division. We first designate a (n is the number of spectral bands) patch surrounding the centre of each keypoint and rotate it to align its orientation assigned by previous step. Then, the patch is split up regularly into 16 sub-regions with a size of .

- (3) Vertices division. In this paper, the spectral gradient range is evenly divided into eight vertices; the gradient magnitudes primarily range from −0.04 to 0.04. A few spectral gradient values fall outside this range. According to our statistics, most of these values are anomalies caused by the unstable imaging state of the hyperspectral sensor, so we clip them to moderate their adverse effects: values smaller than −0.04 are raised to −0.04, and values larger than 0.04 are lowered to 0.04. Moreover, most HSI datasets have a similar range of spectral gradient magnitude (−0.04 to 0.04) after normalization, so we believe this range can be applied to most HSI datasets.

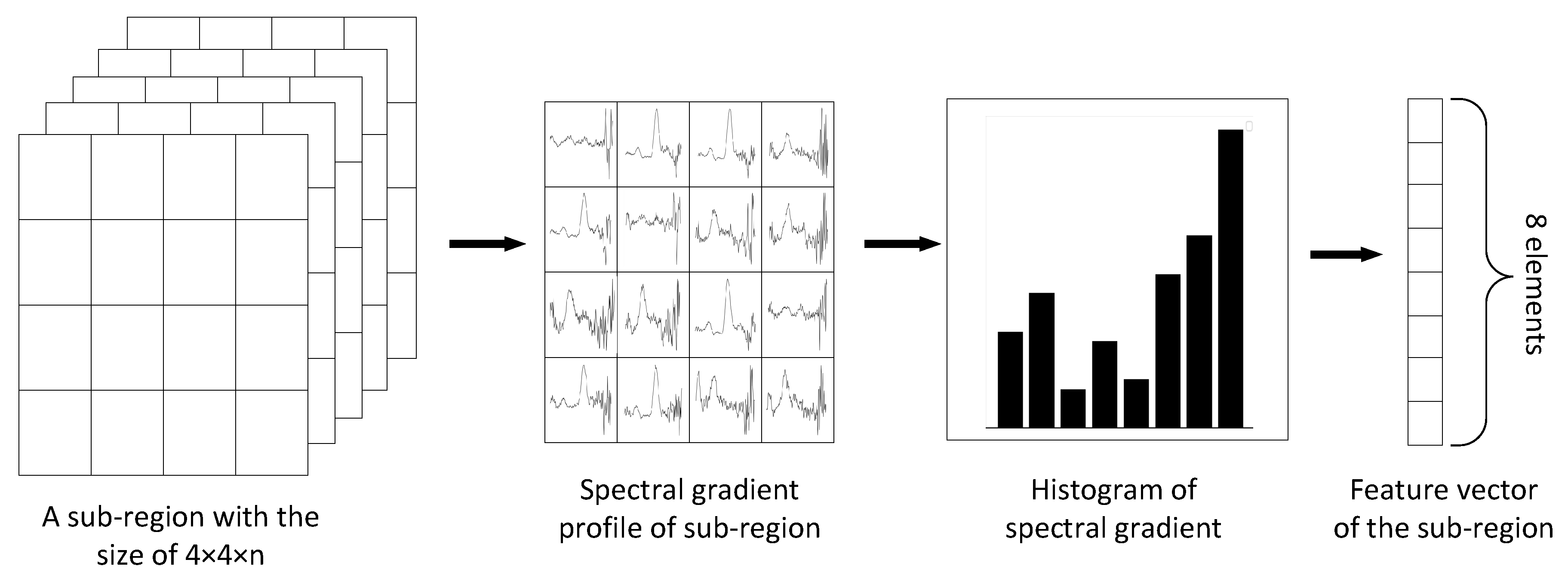

- (4) Extracting the HOSG of the sub-region. The main steps of extracting the HOSG of a sub-region are depicted in Figure 6. First, the spectral gradient profile is calculated for each pixel in the sub-region. Second, we obtain a gradient histogram for the sub-region by accumulating the spectral gradient magnitudes into eight vertices, which summarizes the contents of the sub-region. Finally, a vector with eight elements is constructed to represent the HOSG of the sub-region.

- (5) Spectral feature vector construction and normalization. Based on the previous steps, we obtain 16 vectors from one patch, one for each of the 16 sub-regions. A spectral feature vector of 16 × 8 = 128 elements is then constructed for each keypoint by concatenating the vectors of the sub-regions. In that way, our method preserves the physical significance of the HSI. In addition, the spectral descriptor should be normalized to reduce the negative impact of changes in incident illumination. Specifically, the spectral feature vector is first normalized to the range [−1, 1]. A change in the spectral profile in which each pixel value is multiplied by a constant multiplies the gradients by the same constant, so this change is canceled by vector normalization. The descriptor is therefore invariant to such multiplicative spectral changes. After that, we limit the influence of large gradient magnitudes by thresholding the values in the feature vector to be no larger than 0.2 and no smaller than −0.2, and then renormalize to the range [−1, 1]. This means that matching the magnitudes of large gradients is no longer as important, and that the distribution of orientations receives greater emphasis. Note that the threshold values of 0.2 and −0.2 are commonly used for feature vector normalization in many works, such as SIFT [30], 3D-SIFT [24], and SS-SIFT [28], to reduce the negative impact of changes in incident illumination.
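Steps (2) to (5) above can be sketched as follows. Several details are assumptions of this sketch rather than statements of the method: the spectral gradient is approximated by the difference between adjacent bands, each histogram bin accumulates the signed gradient values that fall into it, normalization to [−1, 1] is done by dividing by the largest absolute value, the 16 sub-regions form a 4 × 4 grid, and the example patch is 16 × 16 pixels. All function and constant names are hypothetical.

```python
import numpy as np

CLIP = 0.04     # gradient range stated in the text
NUM_BINS = 8    # eight "vertices" per sub-region
GRID = 4        # assumed 4 x 4 layout giving 16 sub-regions
THRESH = 0.2    # threshold applied after the first normalization

def scale_to_unit(vec):
    """Scale a vector so its largest absolute value is 1 (assumed normalization)."""
    peak = np.max(np.abs(vec))
    return vec / peak if peak > 0 else vec

def subregion_hosg(sub):
    """Eight-element histogram of spectral gradients for one sub-region (h, w, bands)."""
    grad = np.diff(sub, axis=-1)                 # adjacent-band difference (assumption)
    grad = np.clip(grad, -CLIP, CLIP).ravel()    # clip outliers to [-0.04, 0.04]
    edges = np.linspace(-CLIP, CLIP, NUM_BINS + 1)
    # Sum the signed gradient values that fall into each bin.
    hist, _ = np.histogram(grad, bins=edges, weights=grad)
    return hist

def spectral_descriptor(patch):
    """128-element spectral descriptor for an already rotated patch (H, W, bands)."""
    h, w, _ = patch.shape
    if h % GRID or w % GRID:
        raise ValueError("patch height and width must be divisible by GRID")
    sh, sw = h // GRID, w // GRID
    parts = [
        subregion_hosg(patch[r * sh:(r + 1) * sh, c * sw:(c + 1) * sw])
        for r in range(GRID)
        for c in range(GRID)
    ]
    vec = scale_to_unit(np.concatenate(parts))   # first normalization to [-1, 1]
    vec = np.clip(vec, -THRESH, THRESH)          # damp large entries
    return scale_to_unit(vec)                    # renormalize to [-1, 1]

rng = np.random.default_rng(0)
patch = rng.normal(0.5, 0.02, size=(16, 16, 31))  # placeholder patch, not real data
descriptor = spectral_descriptor(patch)
print(descriptor.shape)  # (128,)
```

Dividing by the largest absolute value is one simple normalization under which multiplying the whole vector by a constant leaves the result unchanged, which matches the invariance argument in step (5); other normalizations with the same property would serve equally well in this sketch.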

3.3. Spatial-Spectral Descriptor

Spatial-Spectral Descriptor Construction

3.4. Evaluation Metrics

4. Experiments

4.1. Experiment Settings

- (1) UAV HSIs. The UAV dataset, which contains 18 sequential images, was collected with a UAV-borne hyperspectral sensor carried on a DJI M600 UAV provided by Sichuan Dualix Spectral Imaging Technology Company, Ltd., Chengdu, China. The aerial images have 176 spectral bands ranging from 400 nm to 1000 nm, with a spectral resolution of 3 nm, an image size of 1057 × 960 pixels, and a ground sample distance (GSD) of 10 cm. These images may exhibit projective distortion or nonrigid transformation due to the unstable imaging conditions. The data were collected in a botanic garden containing various objects, such as vegetation, artifacts, soil, and many other categories. The transformation matrices of the dataset were calculated in advance.

- (2) Ground platform HSIs. The ground platform HSIs are taken from a public dataset, the “BGU ICVL Hyperspectral Image Dataset” [46] (http://icvl.cs.bgu.ac.il/hyperspectral, accessed on 12 September 2021). Images are collected at 1392 × 1300 spatial resolution over 31 spectral bands ranging from 400 nm to 700 nm. The data exhibit large changes in illumination, imaging conditions, and viewpoint. Images with overlapping regions are selected for the matching experiments.

- (1) Descriptor construction. The spatial descriptors are extracted from the first principal component of the HSI produced by the PCA algorithm, while the multimodal descriptors are extracted from the whole HSI cube.

- (2) Descriptor matching. Euclidean distances are used to measure the similarity between the descriptors of images I1 and I2. A nearest-neighbor match is accepted if the minimum Euclidean distance between the descriptor of a point in I1 and its nearest neighbor in I2 is less than 0.7.

- (3) Matching metrics. We evaluate the raw matching performance on a per-image-pair basis using the evaluation metrics described in Section 3.4. In addition, we assess the downstream performance of the descriptors by evaluating the matching results obtained from RANSAC [49]. RANSAC is one of the most popular algorithms for outlier removal and, according to existing studies, achieves superior precision in complex scenes.
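The descriptor matching rule in step (2) can be sketched with a brute-force nearest-neighbor search. The function name `match_descriptors` and the synthetic descriptors are hypothetical; the 0.7 threshold is applied directly to the Euclidean distance, as stated in the text, and the RANSAC stage is not included.

```python
import numpy as np

def match_descriptors(desc1, desc2, max_distance=0.7):
    """Nearest-neighbor matching by Euclidean distance.

    Returns a list of (i, j) pairs: descriptor i of image I1 is matched to its
    nearest descriptor j of image I2 when their distance is below max_distance.
    """
    desc1 = np.asarray(desc1, dtype=np.float64)
    desc2 = np.asarray(desc2, dtype=np.float64)
    # Pairwise distance matrix of shape (len(desc1), len(desc2)).
    diff = desc1[:, None, :] - desc2[None, :, :]
    dist = np.linalg.norm(diff, axis=2)
    nearest = np.argmin(dist, axis=1)
    best = dist[np.arange(len(desc1)), nearest]
    keep = best < max_distance
    return [(int(i), int(j)) for i, j in zip(np.nonzero(keep)[0], nearest[keep])]

rng = np.random.default_rng(0)
desc2 = rng.random((6, 256))              # placeholder descriptors of image I2
desc1 = desc2[[3, 0, 5]] + 0.001          # slightly perturbed copies for image I1
matches = match_descriptors(desc1, desc2)
print(matches)  # [(0, 3), (1, 0), (2, 5)]
```

The full pairwise distance matrix is convenient for a few thousand keypoints but grows with the product of the two keypoint counts, so a k-d tree or an approximate nearest-neighbor index may be preferable for larger sets.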

4.2. Parameters Initialization

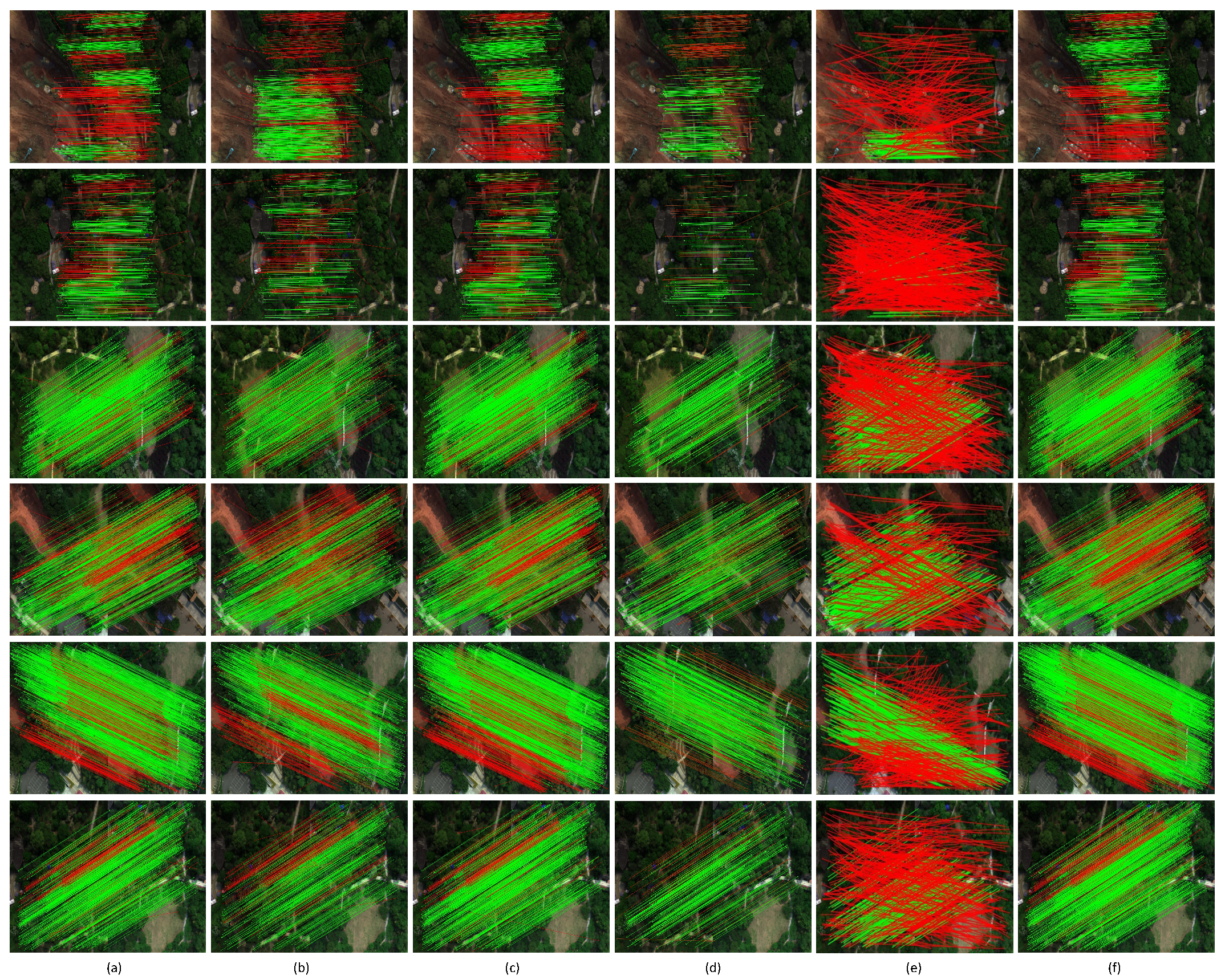

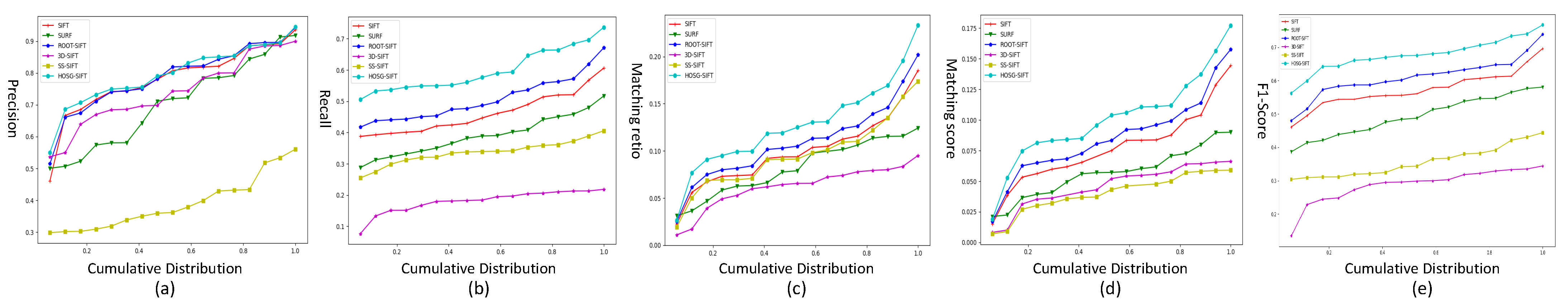

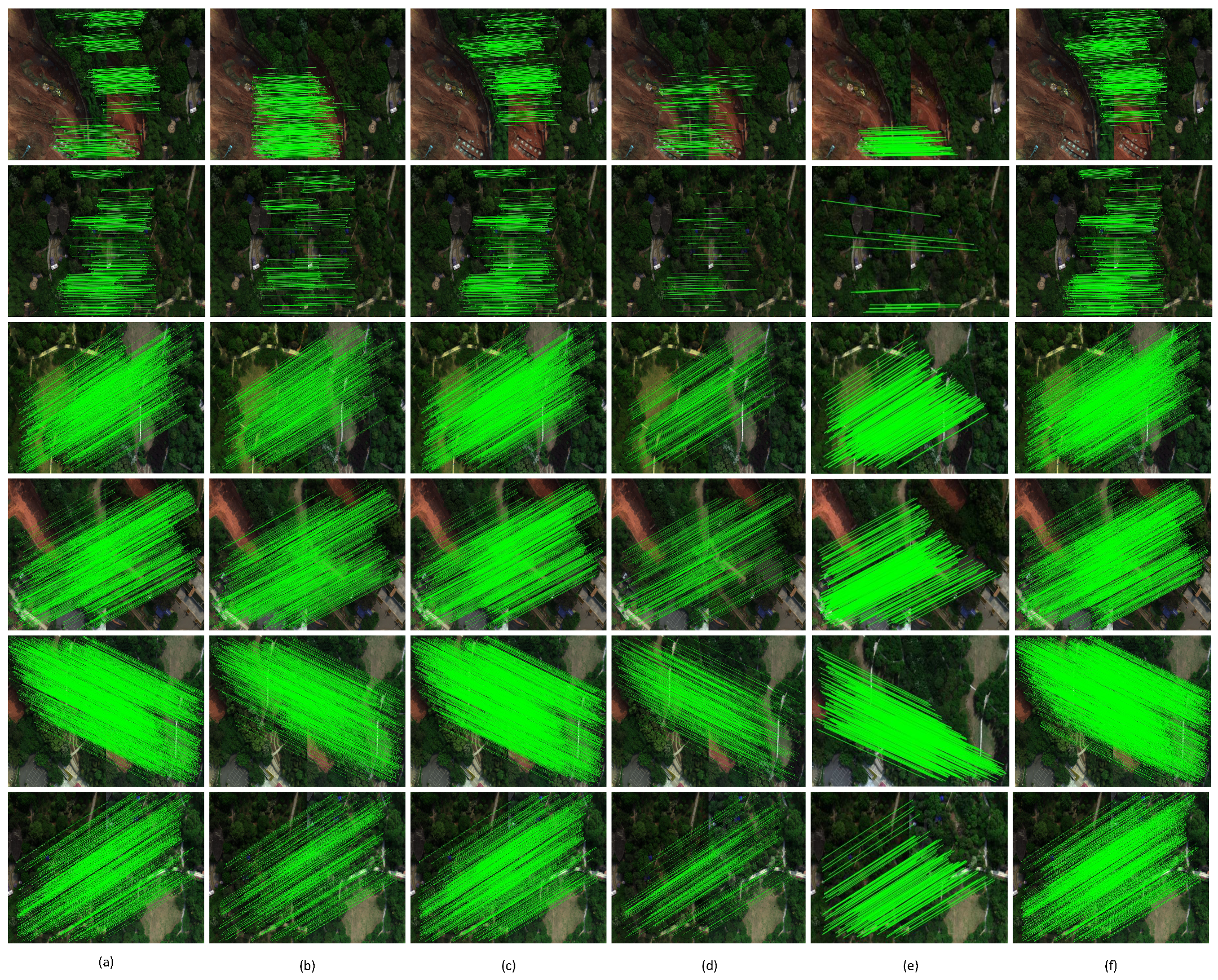

4.3. Matching Results on UAV Dataset

4.3.1. Detected Feature Points of Putative Matching Results

4.3.2. Evaluation Metrics of Putative Matching Results

4.3.3. Matching Results from RANSAC

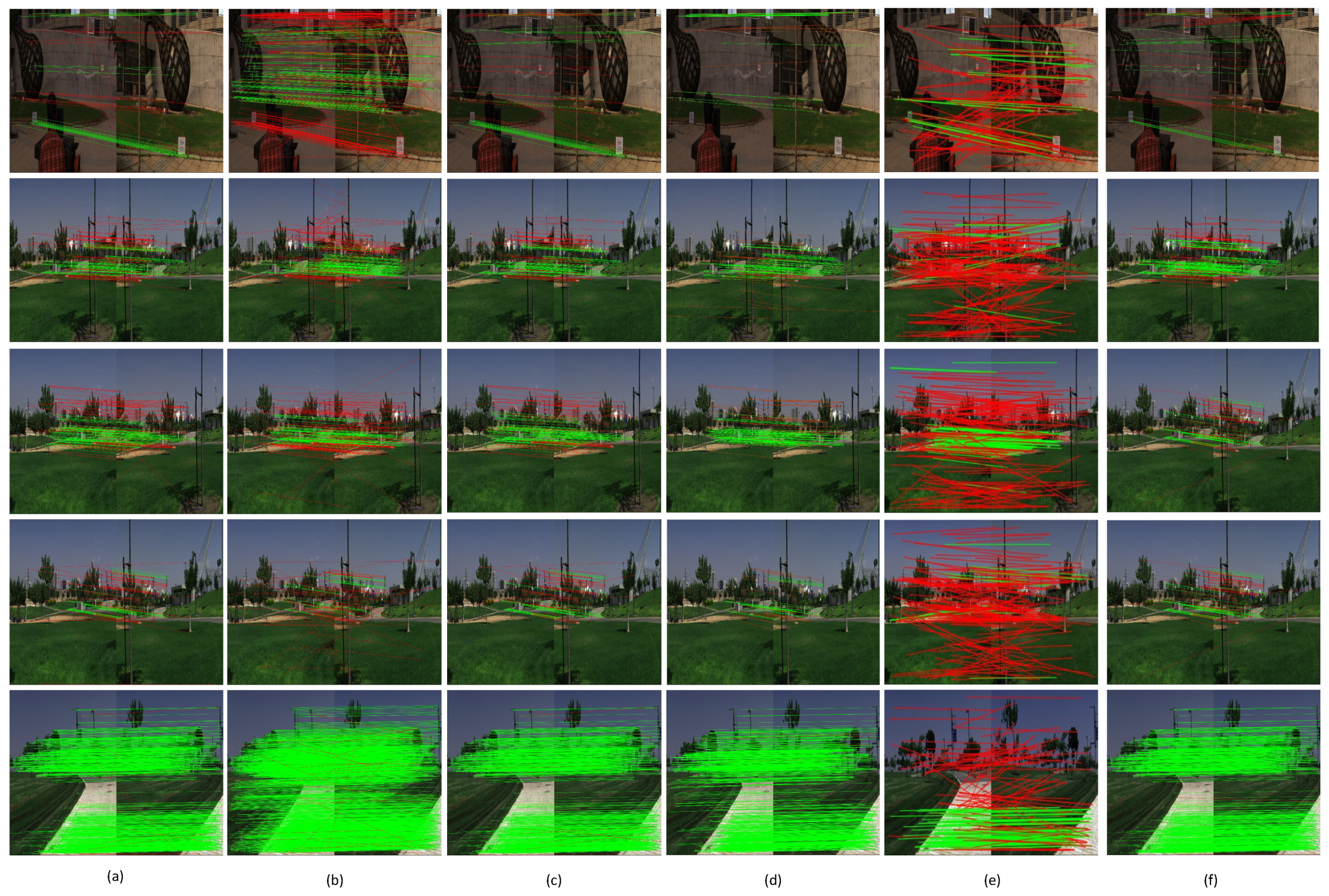

4.4. Matching Results on ICVL Dataset

4.5. Running Time Comparison

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Adão, T.; Hruška, J.; Pádua, L.; Bessa, J.; Peres, E.; Morais, R.; Sousa, J.J. Hyperspectral imaging: A review on UAV-based sensors, data processing and applications for agriculture and forestry. Remote Sens. 2017, 9, 1110.

- Fu, Y.; Yang, G.; Song, X.; Li, Z.; Xu, X.; Feng, H.; Zhao, C. Improved estimation of winter wheat aboveground biomass using multiscale textures extracted from UAV-based digital images and hyperspectral feature analysis. Remote Sens. 2021, 13, 581.

- Guo, A.; Huang, W.; Dong, Y.; Ye, H.; Ma, H.; Liu, B.; Wu, W.; Ren, Y.; Ruan, C.; Geng, Y. Wheat yellow rust detection using UAV-based hyperspectral technology. Remote Sens. 2021, 13, 123.

- Thenkabail, P.S.; Smith, R.B.; De Pauw, E. Hyperspectral vegetation indices and their relationships with agricultural crop characteristics. Remote Sens. Environ. 2000, 71, 158–182.

- Wu, J.L.; Ho, C.R.; Huang, C.C.; Srivastav, A.L.; Tzeng, J.H.; Lin, Y.T. Hyperspectral sensing for turbid water quality monitoring in freshwater rivers: Empirical relationship between reflectance and turbidity and total solids. Sensors 2014, 14, 22670–22688.

- Gao, L.; Yao, D.; Li, Q.; Zhuang, L.; Zhang, B.; Bioucas-Dias, J.M. A new low-rank representation based hyperspectral image denoising method for mineral mapping. Remote Sens. 2017, 9, 1145.

- Feng, Y.Z.; Sun, D.W. Application of hyperspectral imaging in food safety inspection and control: A review. Crit. Rev. Food Sci. Nutr. 2012, 52, 1039–1058.

- Lu, G.; Fei, B. Medical hyperspectral imaging: A review. J. Biomed. Opt. 2014, 19, 010901.

- Schonberger, J.L.; Hardmeier, H.; Sattler, T.; Pollefeys, M. Comparative evaluation of hand-crafted and learned local features. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1482–1491.

- Ma, J.; Ye, X.; Zhou, H.; Mei, X.; Fan, F. Loop-Closure Detection Using Local Relative Orientation Matching. IEEE Trans. Intell. Transp. Syst. 2021, 1–14.

- Li, J.; Wang, Z.; Lai, S.; Zhai, Y.; Zhang, M. Parallax-tolerant image stitching based on robust elastic warping. IEEE Trans. Multimed. 2017, 20, 1672–1687.

- Lee, K.Y.; Sim, J.Y. Warping residual based image stitching for large parallax. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 8198–8206.

- Yang, Y.; Lee, X. Four-band thermal mosaicking: A new method to process infrared thermal imagery of urban landscapes from UAV flights. Remote Sens. 2019, 11, 1365.

- Zhang, H.; Xu, H.; Tian, X.; Jiang, J.; Ma, J. Image fusion meets deep learning: A survey and perspective. Inf. Fusion 2021, 76, 323–336.

- Xu, H.; Ma, J.; Jiang, J.; Guo, X.; Ling, H. U2Fusion: A unified unsupervised image fusion network. IEEE Trans. Pattern Anal. Mach. Intell. 2020.

- Nesbit, P.R.; Hugenholtz, C.H. Enhancing UAV–SFM 3D model accuracy in high-relief landscapes by incorporating oblique images. Remote Sens. 2019, 11, 239.

- Jiang, S.; Jiang, C.; Jiang, W. Efficient structure from motion for large-scale UAV images: A review and a comparison of SfM tools. ISPRS J. Photogramm. Remote Sens. 2020, 167, 230–251.

- Meinen, B.U.; Robinson, D.T. Mapping erosion and deposition in an agricultural landscape: Optimization of UAV image acquisition schemes for SfM-MVS. Remote Sens. Environ. 2020, 239, 111666.

- Sattler, T.; Leibe, B.; Kobbelt, L. Fast image-based localization using direct 2D-to-3D matching. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 667–674.

- Sattler, T.; Leibe, B.; Kobbelt, L. Efficient & effective prioritized matching for large-scale image-based localization. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1744–1756.

- Zia, A.; Zhou, J.; Gao, Y. Exploring Chromatic Aberration and Defocus Blur for Relative Depth Estimation From Monocular Hyperspectral Image. IEEE Trans. Image Process. 2021, 30, 4357–4370.

- Luo, B.; Chanussot, J. Hyperspectral image classification based on spectral and geometrical features. In Proceedings of the 2009 IEEE International Workshop on Machine Learning for Signal Processing, Grenoble, France, 1–4 September 2009; pp. 1–6.

- Lu, B.; Dao, P.D.; Liu, J.; He, Y.; Shang, J. Recent advances of hyperspectral imaging technology and applications in agriculture. Remote Sens. 2020, 12, 2659.

- Allaire, S.; Kim, J.J.; Breen, S.L.; Jaffray, D.A.; Pekar, V. Full orientation invariance and improved feature selectivity of 3D SIFT with application to medical image analysis. In Proceedings of the 2008 IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops, Anchorage, AK, USA, 23–28 June 2008; pp. 1–8.

- Tsai, F.; Lai, J.S. Feature extraction of hyperspectral image cubes using three-dimensional gray-level cooccurrence. IEEE Trans. Geosci. Remote Sens. 2013, 51, 3504–3513.

- Tang, Y.Y.; Lu, Y.; Yuan, H. Hyperspectral image classification based on three-dimensional scattering wavelet transform. IEEE Trans. Geosci. Remote Sens. 2014, 53, 2467–2480.

- Everts, I.; Van Gemert, J.C.; Gevers, T. Evaluation of color spatio-temporal interest points for human action recognition. IEEE Trans. Image Process. 2014, 23, 1569–1580.

- Al-Khafaji, S.L.; Zhou, J.; Zia, A.; Liew, A.W.C. Spectral-spatial scale invariant feature transform for hyperspectral images. IEEE Trans. Image Process. 2017, 27, 837–850.

- Ma, J.; Jiang, X.; Fan, A.; Jiang, J.; Yan, J. Image matching from handcrafted to deep features: A survey. Int. J. Comput. Vis. 2021, 129, 23–79.

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Bay, H.; Ess, A.; Tuytelaars, T.; Van Gool, L. Speeded-up robust features (SURF). Comput. Vis. Image Underst. 2008, 110, 346–359.

- Arandjelović, R.; Zisserman, A. Three things everyone should know to improve object retrieval. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 2911–2918.

- Ke, Y.; Sukthankar, R. PCA-SIFT: A more distinctive representation for local image descriptors. In Proceedings of the 2004 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Washington, DC, USA, 27 June–2 July 2004; Volume 2, p. II-II.

- Dong, J.; Soatto, S. Domain-size pooling in local descriptors: DSP-SIFT. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 5097–5106.

- Yi, K.M.; Trulls, E.; Lepetit, V.; Fua, P. LIFT: Learned invariant feature transform. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2016; pp. 467–483.

- Barroso-Laguna, A.; Riba, E.; Ponsa, D.; Mikolajczyk, K. Key.Net: Keypoint detection by handcrafted and learned CNN filters. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Long Beach, CA, USA, 16–17 June 2019; pp. 5836–5844.

- DeTone, D.; Malisiewicz, T.; Rabinovich, A. SuperPoint: Self-supervised interest point detection and description. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 224–236.

- Mishchuk, A.; Mishkin, D.; Radenovic, F.; Matas, J. Working hard to know your neighbor’s margins: Local descriptor learning loss. arXiv 2017, arXiv:1705.10872.

- Jiang, X.; Ma, J.; Xiao, G.; Shao, Z.; Guo, X. A review of multimodal image matching: Methods and applications. Inf. Fusion 2021, 73, 22–71.

- Cheung, W.; Hamarneh, G. n-SIFT: n-Dimensional Scale Invariant Feature Transform. IEEE Trans. Image Process. 2009, 18, 2012–2021.

- Scovanner, P.; Ali, S.; Shah, M. A 3-dimensional SIFT descriptor and its application to action recognition. In Proceedings of the 15th ACM International Conference on Multimedia, New York, NY, USA, 24–29 September 2007; pp. 357–360.

- Rister, B.; Horowitz, M.A.; Rubin, D.L. Volumetric image registration from invariant keypoints. IEEE Trans. Image Process. 2017, 26, 4900–4910.

- Dorado-Munoz, L.P.; Velez-Reyes, M.; Mukherjee, A.; Roysam, B. A vector SIFT detector for interest point detection in hyperspectral imagery. IEEE Trans. Geosci. Remote Sens. 2012, 50, 4521–4533.

- Angelopoulou, E.; Lee, S.W.; Bajcsy, R. Spectral gradient: A material descriptor invariant to geometry and incident illumination. In Proceedings of the Seventh IEEE International Conference on Computer Vision, Corfu, Greece, 20–27 September 1999; Volume 2, pp. 861–867.

- Wold, S.; Esbensen, K.; Geladi, P. Principal component analysis. Chemom. Intell. Lab. Syst. 1987, 2, 37–52.

- Arad, B.; Ben-Shahar, O. Sparse Recovery of Hyperspectral Signal from Natural RGB Images. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2016; pp. 19–34.

- Jin, Y.; Mishkin, D.; Mishchuk, A.; Matas, J.; Fua, P.; Yi, K.M.; Trulls, E. Image matching across wide baselines: From paper to practice. Int. J. Comput. Vis. 2021, 129, 517–547.

- Zhang, Y.; Wang, J.; Xu, S.; Liu, X.; Zhang, X. MLIFeat: Multi-level information fusion based deep local features. In Proceedings of the Asian Conference on Computer Vision, Kyoto, Japan, 30 November–4 December 2020.

- Fischler, M.A.; Bolles, R.C. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 1981, 24, 381–395.

- Ma, J.; Zhao, J.; Jiang, J.; Zhou, H.; Guo, X. Locality preserving matching. Int. J. Comput. Vis. 2019, 127, 512–531.

- Ma, J.; Jiang, X.; Jiang, J.; Zhao, J.; Guo, X. LMR: Learning a two-class classifier for mismatch removal. IEEE Trans. Image Process. 2019, 28, 4045–4059.

| Source Image | Figure 2a | Figure 2b | Figure 2c | Figure 2d |
|---|---|---|---|---|
| Number of detected points | 582 | 728 | 345 | 5455 |

| Item | Specification |
|---|---|
| Operating System | Windows 10 |
| CPU | AMD Core R5-4600U @ 2.1 GHz |
| Language | Python 3.6 |
| RAM | 16 GB |

| Method | Number of Feature Points | Number of Matches | Number of Inliers | Number of Outliers | Ratio of Inliers (%) |
|---|---|---|---|---|---|
| SIFT | 7162 | 710 | 553 | 157 | 77.99 |
| SURF | 7844 | 644 | 452 | 192 | 70.32 |
| ROOT-SIFT | 7162 | 780 | 614 | 166 | 78.61 |
| 3D-SIFT | 5908 | 364 | 269 | 95 | 73.98 |
| SS-SIFT | 11,673 | 1107 | 431 | 676 | 38.97 |
| HOSG-SIFT | 7162 | 915 | 727 | 188 | 79.54 |

| Method | Precision | Recall | Matching Ratio | Matching Score | F1-Score |
|---|---|---|---|---|---|
| SIFT | 77.99 | 46.19 | 9.92 | 7.74 | 57.37 |
| SURF | 70.32 | 38.98 | 8.21 | 5.66 | 49.39 |
| ROOT-SIFT | 78.61 | 50.73 | 10.90 | 8.59 | 61.10 |
| 3D-SIFT | 73.98 | 18.01 | 6.17 | 4.59 | 28.77 |
| SS-SIFT | 38.97 | 33.61 | 9.48 | 3.69 | 35.71 |
| HOSG-SIFT | 79.54 | 59.87 | 12.77 | 10.10 | 67.77 |

| Method | Precision | Recall | Matching Ratio | Matching Score | F1-Score |
|---|---|---|---|---|---|
| SIFT | 45.98 | 56.71 | 9.15 | 6.23 | 50.35 |
| SURF | 41.50 | 78.93 | 14.33 | 9.55 | 53.95 |
| ROOT-SIFT | 47.12 | 57.66 | 10.11 | 6.99 | 51.30 |
| 3D-SIFT | 43.78 | 45.33 | 5.18 | 3.29 | 44.19 |
| SS-SIFT | 23.97 | 37.45 | 4.41 | 2.17 | 29.32 |
| HOSG-SIFT | 49.08 | 60.68 | 11.52 | 7.87 | 53.72 |

| Method | UAV | ICVL |
|---|---|---|
| SIFT | 8.7 | 1.1 |
| SURF | 4.1 | 1.7 |
| ROOT-SIFT | 9.2 | 1.6 |
| 3D-SIFT | 1917.5 | 88.2 |
| SS-SIFT | 2354.9 | 105.2 |
| HOSG-SIFT | 305.8 | 17.9 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yu, Y.; Ma, Y.; Mei, X.; Fan, F.; Huang, J.; Ma, J. A Spatial-Spectral Feature Descriptor for Hyperspectral Image Matching. Remote Sens. 2021, 13, 4912. https://doi.org/10.3390/rs13234912
