A scSE-LinkNet Deep Learning Model for Daytime Sea Fog Detection

Abstract

1. Introduction

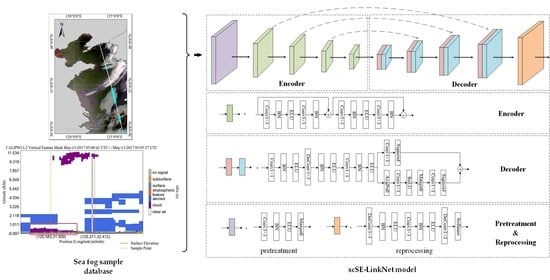

2. Materials

2.1. Study Area

2.2. Datasets

2.2.1. Aqua/MODIS Data

2.2.2. CALIPSO/CALIOP Data

2.2.3. Fog Monitoring Report

2.2.4. ICOADS Data

2.2.5. Meteorological Station Data

3. Method

3.1. LinkNet Backbone

3.2. SENet Backbone

3.3. scSE-LinkNet Backbone

4. Experiment

4.1. Data Processing

4.2. Experimental Settings

4.3. Experimental Results

4.3.1. Performance Comparison of CNN Models

4.3.2. Validation with Measured Data

4.3.3. Validation with CALIOP VFM Products

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Station Name | Station Number | Latitude and Longitude |
|---|---|---|
| Dandong | 54497 | (40.03°N, 124.33°E) |
| Dalian | 54662 | (38.91°N, 121.64°E) |
| Weihai | 54776 | (37.40°N, 122.70°E) |
| Yantai | 54863 | (36.78°N, 121.18°E) |
| Qingdao | 54857 | (36.07°N, 120.33°E) |
| Rizhao | 54945 | (35.47°N, 119.56°E) |
| Tanggu | 54623 | (39.05°N, 117.72°E) |

| Platform | Version | CPU | GPU |
|---|---|---|---|
| Windows 10 | Python 3.7, PyTorch 1.2.0 | AMD Ryzen 5 3600 CPU (3.80 GHz) | NVIDIA 2060 SUPER GPU (8 GB RAM) |

| CNN Models | POD | FAR | CSI | HSS |
|---|---|---|---|---|
| FCN | 0.909 | 0.197 | 0.743 | 0.819 |
| U-Net | 0.880 | 0.202 | 0.719 | 0.799 |
| LinkNet | 0.916 | 0.171 | 0.771 | 0.841 |
| scSE-LinkNet | 0.924 | 0.143 | 0.800 | 0.864 |
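The four scores in the table above are commonly derived from a 2×2 contingency table of fog and non-fog pixels. The sketch below assumes the standard forecast-verification definitions (probability of detection, false alarm ratio, critical success index, Heidke skill score); this page does not spell out the formulas, so treat them as an assumption rather than the authors' exact procedure. The function names and the pixel counts in the usage lines are hypothetical and chosen only for illustration.

```python
def fog_scores(hits, misses, false_alarms, correct_negatives):
    """Compute POD, FAR, CSI and HSS from pixel counts.

    Assumes the standard 2x2 contingency-table definitions and
    non-zero denominators (at least one fog pixel observed and detected).
    """
    h, m, f, c = (float(x) for x in (hits, misses, false_alarms, correct_negatives))
    pod = h / (h + m)        # fraction of observed fog that was detected
    far = f / (h + f)        # fraction of detected fog that was not fog
    csi = h / (h + m + f)    # hits relative to all fog-related pixels
    # Heidke skill score: accuracy relative to random chance
    hss = 2.0 * (h * c - m * f) / ((h + m) * (m + c) + (h + f) * (f + c))
    return {"POD": pod, "FAR": far, "CSI": csi, "HSS": hss}


def csi_from_pod_far(pod, far):
    """Recover CSI from POD and FAR via 1/CSI = 1/POD + 1/(1 - FAR) - 1."""
    return 1.0 / (1.0 / pod + 1.0 / (1.0 - far) - 1.0)


if __name__ == "__main__":
    # Hypothetical pixel counts, not taken from the paper.
    scores = fog_scores(hits=80, misses=20, false_alarms=10, correct_negatives=890)
    for name, value in scores.items():
        print(f"{name}: {value:.3f}")
    # Consistency check against the scSE-LinkNet row (POD 0.924, FAR 0.143).
    print(f"CSI implied by table row: {csi_from_pod_far(0.924, 0.143):.3f}")
```

Under these definitions, CSI is fully determined by POD and FAR, so the second function offers a quick way to check whether a reported row is internally consistent. HSS additionally depends on the number of correct negatives, so it cannot be recovered from the other three columns alone.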

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Guo, X.; Wan, J.; Liu, S.; Xu, M.; Sheng, H.; Yasir, M. A scSE-LinkNet Deep Learning Model for Daytime Sea Fog Detection. Remote Sens. 2021, 13, 5163. https://doi.org/10.3390/rs13245163
