A Photogrammetry-Based Workflow for the Accurate 3D Construction and Visualization of Museums Assets

Abstract

1. Introduction

- the user-friendly 3D visualization interface previously developed [20] for many categories of end users, giving them access to all the features of the whole communication system;

- the deployment on different output hardware, ranging from high-definition touch displays to more immersive devices such as VR headsets;

- a flexible acquisition environment based, besides the described equipment, on standard acquisition conditions and on open-source 3D photogrammetric software that is also available as a one-click application aimed at non-expert users;

- a visualization solution based on the Unity 3D rendering engine, which supports real-time rendering (RTR) with Physically Based Rendering (PBR) through its High Definition Render Pipeline (HDRP), together with templates that operators of different skill levels can use to achieve both fast and accurate visualization results;

- a workflow including both purpose-developed software solutions (to achieve accurate color reproduction, to enhance the photogrammetric processing and to optimize the geometric processing so as to generate 3D models with high visual quality in RTR) and automated routines that solve the typical problems of this class of models;

- the image pre-processing, which minimizes the problems that images acquired with smartphone cameras cause in the photogrammetric pipeline and generates color-corrected 3D models;

- the extraction of lightweight 3D models that can be visualized at high fidelity on multiple consumer devices.

2. Background

2.1. Smartphone Cameras

2.2. General and Photogrammetric Workflow

2.3. 3D Artefact Classification

- Master Model: it supplies the highest quality replica of the original object in terms of spatial and color information contents and it is intended for professional uses;

- Derived Models: derived from a ‘Master Model’, they are intended for museum visitors or for web applications for inventory purposes. These models require a lower resolution and present different features, especially concerning their polygonal components. Polygons can be triangular or quadrangular (or both) and can have adaptive or isotropic resolution. Depending on their final use, they are called mid-poly (intermediate resolution) or low-poly (drastically reduced resolution).

2.4. Authoring Environment for Interactive RTR Visualization

- the effect of subsurface scattering is modeled by extending the BRDF to the BSDF (Bidirectional Scattering Distribution Function), a quantity given by the sum of the BRDF and the Bidirectional Transmittance Distribution Function (BTDF). The latter describes how light passes through a (semi)transparent surface;

- the interactions between light and materials are treated as scale-dependent phenomena, to better fit the features and behavior of the rendering engine.

- macrostructure: the shape and the geometry of the object;

- mesostructure: all those elements still visible with the naked eye but not responsible for the global shape definition of the model (e.g., small bumps that cause interreflections and self-shadowing);

- microstructure: the microscopic structure, not visible to the human eye, that contributes to the final appearance of the object by occluding or deflecting light and producing shadows and highlights.

2.5. Image Pre-Processing

2.5.1. Image Denoise

- perceptually flat regions should be as smooth as possible, and noise should be completely removed from these regions;

- image boundaries should be well preserved and not blurred;

- texture detail should not be lost;

- the global contrast should be preserved (i.e., the low frequencies of denoised and input images should be equal);

- no artefacts should appear in the denoised image;

- original color needs to be preserved.

- Noise measurement;

- Denoising driven by the previous measurements.
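The two-step scheme above (measure the noise, then denoise accordingly) needs a noise estimate to start from. As a minimal sketch, assuming Immerkær's fast noise-variance estimator rather than the specific measurement adopted in this work, the function below estimates the standard deviation of additive Gaussian noise on a single-channel image; `estimate_noise_sigma` is a hypothetical name.

```python
import math

import numpy as np


def estimate_noise_sigma(img: np.ndarray) -> float:
    """Estimate the std of additive Gaussian noise in a 2D image (Immerkaer's method)."""
    img = np.asarray(img, dtype=np.float64)
    h, w = img.shape
    if h < 3 or w < 3:
        raise ValueError("image must be at least 3x3 pixels")
    # Difference-of-Laplacians mask: its response is zero on constant and linear
    # intensity patches, so what remains is dominated by noise.
    mask = np.array([[1, -2, 1], [-2, 4, -2], [1, -2, 1]], dtype=np.float64)
    resp = np.zeros((h - 2, w - 2))
    for dy in range(3):  # 'valid' 3x3 filtering written with array slicing
        for dx in range(3):
            resp += mask[dy, dx] * img[dy:dy + h - 2, dx:dx + w - 2]
    # For Gaussian noise the response has std 6*sigma, and E|X| = std * sqrt(2/pi).
    return math.sqrt(math.pi / 2.0) * np.abs(resp).sum() / (6.0 * (h - 2) * (w - 2))
```

Per-channel estimates obtained this way could then set the strength of a denoiser such as BM3D, which takes the noise level as an input parameter.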

2.5.2. Color Correction

2.6. Mesh Processing

- photometric stereo techniques [70];

- 2D image processing, applying filtering techniques, such as a Sobel filter, to a heightmap.
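The heightmap route can be sketched as follows, assuming a single-channel heightmap in pixel units and a tangent-space normal map whose +Y axis follows the image rows downward; tools differ on the sign of the green channel, so that convention is an assumption. The function names are hypothetical.

```python
import numpy as np


def heightmap_to_normals(height: np.ndarray, strength: float = 1.0) -> np.ndarray:
    """Unit normals (H, W, 3) from a heightmap using 3x3 Sobel gradients."""
    h = np.asarray(height, dtype=np.float64)
    p = np.pad(h, 1, mode="edge")  # replicate borders so the output keeps the input size
    # Sobel derivatives, divided by 8 so that a unit ramp gives a slope of 1.
    gx = ((p[:-2, 2:] + 2 * p[1:-1, 2:] + p[2:, 2:])
          - (p[:-2, :-2] + 2 * p[1:-1, :-2] + p[2:, :-2])) / 8.0
    gy = ((p[2:, :-2] + 2 * p[2:, 1:-1] + p[2:, 2:])
          - (p[:-2, :-2] + 2 * p[:-2, 1:-1] + p[:-2, 2:])) / 8.0
    # The surface z = height(x, y) has a normal proportional to (-dz/dx, -dz/dy, 1).
    n = np.stack([-strength * gx, -strength * gy, np.ones_like(h)], axis=-1)
    return n / np.linalg.norm(n, axis=-1, keepdims=True)


def encode_rgb8(normals: np.ndarray) -> np.ndarray:
    """Map components from [-1, 1] to 8-bit RGB, as stored in a normal map texture."""
    return np.round((normals * 0.5 + 0.5) * 255).astype(np.uint8)
```

The `strength` factor exaggerates or flattens the relief; a flat heightmap always encodes to the familiar (128, 128, 255) color.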

3. Used Devices and Workflow Overview

3.1. Devices

- A smartphone with a mid-range camera system (i.e., the camera of a mid-range phone, which, as the name suggests, offers moderate specifications, quality and price). The three-year-old Apple iPhone X was chosen for its camera features (image resolution, sharpness, color accuracy) and for capabilities very close to those of a prosumer SLR camera. It features a dual 12 MP (wide + telephoto) camera setup. The wide-angle sensor sits behind a 4 mm f/1.8 lens, and the telephoto sensor behind a 6 mm f/2.4 lens. The iPhone X is equipped with Sony Exmor RS sensors that use deep trench isolation technology to prevent light leakage between neighboring pixels; the wide-angle camera has a 1.22 µm pixel pitch and the telephoto camera a 1.0 µm pitch. The iPhone X applies image processing and noise reduction algorithms. Optical image stabilization is available for both the wide-angle and the telephoto cameras, and it can be disabled using pro capture software such as Adobe Lightroom CC (Adobe Inc., San Jose, CA, USA).

- An illuminator kit providing controlled, high-quality light for uniform, diffuse illumination. The illuminant was supplied by a series of Relio2 illumination devices [71], each a very small lamp (35 × 35 × 35 mm, 80 g) emitting continuous-spectrum light at a Correlated Color Temperature (CCT) of 4000 K (a neutral white with high color rendering), with a brightness of 40,000 lux at 0.25 m and a Color Rendering Index (CRI) > 95, ensuring high color reliability across all wavelengths. It avoids the excessive emission of heat and of harmful UV and IR radiation, which could damage the most fragile artefacts hosted in museums.

- A color target ensuring correct color reproduction. We selected the smartphone-sized CCP to guarantee full compatibility with existing data of the ColorChecker Classic (X-Rite Inc., Grand Rapids, MI, USA); its dimensions are appropriate for most of the objects collected in a small-to-medium museum.

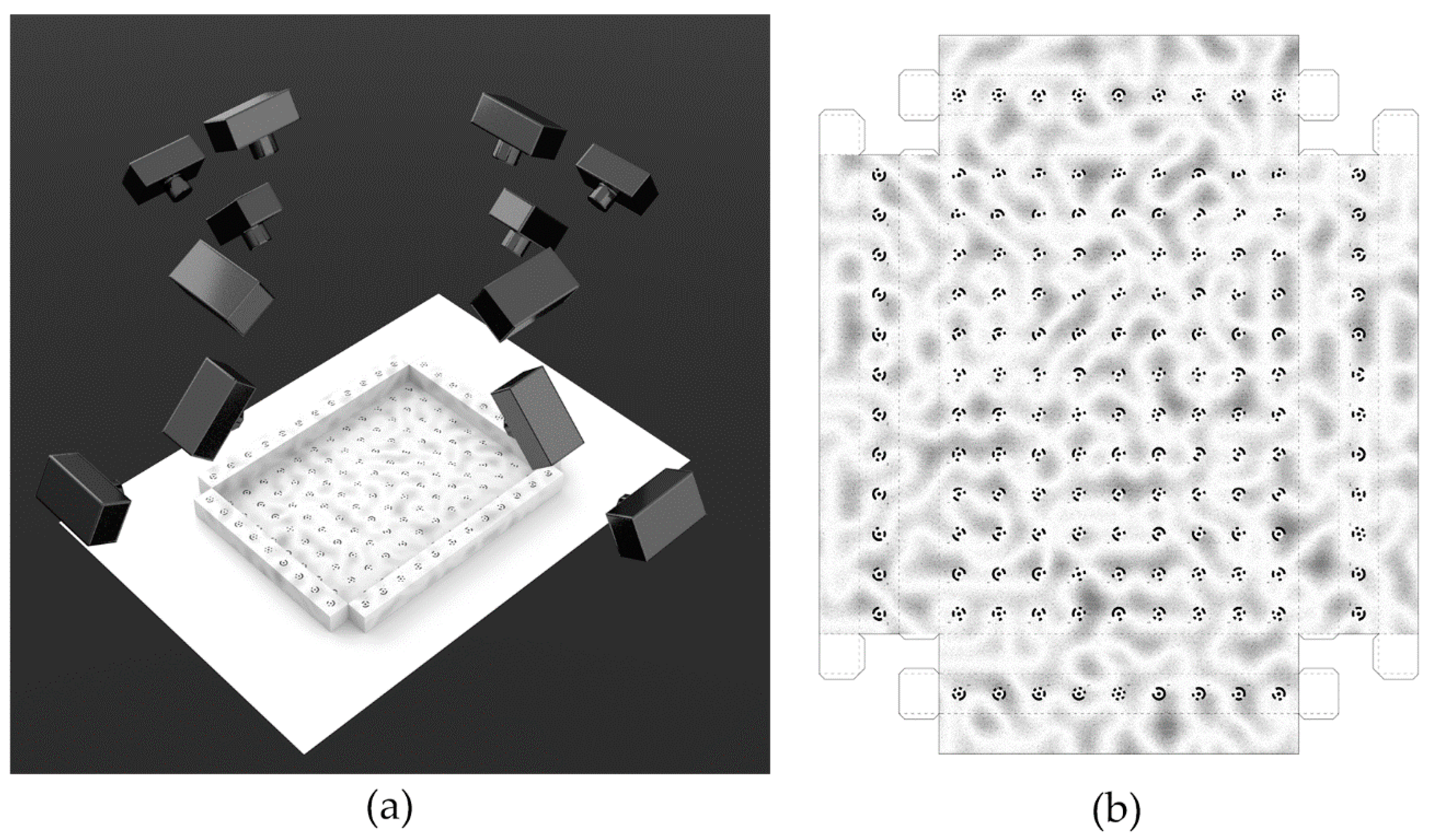

- A rotating support with a set of Ringed Automatically Detected (RAD) coded targets. The targets are printed both on the circular flat surface hosting the artefact to be digitized and on six regularly arranged cubes, which help the alignment and scaling of photogrammetric models. These cubes are firmly connected to the rotating table by metal rods, placed radially along the thickness of the circular plate, and rotate with it. We avoided solutions with stepper motors and controllers driving the table (as, for example, in [15]), since they are complex to manage and use and difficult for CH operators to build; we preferred to prepare guidelines for correct use instead. To address the mismatch between the limited but accurate number of tie points extracted on the rotating table (i.e., tie points highly constrained by the RAD targets) and the larger but less accurate number of points extracted on the object, a procedural texture was applied to the surface of the rotating table. Its purpose is to fill the large blank areas between the targets, which are poor in homologous points, and to give the frame alignment process a more balanced condition. The workflow we developed also addresses some typical issues arising from generic camera settings, such as adjusting the camera-object distance to obtain a correct depth of field. Although the process can easily be applied by users with average photographic skills, the usual macro problems appear when photographing close objects, mainly because of the adverse ratio between distance and focal length. To keep the targets on the calibration plate in focus across the whole image frame, either the aperture was decreased to obtain an acceptable depth of field, or the required depth of field was reduced by raising the camera elevation [72].
We addressed this problem in the guidelines, where solutions and tricks are explained using our case as an example. The iPhone X camera was used at an inclination of approximately 40° and an object-to-camera distance of 620 mm. The aperture was f/1.8, the focal length 4 mm and the sensor size 4.92 mm. At an object-to-camera distance of 1100 mm, assuming a circle of confusion of 3 µm and applying the calculations detailed in [73], the depth of field is 930 mm. Referring to Figure 4, the width of the sheet with the RAD targets is 348 mm, which, viewed at a 40° angle, reduces to a depth of d1 = 348 cos(40°) = 266 mm. This suggests that the depth of field is adequate for this application, especially given that the f/22 aperture would be expected to introduce diffraction blurring of approximately 10 µm.

- A 3D test-field formed by 150 RAD coded targets with 12-bit codes, which can easily be printed with a 600-dpi b/w printer on rigid paper sheets, then cut out and glued (Figure 5). The coordinates of targets 1–108 were known, while those of the targets on the edge of the frame were not given, because of the difficulty of determining the errors introduced by the paper thickness and by construction problems. This shape supplies the photogrammetric application used to determine the internal orientation with a 3D point array, since the shape of our objects is generally far from flat.
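The depth-of-field figures given for the rotating-table setup can be checked with the standard thin-lens hyperfocal formulation. This is a sketch under that assumption; it is not necessarily the exact procedure of [73], and the function names are hypothetical.

```python
import math


def depth_of_field(f_mm: float, n_stop: float, coc_mm: float, s_mm: float):
    """Thin-lens depth of field. Returns (near, far, total) limits in millimetres."""
    hyperfocal = f_mm ** 2 / (n_stop * coc_mm) + f_mm
    near = s_mm * (hyperfocal - f_mm) / (hyperfocal + s_mm - 2 * f_mm)
    if s_mm >= hyperfocal:  # everything up to infinity is acceptably sharp
        return near, math.inf, math.inf
    far = s_mm * (hyperfocal - f_mm) / (hyperfocal - s_mm)
    return near, far, far - near


def tilted_target_depth(width_mm: float, angle_deg: float) -> float:
    """Depth spanned by a flat target, computed as in the text (width * cos(angle))."""
    return width_mm * math.cos(math.radians(angle_deg))


# Values quoted in the text: 4 mm lens, f/1.8, 3 um circle of confusion.
for distance in (620.0, 1100.0):
    near, far, dof = depth_of_field(4.0, 1.8, 0.003, distance)
    print(f"s = {distance:.0f} mm: near {near:.0f} mm, far {far:.0f} mm, DoF {dof:.0f} mm")
print(f"required depth: {tilted_target_depth(348.0, 40.0):.0f} mm")
```

With this formulation the 1100 mm case gives roughly 940 mm, close to the 930 mm quoted above (the small gap presumably reflects the exact formulation in [73]), while the 620 mm working distance gives roughly 270 mm, just above the 266 mm spanned by the tilted target sheet.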

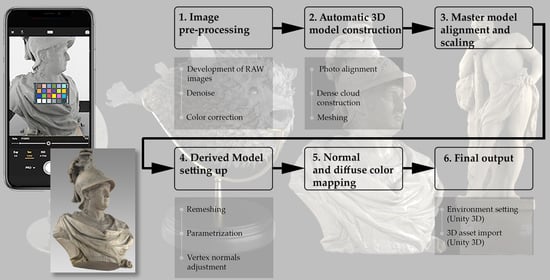

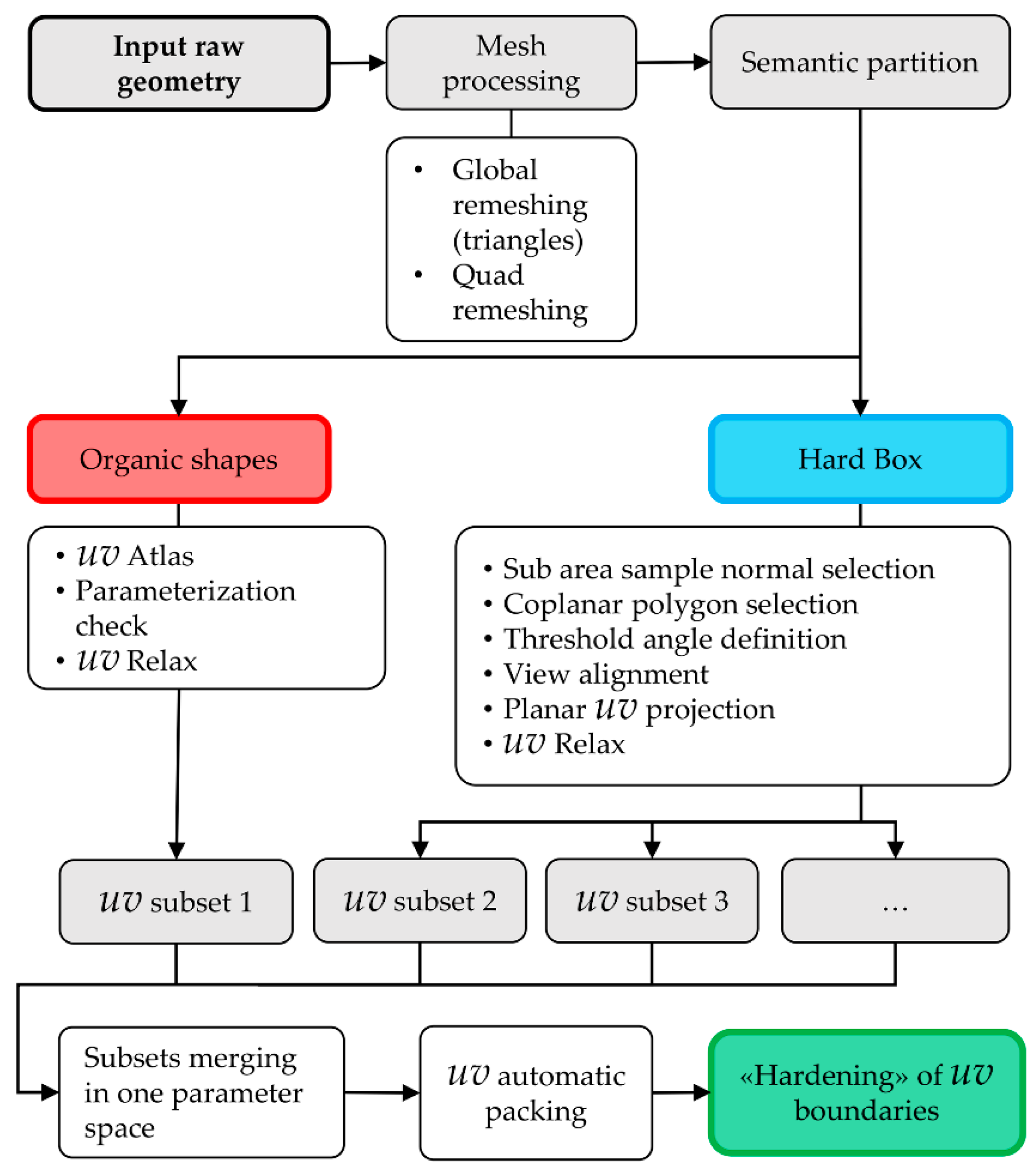

3.2. Workflow Overview

- To place a CCP target in the scene;

- To take photographs following a predefined camera network geometry;

- To run scripts that automatically launch the different phases of the photogrammetric reconstruction, with only one extra step to scale the 3D model (specifying measurements);

- To import the model into the application template designed in the Unity 3D environment.
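The scripts themselves are not listed here. As a hedged illustration only, assuming a stock COLMAP command-line installation (not the customized COLMAP build or the SURE dense matcher used in this work), a driver chaining the sparse-reconstruction phases could look like this; the folder layout and function names are hypothetical.

```python
import subprocess
from pathlib import Path


def colmap_sparse_commands(images: Path, workspace: Path) -> list:
    """Build stock COLMAP CLI calls for feature extraction, matching and mapping."""
    database = workspace / "database.db"
    sparse = workspace / "sparse"
    return [
        ["colmap", "feature_extractor",
         "--database_path", str(database), "--image_path", str(images),
         "--ImageReader.camera_model", "OPENCV",  # camera model named in Section 6.3
         "--ImageReader.single_camera", "1"],     # one shared set of intrinsics
        ["colmap", "exhaustive_matcher", "--database_path", str(database)],
        ["colmap", "mapper", "--database_path", str(database),
         "--image_path", str(images), "--output_path", str(sparse)],
    ]


def run_sparse_pipeline(images, workspace, dry_run: bool = True) -> list:
    """Run the command sequence, or with dry_run=True only return it."""
    commands = colmap_sparse_commands(Path(images), Path(workspace))
    if not dry_run:
        (Path(workspace) / "sparse").mkdir(parents=True, exist_ok=True)  # mapper output dir
        for cmd in commands:
            subprocess.run(cmd, check=True)  # stop at the first failing phase
    return commands


for cmd in run_sparse_pipeline("photos", "project"):
    print(" ".join(cmd))
```

Exhaustive matching is the simplest choice for small object datasets; COLMAP also provides `sequential_matcher` and `vocab_tree_matcher` for larger ones.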

- Image pre-processing: development of RAW images, denoising and color correction;

- Automatic 3D model construction: photo alignment, dense cloud construction, meshing;

- Master model alignment and scaling;

- Derived Model set-up (remeshing, parameterization, vertex normals adjustment);

- Normal and diffuse color mapping;

- Setting the environment with Unity 3D and importing the 3D model.

- Automated image pre-processing as in [74], aimed at enhancing photograph quality (radiometrically calibrated images) to obtain better results in the subsequent image orientation and dense image matching;

- Outlier filtering (removal of incorrect matches), exploiting the Random Sample Consensus (RANSAC) algorithm [77] and a robust estimation of the acquisition geometry through linear models that impose geometric constraints;

- Estimation of the parameters of the internal and external orientation. The approximate intrinsic parameters used in the essential matrix computation are obtained from the image EXIF tags. We then apply the Bundle Adjustment (BA) [78] in the variant implemented in the software used, a customized version of the open-source COLMAP [35,79];

- Dense point cloud reconstruction, exploiting semi-global matching algorithms;

- Dense point cloud interpolation, mesh reconstruction, mesh texture mapping and 3D model scaling. This and the previous phase are based on a fully automated version of n-frames SURE, a state-of-the-art commercial software designed to generate 3D spatial data [80] based on Semi-Global Matching [81], which can be deployed as a web service. An open-source solution was also tested, with minimal loss of quality. To scale the 3D model, the user only has to place markers, in two different photos, on the four cross marks at the corners of the CCP target. The distances between the crosses are known, are constant across different targets and were accurately measured in the laboratory on many samples. When the rotating table is used, another solution is available: a simple XML file with the RAD coded target coordinates is imported into the reconstructed scene file.
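Marker-based scaling reduces to estimating a single scale factor from known distances. A minimal least-squares sketch follows; the cross coordinates and the 100 mm spacing in the example are placeholders, not the laboratory-measured CCP values, and `scale_from_markers` is a hypothetical helper.

```python
import numpy as np


def scale_from_markers(model_pts, known_dist):
    """Least-squares scale factor from reconstructed marker positions.

    model_pts  : (k, 3) marker coordinates in arbitrary model units.
    known_dist : {(i, j): distance_mm} for calibrated marker pairs.
    Returns (s, residuals_mm), where s minimises sum((s * d_model - d_known) ** 2).
    """
    pts = np.asarray(model_pts, dtype=np.float64)
    pairs = list(known_dist)
    d_model = np.array([np.linalg.norm(pts[i] - pts[j]) for i, j in pairs])
    d_known = np.array([known_dist[p] for p in pairs], dtype=np.float64)
    s = float(d_model @ d_known / (d_model @ d_model))
    return s, s * d_model - d_known


# Placeholder example: four crosses on a square, reconstructed with side 2.0 units.
crosses = [[0, 0, 0], [2, 0, 0], [2, 2, 0], [0, 2, 0]]
side = 100.0  # hypothetical calibrated spacing in mm
known = {(0, 1): side, (1, 2): side, (2, 3): side, (3, 0): side,
         (0, 2): side * np.sqrt(2), (1, 3): side * np.sqrt(2)}
s, res = scale_from_markers(crosses, known)
scaled_vertices = s * np.asarray(crosses, dtype=np.float64)  # apply s to the whole mesh
```

Using all pairwise distances rather than a single one averages out small marker-placement errors, and the residuals give a quick consistency check.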

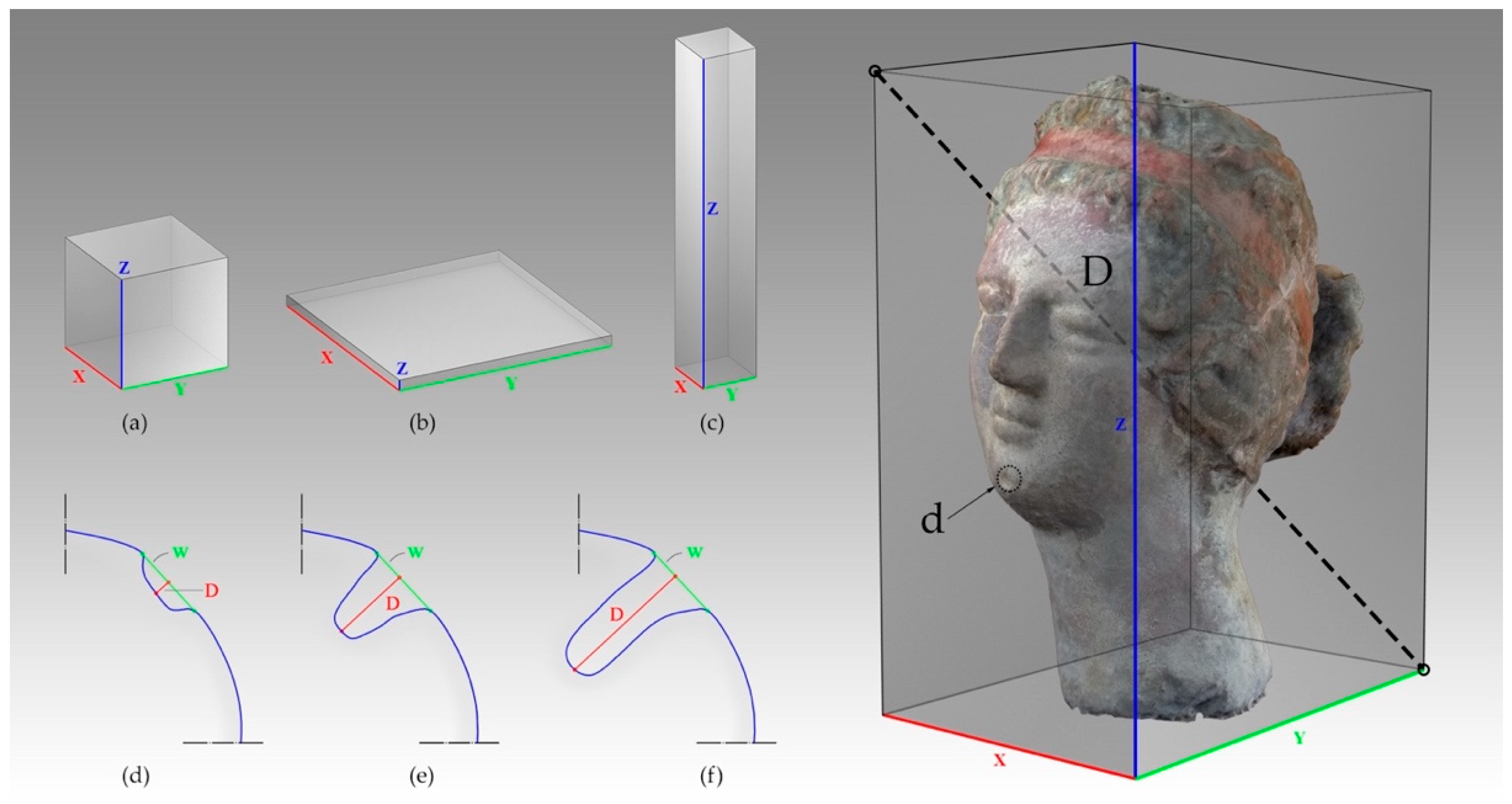

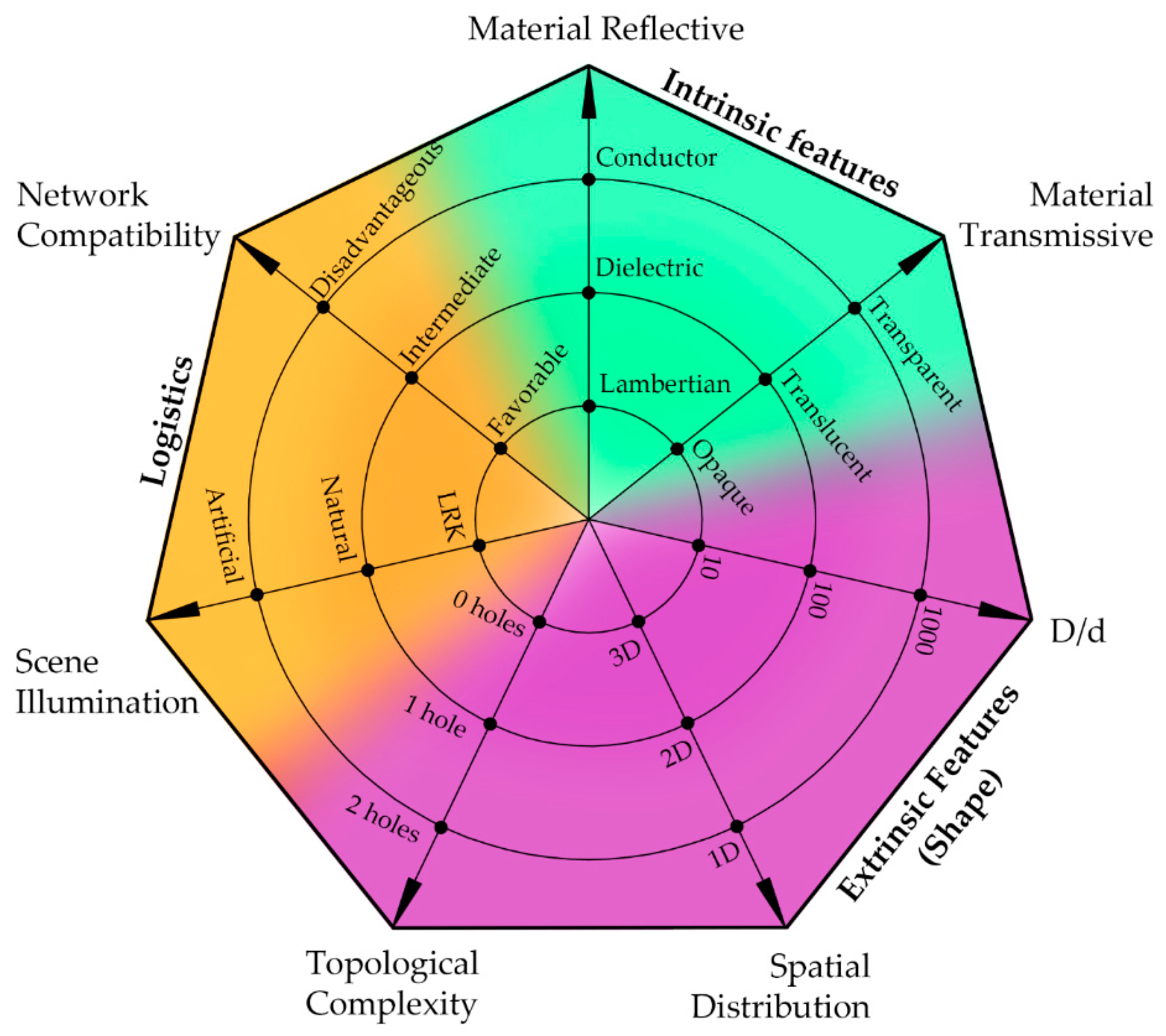

3.3. Formal Characterization of the Acquired Objects

- the formal and material characteristics of the artefact;

- the recurrence of the formal and material peculiarities identified;

- the tools and digital survey methods available and suitable;

- the accessibility and consequent maneuverability of the artefact.

- Extrinsic features

- Spatial distribution (SD) (Figure 7a–c) affects the surveying activity. The most unfavorable scenario occurs when one dimension prevails over the other two (X ≃ Y << Z), followed by the case in which the masses forming the object are distributed two-dimensionally (X ≃ Y >> Z);

- Topological complexity (TC) plays a crucial role since it affects every operation of the proposed workflow: when the object is not simply connected (for example, it has through holes) or when it has blind holes, acquisition planning and the subsequent phases (parameterization, texturing, etc.) become increasingly complex;

- Boundary conditions (BC): availability of surrounding working areas free from obstacles.

- Intrinsic features

- D/d ratio: D is the diagonal length of the bounding box containing a piece of a collection and d is the average distance between neighboring vertices, namely the model resolution. The higher this value, the more complex the survey, since high detail is required on a large object (Figure 7, right);

- Surface characteristics: minimal surface detail rendered, surface and textural properties, different reflectance behavior (e.g., Lambertian, specular dielectric, translucent, transparent surface, etc.);

- Cavity ratio (CR) is a parameter that quantifies the extent to which some parts of the surveyed object cannot be digitally acquired. It depends on the surveying technique and on the adopted device, and it is given by the ratio D/W, where D is the depth and W the width of the hole. For values between 0 and 1 survey planning is simple, but it becomes progressively more complicated for values greater than 1 (Figure 7e,f).
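Both intrinsic indices above are simple ratios. The sketch below computes them, assuming a triangle mesh and taking the model resolution d as the mean edge length (one reasonable reading of "average distance among vertices"); the function names are hypothetical.

```python
import numpy as np


def resolution_ratio(vertices, faces) -> float:
    """D/d: bounding-box diagonal D over the mean edge length d of a triangle mesh."""
    v = np.asarray(vertices, dtype=np.float64)
    f = np.asarray(faces, dtype=np.int64)
    # Collect every undirected edge exactly once.
    edges = np.concatenate([f[:, [0, 1]], f[:, [1, 2]], f[:, [2, 0]]])
    edges = np.unique(np.sort(edges, axis=1), axis=0)
    d = np.linalg.norm(v[edges[:, 0]] - v[edges[:, 1]], axis=1).mean()
    diag = np.linalg.norm(v.max(axis=0) - v.min(axis=0))
    return float(diag / d)


def cavity_ratio(depth: float, width: float) -> float:
    """CR = depth / width of a hole; planning gets harder as CR grows beyond 1."""
    if width <= 0:
        raise ValueError("hole width must be positive")
    return depth / width
```

For a real model, D/d grows when either the object gets larger or the required resolution gets finer, which is exactly why it flags demanding surveys.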

3.4. Authoring Environment for Interactive RTR Visualization

- Step 1. Materials’ appearance reproduction;

- Step 2. RTR visualization development with accurate color reproduction on a 100% sRGB capable display using an RTR engine running on multiple devices;

- Step 3. Production of a visualization and navigation graphic interface based on common gestures.

- light, with its actual spectral composition in rendered models;

- color, with an accurate simulation to mimic material appearance under different light directions;

- surface, with precise replica at different scales of the object’s surface.

4. Image Pre-Processing

4.1. Raw Image Reconstruction

4.2. Image Denoise

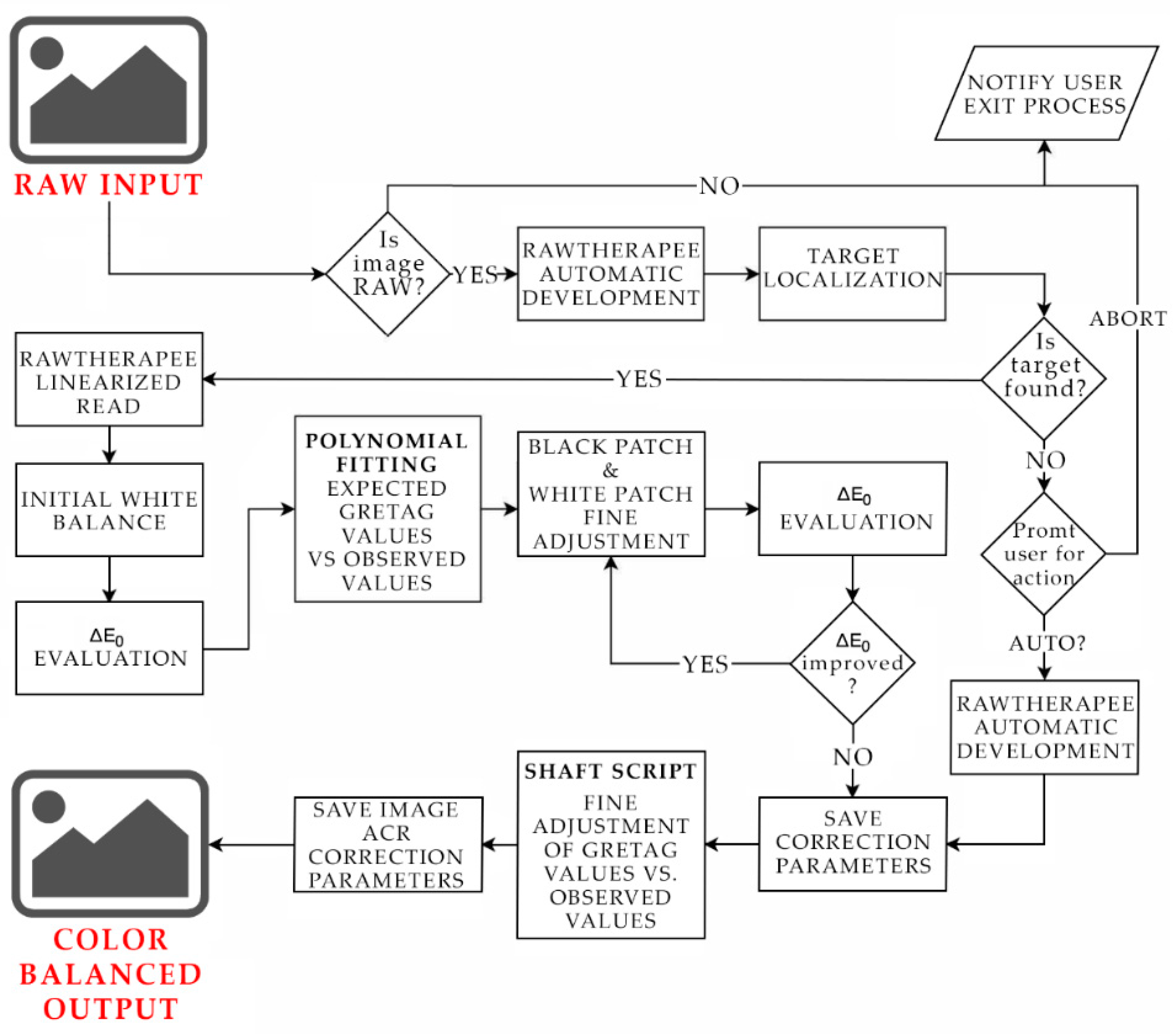

4.3. Color Correction

- a physical reference chart acquired under the current illumination (i.e., the illumination to be discarded), in our case the CCP described in Section 2.5.2;

- a color space reference with the ideal data values for the chart patches. In our case, the ideal values are the 8-bit values measured by Denny Pascale [89] for targets built before the end of 2014 and the X-Rite reference values [90] for targets built after the end of 2014, which avoids a complicated spectrophotometric measurement at minimal loss of accuracy. The color space used is the common, device-independent CIEXYZ [91];

- a way to relate, or convert, the device color space to the reference color space of the chart. In our solution this step is based on SHAFT (Saturation & Hue Adaptive Fine Tuning) [92], a software for target-based CC supported by RawTherapee [93]. SHAFT applies a set of optimization and enhancement techniques to exposure, contrast, white balance, hue and saturation, and is based on linear CC by successive approximations. SHAFT can recognize the target in the image, allowing a completely automated process. To overcome its main limitation (i.e., it can fail on heavily incorrect original images with a strong color cast), SHAFT is coupled with a polynomial regression correction [94] (Figure 8). In the present case, SHAFT was adapted by embedding appropriate tests before processing, to identify possible inconsistencies in the images arising from specific features of smartphone cameras: (1) the emphasis that manufacturers currently place on amplifying color saturation, to improve color perception on small smartphone screens, with an effect that can reach up to 10% in the case of Apple Display P3; and (2) hidden exposure correction through gain applied to images that are too dark, which can limit faithful color reproduction.

- a way to measure and show errors in the device's rendering of the reference chart. We used the CIEDE2000 [95] color difference metric, acknowledged as a colorimetry standard by ISO and recommended by the Commission Internationale de l’Éclairage (CIE) [96] for color differences within the range 0–5 CIELAB units [97]. The formula computes color accuracy in terms of the mean camera chroma relative to the mean ideal chroma. In practice, the equation is the Euclidean distance in the L*a*b* color space between captured image values and measured values, modified by correction factors that compensate for the perceptual non-uniformities of the L*a*b* color space; it therefore correlates better with perceived color difference than earlier equations [98];

- an output color space. We selected the rendered space IEC 61966-2-1 sRGB, today's default color space for multimedia applications [99], which allows consistent color reproduction across different media and is fully supported by the 3D graphics APIs used (Microsoft DirectX, by Microsoft Corp., Redmond, WA, USA, and Apple Metal, by Apple Inc., Cupertino, CA, USA). Its potential issues (non-linearity and a gamut smaller than the range of colors perceived by humans) have little effect on rendering quality, also because it can represent all the colors displayable on current prosumer monitors, which are the target of this project.
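The CIEDE2000 difference described above can be sketched in plain Python following the CIE formulation, with the reference parametric factors kL = kC = kH = 1. This is an illustrative implementation, not the code used in this work; averaging it over the chart patches would give an overall accuracy figure.

```python
import math


def ciede2000(lab1, lab2, kL=1.0, kC=1.0, kH=1.0):
    """CIEDE2000 colour difference between two CIELAB triplets (L*, a*, b*)."""
    L1, a1, b1 = lab1
    L2, a2, b2 = lab2
    # 1) Rescale a* so that near-neutral colours get a more uniform hue scale.
    c_bar = (math.hypot(a1, b1) + math.hypot(a2, b2)) / 2.0
    g = 0.5 * (1.0 - math.sqrt(c_bar ** 7 / (c_bar ** 7 + 25.0 ** 7)))
    a1p, a2p = (1.0 + g) * a1, (1.0 + g) * a2
    c1p, c2p = math.hypot(a1p, b1), math.hypot(a2p, b2)
    h1p = math.degrees(math.atan2(b1, a1p)) % 360.0 if c1p else 0.0
    h2p = math.degrees(math.atan2(b2, a2p)) % 360.0 if c2p else 0.0
    # 2) Differences in lightness, chroma and hue.
    d_l = L2 - L1
    d_c = c2p - c1p
    if c1p * c2p == 0.0:
        d_h = 0.0
    else:
        d_h = h2p - h1p
        if d_h > 180.0:
            d_h -= 360.0
        elif d_h < -180.0:
            d_h += 360.0
    d_hh = 2.0 * math.sqrt(c1p * c2p) * math.sin(math.radians(d_h) / 2.0)
    # 3) Means used by the weighting functions.
    l_bar = (L1 + L2) / 2.0
    cp_bar = (c1p + c2p) / 2.0
    if c1p * c2p == 0.0:
        h_bar = h1p + h2p
    elif abs(h1p - h2p) <= 180.0:
        h_bar = (h1p + h2p) / 2.0
    elif h1p + h2p < 360.0:
        h_bar = (h1p + h2p + 360.0) / 2.0
    else:
        h_bar = (h1p + h2p - 360.0) / 2.0
    t = (1.0 - 0.17 * math.cos(math.radians(h_bar - 30.0))
         + 0.24 * math.cos(math.radians(2.0 * h_bar))
         + 0.32 * math.cos(math.radians(3.0 * h_bar + 6.0))
         - 0.20 * math.cos(math.radians(4.0 * h_bar - 63.0)))
    # 4) Weighting functions and the rotation term for the blue region.
    s_l = 1.0 + 0.015 * (l_bar - 50.0) ** 2 / math.sqrt(20.0 + (l_bar - 50.0) ** 2)
    s_c = 1.0 + 0.045 * cp_bar
    s_h = 1.0 + 0.015 * cp_bar * t
    d_theta = 30.0 * math.exp(-(((h_bar - 275.0) / 25.0) ** 2))
    r_c = 2.0 * math.sqrt(cp_bar ** 7 / (cp_bar ** 7 + 25.0 ** 7))
    r_t = -math.sin(math.radians(2.0 * d_theta)) * r_c
    tl, tc, th = d_l / (kL * s_l), d_c / (kC * s_c), d_hh / (kH * s_h)
    return math.sqrt(tl ** 2 + tc ** 2 + th ** 2 + r_t * tc * th)
```

The hue-averaging branches and the rotation term R_T are the parts most often implemented incorrectly, so published test pairs are worth running before trusting any implementation.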

5. Geometry Processing

- the quality of the acquired mesh geometry, which is usually noisy when smartphones are used, may present artefacts and may generate irregularities in the subsequent texture mapping;

- the need to have a visually faithful but lightweight 3D model to be used on low-end hardware in the museums or on the go.

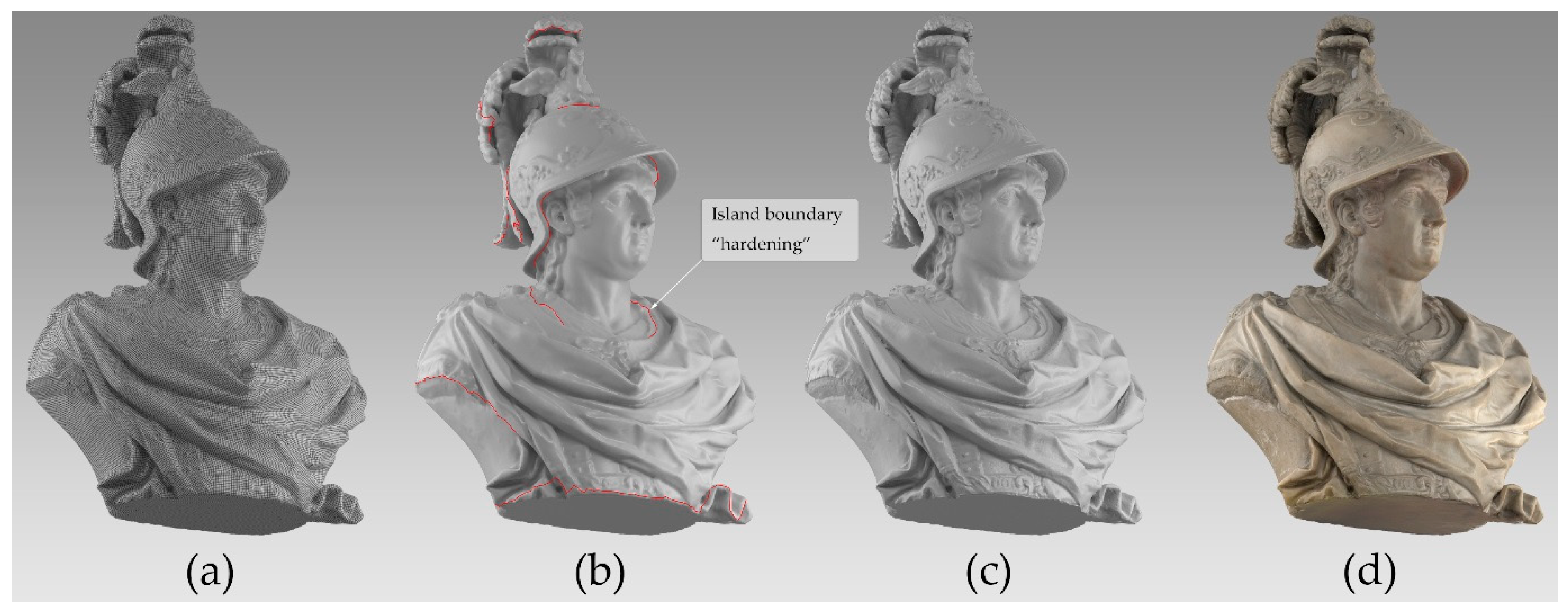

5.1. Mesh Post-Processing

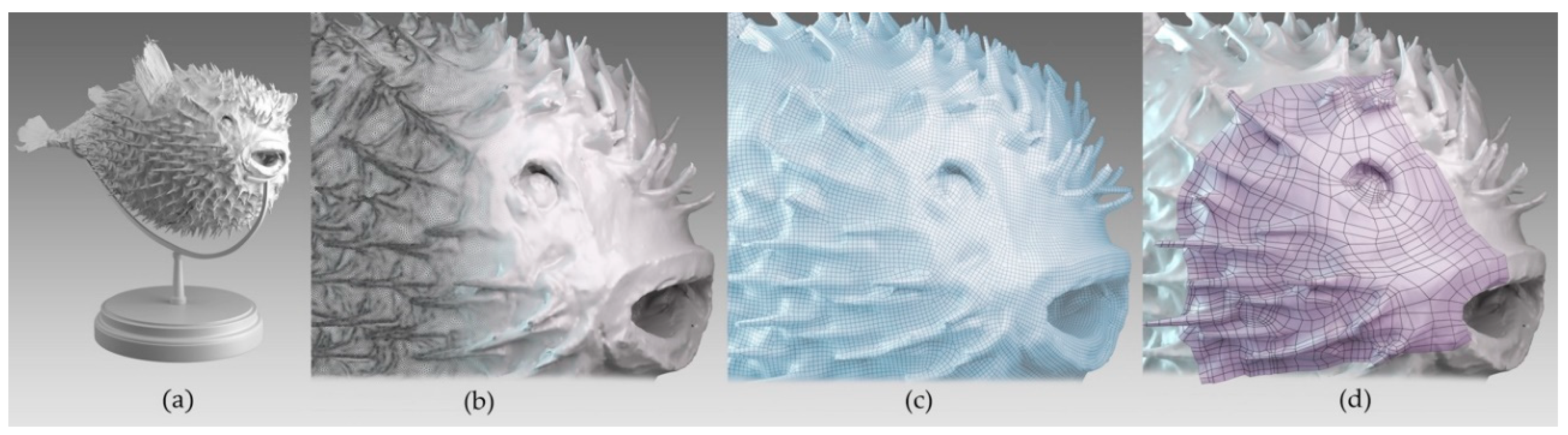

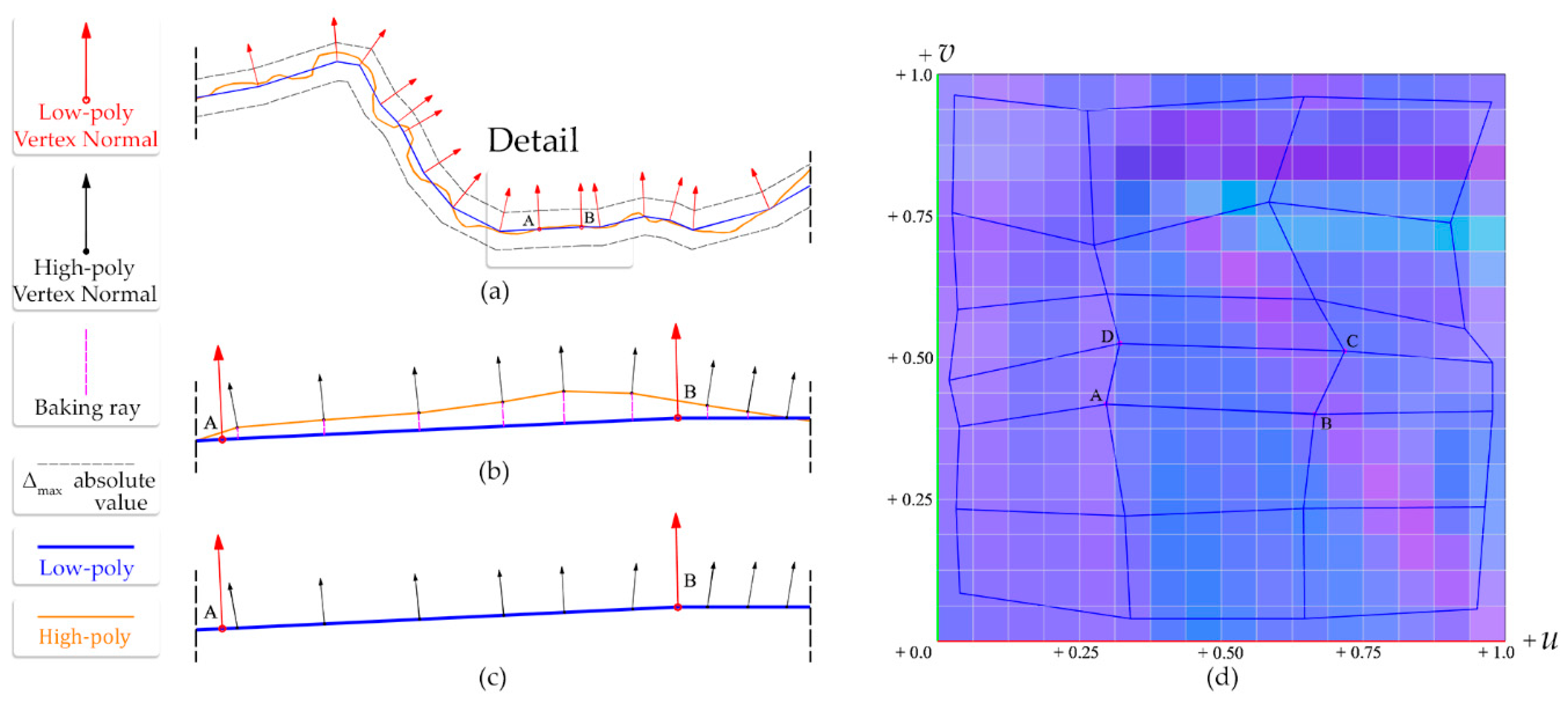

5.2. Mesh Parameterization and Normal Mapping

- Determination of the correct texture size. The low-poly mesh must be parameterized considering two aspects: keeping the number of islands as low as possible and obtaining a correct texel density, which is necessary to avoid over-detailed or under-detailed areas relative to the rest of the rendered scene. Since the parameterization fills only a fraction of the parameter space, the proper resolution of the normal map is evaluated with the inequation $n_v \le f_r \cdot 2^{2n}$, where $n_v$ is the number of vertex normals, $f_r$ is the fill rate and $2^{2n}$ is the area in pixels of a square texture whose side is a power of two ($2^n$), as is usual for texture sizes. We implemented this inequation at the start of the baking process. An example is shown in Figure 13.

- Smoothing groups and “Mikktspace” implementation. To accurately control normals behavior we adopted a solution very common in the game industry, the so-called “Mikktspace,” which calculates normal maps in tangent space [102] joint with the use of the smoothing group technique:

- the “Mikktspace” technique prevents problems linked to (a) the mathematical error arising from the usual mismatch between the normal map baker and the pixel shader used for rendering, which results in shading seams (i.e., unwanted hard edges that become visible when the model is lit/shaded); and (b) order dependencies, which can produce different tangent spaces depending on the order in which faces are given or the order of the vertices of a face;

- by identifying the polygons of a mesh that should appear smoothly connected, smoothing groups allow 3D modeling software to estimate the surface normal at any point of the mesh by averaging the vertex normals stored in the mesh data, so that both hard and soft edges can be represented.

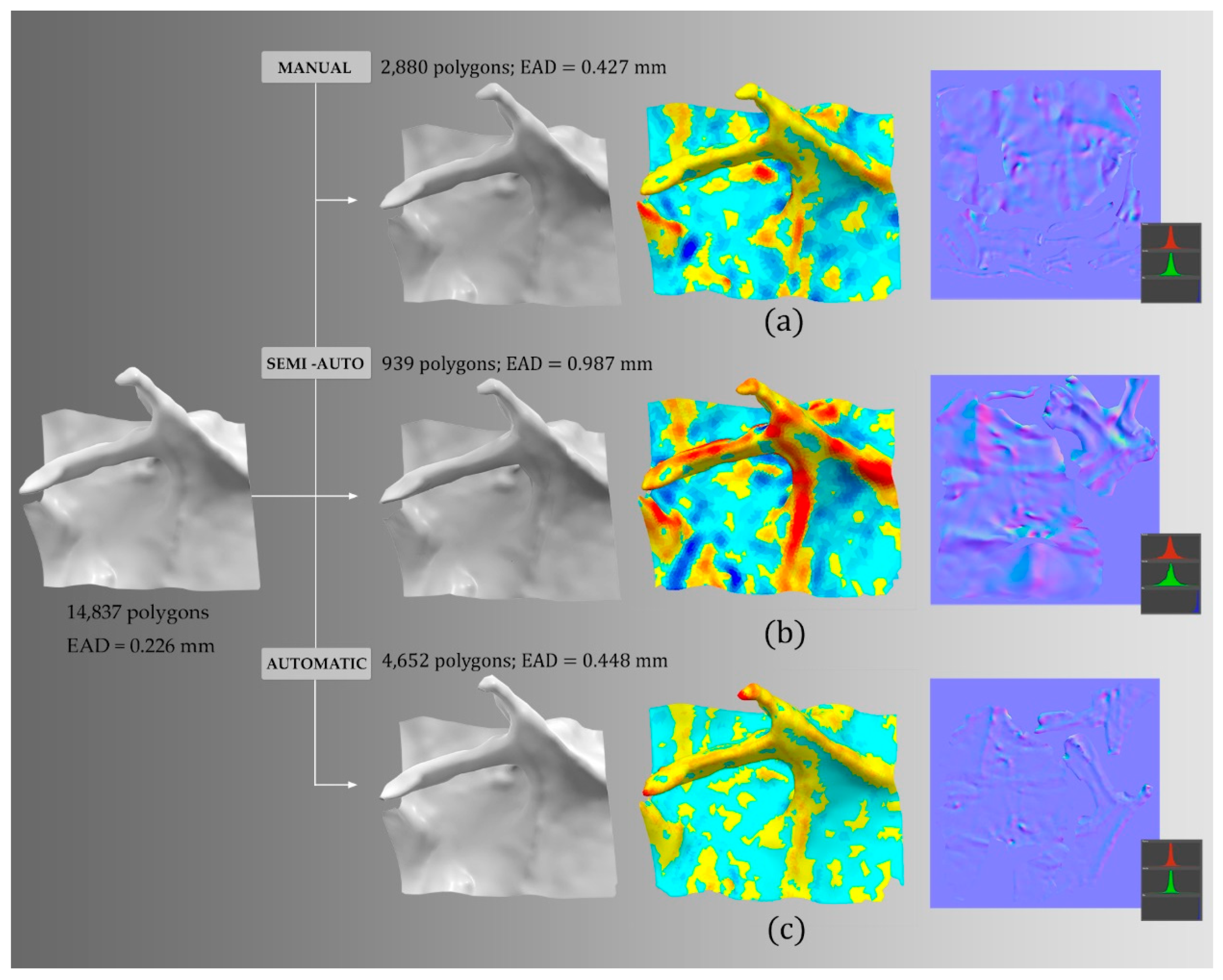

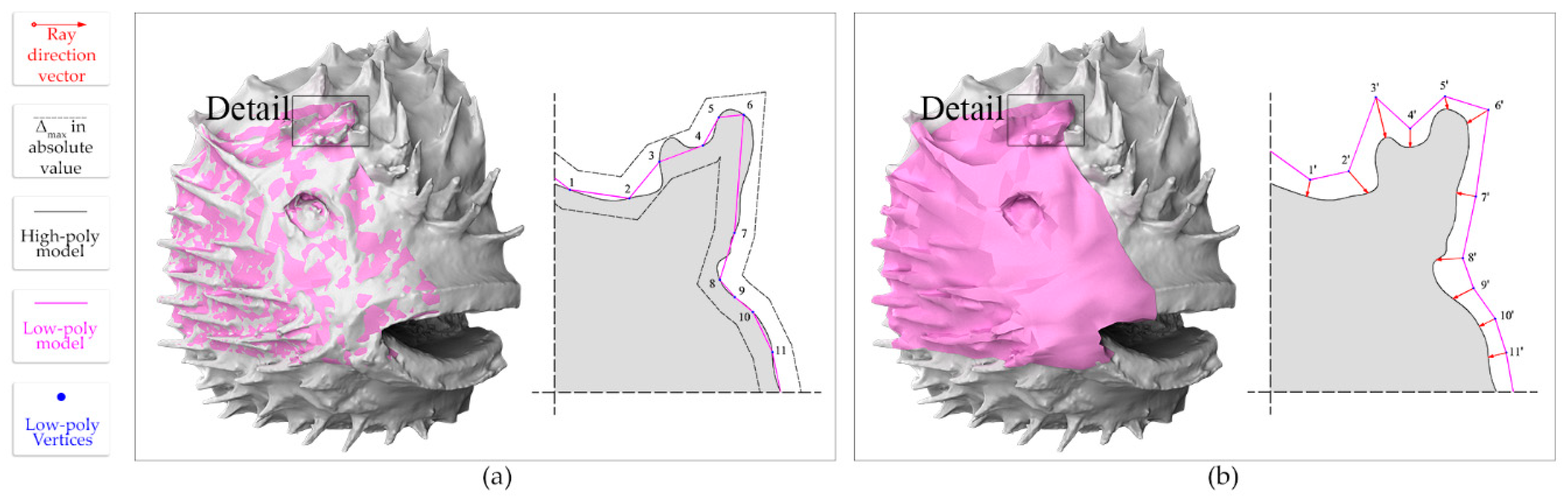

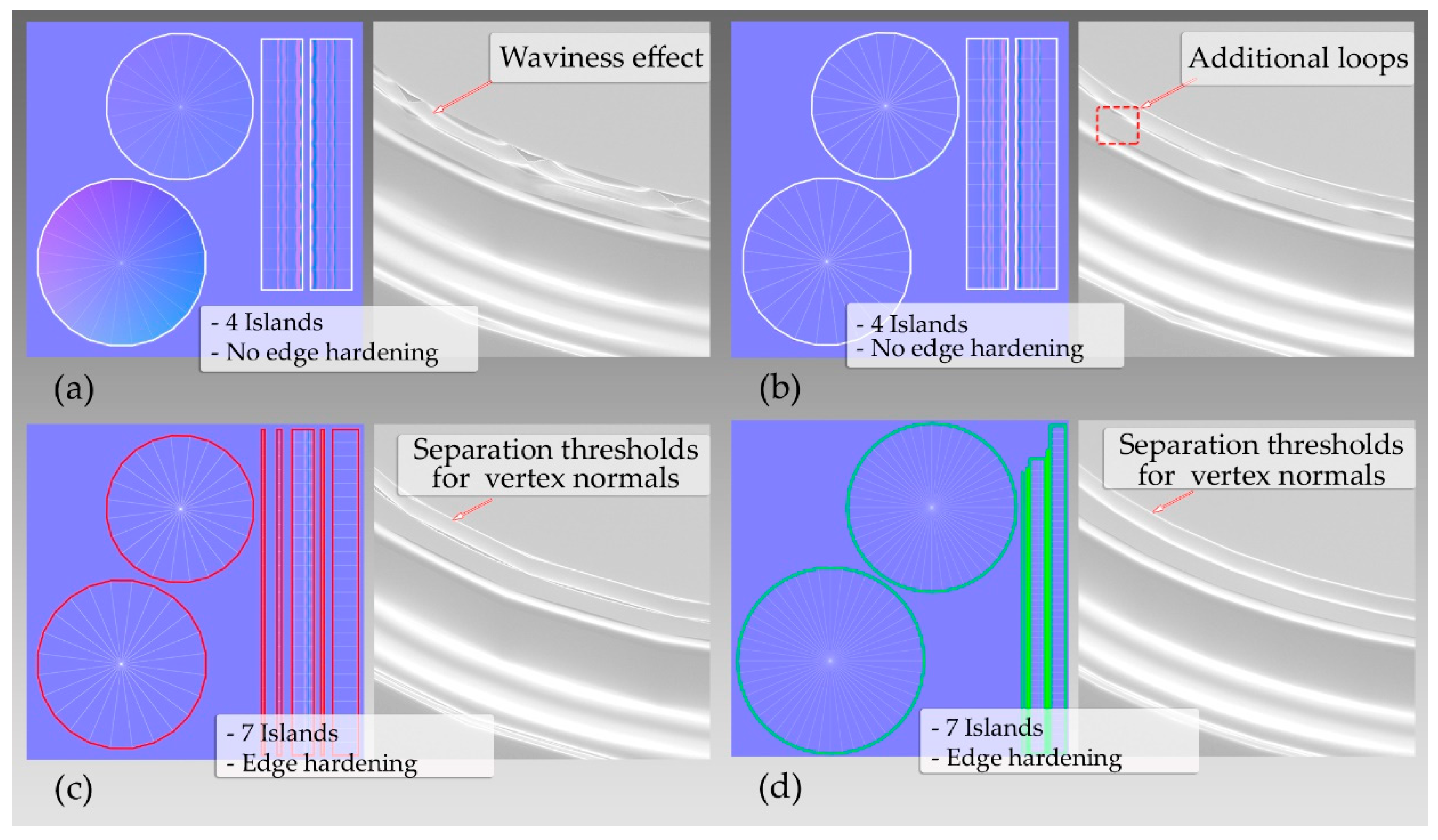

- Errors in normal map generation due to misinterpretation of geometry and topology corrections. Reducing a high-poly model with automatic, manual or semi-automatic techniques can lead to several outcomes: sometimes the production of the models becomes inefficient, sometimes the normal maps are useless, and in other cases a strong reduction in the number of polygons may generate more shading errors (Figure 14a–c). Four solutions are currently available to address these problems:

- the use of a ‘cage’ (or projection cage) that gives the low-poly model a better flow of vertex normals, i.e., an alternative version of the low-poly model, generally produced by an offset, then manually adjusted in certain regions and stored as a morph (Figure 15);

- the adaptive triangulation of quadrangular polygons before baking [103];

- the introduction of additional loops of quad-polygons in the proximity of curved sequences of edges to reduce ‘waviness’ effect on cylindrical and bent surfaces (Figure 16);

- baking the normal map at twice the strictly necessary resolution (following the Nyquist-Shannon sampling theorem) and then downsampling it to 50% of that size.
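The texture-size rule and the bake-at-twice-then-halve strategy can be combined in a short sketch. It assumes the sizing inequation reads n_v ≤ f_r · 2^(2n), with n_v the number of vertex normals, f_r the UV fill rate and 2^n the texture side, and it uses a plain 2×2 box filter followed by renormalization for the downsampling; the function names and mesh statistics are hypothetical, and the baker itself is not shown.

```python
import numpy as np


def min_texture_exponent(n_normals: int, fill_rate: float) -> int:
    """Smallest n such that n_normals <= fill_rate * 2**(2n)."""
    if not 0.0 < fill_rate <= 1.0:
        raise ValueError("fill_rate must be in (0, 1]")
    n = 0
    while fill_rate * 4 ** n < n_normals:
        n += 1
    return n


def downsample_normal_map(nmap: np.ndarray) -> np.ndarray:
    """Halve a (2H, 2W, 3) map of unit normals: 2x2 box average, then renormalise."""
    h, w, c = nmap.shape
    if h % 2 or w % 2 or c != 3:
        raise ValueError("expected an even-sized (H, W, 3) array")
    avg = nmap.reshape(h // 2, 2, w // 2, 2, 3).mean(axis=(1, 3))
    # Averaging shortens the vectors, so restore unit length (assumes no cancellation).
    return avg / np.linalg.norm(avg, axis=-1, keepdims=True)


n = min_texture_exponent(1_000_000, 0.7)      # placeholder mesh statistics
bake_size, final_size = 2 ** (n + 1), 2 ** n  # bake at twice the size, keep half
```

Renormalizing after the box filter matters: averaged normals are shorter than unit length, and a shader that expects unit vectors would otherwise darken those texels.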

6. Assessment of the Proposed Workflow

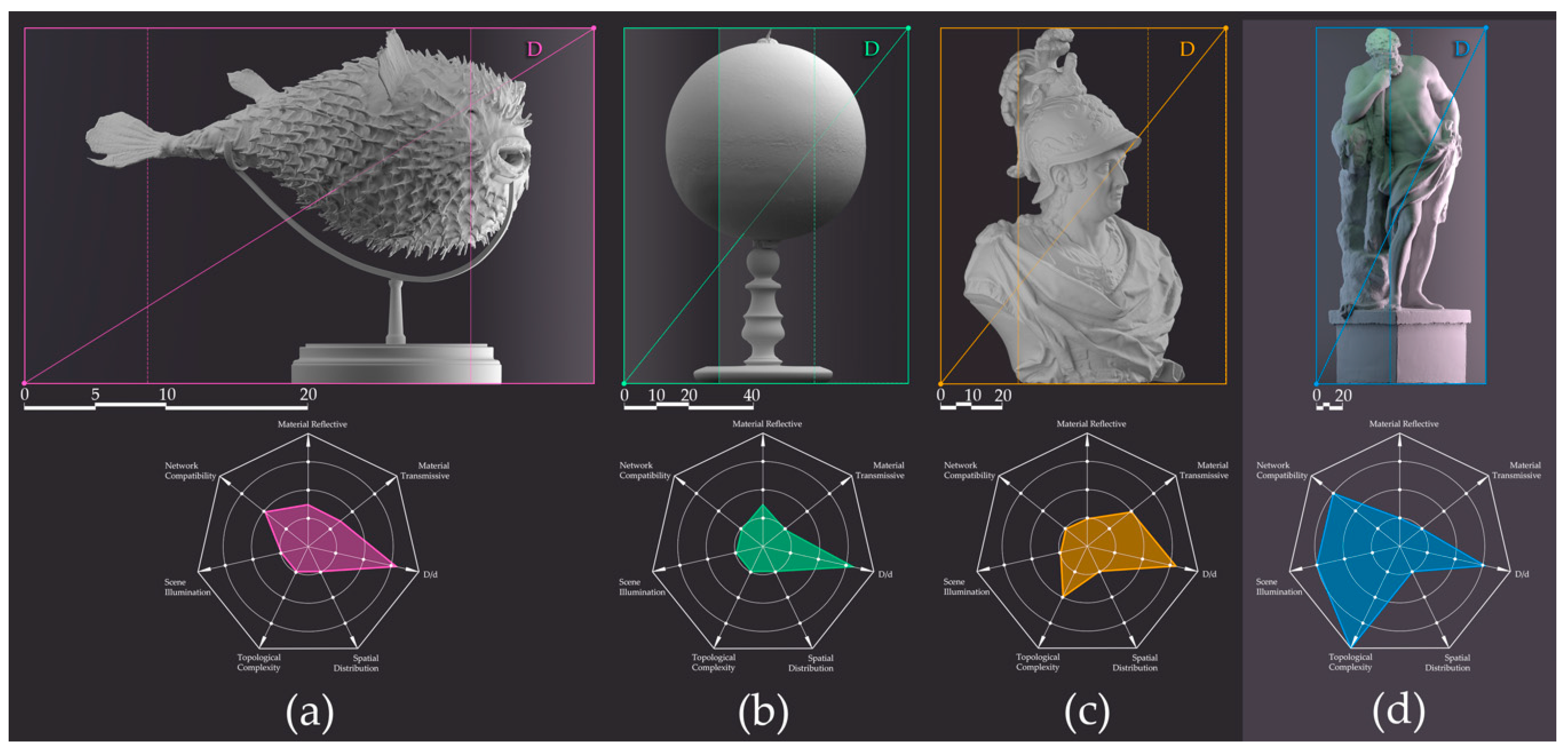

6.1. Case Studies

- A porcupinefish (Diodon antennatus) that underwent a complete taxidermy treatment: bounding box = 35 × 19 × 25 cm, highly specular skin and tiny details (Figure 20a).

- A globe by the astronomer Horn d’Arturo: bounding box = 31 × 31 × 46 cm, highly reflective regular surface with a considerable Fresnel effect (Figure 20b).

- A bust of the scientist, soldier and geologist Luigi Ferdinando Marsili (1658–1730): bounding box = 41 × 67 × 99 cm, made of Carrara marble, a highly translucent material that is non-homogeneous at the scale of the measurement process (Figure 20c).

- A statue representing Hercules (bounding box = 100 × 90 × 275 cm, made of sandstone). Due to its size and its exhibition conditions (Figure 20d).

6.2. Devices, Equipment Used and Acquisition Setup

- Nikon D5200 SLR camera, featuring 16.2 million effective pixels, equipped with an 18 mm focal length lens during the acquisition. The remaining technical features of this camera and of the Apple iPhone X camera are listed in Table 3.

- Two Terrestrial Laser Scanners (TLS) with different characteristics to better measure the features of the different objects digitized:

- Faro Focus X 130 (Faro Technologies Inc., Lake Mary, FL, USA) with measurement range between 0.6 m and 130 m, measurement speed of 122,000–976,000 points/s, 3D point accuracy of ±2 mm at 10 m;

- NextEngine 2020i (NextEngine Inc., Santa Monica, CA, USA) with operational field of 342.9 × 256.54 mm (wide lens), measurement speed of 50,000 points/s, resolution of 0.149 mm, 3D point accuracy of ±0.3 mm.

- graduated rotating table fitted with RAD coded targets of known spatial coordinates;

- static lighting set consisting of two groups of four Relio2 illuminators (Montirone (BS), Italy), oriented at ±45° with respect to the optical axis of the camera;

- dynamic lighting set (mounted on telescopic arms with the possibility of panning and tilting) consisting of two groups of eight Relio2 illuminators oriented at ±45° with respect to the optical axes of the camera (Figure 3). This second array of lights enables different positions and orientations with respect to the object and better approximates a lighting panel;

- resizable box structure to host objects to be captured, consisting of translucent white walls suitable to diffuse the light coming from the Relio2 illuminator array;

- curtain made of black matte surfaces to mitigate the Fresnel effect on contours of reflective materials.

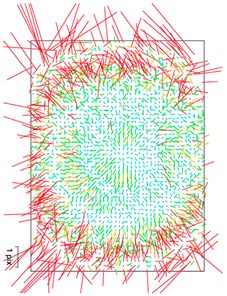

6.3. Camera Calibration Procedure

- Self-calibration in COLMAP. By default, COLMAP automatically refines the intrinsic camera parameters (except the principal point) during the reconstruction. In SfM, if the dataset contains enough images and the intrinsic camera parameters can be shared among multiple images, these parameters should be estimated better than those obtained manually with a calibration pattern. Using the OpenCV camera model, the following calibration parameters are calculated: f focal length; cx, cy principal point coordinates; K1, K2 radial distortion coefficients; P1, P2 tangential distortion coefficients;

- RAD coded target-based geometric calibration in Agisoft Metashape. The center of every RAD coded target is reconstructed from 8 or more rays to enhance accuracy, allowing the calculation of Brown's camera model parameters: fx, fy focal length components; cx, cy principal point coordinates; K1, K2, K3 radial distortion coefficients; P1, P2 tangential distortion coefficients.
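Both calibration approaches rely on the radial-tangential (Brown) distortion form. A minimal projection sketch in the OpenCV convention follows, where COLMAP's OPENCV model corresponds to k3 = 0. Metashape documents its own parameterization (including the ordering of the tangential terms), so coefficients should not be exchanged between packages without checking; the function name is hypothetical.

```python
import numpy as np


def project_brown(points_cam, fx, fy, cx, cy, k1=0.0, k2=0.0, k3=0.0, p1=0.0, p2=0.0):
    """Project (N, 3) camera-frame points to pixels with Brown radial-tangential distortion."""
    pts = np.asarray(points_cam, dtype=np.float64)
    x = pts[:, 0] / pts[:, 2]  # normalised image coordinates
    y = pts[:, 1] / pts[:, 2]
    r2 = x * x + y * y
    radial = 1.0 + k1 * r2 + k2 * r2 ** 2 + k3 * r2 ** 3
    xd = x * radial + 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)  # OpenCV ordering
    yd = y * radial + p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y
    return np.stack([fx * xd + cx, fy * yd + cy], axis=1)
```

With all distortion coefficients at zero the function reduces to the pinhole model, which is a convenient sanity check when comparing the two calibrations.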

6.4. Performance Evaluation

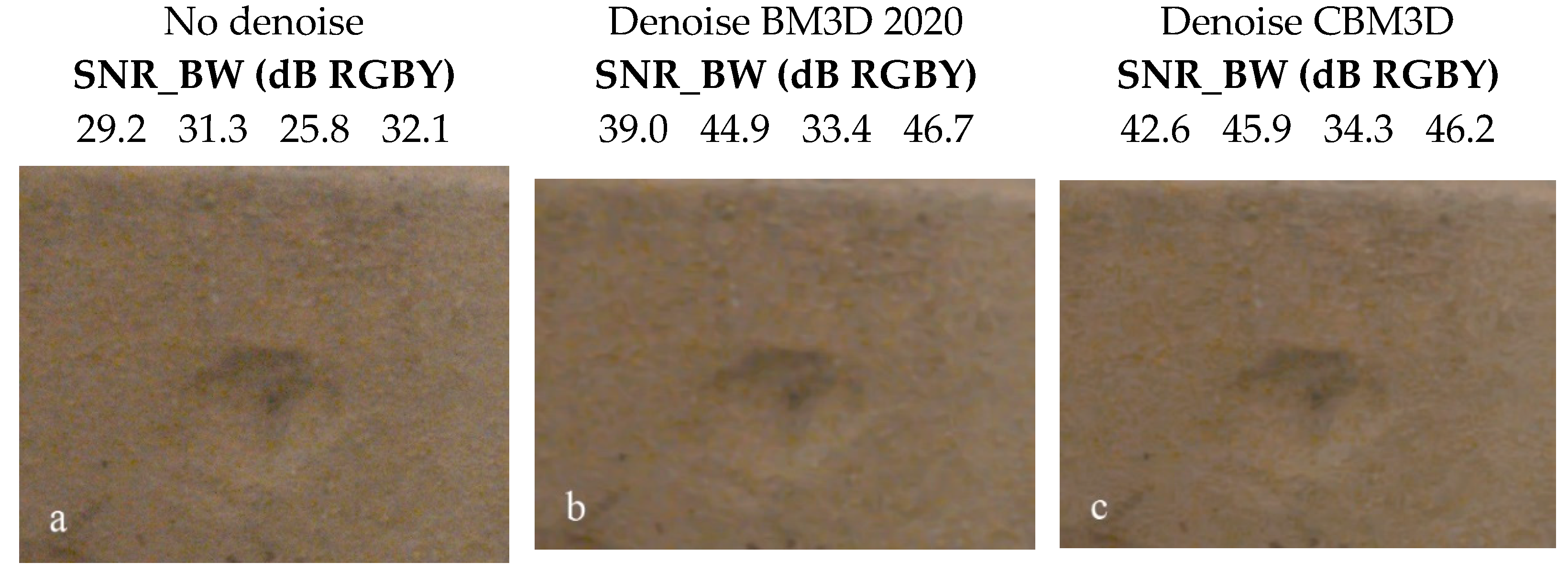

6.4.1. Color Fidelity and Denoise Effects

- the efficiency of CBM3D-new compared to the standard implementation of the BM3D;

- SNR (signal-to-noise ratio), expressed in decibels, for patches 19–24 of the CCP target for R, G, B and L (luminance).
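The SNR formula itself is not reproduced in this text. A common definition for a nominally uniform patch, assumed here, is 20·log10(mean/std); the sketch applies it per channel and to a luminance channel computed with Rec. 709 weights on linear RGB, which is also an assumption. The function names are hypothetical.

```python
import math

import numpy as np

REC709 = np.array([0.2126, 0.7152, 0.0722])  # assumed luminance weights (linear RGB)


def snr_db(values) -> float:
    """SNR in dB of a nominally uniform patch: 20*log10(mean / std)."""
    v = np.asarray(values, dtype=np.float64)
    sd = v.std()
    return math.inf if sd == 0 else 20.0 * math.log10(v.mean() / sd)


def patch_snr(rgb_patch) -> dict:
    """Per-channel and luminance SNR for an (H, W, 3) crop of one gray patch."""
    p = np.asarray(rgb_patch, dtype=np.float64)
    out = {ch: snr_db(p[..., i]) for i, ch in enumerate("RGB")}
    out["L"] = snr_db(p @ REC709)
    return out
```

Crops should stay well inside each patch, since edge pixels mix neighboring colors and would inflate the standard deviation.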

6.4.2. Calibration Effects

6.4.3. Photogrammetric Pipeline Efficiency

- the number of oriented images;

- Bundle Adjustment (BA) (re-projection error);

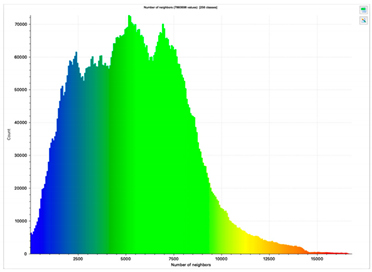

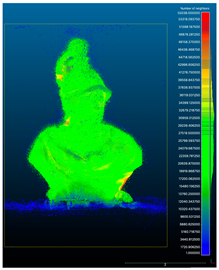

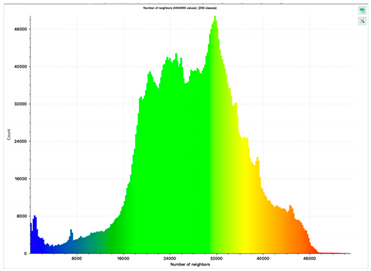

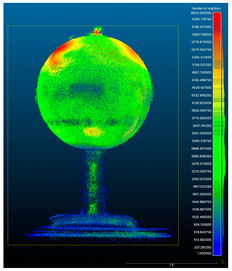

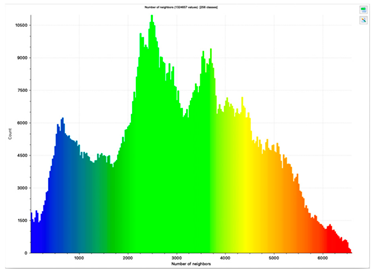

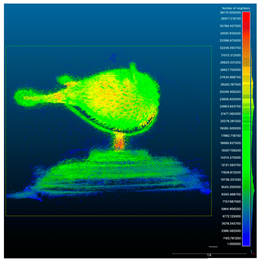

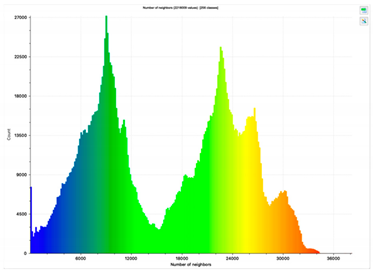

- number of points collected in the dense point cloud. Dense matching was performed with n-frames SURE, starting from the camera orientation results obtained in COLMAP. The absolute number of points extracted and the point density distribution (Local Density Computation, LDC) of the different dense clouds were estimated using the software CloudCompare [109]. For each 3D point of the cloud, the LDC tool counts the number of neighbors N inside a sphere of radius R, set to 2 cm;

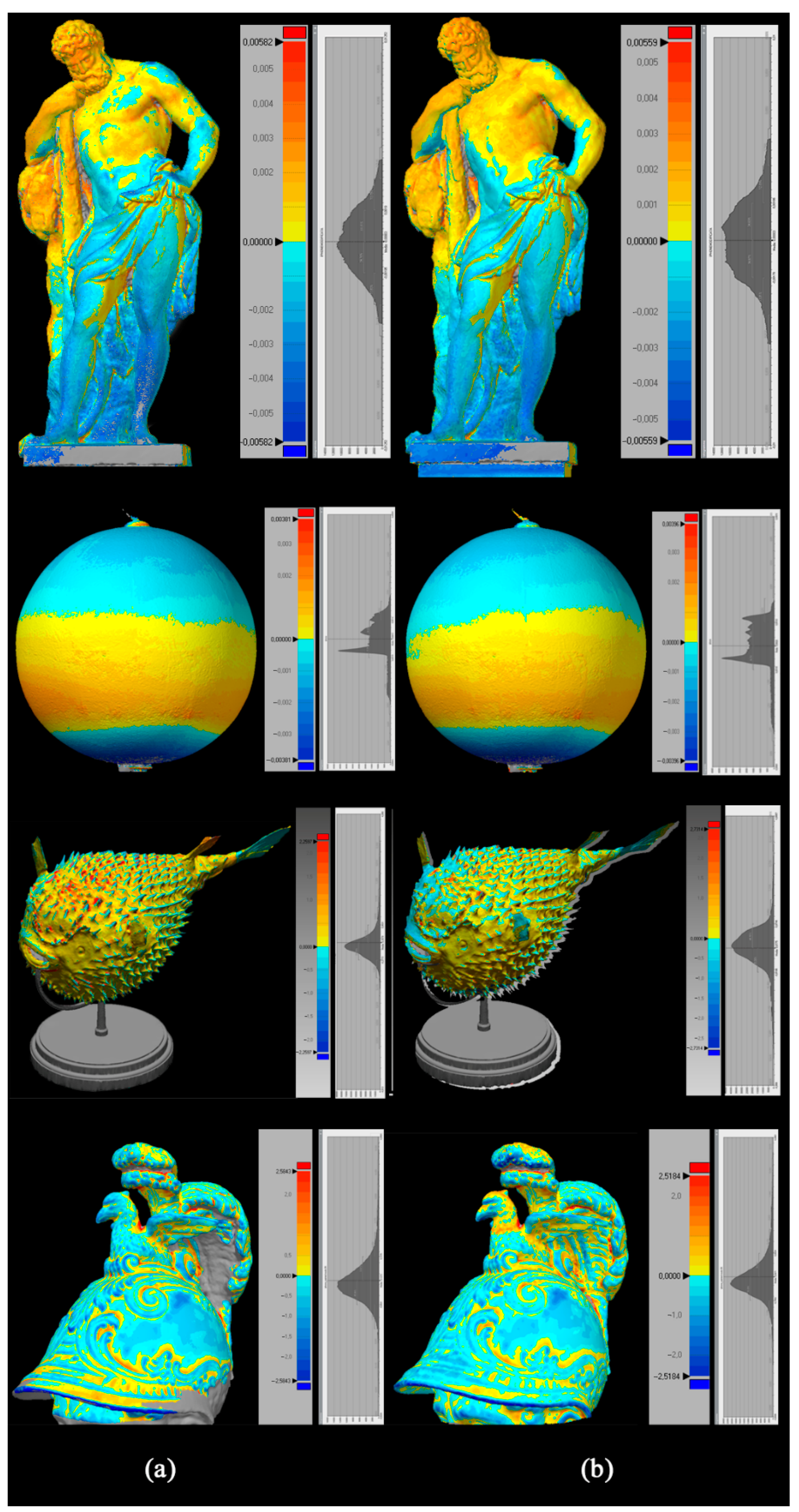

- the comparison of the dense point cloud with the ground truth of the object. The four photogrammetric models were compared with the TLS and SLR camera models using CloudCompare. The comparison is preceded by an alignment and registration phase to correctly align the reconstruction with the reference laser-scanned model. Alignment is achieved in two steps: a coarse alignment, performed manually by specifying pairs of corresponding points that are aligned using Horn's technique [110], and a fine registration performed with the Iterative Closest Point (ICP) algorithm [111,112].
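The coarse step can be illustrated with Horn's closed-form absolute orientation based on unit quaternions. The sketch below is an independent implementation (not CloudCompare's); it assumes at least three non-collinear point pairs and optionally estimates a uniform scale with Horn's symmetric formula. `horn_align` is a hypothetical name.

```python
import numpy as np


def horn_align(src, dst, with_scale=False):
    """Closed-form absolute orientation with unit quaternions (Horn, 1987).

    Returns (s, R, t) such that dst_i ~= s * R @ src_i + t for corresponding rows.
    """
    a = np.asarray(src, dtype=np.float64)
    b = np.asarray(dst, dtype=np.float64)
    if a.shape != b.shape or a.ndim != 2 or a.shape[1] != 3 or a.shape[0] < 3:
        raise ValueError("need two (N, 3) arrays of corresponding points, N >= 3")
    ca, cb = a.mean(axis=0), b.mean(axis=0)
    a0, b0 = a - ca, b - cb
    (sxx, sxy, sxz), (syx, syy, syz), (szx, szy, szz) = a0.T @ b0  # S[i, j] = sum a_i b_j
    n = np.array([
        [sxx + syy + szz, syz - szy, szx - sxz, sxy - syx],
        [syz - szy, sxx - syy - szz, sxy + syx, szx + sxz],
        [szx - sxz, sxy + syx, -sxx + syy - szz, syz + szy],
        [sxy - syx, szx + sxz, syz + szy, -sxx - syy + szz],
    ])
    eigvals, eigvecs = np.linalg.eigh(n)
    q0, qx, qy, qz = eigvecs[:, np.argmax(eigvals)]  # optimal unit quaternion
    r = np.array([
        [q0*q0 + qx*qx - qy*qy - qz*qz, 2*(qx*qy - q0*qz), 2*(qx*qz + q0*qy)],
        [2*(qy*qx + q0*qz), q0*q0 - qx*qx + qy*qy - qz*qz, 2*(qy*qz - q0*qx)],
        [2*(qz*qx - q0*qy), 2*(qz*qy + q0*qx), q0*q0 - qx*qx - qy*qy + qz*qz],
    ])
    s = float(np.sqrt((b0 ** 2).sum() / (a0 ** 2).sum())) if with_scale else 1.0
    t = cb - s * r @ ca
    return s, r, t
```

ICP then refines this pose iteratively; the closed-form step only needs to land close enough for ICP to converge to the correct minimum.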

7. Results

- color fidelity and denoise effects;

- calibration effects;

- photogrammetry pipeline.

8. Discussion

9. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Negri, M.; Marini, G. Le 100 Parole dei Musei; Marsilio: Venezia, Italy, 2020.

- Europeana DSI 2—Access to Digital Resources of European Heritage—Deliverable D4.4. Report on ENUMERATE Core Survey 4. Available online: https://pro.europeana.eu/files/Europeana_Professional/Projects/Project_list/Europeana_DSI-2/Deliverables/ (accessed on 17 November 2020).

- Europeana Pro. Available online: https://pro.europeana.eu/page/digital-collections (accessed on 17 November 2020).

- Grussenmeyer, P.; Al Khalil, O. A Comparison of Photogrammetry Software Packages for the Documentation of Buildings. In Proceedings of the International Federation of Surveyors, Saint Julian’s, Malta, 18–21 September 2000.

- Nocerino, E.; Stathopoulou, E.K.; Rigon, S.; Remondino, F. Surface Reconstruction Assessment in Photogrammetric Applications. Sensors 2020, 20, 5863.

- Deseilligny, M.P.; De Luca, L.; Remondino, F. Automated image-based procedures for accurate artefacts 3D modeling and orthoimage generation. J. Geoinformatics FCE CTU 2011, 6.

- Petrelli, D. Making virtual reconstructions part of the visit: An exploratory study. Digit. Appl. Archaeol. Cult. Herit. 2019, 15, e00123.

- Pierdicca, R.; Frontoni, E.; Zingaretti, P.; Sturari, M.; Clini, P.; Quattrini, R. Advanced Interaction with Paintings by Augmented Reality and High-Resolution Visualization: A Real Case Exhibition. In Augmented and Virtual Reality. AVR 2015; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2015; Volume 9254.

- Berthelot, M.; Nony, N.; Gugi, L.; Bishop, A.; De Luca, L. The Avignon Bridge: A 3D reconstruction project integrating archaeological, historical and geomorphological issues. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, 40, 223–227.

- Fassi, F.; Mandelli, A.; Teruggi, S.; Rechichi, F.; Fiorillo, F.; Achille, C. VR for Cultural Heritage. Augmented Reality, Virtual Reality, and Computer Graphics. AVR 2016; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2016; Volume 9769.

- Pescarin, S.; D’Annibale, E.; Fanini, B.; Ferdani, D. Prototyping on site Virtual Museums: The case study of the co-design approach to the Palatine hill in Rome (Barberini Vineyard) exhibition. In Proceedings of the 3rd Digital Heritage International Congress (DigitalHERITAGE) Held Jointly with 24th International Conference on Virtual Systems & Multimedia (VSMM 2018), San Francisco, CA, USA, 26–30 October 2018; pp. 1–8.

- Agus, M.; Marton, F.; Bettio, F.; Hadwiger, M.; Gobbetti, E. Data-Driven Analysis of Virtual 3D Exploration of a Large Sculpture Collection in Real-World Museum Exhibitions. J. Comput. Cult. Herit. 2018, 11, 1–20.

- Available online: https://www.cultlab3d.de/ (accessed on 21 November 2020).

- Available online: http://witikon.eu/ (accessed on 21 November 2020).

- Menna, F.; Nocerino, E.; Morabito, D.; Farella, E.M.; Perini, M.; Remondino, F. An open source low-cost automatic system for image-based 3D digitization. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, 42, 155–162.

- Potenziani, M.; Callieri, M.; Dellepiane, M.; Corsini, M.; Ponchio, F.; Scopigno, R. 3DHOP: 3D Heritage Online Presenter. Comput. Graph. 2015, 52, 129–141.

- Available online: https://sketchfab.com/ (accessed on 13 June 2020).

- Available online: https://sketchfab.com/britishmuseum (accessed on 21 November 2020).

- Gaiani, M.; Apollonio, F.I.; Fantini, F. Evaluating smartphones color fidelity and metric accuracy for the 3D documentation of small artefacts. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, 42, 539–547.

- Apollonio, F.I.; Foschi, R.; Gaiani, M.; Garagnani, S. How to analyze, preserve, and communicate Leonardo’s drawing? A solution to visualize in RTR fine art graphics established from “the best sense”. J. Comput. Cult. Herit. 2020.

- Akca, D.; Gruen, A. Comparative geometric and radiometric evaluation of mobile phone and still video cameras. Photogramm. Rec. 2009, 24, 217–245.

- Tanskanen, P.; Kolev, K.; Meier, L.; Camposeco, F.; Saurer, O.; Pollefeys, M. Live metric 3D reconstruction on mobile phones. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, NSW, Australia, 1–8 December 2013; pp. 65–72.

- Kos, A.; Tomažič, S.; Umek, A. Evaluation of Smartphone Inertial Sensor Performance for Cross-Platform Mobile Applications. Sensors 2016, 16, 477.

- Smartphones vs Cameras: Closing the Gap on Image Quality, Posted on 19 March 2020 by David Cardinal 2020. Available online: https://www.dxomark.com/smartphones-vs-cameras-closing-the-gap-on-image-quality/ (accessed on 21 November 2020).

- Nocerino, E.; Lago, F.; Morabito, D.; Remondino, F.; Porzi, L.; Poiesi, F.; Rota Bulo, S.; Chippendale, P.; Locher, A.; Havlena, M.; et al. A smartphone-based 3D pipeline for the creative industry—The REPLICATE EU Project. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, 42, 535–541.

- Poiesi, F.; Locher, A.; Chippendale, P.; Nocerino, E.; Remondino, F.; Van Gool, L. Cloud-based collaborative 3D reconstruction using smartphones. In Proceedings of the 14th European Conference on Visual Media Production (CVMP 2017), London, UK, 11–13 December 2017; ACM: New York, NY, USA, 2017; pp. 1–9.

- Pintore, G.; Garro, V.; Ganovelli, F.; Agus, M.; Gobbetti, E. Omnidirectional image capture on mobile devices for fast automatic generation of 2.5D indoor maps. In Proceedings of the IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Placid, NY, USA, 7–10 March 2016; pp. 1–9.

- Ondruska, P.; Kohli, P.; Izad, S. MobileFusion: Real-time Volumetric Surface Reconstruction and Dense Tracking on Mobile Phones. IEEE Trans. Vis. Comput. Graph. 2015, 21, 1251–1258. [Google Scholar] [CrossRef] [PubMed]

- Apollonio, F.I.; Gaiani, M.; Baldissini, S. Color definition of open-air Architectural heritage and Archaeology artworks with the aim of conservation. Digit. Appl. Archaeol. Cult. Herit. 2017, 7, 10–31.

- Gaiani, M.; Remondino, F.; Apollonio, F.I.; Ballabeni, A. An Advanced Pre-Processing Pipeline to Improve Automated Photogrammetric Reconstructions of Architectural Scenes. Remote Sens. 2016, 8, 178.

- Gaiani, M.; Apollonio, F.I.; Bacci, G.; Ballabeni, A.; Bozzola, M.; Foschi, R.; Garagnani, S.; Palermo, R. Seeing inside drawings: A system for analysing, conserving, understanding and communicating Leonardo’s drawings. In Leonardo in Vinci. At the Origins of the Genius; Giunti Editore: Milano, Italy, 2019; pp. 207–240.

- Ullman, S. The interpretation of structure from motion. Proc. R. Soc. Lond. 1979, 203, 405–426.

- Hartley, R.; Zisserman, A. Multiple View Geometry in Computer Vision; Cambridge University Press: New York, NY, USA, 2003.

- Hartmann, W.; Havlena, M.; Schindler, K. Recent developments in large-scale tie-point matching. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, 115, 47–62.

- Schönberger, J.L.; Frahm, J.-M. Structure-from-motion revisited. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 4104–4113.

- Remondino, F.; Spera, M.G.; Nocerino, E.; Menna, F.; Nex, F. State of the art in high density image matching. Photogramm. Rec. 2014, 146, 144–166.

- Gonizzi-Barsanti, S.; Remondino, F.; Jiménez Fernández-Palacios, B.; Visintini, D. Critical factors and guidelines for 3D surveying and modelling in Cultural Heritage. Int. J. Herit. Digit. Era 2015, 3, 142–158.

- Toschi, I.; Capra, A.; De Luca, L.; Beraldin, J.-A.; Cournoyer, L. On the evaluation of photogrammetric methods for dense 3D surface reconstruction in a metrological context. In ISPRS Annals of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Proceedings of the ISPRS Technical Commission V Symposium, Riva del Garda, Italy, 23–25 June 2014; ISPRS: Hannover, Germany, 2014; Volume 2, pp. 371–378.

- Remondino, F.; Gaiani, M.; Apollonio, F.I.; Ballabeni, A.; Ballabeni, M.; Morabito, D. 3D Documentation of 40 Km of Historical Porticoes—The Challenge. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, 41, 711–718.

- Apollonio, F.I.; Gaiani, M.; Basilissi, W.; Rivaroli, L. Photogrammetry driven tools to support the restoration of open-air bronze surfaces of sculptures: An integrated solution starting from the experience of the Neptune Fountain in Bologna. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, 42, 47–54.

- Apollonio, F.I.; Ballabeni, M.; Bertacchi, S.; Fallavollita, F.; Foschi, R.; Gaiani, M. From documentation images to restauration support tools: A path following the Neptune Fountain in Bologna design process. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, 42, 329–336.

- Remondino, F.; Nocerino, E.; Toschi, I.; Menna, F. A critical review of automated photogrammetric processing of large datasets. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, 42, 591–599.

- Barsanti, S.G.; Guidi, G.; De Luca, L. Segmentation of 3D Models for Cultural Heritage Structural Analysis–Some Critical Issues. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, 4, 115.

- Grilli, E.; Remondino, F. Classification of 3D Digital Heritage. Remote Sens. 2019, 11, 847.

- Oses, N.; Dornaika, F.; Moujahid, A. Image-based delineation and classification of built heritage masonry. Remote Sens. 2014, 6, 1863–1889.

- Rushmeier, H.; Bernardini, F.; Mittleman, J.; Taubin, G. Acquiring Input for Rendering at Appropriate Levels of Detail: Digitizing a Pietà. In Rendering Techniques ’98. EGSR 1998; Eurographics; Springer: Vienna, Austria, 1998.

- Apollonio, F.I.; Gaiani, M.; Benedetti, B. 3D reality-based artefact models for the management of archaeological sites using 3D GIS: A framework starting from the case study of the Pompeii Archaeological area. J. Archaeol. Sci. 2012, 39, 1271–1287.

- Nicodemus, F.E. Directional reflectance and emissivity of an opaque surface. Appl. Opt. 1965, 4, 767–775.

- Guarnera, D.; Guarnera, G.C.; Ghosh, A.; Denk, C.; Glencross, M. BRDF Representation and Acquisition. Comput. Graph. Forum 2016, 35, 625–650.

- Westin, S.H.; Arvo, J.; Torrance, K.E. Predicting reflectance functions from complex surfaces. In Proceedings of the SIGGRAPH 92; ACM: New York, NY, USA, 1992; pp. 255–264.

- Torrance, K.E.; Sparrow, E.M. Theory for off-specular reflection from roughened surfaces. J. Opt. Soc. Am. 1967, 57, 1105–1114.

- Burley, B. Extending Disney’s Physically Based BRDF with Integrated Subsurface Scattering. In SIGGRAPH’15 Courses; ACM: New York, NY, USA, 2015; Article 22.

- Goral, C.M.; Torrance, K.E.; Greenberg, D.P.; Battaile, B. Modeling the Interaction of Light between Diffuse Surfaces. In Proceedings of the SIGGRAPH 84; ACM: New York, NY, USA, 1984; pp. 213–222.

- Stamatopoulos, C.; Fraser, C.; Cronk, S. Accuracy aspects of utilizing RAW imagery in photogrammetric measurement. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2012, 39, 387–392.

- IEEE. IEEE Standard for Camera Phone Image Quality (CPIQ); IEEE P1858; IEEE: New York, NY, USA, 2017.

- ISO. Graphic Technology and Photography—Color Characterisation of Digital Still Cameras (DSCs); ISO 17321-1; ISO: Geneva, Switzerland, 2012.

- Wandell, B.A.; Farrell, J.E. Water into wine: Converting scanner RGB to tristimulus XYZ. Device-Indep. Color Imaging Imaging Syst. Integr. 1993, 1909, 92–100.

- McCamy, C.S.; Marcus, H.; Davidson, J. A color-rendition chart. J. Appl. Photogr. Eng. 1976, 2, 95–99.

- Botsch, M.; Kobbelt, L.; Pauly, M.; Alliez, P.; Levy, B. Polygon Mesh Processing; A K Peters: Natick, MA, USA; CRC Press: Boca Raton, FL, USA, 2010.

- Alliez, P.; Ucelli, G.; Gotsman, C.; Attene, M. Recent Advances in Remeshing of Surfaces. In Shape Analysis and Structuring; De Floriani, L., Spagnuolo, M., Eds.; Springer: Berlin/Heidelberg, Germany, 2008.

- Alliez, P.; Cohen-Steiner, D.; Devillers, O.; Levy, B.; Desbrun, M. Anisotropic polygonal remeshing. ACM Trans. Graph. 2003, 22, 485–493.

- Bommes, D.; Lévy, B.; Pietroni, N.; Puppo, E.; Silva, C.; Tarini, M.; Zorin, D. Quad-Mesh Generation and Processing: A Survey. Comput. Graph. Forum 2013, 32, 51–76.

- Guidi, G.; Angheleddu, D. Displacement Mapping as a Metric Tool for Optimizing Mesh Models Originated by 3D Digitization. ACM J. Comput. Cult. Herit. 2016, 9, 1–23.

- Schertler, N.; Tarini, M.; Jakob, W.; Kazhdan, M.; Gumhold, S.; Panozzo, D. Field-Aligned Online Surface Reconstruction. ACM Trans. Graph. 2017, 36, 1–13.

- DeRose, T.; Kass, M.; Truong, T. Subdivision surfaces in character animation. In Proceedings of the SIGGRAPH 98; ACM: New York, NY, USA, 1998; pp. 85–94.

- Akenine-Möller, T.; Haines, E.; Hoffman, N. Real-Time Rendering, 4th ed.; Taylor & Francis: Abingdon, UK; CRC Press: Boca Raton, FL, USA, 2018.

- Blinn, J.F. Simulation of Wrinkled Surfaces. Comput. Graph. 1978, 12, 286–292.

- Cohen, J.; Olano, M.; Manocha, D. Appearance-Preserving Simplification. In Proceedings of the SIGGRAPH 98; ACM: New York, NY, USA, 1998; pp. 115–122.

- Cignoni, P.; Montani, C.; Scopigno, R.; Rocchini, C. A general method for preserving attribute values on simplified meshes. In Proceedings of the Conference on Visualization ’98 (VIS ’98), Research Triangle Park, NC, USA, 18–23 October 1998; pp. 59–66.

- Woodham, R.J. Photometric method for determining surface orientation from multiple images. Opt. Eng. 1980, 19, 139–144.

- Relio2. Available online: www.relio.it (accessed on 17 November 2020).

- Collins, T.; Woolley, S.I.; Gehlken, E.; Ch’ng, E. Automated Low-Cost Photogrammetric Acquisition of 3D Models from Small Form-Factor Artefacts. Electronics 2019, 8, 1441.

- Kraus, K. Photogrammetry. Advanced Methods and Applications; Dummler: Bonn, Germany, 1997; Volume 2.

- Gaiani, M.; Apollonio, F.I.; Ballabeni, A.; Remondino, F. Securing Color Fidelity in 3D Architectural Heritage Scenarios. Sensors 2017, 17, 2437.

- Morel, J.-M.; Yu, G. ASIFT: A new framework for fully affine invariant comparison. SIAM J. Imaging Sci. 2009, 2, 438–469.

- Gaiani, M. I Portici di Bologna Architettura, Modelli 3D e Ricerche Tecnologiche; Bononia University Press: Bologna, Italy, 2015.

- Fischler, M.A.; Bolles, R.C. Random Sample Consensus: A Paradigm for Model Fitting with Applications to Image Analysis and Automated Cartography. Commun. ACM 1981, 24, 381–395.

- Triggs, B.; McLauchlan, P.F.; Hartley, R.I.; Fitzgibbon, A.W. Bundle adjustment—A modern synthesis. In International Workshop on Vision Algorithms; Springer: Berlin/Heidelberg, Germany, 1999; pp. 298–372.

- Schönberger, J.L.; Zheng, E.; Pollefeys, M.; Frahm, J.M. Pixelwise View Selection for Unstructured Multi-View Stereo. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 8–16 October 2016.

- Wenzel, K.; Rothermel, M.; Haala, N.; Fritsch, D. SURE–The Ifp Software for Dense Image Matching; Wichmann: Berlin/Offenbach, Germany; VDE Verlag: Berlin, Germany, 2013.

- Hirschmüller, H. Stereo processing by semiglobal matching and mutual information. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 30, 328–341.

- Jakob, W.; Tarini, M.; Panozzo, D.; Sorkine-Hornung, O. Instant field-aligned meshes. ACM Trans. Graph. 2015, 34, 6.

- García-León, J.; Sánchez-Allegue, P.; Peña-Velasco, C.; Cipriani, L.; Fantini, F. Interactive dissemination of the 3D model of a baroque altarpiece: A pipeline from digital survey to game engines. SCIRES-IT Sci. Res. Inf. Technol. 2018, 8, 59–76.

- Ballabeni, A.; Apollonio, F.; Gaiani, M.; Remondino, F. Advances in image pre-processing to improve automated 3D reconstruction. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, 40, 315–323.

- Karaimer, H.C.; Brown, M.S. A Software Platform for Manipulating the Camera Imaging Pipeline. In Computer Vision—ECCV 2016; Lecture Notes in Computer Science; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Springer: Cham, Switzerland, 2016; Volume 9905, pp. 429–444.

- Dabov, K.; Foi, A.; Katkovnik, V.; Egiazarian, K. Image denoising by sparse 3-D transform-domain collaborative filtering. IEEE Trans. Image Process. 2007, 16, 2080–2095.

- Mäkinen, Y.; Azzari, L.; Foi, A. Collaborative Filtering of Correlated Noise: Exact Transform-Domain Variance for Improved Shrinkage and Patch Matching. IEEE Trans. Image Process. 2020, 29, 8339–8354.

- Plötz, T.; Roth, S. Benchmarking Denoising Algorithms with Real Photographs. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2750–2759.

- Pascale, D. RGB Coordinates of the Macbeth ColorChecker; The BabelColor Company: Montreal, QC, Canada, 2006; pp. 1–16.

- Available online: https://www.xrite.com/service-support/new_color_specifications_for_colorchecker_sg_and_classic_charts (accessed on 21 November 2020).

- Wyszecki, G.; Stiles, W.S. Color Science—Concepts and Methods, Quantitative Data and Formulae; Wiley: Danvers, MA, USA, 2000.

- Gaiani, M.; Ballabeni, A. SHAFT (SAT & HUE Adaptive Fine Tuning), a new automated solution for target-based color correction. Color Colorimetry Multidiscip. Contrib. 2018, 14, 69–80.

- Available online: https://github.com/Beep6581/RawTherapee (accessed on 21 November 2020).

- Kim, E.-S.; Lee, S.-H.; Jang, S.-W.; Sohng, K.-I. Adaptive colorimetric characterization of camera for the variation of white balance. IEICE Trans. Electron. 2005, 88, 2086–2089.

- Sharma, G.; Wu, W.; Dalal, E.N. The CIEDE2000 Color-Difference Formula: Implementation Notes, Supplementary Test Data, and Mathematical Observations. Color Res. Appl. 2005, 30, 21–30.

- ISO. Colorimetry—Part 6: CIEDE2000 Color-Difference Formula; ISO/CIE 11664-6; ISO: Geneva, Switzerland, 2014.

- Melgosa, M.; Alman, D.H.; Grosman, M.; Gómez-Robledo, L.; Trémeau, A.; Cui, G.; García, P.A.; Vázquez, D.; Li, C.; Luo, M.R. Practical demonstration of the CIEDE2000 corrections to CIELAB using a small set of sample pairs. Color Res. Appl. 2013, 38, 429–436.

- Mokrzycki, W.S.; Tatol, M. Color difference Delta E—A survey. Mach. Graph. Vis. 2012, 20, 383–411.

- Stokes, M.; Anderson, M.; Chandrasekar, S.; Motta, R. A Standard Default Color Space for the Internet-sRGB. 1996. Available online: http://www.w3.org/Graphics/Color/sRGB.html (accessed on 17 November 2020).

- Botsch, M.; Kobbelt, L. A Remeshing Approach to Multiresolution Modeling. In Proceedings of the Eurographics/ACM SIGGRAPH Symposium on Geometry Processing, SGP ’04, Nice, France, 8–10 July 2004; ACM: New York, NY, USA, 2004; pp. 185–192.

- Dunyach, M.; Vanderhaeghe, D.; Barthe, L.; Botsch, M. Adaptive Remeshing for Real-Time Mesh Deformation. In Proceedings of the Eurographics 2013, Girona, Spain, 6–10 May 2013; The Eurographics Association: Geneva, Switzerland, 2013; pp. 29–32.

- Mikkelsen, M. Simulation of Wrinkled Surfaces Revisited. Master’s Thesis, University of Copenhagen, Copenhagen, Denmark, 26 March 2008.

- Cipriani, L.; Fantini, F.; Bertacchi, S. 3D models mapping optimization through an integrated parameterization approach: Cases studies from Ravenna. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2014, 45, 173–180.

- Brown, D.C. Close-range camera calibration. Photogramm. Eng. 1971, 37, 855–866.

- Fryer, J.G. Camera calibration. In Close Range Photogrammetry and Machine Vision; Atkinson, K.B., Ed.; Chapter 6; Whittles Publishing: Dunbeath, UK, 2001; pp. 156–179.

- Remondino, F.; Fraser, C.S. Digital camera calibration methods: Considerations and comparisons. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2006, 36, 266–272.

- Song, T.; Luo, M.R. Testing color-difference formulae on complex images using a CRT monitor. In Proceedings of the IS&T and SID Eighth Color Imaging Conference, Scottsdale, AZ, USA, 7–10 November 2000; pp. 44–48.

- Imatest LLC. Imatest Master. 2020. Available online: https://www.imatest.com/products/imatest-master/ (accessed on 29 January 2021).

- Girardeau-Montaut, D. CloudCompare (Version 2.11.2) [GPL Software]. 2020. Available online: http://www.cloudcompare.org/ (accessed on 18 June 2020).

- Horn, B.K. Closed-form solution of absolute orientation using unit quaternions. JOSA A 1987, 4, 629–642.

- Chen, Y.; Medioni, G. Object modelling by registration of multiple range images. Image Vis. Comput. 1992, 10, 145–155.

- Rusinkiewicz, S.; Levoy, M. Efficient variants of the ICP algorithm. In Proceedings of the Third International Conference on 3-D Digital Imaging and Modeling, Quebec City, QC, Canada, 28 May–1 June 2001; pp. 145–152.

Classification criteria based on intrinsic and extrinsic features; the surface characteristics comprise the reflective material, transmissive material and texture columns. D = diagonal of the bounding box; d = model resolution. Class values are given in parentheses.

| D/d | Cavity Ratio (CR) | Material Reflective | Material Transmissive | Texture | Spatial Distribution (SD) | Topological Complexity (TC) | Boundary Condition (BC) |
|---|---|---|---|---|---|---|---|
| 10 (1) | <1 (1) | Lambertian (1) | Opaque (1) | Even distribution (1) | 3D X ≃ Y ≃ Z (1) | 0 holes (1) | 5 sides free (1) |
| 100 (2) | 1 (2) | Dielectric (2) | Translucent (2) | Odd distribution (2) | 2D X ≃ Y >> Z (2) | 1 hole (2) | 4 sides free (2) |
| 1000 (3) | >1 (3) | Conductor (3) | Transparent (3) | Absent (3) | 1D X ≃ Y << Z (3) | 2 holes (3) | 3 sides free (3) |
| >1000 (N) | >>1 (4) | | | | | n holes (N) | 2 sides free (4) |

| Collection Pieces | D/d Ratio | Cavity Ratio (CR) | Material Reflective | Material Transmissive | Texture | Topological Complexity (TC) | Spatial Distribution (SD) | Boundary Condition (BC) | Presence of Movable Parts | Score |
|---|---|---|---|---|---|---|---|---|---|---|
| (a) Hercules | 1527.15 | <1 (1) | Lambertian (1) | Opaque (1) | Even distribution (1) | 4 holes (5) | 3D (1) | 3 sides free (3) | No (1) | 14 |
| (b) Porcupine fish | 2404 | <1 (1) | Mixed (1), (2), (3) | Opaque (1) | Even distribution (1) | 0 holes (1) | 3D (1) | 5 sides free (1) | One degree of freedom (rotation) (2) | 9–11 |
| (c) Horn d’Arturo Globe | 322.5 | <1 (1) | Dielectric (2) | Opaque (1) | Even distribution (1) | 0 holes (1) | 3D (1) | 5 sides free (1) | One degree of freedom (rotation) (2) | 10 |
| (d) Marsili bust | 2536 | <1 (1) | Dielectric (2) | Translucent (2) | Even distribution (1) | 1 hole (2) | 3D (1) | 5 sides free (1) | No (1) | 11 |
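In the table above, each Score equals the sum of the class values in parentheses across the scored criteria. The D/d ratio is listed as a raw value and does not enter the total, and a mixed-reflectance surface produces a range rather than a single number. The sketch below assumes exactly that additive rule, which reproduces all four totals. The function name `total_score` and the dictionary keys are illustrative choices, not the authors' code.

```python
from typing import Dict, Tuple, Union

# A criterion is scored either with one class value or, for mixed materials,
# with several admissible class values (e.g. reflective (1), (2), (3)).
Score = Union[int, Tuple[int, ...]]


def total_score(criteria: Dict[str, Score]) -> Tuple[int, int]:
    """Return the (min, max) total obtained by summing the class values.

    Assumption: the total is a plain sum; the D/d ratio is excluded because
    the table reports it as a raw value without a class.
    """
    lo = hi = 0
    for value in criteria.values():
        options = value if isinstance(value, tuple) else (value,)
        lo += min(options)
        hi += max(options)
    return lo, hi


# Class values transcribed from the table (D/d ratio omitted).
pieces = {
    "Hercules": {"CR": 1, "reflective": 1, "transmissive": 1, "texture": 1,
                 "TC": 5, "SD": 1, "BC": 3, "movable": 1},
    "Porcupine fish": {"CR": 1, "reflective": (1, 2, 3), "transmissive": 1,
                       "texture": 1, "TC": 1, "SD": 1, "BC": 1, "movable": 2},
    "Horn d'Arturo Globe": {"CR": 1, "reflective": 2, "transmissive": 1,
                            "texture": 1, "TC": 1, "SD": 1, "BC": 1, "movable": 2},
    "Marsili bust": {"CR": 1, "reflective": 2, "transmissive": 2, "texture": 1,
                     "TC": 2, "SD": 1, "BC": 1, "movable": 1},
}

for name, crit in pieces.items():
    lo, hi = total_score(crit)
    print(name, lo if lo == hi else f"{lo}-{hi}")
```

Running the loop prints 14, 9-11, 10 and 11, in line with the Score column.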

| | Nikon D5200 | iPhone X |
|---|---|---|
| Sensor name | Sony | Sony Exmor RS IMX315 |
| Sensor type | APS-C CMOS | CMOS |
| Sensor diagonal (mm) | 28.21 | 6.15 |
| Sensor size | 23.5 × 15.6 mm | 4.92 × 3.69 mm |
| Image resolution | 6000 × 4000 px | 4032 × 3024 px |
| Pixel size | 3.9 µm | 1.22 µm |
| Focal length | 18 mm (28 mm full-frame equivalent) | 4 mm (28 mm full-frame equivalent) |
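The derived rows of the camera table (pixel size, sensor diagonal, full-frame equivalent focal length) follow from the sensor dimensions and image resolution. The sketch below recomputes them under two assumptions: pixels are square, and the crop factor is the ratio between the diagonal of the 36 × 24 mm full frame and the sensor diagonal. That ratio is a common convention, but other definitions exist.

```python
import math

# Diagonal of the 36 x 24 mm full-frame format, about 43.27 mm.
FULL_FRAME_DIAGONAL_MM = math.hypot(36.0, 24.0)


def sensor_summary(width_mm: float, height_mm: float, width_px: int, focal_mm: float):
    """Return pixel pitch (um), sensor diagonal (mm) and full-frame equivalent focal (mm)."""
    pitch_um = width_mm / width_px * 1000.0          # assumes square pixels
    diagonal_mm = math.hypot(width_mm, height_mm)
    crop_factor = FULL_FRAME_DIAGONAL_MM / diagonal_mm
    return pitch_um, diagonal_mm, focal_mm * crop_factor


cameras = {"Nikon D5200": (23.5, 15.6, 6000, 18.0),
           "iPhone X": (4.92, 3.69, 4032, 4.0)}
for name, args in cameras.items():
    pitch, diag, equiv = sensor_summary(*args)
    print(f"{name}: pitch {pitch:.2f} um, diagonal {diag:.2f} mm, equiv. focal {equiv:.1f} mm")
```

The computed equivalent focal lengths are about 27.6 mm and 28.1 mm, and both round to the 28 mm given in the table.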

| | Hercules | Porcupinefish | Horn d’Arturo’s Globe | Marsili Bust |
|---|---|---|---|---|
| Rotating table | no | yes | yes | yes |
| Relio2 4 × 2 array (static) | no | no | yes | no |
| Relio2 8 × 2 array (movable) | no | yes | no | yes |
| White canvas box | no | yes | no | yes |
| Black canvas curtain | no | no | yes | no |

| | Hercules | Porcupinefish | Horn d’Arturo’s Globe | Marsili Bust |
|---|---|---|---|---|
| Photos | 378 | 221 | 100 | 542 |
| Rows | 12 | 6 | 6 | 8 |

| | iPhone X ΔE*00 Mean | iPhone X ΔE*00 Max | iPhone X ΔL* | iPhone X Exposure Error (f-stops) | Nikon D5200 ΔE*00 Mean | Nikon D5200 ΔE*00 Max | Nikon D5200 ΔL* | Nikon D5200 Exposure Error (f-stops) |
|---|---|---|---|---|---|---|---|---|
| Porcupinefish | 3.67 | 8.11 | 2.52 | −0.04 | 2.79 | 6.79 | 1.72 | −0.03 |
| Horn d’Arturo’s Globe | 3.05 | 7.38 | 2.02 | −0.10 | 2.47 | 6.43 | 1.43 | −0.01 |
| Marsili Bust | 3.48 | 3.16 | 1.67 | 0.10 | 2.70 | 2.51 | 1.54 | 0.07 |
| Heracles | 3.52 | 10.19 | 1.68 | −0.03 | 2.91 | 9.55 | 1.35 | −0.02 |
| Mean Error | 3.49 | 5.22 | 1.78 | 0.05 | 2.74 | 4.22 | 1.53 | 0.049 |
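The ΔE*00 columns use the CIEDE2000 color-difference formula (Sharma et al. and ISO/CIE 11664-6 in the reference list). The sketch below is a compact implementation that follows Sharma et al.'s implementation notes, with the parametric factors kL = kC = kH = 1. It is not the authors' code, and it does not cover how the per-patch values were aggregated into the mean and max columns.

```python
import math
from typing import Tuple

Lab = Tuple[float, float, float]


def ciede2000(lab1: Lab, lab2: Lab, kL: float = 1.0, kC: float = 1.0, kH: float = 1.0) -> float:
    """CIEDE2000 color difference between two CIELAB triplets (Sharma et al. 2005)."""
    L1, a1, b1 = lab1
    L2, a2, b2 = lab2

    # Rescale a* to compensate for hue non-uniformity near the neutral axis.
    c_bar = (math.hypot(a1, b1) + math.hypot(a2, b2)) / 2.0
    g = 0.5 * (1.0 - math.sqrt(c_bar ** 7 / (c_bar ** 7 + 25.0 ** 7)))
    a1p, a2p = (1.0 + g) * a1, (1.0 + g) * a2
    c1p, c2p = math.hypot(a1p, b1), math.hypot(a2p, b2)
    # Hue angles in degrees, set to 0 for achromatic colors.
    h1p = math.degrees(math.atan2(b1, a1p)) % 360.0 if c1p else 0.0
    h2p = math.degrees(math.atan2(b2, a2p)) % 360.0 if c2p else 0.0

    # Lightness, chroma and hue differences.
    d_lp = L2 - L1
    d_cp = c2p - c1p
    if c1p * c2p == 0.0:
        d_hp = 0.0
    else:
        d_hp = h2p - h1p
        if d_hp > 180.0:
            d_hp -= 360.0
        elif d_hp < -180.0:
            d_hp += 360.0
    d_big_hp = 2.0 * math.sqrt(c1p * c2p) * math.sin(math.radians(d_hp / 2.0))

    # Mean lightness, chroma and hue.
    lp_bar = (L1 + L2) / 2.0
    cp_bar = (c1p + c2p) / 2.0
    if c1p * c2p == 0.0:
        hp_bar = h1p + h2p
    elif abs(h1p - h2p) <= 180.0:
        hp_bar = (h1p + h2p) / 2.0
    elif h1p + h2p < 360.0:
        hp_bar = (h1p + h2p + 360.0) / 2.0
    else:
        hp_bar = (h1p + h2p - 360.0) / 2.0

    # Weighting functions and the rotation term for the blue region.
    t = (1.0
         - 0.17 * math.cos(math.radians(hp_bar - 30.0))
         + 0.24 * math.cos(math.radians(2.0 * hp_bar))
         + 0.32 * math.cos(math.radians(3.0 * hp_bar + 6.0))
         - 0.20 * math.cos(math.radians(4.0 * hp_bar - 63.0)))
    d_theta = 30.0 * math.exp(-(((hp_bar - 275.0) / 25.0) ** 2))
    r_c = 2.0 * math.sqrt(cp_bar ** 7 / (cp_bar ** 7 + 25.0 ** 7))
    s_l = 1.0 + 0.015 * (lp_bar - 50.0) ** 2 / math.sqrt(20.0 + (lp_bar - 50.0) ** 2)
    s_c = 1.0 + 0.045 * cp_bar
    s_h = 1.0 + 0.015 * cp_bar * t
    r_t = -math.sin(math.radians(2.0 * d_theta)) * r_c

    dl = d_lp / (kL * s_l)
    dc = d_cp / (kC * s_c)
    dh = d_big_hp / (kH * s_h)
    return math.sqrt(dl * dl + dc * dc + dh * dh + r_t * dc * dh)
```

Sharma et al. publish test pairs that can be used to validate such an implementation. For example, (50, 2.6772, −79.7751) against (50, 0, −82.7485) should give ΔE00 ≈ 2.0425.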

| | ΔE*00 Mean (ND) | ΔE*00 Mean (D) | ΔE*00 Max (ND) | ΔE*00 Max (D) | ΔL* (ND) | ΔL* (D) | Exposure Error, f-stops (ND) | Exposure Error, f-stops (D) | SNR_BW, dB R G B Y (ND) | SNR_BW, dB R G B Y (D) |
|---|---|---|---|---|---|---|---|---|---|---|
| Porcupinefish | 4.07 | 4.06 | 8.26 | 8.26 | −0.77 | −0.76 | 0.15 | 0.14 | 30.8 33.6 33.1 34.3 | 31.2 33.8 33.2 34.5 |
| Horn d’Arturo’s Globe | 3.26 | 3.26 | 11 | 11 | 3.28 | 3.28 | 0.39 | 0.39 | 31.0 32.2 30.7 33.2 | 31.3 32.4 30.9 33.4 |
| Marsili Bust | 2.81 | 2.81 | 7.35 | 7.36 | −0.10 | −0.10 | 0.10 | 0.10 | 26.3 28.5 25.5 29.2 | 26.4 28.5 25.6 29.3 |
| Heracles | 3.57 | 3.55 | 11.5 | 11.4 | 0.09 | 0.07 | 0.13 | 0.13 | 30.3 31.8 27.0 32.6 | 30.5 32.1 27.3 32.8 |
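The SNR_BW columns report signal-to-noise ratios in decibels. The minimal sketch below assumes the common definition: SNR is the mean divided by the standard deviation of a nominally uniform patch, expressed as 20·log10(SNR). Imatest's exact SNR_BW procedure, including patch selection and per-channel handling, may differ, so the sketch only illustrates the unit.

```python
import math
import statistics
from typing import Sequence


def snr_db(patch_values: Sequence[float]) -> float:
    """Signal-to-noise ratio of a uniform patch in dB (assumed definition: 20*log10(mean/std))."""
    mean = statistics.mean(patch_values)
    noise = statistics.pstdev(patch_values)
    if noise == 0.0:
        return math.inf  # a perfectly uniform patch has no measurable noise
    return 20.0 * math.log10(mean / noise)


# A patch with mean 100 and standard deviation 1 gives an SNR of 100, i.e. 40 dB.
print(snr_db([99.0, 101.0] * 50))
```

Under this definition, the differences of at most 0.4 dB between the ND and D columns correspond to SNR changes of at most about 5%.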

| | Metashape | Colmap |
|---|---|---|
| Reprojection error (pixel) | 0.176576 | 0.56433 |
| RMS error (pixel) | 0.9740 | 0.89169 |
| Total error, control points | 0.09 mm | 0.08 mm |
| F (focal length) | 3319.06123 | 3331.1384425 |
| Cx (principal point x) | 24.15132 | 25.354289 |
| Cy (principal point y) | −4.88879 | −5.603050 |
| Radial K1 | 0.22259 | 0.106672 |
| Radial K2 | −1.25532 | −0.330726 |
| Tangential P1 | −0.00076 | −0.001048 |
| Tangential P2 | −0.001925 | −0.000411 |
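The K1, K2, P1 and P2 rows are coefficients of the Brown lens-distortion model (Brown, cited above). The minimal sketch below shows how such coefficients map ideal normalized coordinates to distorted ones and then to pixels. It rests on two assumptions: the tangential terms follow OpenCV-style ordering, and the principal point is an offset from the image center. Metashape and COLMAP each document their own parameterization; Metashape's manual, as we read it, pairs P1 with the (r² + 2x²) term. The two columns are therefore not necessarily comparable coefficient by coefficient.

```python
from typing import Tuple


def brown_distort(x: float, y: float, k1: float, k2: float,
                  p1: float, p2: float) -> Tuple[float, float]:
    """Apply radial (k1, k2) and tangential (p1, p2) distortion to normalized
    image coordinates x = X/Z, y = Y/Z (OpenCV-style ordering assumed)."""
    r2 = x * x + y * y
    radial = 1.0 + k1 * r2 + k2 * r2 * r2
    xd = x * radial + 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)
    yd = y * radial + p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y
    return xd, yd


def to_pixels(xd: float, yd: float, f: float, cx_offset: float, cy_offset: float,
              width: int, height: int) -> Tuple[float, float]:
    """Map distorted normalized coordinates to pixel coordinates, assuming the
    principal point is given as an offset from the image center."""
    return width / 2.0 + cx_offset + f * xd, height / 2.0 + cy_offset + f * yd


# The optical axis (0, 0) lands on the principal point of a 4032 x 3024 image.
print(to_pixels(*brown_distort(0.0, 0.0, 0.22259, -1.25532, -0.00076, -0.001925),
                3319.06, 24.15, -4.89, 4032, 3024))
```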

| | Marsili Bust (Uncalibr.) | Marsili Bust (Calibr.) | Porcupinefish (Uncalibr.) | Porcupinefish (Calibr.) | Horn d’Arturo’s Globe (Uncalibr.) | Horn d’Arturo’s Globe (Calibr.) | Heracles (Uncalibr.) | Heracles (Calibr.) |
|---|---|---|---|---|---|---|---|---|
| Mean BA reprojection error (px) | 0.8395 | 0.83592 | 0.66088 | 0.65838 | 0.57023 | 0.57203 | 0.75527 | 0.75084 |
| Oriented images | 582/582 | 582/582 | 141/141 | 141/141 | 76/76 | 76/76 | 299/299 | 299/299 |
| Observations | 1,333,312 | 1,322,218 | 220,568 | 219,749 | 222,170 | 116,293 | 595,392 | 590,599 |
| Points | 194,267 | 192,593 | 50,390 | 50,422 | 42,881 | 24,976 | 115,222 | 114,514 |
| 3D points (dense matching) | 6,314,286 | 6,217,820 | 1,815,027 | 1,769,016 | 1,541,992 | 715,654 | 9,325,104 | 9,307,794 |
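The mean BA reprojection error summarizes how far each measured image observation lies from the reprojection of its triangulated 3D point after bundle adjustment. The minimal sketch below computes that metric. It assumes an ideal pinhole camera, points already expressed in camera coordinates, and no lens distortion. Real pipelines such as COLMAP or Metashape also apply the camera pose and the distortion model, and minimize a (possibly robustified) sum of squared residuals.

```python
import math
from typing import Sequence, Tuple

Point3 = Tuple[float, float, float]
Pixel = Tuple[float, float]


def project(point_cam: Point3, f: float, cx: float, cy: float) -> Pixel:
    """Ideal pinhole projection of a point given in camera coordinates."""
    X, Y, Z = point_cam
    return cx + f * X / Z, cy + f * Y / Z


def mean_reprojection_error(points_cam: Sequence[Point3], observations: Sequence[Pixel],
                            f: float, cx: float, cy: float) -> float:
    """Mean Euclidean distance (px) between observed pixels and reprojected points.

    Each observation pairs one 3D point with one measured image position.
    """
    errors = [math.dist(project(p, f, cx, cy), uv) for p, uv in zip(points_cam, observations)]
    return sum(errors) / len(errors)


pts = [(0.0, 0.0, 10.0), (1.0, 0.0, 10.0)]
obs = [(503.0, 404.0), (600.0, 400.0)]
print(mean_reprojection_error(pts, obs, f=1000.0, cx=500.0, cy=400.0))  # 2.5
```

This assumes the Observations and Points rows follow COLMAP's model statistics. On that reading, dividing Observations by Points gives the mean track length, the average number of images in which a tie point is measured. For the uncalibrated Marsili Bust this is about 6.9 (1,333,312 / 194,267).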

| | Marsili Bust (iPhone X) | Marsili Bust (Nikon D5200) | Porcupinefish (iPhone X) | Porcupinefish (Nikon D5200) | Horn d’Arturo’s Globe (iPhone X) | Horn d’Arturo’s Globe (Nikon D5200) | Heracles (iPhone X) | Heracles (Nikon D5200) |
|---|---|---|---|---|---|---|---|---|
| Mean BA reprojection error (px) | 0.8395 | 0.5430 | 0.6608 | 0.44330 | 0.5703 | 0.4929 | 0.7552 | 0.5982 |
| Oriented images | 582/582 | 582/582 | 141/141 | 141/141 | 76/76 | 76/76 | 299/299 | 299/299 |
| Observations | 1,333,312 | 3,115,014 | 220,568 | 352,664 | 222,170 | 344,729 | 595,392 | 774,034 |
| Points | 194,267 | 401,725 | 50,390 | 90,115 | 42,881 | 60,341 | 115,222 | 176,958 |
| 3D points (dense matching) | 6,314,286 | 6,864,321 | 1,815,027 | 3,216,005 | 1,541,992 | 1,645,099 | 9,325,104 | 30,615,902 |

Figure: maps and histograms of the number of neighbors for the dense point clouds of Heracles (7,860,689 pts), Marsili bust (4,404,955 pts), Horn d’Arturo’s Globe (1,324,657 pts) and Porcupine fish (2,218,009 pts).
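Maps of this kind typically assign each point of a dense cloud the number of other points that lie within a fixed radius. The histogram then shows how those counts are distributed; CloudCompare, cited above, offers such a density computation. The minimal sketch below assumes a spherical neighborhood of user-chosen radius; the radius used for the figure is not stated here. It uses a brute-force O(n²) search for clarity, so clouds of millions of points would need a spatial index such as an octree or k-d tree.

```python
import math
from collections import Counter
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float, float]


def neighbor_counts(points: Sequence[Point], radius: float) -> List[int]:
    """For each point, count the other points within `radius` (brute force)."""
    counts = []
    for i, p in enumerate(points):
        counts.append(sum(1 for j, q in enumerate(points)
                          if j != i and math.dist(p, q) <= radius))
    return counts


def neighbor_histogram(counts: Sequence[int]) -> Dict[int, int]:
    """Histogram of neighbor counts: {number of neighbors: number of points}."""
    return dict(sorted(Counter(counts).items()))


cloud = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0), (5.0, 0.0, 0.0)]
counts = neighbor_counts(cloud, radius=1.0)
print(counts, neighbor_histogram(counts))  # [1, 2, 1, 0] {0: 1, 1: 2, 2: 1}
```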

| Artefact | Comparison | Mean Error | Standard Deviation | Samples within ±1σ |
|---|---|---|---|---|
| Porcupinefish | iPhone X–NextEngine | 0.357 mm | 0.635 mm | 82.59% |
| | iPhone X–Nikon D5200 | 0.077 mm | 0.897 mm | 81.99% |
| Horn d’Arturo’s Globe | iPhone X–NextEngine | 0.190 mm | 1.241 mm | 73.84% |
| | iPhone X–Nikon D5200 | 1.030 mm | 0.891 mm | 82.83% |
| Marsili Bust | iPhone X–NextEngine | 0.472 mm | 0.513 mm | 90.83% |
| | iPhone X–Nikon D5200 | 4.120 mm | 0.808 mm | 77.97% |
| Heracles | iPhone X–Faro TLS | −0.030 mm | 1.932 mm | 67.41% |
| | iPhone X–Nikon D5200 | −0.130 mm | 1.873 mm | 67.94% |
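The comparison statistics summarize the signed deviations between two aligned models: mean error, standard deviation and the share of samples within ±1σ. The minimal sketch below computes the three columns from a list of signed distances. It assumes that σ is the population standard deviation and that the ±1σ window is centered on the mean; a window centered on zero would be an equally plausible reading.

```python
import statistics
from typing import Sequence, Tuple


def deviation_stats(distances: Sequence[float]) -> Tuple[float, float, float]:
    """Mean, population standard deviation and percentage of samples within mean +/- 1 sigma."""
    mean = statistics.mean(distances)
    sigma = statistics.pstdev(distances, mu=mean)
    within = sum(1 for d in distances if abs(d - mean) <= sigma)
    return mean, sigma, 100.0 * within / len(distances)


print(deviation_stats([-1.0, 0.0, 0.0, 0.0, 1.0]))  # (0.0, 0.632..., 60.0)
```

For reference, a normal distribution places about 68.3% of its samples within ±1σ of the mean.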

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Apollonio, F.I.; Fantini, F.; Garagnani, S.; Gaiani, M. A Photogrammetry-Based Workflow for the Accurate 3D Construction and Visualization of Museums Assets. Remote Sens. 2021, 13, 486. https://doi.org/10.3390/rs13030486
