Performance and Feasibility of Drone-Mounted Imaging Spectroscopy for Invasive Aquatic Vegetation Detection

Abstract

1. Introduction

2. Materials and Methods

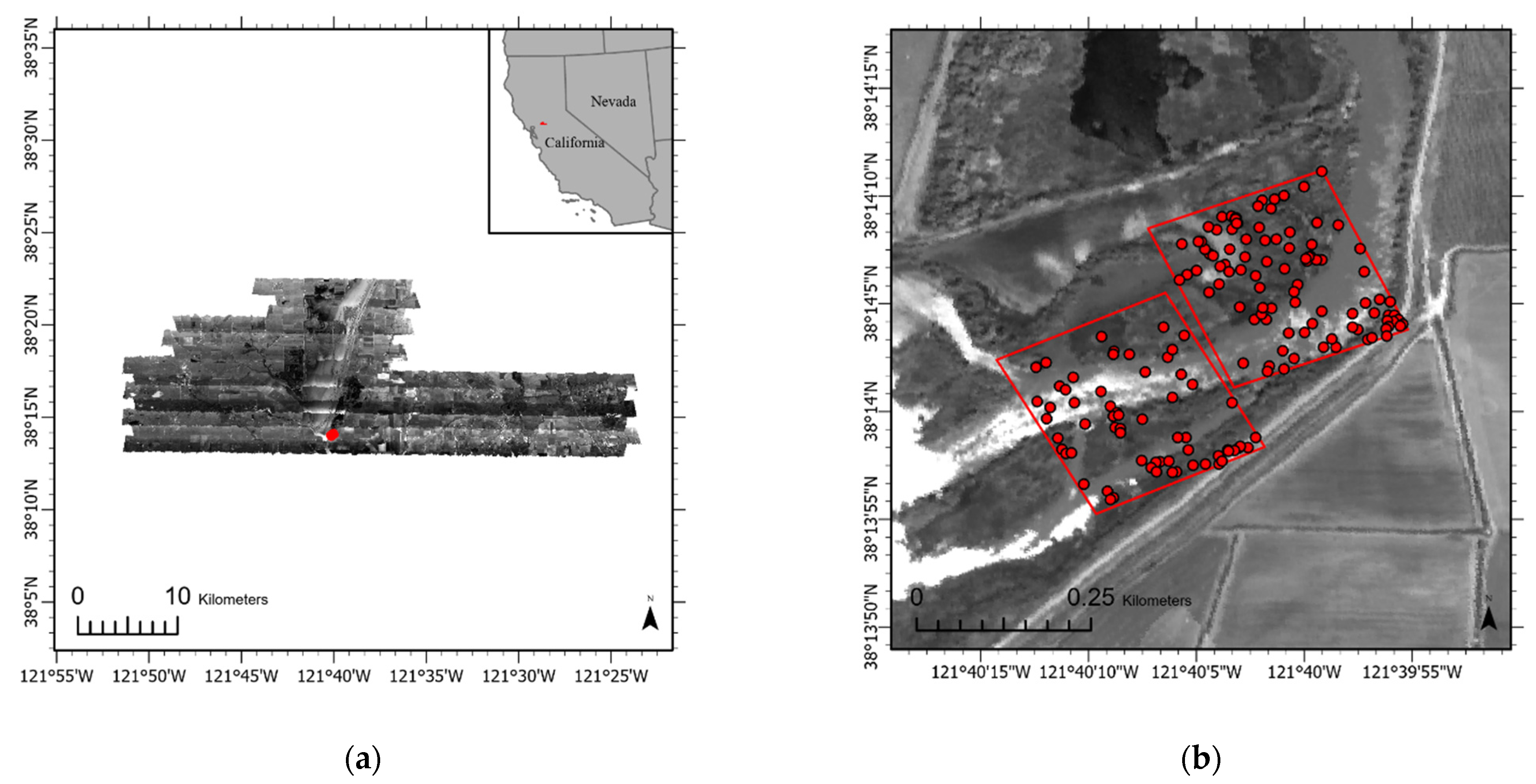

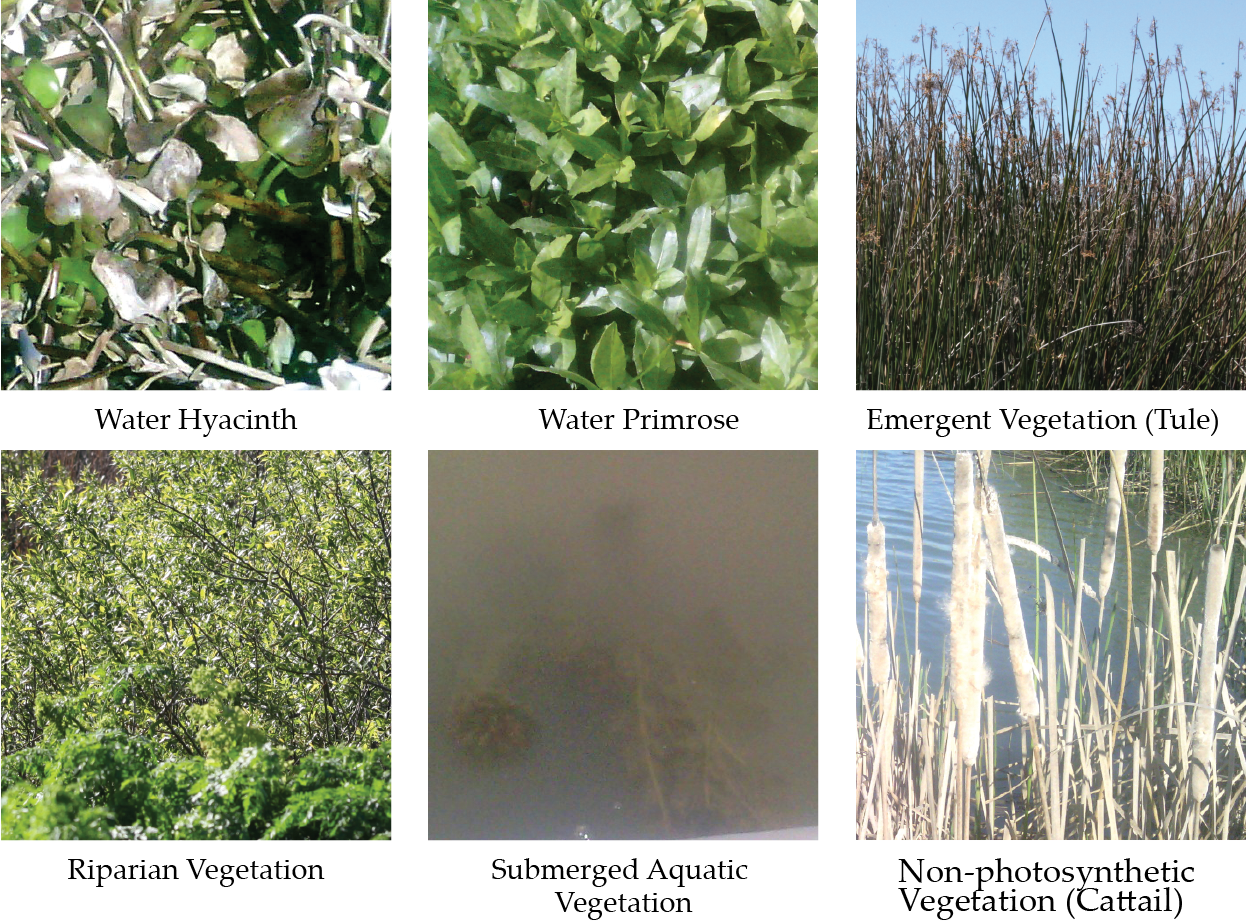

2.1. Study Site and Target Classes

2.2. Imaging Spectroscopy Data

2.3. Ground Reference Data

2.4. Classifier

RF Modeling

2.5. Mapping

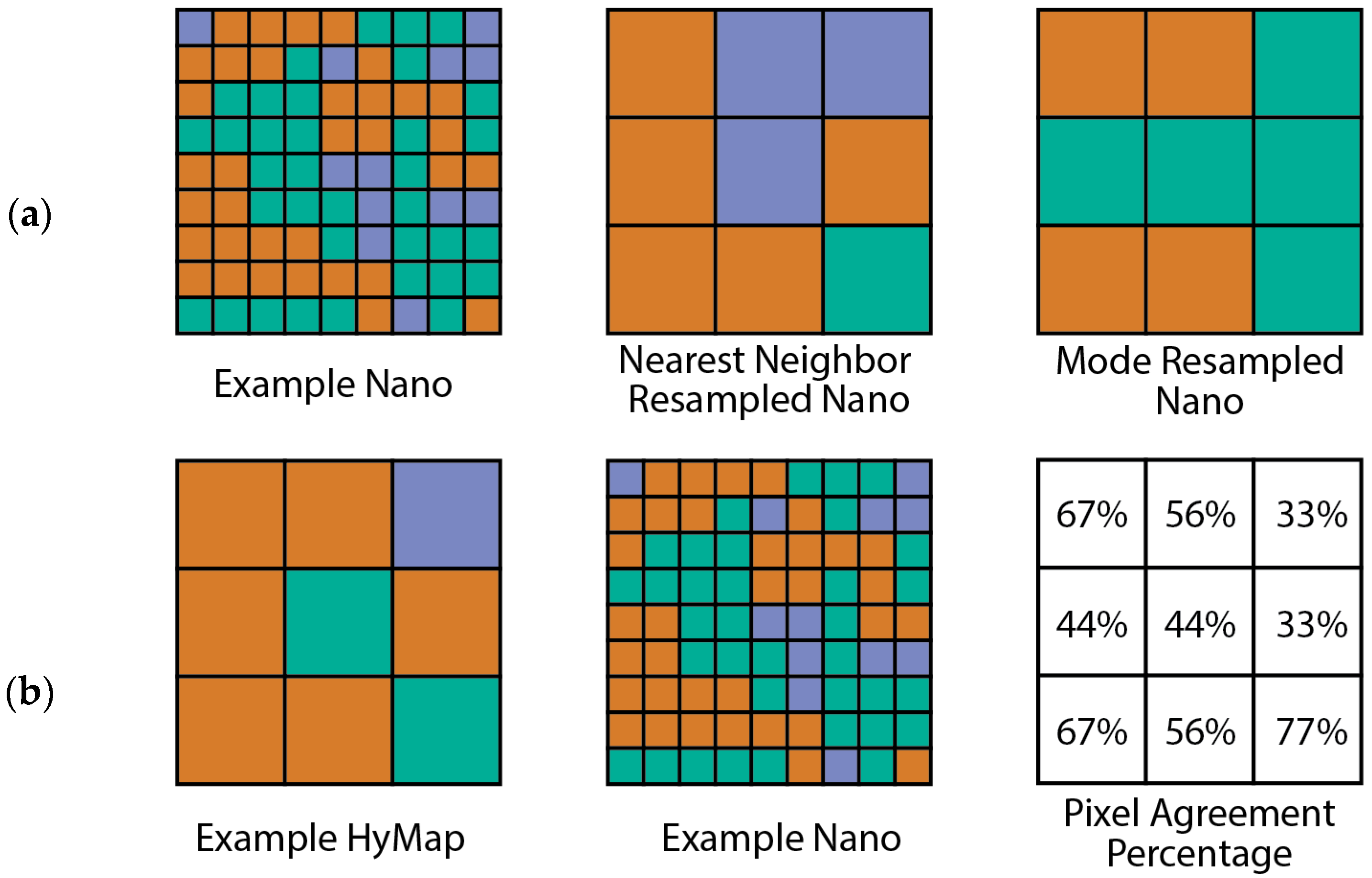

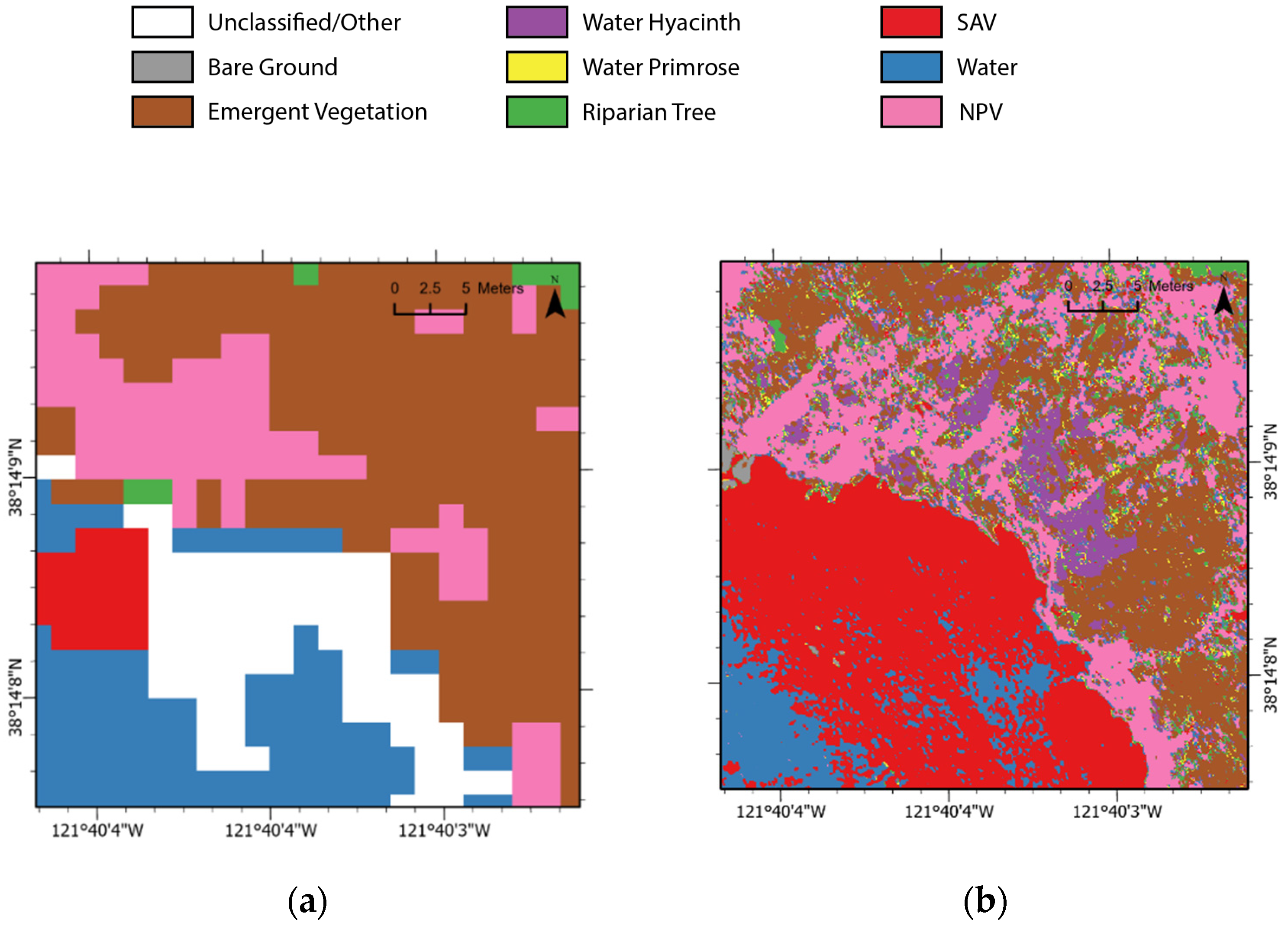

Map Comparisons

- Class Area

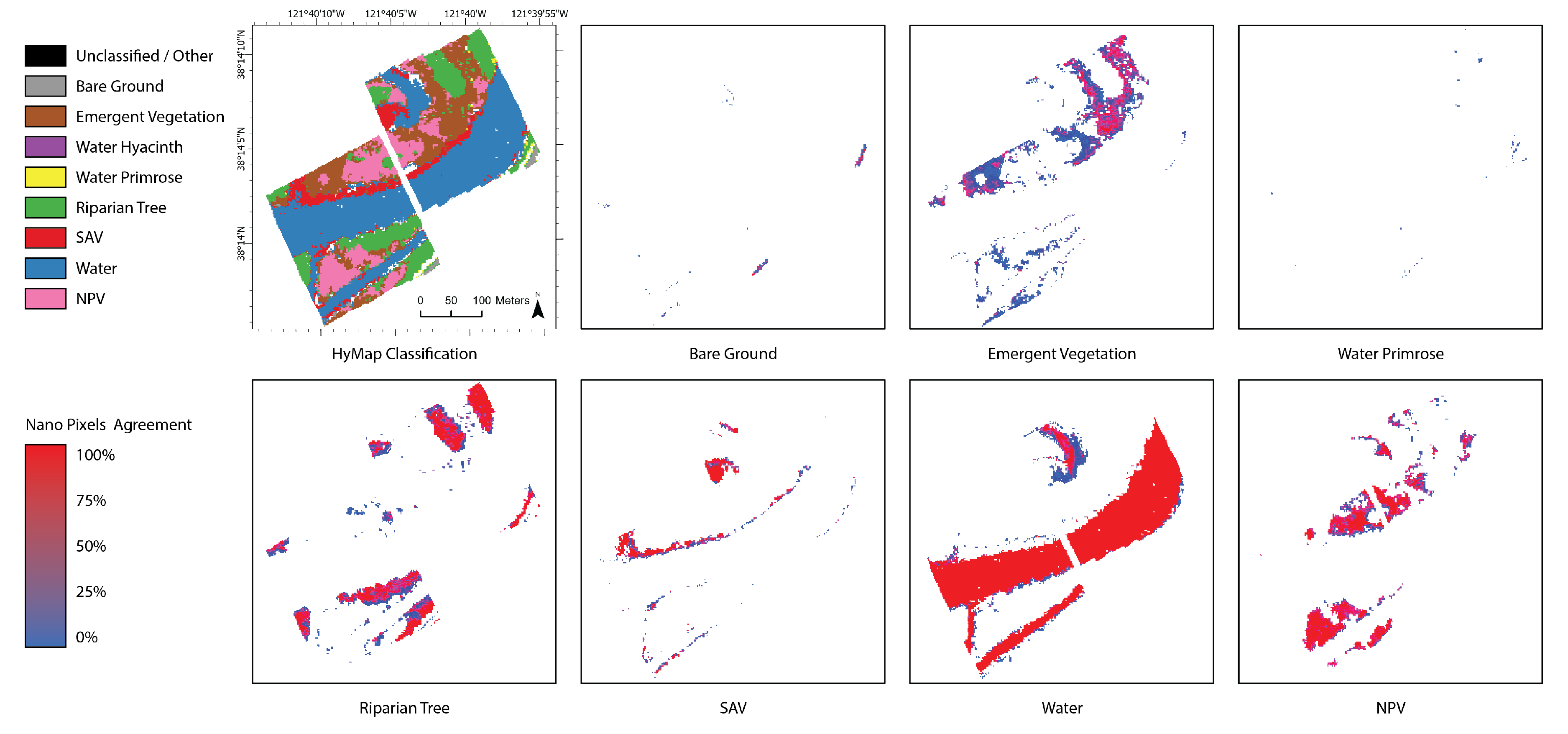

- Percentage of Nano pixels in agreement with HyMap pixels

- Upscaled Nano agreement with HyMap

3. Results

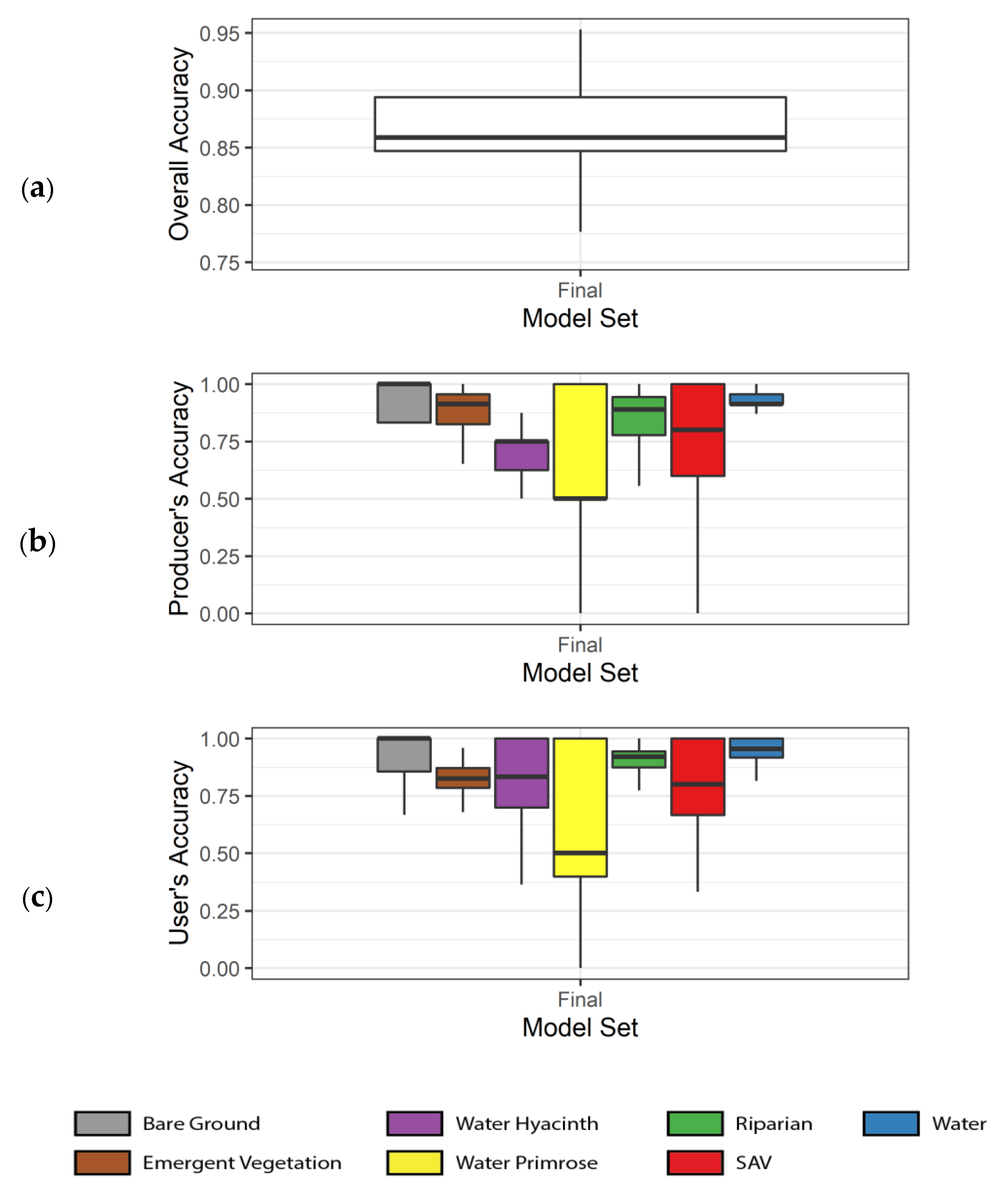

3.1. Model Accuracies

3.2. Variable Reduction and Importance

3.3. Maps

3.3.1. Class Area Comparison

3.3.2. Percent Pixel Agreement

3.3.3. Upscaled Nano Maps Compared to HyMap

4. Discussion

4.1. Model Accuracies and Comparison

4.2. Nano vs. HyMap Maps: How Do They Compare?

4.3. Management Relevance and Operational Considerations

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Bolch, E.A.; Santos, M.J.; Ade, C.; Khanna, S.; Basinger, N.T.; Reader, M.O.; Hestir, E.L. Remote Detection of Invasive Alien Species. In Remote Sensing of Plant Biodiversity; Cavender-Bares, J., Gamon, J., Townsend, P., Eds.; Springer Nature: Cham, Switzerland, 2020; pp. 267–308. ISBN 978-3-030-33156-6.

- Pimentel, D.; Zuniga, R.; Morrison, D. Update on the environmental and economic costs associated with alien-invasive species in the United States. Ecol. Econ. 2005, 52, 273–288.

- Byers, J.E.; Noonburg, E.G. Scale dependent effects of biotic resistance to biological invasion. Ecology 2003, 84, 1428–1433.

- Khanna, S.; Santos, M.J.; Boyer, J.D.; Shapiro, K.D.; Bellvert, J.; Ustin, S.L. Water primrose invasion changes successional pathways in an estuarine ecosystem. Ecosphere 2018, 9, e02418.

- Allen, J.M.; Bradley, B.A. Out of the weeds? Reduced plant invasion risk with climate change in the continental United States. Biol. Conserv. 2016, 203, 306–312.

- Ricciardi, A. Are modern biological invasions an unprecedented form of global change? Conserv. Biol. 2007.

- Seebens, H.; Blackburn, T.M.; Dyer, E.E.; Genovesi, P.; Hulme, P.E.; Jeschke, J.M.; Pagad, S.; Pyšek, P.; Winter, M.; Arianoutsou, M.; et al. No saturation in the accumulation of alien species worldwide. Nat. Commun. 2017, 8, 14435.

- Mortensen, D.A.; Rauschert, E.S.J.; Nord, A.N.; Jones, B.P. Forest Roads Facilitate the Spread of Invasive Plants. Invasive Plant Sci. Manag. 2009, 2, 191–199.

- Masters, G.; Norgrove, L. Climate change and invasive alien species. UK CABI Work. Pap. 2010, 1.

- Hulme, P.E. Invasion pathways at a crossroad: Policy and research challenges for managing alien species introductions. J. Appl. Ecol. 2015, 52, 1418–1424.

- UN General Assembly. Transforming our world: The 2030 Agenda for Sustainable Development. United Nations A/RES/70/1. New York, NY, USA, 21 October 2015. Available online: https://www.un.org/en/development/desa/population/migration/generalassembly/docs/globalcompact/A_RES_70_1_E.pdf (accessed on 29 January 2021).

- Hestir, E.L.; Khanna, S.; Andrew, M.E.; Santos, M.J.; Viers, J.H.; Greenberg, J.A.; Rajapakse, S.S.; Ustin, S.L. Identification of invasive vegetation using hyperspectral remote sensing in the California Delta ecosystem. Remote Sens. Environ. 2008, 112, 4034–4047.

- Jollineau, M.Y.; Howarth, P.J. Mapping an inland wetland complex using hyperspectral imagery. Int. J. Remote Sens. 2008, 29, 3609–3631.

- Hunter, P.D.; Gilvear, D.J.; Tyler, A.N.; Willby, N.J.; Kelly, A. Mapping macrophytic vegetation in shallow lakes using the Compact Airborne Spectrographic Imager (CASI). Aquat. Conserv. Mar. Freshw. Ecosyst. 2010, 20, 717–727.

- Khanna, S.; Santos, M.J.; Ustin, S.L.; Haverkamp, P.J. An integrated approach to a biophysiologically based classification of floating aquatic macrophytes. Int. J. Remote Sens. 2011, 32, 1067–1094.

- Hestir, E.L.; Greenberg, J.A.; Ustin, S.L. Classification trees for aquatic vegetation community prediction using imaging spectroscopy. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2012, 5, 1572–1584.

- Zhao, D.; Jiang, H.; Yang, T.; Cai, Y.; Xu, D.; An, S. Remote sensing of aquatic vegetation distribution in Taihu Lake using an improved classification tree with modified thresholds. J. Environ. Manag. 2012, 95, 98–107.

- Santos, M.J.; Khanna, S.; Hestir, E.L.; Andrew, M.E.; Rajapakse, S.S.; Greenberg, J.A.; Anderson, L.W.J.; Ustin, S.L. Use of Hyperspectral Remote Sensing to Evaluate Efficacy of Aquatic Plant Management. Invasive Plant Sci. Manag. 2009, 2, 216–229.

- Doughty, C.L.; Cavanaugh, K.C. Mapping coastal wetland biomass from high resolution unmanned aerial vehicle (UAV) imagery. Remote Sens. 2019, 11, 540.

- Zhong, Y.; Wang, X.; Xu, Y.; Wang, S.; Jia, T.; Hu, X.; Zhao, J.; Wei, L.; Zhang, L. Mini-UAV-Borne Hyperspectral Remote Sensing: From Observation and Processing to Applications. IEEE Geosci. Remote Sens. Mag. 2018, 6, 46–62.

- Turner, D.J.; Malenovsky, Z.; Lucieer, A.; Turnbull, J.D.; Robinson, S.A. Optimizing Spectral and Spatial Resolutions of Unmanned Aerial System Imaging Sensors for Monitoring Antarctic Vegetation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 3813–3825.

- Banerjee, B.P.; Raval, S.; Cullen, P.J. UAV-hyperspectral imaging of spectrally complex environments. Int. J. Remote Sens. 2020, 41, 4136–4159.

- Melville, B.; Lucieer, A.; Aryal, J. Classification of Lowland Native Grassland Communities Using Hyperspectral Unmanned Aircraft System (UAS) Imagery in the Tasmanian Midlands. Drones 2019, 3, 5.

- Tabacchi, E.; Correll, D.L.; Hauer, R.; Pinay, G.; Planty-Tabacchi, A.M.; Wissmar, R.C. Development, maintenance and role of riparian vegetation in the river landscape. Freshw. Biol. 1998, 40, 497–516.

- Barbier, E.B.; Hacker, S.D.; Kennedy, C.; Koch, E.W.; Stier, A.C.; Silliman, B.R. The value of estuarine and coastal ecosystem services. Ecol. Monogr. 2011, 81, 169–193.

- Cohen, A.N.; Carlton, J.T. Accelerating invasion rate in a highly invaded estuary. Science 1998, 279, 555–558.

- Khanna, S.; Acuña, S.; Contreras, D.; Griffiths, W.K.; Lesmeister, S.; Reyes, R.C.; Schreier, B.; Wu, B.J. Invasive Aquatic Vegetation Impacts on Delta Operations, Monitoring, and Ecosystem and Human Health. Interag. Ecol. Progr. Newsl. 2019, 34, 8–19.

- Hestir, E.L.; Schoellhamer, D.H.; Greenberg, J.; Morgan-King, T.; Ustin, S.L. The Effect of Submerged Aquatic Vegetation Expansion on a Declining Turbidity Trend in the Sacramento-San Joaquin River Delta. Estuaries Coasts 2016, 39, 1100–1112.

- Tobias, V.D.; Conrad, J.L.; Mahardja, B.; Khanna, S. Impacts of water hyacinth treatment on water quality in a tidal estuarine environment. Biol. Invasions 2019, 21, 3479–3490.

- Conrad, J.L.; Chapple, D.; Bush, E.; Hard, E.; Caudill, J.; Madsen, J.D.; Pratt, W.; Acuna, S.; Rasmussen, N.; Gilbert, P.; et al. Critical Needs for Control of Invasive Aquatic Vegetation in the Sacramento-San Joaquin Delta (Report). Delta Stewardship Council. 2020. Available online: https://www.deltacouncil.ca.gov/pdf/dpiic/meeting-materials/2020-03-02-item-4-aquatic-weeds-paper.pdf (accessed on 29 January 2021).

- Underwood, E.C.; Mulitsch, M.J.; Greenberg, J.A.; Whiting, M.L.; Ustin, S.L.; Kefauver, S.C. Mapping invasive aquatic vegetation in the Sacramento-San Joaquin Delta using hyperspectral imagery. Environ. Monit. Assess. 2006, 121, 47–64.

- Ustin, S.L.; Khanna, S.; Lay, M.; Shapiro, K.D. Enhancement of Delta Smelt (Hypomesus transpacificus) Habitat through Adaptive Management of Invasive Aquatic Weeds in the Sacramento-San Joaquin Delta & Suisun; Report; California Department of Water Resources: Sacramento, CA, USA, 2019.

- Cohen, A.N.; Carlton, J.T. Nonindigenous aquatic species in a United States estuary: A case study of the biological invasions of the San Francisco Bay and Delta (Report). US Fish and Wildlife Service. 1995. Available online: http://bioinvasions.org/wp-content/uploads/1995-SFBay-Invasion-Report.pdf (accessed on 29 January 2021).

- Venugopal, G. Monitoring the Effects of Biological Control of Water Hyacinths Using Remotely Sensed Data: A Case Study of Bangalore, India. Singap. J. Trop. Geogr. 2002, 19, 91–105.

- Jetter, K.M.; Nes, K.; Tahoe, L. The cost to manage invasive aquatic weeds in the California Bay-Delta. ARE Updat. 2018, 21, 9–11.

- Toft, J.D.; Simenstad, C.A.; Cordell, J.R.; Grimaldo, L.F. The Effects of Introduced Water Hyacinth on Habitat Structure, Invertebrate Assemblages, and Fish Diets. Estuaries 2003, 26, 746–758.

- Santos, M.J.; Anderson, L.W.; Ustin, S.L. Effects of invasive species on plant communities: An example using submersed aquatic plants at the regional scale. Biol. Invasions 2011, 13, 443–457.

- Cocks, T.; Jenssen, R.; Stewart, A.; Wilson, I.; Shields, T. The HyMap™ airborne hyperspectral sensor: The system, calibration and performance. In Proceedings of the 1st EARSeL Workshop on Imaging Spectroscopy, Zurich, Switzerland, 6–8 October 1998; pp. 37–42.

- Ustin, S.L.; Khanna, S.; Lay, M.; Shapiro, K.D. Enhancement of Delta Smelt (Hypomesus transpacificus) Habitat through Adaptive Management of Invasive Aquatic Weeds in the Sacramento-San Joaquin Delta; Report; California Department of Fish and Wildlife: Sacramento, CA, USA, 2018.

- Mellor, A.; Boukir, S.; Haywood, A.; Jones, S. Exploring issues of training data imbalance and mislabelling on random forest performance for large area land cover classification using the ensemble margin. ISPRS J. Photogramm. Remote Sens. 2015, 105, 155–168.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Ustin, S.L.; Khanna, S.; Lay, M.; Shapiro, K.; Ghajarnia, N. Remote Sensing of the Sacramento-San Joaquin Delta to Enhance Mapping for Invasive and Native Aquatic Vegetation Plant Species; Report; California Department of Water Resources: Sacramento, CA, USA, 2020.

- Burai, P.; Deák, B.; Valkó, O.; Tomor, T. Classification of Herbaceous Vegetation Using Airborne Hyperspectral Imagery. Remote Sens. 2015, 7, 2046–2066.

- Marcinkowska-Ochtyra, A.; Jarocińska, A.; Bzdęga, K.; Tokarska-Guzik, B. Classification of Expansive Grassland Species in Different Growth Stages Based on Hyperspectral and LiDAR Data. Remote Sens. 2018, 10, 2019.

- Vapnik, V. Pattern recognition using generalized portrait method. Autom. Remote Control 1963, 24, 774–780.

- Atkinson, J.T.; Ismail, R.; Robertson, M. Mapping Bugweed (Solanum mauritianum) Infestations in Pinus patula Plantations Using Hyperspectral Imagery and Support Vector Machines. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 17–28.

- Ghosh, A.; Fassnacht, F.E.; Joshi, P.K.; Koch, B. A framework for mapping tree species combining hyperspectral and LiDAR data: Role of selected classifiers and sensor across three spatial scales. Int. J. Appl. Earth Obs. Geoinf. 2014, 26, 49–63.

- Sabat-Tomala, A.; Raczko, E.; Zagajewski, B. Comparison of Support Vector Machine and Random Forest Algorithms for Invasive and Expansive Species Classification Using Airborne Hyperspectral Data. Remote Sens. 2020, 12, 516.

- Santos, M.J.; Hestir, E.L.; Khanna, S.; Ustin, S.L. Image spectroscopy and stable isotopes elucidate functional dissimilarity between native and nonnative plant species in the aquatic environment. New Phytol. 2012, 193, 683–695.

- Santos, M.J.; Khanna, S.; Hestir, E.L.; Greenberg, J.A.; Ustin, S.L. Measuring landscape-scale spread and persistence of an invaded submerged plant community from airborne remote sensing. Ecol. Appl. 2016, 26, 1733–1744.

- Khanna, S.; Santos, M.J.; Hestir, E.L.; Ustin, S.L. Plant community dynamics relative to the changing distribution of a highly invasive species, Eichhornia crassipes: A remote sensing perspective. Biol. Invasions 2012, 14, 717–733.

- Kuhn, M. Building Predictive Models in R Using the caret Package. J. Stat. Softw. 2008, 28, 1–26.

- Liaw, A.; Wiener, M. Classification and Regression by randomForest. R News 2002, 2, 18–22.

- Coulston, J.W.; Blinn, C.E.; Thomas, V.A.; Wynne, R.H. Approximating prediction uncertainty for random forest regression models. Photogramm. Eng. Remote Sens. 2016, 82, 189–197.

- Loosvelt, L.; Peters, J.; Skriver, H.; Lievens, H.; Van Coillie, F.M.B.; De Baets, B.; Verhoest, N.E.C. Random Forests as a tool for estimating uncertainty at pixel-level in SAR image classification. Int. J. Appl. Earth Obs. Geoinf. 2012, 19, 173–184.

- Sinha, P.; Gaughan, A.E.; Stevens, F.R.; Nieves, J.J.; Sorichetta, A.; Tatem, A.J. Assessing the spatial sensitivity of a random forest model: Application in gridded population modeling. Comput. Environ. Urban Syst. 2019, 75, 132–145.

- Story, M.; Congalton, R.G. Accuracy Assessment: A User’s Perspective. Photogramm. Eng. Remote Sens. 1986, 52, 397–399.

- Foody, G.M. Explaining the unsuitability of the kappa coefficient in the assessment and comparison of the accuracy of thematic maps obtained by image classification. Remote Sens. Environ. 2020, 239, 111630.

- Pontius, R.G.; Millones, M. Death to Kappa: Birth of quantity disagreement and allocation disagreement for accuracy assessment. Int. J. Remote Sens. 2011, 32, 4407–4429.

- Lehnert, L.W.; Meyer, H.; Obermeier, W.A.; Silva, B.; Regeling, B.; Thies, B.; Bendix, J. Hyperspectral Data Analysis in R: The hsdar Package. J. Stat. Softw. 2019, 89, 1–23.

- Gonçalves, J.; Pôças, I.; Marcos, B.; Mücher, C.A.; Honrado, J.P. SegOptim-A new R package for optimizing object-based image analyses of high-spatial resolution remotely-sensed data. Int. J. Appl. Earth Obs. Geoinf. 2019, 76, 218–230.

- Grizonnet, M.; Michel, J.; Poughon, V.; Inglada, J.; Savinaud, M.; Cresson, R. Orfeo ToolBox: Open source processing of remote sensing images. Open Geospat. Data Softw. Stand. 2017, 2, 15.

- Radoux, J.; Defourny, P. A quantitative assessment of boundaries in automated forest stand delineation using very high resolution imagery. Remote Sens. Environ. 2007, 110, 468–475.

- Whiteside, T.G.; Boggs, G.S.; Maier, S.W. Comparing object-based and pixel-based classifications for mapping savannas. Int. J. Appl. Earth Obs. Geoinf. 2011, 13, 884–893.

- Fox, E.W.; Hill, R.A.; Leibowitz, S.G.; Olsen, A.R.; Thornbrugh, D.J.; Weber, M.H. Assessing the accuracy and stability of variable selection methods for random forest modeling in ecology. Environ. Monit. Assess. 2017, 189, 316.

- Pearson, K. X. On the criterion that a given system of deviations from the probable in the case of a correlated system of variables is such that it can be reasonably supposed to have arisen from random sampling. Lond. Edinb. Dublin Philos. Mag. J. Sci. 1900, 50, 157–175.

- Hestir, E.L.; Brando, V.E.; Bresciani, M.; Giardino, C.; Matta, E.; Villa, P.; Dekker, A.G. Measuring freshwater aquatic ecosystems: The need for a hyperspectral global mapping satellite mission. Remote Sens. Environ. 2015, 167, 181–195.

- Ustin, S.L.; Roberts, D.A.; Gamon, J.A.; Asner, G.P.; Green, R.O. Using imaging spectroscopy to study ecosystem processes and properties. Bioscience 2004, 54, 523–534.

- Belgiu, M.; Drăguţ, L. Random forest in remote sensing: A review of applications and future directions. ISPRS J. Photogramm. Remote Sens. 2016, 114, 24–31.

- Ghamisi, P.; Yokoya, N.; Li, J.; Liao, W.; Liu, S.; Plaza, J.; Rasti, B.; Plaza, A. Advances in Hyperspectral Image and Signal Processing: A Comprehensive Overview of the State of the Art. IEEE Geosci. Remote Sens. Mag. 2017, 5, 37–78.

- Kaewpijit, S.; Le Moigne, J.; El-Ghazawi, T. Automatic reduction of hyperspectral imagery using wavelet spectral analysis. IEEE Trans. Geosci. Remote Sens. 2003, 41, 863–871.

- Agarwal, A.; El-Ghazawi, T.; El-Askary, H.; Le Moigne, J. Efficient hierarchical-PCA dimension reduction for hyperspectral imagery. In Proceedings of the ISSPIT 2007–2007 IEEE International Symposium on Signal Processing and Information Technology, Giza, Egypt, 15–18 December 2007; pp. 353–356.

- Millard, K.; Richardson, M. Wetland mapping with LiDAR derivatives, SAR polarimetric decompositions, and LiDAR–SAR fusion using a random forest classifier. Can. J. Remote Sens. 2013, 39, 290–307.

- Millard, K.; Richardson, M. On the importance of training data sample selection in Random Forest image classification: A case study in peatland ecosystem mapping. Remote Sens. 2015, 7, 8489–8515.

- Breidenbach, J.; Næsset, E.; Lien, V.; Gobakken, T.; Solberg, S. Prediction of species specific forest inventory attributes using a nonparametric semi-individual tree crown approach based on fused airborne laser scanning and multispectral data. Remote Sens. Environ. 2010, 114, 911–924.

- Congalton, R.G.; Green, K. Assessing the Accuracy of Remotely Sensed Data; CRC Press: Boca Raton, FL, USA, 2019; ISBN 9780429052729.

- He, H.S.; Ventura, S.J.; Mladenoff, D.J. Effects of spatial aggregation approaches on classified satellite imagery. Int. J. Geogr. Inf. Sci. 2002, 16, 93–109.

| Map Class | Description |
|---|---|
| Unclassified | Unclassified land cover or area outside of analysis |
| Bare Ground | Asphalt, gravel, levee riprap, and bare soil |
| Emergent Vegetation (EMR) | Cattail (Typha spp.), common reed (Phragmites australis), giant reed (Arundo donax), and tule (Schoenoplectus spp.) |
| Water Hyacinth | Water hyacinth (Eichhornia crassipes) |
| Water Primrose | Water primrose (Ludwigia spp.) |
| Riparian | Shrubs and trees in the area including willow species (Salix spp.) |
| Submerged Aquatic Vegetation (SAV) | Numerous species; dominant ones include Brazilian waterweed (Egeria densa), coontail (Ceratophyllum demersum), and watermilfoil (Myriophyllum spicatum) [37] |
| Water | Water |
| Other Vegetation | Species or cover not observed in the UAV study region including pennywort (Hydrocotyle spp.) and mosquito fern (Azolla spp.) |
| Non-Photosynthetic Vegetation (NPV) | Senescent or dead vegetation |

| | HyMap | Nano-Hyperspec |
|---|---|---|
| Type | whiskbroom | pushbroom |
| Spectral Range | 450–2480 nm | 400–1000 nm |
| Number of bands | 128 | 270 |
| Spectral Resolution | 15–18 nm | 2.2 nm |
| Signal to Noise | >500:1 | >15:1 (1000 nm) < 140:1 (550 nm) |
| Spatial Resolution * | 1.7 m | 0.051 m |
| Swath Width (FOV°) | 61.3 | 15.85 |
| Operational Altitude | >458 m ** | <122 m *** |
| Platform | 1975 Rockwell International 500-S | DJI-M600P |

| Year | Sensor | Classification Model | Overall Accuracy | Kappa Coefficient |
|---|---|---|---|---|
| 2019 | Nano | RFC 153 | 0.941 | 0.926 |
| 2019 | HyMap | CSTARS | 0.857 | 0.830 |
| 2018 | HyMap | CSTARS | 0.908 | 0.900 |
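Overall accuracy and the kappa coefficient reported in the table above are both summary statistics of a confusion matrix. The following is a minimal Python sketch of the standard definitions, assuming rows hold reference classes and columns hold mapped classes. The function name and the 3-class matrix are hypothetical and are not data from this study.

```python
def overall_accuracy_and_kappa(matrix):
    """Return (overall accuracy, Cohen's kappa) for a square confusion matrix.

    matrix[i][j] is the number of samples whose reference class is i
    and whose mapped class is j (an assumed convention).
    """
    n = sum(sum(row) for row in matrix)
    # Observed agreement: share of samples on the diagonal.
    observed = sum(matrix[k][k] for k in range(len(matrix))) / n
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(col) for col in zip(*matrix)]
    # Chance agreement expected from the row and column marginals.
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / (n * n)
    kappa = (observed - expected) / (1 - expected)
    return observed, kappa


# Hypothetical 3-class confusion matrix, for illustration only.
example = [
    [45, 3, 2],
    [4, 40, 6],
    [1, 5, 44],
]
oa, kappa = overall_accuracy_and_kappa(example)
print(f"overall accuracy = {oa:.3f}, kappa = {kappa:.3f}")
```

Kappa discounts the agreement expected by chance from the class marginals, which is why it is lower than overall accuracy in every row of the table.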

| Class | HyMap Area (m²) | Nano Area (m²) | Nano NN Resampled Area (m²) | Nano MW Resampled Area (m²) |
|---|---|---|---|---|
| Bare ground | 858.3 | 930.4 | 956.6 | 1002.8 |
| EMR | 19,097.1 | 12,077.1 | 14,687 | 11,666.9 |
| Water Hyacinth | 0.00 | 1589.4 | 124.3 | 145 |
| Water Primrose | 349.7 | 1616.5 | 317.9 | 17.3 |
| Riparian | 14,952.9 | 12,583 | 11,456 | 13,146.6 |
| SAV | 6988 | 9023 | 8594.9 | 8869.4 |
| Water | 41,269.2 | 44,822.9 | 42,422.3 | 41,257.6 |
| Other | 679.2 | 0 | 0 | 0 |
| NPV | 13,577.2 | 16,989.3 | 20,883.1 | 21,177.9 |
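Class areas of this kind follow from pixel counts and pixel size. The sketch below assumes square pixels at the nominal spatial resolutions listed in the sensor table (1.7 m for HyMap, 0.051 m for the Nano). The pixel counts are back-calculated here for illustration and are not reported in the source; the function name is hypothetical.

```python
HYMAP_PIXEL_M = 1.7    # nominal HyMap pixel size from the sensor table
NANO_PIXEL_M = 0.051   # nominal Nano-Hyperspec pixel size from the sensor table


def class_area_m2(pixel_count, pixel_size_m):
    """Area covered by `pixel_count` square pixels of side `pixel_size_m`."""
    return pixel_count * pixel_size_m ** 2


# Assumed counts, chosen so the results match the HyMap column above.
bare_ground_area = class_area_m2(297, HYMAP_PIXEL_M)
primrose_area = class_area_m2(121, HYMAP_PIXEL_M)

# Approximate number of Nano pixels that fit inside one HyMap pixel.
nano_per_hymap = (HYMAP_PIXEL_M / NANO_PIXEL_M) ** 2

print(round(bare_ground_area, 1), round(primrose_area, 1), round(nano_per_hymap))
```

At these resolutions a single HyMap pixel spans on the order of a thousand Nano pixels, so small patches that the Nano resolves can vanish from the area totals after resampling.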

| Class | Mean Agreement | Standard Deviation |
|---|---|---|
| Bare Ground | 31.92% | 3.15% |
| Emergent | 30.82% | 28.77% |
| Water Hyacinth | NA | NA |
| Water Primrose | 4.17% | 6.63% |
| Riparian | 62.62% | 36.97% |
| SAV | 47.64% | 43.12% |
| Water | 88.50% | 28.67% |
| NPV | 69.17% | 32.71% |
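One way to obtain per-class agreement figures like those above is to compute, for each coarse (HyMap) pixel, the percentage of fine (Nano) pixels inside it that carry the same class, and then summarize those percentages by class. The sketch below is an illustration under simplifying assumptions, not the authors' procedure: the two grids are perfectly aligned, the resolution ratio is an integer `factor`, and the toy grids and function name are hypothetical.

```python
from collections import defaultdict
from statistics import mean, stdev


def agreement_by_class(fine, coarse, factor):
    """Percent of fine pixels matching each coarse pixel, grouped by coarse class."""
    per_class = defaultdict(list)
    for r, coarse_row in enumerate(coarse):
        for c, coarse_class in enumerate(coarse_row):
            # Collect the factor x factor block of fine pixels under this coarse pixel.
            block = [
                fine[r * factor + i][c * factor + j]
                for i in range(factor)
                for j in range(factor)
            ]
            matches = sum(1 for value in block if value == coarse_class)
            per_class[coarse_class].append(100.0 * matches / len(block))
    return dict(per_class)


# Toy 4x4 fine map and 2x2 coarse map (hypothetical data).
fine = [
    ["water", "water", "SAV", "SAV"],
    ["water", "water", "SAV", "water"],
    ["NPV", "NPV", "water", "water"],
    ["NPV", "water", "water", "water"],
]
coarse = [
    ["water", "SAV"],
    ["NPV", "water"],
]
result = agreement_by_class(fine, coarse, factor=2)
for name, values in result.items():
    # Sample standard deviation needs at least two coarse pixels.
    spread = stdev(values) if len(values) > 1 else 0.0
    print(name, round(mean(values), 2), round(spread, 2))
```

A class that never occurs in the coarse map has no coarse pixels to evaluate, which is consistent with the NA entries for Water Hyacinth.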

| Type | Class | Resampled | Moving Window |
|---|---|---|---|
| Overall Error | | 29.18% | 28.12% |
| Omission Error | Bare ground | 63.97% | 61.51% |
| Omission Error | EMR | 58.95% | 58.62% |
| Omission Error | Water Primrose | 100.00% | 100.00% |
| Omission Error | Riparian Shrub | 32.56% | 30.04% |
| Omission Error | SAV | 50.79% | 50.52% |
| Omission Error | Water | 11.54% | 11.13% |
| Omission Error | NPV | 18.55% | 15.96% |
| Commission Error | Bare ground | 67.72% | 67.72% |
| Commission Error | EMR | 32.65% | 32.65% |
| Commission Error | Water Primrose | 100.00% | 100.00% |
| Commission Error | Riparian Shrub | 21.10% | 21.10% |
| Commission Error | SAV | 61.19% | 61.19% |
| Commission Error | Water | 11.34% | 11.34% |
| Commission Error | NPV | 46.38% | 46.38% |
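Omission and commission errors are the per-class complements of producer's and user's accuracy. The sketch below shows the standard calculation from a cross-tabulation, assuming rows hold the reference map classes and columns hold the compared map classes. Which map served as reference in this study is not stated here, so that convention, the function name, and the matrix are all illustrative assumptions.

```python
def omission_commission(matrix, labels):
    """Return {label: (omission error, commission error)} as fractions.

    matrix[i][j] counts pixels of reference class i assigned to class j.
    An error is None when the class has no pixels in that marginal.
    """
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(col) for col in zip(*matrix)]
    errors = {}
    for k, label in enumerate(labels):
        correct = matrix[k][k]
        # Omission: reference pixels of this class that were mapped as something else.
        omission = 1 - correct / row_totals[k] if row_totals[k] else None
        # Commission: pixels mapped as this class that belong to another reference class.
        commission = 1 - correct / col_totals[k] if col_totals[k] else None
        errors[label] = (omission, commission)
    return errors


# Hypothetical cross-tabulation, for illustration only.
labels = ["Water", "SAV", "NPV"]
crosstab = [
    [80, 15, 5],
    [10, 30, 10],
    [0, 5, 45],
]
errors = omission_commission(crosstab, labels)
total = sum(sum(row) for row in crosstab)
overall_error = 1 - sum(crosstab[k][k] for k in range(len(labels))) / total
for label, (om, co) in errors.items():
    print(f"{label}: omission {om:.2%}, commission {co:.2%}")
print(f"overall error {overall_error:.2%}")
```

When a class has no agreeing pixels, its diagonal entry is zero and both errors equal 100%, as in the Water Primrose rows above.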

| Sensor | Area Covered (hectares) | Flight Time Estimate (hrs) | Approximate Data Volume (GB) | Approximate Deployment Costs |
|---|---|---|---|---|
| HyMap | 74,123.42 | 16 | 700 | $150,000 |
| Nano (this study) | 10.53 | 1 | 600 | $62,780 |
| Nano (entire Delta) | 74,123.42 | 7040 | 2,111,880 | $865,014 |
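The whole-Delta flight time in the table is consistent with scaling the study flight linearly by area. The sketch below reproduces that arithmetic from the table values, assuming a constant coverage rate and rounding up to whole hours; the source does not state its rounding rule, and the variable names are illustrative. Data volume and cost are not reproduced because their basis is not given here.

```python
import math

# Values taken from the table above.
study_area_ha = 10.53
study_flight_hours = 1
delta_area_ha = 74_123.42
hymap_flight_hours = 16

# Assumption: Nano coverage rate stays constant when scaled to the full Delta.
scale = delta_area_ha / study_area_ha
delta_flight_hours = math.ceil(scale * study_flight_hours)

# Area covered per flight hour for each platform.
nano_ha_per_hour = study_area_ha / study_flight_hours
hymap_ha_per_hour = delta_area_ha / hymap_flight_hours

print(delta_flight_hours, round(nano_ha_per_hour, 2), round(hymap_ha_per_hour, 1))
```

Under these assumptions the airborne sensor covers several hundred times more area per flight hour than the drone-mounted sensor.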

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Bolch, E.A.; Hestir, E.L.; Khanna, S. Performance and Feasibility of Drone-Mounted Imaging Spectroscopy for Invasive Aquatic Vegetation Detection. Remote Sens. 2021, 13, 582. https://doi.org/10.3390/rs13040582
