Polish Cadastre Modernization with Remotely Extracted Buildings from High-Resolution Aerial Orthoimagery and Airborne LiDAR

Abstract

1. Introduction

2. Deep Learning-Based Building Extraction: Related Works

3. Study Area, Materials and Methods

3.1. Study Area

3.2. Data Used

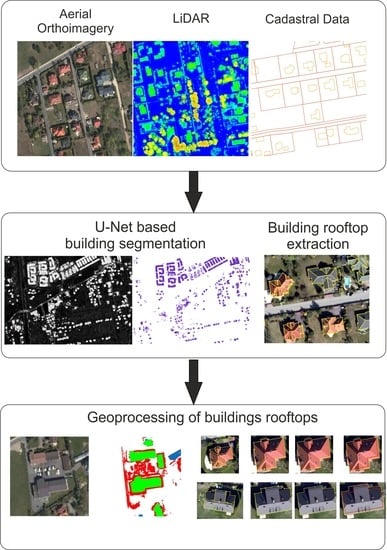

3.3. Workflow and Applied

3.3.1. Building Extraction by U-Net

3.3.2. Building Rooftop Extraction Using LiDAR Data

3.3.3. Geoprocessing of Building Roof Outlines and Final Evaluation of Building Extraction

4. Results

4.1. Buildings Segmentation

- variation in the spatial pattern of buildings and their surroundings, i.e., trees, paved roads, driveways, vehicles, porches, small garden houses or playgrounds;

- multiple colors of roofs, i.e., reddish, grayish, whitish and greenish, as well as roof installations such as satellite TV antennas, solar panels, dormers;

- building types, e.g., single-family detached or attached, semi-detached, multi-family buildings.

4.2. Building Rooftop Patches Extraction

4.3. Geoprocessing of Building Rooftop Outlines

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Overall Accuracy (%) | Precision | Recall | F1-Score | Per-Object IoU Mean |
|---|---|---|---|---|
| 89.5 | 0.765 | 0.807 | 0.785 | 0.748 |
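As a consistency check on the table above, the reported F1-score can be recomputed from the reported precision and recall using the standard harmonic-mean definition. The sketch below also shows how such metrics are commonly derived from pixel counts. The function names and the example counts are hypothetical, and the evaluation protocol actually used for the table (in particular, how the per-object IoU mean is averaged) is not given in this section.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (standard definition)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def metrics_from_counts(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Pixel-level metrics from confusion-matrix counts.

    tp/fp/fn/tn are true/false positive/negative pixel counts for the
    'building' class. These are the usual textbook definitions, assumed
    here rather than taken from the paper.
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    union = tp + fp + fn
    return {
        "overall_accuracy": (tp + tn) / (tp + fp + fn + tn),
        "precision": precision,
        "recall": recall,
        "f1": f1_score(precision, recall),
        "iou": tp / union if union else 0.0,
    }


# Recompute F1 from the precision and recall reported in the table.
reported_f1 = f1_score(0.765, 0.807)
print(f"F1 from reported precision/recall: {reported_f1:.3f}")

# Hypothetical pixel counts, for illustration only (not data from the study).
example = metrics_from_counts(tp=8, fp=2, fn=2, tn=88)
print(example)
```

The recomputed value rounds to the 0.785 listed in the table. Note that a pixel-level IoU, as computed here, is not the same quantity as a per-object IoU averaged over individual buildings, so it should not be expected to reproduce the 0.748 figure.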

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wierzbicki, D.; Matuk, O.; Bielecka, E. Polish Cadastre Modernization with Remotely Extracted Buildings from High-Resolution Aerial Orthoimagery and Airborne LiDAR. Remote Sens. 2021, 13, 611. https://doi.org/10.3390/rs13040611
