Autonomous Repeat Image Feature Tracking (autoRIFT) and Its Application for Tracking Ice Displacement

Abstract

1. Introduction

2. Methodology

2.1. autoRIFT: The Feature Tracking Module

2.2. Geogrid: The Geocoding Module

2.3. Combinative use of autoRIFT and Geogrid

3. Results

3.1. Study Site and Dataset

- (1) Stable surfaces (stationary or slow-flowing ice surfaces with velocity < 15 m/year, shown as the shaded “purple” area in Figure 3): We first extracted the reference velocity over stable surfaces and used the median absolute deviation (MAD) of the residual to characterize the displacement accuracy. Because surface velocities in areas of “stable” flow show negligible temporal variability, we used the 20-year ice-sheet-wide velocity mosaic [37,38], derived from the synthesis of SAR/InSAR data and Landsat-8 optical imagery, as the reference velocity map. Stable surfaces were identified as areas with a velocity below 15 m/year. In our study area these consist primarily of rock, because most ice there flows faster than 15 m/year (Figure 3).

- (2)

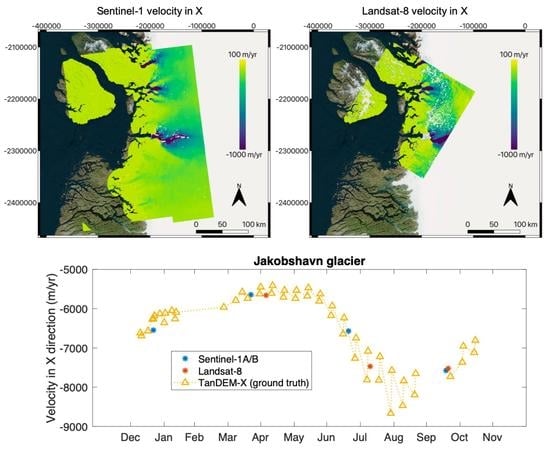

- To characterize errors for the fast-flowing glacier outlet (N , W ; marked as the “red” star in Figure 3), we used dense time series of SAR/InSAR-derived velocity estimates [38,39] from TanDEM-X mission as the truth dataset and calculated the difference (relative percentage) relative to estimates generated using autoRIFT.
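
The two error metrics described in items (1) and (2) can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation: the function and argument names are hypothetical, the MAD is left unscaled (no 1.4826 normal-consistency factor), and the difference is assumed to be "estimate minus truth", normalized by the magnitude of the truth value. The 15 m/year threshold is the one stated in the text.

```python
import numpy as np


def mad(values):
    """Unscaled median absolute deviation of the finite entries of `values`."""
    v = np.asarray(values, dtype=float)
    v = v[np.isfinite(v)]
    return float(np.median(np.abs(v - np.median(v))))


def stable_surface_error(v_est, v_ref, speed_ref, threshold=15.0):
    """MAD of (estimate - reference) over pixels whose reference speed is
    below `threshold` (m/year). All inputs are arrays of the same shape."""
    v_est = np.asarray(v_est, dtype=float)
    v_ref = np.asarray(v_ref, dtype=float)
    stable = np.asarray(speed_ref, dtype=float) < threshold
    return mad(v_est[stable] - v_ref[stable])


def relative_difference(v_est, v_truth):
    """Return (difference, percent difference) of an estimate against truth.
    Assumption: percent is taken relative to |truth|."""
    v_est = np.asarray(v_est, dtype=float)
    v_truth = np.asarray(v_truth, dtype=float)
    diff = v_est - v_truth
    return diff, 100.0 * diff / np.abs(v_truth)


# Synthetic example: five stable pixels and one fast-flowing pixel.
v_est = np.array([1.0, 2.0, 3.0, 4.0, 100.0, 500.0])
v_ref = np.zeros(6)
speed_ref = np.array([0.0, 0.0, 0.0, 0.0, 0.0, 1000.0])
print("stable-surface MAD:", stable_surface_error(v_est, v_ref, speed_ref))
```

The median-based statistic is used here because a single outlier (the value 100 in the synthetic data) barely moves it, which is the usual reason for preferring MAD over a standard deviation when residuals contain mismatches.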

3.2. Data Processing

3.3. Error Characterization

3.4. Validation with TanDEM-X Time Series

3.5. Runtime and Accuracy Comparison

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

Appendix A. Derivation of the Conversion Matrix

Appendix B. Error Propagation due to the Failure of Slope Parallel Assumption

References

- Bindschadler, R.; Scambos, T. Satellite-image-derived velocity field of an Antarctic ice stream. Science 1991, 252, 242–246.

- Frolich, R.; Doake, C. Synthetic aperture radar interferometry over Rutford Ice Stream and Carlson Inlet, Antarctica. J. Glaciol. 1998, 44, 77–92.

- Fahnestock, M.; Scambos, T.; Moon, T.; Gardner, A.; Haran, T.; Klinger, M. Rapid large-area mapping of ice flow using Landsat 8. Remote Sens. Environ. 2016, 185, 84–94.

- Strozzi, T.; Kouraev, A.; Wiesmann, A.; Wegmüller, U.; Sharov, A.; Werner, C. Estimation of Arctic glacier motion with satellite L-band SAR data. Remote Sens. Environ. 2008, 112, 636–645.

- Fahnestock, M.; Bindschadler, R.; Kwok, R.; Jezek, K. Greenland ice sheet surface properties and ice dynamics from ERS-1 SAR imagery. Science 1993, 262, 1530–1534.

- Strozzi, T.; Luckman, A.; Murray, T.; Wegmuller, U.; Werner, C.L. Glacier motion estimation using SAR offset-tracking procedures. IEEE Trans. Geosci. Remote Sens. 2002, 40, 2384–2391.

- de Lange, R.; Luckman, A.; Murray, T. Improvement of satellite radar feature tracking for ice velocity derivation by spatial frequency filtering. IEEE Trans. Geosci. Remote Sens. 2007, 45, 2309–2318.

- Nagler, T.; Rott, H.; Hetzenecker, M.; Scharrer, K.; Magnússon, E.; Floricioiu, D.; Notarnicola, C. Retrieval of 3D-glacier movement by high resolution X-band SAR data. In Proceedings of the 2012 IEEE International Geoscience and Remote Sensing Symposium, Munich, Germany, 22–27 July 2012; pp. 3233–3236.

- Kusk, A.; Boncori, J.P.M.; Dall, J. An automated system for ice velocity measurement from SAR. In Proceedings of the EUSAR 2018, 12th European Conference on Synthetic Aperture Radar, Aachen, Germany, 4–7 June 2018; pp. 1–4.

- Mouginot, J.; Rignot, E.; Scheuchl, B.; Millan, R. Comprehensive annual ice sheet velocity mapping using Landsat-8, Sentinel-1, and RADARSAT-2 data. Remote Sens. 2017, 9, 364.

- Riveros, N.C.; Euillades, L.D.; Euillades, P.A.; Moreiras, S.M.; Balbarani, S. Offset tracking procedure applied to high resolution SAR data on Viedma Glacier, Patagonian Andes, Argentina. Adv. Geosci. 2013, 35, 7–13.

- Gourmelen, N.; Kim, S.; Shepherd, A.; Park, J.; Sundal, A.; Björnsson, H.; Pálsson, F. Ice velocity determined using conventional and multiple-aperture InSAR. Earth Planet. Sci. Lett. 2011, 307, 156–160.

- Joughin, I.R.; Winebrenner, D.P.; Fahnestock, M.A. Observations of ice-sheet motion in Greenland using satellite radar interferometry. Geophys. Res. Lett. 1995, 22, 571–574.

- Yu, J.; Liu, H.; Jezek, K.C.; Warner, R.C.; Wen, J. Analysis of velocity field, mass balance, and basal melt of the Lambert Glacier–Amery Ice Shelf system by incorporating Radarsat SAR interferometry and ICESat laser altimetry measurements. J. Geophys. Res. Solid Earth 2010, 115.

- Mouginot, J.; Rignot, E.; Scheuchl, B. Continent-Wide, Interferometric SAR Phase, Mapping of Antarctic Ice Velocity. Geophys. Res. Lett. 2019, 46, 9710–9718.

- Rignot, E.; Jezek, K.; Sohn, H.G. Ice flow dynamics of the Greenland ice sheet from SAR interferometry. Geophys. Res. Lett. 1995, 22, 575–578.

- Gray, A.; Mattar, K.; Vachon, P.; Bindschadler, R.; Jezek, K.; Forster, R.; Crawford, J. InSAR results from the RADARSAT Antarctic Mapping Mission data: Estimation of glacier motion using a simple registration procedure. In Proceedings of the 1998 IEEE International Geoscience and Remote Sensing Symposium, Seattle, WA, USA, 6–10 July 1998; Volume 3, pp. 1638–1640.

- Michel, R.; Rignot, E. Flow of Glaciar Moreno, Argentina, from repeat-pass Shuttle Imaging Radar images: Comparison of the phase correlation method with radar interferometry. J. Glaciol. 1999, 45, 93–100.

- Joughin, I.R.; Kwok, R.; Fahnestock, M.A. Interferometric estimation of three-dimensional ice-flow using ascending and descending passes. IEEE Trans. Geosci. Remote Sens. 1998, 36, 25–37.

- Joughin, I.; Gray, L.; Bindschadler, R.; Price, S.; Morse, D.; Hulbe, C.; Mattar, K.; Werner, C. Tributaries of West Antarctic ice streams revealed by RADARSAT interferometry. Science 1999, 286, 283–286.

- Joughin, I. Ice-sheet velocity mapping: A combined interferometric and speckle-tracking approach. Ann. Glaciol. 2002, 34, 195–201.

- Liu, H.; Zhao, Z.; Jezek, K.C. Synergistic fusion of interferometric and speckle-tracking methods for deriving surface velocity from interferometric SAR data. IEEE Geosci. Remote Sens. Lett. 2007, 4, 102–106.

- Sánchez-Gámez, P.; Navarro, F.J. Glacier surface velocity retrieval using D-InSAR and offset tracking techniques applied to ascending and descending passes of Sentinel-1 data for southern Ellesmere ice caps, Canadian Arctic. Remote Sens. 2017, 9, 442.

- Joughin, I.; Smith, B.E.; Howat, I.M. A complete map of Greenland ice velocity derived from satellite data collected over 20 years. J. Glaciol. 2018, 64, 1–11.

- Merryman Boncori, J.P.; Langer Andersen, M.; Dall, J.; Kusk, A.; Kamstra, M.; Bech Andersen, S.; Bechor, N.; Bevan, S.; Bignami, C.; Gourmelen, N.; et al. Intercomparison and validation of SAR-based ice velocity measurement techniques within the Greenland Ice Sheet CCI project. Remote Sens. 2018, 10, 929.

- Rosen, P.A.; Hensley, S.; Peltzer, G.; Simons, M. Updated repeat orbit interferometry package released. Eos Trans. Am. Geophys. Union 2004, 85, 47.

- Rosen, P.A.; Gurrola, E.M.; Agram, P.; Cohen, J.; Lavalle, M.; Riel, B.V.; Fattahi, H.; Aivazis, M.A.; Simons, M.; Buckley, S.M. The InSAR Scientific Computing Environment 3.0: A Flexible Framework for NISAR Operational and User-Led Science Processing. In Proceedings of the IGARSS 2018-2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 4897–4900.

- Willis, M.J.; Zheng, W.; Durkin, W.; Pritchard, M.E.; Ramage, J.M.; Dowdeswell, J.A.; Benham, T.J.; Bassford, R.P.; Stearns, L.A.; Glazovsky, A.; et al. Massive destabilization of an Arctic ice cap. Earth Planet. Sci. Lett. 2018, 502, 146–155.

- Willis, M.J.; Melkonian, A.K.; Pritchard, M.E.; Ramage, J.M. Ice loss rates at the Northern Patagonian Icefield derived using a decade of satellite remote sensing. Remote Sens. Environ. 2012, 117, 184–198.

- Mouginot, J.; Scheuchl, B.; Rignot, E. Mapping of ice motion in Antarctica using synthetic-aperture radar data. Remote Sens. 2012, 4, 2753–2767.

- Millan, R.; Mouginot, J.; Rabatel, A.; Jeong, S.; Cusicanqui, D.; Derkacheva, A.; Chekki, M. Mapping surface flow velocity of glaciers at regional scale using a multiple sensors approach. Remote Sens. 2019, 11, 2498.

- Casu, F.; Manconi, A.; Pepe, A.; Lanari, R. Deformation time-series generation in areas characterized by large displacement dynamics: The SAR amplitude pixel-offset SBAS technique. IEEE Trans. Geosci. Remote Sens. 2011, 49, 2752–2763.

- Euillades, L.D.; Euillades, P.A.; Riveros, N.C.; Masiokas, M.H.; Ruiz, L.; Pitte, P.; Elefante, S.; Casu, F.; Balbarani, S. Detection of glaciers displacement time-series using SAR. Remote Sens. Environ. 2016, 184, 188–198.

- Gardner, A.S.; Moholdt, G.; Scambos, T.; Fahnestock, M.; Ligtenberg, S.; van den Broeke, M.; Nilsson, J. Increased West Antarctic and unchanged East Antarctic ice discharge over the last 7 years. Cryosphere 2018, 12, 521–547.

- Stein, A.N.; Huertas, A.; Matthies, L. Attenuating stereo pixel-locking via affine window adaptation. In Proceedings of the 2006 IEEE International Conference on Robotics and Automation, Orlando, FL, USA, 15–19 May 2006; pp. 914–921.

- Tsang, L.; Kong, J.A. Scattering of Electromagnetic Waves: Advanced Topics; John Wiley & Sons: Hoboken, NJ, USA, 2004; Volume 26.

- Joughin, I.; Smith, B.; Howat, I.; Scambos, T. MEaSUREs Multi-year Greenland Ice Sheet Velocity Mosaic, Version 1; NASA National Snow and Ice Data Center Distributed Active Archive Center: Boulder, CO, USA; Available online: https://doi.org/10.5067/QUA5Q9SVMSJG (accessed on 2 July 2020).

- Joughin, I.; Smith, B.E.; Howat, I.M.; Scambos, T.; Moon, T. Greenland flow variability from ice-sheet-wide velocity mapping. J. Glaciol. 2010, 56, 415–430.

- Joughin, I.; Smith, B.; Howat, I.; Scambos, T. MEaSUREs Greenland Ice Velocity: Selected Glacier Site Velocity Maps from InSAR, Version 3; NASA National Snow and Ice Data Center Distributed Active Archive Center: Boulder, CO, USA; Available online: https://doi.org/10.5067/YXMJRME5OUNC (accessed on 2 July 2020).

- Lemos, A.; Shepherd, A.; McMillan, M.; Hogg, A.E.; Hatton, E.; Joughin, I. Ice velocity of Jakobshavn Isbræ, Petermann Glacier, Nioghalvfjerdsfjorden, and Zachariæ Isstrøm, 2015–2017, from Sentinel 1-a/b SAR imagery. Cryosphere 2018, 12, 2087–2097.

- Howat, I.; Negrete, A.; Smith, B. MEaSUREs Greenland Ice Mapping Project (GIMP) Digital Elevation Model, Version 1; NASA National Snow and Ice Data Center Distributed Active Archive Center: Boulder, CO, USA; Available online: https://doi.org/10.5067/NV34YUIXLP9W (accessed on 2 July 2020).

- Howat, I.M.; Negrete, A.; Smith, B.E. The Greenland Ice Mapping Project (GIMP) land classification and surface elevation data sets. Cryosphere 2014, 8, 1509–1518.

- Liao, H.; Meyer, F.J.; Scheuchl, B.; Mouginot, J.; Joughin, I.; Rignot, E. Ionospheric correction of InSAR data for accurate ice velocity measurement at polar regions. Remote Sens. Environ. 2018, 209, 166–180.

- Nagler, T.; Rott, H.; Hetzenecker, M.; Wuite, J.; Potin, P. The Sentinel-1 mission: New opportunities for ice sheet observations. Remote Sens. 2015, 7, 9371–9389.

- Joughin, I.; Smith, B.E.; Howat, I. Greenland Ice Mapping Project: Ice Flow Velocity Variation at sub-monthly to decadal time scales. Cryosphere 2018, 12, 2211.

| Spaceborne Sensor | Acquisition Date (YYYYMMDD) | Path/Frame (Path/Row) | Slant-range/Azimuth (X/Y) Pixel Spacing (m) |
|---|---|---|---|
| Sentinel-1A | 20170104 | 90/222, 90/227 | 3.67/15.59 |
| Sentinel-1B | 20170110 | 90/222, 90/227 | 3.67/15.59 |
| Sentinel-1B | 20170404 | 90/222, 90/227 | 3.67/15.59 |
| Sentinel-1A | 20170410 | 90/222, 90/227 | 3.67/15.59 |
| Sentinel-1B | 20170416 | 90/222, 90/227 | 3.67/15.59 |
| Sentinel-1A | 20170422 | 90/222, 90/227 | 3.67/15.59 |
| Sentinel-1B | 20170428 | 90/222, 90/227 | 3.67/15.59 |
| Sentinel-1A | 20170703 | 90/222, 90/227 | 3.67/15.59 |
| Sentinel-1B | 20170709 | 90/222, 90/227 | 3.67/15.59 |
| Sentinel-1B | 20171001 | 90/222, 90/227 | 3.67/15.59 |
| Sentinel-1A | 20171007 | 90/222, 90/227 | 3.67/15.59 |
| Sentinel-1A | 20170221 | 90/222 | 3.67/15.59 |
| Sentinel-1B | 20170227 | 90/222 | 3.67/15.59 |
| Landsat-8 | 20170208 | 9/11 | 15.0/15.0 |
| Landsat-8 | 20170224 | 9/11 | 15.0/15.0 |
| Landsat-8 | 20170413 | 9/11 | 15.0/15.0 |
| Landsat-8 | 20170429 | 9/11 | 15.0/15.0 |
| Landsat-8 | 20170718 | 9/11 | 15.0/15.0 |
| Landsat-8 | 20170803 | 9/11 | 15.0/15.0 |
| Landsat-8 | 20170819 | 9/11 | 15.0/15.0 |
| Landsat-8 | 20170920 | 9/11 | 15.0/15.0 |
| Landsat-8 | 20171022 | 9/11 | 15.0/15.0 |
| Landsat-8 | 20180721 | 9/11 | 15.0/15.0 |

| Spaceborne Sensor | Acquisition Dates (YYYYMMDD) | Temporal Baseline (days) | Valid ROI Coverage (percentage) | Velocity Error in Geographic X/Y (m/year) | Velocity Error in Slant-Range/Azimuth (m/year) | Difference (Relative Percentage) of Jakobshavn Velocity in Geographic X/Y (m/year) |
|---|---|---|---|---|---|---|
| Sentinel-1A/B | 20170104–20170110 | 6 | 87% | 27/78 | 21/88 | −228(−4%)/−325(−5%) |
| Sentinel-1A/B | 20170404–20170410 | 6 | 100% | 12/39 | 8/44 | 253(4%)/−447(−8%) |
| Sentinel-1B | 20170404–20170416 | 12 | 94% | 12/35 | 8/41 | N/A |
| Sentinel-1A/B | 20170404–20170422 | 18 | 84% | 14/36 | 10/40 | N/A |
| Sentinel-1B | 20170404–20170428 | 24 | 60% | 15/34 | 10/38 | N/A |
| Sentinel-1A/B | 20170703–20170709 | 6 | 43% | 15/44 | 10/44 | 163(2%)/−32(−1%) |
| Sentinel-1A/B | 20171001–20171007 | 6 | 88% | 30/87 | 21/94 | 432(5%)/−218(−3%) |
| Landsat-8 | 20170208–20170224 | 16 | 52% | 91/160 | N/A | N/A |
| Landsat-8 | 20170413–20170429 | 16 | 74% | 74/75 | N/A | −136(−2%)/−341(−6%) |
| Landsat-8 | 20170718–20170803 | 16 | 84% | 22/31 | N/A | 346(4%)/−506(−6%) |
| Landsat-8 | 20170718–20170819 | 32 | 11% | 23/27 | N/A | N/A |
| Landsat-8 | 20170718–20170920 | 64 | 31% | 24/35 | N/A | N/A |
| Landsat-8 | 20170718–20180721 | 368 | 48% | 2/2 | N/A | N/A |
| Landsat-8 | 20170920–20171022 | 32 | 30% | 61/82 | N/A | −119(−2%)/−671(−9%) |
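
The table reports velocity errors in m/year for pairs with very different temporal baselines, and the 368-day Landsat-8 pair has by far the smallest error. One simple way to read this, offered here only as a sketch and not as the authors' error model, is that a displacement error of fixed size in pixels is divided by the time separation when converted to velocity. The function name and the 0.05-pixel error below are hypothetical; the 15.0 m Landsat-8 pixel spacing is taken from the dataset table.

```python
DAYS_PER_YEAR = 365.25


def velocity_error(pixel_error, pixel_spacing_m, baseline_days):
    """Convert a displacement error in pixels to a velocity error in m/year,
    assuming the pixel error does not depend on the temporal baseline."""
    if baseline_days <= 0:
        raise ValueError("baseline_days must be positive")
    return pixel_error * pixel_spacing_m / baseline_days * DAYS_PER_YEAR


PIXEL_ERROR = 0.05          # hypothetical displacement error, in pixels
LANDSAT8_SPACING_M = 15.0   # pixel spacing from the dataset table

for days in (16, 32, 64, 368):
    err = velocity_error(PIXEL_ERROR, LANDSAT8_SPACING_M, days)
    print(f"{days:4d}-day baseline: {err:6.2f} m/year")
```

Under this assumption the error scales as the inverse of the baseline. The measured values do not follow that scaling exactly (the 16-day Landsat-8 pairs alone range from 22/31 to 91/160 m/year), so scene-dependent effects clearly contribute as well.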

| | Dense Ampcor | Standard autoRIFT | Standard autoRIFT | Intelligent autoRIFT | Intelligent autoRIFT |
|---|---|---|---|---|---|
| Grid type | Image | Image | Geographic | Geographic | Geographic |
| Grid spacing | 32 × 32 ➀ 64 × 64 ➁ | 32 × 32 ➀ 64 × 64 ➁ | 240 m × 240 m ➀ 480 m × 480 m ➁ | 240 m × 240 m ➀ 480 m × 480 m ➁ | 240 m × 240 m ➀ 480 m × 480 m ➁ |
| Window (chip) size | 32 × 32 ➀ 64 × 64 ➁ | 32 × 32 ➀ 64 × 64 ➁ | 32 × 32 ➀ 64 × 64 ➁ | 32 × 32 ➀ 64 × 64 ➁ | 32 × 32 ➀ 64 × 64 ➁ |
| Search distance | 62 × 16 | 62 × 16 | 62 × 16 | 25 × 25 | Spatially-varying × 4 |
| Downstream search displacement | 0 × 0 | 0 × 0 | 0 × 0 | Spatially-varying | Spatially-varying |
| Oversampling ratio | 64 | 64 | 64 | 64 | 64 |
| Multithreading (cores) | 8 | 1 | 1 | 1 | 1 |
| Preprocessing | Co-registration | Co-registration, high-pass filtering, uint8 conversion | Co-registration, high-pass filtering, uint8 conversion | Co-registration, high-pass filtering, uint8 conversion | Co-registration, high-pass filtering, uint8 conversion |
| Runtime (min/core) | 480.0 ➀ 368.0 ➁ | 6.4 ➀ 2.6 ➁ 8.0 ➂ | 7.6 ➂ | 6.2 ➂ | 5.6 ➂ |
| i/j-direction displacement error metrics (pixel) | 0.039/0.055 ➀ 0.023/0.039 ➁ | 0.031/0.047 ➀ 0.016/0.031 ➁ 0.031/0.047 ➂ | 0.031/0.039 ➂ | 0.031/0.039 ➂ | 0.031/0.039 ➂ |
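
To make the runtime row easier to read, the per-core runtimes can be turned into speedup ratios. The snippet below is plain arithmetic on the tabulated values for the two configurations that share the ➀ and ➁ settings (Dense Ampcor and standard autoRIFT on the image grid). The dictionary layout and function name are illustrative choices, and the meaning of the circled markers is taken as given from the table rather than defined here.

```python
# Runtime in minutes per core, keyed by the circled setting marker (1 or 2).
RUNTIME_MIN_PER_CORE = {
    "Dense Ampcor": {1: 480.0, 2: 368.0},
    "Standard autoRIFT (image grid)": {1: 6.4, 2: 2.6},
}


def speedups(baseline, candidate):
    """Ratio baseline/candidate for every setting present in both dicts."""
    shared = sorted(set(baseline) & set(candidate))
    return {k: baseline[k] / candidate[k] for k in shared}


ratios = speedups(
    RUNTIME_MIN_PER_CORE["Dense Ampcor"],
    RUNTIME_MIN_PER_CORE["Standard autoRIFT (image grid)"],
)
for setting, ratio in ratios.items():
    print(f"setting {setting}: about {ratio:.0f}x faster per core")
```

Because the table already normalizes runtime per core, the ratio compares like with like even though Dense Ampcor ran on 8 cores and autoRIFT on 1.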

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lei, Y.; Gardner, A.; Agram, P. Autonomous Repeat Image Feature Tracking (autoRIFT) and Its Application for Tracking Ice Displacement. Remote Sens. 2021, 13, 749. https://doi.org/10.3390/rs13040749
