The OBIA method outlined herein accurately classified high-resolution UAS orthoimagery of the herbicide control treatment study with overall accuracy exceeding 89% for all six trials as shown in Table 4. Given these image classification accuracy assessment results, the final classified images from each trial could be used with confidence to assess CFH community coverage based on mapping parameters (e.g., sensor, ground sample distance) and changes over time due to herbicide control. Furthermore, the areal coverage assessment results suggest that water resource managers can accurately determine the response of an invasive FLAV species to a management technique by using UAS in small, localized project areas to assess changes in area coverage over time.

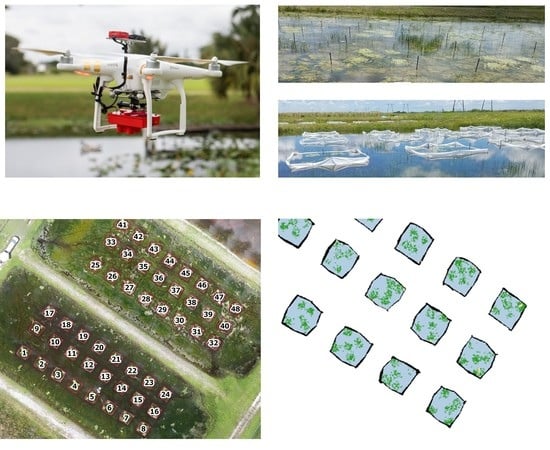

4.1. UAS Operational Considerations

A primary objective of this study was to determine the impact that sensor choice has on the classification of CFH. The NIR bands found in multispectral sensors such as the MicaSense RedEdge can aid in determining vegetation health (e.g., input into NDVI computation) while also providing additional feature object information for the RF classifier as shown in Table 3. This additional object information can be helpful in discriminating between vegetation and non-vegetation classes. During the 14AT trials, emergent aquatic vegetation (EAV) was more prevalent in the TPs than it was on 00AT as shown in Figure 9. Furthermore, the EAV and CFH classes were the most frequently confused classes in the accuracy assessment confusion matrices found in Table A1, Table A2, Table A3, Table A4, Table A5 and Table A6. The multispectral classified image derived from imagery at the lowest GSD (2.7 cm/pix at 40 m AGL) had the best performance in discriminating between these two vegetation classes as shown in Table A3. When comparing multispectral (2.7 cm/pix GSD) and RGB (1.7 cm/pix GSD) datasets collected at the same flight altitude (40 m), the results from the RE40 dataset showed a small but significant improvement in the accuracy of measuring areal coverage at a 95% confidence level. Based on the manufacturer’s recommendation, multispectral data was only collected at the flight altitude of 60 m (4.1 cm/pix GSD) for the pre-treatment assessment date (00AT). Thus, a comparison between datasets from the same flight altitude (40 m) was only conducted for 14AT where data was available.
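The accuracy figures discussed above are all read from confusion matrices such as those in the appendix tables. As a minimal sketch of how overall, user's, and producer's accuracy are derived from such a matrix, the Python snippet below uses a small hypothetical three-class example. The class names follow this study, but the counts are invented for illustration and are not taken from Tables A1 to A6; the convention that rows hold classified labels and columns hold reference labels is also an assumption.

```python
# Hypothetical confusion matrix (counts are illustrative only).
# Assumed layout: rows = classified (map) class, columns = reference class.
CLASSES = ["CFH", "EAV", "Water"]
MATRIX = [
    [45, 5, 0],   # objects classified as CFH
    [4, 40, 1],   # objects classified as EAV
    [1, 0, 54],   # objects classified as Water
]


def overall_accuracy(matrix):
    """Fraction of all samples that lie on the diagonal."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total


def users_accuracy(matrix, i):
    """Correct samples in class i divided by everything classified as i (row total)."""
    return matrix[i][i] / sum(matrix[i])


def producers_accuracy(matrix, j):
    """Correct samples in class j divided by all reference samples of j (column total)."""
    return matrix[j][j] / sum(row[j] for row in matrix)


if __name__ == "__main__":
    print(f"Overall accuracy: {overall_accuracy(MATRIX):.3f}")
    for k, name in enumerate(CLASSES):
        print(f"{name}: user's {users_accuracy(MATRIX, k):.3f}, "
              f"producer's {producers_accuracy(MATRIX, k):.3f}")
```

In a matrix like this, confusion between CFH and EAV appears as the off-diagonal counts shared by those two classes, which lowers both their user's and producer's accuracy.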

While multispectral sensor performance showed statistically significant improvement over RGB-only datasets at certain spatial resolutions for accurately measuring areal coverage, water resource managers must also balance other operational considerations. The first consideration is cost. At present, prosumer drones (e.g., DJI Phantom series used herein) are approximately $1500 with standard visual cameras (e.g., Sony EXMOR) included in the price. Quality UAS multispectral cameras (e.g., MicaSense RedEdge) cost approximately $5000 without accounting for a stable UAS platform to carry the sensor. Suitable UAS platforms range in price from a few hundred to a few thousand dollars. Once startup costs are accounted for, operational considerations must be evaluated as well. Multispectral cameras such as the RedEdge typically have a limited field of view relative to standard optical cameras as shown in Table 1. To maintain the 70–80% image sidelap necessary for quality SfM-derived orthophoto mosaics, additional flight lines in the field are required [38]. This corresponds to both additional images and flight time as shown in Table 2. Consequently, data volume can differ considerably across sensors as well. The RedEdge sensor comprises five cameras, each operating on a different portion of the electromagnetic spectrum. For every image location, a single uncompressed 2.4-megabyte (MB) TIFF image is captured by each camera. Meanwhile, the Sony EXMOR camera captures the RGB bands in one compressed JPEG image that is approximately 5 MB in size. For this small 0.8 ha project, raw multispectral imagery collected at 40 m AGL on 14AT was approximately 1,980 MB of imagery files and the Sony imagery collected at the same 40 m AGL was 590 MB. While specifications certainly vary by sensor, UAS operators need to be cognizant of file storage and subsequent processing demands, especially with multispectral imagery, which is typically stored and processed in an uncompressed format. Thus, the implementation of multispectral imaging into a UAS operational workflow is not a trivial decision based purely on accuracy performance.
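The storage comparison above comes down to simple arithmetic. The sketch below uses only the per-image sizes and dataset totals quoted in this section to estimate the data volume per capture location and the implied number of capture locations for each sensor. The function names are illustrative, and the capture counts are back-calculated estimates rather than values reported in Table 2.

```python
# Per-image sizes and dataset totals as quoted in the text (14AT, 40 m AGL).
REDEDGE_BANDS = 5            # one image per camera per capture location
REDEDGE_MB_PER_IMAGE = 2.4   # uncompressed TIFF
SONY_MB_PER_IMAGE = 5.0      # compressed JPEG, approximate
REDEDGE_TOTAL_MB = 1980.0
SONY_TOTAL_MB = 590.0


def mb_per_capture(images_per_capture, mb_per_image):
    """Data written at a single capture location."""
    return images_per_capture * mb_per_image


def implied_captures(total_mb, per_capture_mb):
    """Back-calculated number of capture locations (an estimate only)."""
    return total_mb / per_capture_mb


rededge_capture_mb = mb_per_capture(REDEDGE_BANDS, REDEDGE_MB_PER_IMAGE)
sony_capture_mb = mb_per_capture(1, SONY_MB_PER_IMAGE)

if __name__ == "__main__":
    print(f"RedEdge: {rededge_capture_mb:.1f} MB per capture, "
          f"~{implied_captures(REDEDGE_TOTAL_MB, rededge_capture_mb):.0f} captures")
    print(f"Sony:    {sony_capture_mb:.1f} MB per capture, "
          f"~{implied_captures(SONY_TOTAL_MB, sony_capture_mb):.0f} captures")
    print(f"Volume ratio (RedEdge / Sony): {REDEDGE_TOTAL_MB / SONY_TOTAL_MB:.2f}")
```

Under these figures the multispectral dataset is roughly 3.4 times larger, driven both by more capture locations and by more data per location.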

Similar to other UAS projects targeting specific vegetation species [43, 44], flight altitude and in turn GSD impacted the accuracy of CFH detection in this study. This is evidenced by the differences in CFH class accuracy from the classified image accuracy assessments (Table A4 and Table A6) as well as the small but significant difference in areal coverage accuracy (Figure 10) between the RedEdge trial flown at 40 m (RE40) and the RedEdge trial flown at 80 m (RE80). As a result, water resource managers must find an acceptable balance between the potentially small but significant accuracy improvements noted above and the additional operational constraints imposed by achieving a higher spatial resolution. In this 0.8 ha study, the flight time for RE40 was nearly 10 min longer than the 7-min RE80 flight as shown in Table 2. The lower flight altitude not only restricts the mission areal coverage per takeoff, but it also leads to increased volumes of data: 1,980 MB of imagery for RE40 versus 1,280 MB of imagery for RE80 on 14AT. Given these operational considerations and the ability to still obtain a high overall accuracy assessment (e.g., 89.6% with a 5.5 cm/pix GSD), many water resource managers may be willing to forgo the marginal accuracy improvements of capturing data at a higher spatial resolution. One potential way to mitigate the time constraints of flying lower is to improve the spatial resolution of the multispectral sensor. For example, the newest multispectral MicaSense sensor is the Altum, which offers a 50% improvement in spatial resolution relative to the MicaSense RedEdge [64]. Thus, operational efficiencies can be gained by flying 50% higher with the newer Altum sensor and maintaining the same GSD as the RedEdge. Alternatively, managers willing to forgo the highest accuracies could fly the Altum at maximum allowable altitudes without a waiver (e.g., 121.9 m in the United States) to obtain a GSD of 5.3 cm/pix. With these operational parameters, UAS practitioners could reduce the amount of data acquired and subsequent SfM processing time while still creating high-accuracy datasets.
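The altitude trade-off above rests on GSD scaling approximately linearly with flight altitude for a fixed sensor and lens. As a rough sketch under that assumption, the snippet below scales from the RedEdge reference point reported in this study (4.1 cm/pix at 60 m AGL). The function names are hypothetical, and actual missions should rely on flight-planning software or manufacturer specifications.

```python
# Reference point taken from the text: RedEdge, 4.1 cm/pix at 60 m AGL.
REF_ALTITUDE_M = 60.0
REF_GSD_CM = 4.1


def gsd_at_altitude(altitude_m, ref_gsd_cm=REF_GSD_CM, ref_altitude_m=REF_ALTITUDE_M):
    """Estimate GSD (cm/pix), assuming it scales linearly with altitude."""
    return ref_gsd_cm * altitude_m / ref_altitude_m


def altitude_for_gsd(target_gsd_cm, ref_gsd_cm=REF_GSD_CM, ref_altitude_m=REF_ALTITUDE_M):
    """Inverse relation: altitude (m AGL) that yields the target GSD."""
    return ref_altitude_m * target_gsd_cm / ref_gsd_cm


if __name__ == "__main__":
    for altitude in (40, 80, 100, 121.9):
        print(f"{altitude} m AGL -> {gsd_at_altitude(altitude):.1f} cm/pix")
```

Rounded to one decimal place, this reproduces the RedEdge values quoted in this paper (2.7, 5.5, 6.8, and 8.3 cm/pix at 40, 80, 100, and 121.9 m AGL). The same functions can be pointed at a different sensor by passing its own reference GSD and altitude.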

Even after optimizing operations through sensor selection and UAS flight planning parameters, multiple factors can still influence the accuracy of the results. For this project, the datasets were tightly georeferenced using stationary GCPs to ensure the best possible dataset alignment across the various trials. In a larger, natural wetland setting, access to well-distributed GCPs will be minimal. In these situations, georeferencing datasets using on-board, post-processed kinematic (PPK) GNSS can provide the best available positioning solution with misalignment errors similar to using GCPs [65, 66, 67]. The implementation of PPK GNSS requires either a PPK-enabled UAS platform or additional positioning sensors mounted to the existing UAS platform. Either scenario results in additional financial costs above and beyond the cost of the prosumer drone to mitigate dataset misalignment. During data acquisition, a poor sun angle can cause sun glint on the water surface. Sun glint can cause misalignments in the SfM processing, potentially adversely impacting the accuracy of resultant classified imagery [51, 68].

Another environmental factor that impacts floating wetland vegetation more so than terrestrial vegetation is vegetation movement. While FLAV is tethered to the bottom, the vegetation is still susceptible to drift on the water’s surface. This can cause individual vegetation leaves to cluster or disperse depending on water and wind currents. When plants disperse, the spatial resolution of the imagery must be high enough to capture individual leaves on the water surface, or underestimation of invasive vegetation will result. Meanwhile, plants that cluster together can cause overlapping leaves and in turn, result in underestimation of the subject vegetation class area coverage as well. While some field conditions can be mitigated (e.g., sun angle planning, PPK implementation), there is inherent noise in the classified image datasets that water resource managers need to be aware of when integrating UAS mapping and analysis in the decision-making process.

4.2. Future Considerations

To effectively study herbicide efficacy on highly invasive vegetation in a randomized block design field trial, meticulous planning went into planting equal amounts of CFH in each TP and subsequently preventing the CFH from entering the larger wetland complex surrounding the treatment ponds. Hence, water flow was restricted to the treatment ponds and plastic sheeting was used to form a physical barrier surrounding each TP and the subject vegetation. Due to these constraints, there was less mixing of invasive and native wetland vegetation than would be encountered when finding CFH in natural areas. A field study that investigates the management of CFH in a natural setting similar to Lishawa et al. [69], which investigated the management of Typha spp., would be the next progression in determining the value that multispectral remote sensing adds when water resource managers are faced with greater vegetative biodiversity than encountered in this project.

Thus far, CFH was accurately detected at all spatial resolutions tested in this project. A natural progression would be to further optimize data acquisition efficiency by collecting lower spatial resolution imagery with the multispectral RedEdge sensor (e.g., 6.8 cm/pix at 100 m AGL, 8.3 cm/pix at 121.9 m AGL) and with the RGB Sony sensor (e.g., 2.6 cm/pix at 60 m AGL, 3.4 cm/pix at 80 m AGL). If CFH communities can still be mapped accurately at these lower resolutions, water resource managers would have additional opportunities to reduce processing time and the amount of data acquired. Other data acquisition (e.g., image sidelap/overlap) and image processing (e.g., segmentation and classification parameters) variables were standardized for this project, but further investigation may yield additional accuracy improvements. On the data collection side, the sidelap and overlap parameters were each set to 80% for both sensors to ensure that no issues were encountered during SfM alignment and subsequent orthophoto mosaic generation. Reducing the sidelap parameter would reduce the number of flight lines, leading to reductions in data acquisition time and the volume of data acquired. To test this, a water resource manager could run a sample experiment by collecting UAS imagery at an extremely high sidelap of 90% or 95%. During SfM processing, datasets could be generated using every other or every third flight line to find the point at which the final classified imagery accuracy degrades for the given wetland environment and target vegetation species. This could help optimize data collection for temporal monitoring of larger areas with similar landcover characteristics.
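The proposed flight-line thinning experiment can be reasoned about before flying. Assuming parallel, evenly spaced flight lines and a constant image footprint width, the spacing between adjacent lines equals the footprint width multiplied by (1 − sidelap), so keeping only every k-th line multiplies the spacing by k. The sketch below, with hypothetical function names, computes the effective sidelap that results from such thinning.

```python
def line_spacing(footprint_width_m, sidelap):
    """Distance between adjacent flight lines for a given sidelap fraction."""
    return footprint_width_m * (1.0 - sidelap)


def effective_sidelap(flown_sidelap, keep_every):
    """Sidelap remaining when only every `keep_every`-th flight line is processed.

    Assumes evenly spaced parallel lines and a constant footprint width.
    A negative result means gaps would open between adjacent image strips.
    """
    if keep_every < 1:
        raise ValueError("keep_every must be a positive integer")
    return 1.0 - keep_every * (1.0 - flown_sidelap)


if __name__ == "__main__":
    for flown in (0.90, 0.95):
        for keep_every in (1, 2, 3):
            remaining = effective_sidelap(flown, keep_every)
            print(f"flown {flown:.0%}, every {keep_every} line(s): "
                  f"effective sidelap {remaining:.0%}")
```

Under these assumptions, a flight at 90% sidelap thinned to every other or every third line emulates roughly 80% and 70% sidelap, while a flight at 95% emulates roughly 90% and 85%.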

The image processing parameters were standardized during the image segmentation and image classification process. Given prior studies on the superior performance of RF classifiers for OBIA land cover classification [60], testing of additional classifier algorithms was not undertaken. Furthermore, while additional testing of RF parameters and object features may yield improvements in the accuracy assessment of the classified images, these improvements, even if significant, would be minimal given the high overall accuracy assessments found in Table 4. For future consideration, the feature objects of importance for the RF classifier were exported from eCognition. The mean values for the green and blue bands and the indices (i.e., NDVI, VDVI, VB) were most important for object classification. Meanwhile, the standard deviation value of the spectral bands, the mean and standard deviation of the DSM, and the GLCM Dissimilarity object feature were characterized as the least important. The relative importance of these object features may be applicable going forward for subsequent monitoring studies of similar FLAV species, especially when considering the use of band indices for discriminating between vegetation and non-vegetation classes [29, 43, 44, 48].
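Two of the band indices named above can be computed directly from mean band values per pixel or per image object. The sketch below uses the commonly cited definitions of NDVI and VDVI; the exact formulation configured in eCognition for this study is not given in this section, so these should be treated as assumptions. VB is omitted because its definition is not stated here, and the reflectance values are hypothetical.

```python
def ndvi(nir, red):
    """Normalized difference vegetation index: (NIR - Red) / (NIR + Red)."""
    denominator = nir + red
    return (nir - red) / denominator if denominator else 0.0


def vdvi(red, green, blue):
    """Visible-band difference vegetation index: (2G - R - B) / (2G + R + B)."""
    denominator = 2.0 * green + red + blue
    return (2.0 * green - red - blue) / denominator if denominator else 0.0


# Hypothetical mean reflectances for two image objects (illustrative only).
vegetation = {"nir": 0.50, "red": 0.08, "green": 0.12, "blue": 0.05}
water = {"nir": 0.02, "red": 0.03, "green": 0.04, "blue": 0.05}

if __name__ == "__main__":
    for name, obj in (("vegetation", vegetation), ("water", water)):
        print(f"{name}: NDVI={ndvi(obj['nir'], obj['red']):.2f}, "
              f"VDVI={vdvi(obj['red'], obj['green'], obj['blue']):.2f}")
```

VDVI needs only the visible bands, so it can be derived from RGB imagery, whereas NDVI requires a NIR band such as the one provided by a multispectral sensor.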

As the fields of deep learning and artificial intelligence continue to evolve, the adoption of these approaches to temporal vegetation monitoring is certainly encouraged [49]. For larger monitoring projects of invasive vegetation management techniques, a deep learning framework incorporating high-resolution UAS imagery as the training data for satellite imagery would be a valuable tool for water resource managers. The success of previous studies expanding the scale of remote sensing projects through the fusion of datasets from multiple sensor types provides additional support for this effort [28, 30].