Autonomous, Onboard Vision-Based Trash and Litter Detection in Low Altitude Aerial Images Collected by an Unmanned Aerial Vehicle

Abstract

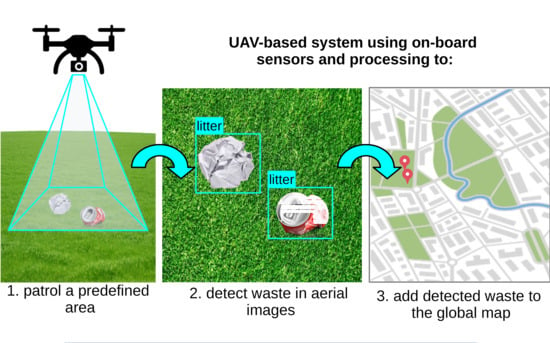

1. Introduction

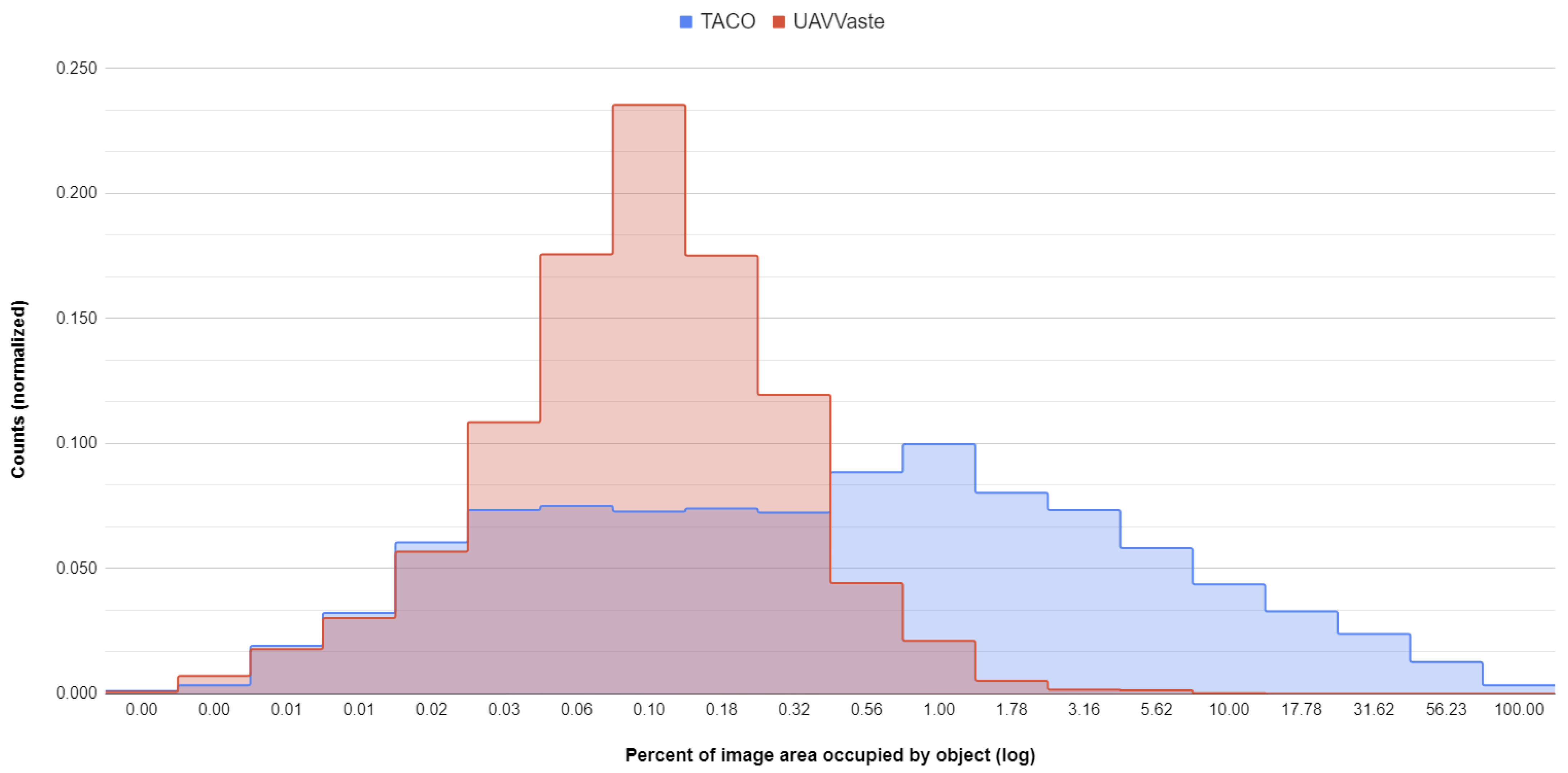

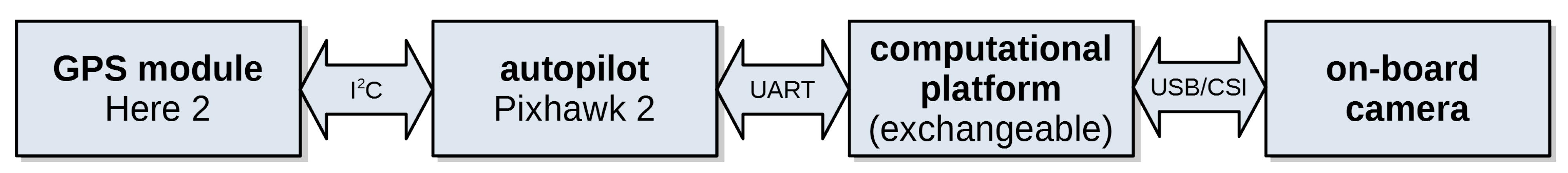

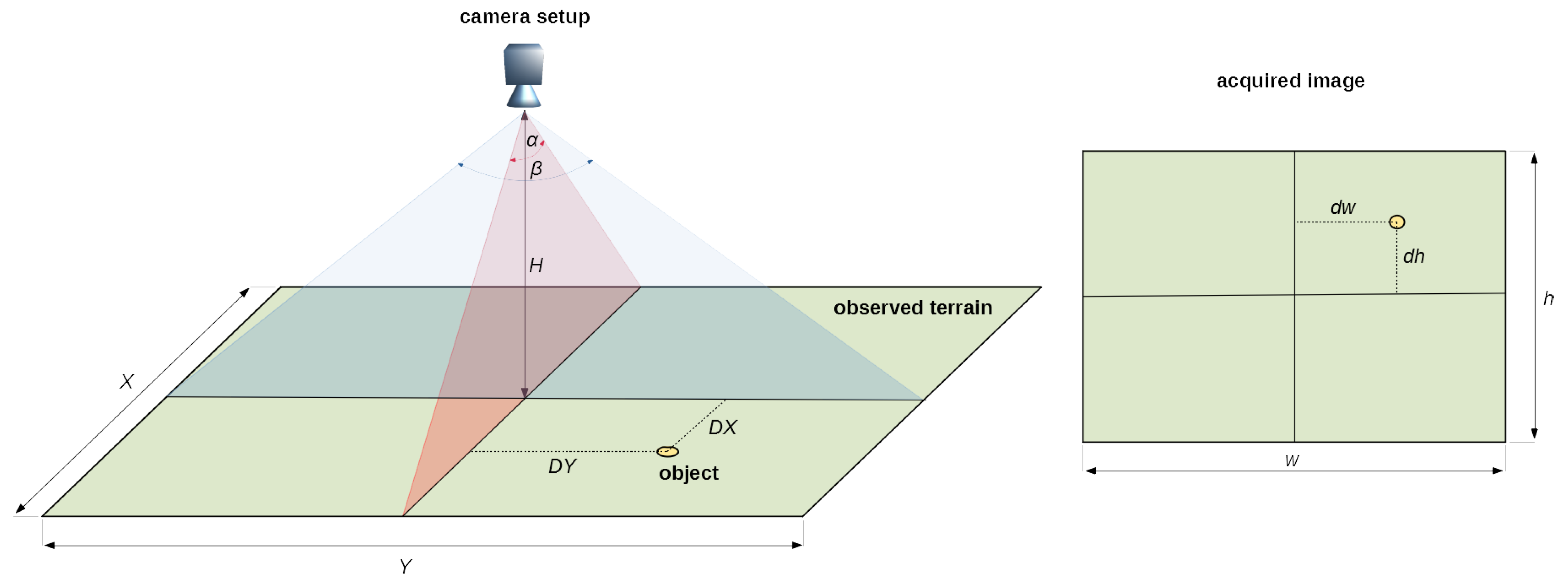

2. Materials and Methods

- Nvidia Xavier NX is a system-on-chip designed for embedded applications that integrates a multi-core 64-bit CPU and a GPU in a single integrated circuit [39]. The CPU is a six-core, high-performance 64-bit Nvidia Carmel ARM-compatible processor clocked at up to 1.9 GHz with two cores active (1.4 GHz otherwise). The GPU, based on the Volta architecture, has 384 CUDA cores and 48 tensor cores; the chip also includes two Nvidia deep learning accelerator (NVDLA) engines dedicated to deep learning workloads. The CPU and the GPU share the system memory. The development platform is fitted with 8 GB of low-power LPDDR4x RAM clocked at 1600 MHz with a 128-bit interface, which translates to 51.2 GB/s of bandwidth.

- Google Coral USB Accelerator is an accelerator for edge processing based on the Google Tensor Processing Unit (TPU) architecture. The original TPU is an application-specific integrated circuit (ASIC) designed for datacenter machine learning training and inference workloads, with the goal of optimizing the ratio of performance to power consumption. The Coral device is built around the Edge TPU, a scaled-down version of this design for embedded applications. An essential part of the Edge TPU is a systolic matrix coprocessor with a performance of 4 TOPS, along with a tightly coupled memory pool. The Edge TPU is an inference-only device, and a neural network model must be quantized and compiled with dedicated tools before it can be executed on this architecture. The accelerator connects to the host system via either USB (the option used in the presented research) or PCIe [41].

- Neural Compute Stick 2 (NCS2) is a fanless device in a USB flash drive form factor used for parallel processing acceleration. It is powered by a Myriad X VPU (vision processing unit) that can be applied to various tasks in the computer vision and machine learning domains. The main design goal of this chip was to deliver relatively high data processing performance in power-constrained devices, which makes it a natural choice for edge processing. The high performance comes from 16 SHAVE (Streaming Hybrid Architecture Vector Engine) very long instruction word (VLIW) cores within the SoC, complemented by a dedicated neural compute engine. Combining multi-core VLIW processing with ample local storage for data caching in each core enables high throughput for SIMD workloads. The device is directly supported by the OpenVINO toolkit, which handles the numerical representation of the neural network and the code translation [41,55].

- An SSD detector with a lightweight MobileNetV2 backbone, implemented in TensorFlow Lite [56], was included in the evaluation because it is one of the algorithms that run on Google Coral without issues. The model is quantized to 8-bit integers.

- YOLOv3 and YOLOv4 were tested along with their lightweight (“tiny”) variants to cover a range of solutions, since the architectures with the best accuracy may not be fast enough for real-time processing, especially on resource-limited hardware.

- Models compiled for the Nvidia Xavier NX were optimized with TensorRT [57] in a full-precision floating-point (FP32) variant, a half-precision (FP16) variant and, where possible, a quantized 8-bit integer (INT8) variant, for potential performance gains. The resulting accuracy trade-offs were also investigated.

- Tests on the Xavier NX covered a range of batch sizes to assess the impact of data transfer operations on the frame rate; the highest frame rate obtained is reported as the result. The USB accelerators do not operate on batches, so images were sent to them one by one.

- Models used with the NCS2 were compiled using the OpenVINO toolkit [55], which translates the code to this architecture and converts weights and activations to the FP16 format.

- For comparison, the models were also executed on the CPUs of the Raspberry Pi 4 and the Nvidia Xavier NX platforms, using TensorFlow Lite implementations [56].

- Both USB accelerators were tested using the USB 2.0 and USB 3.0 ports of the Raspberry Pi 4. The Coral accelerator was tested with two variants of the libedgetpu library: the std variant, which runs at the regular clock speed, and the max variant, which runs at a higher clock speed for potentially higher performance. The higher clock frequency might require additional cooling for stable operation.
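The benchmarking protocol described above (several batch sizes on the Xavier NX, single images on the USB accelerators, best frame rate reported) can be sketched as a small timing harness. This is a minimal sketch under stated assumptions, not the authors' code: `infer` is a hypothetical callable wrapping whichever runtime is used (TensorRT, OpenVINO or TensorFlow Lite), and the warm-up count and candidate batch sizes are illustrative choices. The sketch reports time per image; the ATPI column in the result tables is consistent with this reading, since FPS = 1/ATPI holds up to rounding in the reported rows.

```python
import time
from typing import Any, Callable, Dict, Sequence, Tuple


def measure_throughput(infer: Callable[[Sequence[Any]], Any],
                       images: Sequence[Any],
                       batch_size: int = 1,
                       warmup: int = 3) -> Tuple[float, float]:
    """Return (average time per image [s], frames per second) for one batch size.

    `infer` is a hypothetical callable that runs the detector on one batch;
    it stands in for a TensorRT, OpenVINO or TensorFlow Lite invocation.
    """
    batches = [images[i:i + batch_size] for i in range(0, len(images), batch_size)]
    # Warm-up runs (lazy initialisation, caches, clock ramp-up) are not timed.
    for batch in batches[:warmup]:
        infer(batch)
    start = time.perf_counter()
    for batch in batches:
        infer(batch)
    elapsed = time.perf_counter() - start
    atpi = elapsed / len(images)  # per-image time, so different batch sizes are comparable
    return atpi, 1.0 / atpi


def best_batch_size(infer: Callable[[Sequence[Any]], Any],
                    images: Sequence[Any],
                    candidates: Sequence[int] = (1, 2, 4, 8)) -> Tuple[int, Tuple[float, float]]:
    """Sweep batch sizes and keep the one with the highest frame rate."""
    results: Dict[int, Tuple[float, float]] = {
        b: measure_throughput(infer, images, batch_size=b) for b in candidates
    }
    best = max(results, key=lambda b: results[b][1])
    return best, results[best]
```

For a USB accelerator, which receives images one at a time, only `measure_throughput(infer, images, batch_size=1)` applies; the batch sweep mirrors the Xavier NX procedure of reporting the highest frame rate.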

3. Results

- M1: mAP at a fixed IoU threshold of 50%, also called mAP@50. This degree of overlap between predicted and ground truth bounding boxes is sufficient for small objects, and we are mostly interested in whether or not an object was detected, so that it can be put on the map. This is the main detection quality metric.

- M2: mAP averaged over IoU thresholds from 0.5 to 0.95 in steps of 0.05. At each threshold, a detection counts as a true positive only if its IoU with a ground truth box reaches that threshold, so the number of true positives decreases as the threshold increases. The AP values obtained at all thresholds are averaged to give the final result. This is the main quality metric for the detection task in the COCO competition.

- M3, M4, and M5 are computed in the same way as M2, but only for small objects (M3: longer bounding box side under 32 pixels), medium-size objects (M4: longer side from 32 to 96 pixels) and large objects (M5: longer side over 96 pixels).

- M6, M7, and M8 are recall values directly corresponding to (and computed for the same sets of detections as) M3 to M5. They are included to show how many objects are missed and how the misses break down by object size.
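The matching criterion behind M1 to M8 and the size buckets can be sketched as follows. This is an illustrative sketch, not the evaluation code used for the tables: boxes are assumed to be `(x_min, y_min, x_max, y_max)` in pixels, the helper names are hypothetical, and the buckets follow the longer-side definition given above (the official COCO tooling, pycocotools, buckets by object area instead, with limits of 32² and 96² pixels). A complete AP computation would additionally rank detections by confidence and integrate the precision-recall curve; the last helper only shows how the number of accepted matches shrinks as the IoU threshold rises.

```python
from typing import Dict, Iterable, List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned bounding boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def size_category(box: Box) -> str:
    """Bucket a box by its longer side, following the M3-M5 (and M6-M8) definitions."""
    longer = max(box[2] - box[0], box[3] - box[1])
    if longer < 32:
        return "small"   # M3, M6
    if longer <= 96:
        return "medium"  # M4, M7
    return "large"       # M5, M8


# The ten IoU thresholds averaged in M2: 0.50, 0.55, ..., 0.95.
IOU_THRESHOLDS: List[float] = [round(0.5 + 0.05 * i, 2) for i in range(10)]


def true_positive_counts(matched_ious: Iterable[float]) -> Dict[float, int]:
    """For each threshold, count detections whose IoU with their matched ground truth passes it.

    This only illustrates why true positives drop as the threshold rises;
    it is not a full AP computation.
    """
    ious = list(matched_ious)
    return {t: sum(1 for v in ious if v >= t) for t in IOU_THRESHOLDS}
```

For example, two 10 × 10 boxes shifted by half their width overlap with an IoU of 1/3, so such a detection would not count as a hit even for M1.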

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Campbell, F. People Who Litter; ENCAMS: Wigan, UK, 2007.

- Riccio, L.J. Management Science in New York’s Department of Sanitation. Interfaces 1984, 14, 1–13.

- Dufour, C. Unpleasant or tedious jobs in the industrialised countries. Int. Labour Rev. 1978, 117, 405.

- Proença, P.F.; Simões, P. TACO: Trash Annotations in Context for Litter Detection. arXiv 2020, arXiv:2003.06975.

- Lowe, D.G. Object recognition from local scale-invariant features. In Proceedings of the Seventh IEEE International Conference on Computer Vision, Kerkyra, Greece, 20–27 September 1999; Volume 2, pp. 1150–1157.

- Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; Volume 1, pp. 886–893.

- Felzenszwalb, P.F.; Girshick, R.B.; McAllester, D.; Ramanan, D. Object detection with discriminatively trained part-based models. IEEE Trans. Pattern Anal. Mach. Intell. 2009, 32, 1627–1645.

- Malisiewicz, T.; Gupta, A.; Efros, A.A. Ensemble of exemplar-SVMs for object detection and beyond. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 89–96.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 24–27 June 2014; pp. 580–587.

- Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. arXiv 2015, arXiv:1506.01497.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 21–37.

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

- Elsken, T.; Metzen, J.H.; Hutter, F. Neural architecture search: A survey. arXiv 2018, arXiv:1808.05377.

- Real, E.; Aggarwal, A.; Huang, Y.; Le, Q.V. Regularized evolution for image classifier architecture search. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 21 January–1 February 2019; Volume 33, pp. 4780–4789.

- Zoph, B.; Cubuk, E.D.; Ghiasi, G.; Lin, T.Y.; Shlens, J.; Le, Q.V. Learning data augmentation strategies for object detection. arXiv 2019, arXiv:1906.11172.

- Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and efficient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10781–10790.

- Tan, M.; Le, Q.V. EfficientNet: Rethinking model scaling for convolutional neural networks. arXiv 2019, arXiv:1905.11946.

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 740–755.

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

- Misra, D. Mish: A self regularized non-monotonic neural activation function. arXiv 2019, arXiv:1908.08681.

- Ghiasi, G.; Lin, T.Y.; Le, Q.V. DropBlock: A regularization method for convolutional networks. arXiv 2018, arXiv:1810.12890.

- Yang, Z.; Wang, Z.; Xu, W.; He, X.; Wang, Z.; Yin, Z. Region-aware Random Erasing. In Proceedings of the 2019 IEEE 19th International Conference on Communication Technology (ICCT), Xi’an, China, 16–19 October 2019; pp. 1699–1703.

- Rezatofighi, H.; Tsoi, N.; Gwak, J.; Sadeghian, A.; Reid, I.; Savarese, S. Generalized intersection over union: A metric and a loss for bounding box regression. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 658–666.

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. Scaled-YOLOv4: Scaling Cross Stage Partial Network. arXiv 2020, arXiv:2011.08036.

- Säckinger, E.; Boser, B.E.; Bromley, J.M.; LeCun, Y.; Jackel, L.D. Application of the ANNA neural network chip to high-speed character recognition. IEEE Trans. Neural Netw. 1992, 3, 498–505.

- Jouppi, N.P.; Young, C.; Patil, N.; Patterson, D.; Agrawal, G.; Bajwa, R.; Bates, S.; Bhatia, S.; Boden, N.; Borchers, A.; et al. In-datacenter performance analysis of a tensor processing unit. In Proceedings of the 2017 ACM/IEEE 44th Annual International Symposium on Computer Architecture (ISCA), Toronto, ON, Canada, 24–28 June 2017; pp. 1–12.

- Schneider, D. Deeper and cheaper machine learning [top tech 2017]. IEEE Spectr. 2017, 54, 42–43.

- Sugiarto, I.; Liu, G.; Davidson, S.; Plana, L.A.; Furber, S.B. High performance computing on SpiNNaker neuromorphic platform: A case study for energy efficient image processing. In Proceedings of the 2016 IEEE 35th International Performance Computing and Communications Conference (IPCCC), Las Vegas, NV, USA, 9–11 December 2016; pp. 1–8.

- Verhelst, M.; Moons, B. Embedded deep neural network processing: Algorithmic and processor techniques bring deep learning to IoT and edge devices. IEEE Solid-State Circuits Mag. 2017, 9, 55–65.

- Lin, D.; Talathi, S.; Annapureddy, S. Fixed point quantization of deep convolutional networks. In Proceedings of the International Conference on Machine Learning, New York, NY, USA, 19–24 June 2016; pp. 2849–2858.

- Hubara, I.; Courbariaux, M.; Soudry, D.; El-Yaniv, R.; Bengio, Y. Binarized neural networks. In Proceedings of the 30th International Conference on Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; pp. 4107–4115.

- Cheng, J.; Wang, P.s.; Li, G.; Hu, Q.h.; Lu, H.q. Recent advances in efficient computation of deep convolutional neural networks. Front. Inf. Technol. Electron. Eng. 2018, 19, 64–77.

- Cheng, Y.; Wang, D.; Zhou, P.; Zhang, T. Model compression and acceleration for deep neural networks: The principles, progress, and challenges. IEEE Signal Process. Mag. 2018, 35, 126–136.

- Meier, L.; Tanskanen, P.; Heng, L.; Lee, G.H.; Fraundorfer, F.; Pollefeys, M. PIXHAWK: A micro aerial vehicle design for autonomous flight using onboard computer vision. Auton. Robot. 2012, 33, 21–39.

- Ebeid, E.; Skriver, M.; Jin, J. A survey on open-source flight control platforms of unmanned aerial vehicle. In Proceedings of the 2017 Euromicro Conference on Digital System Design (DSD), Vienna, Austria, 30 August–1 October 2017; pp. 396–402.

- Franklin, D.; Hariharapura, S.S.; Todd, S. Bringing Cloud-Native Agility to Edge AI Devices with the NVIDIA Jetson Xavier NX Developer Kit. 2020. Available online: https://developer.nvidia.com/blog/bringing-cloud-native-agility-to-edge-ai-with-jetson-xavier-nx/ (accessed on 15 December 2020).

- Upton, E.; Halfacree, G. Raspberry Pi User Guide; John Wiley & Sons: Hoboken, NJ, USA, 2014.

- Libutti, L.A.; Igual, F.D.; Pinuel, L.; De Giusti, L.; Naiouf, M. Benchmarking performance and power of USB accelerators for inference with MLPerf. In Proceedings of the 2nd Workshop on Accelerated Machine Learning (AccML), Valencia, Spain, 31 May 2020.

- Mittal, P.; Sharma, A.; Singh, R. Deep learning-based object detection in low-altitude UAV datasets: A survey. Image Vis. Comput. 2020, 104, 104046.

- Ammour, N.; Alhichri, H.; Bazi, Y.; Benjdira, B.; Alajlan, N.; Zuair, M. Deep learning approach for car detection in UAV imagery. Remote. Sens. 2017, 9, 312.

- Wang, X.; Cheng, P.; Liu, X.; Uzochukwu, B. Fast and accurate, convolutional neural network based approach for object detection from UAV. In Proceedings of the IECON 2018-44th Annual Conference of the IEEE Industrial Electronics Society, Washington, DC, USA, 21–23 October 2018; pp. 3171–3175.

- Zhang, X.; Izquierdo, E.; Chandramouli, K. Dense and small object detection in UAV vision based on cascade network. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Seoul, Korea, 27 October–2 November 2019.

- Tong, K.; Wu, Y.; Zhou, F. Recent advances in small object detection based on deep learning: A review. Image Vis. Comput. 2020, 97, 103910.

- Robicquet, A.; Sadeghian, A.; Alahi, A.; Savarese, S. Learning social etiquette: Human trajectory understanding in crowded scenes. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; pp. 549–565.

- Zhu, P.; Wen, L.; Du, D.; Bian, X.; Hu, Q.; Ling, H. Vision Meets Drones: Past, Present and Future. arXiv 2020, arXiv:2001.06303.

- Božić-Štulić, D.; Marušić, Ž.; Gotovac, S. Deep learning approach in aerial imagery for supporting land search and rescue missions. Int. J. Comput. Vis. 2019, 127, 1256–1278.

- Lo, H.S.; Wong, L.C.; Kwok, S.H.; Lee, Y.K.; Po, B.H.K.; Wong, C.Y.; Tam, N.F.Y.; Cheung, S.G. Field test of beach litter assessment by commercial aerial drone. Mar. Pollut. Bull. 2020, 151, 110823.

- Merlino, S.; Paterni, M.; Berton, A.; Massetti, L. Unmanned Aerial Vehicles for Debris Survey in Coastal Areas: Long-Term Monitoring Programme to Study Spatial and Temporal Accumulation of the Dynamics of Beached Marine Litter. Remote. Sens. 2020, 12, 1260.

- Nazerdeylami, A.; Majidi, B.; Movaghar, A. Autonomous litter surveying and human activity monitoring for governance intelligence in coastal eco-cyber-physical systems. Ocean. Coast. Manag. 2021, 200, 105478.

- Hong, J.; Fulton, M.; Sattar, J. A Generative Approach Towards Improved Robotic Detection of Marine Litter. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 10525–10531.

- Panwar, H.; Gupta, P.; Siddiqui, M.K.; Morales-Menendez, R.; Bhardwaj, P.; Sharma, S.; Sarker, I.H. AquaVision: Automating the detection of waste in water bodies using deep transfer learning. Case Stud. Chem. Environ. Eng. 2020, 2, 100026.

- Gorbachev, Y.; Fedorov, M.; Slavutin, I.; Tugarev, A.; Fatekhov, M.; Tarkan, Y. OpenVINO deep learning workbench: Comprehensive analysis and tuning of neural networks inference. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Seoul, Korea, 27–28 October 2019.

- Lee, J.; Chirkov, N.; Ignasheva, E.; Pisarchyk, Y.; Shieh, M.; Riccardi, F.; Sarokin, R.; Kulik, A.; Grundmann, M. On-device neural net inference with mobile GPUs. arXiv 2019, arXiv:1907.01989.

- Gray, A.; Gottbrath, C.; Olson, R.; Prasanna, S. Deploying Deep Neural Networks with NVIDIA TensorRT. 2017. Available online: https://developer.nvidia.com/blog/deploying-deep-learning-nvidia-tensorrt/ (accessed on 15 December 2020).

- Kaehler, A.; Bradski, G. Learning OpenCV 3: Computer Vision in C++ with the OpenCV Library; O’Reilly Media, Inc.: Newton, MA, USA, 2016.

- Rehder, J.; Nikolic, J.; Schneider, T.; Hinzmann, T.; Siegwart, R. Extending kalibr: Calibrating the extrinsics of multiple IMUs and of individual axes. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 4304–4311.

- Geng, K.; Chulin, N. Applications of multi-height sensors data fusion and fault-tolerant Kalman filter in integrated navigation system of UAV. Procedia Comput. Sci. 2017, 103, 231–238.

- Kanellakis, C.; Nikolakopoulos, G. Survey on computer vision for UAVs: Current developments and trends. J. Intell. Robot. Syst. 2017, 87, 141–168.

- Al-Kaff, A.; Martin, D.; Garcia, F.; de la Escalera, A.; Armingol, J.M. Survey of computer vision algorithms and applications for unmanned aerial vehicles. Expert Syst. Appl. 2018, 92, 447–463.

- Krishnamoorthi, R. Quantizing deep convolutional networks for efficient inference: A whitepaper. arXiv 2018, arXiv:1806.08342.

- Altawy, R.; Youssef, A.M. Security, privacy, and safety aspects of civilian drones: A survey. ACM Trans. Cyber-Phys. Syst. 2016, 1, 7.

- Lynch, S. OpenLitterMap.com – open data on plastic pollution with blockchain rewards (littercoin). Open Geospat. Data Softw. Stand. 2018, 3, 6.

| Designation | Description |
|---|---|
| | horizontal camera viewing angle [] |
| | vertical camera viewing angle [] |
| H | UAV altitude relative to ground level [m] |
| X | width of the terrain area observed by the camera [m] |
| Y | height of the terrain area observed by the camera [m] |
| w | image width [px] |
| h | image height [px] |
| | displacement of the object’s bounding box center relative to the image center along the x-axis [px] |
| | displacement of the object’s bounding box center relative to the image center along the y-axis [px] |
| | displacement of the object’s center relative to the observed area center along the x-axis [m] |
| | displacement of the object’s center relative to the observed area center along the y-axis [m] |
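The quantities in the notation table are what is needed to turn a detection's pixel position into a metric offset on the ground, which placing litter on a map requires. A minimal sketch, assuming a nadir-pointing, distortion-free pinhole camera over flat terrain: the observed area then has width X = 2H·tan(α/2) and height Y = 2H·tan(β/2), where α and β are the horizontal and vertical viewing angles, and a pixel offset scales linearly to metres. The symbols for the viewing angles and displacements were lost from the table, so α, β and all function and parameter names here are hypothetical, and the paper's exact formulation may differ. Converting the offset into geographic coordinates would further require the UAV's position and heading, which this sketch does not handle.

```python
import math
from typing import Tuple


def observed_area(altitude_m: float, hfov_deg: float, vfov_deg: float) -> Tuple[float, float]:
    """Width X and height Y [m] of the ground area seen by a downward-facing camera."""
    x = 2.0 * altitude_m * math.tan(math.radians(hfov_deg) / 2.0)
    y = 2.0 * altitude_m * math.tan(math.radians(vfov_deg) / 2.0)
    return x, y


def pixel_to_ground_offset(dx_px: float, dy_px: float,
                           altitude_m: float, hfov_deg: float, vfov_deg: float,
                           w_px: int, h_px: int) -> Tuple[float, float]:
    """Map a box-centre offset from the image centre [px] to an offset from the
    observed-area centre [m]; valid for a nadir pinhole camera over flat ground."""
    x_m, y_m = observed_area(altitude_m, hfov_deg, vfov_deg)
    return dx_px * x_m / w_px, dy_px * y_m / h_px
```

For example, at H = 10 m with 90° viewing angles the camera sees a 20 m × 20 m area, so in a 1000 × 1000 px image each pixel covers 2 cm, and an offset of 100 px maps to 2 m on the ground.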

| Metric | YOLOv4 FP32 | YOLOv4 FP16 | YOLOv4 INT8 | YOLOv3 FP32 | YOLOv3 FP16 | YOLOv3 INT8 | YOLOv4-CSP FP32 | YOLOv4-CSP FP16 | YOLOv4-CSP INT8 | YOLOv4-tiny-3l FP32 | YOLOv4-tiny-3l FP16 | YOLOv4-tiny-3l INT8 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| M1 | 0.785 | 0.784 | 0.166 | 0.694 | 0.694 | 0.075 | 0.736 | 0.745 | * | 0.615 | 0.614 | * |
| M2 | 0.476 | 0.473 | 0.101 | 0.342 | 0.340 | 0.040 | 0.424 | 0.424 | * | 0.336 | 0.335 | * |
| M3 | 0.282 | 0.280 | 0.014 | 0.152 | 0.152 | 0.000 | 0.219 | 0.219 | * | 0.137 | 0.144 | * |
| M4 | 0.546 | 0.542 | 0.128 | 0.416 | 0.415 | 0.040 | 0.498 | 0.498 | * | 0.422 | 0.417 | * |
| M5 | 0.611 | 0.603 | 0.332 | 0.373 | 0.373 | 0.274 | 0.561 | 0.559 | * | 0.335 | 0.335 | * |
| M6 | 0.330 | 0.334 | 0.011 | 0.218 | 0.216 | 0.000 | 0.276 | 0.275 | * | 0.218 | 0.226 | * |
| M7 | 0.593 | 0.590 | 0.137 | 0.472 | 0.472 | 0.046 | 0.558 | 0.558 | * | 0.472 | 0.470 | * |
| M8 | 0.639 | 0.635 | 0.352 | 0.400 | 0.400 | 0.304 | 0.591 | 0.591 | * | 0.361 | 0.361 | * |

| Metric | YOLOv4-tiny FP32 | YOLOv4-tiny FP16 | YOLOv4-tiny INT8 | YOLOv3-tiny FP32 | YOLOv3-tiny FP16 | YOLOv3-tiny INT8 | EfficientDet-d1 FP32 | EfficientDet-d1 FP16 | EfficientDet-d3 FP32 | EfficientDet-d3 FP16 | MobileNetV2 SSD FP32 | MobileNetV2 SSD FP16 | MobileNetV2 SSD INT8 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| M1 | 0.566 | 0.566 | 0.270 | 0.239 | 0.232 | 0.000 | 0.669 | 0.669 | 0.751 | 0.750 | 0.545 | * | 0.000 |
| M2 | 0.280 | 0.281 | 0.109 | 0.064 | 0.063 | 0.000 | 0.338 | 0.338 | 0.440 | 0.445 | 0.255 | * | 0.000 |
| M3 | 0.131 | 0.132 | 0.074 | 0.018 | 0.018 | 0.000 | 0.097 | 0.095 | 0.150 | 0.153 | 0.068 | * | 0.000 |
| M4 | 0.358 | 0.360 | 0.139 | 0.085 | 0.084 | 0.000 | 0.440 | 0.444 | 0.548 | 0.555 | 0.343 | * | 0.000 |
| M5 | 0.114 | 0.114 | 0.000 | 0.023 | 0.023 | 0.000 | 0.589 | 0.589 | 0.612 | 0.612 | 0.409 | * | 0.000 |
| M6 | 0.199 | 0.201 | 0.110 | 0.047 | 0.049 | 0.000 | 0.239 | 0.241 | 0.350 | 0.346 | 0.180 | * | 0.000 |
| M7 | 0.411 | 0.412 | 0.169 | 0.140 | 0.139 | 0.000 | 0.549 | 0.556 | 0.633 | 0.639 | 0.450 | * | 0.000 |
| M8 | 0.113 | 0.113 | 0.000 | 0.022 | 0.022 | 0.000 | 0.635 | 0.635 | 0.648 | 0.652 | 0.527 | * | 0.000 |
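In the accuracy tables, FP16 variants are nearly lossless, while several INT8 variants lose most of their accuracy. As background on what 8-bit quantization does, the following is a minimal sketch of affine (asymmetric) quantization in the general form described in Krishnamoorthi's whitepaper from the reference list. It is not the calibration procedure of TensorRT, OpenVINO or the Edge TPU compiler, and the function names are hypothetical; pure Python is used so the sketch runs without extra dependencies. Each real value is mapped to an 8-bit integer through a scale and a zero point, so the chosen range [x_min, x_max] fixes both what can be represented and the rounding error.

```python
from typing import List, Sequence, Tuple


def affine_params(x_min: float, x_max: float, num_bits: int = 8) -> Tuple[float, int]:
    """Scale and zero point mapping [x_min, x_max] onto unsigned integers 0..2**num_bits - 1."""
    # Extend the range to include 0 so that zero is represented exactly.
    x_min, x_max = min(x_min, 0.0), max(x_max, 0.0)
    qmax = 2 ** num_bits - 1
    scale = (x_max - x_min) / qmax if x_max > x_min else 1.0
    zero_point = int(round(-x_min / scale))
    return scale, zero_point


def quantize(xs: Sequence[float], scale: float, zero_point: int, num_bits: int = 8) -> List[int]:
    """Round to the nearest integer step and clamp to the representable range."""
    qmax = 2 ** num_bits - 1
    return [min(qmax, max(0, int(round(x / scale)) + zero_point)) for x in xs]


def dequantize(qs: Sequence[int], scale: float, zero_point: int) -> List[float]:
    """Map integers back to approximate real values."""
    return [(q - zero_point) * scale for q in qs]
```

Inside the range, the round-trip error is at most half a quantization step (`scale / 2`). A single outlier that widens [x_min, x_max] enlarges `scale`, and with it the error for every other value, which is why the choice of calibration data matters for post-training quantization.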

| Neural Network Variant | ATPI [s] | FPS |
|---|---|---|
| YOLOv4 FP32 | 0.1868 | 5.352 |
| YOLOv4 FP16 | 0.0519 | 19.262 |
| YOLOv4 INT8 | 0.0288 | 34.709 |
| YOLOv3 FP32 | 0.1883 | 5.317 |
| YOLOv3 FP16 | 0.0475 | 21.054 |
| YOLOv3 INT8 | 0.0235 | 42.513 |
| YOLOv4-CSP FP32 | 0.1554 | 6.435 |
| YOLOv4-CSP FP16 | 0.0429 | 23.308 |
| YOLOv4-CSP INT8 | * | |
| YOLOv4-tiny-3l FP32 | 0.0258 | 38.732 |
| YOLOv4-tiny-3l FP16 | 0.0086 | 116.560 |
| YOLOv4-tiny-3l INT8 | * | |
| YOLOv4-tiny FP32 | 0.0223 | 44.889 |
| YOLOv4-tiny FP16 | 0.0076 | 132.245 |
| YOLOv4-tiny INT8 | 0.0057 | 176.0286 |
| YOLOv3-tiny FP32 | 0.0195 | 51.158 |
| YOLOv3-tiny FP16 | 0.0074 | 135.350 |
| YOLOv3-tiny INT8 | 0.0045 | 222.589 |
| EfficientDet-d1 FP32 | 0.1143 | 8.745 |
| EfficientDet-d1 FP16 | 0.0609 | 16.415 |
| EfficientDet-d3 FP32 | 0.3547 | 2.819 |
| EfficientDet-d3 FP16 | 0.1805 | 5.540 |
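Reduced precision pays off unevenly across models. The speed-up of each FP16 and INT8 variant over FP32 follows directly from the ATPI column of the TensorRT table above (FP32, FP16 and INT8 variants, which per the methods were run on the Xavier NX). The snippet copies the values for the four models that have all three variants; the dictionary layout and function name are illustrative.

```python
from typing import Dict

# ATPI [s] for the TensorRT variants, copied from the table above.
ATPI: Dict[str, Dict[str, float]] = {
    "YOLOv4": {"FP32": 0.1868, "FP16": 0.0519, "INT8": 0.0288},
    "YOLOv3": {"FP32": 0.1883, "FP16": 0.0475, "INT8": 0.0235},
    "YOLOv4-tiny": {"FP32": 0.0223, "FP16": 0.0076, "INT8": 0.0057},
    "YOLOv3-tiny": {"FP32": 0.0195, "FP16": 0.0074, "INT8": 0.0045},
}


def speedups(table: Dict[str, Dict[str, float]]) -> Dict[str, Dict[str, float]]:
    """Speed-up of each reduced-precision variant over FP32 (ratio of ATPI values)."""
    return {
        model: {prec: round(times["FP32"] / t, 2) for prec, t in times.items() if prec != "FP32"}
        for model, times in table.items()
    }


for model, ratios in speedups(ATPI).items():
    print(model, ratios)
```

FP16 gives roughly 3.6× and 4.0× for YOLOv4 and YOLOv3 but only about 2.9× and 2.6× for their tiny variants; INT8 gives roughly 6.5×, 8.0×, 3.9× and 4.3× for the same four models.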

| Model | libedgetpu1-std, USB 3.0: ATPI [s] | libedgetpu1-std, USB 3.0: FPS | libedgetpu1-std, USB 2.0: ATPI [s] | libedgetpu1-std, USB 2.0: FPS | libedgetpu1-max, USB 3.0: ATPI [s] | libedgetpu1-max, USB 3.0: FPS | libedgetpu1-max, USB 2.0: ATPI [s] | libedgetpu1-max, USB 2.0: FPS |
|---|---|---|---|---|---|---|---|---|
| SSD | 0.0418 | 23.9266 | 0.0862 | 11.5996 | 0.0316 | 31.6766 | 0.0764 | 13.0832 |

| Model | USB 3.0: ATPI [s] | USB 3.0: FPS | USB 2.0: ATPI [s] | USB 2.0: FPS |
|---|---|---|---|---|
| YOLOv4 | 1.0273 | 0.9734 | 1.1863 | 0.8429 |
| YOLOv4-tiny | 0.0894 | 11.1885 | 0.1229 | 8.1388 |
| YOLOv3 | 1.1000 | 0.9091 | 1.2471 | 0.8019 |
| YOLOv3-tiny | 0.2064 | 4.8453 | 0.2729 | 3.6642 |

| Jetson Xavier NX, TensorFlow Lite on CPU (NVIDIA Carmel ARMv8.2) | ATPI [s] | FPS |
|---|---|---|
| EfficientDet-d1 | 1.9070 | 0.524 |
| EfficientDet-d3 | 6.9869 | 0.143 |
| YOLOv4 | 16.981 | 0.059 |
| YOLOv4-tiny | 1.6994 | 0.588 |
| SSD | 0.5691 | 1.757 |

| Raspberry Pi 4B, TensorFlow Lite on CPU (BCM2711, 4× Cortex-A72) | ATPI [s] | FPS |
|---|---|---|
| EfficientDet-d1 | 3.6274 | 0.2756 |
| EfficientDet-d3 | 11.8212 | 0.0846 |
| YOLOv4 | 14.4206 | 0.0693 |
| YOLOv4-tiny | 1.0848 | 0.9218 |
| SSD | 0.8236 | 1.2141 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Kraft, M.; Piechocki, M.; Ptak, B.; Walas, K. Autonomous, Onboard Vision-Based Trash and Litter Detection in Low Altitude Aerial Images Collected by an Unmanned Aerial Vehicle. Remote Sens. 2021, 13, 965. https://doi.org/10.3390/rs13050965
