1. Introduction

Cheatgrass (Bromus tectorum) was unintentionally introduced to North America from Eurasia in the late 19th century and is now found in every state in the contiguous US [1]. In the western US, it has become a dominant component in many shrubland and grassland ecosystems [2,3], resulting in an increase in fine fuels that can lead to a cycle of increased fire frequency, fire severity, and irreversible loss of native vegetation and wildlife habitat [4,5,6]. Detailed spatial information on the presence and abundance of cheatgrass is needed to better understand factors affecting its spread, assess fire risk, and identify and prioritize areas for invasion treatment and fuels management. However, such information is lacking for much of the ostensibly invaded area in the western US, with exceptions for parts of the Great Basin ecoregion [7,8,9,10,11,12,13].

Cheatgrass invasion has been especially devastating in sagebrush (Artemisia spp.) ecosystems, which are home to a variety of sagebrush-obligate species such as greater sage-grouse (Centrocercus urophasianus). Sage-grouse historically occurred throughout a vast (~125,000 km²) area of the western US and portions of southern Alberta and British Columbia, Canada, but now occupy approximately half that area [14]. Mapping cheatgrass with remote sensing over such a large area is a significant challenge because of diverse environmental conditions and the difficulty of obtaining enough ground-truth data to train predictive models. Downs et al. [15], which we revisit later in this section, mapped cheatgrass for the sage-grouse range with moderate success (71% accuracy). To establish context for the current work, we broadly review the approaches previously taken to map cheatgrass.

Remote sensing approaches to mapping cheatgrass distribution, percent cover, and dynamics (e.g., die-off, potential habitat, phenological metrics) generally fall into three categories: those focusing on spectral signatures or phenological indicators in overhead imagery [10,12,13,16,17,18,19,20,21]; those modeling the ecological niche of cheatgrass from the ranges of biophysical conditions where it is known to occur [22,23]; and those combining elements of the two approaches [7,8,11,15,24,25]. Much attention has been given to deriving phenological indicators of cheatgrass presence from spectral indices, such as the Normalized Difference Vegetation Index (NDVI), because the life cycle of cheatgrass differs from that of many native plant species in its North American range. Cheatgrass is a winter annual that may begin growth in the late fall and senesce in late spring, whereas many native dryland ecosystem plants begin growing in mid to late spring and continue growth through summer under favorable precipitation conditions [2,26]. Thus, cheatgrass can be identified indirectly by assessing pixel-level chronologies of NDVI [7,10,12,13,19]. Phenological differences between cheatgrass and non-target vegetation can be difficult to detect in drier or cooler years, which is why some have focused on using imagery from years when cheatgrass is more likely to show an amplified NDVI response to above-normal winter or early spring precipitation [10,12]. Furthermore, the strength of this response varies among landscapes because the timing of cheatgrass growth and senescence varies across ecological gradients such as elevation [12], soils [27], and climatic conditions [10,12,28].
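To make the phenological reasoning above concrete, the following minimal sketch computes NDVI from near-infrared and red reflectance and contrasts a spring value with a summer value for two pixels. The function `early_season_index`, the two-date comparison, and all reflectance values are illustrative assumptions, not the indicators or data used in the studies cited here.

```python
def ndvi(nir, red):
    """Normalized Difference Vegetation Index for a single pixel."""
    total = nir + red
    return 0.0 if total == 0 else (nir - red) / total


def early_season_index(spring_ndvi, summer_ndvi):
    """Hypothetical phenology indicator: spring NDVI minus summer NDVI.

    Positive values suggest vegetation that greens up early and senesces
    before summer, the pattern described for a winter annual.
    """
    return spring_ndvi - summer_ndvi


def index_for(pixel):
    """Apply the indicator to a pixel given as {"spring": (nir, red), "summer": (nir, red)}."""
    return early_season_index(ndvi(*pixel["spring"]), ndvi(*pixel["summer"]))


# Made-up reflectance pairs (NIR, red) chosen only to illustrate the contrast.
cheatgrass_like = {"spring": (0.40, 0.10), "summer": (0.25, 0.20)}
native_like = {"spring": (0.25, 0.18), "summer": (0.38, 0.12)}

print(index_for(cheatgrass_like) > 0)  # early green-up, early senescence
print(index_for(native_like) < 0)      # later green-up, growth into summer
```

In practice the sign and size of such a difference would depend on the year, elevation, soils, and climate, which is the variability the paragraph above describes.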

The separability of cheatgrass from other vegetation in overhead imagery (either by phenology or spectral characteristics) is also affected by its relative abundance within a pixel. Some have elected to use sensor platforms with a high revisit rate and coarse spatial resolution, such as MODIS [7,8,21,24,29] or AVHRR [10], which offer better potential for capturing within-season variation of cheatgrass growth but whose pixels contain more spectral heterogeneity due to their coarse spatial resolution. Others have chosen platforms such as Landsat-7 or -8 for their finer spatial resolution, but at the expense of less-frequent return cycles and the risk of missing peak NDVI [10,12,13,17,18,19,21]. Some have used multiple sensors to make independent predictions of cheatgrass at different geographic scales (e.g., [10]) or temporal periods (e.g., [12,21]). Recently, Harmonized Landsat and Sentinel-2 (HLS) data [30] were used to map the percent cover of invasive exotic annual grasses, the dominant component of which the authors assumed to be cheatgrass [31]. To our knowledge, combining concurrent data from multiple sensors with complementary return cycles, spatial resolution, and spectral information to map cheatgrass has not been attempted.

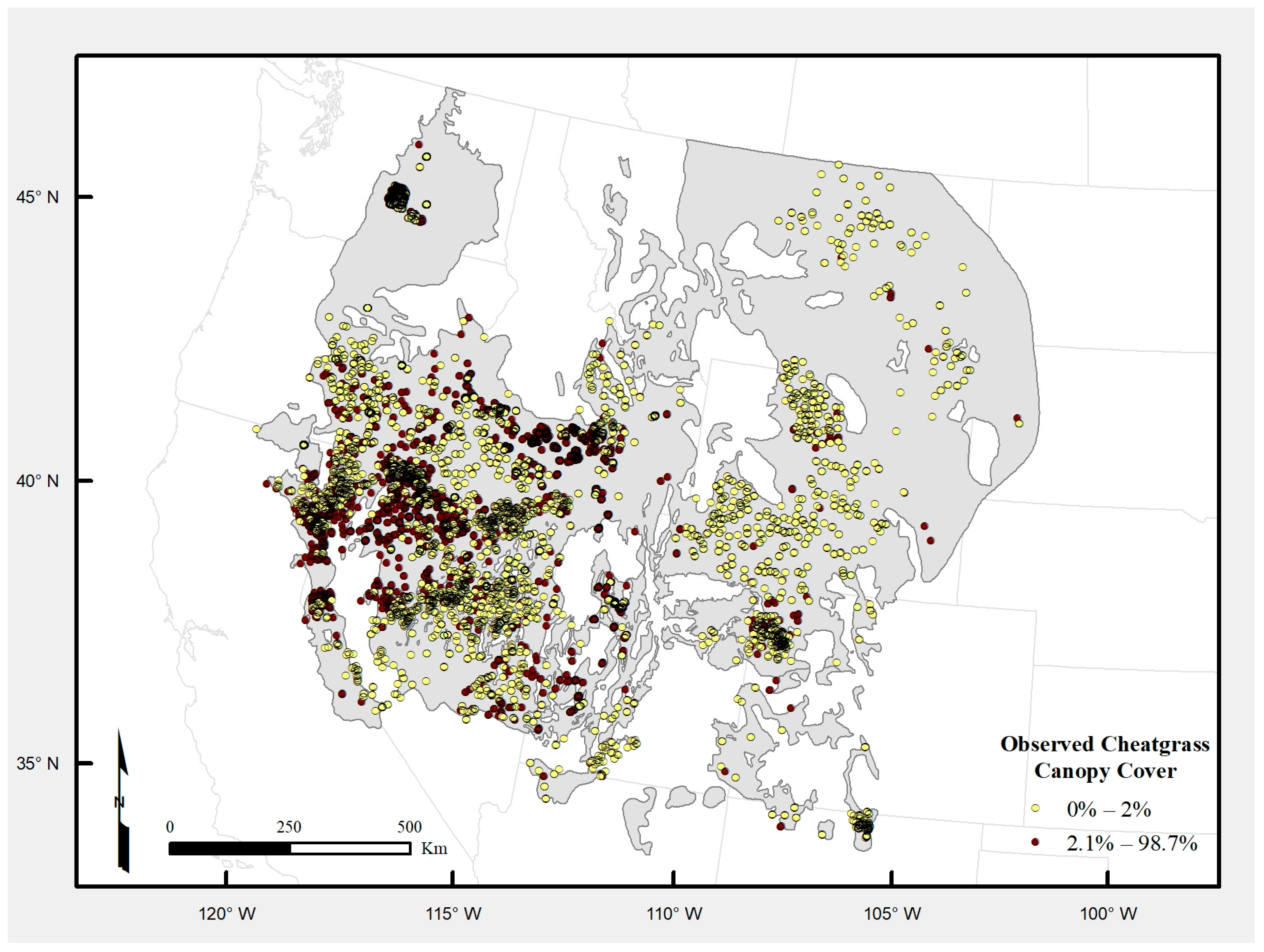

We revisit Downs et al. [15], which became an important source of data and motivation for this study. Their approach used cheatgrass observations compiled from unrelated field campaigns throughout the western US to train a Generalized Additive Model that considered both remotely sensed phenological data (NDVI) and a broad suite of biophysical factors such as soil-moisture temperature regimes, vegetation type, potential relative radiation, growing degree days, and climatic factors (see Section 2 for data descriptions). However, 37 of 48 potentially useful biophysical factors were excluded from their model due to high correlation. While their model achieved reasonable (71%) test accuracy, they expressed uncertainty about map accuracy east of the Continental Divide due to substantially fewer observations in that region. We hypothesize that a larger volume of remote sensing data and more robust machine learning approaches, including those in the deep learning domain, could improve on these results.

Deep learning (DL) algorithms have received broad attention for environmental remote sensing applications such as land cover mapping, environmental parameter retrieval, data fusion and downscaling [32], as well as other remote sensing tasks such as image preprocessing, classification, target recognition, and scene understanding [33,34,35,36]. Reasons for the rise in popularity include well-demonstrated improvements in performance, ability to derive highly discriminative features from complex data, scalability to a diverse range of Big Earth Data applications, and improved accessibility to the broader scientific community [32,35,36,37]. DL is also seen as a potentially powerful tool for extracting information more effectively from the rapidly increasing volumes of heterogeneous Earth Observation (EO) data [38,39,40].

Despite the advances with DL in remote sensing, its application to the field remains challenging, due in part to the smaller volume and diversity of labeled data compared with other domains and to the limited transferability of pre-trained models to remote sensing applications [34,37]. Zhang et al. [35] propose four key research topics for DL in remote sensing that remain largely unanswered: (1) maintaining the learning performance of DL methods with fewer adequate training samples; (2) dealing with the greater complexity of information and structure of remote sensing images; (3) transferring feature detectors learned by deep networks from one dataset to another; and (4) determining the proper depth of DL models for a given dataset. The availability of training data is a relevant concern in this study because the number of field observations is smaller than many DL practitioners prefer and smaller than is typically seen in the literature. This concern applies broadly to the use of DL in spatial modeling of native and nonnative species, where field data are often time-consuming and expensive to collect or may not be readily accessible from other sources [12].

Our goal is to derive more discriminative, higher-resolution models of cheatgrass occurrence using Downs et al. [15] as a starting point. We expand from there by using DL and more traditional machine learning approaches to combine concurrent time series of Landsat-7 ETM+ and MODIS data. Our first objective is to compare the performance of all model types and configurations to identify a single high-performing model configuration. The second objective is to construct a consensus-based ensemble of the preferred model to generate a 30-m ground sample distance (GSD) map of cheatgrass occurrence for the historic range of sage-grouse. The results of this study are intended to provide more detailed information than previously available on the extent of cheatgrass invasion in the western US to support multiple land management agencies’ efforts to mitigate impacts of cheatgrass invasion and facilitate further scientific investigation of factors affecting its spread.
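As a rough illustration of what a consensus-based ensemble might look like, the sketch below combines binary presence/absence predictions from several trained copies of one model by majority agreement. The text above does not specify the voting rule here, so the function `consensus_vote`, the `min_agreement` threshold, and the toy predictions are all assumptions made for illustration.

```python
def consensus_vote(member_predictions, min_agreement=0.5):
    """Combine binary predictions (1 = present, 0 = absent) from ensemble members.

    member_predictions: list of equal-length lists, one list per ensemble member.
    min_agreement: hypothetical threshold; a pixel is labeled present when the
        fraction of members predicting presence exceeds this value.
    Returns (labels, agreement), both lists with one entry per pixel.
    """
    if not member_predictions:
        raise ValueError("need at least one ensemble member")
    n_members = len(member_predictions)
    n_pixels = len(member_predictions[0])
    if any(len(p) != n_pixels for p in member_predictions):
        raise ValueError("all members must predict the same pixels")

    # Fraction of members voting "present" for each pixel.
    agreement = [sum(votes) / n_members for votes in zip(*member_predictions)]
    labels = [1 if a > min_agreement else 0 for a in agreement]
    return labels, agreement


# Toy example: five members, four pixels (values are made up).
members = [
    [1, 0, 1, 0],
    [1, 0, 0, 0],
    [1, 1, 1, 0],
    [0, 0, 1, 0],
    [1, 0, 0, 1],
]
labels, agreement = consensus_vote(members)
print(labels)     # [1, 0, 1, 0]
print(agreement)  # [0.8, 0.2, 0.6, 0.2]
```

Keeping the per-pixel agreement alongside the label is one way to convey how strongly the members concur, although whether such a layer was produced in this study is not stated in this section.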

4. Discussion

This study focused on developing more discriminative, higher-resolution models of cheatgrass occurrence for the historic range of the greater sage-grouse, using Downs et al. [15] as a baseline. In doing so, we improved overall accuracy by approximately 7 percentage points and increased spatial resolution from 250- to 30-m GSD relative to the previous study. We consider these improvements biologically significant because even minor differences in accuracy can result in large differences in predicted areal extents, especially for species that are widespread over large geographic areas like cheatgrass [67,68]. The accuracy of our accuracy-F1-balanced cheatgrass map (78.1%) is comparable to that of other studies that focused on much smaller regions in the Great Basin, Snake River Plain, and Colorado Plateau. For example, Bradley and Mustard [10] achieved 64% and 72% accuracy using AVHRR and Landsat-7, respectively. In a related study [12], accuracies ranged from 54% to 74% using Landsat MSS, TM, and ETM+. It is worth noting, however, that these studies predicted more monotypic areas heavily infested with cheatgrass, whereas our study focused on identifying areas with at least 2% canopy cover of cheatgrass. Singh and Glenn [17] achieved 77% accuracy in southern Idaho using Landsat. Bishop et al. [19] report higher model accuracies (85–92%) for seven national parks in the Colorado Plateau, although these estimates are based on the combined area of low and high probability of occurrence classes, where cheatgrass was considered present if it occurred at >10% canopy cover, which makes the accuracies difficult to interpret. In summary, we find our results encouraging compared to previous studies given the larger geographic scale and ecological diversity of our study area, as well as the lower threshold for detection of cheatgrass occurrence.
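The scale of these two improvements can be illustrated with simple arithmetic. The sketch below counts how many square pixels fit in one square kilometre at each GSD and estimates how much mapped area changes label for a given gain in overall accuracy. The 100,000 km² extent is a round hypothetical figure, and treating test accuracy as applying uniformly across the mapped area is a simplifying assumption.

```python
def pixels_per_km2(gsd_m):
    """Number of square pixels with side length gsd_m (metres) in one square kilometre."""
    return 1_000_000 / (gsd_m ** 2)


def area_shift_km2(total_area_km2, accuracy_gain_points):
    """Area whose label would change from wrong to right for a gain in overall
    accuracy, assuming accuracy applies uniformly over the mapped area."""
    return total_area_km2 * accuracy_gain_points / 100.0


coarse = pixels_per_km2(250)  # 16.0 pixels per km^2
fine = pixels_per_km2(30)     # about 1111 pixels per km^2
print(round(fine / coarse, 2))      # 69.44 times as many pixels per unit area
print(area_shift_km2(100_000, 7))   # 7000.0 km^2 for a hypothetical extent
```

Under these assumptions, a gain of a few percentage points over a range-wide map corresponds to thousands of square kilometres, which is why small accuracy differences matter for widespread species.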

Combining biophysical, ancillary, and satellite data generally improved the performance of the four model classes that we tested, lending further credence to approaches for mapping cheatgrass that incorporate ecological niche factors and remote sensing [7,8,11,15,24,25]. Looking more closely at the satellite data, we found that combining concurrent MODIS and Landsat-7 data generally improved model performance compared to using either sensor alone. We attribute this result to choosing sensors with complementary spectral-spatial characteristics for mapping cheatgrass and to selecting robust machine learning techniques that are well-suited for deriving discriminative features from multi-modal data. This approach is simpler than fusing satellite data and provides greater flexibility for choosing and testing sensors for a given application. However, we do not discourage data fusion or use of fused satellite data such as HLS [30], which has been shown to be useful for mapping exotic annual grass cover [31]. In fact, DL algorithms have shown promise for performing pixel-level image fusion [69]. As such, the combined modeling and data fusion capabilities of DL make it an intriguing tool for leveraging the rapidly increasing volume of EO imagery [38,39].

The similar performance among model architectures in this study underscores the importance of evaluating multiple analysis methods and variable combinations. In a meta-analysis of land cover mapping literature, accuracy differences due to algorithm choice were not found to be as large as those due to the type of data used [70]. While DL algorithms have proven superior in many remote sensing applications [35,36,37], their performance also hinges on having sufficiently large datasets to learn highly discriminative features in the data. However, what counts as “sufficiently large” is not well established and depends on the complexity of the problem and the learning algorithm [71]. Some consider this one of the major open research questions for DL in remote sensing [35]. We did observe a benefit to all models from adding 10% more data, suggesting that sample size may be a limiting factor in our cross-model comparison. The performance of DL models in this study is still encouraging, however, given that they performed comparably to LR and RF under a limited data regime. This is consistent with others who have shown good performance using DL for similar land use/land-cover applications [71,72,73,74,75]. Acquiring more field data was beyond the scope of this study but should be a priority for future research, given that more data have likely become available since the previous study.

We chose relatively simple DL methods as a logical first step to assess whether DL was appropriate for our application and might warrant investigating more computationally intensive methods such as convolutional neural networks (CNNs). CNNs are commonly used with overhead imagery in remote sensing because of their ability to take advantage of information in neighboring pixels [36]. However, CNNs may not perform well when the phenomena of interest occur in mixed pixels or exist in the sub-pixel space [74], as is the case with cheatgrass. Furthermore, the problem can be exacerbated if higher-resolution imagery is unavailable or significant cloud cover is present. These considerations and the greater ease of use of the DNN and JRNN methods factored into our decision to exclude CNNs from this study. However, we suggest the relative success of the DNN and JRNN methods does warrant future testing of CNN approaches, and a logical next step might be developing joint DNN-CNN or JRNN-CNN architectures for semi-supervised classification.