1. Introduction

Drones, also called unmanned aerial vehicles (UAVs), have become increasingly popular for both military and domestic use. However, owing to their agility, accessibility, and low cost, drones can fly over prohibited areas and therefore pose significant security risks. For example, remote-controlled drones have repeatedly violated the boundaries of protected areas such as airports and military bases. They may also be used to engage in illegal activities, such as invasion of privacy, smuggling, and industrial espionage [1,2]. Thus, there is a strong need to develop methods to detect drones and defend against them autonomously.

Many technologies have been used to address this problem, including those based on acoustic [3,4], lidar [5], radar [6], RF signal detection, and optical camera [7,8,9,10,11] sensors. Acoustic sensors [6,12], arranged in microphone arrays, can detect the characteristic sound of drone rotors. However, this may not work in noisy environments, such as airports. Drone surveillance using lidar remains questionable because of its poor cost-effectiveness, massive data output, sensitivity to clouds, and so on. Although radar has been used for several decades to detect flying vehicles, it still has difficulty detecting drones when their electromagnetic signature is low and their traveling velocity is slow [13,14]. RF-based detection, which intercepts the communications between drones and ground operators [14,15], has been widely used in the commercial market for jamming drones. However, this approach may fail when the drone follows a pre-programmed flight path, which does not require a ground operator. With the improvement of deep learning, specifically deep convolutional neural network (CNN) algorithms, tracking with optical cameras has become a leading approach to drone detection, as it offers several advantages, such as higher robustness and accuracy, longer range, and better interpretability [10].

AlexNet [16] first demonstrated the robustness and accuracy of deep CNNs for object detection. Since then, more and more end-to-end CNN-based network models have been proposed for the detection task, mainly because their features are learned rather than designed manually, which ultimately increases the generalization capacity of the model [17]. Two CNN-based network architectures are currently popular for object detection. The two-stage detector [18,19,20,21] is more accurate than the single-stage detector [22,23]; however, it is not ideal for real-time object detection because of its high computational cost. To improve accuracy while preserving the low computational cost of the one-stage detector, several improvements have been made to the one-stage detector network, including the anchor shape prior box [23], a loss that handles class imbalance [24], the cascade of different feature resolution layers [25], and the feature pyramid network (FPN) [26].

Compared with other machine learning algorithms, a deep CNN can discover more critical features of an object, and thus better detect and track targets captured by optical cameras [27]. However, detecting and tracking UAVs for surveillance with a pan-tilt-zoom (PTZ) camera is more challenging than with a static camera, because of the camera motion and the training parameters of the CNN model. When standard object detection models are used to detect and track a target, they may misdetect the target, generate false alarms, or fail to track the target because of their computational complexity and the response time of the PTZ motor.

Detecting and tracking a UAV with a PTZ camera while overcoming the challenges mentioned above requires an algorithm of low computational complexity. Many researchers have proposed drone detection methods using single-stage deep CNNs. For example, the airborne visual detection and tracking of cooperative UAVs by exploiting a deep CNN was proposed in [11]. In that method, each frame is divided into a square grid, and each grid square is used as input to a lightweight YOLO [23] network, in order to detect and track the drone. A deep learning-based strategy to detect and track drones using several cameras (DTDUSC) was proposed in [10]. Both methods [10,11] use lightweight YOLO as the backbone of the network model, taking advantage of its low computational cost. However, the detector may miss targets, mainly because of camera motion and targets lying below the horizon. Moreover, both network models struggle to detect small drones and may fail to generalize to drones with new or unusual aspect ratios or configurations. One of the main reasons is the use of anchor boxes, which constrain the minimum and maximum size of the bounding box. Thus, using anchors to detect a target reduces the detection accuracy, because the apparent size of an object varies with range, and increases the computational complexity, because post-processing is needed to filter the final bounding box from the candidate bounding boxes.

Researchers have also recently focused on anchor-free methods, such as CornerNet [28] and ExtremeNet [29]. CornerNet detects the upper-left and lower-right corners of the bounding box to determine the target’s position and size. However, the semantic information of these corners is comparatively weak, which makes them difficult to detect. In addition, post-processing is required to pair the two points that belong to the same target. ExtremeNet uses a state-of-the-art key point estimation framework to find the extreme points by predicting the target center. However, its computational cost is high and its detection accuracy is not significantly improved, because of the larger number of points involved. In addition, the network pays too much attention to edges, which can easily cause misdetections and false alarms. To address these issues, ref. [30] introduced CenterNet, which only needs to extract the center point of each object and requires no post-processing, effectively reducing false alarms and computational cost.

In addition to UAV detection, many researchers have recently proposed tracking algorithms using traditional or machine learning-based methods, which can be integrated with a detection algorithm. Simple online and real-time tracking (SORT), proposed in [31], is a simple and effective way of tracking multiple detected objects using the Kalman filter [32]. However, it pays little attention to the contextual information of consecutive video frames and was designed for online detection-tracking. Furthermore, the model fails to predict the target’s next state under occlusions, different viewpoints, and so on. To curb this problem, many researchers have proposed methods that improve the tracking accuracy by adding or using deep learning approaches. For example, ref. [33] used long short-term memory (LSTM) and proposed a real-time recurrent regression network for the visual tracking of generic objects (Re3). This method showed promising tracking results for real-time applications. However, it was not suitable for small targets in cluttered regions, generated false alarms, and failed under rapid movement. Compared with Re3, SORT is fast and straightforward, predicts the future target state better, and performs better on small targets under Gaussian noise.

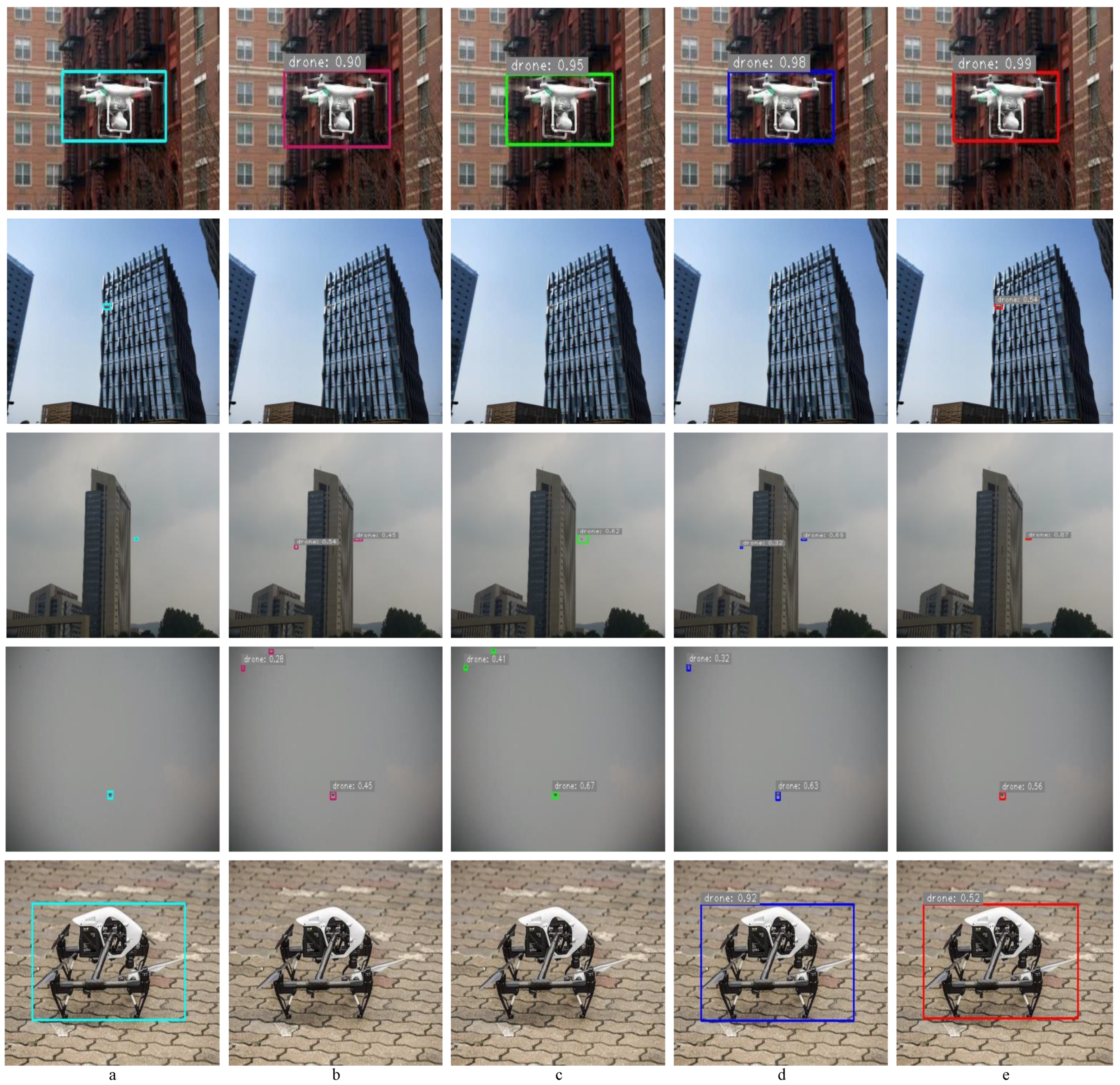

Overall, as shown in Figure 1, to effectively detect and track a drone in real time using a PTZ camera, we propose a novel CNN-based UAV detection algorithm integrated with a Kalman filter-based tracking algorithm. The detection method was designed with a small number of training parameters. Similarly, the tracking was designed by combining CNNs with the Kalman filter, in order to reduce the computational cost and enlarge the target region within a frame, thus improving the detection accuracy for small targets.

In the detection step, the key point estimation of CenterNet [30] and the network feature aggregation of FPN [26] motivated us to design a new CNN architecture called cross-scale feature aggregation CenterNet (CFACN), in order to obtain better performance with a small number of training parameters. We adopted the anchor-free key point estimation approach of CenterNet and modified it, thus improving the detection of small targets and reducing the computational complexity.

Figure 2 shows a comparison of anchor-based and key point-based detection approaches. In Figure 2a, the anchor boxes are generated to obtain a bounding box for the target, whereas Figure 2b shows the center key point-based method, using one key point for one object. Furthermore, in the tracking stage, we design a lightweight detector, region-of-interest cross-scale feature aggregation CenterNet (ROI-CFACN), which is used as a tracker with the Kalman filter, in order to improve detection and training accuracy.

The proposed method uses CFACN and ROI-CFACN with the Kalman filter to detect and track UAVs in video data. First, the CFACN or ROI-CFACN network extracts features from frames of different pixel sizes, in order to recognize the UAVs in a video frame and obtain their bounding boxes. Then, the bounding boxes pass through the region-of-interest scale-crop-resize (RSCR) method to obtain an ROI for the next frame. Finally, depending on the result obtained from either CFACN or ROI-CFACN, we use the Kalman filter algorithm to predict the UAV state or to update it directly with the CNN detection result. The main contributions of this paper are summarized as follows:

- (1) The novel CFACN and ROI-CFACN methods are proposed to detect UAVs from video data. These methods use cross-scale feature aggregation (CFA) to effectively estimate the center key point, size, and regression offset of the UAVs. In the estimation, CFA uses bi-directional information flows between different feature layers in up–down directions, in order to improve accuracy and efficiency. Furthermore, owing to its feedback flow, CFA helps the network to learn the effect of the upper layer on the lower layer (and vice versa);

- (2) The RSCR algorithm, which uses the contextual information of consecutive frames, is designed to merge the CFACN and ROI-CFACN methods. This algorithm passes information between the two proposed networks. It also helps the ROI-CFACN to focus on the ROI by removing the background effect and enlarging the small target. This algorithm not only improves the accuracy, but also further reduces the computational complexity of the method;

- (3) A dynamic state estimation approach is designed to track the UAV. The dynamic model uses an eight-component state vector for target state estimation and tracks the drone by using either simple detection-based online tracking, with the result obtained from the CNN, or a tracking-based detection approach, with the Kalman-estimated state.

The remainder of this paper is organized as follows: Section 2 introduces the proposed method in detail. In Section 3, the proposed method is evaluated. In Section 4, the discussion is presented. Finally, in Section 5, our conclusions are provided.

2. Proposed Method

For UAV detection applications using a PTZ camera, the overall latency is determined by three stages: detection, tracking, and the PTZ controller. The controller is beyond the scope of this article. The remaining stages, detection and tracking, are designed to work in real time and are implemented in parallel, with each stage running separately and exchanging data with the other.

As shown in Figure 1, the proposed method mainly consists of CFACN, RSCR, ROI-CFACN, and dynamic state estimation to detect and track UAVs. In the detection part, CFACN is used to obtain the bounding boxes of a target in the previous frame. The final candidate bounding box is selected according to its size or its prediction score, and is used to initialize the tracker and as the input to RSCR. In the current frame, the input frame of 512 × 512 pixels is cropped according to the ROI obtained from the RSCR, resized to 128 × 128 pixels, and passed to ROI-CFACN to predict the target bounding box, thus minimizing the target search region and enlarging small targets. The final candidate bounding box obtained from each frame is saved as the measured state. In the tracking part, a Bayesian approach using the Kalman filter algorithm predicts the drone’s state in the current frame and sends the necessary data to the controller, in order to control the rotating turret’s direction and speed. The CFACN, ROI-CFACN, RSCR, and dynamic state estimation methods are detailed in the following subsections.

2.1. CFACN and ROI-CFACN Architecture

CFACN takes a 512 × 512 pixel frame as input, in order to identify and localize the drone and propose candidate ROIs. ROI-CFACN, the second network, takes the cropped 128 × 128 pixel frame as input, in order to localize drones that occupy few pixels by reducing the background complexity. This strategy not only reduces the computational cost, but also limits the number of false alarms, which tend to occur when the target search is carried out across the entire image plane. To this end, we designed a CFA method on the basis of ResNet-18. The overall detector is key point-based and built on top of residual blocks, with CFA as the building block of the network.

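In the proposed method, consecutive frames from a video are taken as input to a fully convolutional CNN to generate a heatmap (to obtain the center point), size (height and width of the bounding box), and offset, as shown in Figure 3. First, assume $I \in \mathbb{R}^{W \times H \times 3}$ is an input frame obtained from the video, with width $W$ and height $H$, from which we expect to predict the key point heatmap $\hat{Y} \in [0, 1]^{\frac{W}{S} \times \frac{H}{S} \times C}$, where $S$ is the output stride and $C$ is the number of key point categories. In the generated heatmap, a value $\hat{Y}_{x,y,c} = 1$ is considered a detected key point; if $\hat{Y}_{x,y,c} = 0$, it is treated as background. If $(x_1, y_1, x_2, y_2)$ is the ground truth bounding box of the target, its center point is set to 1 at $\tilde{p} = \lfloor p / S \rfloor$, where $p = \left(\frac{x_1 + x_2}{2}, \frac{y_1 + y_2}{2}\right)$, and its offset at $p$ is $\frac{p}{S} - \tilde{p}$. The other information about the target is obtained from the key point, stride, and image information. All center points are obtained from the predicted $\hat{Y}$ and then regressed to obtain the target bounding box size. The value at each key point is used as the confidence score, and regression at its position is used to obtain the bounding box size. The position coordinates are then calculated as:

$$\left(\hat{x} + \delta\hat{x} - \frac{\hat{w}}{2},\; \hat{y} + \delta\hat{y} - \frac{\hat{h}}{2},\; \hat{x} + \delta\hat{x} + \frac{\hat{w}}{2},\; \hat{y} + \delta\hat{y} + \frac{\hat{h}}{2}\right)$$

where $(\hat{x}, \hat{y})$ is the detected center key point, $(\delta\hat{x}, \delta\hat{y})$ is the offset prediction, and $(\hat{w}, \hat{h})$ is the predicted target size.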
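The decoding step described above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the function name `decode_center`, the nested-list layout of the three prediction maps, the score threshold, and the 3 × 3 local-maximum test (the peak-picking commonly used with CenterNet-style heatmaps) are all choices made here for the example, and the size map is assumed to be expressed in output-grid units.

```python
def decode_center(heatmap, offset, size, stride=4, threshold=0.3):
    """Turn per-cell predictions into (x1, y1, x2, y2, score) boxes in input pixels.

    heatmap[y][x] -> center score in [0, 1]
    offset[y][x]  -> (dx, dy) sub-cell offset, in output-grid units
    size[y][x]    -> (w, h) box size, in output-grid units (assumption)
    """
    rows, cols = len(heatmap), len(heatmap[0])
    boxes = []
    for y in range(rows):
        for x in range(cols):
            score = heatmap[y][x]
            if score < threshold:
                continue  # treated as background
            # Keep the cell only if it is a local maximum of its 3x3 neighbourhood.
            neighbours = [
                heatmap[j][i]
                for j in range(max(0, y - 1), min(rows, y + 2))
                for i in range(max(0, x - 1), min(cols, x + 2))
            ]
            if score < max(neighbours):
                continue
            dx, dy = offset[y][x]
            w, h = size[y][x]
            cx, cy = x + dx, y + dy  # refined center on the output grid
            boxes.append((
                (cx - w / 2) * stride, (cy - h / 2) * stride,
                (cx + w / 2) * stride, (cy + h / 2) * stride,
                score,
            ))
    # Highest-scoring candidate first.
    return sorted(boxes, key=lambda b: b[4], reverse=True)
```

Because each object contributes a single peak, the candidate list is already short, which is why no separate box-suppression pass appears in the sketch.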

CenterNet-based models only detect the center point and obtain minimum target information, resulting in more misdetections and false alarms for UAV targets. We designed a modification of the key point estimation step of CenterNet and a new CNN architecture, called CFA, in order to extract more important information through its feedback connection, to solve this problem.

Cross-Scale Feature Aggregation

Conventional multi-scale feature aggregation aims to aggregate different feature layers. Formally, after features are extracted by the backbone network, we are given a list of multi-scale features $\vec{P}^{in} = (P^{in}_{l_1}, P^{in}_{l_2}, \ldots)$, as shown in Figure 4a, where $P^{in}_{l_i}$ represents the feature output at level $l_i$. Our objective is to find the transformation $f$ that can effectively aggregate different features and output a list of new features: $\vec{P}^{out} = f(\vec{P}^{in})$. As an example, Figure 4b shows the conventional top-down FPN approach [26]. The convolutional feature levels from layers 3–7 are taken as input features $\vec{P}^{in} = (P^{in}_3, \ldots, P^{in}_7)$, where $P^{in}_i$ represents a feature level with a resolution of $1/2^i$ of the input image.

For instance, if the input resolution is 512 × 512, then $P^{in}_3$ represents feature level 3 ($512/2^3 = 64$) with a resolution of 64 × 64. FPN aggregates multi-scale features in a top-down manner:

$$\begin{aligned} P^{out}_7 &= \mathrm{Conv}(P^{in}_7) \\ P^{out}_6 &= \mathrm{Conv}(P^{in}_6 + \mathrm{Resize}(P^{out}_7)) \\ &\;\;\vdots \\ P^{out}_3 &= \mathrm{Conv}(P^{in}_3 + \mathrm{Resize}(P^{out}_4)) \end{aligned}$$

where Resize is usually an upsampling or downsampling operation for feature matching and Conv is usually a convolutional operation for feature processing. This kind of conventional method generally limits the accuracy of the results, because information flows only one way between the layers. In addition, as shown in Figure 4c,d, deep multi-scale feature aggregation (MFA) has been proposed to improve the result. However, this network also requires more parameters, which makes its computational complexity high. As a concrete example, CenterNet uses different backbones to compare their accuracy and efficiency trade-offs, from which it has been shown that the deep MFA has better accuracy, but reduced efficiency. In order to improve both the accuracy and efficiency under limited computational resources, we propose a novel MFA- and CFA-based network, as shown in Figure 5.
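To make the one-way information flow of the conventional top-down scheme concrete, the following toy sketch aggregates a small feature pyramid in plain Python. It is illustrative only: the helper names (`upsample2x`, `add`, `fpn_top_down`, `level_resolution`) are hypothetical, Resize is stood in for by nearest-neighbour 2× upsampling, and Conv defaults to the identity as a placeholder for a learned convolution.

```python
def upsample2x(feature):
    """Nearest-neighbour 2x upsampling of a 2-D list (stands in for Resize)."""
    out = []
    for row in feature:
        wide = [v for v in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out


def add(a, b):
    """Element-wise sum of two equally sized 2-D lists."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def fpn_top_down(inputs, conv=lambda f: f):
    """Aggregate features from the coarsest level down to the finest.

    inputs: dict mapping level -> 2-D feature map, each level half the
            side length of the level below it.
    conv:   placeholder for a learned convolution (identity by default).
    """
    levels = sorted(inputs, reverse=True)  # coarsest level first
    outputs = {levels[0]: conv(inputs[levels[0]])}
    for upper, level in zip(levels, levels[1:]):
        outputs[level] = conv(add(inputs[level], upsample2x(outputs[upper])))
    return outputs


def level_resolution(input_size, level):
    """Side length of the feature map at a given pyramid level."""
    return input_size // 2 ** level
```

Note that each output depends only on its own input and on coarser levels; nothing computed at a fine level ever reaches a coarser one, which is the limitation the bi-directional design is meant to address.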

As shown in Figure 5a, in CenterNet, the network is designed to have a single information flow through the network. In order to improve the accuracy, multi-scale feature aggregation CenterNet (MFACN) was designed using the MFA parallel information flow network, as shown in Figure 5b. To further improve MFACN, the CFA bi-directional parallel flow network with a feedback architecture was designed, as shown in Figure 5c.

ResNet [34], shown in Figure 4a, is used as the backbone to extract features at different layers. We then use the ResNet outputs from layers 2 to 5 (i.e., P2, P3, P4, and P5) as inputs to CFA. In designing CFA, we first minimize the up–down nodes. Our aim is simple: to minimize the computational cost and improve the detection accuracy by minimizing the up-node aggregation between different layers, which are connected in cross-triangular form. This led us to design a simple network. Second, we increase the down-node aggregation by connecting the last intermediate layers (P4, P3, and P2) with the last convolution layer of each stage (P5, P4, and P3, respectively) in the CFA, as shown by the red connections in Figure 5c, in order to improve the detection accuracy. Furthermore, obtaining an improved detector through the down–up nodes with plain downsampling or upsampling layers (e.g., a pooling or upsampling layer) is not effective when the network layer is small. To address this problem, we use a convolutional layer with a stride of two as the downsampling layer and a transposed convolution layer as the upsampling layer, in order to better learn the correlation between the different sizes of output feature layers. The CFA is obtained as:

where

is the ReLu function, ConvSt is a convolution and downsampling operation with stride 2 for feature processing and feature matching, TransConv is a transposed convolution and upsampling operation for feature processing and feature matching, and

is the intermediate feature at level 4 on the top-down pathway, which shows the information flow of different features in an up–down direction.

The CFA is simple but effective, allowing for a higher-resolution output (stride 4). At the same time, we change the channels of the four transposed convolution layers of CFA to 512, 256, 128, and 64, respectively. The up-convolutional filters are initialized using the normal Gaussian distribution with mean zero.
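The spatial sizes involved in the stride-2 convolution and transposed convolution steps can be checked with the standard output-size formulas. The sketch below is only an arithmetic aid: the kernel sizes and paddings (3 × 3 with padding 1 for downsampling, 4 × 4 with padding 1 for upsampling) are assumptions chosen so that each step exactly halves or doubles the resolution, and the assumption that level $i$ of the backbone has stride $2^i$ follows the usual ResNet layout rather than anything stated in the text.

```python
def conv_out(n, kernel=3, stride=2, padding=1):
    """Output side length of a strided convolution on an n x n map."""
    return (n + 2 * padding - kernel) // stride + 1


def transposed_conv_out(n, kernel=4, stride=2, padding=1, output_padding=0):
    """Output side length of a transposed convolution on an n x n map."""
    return (n - 1) * stride - 2 * padding + kernel + output_padding


def backbone_sizes(input_size=512, levels=(2, 3, 4, 5)):
    """Side length of each backbone level, assuming level i has stride 2**i."""
    return {level: input_size // 2 ** level for level in levels}
```

Under these assumptions, a 512 × 512 input gives level sizes of 128, 64, 32, and 16, so an output at stride 4 corresponds to a 128 × 128 map, and one upsampling step takes a 16 × 16 map to 32 × 32.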

As shown in Figure 6, the base detector CFACN mainly consists of three parts: ResNet-18 as its backbone, CFA, and the prediction header network. Both the CFACN and ROI-CFACN frameworks include a 3 × 3 convolution, a 1 × 1 convolution, a key point heatmap prediction header, a regression prediction header, and a size prediction header.

ROI-CFACN is the lightweight version of CFACN; the difference is that the channel depths of the CFA layers in the aggregation network are 256, 128, 64, and 32, respectively. The backbone has the same layers and channel depths in both cases. As shown in Figure 6, for each framework, the backbone features are passed through the CFA, then through a separate 3 × 3 convolution, ReLU, and another 1 × 1 convolution. In total, CFACN and ROI-CFACN have a backbone, cross-scale aggregation, two 3 × 3 convolutional layers, one 1 × 1 convolutional layer, and three prediction layers.

In addition to the advantages of CFA—that is, key point estimation and post-processing without non-maximum suppression (NMS)—we also need to consider the contextual information of consecutive frames in the videos. Utilizing the contextual information of detected UAVs in CFACN and RSCR allows us to obtain the ROI of the next frame for ROI-CFACN. This further reduces the effect of background complexity and enlarges the UAV area to improve the accuracy and reduce the computational complexity. Then, ROI-CFACN is used to detect the UAV within the ROI obtained from the RSCR algorithm. However, for the ROI-CFACN to be effective, the base detector plays a significant role. If the accuracy of the base detector is low, ROI-CFACN cannot be used, and the method is not adequate. On the other hand, when the detection accuracy of the base detector is high, the method is more effective.

2.2. ROI Formulation

The final candidate bounding box obtained in frame t from the CFACN detection on the 512 × 512 pixel frame is passed through RSCR and used as an ROI in frame t + 1. However, using this ROI in ROI-CFACN raises two major issues. First, the target location in frame t does not always overlap the target in frame t + 1; second, the network input of ROI-CFACN has a fixed size. To solve these issues, we consider the maximum distance the drone could move between frames t and t + 1, thus widening our search region. To widen the search region and avoid a mismatch between the predicted bounding box and the ground truth, the final candidate bounding box is first scaled by a constant factor and set as the ROI. In our experiment, this factor was set to 2. The image is then cropped to the ROI and resized to 128 × 128 pixels. Finally, the resized image is used as input for the ROI-CFACN.
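The scale, crop, and resize steps can be sketched as follows. This is a hedged illustration rather than the RSCR algorithm itself: the function names (`rscr_roi`, `crop_resize`, `roi_to_frame`) are hypothetical, the box is scaled about its own center and clipped to the frame, the resize uses nearest-neighbour sampling on a 2-D list, and the last helper (mapping a box found in the crop back to full-frame coordinates) is an assumed convenience that the text does not describe.

```python
def rscr_roi(box, frame_w=512, frame_h=512, scale=2.0):
    """Scale a box (x1, y1, x2, y2) about its center and clip it to the frame."""
    x1, y1, x2, y2 = box
    cx, cy = (x1 + x2) / 2, (y1 + y2) / 2
    half_w, half_h = scale * (x2 - x1) / 2, scale * (y2 - y1) / 2
    return (max(0.0, cx - half_w), max(0.0, cy - half_h),
            min(float(frame_w), cx + half_w), min(float(frame_h), cy + half_h))


def crop_resize(frame, roi, out_size=128):
    """Nearest-neighbour crop-and-resize of a 2-D list to out_size x out_size."""
    x1, y1, x2, y2 = roi
    sx, sy = (x2 - x1) / out_size, (y2 - y1) / out_size
    max_y, max_x = len(frame) - 1, len(frame[0]) - 1
    return [
        [frame[min(max_y, int(y1 + (j + 0.5) * sy))][min(max_x, int(x1 + (i + 0.5) * sx))]
         for i in range(out_size)]
        for j in range(out_size)
    ]


def roi_to_frame(box, roi, out_size=128):
    """Map a box predicted inside the resized crop back to full-frame pixels."""
    x1, y1, x2, y2 = roi
    sx, sy = (x2 - x1) / out_size, (y2 - y1) / out_size
    bx1, by1, bx2, by2 = box
    return (x1 + bx1 * sx, y1 + by1 * sy, x1 + bx2 * sx, y1 + by2 * sy)
```

For example, a 20 × 10 pixel detection scaled by 2 becomes a 40 × 20 pixel ROI; after resizing to 128 × 128, the target covers far more input pixels than it did in the full 512 × 512 frame, which is the enlargement effect the method relies on.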

2.3. Tracking on the Current Image Plane

The goal of the tracker is to associate localized objects across a sequence of video frames. The tracking algorithm obtains the measurement state from either CFACN or ROI-CFACN, in order to estimate the motion and related data. In our work, we modified the dynamic model of SORT, based on the Kalman filter algorithm, to find the optimal state estimate from the input state, previous state estimate, and mathematical models. SORT works best for linear systems with Gaussian processes. However, SORT may fail in many applications because of occlusions, different viewpoints, and so on. To improve this, the Kalman filter is combined with lightweight deep learning and the ROI. When the state has a Gaussian distribution, the predicted state is used to find the ROI; otherwise, a deep learning framework is used to recover the point of view. Overall, in the tracking algorithm, the Bayesian optimization method, which returns more accurate results than a single observed measurement, is used first. Whenever a new measurement comes in, the filter updates the estimate such that the error between the estimated state and the measured state is minimized. The recursive cycle of state measurement, prediction, and update makes it a useful method under the estimation accuracy and time constraints of the considered application.

Secondly, to avoid the constant aspect ratio that SORT assumes for the target bounding box, the state of a drone in the dynamic model is defined in an eight-dimensional state space, as shown in Equation (2):

$$\mathbf{x} = [x, y, w, h, \dot{x}, \dot{y}, \dot{w}, \dot{h}]^{T} \qquad (2)$$

where $(x, y)$ represents the center of each target bounding box; $(w, h)$ represents the width and height of each target bounding box; and $(\dot{x}, \dot{y}, \dot{w}, \dot{h})$ represent their respective velocities. When either of the detectors detects the target location and the detection is associated with a target, the velocity components are solved optimally by the Kalman filter framework, the detected bounding box is used to update the target state, and the turret rotation is adjusted accordingly (i.e., online detection-tracking). If no detection is associated with the target, its state is simply predicted (without correction) using the linear velocity model (i.e., tracking-detection).
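A constant-velocity Kalman filter over the four box components and their velocities can be sketched without any matrix library. The sketch rests on one explicit simplification that is not taken from the text: process and measurement noise are assumed independent across the four components, in which case the eight-state filter factors into four identical two-state (value, rate) filters. The class names `Axis` and `BoxTracker` and all noise values are hypothetical.

```python
class Axis:
    """Constant-velocity Kalman filter for one box component and its rate."""

    def __init__(self, value, var_pos=10.0, var_vel=100.0, q=0.01, r=1.0):
        self.p, self.v = float(value), 0.0          # value and velocity
        self.P = [[var_pos, 0.0], [0.0, var_vel]]   # 2x2 state covariance
        self.q, self.r = q, r                       # process / measurement noise

    def predict(self, dt=1.0):
        (a, b), (c, d) = self.P
        self.p += dt * self.v                       # linear velocity model
        # P <- F P F^T + q I, with F = [[1, dt], [0, 1]]
        self.P = [[a + dt * (b + c) + dt * dt * d + self.q, b + dt * d],
                  [c + dt * d, d + self.q]]
        return self.p

    def update(self, z):
        (a, b), (c, d) = self.P
        s = a + self.r                              # innovation variance
        k0, k1 = a / s, c / s                       # Kalman gain for H = [1, 0]
        y = z - self.p                              # innovation
        self.p += k0 * y
        self.v += k1 * y
        # P <- (I - K H) P
        self.P = [[(1 - k0) * a, (1 - k0) * b], [c - k1 * a, d - k1 * b]]


class BoxTracker:
    """Tracks (cx, cy, w, h); velocities are estimated, never measured."""

    def __init__(self, box):
        self.axes = [Axis(v) for v in box]

    def step(self, detection=None):
        predicted = tuple(ax.predict() for ax in self.axes)
        if detection is None:
            return predicted                        # prediction only, no correction
        for ax, z in zip(self.axes, detection):
            ax.update(z)
        return tuple(ax.p for ax in self.axes)
```

Calling `step(detection)` corresponds to the online detection-tracking case, while `step()` with no argument corresponds to the tracking-detection case, where the state coasts on the estimated velocity. Because width and height are separate state components, the aspect ratio is free to change over time.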

Finally, to avoid an increase in computational cost when no detection is associated with a target, a recovery time is allowed for the ROI-CFACN to detect the target within five consecutive frames in the tracking-detection state. During the recovery time, if the ROI-CFACN does not obtain the target bounding box, the whole algorithm is reset and the CFACN method is re-adopted.
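The switching between the two detectors can be pictured as a small state machine. The sketch below is one possible reading of the recovery rule, not a transcription of the authors' code: the class name `ModeSwitch` is hypothetical, and it is assumed here that the reset to the full-frame detector happens on the fifth consecutive miss of the ROI detector.

```python
FULL_FRAME, ROI = "CFACN", "ROI-CFACN"


class ModeSwitch:
    """Chooses which detector runs on the next frame."""

    def __init__(self, max_misses=5):
        self.mode = FULL_FRAME      # start by searching the whole frame
        self.misses = 0             # consecutive misses of the ROI detector
        self.max_misses = max_misses

    def observe(self, detected):
        """Record whether the active detector found the target.

        Returns the name of the detector to use on the next frame.
        """
        if detected:
            # A detection yields an ROI, so the lightweight detector takes over.
            self.mode, self.misses = ROI, 0
        elif self.mode == ROI:
            self.misses += 1
            if self.misses >= self.max_misses:
                # Recovery time exhausted: reset and search the full frame again.
                self.mode, self.misses = FULL_FRAME, 0
        return self.mode
```

While the ROI detector is missing the target, the ROI for the next frame would come from the Kalman prediction instead of a fresh detection, so the more expensive full-frame search is only paid for after the recovery window has elapsed.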

Our approach is quite general, as it can handle a variety of UAV detection situations [35,36]. Empirical performance evaluations established the advantages of the proposed method over other state-of-the-art algorithms, as detailed below.