The Urban Observatory: A Multi-Modal Imaging Platform for the Study of Dynamics in Complex Urban Systems

Abstract

1. Introduction

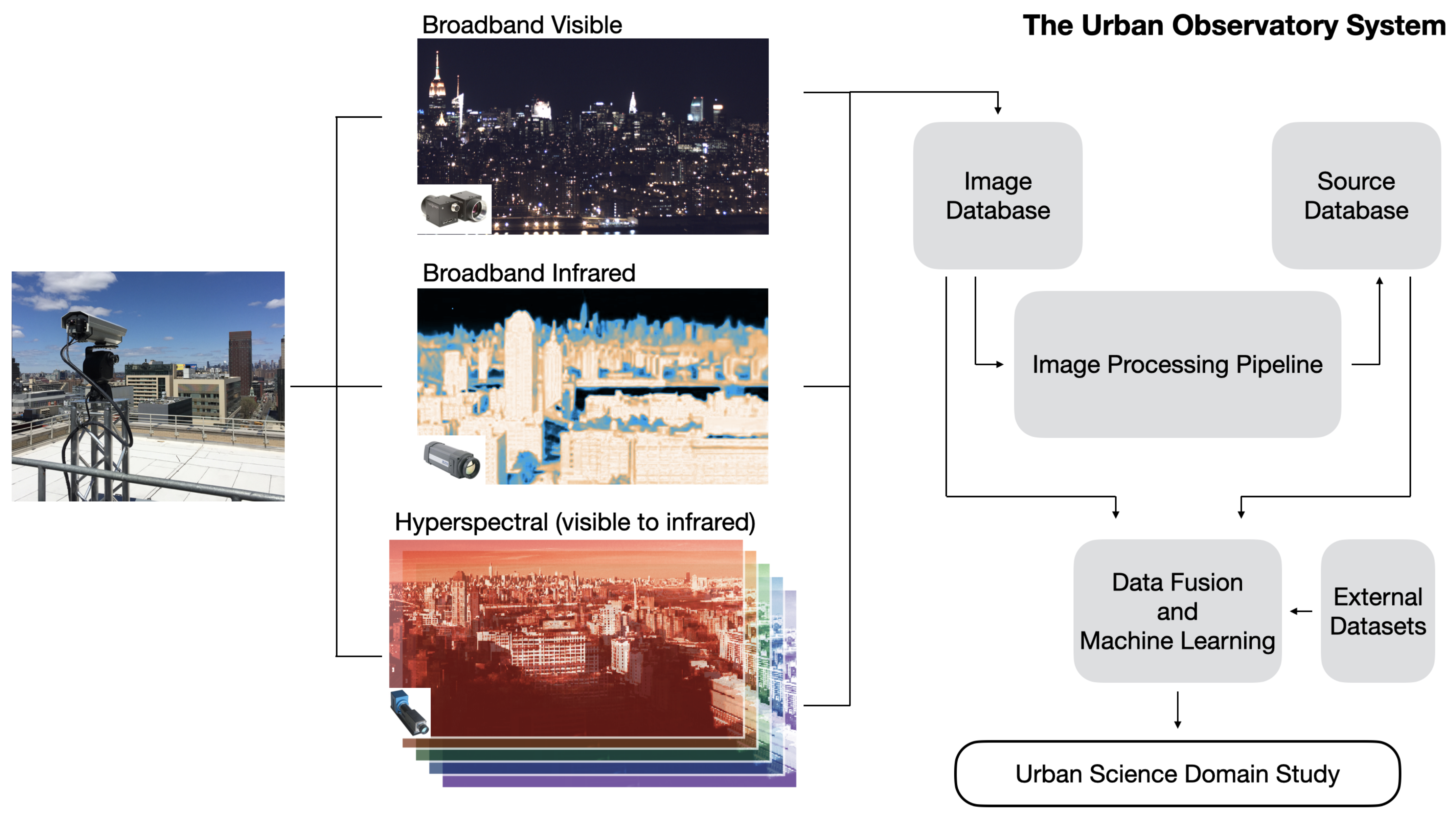

2. Instrumentation

- Drives the imaging devices remotely;

- Pulls and queues data transfer from the deployed devices;

- Performs signal processing, computer vision, and machine learning tasks on the images and associated products; and

- Holds and serves imaging and source databases associated with the data collection and analysis.
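The backend's role of pulling and queuing data transfers from deployed devices can be illustrated with a minimal sketch. It assumes a hypothetical `fetch(device_id, path)` callable that returns the file bytes together with a SHA-256 digest computed on the edge device. `drain_transfer_queue` and `max_attempts` are likewise illustrative names, not the UO's actual software. Jobs are served first-in, first-out. Any transfer that fails or does not pass checksum verification is re-queued behind the remaining jobs until a retry budget is used up.

```python
import hashlib
from collections import deque
from typing import Callable, Deque, Dict, List, Tuple

# Hypothetical transfer job: (device_id, remote_path) on an edge mini-computer.
Job = Tuple[str, str]


def drain_transfer_queue(
    jobs: List[Job],
    fetch: Callable[[str, str], Tuple[bytes, str]],
    max_attempts: int = 3,
) -> Tuple[Dict[Job, bytes], List[Job]]:
    """Pull files from edge devices in FIFO order, verifying checksums.

    `fetch(device_id, path)` is an assumed callable that returns the file
    bytes and the SHA-256 hex digest computed on the device. It may raise
    OSError on a network failure.
    """
    queue: Deque[Tuple[Job, int]] = deque((job, 0) for job in jobs)
    received: Dict[Job, bytes] = {}
    failed: List[Job] = []
    while queue:
        job, attempts = queue.popleft()
        try:
            payload, remote_digest = fetch(*job)
            # Reject payloads corrupted in transit.
            if hashlib.sha256(payload).hexdigest() != remote_digest:
                raise OSError("checksum mismatch")
            received[job] = payload
        except OSError:
            if attempts + 1 < max_attempts:
                # Re-queue at the tail so other devices are not blocked.
                queue.append((job, attempts + 1))
            else:
                failed.append(job)
    return received, failed
```

Re-queuing at the tail, rather than retrying immediately, keeps one unreachable device from stalling transfers from the others. A production system would likely also persist the queue so that pending jobs survive a restart.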

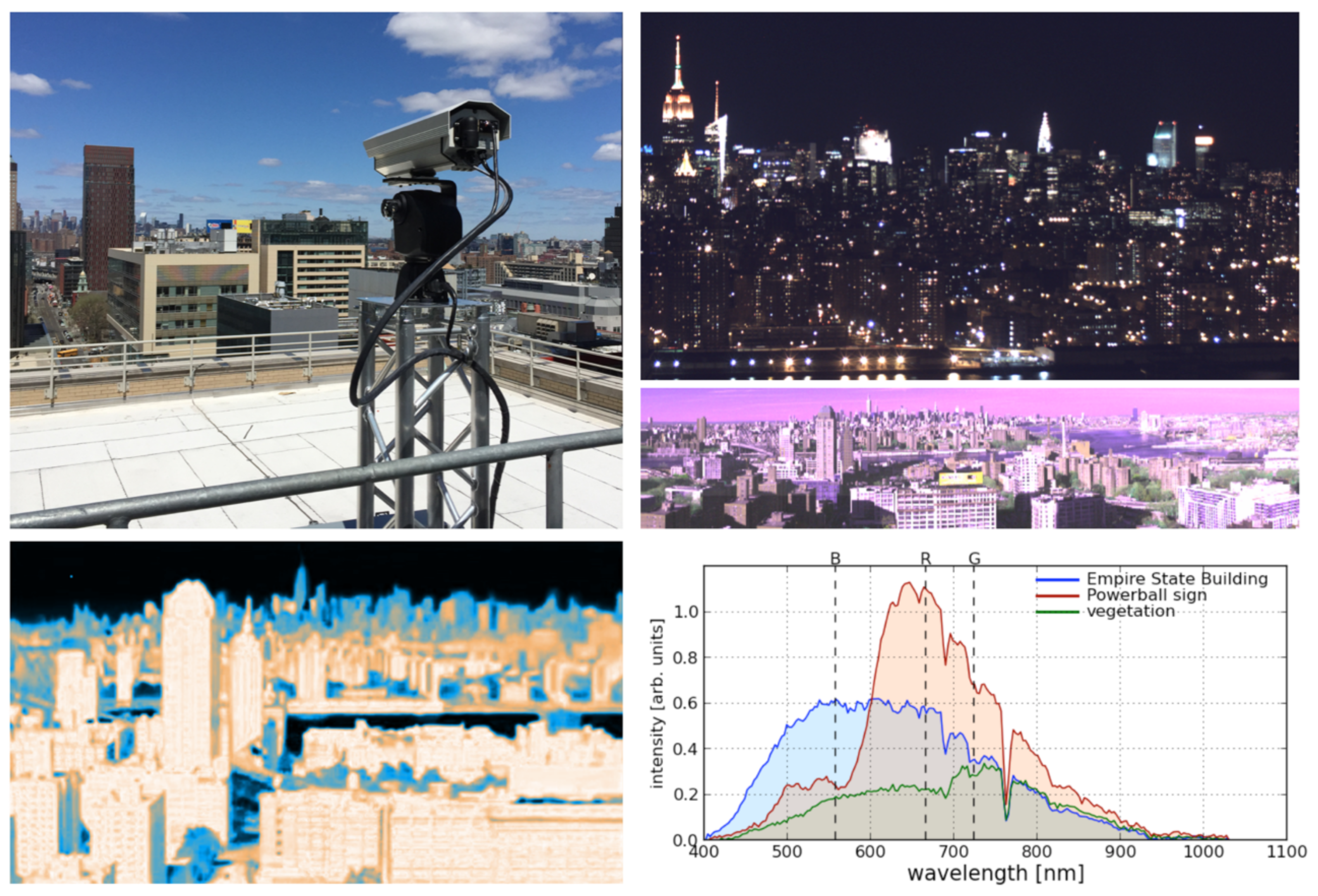

2.1. Broadband Visible

2.2. Broadband Infrared

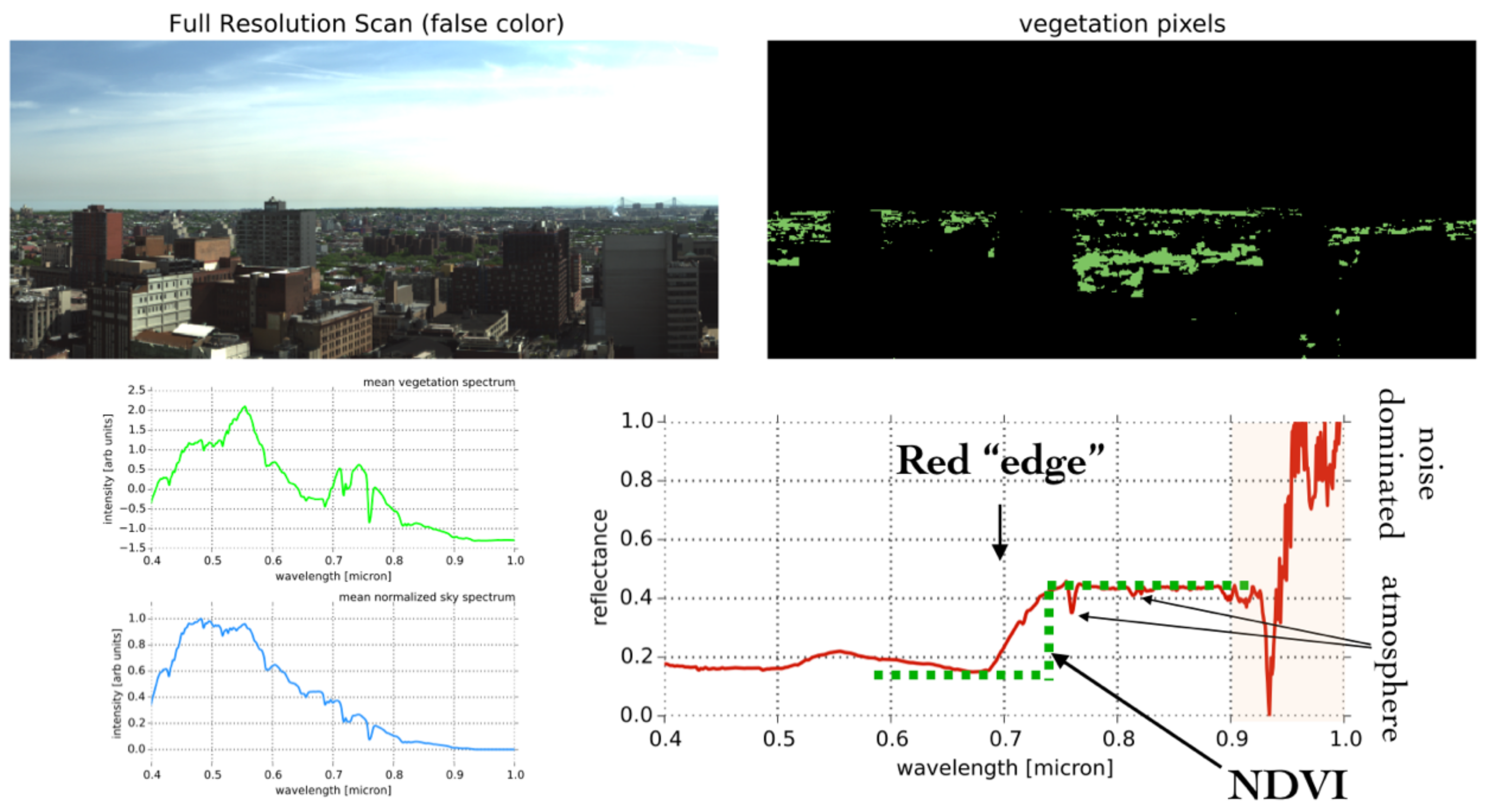

2.3. Visible and Near Infrared Hyperspectral

2.4. Long Wave Infrared Hyperspectral

2.5. Data Fusion

2.6. Privacy Protections and Ethical Considerations

3. Urban Science and Domains

3.1. Energy

3.1.1. Remote Energy Monitoring

3.1.2. Lighting Technologies and End-Use

3.1.3. Grid Stability and Phase

3.1.4. Building Thermography at Scale

3.2. Environment

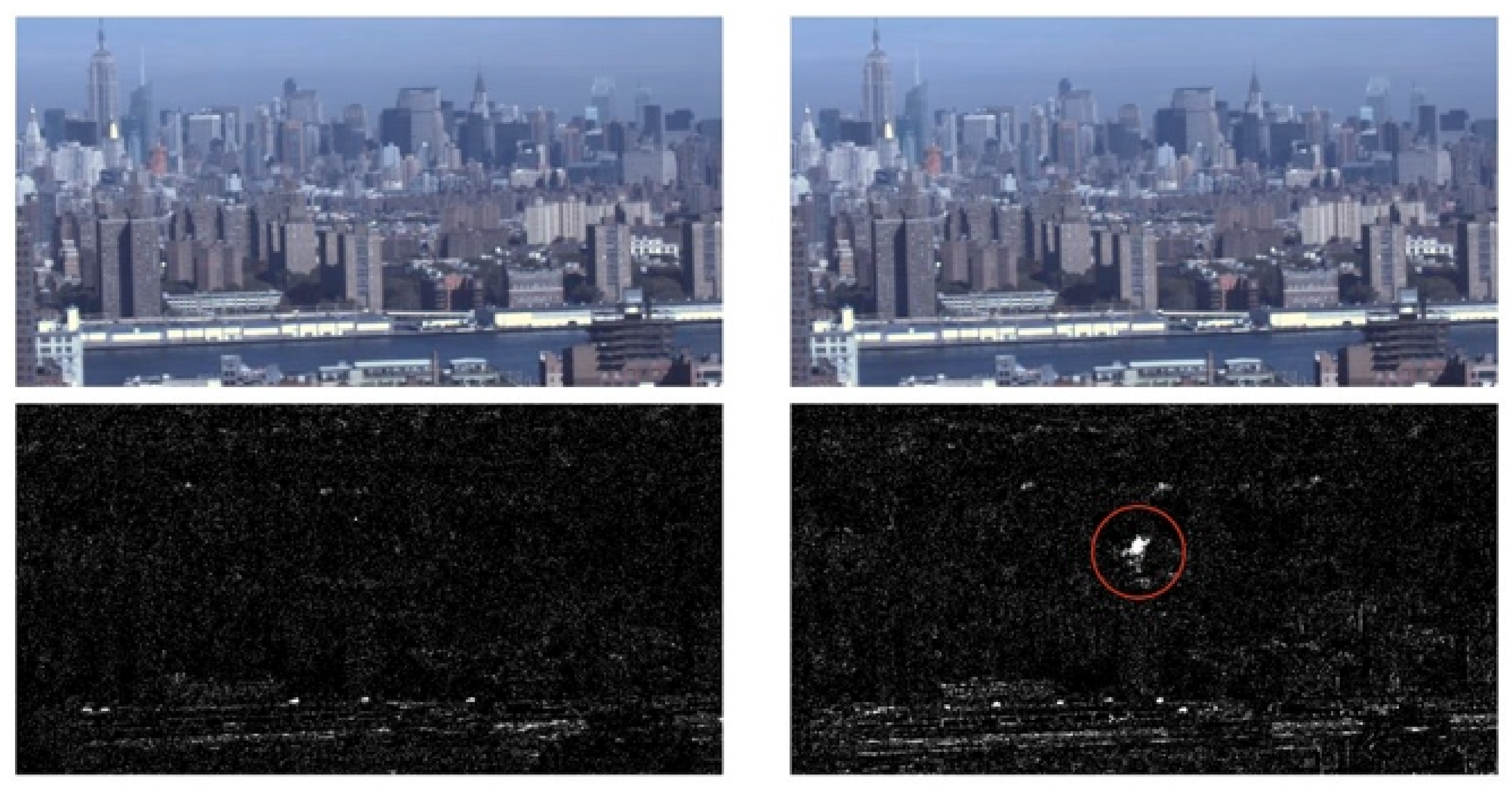

3.2.1. Soot Plumes and Steam Venting

3.2.2. Remote Speciation of Pollution Plumes

3.2.3. Urban Vegetative Health

3.2.4. Ecological Impacts of Light Pollution

3.3. Human Factors

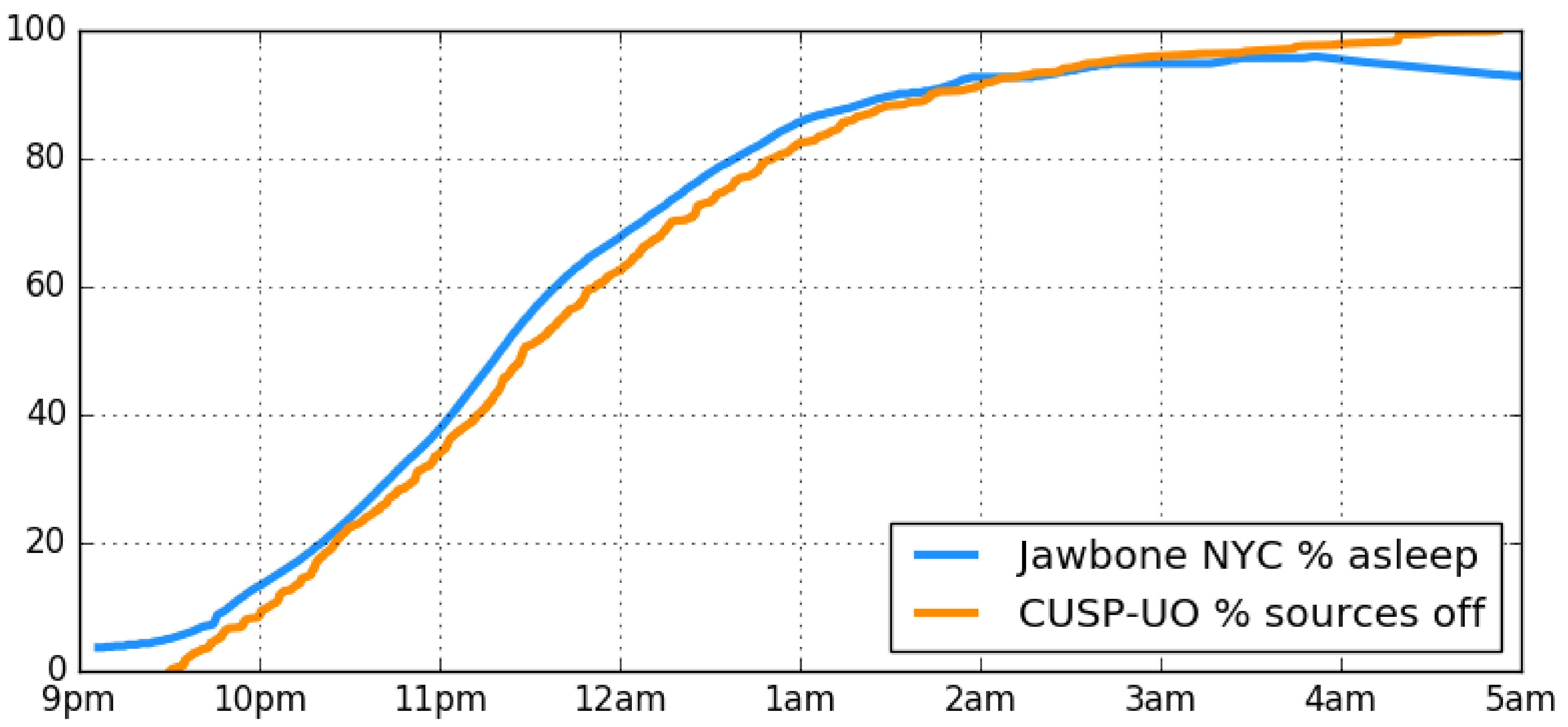

3.3.1. Patterns of Lighting Activity and Circadian Phase

3.3.2. Technology Adoption and Rebound

4. Discussion

- Temporal granularity: the cadence provided by the UO is not currently possible (or practical) for any spaceborne or airborne platform. However, the timescales accessible to the UO align with patterns of life that appear in other urban data sets (energy consumption, circadian rhythms, heating/cooling, technological choice, vegetative health, avian migration, etc.), enabling the fusion of these data to inform the time-dependent dynamical properties of urban systems.

- Oblique observational angles: even low-lying cities have a significant vertical component, and purely downward-facing platforms are not able to capture these features. This is particularly important for several of the indicators of lived experience described in Section 3, such as light pollution, whose effects (e.g., circadian rhythm disruption, sky glow, and impacts on migratory species) are due to light emitted “out” or “down” as opposed to “up”, or the variation in heating and cooling properties of multi-floor buildings as a function of height within the building.
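One way to fuse UO imaging with another urban data set is to resample a source's light curve onto the cadence of the external data. The sketch below assumes brightness samples from a single source at the 0.1 Hz broadband cadence, with timestamps in Unix seconds. It also assumes a hypothetical hourly external series, such as metered energy consumption. It averages the imaging samples into hourly bins and computes a Pearson correlation over the hours the two series share. The function names and the choice of Pearson correlation are illustrative and are not the UO's published method.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, List, Sequence, Tuple


def hourly_means(samples: Sequence[Tuple[float, float]]) -> Dict[int, float]:
    """Average (unix_time_s, brightness) samples into whole-hour bins."""
    bins: Dict[int, List[float]] = defaultdict(list)
    for t, value in samples:
        bins[int(t // 3600)].append(value)  # integer hour index since epoch
    return {hour: mean(vals) for hour, vals in sorted(bins.items())}


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation undefined for a constant series")
    return sxy / (sxx * syy) ** 0.5


def align_and_correlate(imaging: Dict[int, float], external: Dict[int, float]) -> float:
    """Correlate two hourly series over the hours present in both."""
    common = sorted(imaging.keys() & external.keys())
    if len(common) < 2:
        raise ValueError("need at least two overlapping hours")
    return pearson([imaging[h] for h in common], [external[h] for h in common])
```

At 0.1 Hz, each complete hour contributes 360 samples, so hourly averaging also suppresses frame-to-frame noise. Partial hours at the ends of a record contribute fewer samples and might be dropped or weighted in practice.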

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Backend Infrastructure

- Camera control devices: each imaging device is equipped with a mini-computer that opens a direct link with the camera itself. This machine communicates directly with the camera, lens, and other peripherals and issues image acquisition commands. In certain instances, it can also perform edge computations such as compression or sub-sampling of the data. Acquired data may be saved temporarily to disk on this machine for buffered transfer over an encrypted session back through the gateway server, or written directly to bulk data storage.

- Gateway server: the main communications hub between our computing platform and the deployed instrumentation is a gateway server that operates on a publish-subscribe (pub/sub) model, issuing scheduled commands to the edge mini-computers. This hub is also responsible for pulling data from the deployed devices and pushing it to bulk data storage. It also serves as the firewalled gateway through which UO users connect remotely to the databases on our computing platform.

- Bulk data storage: at full operational capacity, a UO site acquires roughly 2–3 TB per day. A site consists of a broadband visible camera operating at 0.1 Hz, a broadband infrared camera operating at 0.1 Hz, a DSLR operating in video mode, and a VNIR hyperspectral camera operating at 10⁻³ Hz. This data rate necessitates careful data buffering and transfer protocols to minimize packet loss from the remote devices. It also requires large bulk data storage with an appropriate catalog for the imaging data. This ∼PB-scale storage server is connected to our computing servers via NFS (Network File System) for computational speed. It also hosts parallel source catalogs that store information extracted from the data.

- Computing server: our main computing server for processing UO data is a dedicated >100-core machine. It is primarily tasked with processing pipelines, including registration, image correction, and source extraction. We have designed our own custom platform-as-a-service interface that allows UO users to interact with the data while background data processing and cataloging tasks run continuously.

- GPU mini-cluster: several of the data processing tasks described in Section 3 require building and training machine learning models with large numbers of parameters, including convolutional neural networks. For these tasks, we use a GPU mini-cluster that is directly connected to our main computing server. It is continuously fed streaming input data from which objects and object features are extracted.
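The storage figures quoted for bulk data storage can be checked with simple arithmetic. This is a minimal sketch that assumes decimal units (1 TB = 10¹² bytes). Per-frame sizes are not given here, so the sketch takes them as caller-supplied parameters. At these rates, a 0.1 Hz camera produces 8,640 frames per day and the 10⁻³ Hz hyperspectral camera produces 86.4 scans per day. Moving 2–3 TB in 24 hours requires a sustained average throughput of roughly 185–278 Mbit/s. The function names are hypothetical.

```python
from typing import Dict, Tuple

SECONDS_PER_DAY = 86_400
BYTES_PER_TB = 1e12  # decimal terabyte (assumption)


def frames_per_day(rate_hz: float) -> float:
    """Number of acquisitions per day at a fixed cadence."""
    return rate_hz * SECONDS_PER_DAY


def site_volume_tb_per_day(devices: Dict[str, Tuple[float, float]]) -> float:
    """Daily site volume in TB from {name: (rate_hz, bytes_per_frame)}.

    Per-frame sizes are caller-supplied assumptions, not quoted UO values.
    """
    total_bytes = sum(frames_per_day(rate) * size for rate, size in devices.values())
    return total_bytes / BYTES_PER_TB


def sustained_mbps(tb_per_day: float) -> float:
    """Average link throughput (Mbit/s) needed to move tb_per_day in 24 h."""
    return tb_per_day * BYTES_PER_TB * 8 / SECONDS_PER_DAY / 1e6
```

For example, `sustained_mbps(3.0)` gives about 277.8 Mbit/s. This is only an average; bursty acquisition, such as DSLR video, would require headroom above it, which is one reason the edge devices buffer data locally before transfer.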

References

- United Nations. 2018 Revision of World Urbanization Prospects; United Nations: Rome, Italy, 2018. [Google Scholar]

- Batty, M. Cities and Complexity: Understanding Cities with Cellular Automata, Agent-Based Models, and Fractals; MIT Press: Cambridge, MA, USA, 2007. [Google Scholar]

- Batty, M. The size, scale, and shape of cities. Science 2008, 319, 769–771. [Google Scholar] [CrossRef]

- White, R.; Engelen, G.; Uljee, I. Modeling Cities and Regions as Complex Systems: From Theory to Planning Applications; MIT Press: Cambridge, MA, USA, 2015. [Google Scholar]

- Bai, X.; McAllister, R.R.; Beaty, R.M.; Taylor, B. Urban policy and governance in a global environment: Complex systems, scale mismatches and public participation. Curr. Opin. Environ. Sustain. 2010, 2, 129–135. [Google Scholar] [CrossRef]

- Wilson, A.G. Complex Spatial Systems: The Modelling Foundations of Urban and Regional Analysis; Routledge: London, UK, 2014. [Google Scholar]

- Chang, S.E.; McDaniels, T.; Fox, J.; Dhariwal, R.; Longstaff, H. Toward disaster-resilient cities: Characterizing resilience of infrastructure systems with expert judgments. Risk Anal. 2014, 34, 416–434. [Google Scholar] [CrossRef]

- Moffatt, S.; Kohler, N. Conceptualizing the built environment as a social–ecological system. Build. Res. Inf. 2008, 36, 248–268. [Google Scholar] [CrossRef]

- Jifeng, W.; Huapu, L.; Hu, P. System dynamics model of urban transportation system and its application. J. Trans. Syst. Eng. Inf. Technol. 2008, 8, 83–89. [Google Scholar]

- Aleta, A.; Meloni, S.; Moreno, Y. A multilayer perspective for the analysis of urban transportation systems. Sci. Rep. 2017, 7, 44359. [Google Scholar] [CrossRef]

- Batty, M. Cities as Complex Systems: Scaling, Interaction, Networks, Dynamics and Urban Morphologies; UCL Centre for Advanced Spatial Analysis: London, UK, 2009. [Google Scholar]

- Albeverio, S.; Andrey, D.; Giordano, P.; Vancheri, A. The Dynamics of Complex Urban Systems: An Interdisciplinary Approach; Springer: New York, NY, USA, 2007. [Google Scholar]

- Diener, E.; Suh, E. Measuring quality of life: Economic, social, and subjective indicators. Soc. Indic. Res. 1997, 40, 189–216. [Google Scholar] [CrossRef]

- Shapiro, J.M. Smart cities: Quality of life, productivity, and the growth effects of human capital. Rev. Econ. Stat. 2006, 88, 324–335. [Google Scholar] [CrossRef]

- Węziak-Białowolska, D. Quality of life in cities—Empirical evidence in comparative European perspective. Cities 2016, 58, 87–96. [Google Scholar] [CrossRef]

- Frank, L.D.; Engelke, P.O. The built environment and human activity patterns: Exploring the impacts of urban form on public health. J. Plan. Lit. 2001, 16, 202–218. [Google Scholar] [CrossRef]

- Frumkin, H.; Frank, L.; Jackson, R.J. Urban Sprawl and Public Health: Designing, Planning, and Building for Healthy Communities; Island Press: Washington, DC, USA, 2004. [Google Scholar]

- Godschalk, D.R. Urban hazard mitigation: Creating resilient cities. Nat. Hazard. Rev. 2003, 4, 136–143. [Google Scholar] [CrossRef]

- Fiksel, J. Sustainability and resilience: Toward a systems approach. Sustain. Sci. Pract. Policy 2006, 2, 14–21. [Google Scholar] [CrossRef]

- Collier, M.J.; Nedović-Budić, Z.; Aerts, J.; Connop, S.; Foley, D.; Foley, K.; Newport, D.; McQuaid, S.; Slaev, A.; Verburg, P. Transitioning to resilience and sustainability in urban communities. Cities 2013, 32, S21–S28. [Google Scholar] [CrossRef]

- Townsend, A.M. Smart Cities: Big Data, Civic Hackers, and the Quest for a New Utopia; WW Norton & Company: New York, NY, USA, 2013. [Google Scholar]

- Kitchin, R. The Data Revolution: Big Data, Open Data, Data Infrastructures and their Consequences; Sage: Thousand Oaks, CA, USA, 2014. [Google Scholar]

- Batty, M. Big data, smart cities and city planning. Dial. Hum. Geogr. 2013, 3, 274–279. [Google Scholar] [CrossRef] [PubMed]

- Batty, M. The New Science of Cities; MIT Press: Cambridge, MA, USA, 2013. [Google Scholar]

- Townsend, A. Cities of data: Examining the new urban science. Public Cult. 2015, 27, 201–212. [Google Scholar] [CrossRef]

- Kitchin, R. The ethics of smart cities and urban science. Philos. Trans. R. Soc. A 2016, 374, 20160115. [Google Scholar] [CrossRef]

- Acuto, M.; Parnell, S.; Seto, K.C. Building a global urban science. Nat. Sustain. 2018, 1, 2–4. [Google Scholar] [CrossRef]

- Batty, M. Fifty years of urban modeling: Macro-statics to micro-dynamics. In The Dynamics of Complex Urban Systems; Springer: Berlin/Heidelberg, Germany, 2008; pp. 1–20. [Google Scholar]

- Bettencourt, L.M. Cities as complex systems. Modeling Complex Systems for Public Policies; IPEA: Brasilia, Brasil, 2015; pp. 217–236. [Google Scholar]

- Faghih-Imani, A.; Hampshire, R.; Marla, L.; Eluru, N. An empirical analysis of bike sharing usage and rebalancing: Evidence from Barcelona and Seville. Transp. Res. Part A Policy Pract. 2017, 97, 177–191. [Google Scholar] [CrossRef]

- Dobler, G.; Vani, J.; Dam, T.T.L. Patterns of Urban Foot Traffic Dynamics. arXiv 2019, arXiv:1910.02380. [Google Scholar]

- Berghauser Pont, M.; Stavroulaki, G.; Marcus, L. Development of urban types based on network centrality, built density and their impact on pedestrian movement. Environ. Plan. B 2019, 46, 1549–1564. [Google Scholar] [CrossRef]

- Huebner, G.; Shipworth, D.; Hamilton, I.; Chalabi, Z.; Oreszczyn, T. Understanding electricity consumption: A comparative contribution of building factors, socio-demographics, appliances, behaviours and attitudes. Appl. Energy 2016, 177, 692–702. [Google Scholar] [CrossRef]

- Rasul, A.; Balzter, H.; Smith, C. Diurnal and seasonal variation of surface urban cool and heat islands in the semi-arid city of Erbil, Iraq. Climate 2016, 4, 42. [Google Scholar] [CrossRef]

- Sun, R.; Lü, Y.; Yang, X.; Chen, L. Understanding the variability of urban heat islands from local background climate and urbanization. J. Clean. Prod. 2019, 208, 743–752. [Google Scholar] [CrossRef]

- Chen, W.; Tang, H.; Zhao, H. Diurnal, weekly and monthly spatial variations of air pollutants and air quality of Beijing. Atmos. Environ. 2015, 119, 21–34. [Google Scholar] [CrossRef]

- Masiol, M.; Squizzato, S.; Formenton, G.; Harrison, R.M.; Agostinelli, C. Air quality across a European hotspot: Spatial gradients, seasonality, diurnal cycles and trends in the Veneto region, NE Italy. Sci. Total Environ. 2017, 576, 210–224. [Google Scholar] [CrossRef]

- Cheng, Z.; Caverlee, J.; Lee, K.; Sui, D. Exploring millions of footprints in location sharing services. In Proceedings of the International AAAI Conference on Web and Social Media, Barcelona, Spain, 17–21 July 2011; Volume 5. [Google Scholar]

- Noulas, A.; Scellato, S.; Lambiotte, R.; Pontil, M.; Mascolo, C. A tale of many cities: Universal patterns in human urban mobility. PLoS ONE 2012, 7, e37027. [Google Scholar] [CrossRef]

- Hasan, S.; Schneider, C.M.; Ukkusuri, S.V.; González, M.C. Spatiotemporal patterns of urban human mobility. J. Stat. Phys. 2013, 151, 304–318. [Google Scholar] [CrossRef]

- Alessandretti, L.; Sapiezynski, P.; Lehmann, S.; Baronchelli, A. Multi-scale spatio-temporal analysis of human mobility. PLoS ONE 2017, 12, e0171686. [Google Scholar] [CrossRef]

- Henderson, M.; Yeh, E.T.; Gong, P.; Elvidge, C.; Baugh, K. Validation of urban boundaries derived from global night-time satellite imagery. Int. J. Remote Sens. 2003, 24, 595–609. [Google Scholar] [CrossRef]

- Small, C.; Pozzi, F.; Elvidge, C.D. Spatial analysis of global urban extent from DMSP-OLS night lights. Remote Sens. Environ. 2005, 96, 277–291. [Google Scholar] [CrossRef]

- Li, X.; Zhou, Y. Urban mapping using DMSP/OLS stable night-time light: a review. Int. J. Remote Sens. 2017, 38, 6030–6046. [Google Scholar] [CrossRef]

- Mydlarz, C.; Salamon, J.; Bello, J.P. The implementation of low-cost urban acoustic monitoring devices. Appl. Acoust. 2017, 117, 207–218. [Google Scholar] [CrossRef]

- Bello, J.P.; Silva, C.; Nov, O.; Dubois, R.L.; Arora, A.; Salamon, J.; Mydlarz, C.; Doraiswamy, H. Sonyc: A system for monitoring, analyzing, and mitigating urban noise pollution. Commun. ACM 2019, 62, 68–77. [Google Scholar] [CrossRef]

- Li, C.; Chiang, A.; Dobler, G.; Wang, Y.; Xie, K.; Ozbay, K.; Ghandehari, M.; Zhou, J.; Wang, D. Robust vehicle tracking for urban traffic videos at intersections. In Proceedings of the 13th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Colorado Springs, CO, USA, 23–26 August 2016; pp. 207–213. [Google Scholar]

- Xie, Y.; Weng, Q. Detecting urban-scale dynamics of electricity consumption at Chinese cities using time-series DMSP-OLS (Defense Meteorological Satellite Program-Operational Linescan System) nighttime light imageries. Energy 2016, 100, 177–189. [Google Scholar] [CrossRef]

- Urban, R. Extraction and modeling of urban attributes using remote sensing technology. In People and Pixels: Linking Remote Sensing and Social Science; National Academies Press: Washington, DC, USA, 1998; p. 164. [Google Scholar]

- Jensen, J.R.; Cowen, D.C. Remote sensing of urban/suburban infrastructure and socio-economic attributes. Photogramm. Eng. Remote Sens. 1999, 65, 611–622. [Google Scholar]

- Chen, X.L.; Zhao, H.M.; Li, P.X.; Yin, Z.Y. Remote sensing image-based analysis of the relationship between urban heat island and land use/cover changes. Remote Sens. Environ. 2006, 104, 133–146. [Google Scholar] [CrossRef]

- Benediktsson, J.A.; Pesaresi, M.; Amason, K. Classification and feature extraction for remote sensing images from urban areas based on morphological transformations. IEEE Trans. Geosci. Remote Sens. 2003, 41, 1940–1949. [Google Scholar] [CrossRef]

- Weng, Q.; Quattrochi, D.; Gamba, P.E. Urban Remote Sensing; CRC Press: Boca Raton, FL, USA, 2018. [Google Scholar]

- Pohl, C.; Van Genderen, J.L. Review article multisensor image fusion in remote sensing: Concepts, methods and applications. Int. J. Remote Sens. 1998, 19, 823–854. [Google Scholar] [CrossRef]

- Maktav, D.; Erbek, F.; Jürgens, C. Remote sensing of urban areas. Int. J. Remote Sens. 2005, 26, 655–659. [Google Scholar] [CrossRef]

- Anderson, J.R. A Land use Furthermore, Land Cover Classification System for use with Remote Sensor Data; US Government Printing Office: Washington, DC, USA, 1976; Volume 964.

- Welch, R. Monitoring urban population and energy utilization patterns from satellite data. Remote Sens. Environ. 1980, 9, 1–9. [Google Scholar] [CrossRef]

- Elvidge, C.D.; Baugh, K.E.; Kihn, E.A.; Kroehl, H.W.; Davis, E.R.; Davis, C.W. Relation between satellite observed visible-near infrared emissions, population, economic activity and electric power consumption. Int. J. Remote Sens. 1997, 18, 1373–1379. [Google Scholar] [CrossRef]

- Amaral, S.; Câmara, G.; Monteiro, A.M.V.; Quintanilha, J.A.; Elvidge, C.D. Estimating population and energy consumption in Brazilian Amazonia using DMSP night-time satellite data. Comput. Environ. Urban Syst. 2005, 29, 179–195. [Google Scholar] [CrossRef]

- Chand, T.K.; Badarinath, K.; Elvidge, C.; Tuttle, B. Spatial characterization of electrical power consumption patterns over India using temporal DMSP-OLS night-time satellite data. Int. J. Remote Sens. 2009, 30, 647–661. [Google Scholar] [CrossRef]

- Shi, K.; Chen, Y.; Yu, B.; Xu, T.; Yang, C.; Li, L.; Huang, C.; Chen, Z.; Liu, R.; Wu, J. Detecting spatiotemporal dynamics of global electric power consumption using DMSP-OLS nighttime stable light data. Appl. Energy 2016, 184, 450–463. [Google Scholar] [CrossRef]

- Longcore, T.; Rich, C. Ecological light pollution. Front. Ecol. Environ. 2004, 2, 191–198. [Google Scholar] [CrossRef]

- Bennie, J.; Davies, T.W.; Duffy, J.P.; Inger, R.; Gaston, K.J. Contrasting trends in light pollution across Europe based on satellite observed night time lights. Sci. Rep. 2014, 4, 1–6. [Google Scholar] [CrossRef] [PubMed]

- Falchi, F.; Cinzano, P.; Duriscoe, D.; Kyba, C.C.; Elvidge, C.D.; Baugh, K.; Portnov, B.A.; Rybnikova, N.A.; Furgoni, R. The new world atlas of artificial night sky brightness. Sci. Adv. 2016, 2, e1600377. [Google Scholar] [CrossRef]

- Hall, G.B.; Malcolm, N.W.; Piwowar, J.M. Integration of remote sensing and GIS to detect pockets of urban poverty: The case of Rosario, Argentina. Trans. GIS 2001, 5, 235–253. [Google Scholar] [CrossRef]

- Elvidge, C.D.; Sutton, P.C.; Ghosh, T.; Tuttle, B.T.; Baugh, K.E.; Bhaduri, B.; Bright, E. A global poverty map derived from satellite data. Comput. Geosci. 2009, 35, 1652–1660. [Google Scholar] [CrossRef]

- Xie, M.; Jean, N.; Burke, M.; Lobell, D.; Ermon, S. Transfer learning from deep features for remote sensing and poverty mapping. In Proceedings of the Thirtieth AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016. [Google Scholar]

- Masek, J.; Lindsay, F.; Goward, S. Dynamics of urban growth in the Washington DC metropolitan area, 1973–1996, from Landsat observations. Int. J. Remote Sens. 2000, 21, 3473–3486. [Google Scholar] [CrossRef]

- Weng, Q. Land use change analysis in the Zhujiang Delta of China using satellite remote sensing, GIS and stochastic modelling. J. Environ. Manag. 2002, 64, 273–284. [Google Scholar] [CrossRef] [PubMed]

- Xiao, J.; Shen, Y.; Ge, J.; Tateishi, R.; Tang, C.; Liang, Y.; Huang, Z. Evaluating urban expansion and land use change in Shijiazhuang, China, by using GIS and remote sensing. Landsc. Urban Plan. 2006, 75, 69–80. [Google Scholar] [CrossRef]

- Gaston, K.J.; Bennie, J.; Davies, T.W.; Hopkins, J. The ecological impacts of nighttime light pollution: A mechanistic appraisal. Biol. Rev. 2013, 88, 912–927. [Google Scholar] [CrossRef]

- Chepesiuk, R. Missing the dark: Health effects of light pollution. Environ. Health Perspect. 2009. [Google Scholar] [CrossRef]

- Falchi, F.; Cinzano, P.; Elvidge, C.D.; Keith, D.M.; Haim, A. Limiting the impact of light pollution on human health, environment and stellar visibility. J. Environ. Manag. 2011, 92, 2714–2722. [Google Scholar] [CrossRef] [PubMed]

- Newell, J.P.; Cousins, J.J. The boundaries of urban metabolism: Towards a political—Industrial ecology. Prog. Hum. Geogr. 2015, 39, 702–728. [Google Scholar] [CrossRef]

- Dobler, G.; Ghandehari, M.; Koonin, S.E.; Nazari, R.; Patrinos, A.; Sharma, M.S.; Tafvizi, A.; Vo, H.T.; Wurtele, J.S. Dynamics of the urban lightscape. Inf. Syst. 2015, 54, 115–126. [Google Scholar] [CrossRef]

- Dobler, G.; Ghandehari, M.; Koonin, S.E.; Sharma, M.S. A hyperspectral survey of New York City lighting technology. Sensors 2016, 16, 2047. [Google Scholar] [CrossRef]

- York, D.G.; Adelman, J.; Anderson, J.E., Jr.; Anderson, S.F.; Annis, J.; Bahcall, N.A.; Bakken, J.; Barkhouser, R.; Bastian, S.; Berman, E.; et al. The sloan digital sky survey: Technical summary. Astronom. J. 2000, 120, 1579. [Google Scholar] [CrossRef]

- Kaiser, N.; Aussel, H.; Burke, B.E.; Boesgaard, H.; Chambers, K.; Chun, M.R.; Heasley, J.N.; Hodapp, K.W.; Hunt, B.; Jedicke, R.; et al. Pan-STARRS: A large synoptic survey telescope array. In Survey and Other Telescope Technologies and Discoveries; International Society for Optics and Photonics: Bellingham, WA, USA, 2002; Volume 4836, pp. 154–164. [Google Scholar]

- Qamar, F.; Dobler, G. Pixel-Wise Classification of High-Resolution Ground-Based Urban Hypers pectral Images with Convolutional Neural Networks. Remote Sens. 2020, 12, 2540. [Google Scholar] [CrossRef]

- Ghandehari, M.; Aghamohamadnia, M.; Dobler, G.; Karpf, A.; Buckland, K.; Qian, J.; Koonin, S. Mapping refrigerant gases in the new york city skyline. Sci. Rep. 2017, 7, 1–10. [Google Scholar] [CrossRef]

- Ahearn, S.C.; Ahn, H.J. Quality assurance and potential applications of a high density lidar data set for the city of New York. In Proceedings of the ASPRS Annual Conference, New York, NY, USA, 1–5 May 2011; p. 9. [Google Scholar]

- Floridi, L.; Cowls, J.; Beltrametti, M.; Chatila, R.; Chazerand, P.; Dignum, V.; Luetge, C.; Madelin, R.; Pagallo, U.; Rossi, F.; et al. AI4People—an ethical framework for a good AI society: Opportunities, risks, principles, and recommendations. Minds Mach. 2018, 28, 689–707. [Google Scholar] [CrossRef]

- Benjamin, R. Race after technology: Abolitionist tools for the new jim code. Soc. Forces 2019. [Google Scholar] [CrossRef]

- Adams, A.A.; Ferryman, J.M. The future of video analytics for surveillance and its ethical implications. Secur. J. 2015, 28, 272–289. [Google Scholar] [CrossRef]

- Tom Yeh, M. Designing a moral compass for the future of computer vision using speculative analysis. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 64–73. [Google Scholar]

- Lane, J.; Stodden, V.; Bender, S.; Nissenbaum, H. Privacy, Big Data, and the Public Good: Frameworks for Engagement; Cambridge University Press: Cambridge, UK, 2014. [Google Scholar]

- McCallister, E. Guide to Protecting the Confidentiality of Personally Identifiable Information; Diane Publishing: Darby, PA, USA, 2010; Volume 800. [Google Scholar]

- Raji, I.D.; Gebru, T.; Mitchell, M.; Buolamwini, J.; Lee, J.; Denton, E. Saving face: Investigating the ethical concerns of facial recognition auditing. In Proceedings of the AAAI/ACM Conference on AI, Ethics, and Society, New York, NY, USA, 7–8 February 2020; pp. 145–151. [Google Scholar]

- Liu, Z.; Sarkar, S. Outdoor recognition at a distance by fusing gait and face. Image Vis. Comput. 2007, 25, 817–832. [Google Scholar] [CrossRef]

- Sweeney, L. k-anonymity: A model for protecting privacy. Int. J. Uncertain. Fuzziness Knowl. Based Syst. 2002, 10, 557–570. [Google Scholar] [CrossRef]

- Bertino, E.; Verma, D.; Calo, S. A policy system for control of data fusion processes and derived data. In Proceedings of the 21st International Conference on Information Fusion (FUSION), IEEE, Cambridge, UK, 10–13 July 2018; pp. 807–813. [Google Scholar]

- Dwork, C. Differential privacy: A survey of results. In Proceedings of the International Conference on Theory and Applications of Models of Computation, Changsha, China, 18–20 October 2018; Springer: New York, NY, USA, 2008; pp. 1–19. [Google Scholar]

- Dwork, C.; Roth, A. The algorithmic foundations of differential privacy. Found. Trends Theor. Comput. Sci. 2014, 9, 211–407. [Google Scholar] [CrossRef]

- Baur, J.; Dobler, G.; Bianco, F.; Sharma, M.; Karpf, A. Persistent Hyperspectral Observations of the Urban Lightscape. In Proceedings of the 2018 IEEE Global Conference on Signal and Information Processing (GlobalSIP), IEEE, Anaheim, CA, USA, 26–29 November 2018; pp. 983–987. [Google Scholar]

- Bianco, F.B.; Koonin, S.E.; Mydlarz, C.; Sharma, M.S. Hypertemporal imaging of NYC grid dynamics: Short paper. In Proceedings of the 3rd ACM International Conference on Systems for Energy-Efficient Built Environments, Trento, Italy, 12–15 September 2016; pp. 61–64. [Google Scholar]

- Bianco, F.B.; Dobler, G.G.; Koonin, S.E. System, Method, and Computer-Accessible Medium for Remote Sensing of the Electrical Distribution Grid with Hypertemporal Imaging. U.S. Patent Application No. 16,581,966, 26 March 2020. [Google Scholar]

- Steers, B.; Kastelan, J.; Tsai, C.C.; Bianco, F.B.; Dobler, G. Detection of polluting plumes ejected from NYC buildings. Authorea Prepr. 2019. [Google Scholar] [CrossRef]

- Hillman, T.; Ramaswami, A. Greenhouse Gas Emission Footprints and Energy use Benchmarks for Eight US Cities; ACS Publications: Washington, DC, USA, 2010. [Google Scholar]

- Doll, C.N.; Muller, J.P.; Elvidge, C.D. Night-time imagery as a tool for global mapping of socioeconomic parameters and greenhouse gas emissions. Ambio 2000, 29, 157–162. [Google Scholar] [CrossRef]

- Kyba, C.; Garz, S.; Kuechly, H.; De Miguel, A.S.; Zamorano, J.; Fischer, J.; Hölker, F. High-resolution imagery of earth at night: New sources, opportunities and challenges. Remote Sens. 2015, 7, 1–23. [Google Scholar] [CrossRef]

- Zhao, M.; Zhou, Y.; Li, X.; Cao, W.; He, C.; Yu, B.; Li, X.; Elvidge, C.D.; Cheng, W.; Zhou, C. Applications of satellite remote sensing of nighttime light observations: Advances, challenges, and perspectives. Remote Sens. 2019, 11, 1971. [Google Scholar] [CrossRef]

- Levin, N.; Kyba, C.C.; Zhang, Q.; de Miguel, A.S.; Román, M.O.; Li, X.; Portnov, B.A.; Molthan, A.L.; Jechow, A.; Miller, S.D.; et al. Remote sensing of night lights: A review and an outlook for the future. Remote Sens. Environ. 2020, 237, 111443. [Google Scholar] [CrossRef]

- Letu, H.; Hara, M.; Tana, G.; Nishio, F. A saturated light correction method for DMSP/OLS nighttime satellite imagery. IEEE Trans. Geosci. Remote Sens. 2011, 50, 389–396. [Google Scholar] [CrossRef]

- Shi, K.; Chen, Y.; Li, L.; Huang, C. Spatiotemporal variations of urban CO2 emissions in China: A multiscale perspective. Appl. Energy 2018, 211, 218–229. [Google Scholar] [CrossRef]

- He, C.; Ma, Q.; Liu, Z.; Zhang, Q. Modeling the spatiotemporal dynamics of electric power consumption in Mainland China using saturation-corrected DMSP/OLS nighttime stable light data. Int. J. Digit. Earth 2014, 7, 993–1014. [Google Scholar] [CrossRef]

- Li, X.; Levin, N.; Xie, J.; Li, D. Monitoring hourly night-time light by an unmanned aerial vehicle and its implications to satellite remote sensing. Remote Sens. Environ. 2020, 247, 111942. [Google Scholar] [CrossRef]

- Kontokosta, C.E. Local Law 84 Energy Benchmarking Data: Report to the New York City Mayor’s Office of Long-Term Planning and Sustainability. Retrieved Febr. 2012, 9, 2018. [Google Scholar]

- Nageler, P.; Zahrer, G.; Heimrath, R.; Mach, T.; Mauthner, F.; Leusbrock, I.; Schranzhofer, H.; Hochenauer, C. Novel validated method for GIS based automated dynamic urban building energy simulations. Energy 2017, 139, 142–154. [Google Scholar] [CrossRef]

- Sikder, S.K.; Nagarajan, M.; Kar, S.; Koetter, T. A geospatial approach of downscaling urban energy consumption density in mega-city Dhaka, Bangladesh. Urban Clim. 2018, 26, 10–30. [Google Scholar] [CrossRef]

- Elvidge, C.D.; Cinzano, P.; Pettit, D.; Arvesen, J.; Sutton, P.; Small, C.; Nemani, R.; Longcore, T.; Rich, C.; Safran, J.; et al. The Nightsat mission concept. Int. J. Remote Sens. 2007, 28, 2645–2670. [Google Scholar] [CrossRef]

- Elvidge, C.D.; Keith, D.M.; Tuttle, B.T.; Baugh, K.E. Spectral identification of lighting type and character. Sensors 2010, 10, 3961–3988. [Google Scholar] [CrossRef] [PubMed]

- Zheng, Q.; Weng, Q.; Huang, L.; Wang, K.; Deng, J.; Jiang, R.; Ye, Z.; Gan, M. A new source of multi-spectral high spatial resolution night-time light imagery—JL1-3B. Remote Sens. Environ. 2018, 215, 300–312. [Google Scholar] [CrossRef]

- Swan, L.G.; Ugursal, V.I. Modeling of end-use energy consumption in the residential sector: A review of modeling techniques. Renew. Sustain. Energy Rev. 2009, 13, 1819–1835. [Google Scholar] [CrossRef]

- Anderson, S.T.; Newell, R.G. Information programs for technology adoption: The case of energy-efficiency audits. Resour. Energy Econ. 2004, 26, 27–50. [Google Scholar] [CrossRef]

- Kruse, F.A.; Elvidge, C.D. Identifying and mapping night lights using imaging spectrometry. In Proceedings of the 2011 Aerospace Conference, IEEE, Big Sky, MT, USA, 5–12 March 2011; pp. 1–6. [Google Scholar]

- Kruse, F.A.; Elvidge, C.D. Characterizing urban light sources using imaging spectrometry. In Proceedings of the 2011 Joint Urban Remote Sensing Event, IEEE, Munich, Germany, 11–13 April 2011; pp. 149–152. [Google Scholar]

- Kolláth, Z.; Dömény, A.; Kolláth, K.; Nagy, B. Qualifying lighting remodelling in a Hungarian city based on light pollution effects. J. Quant. Spectrosc. Radiat. Transf. 2016, 181, 46–51. [Google Scholar] [CrossRef]

- Alamús, R.; Bará, S.; Corbera, J.; Escofet, J.; Palà, V.; Pipia, L.; Tardà, A. Ground-based hyperspectral analysis of the urban nightscape. ISPRS J. Photogramm. Remote Sens. 2017, 124, 16–26. [Google Scholar] [CrossRef]

- Barentine, J.C.; Walker, C.E.; Kocifaj, M.; Kundracik, F.; Juan, A.; Kanemoto, J.; Monrad, C.K. Skyglow changes over Tucson, Arizona, resulting from a municipal LED street lighting conversion. J. Quant. Spectrosc. Radiat. Transf. 2018, 212, 10–23. [Google Scholar] [CrossRef]

- Meier, J.M. Temporal Profiles of Urban Lighting: Proposal for a research design and first results from three sites in Berlin. Int. J. Sustain. Lighting 2018. [Google Scholar] [CrossRef]

- Bará, S.; Rodríguez-Arós, Á.; Pérez, M.; Tosar, B.; Lima, R.C.; Sánchez de Miguel, A.; Zamorano, J. Estimating the relative contribution of streetlights, vehicles, and residential lighting to the urban night sky brightness. Lighting Res. Technol. 2019, 51, 1092–1107. [Google Scholar] [CrossRef]

- Sheinin, M.; Schechner, Y.Y.; Kutulakos, K.N. Computational imaging on the electric grid. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6437–6446.

- Sheinin, M.; Schechner, Y.Y.; Kutulakos, K.N. Rolling shutter imaging on the electric grid. In Proceedings of the 2018 IEEE International Conference on Computational Photography (ICCP), IEEE, Pittsburgh, PA, USA, 4–6 May 2018; pp. 1–12.

- Shah, Z.; Yen, A.; Pandey, A.; Taneja, J. GridInSight: Monitoring Electricity Using Visible Lights. In Proceedings of the 6th ACM International Conference on Systems for Energy-Efficient Buildings, Cities, and Transportation, New York, NY, USA, 10–13 November 2019; pp. 243–252.

- Liu, Y.; You, S.; Yao, W.; Cui, Y.; Wu, L.; Zhou, D.; Zhao, J.; Liu, H.; Liu, Y. A distribution level wide area monitoring system for the electric power grid—FNET/GridEye. IEEE Access 2017, 5, 2329–2338.

- Gopakumar, P.; Mallikajuna, B.; Reddy, M.J.B.; Mohanta, D.K. Remote monitoring system for real time detection and classification of transmission line faults in a power grid using PMU measurements. Prot. Control Modern Power Syst. 2018, 3, 1–10.

- Balaras, C.A.; Argiriou, A. Infrared thermography for building diagnostics. Energy Build. 2002, 34, 171–183.

- Kylili, A.; Fokaides, P.A.; Christou, P.; Kalogirou, S.A. Infrared thermography (IRT) applications for building diagnostics: A review. Appl. Energy 2014, 134, 531–549.

- Barreira, E.; de Freitas, V.P. Evaluation of building materials using infrared thermography. Constr. Build. Mater. 2007, 21, 218–224.

- Fox, M.; Coley, D.; Goodhew, S.; De Wilde, P. Thermography methodologies for detecting energy related building defects. Renew. Sustain. Energy Rev. 2014, 40, 296–310.

- Lo, C.P.; Quattrochi, D.A.; Luvall, J.C. Application of high-resolution thermal infrared remote sensing and GIS to assess the urban heat island effect. Int. J. Remote Sens. 1997, 18, 287–304.

- Sobrino, J.A.; Oltra-Carrió, R.; Sòria, G.; Jiménez-Muñoz, J.C.; Franch, B.; Hidalgo, V.; Mattar, C.; Julien, Y.; Cuenca, J.; Romaguera, M.; et al. Evaluation of the surface urban heat island effect in the city of Madrid by thermal remote sensing. Int. J. Remote Sens. 2013, 34, 3177–3192.

- Huang, X.; Wang, Y. Investigating the effects of 3D urban morphology on the surface urban heat island effect in urban functional zones by using high-resolution remote sensing data: A case study of Wuhan, Central China. ISPRS J. Photogramm. Remote Sens. 2019, 152, 119–131.

- Kaplan, H. Practical Applications of Infrared Thermal Sensing and Imaging Equipment; SPIE Press: Bellingham, WA, USA, 2007; Volume 75.

- Ghandehari, M.; Emig, T.; Aghamohamadnia, M. Surface temperatures in New York City: Geospatial data enables the accurate prediction of radiative heat transfer. Sci. Rep. 2018, 8, 1–10.

- Schwartz, J. Particulate air pollution and chronic respiratory disease. Environ. Res. 1993, 62, 7–13.

- Penttinen, P.; Timonen, K.; Tiittanen, P.; Mirme, A.; Ruuskanen, J.; Pekkanen, J. Ultrafine particles in urban air and respiratory health among adult asthmatics. Eur. Respir. J. 2001, 17, 428–435.

- Janssen, N.A.; Brunekreef, B.; van Vliet, P.; Aarts, F.; Meliefste, K.; Harssema, H.; Fischer, P. The relationship between air pollution from heavy traffic and allergic sensitization, bronchial hyperresponsiveness, and respiratory symptoms in Dutch schoolchildren. Environ. Health Perspect. 2003, 111, 1512–1518.

- D’Amato, G.; Cecchi, L.; D’Amato, M.; Liccardi, G. Urban air pollution and climate change as environmental risk factors of respiratory allergy: An update. J. Investig. Allergol. Clin. Immunol. 2010, 20, 95–102.

- Caplin, A.; Ghandehari, M.; Lim, C.; Glimcher, P.; Thurston, G. Advancing environmental exposure assessment science to benefit society. Nat. Commun. 2019, 10, 1–11.

- Han, L.; Zhou, W.; Li, W. Increasing impact of urban fine particles (PM2.5) on areas surrounding Chinese cities. Sci. Rep. 2015, 5, 12467.

- Ramanathan, V.; Feng, Y. Air pollution, greenhouse gases and climate change: Global and regional perspectives. Atmos. Environ. 2009, 43, 37–50.

- Seto, K.C.; Güneralp, B.; Hutyra, L.R. Global forecasts of urban expansion to 2030 and direct impacts on biodiversity and carbon pools. Proc. Natl. Acad. Sci. USA 2012, 109, 16083–16088.

- Yuan, M.; Huang, Y.; Shen, H.; Li, T. Effects of urban form on haze pollution in China: Spatial regression analysis based on PM2.5 remote sensing data. Appl. Geogr. 2018, 98, 215–223.

- Dickinson, J.; Tenorio, A. Inventory of New York City Greenhouse Gas Emissions; Mayor’s Office of Long-Term Planning and Sustainability: New York, NY, USA, 2011.

- Adachi, K.; Chung, S.H.; Buseck, P.R. Shapes of soot aerosol particles and implications for their effects on climate. J. Geophys. Res. Atmos. 2010, 115.

- Wu, Y.; Arapi, A.; Huang, J.; Gross, B.; Moshary, F. Intra-continental wildfire smoke transport and impact on local air quality observed by ground-based and satellite remote sensing in New York City. Atmos. Environ. 2018, 187, 266–281.

- McIvor, A.M. Background subtraction techniques. Proc. Image Vis. Comput. 2000, 4, 3099–3104.

- Brutzer, S.; Höferlin, B.; Heidemann, G. Evaluation of background subtraction techniques for video surveillance. In Proceedings of the CVPR 2011, IEEE, Colorado Springs, CO, USA, 20–25 June 2011; pp. 1937–1944.

- Mou, B.; He, B.J.; Zhao, D.X.; Chau, K.W. Numerical simulation of the effects of building dimensional variation on wind pressure distribution. Eng. Appl. Comput. Fluid Mech. 2017, 11, 293–309.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. arXiv 2015, arXiv:1506.01497.

- Li, C.; Dobler, G.; Feng, X.; Wang, Y. TrackNet: Simultaneous Object Detection and Tracking and Its Application in Traffic Video Analysis. arXiv 2019, arXiv:1902.01466.

- Manolakis, D.; Pieper, M.; Truslow, E.; Lockwood, R.; Weisner, A.; Jacobson, J.; Cooley, T. Longwave infrared hyperspectral imaging: Principles, progress, and challenges. IEEE Geosci. Remote Sens. Mag. 2019, 7, 72–100.

- Horler, D.; Dockray, M.; Barber, J. The red edge of plant leaf reflectance. Int. J. Remote Sens. 1983, 4, 273–288.

- Elvidge, C.D. Visible and near infrared reflectance characteristics of dry plant materials. Int. J. Remote Sens. 1990, 11, 1775–1795.

- Xue, J.; Su, B. Significant remote sensing vegetation indices: A review of developments and applications. J. Sens. 2017, 2017.

- Rouse, J.; Haas, R.; Schell, J.; Deering, D. Monitoring vegetation systems in the Great Plains with ERTS. NASA Spec. Publ. 1974, 351, 309.

- Robinson, N.P.; Allred, B.W.; Jones, M.O.; Moreno, A.; Kimball, J.S.; Naugle, D.E.; Erickson, T.A.; Richardson, A.D. A dynamic Landsat derived normalized difference vegetation index (NDVI) product for the conterminous United States. Remote Sens. 2017, 9, 863.

- Gamon, J.; Serrano, L.; Surfus, J. The photochemical reflectance index: An optical indicator of photosynthetic radiation use efficiency across species, functional types, and nutrient levels. Oecologia 1997, 112, 492–501.

- Garbulsky, M.F.; Peñuelas, J.; Gamon, J.; Inoue, Y.; Filella, I. The photochemical reflectance index (PRI) and the remote sensing of leaf, canopy and ecosystem radiation use efficiencies: A review and meta-analysis. Remote Sens. Environ. 2011, 115, 281–297.

- Thenkabail, P.S.; Lyon, J.G.; Huete, A. Advances in Hyperspectral Remote Sensing of Vegetation and Agricultural Croplands; Taylor & Francis Group: New York, NY, USA, 2012.

- Meroni, M.; Rossini, M.; Guanter, L.; Alonso, L.; Rascher, U.; Colombo, R.; Moreno, J. Remote sensing of solar-induced chlorophyll fluorescence: Review of methods and applications. Remote Sens. Environ. 2009, 113, 2037–2051.

- Meroni, M.; Rossini, M.; Picchi, V.; Panigada, C.; Cogliati, S.; Nali, C.; Colombo, R. Assessing steady-state fluorescence and PRI from hyperspectral proximal sensing as early indicators of plant stress: The case of ozone exposure. Sensors 2008, 8, 1740–1754.

- Karnosky, D.F.; Skelly, J.M.; Percy, K.E.; Chappelka, A.H. Perspectives regarding 50 years of research on effects of tropospheric ozone air pollution on US forests. Environ. Pollut. 2007, 147, 489–506.

- Žibret, G.; Kopačková, V. Comparison of two methods for indirect measurement of atmospheric dust deposition: Street-dust composition and vegetation-health status derived from hyperspectral image data. Ambio 2019, 48, 423–435.

- Hernández-Clemente, R.; Hornero, A.; Mottus, M.; Penuelas, J.; González-Dugo, V.; Jiménez, J.; Suárez, L.; Alonso, L.; Zarco-Tejada, P.J. Early diagnosis of vegetation health from high-resolution hyperspectral and thermal imagery: Lessons learned from empirical relationships and radiative transfer modelling. Curr. For. Rep. 2019, 5, 169–183.

- Rich, C.; Longcore, T. Ecological Consequences of Artificial Night Lighting; Island Press: Washington, DC, USA, 2013.

- Gauthreaux, S.A., Jr.; Belser, C.G. Effects of artificial night lighting on migrating birds. In Ecological Consequences of Artificial Night Lighting; Rich, C., Longcore, T., Eds.; Island Press: Washington, DC, USA, 2006; pp. 67–93.

- Van Doren, B.M.; Horton, K.G.; Dokter, A.M.; Klinck, H.; Elbin, S.B.; Farnsworth, A. High-intensity urban light installation dramatically alters nocturnal bird migration. Proc. Natl. Acad. Sci. USA 2017, 114, 11175–11180.

- La Sorte, F.A.; Fink, D.; Buler, J.J.; Farnsworth, A.; Cabrera-Cruz, S.A. Seasonal associations with urban light pollution for nocturnally migrating bird populations. Glob. Change Biol. 2017, 23, 4609–4619.

- Cabrera-Cruz, S.A.; Smolinsky, J.A.; Buler, J.J. Light pollution is greatest within migration passage areas for nocturnally-migrating birds around the world. Sci. Rep. 2018, 8, 1–8.

- Horton, K.G.; Nilsson, C.; Van Doren, B.M.; La Sorte, F.A.; Dokter, A.M.; Farnsworth, A. Bright lights in the big cities: Migratory birds’ exposure to artificial light. Front. Ecol. Environ. 2019, 17, 209–214.

- Cabrera-Cruz, S.A.; Smolinsky, J.A.; McCarthy, K.P.; Buler, J.J. Urban areas affect flight altitudes of nocturnally migrating birds. J. Anim. Ecol. 2019, 88, 1873–1887.

- Cabrera-Cruz, S.A.; Cohen, E.B.; Smolinsky, J.A.; Buler, J.J. Artificial light at night is related to broad-scale stopover distributions of nocturnally migrating landbirds along the Yucatan Peninsula, Mexico. Remote Sens. 2020, 12, 395.

- NOAA National Weather Service (NWS) Radar Operations Center. NOAA Next Generation Radar (NEXRAD) Level 2 Base Data; NOAA National Centers for Environmental Information: Washington, DC, USA, 1991.

- Wilt, B. In the City that We Love. The Jawbone Blog. 2014. Available online: https://www.jawbone.com/blog/jawbone-up-data-by-city/ (accessed on 18 August 2014).

- Zeitzer, J.M.; Dijk, D.J.; Kronauer, R.E.; Brown, E.N.; Czeisler, C.A. Sensitivity of the human circadian pacemaker to nocturnal light: Melatonin phase resetting and suppression. J. Physiol. 2000, 526, 695–702.

- Rea, M.S.; Figueiro, M.G.; Bierman, A.; Bullough, J.D. Circadian light. J. Circad. Rhythm. 2010, 8, 2.

- Pauley, S.M. Lighting for the human circadian clock: Recent research indicates that lighting has become a public health issue. Med. Hypotheses 2004, 63, 588–596.

- Mason, I.C.; Boubekri, M.; Figueiro, M.G.; Hasler, B.P.; Hattar, S.; Hill, S.M.; Nelson, R.J.; Sharkey, K.M.; Wright, K.P.; Boyd, W.A.; et al. Circadian health and light: A report on the National Heart, Lung, and Blood Institute’s workshop. J. Biol. Rhythm. 2018, 33, 451–457.

- Dolfin, M.; Leonida, L.; Outada, N. Modeling human behavior in economics and social science. Phys. Life Rev. 2017, 22, 1–21.

- Müller-Hansen, F.; Schlüter, M.; Mäs, M.; Donges, J.F.; Kolb, J.J.; Thonicke, K.; Heitzig, J. Towards representing human behavior and decision making in Earth system models–an overview of techniques and approaches. Earth Syst. Dynam. 2017, 8, 977–1007.

- Greening, L.A.; Greene, D.L.; Difiglio, C. Energy efficiency and consumption—The rebound effect—A survey. Energy Policy 2000, 28, 389–401.

- Kyba, C.C.; Kuester, T.; De Miguel, A.S.; Baugh, K.; Jechow, A.; Hölker, F.; Bennie, J.; Elvidge, C.D.; Gaston, K.J.; Guanter, L. Artificially lit surface of Earth at night increasing in radiance and extent. Sci. Adv. 2017, 3, e1701528.

- Tsao, J.Y.; Saunders, H.D.; Creighton, J.R.; Coltrin, M.E.; Simmons, J.A. Solid-state lighting: An energy-economics perspective. J. Phys. D Appl. Phys. 2010, 43, 354001.

- Alberti, M. Modeling the urban ecosystem: A conceptual framework. Environ. Plan. B Plan. Des. 1999, 26, 605–629.

- Grimm, N.B.; Grove, J.G.; Pickett, S.T.; Redman, C.L. Integrated approaches to long-term studies of urban ecological systems: Urban ecological systems present multiple challenges to ecologists—Pervasive human impact and extreme heterogeneity of cities, and the need to integrate social and ecological approaches, concepts, and theory. BioScience 2000, 50, 571–584.

- Alberti, M. Advances in Urban Ecology: Integrating Humans and Ecological Processes in Urban Ecosystems; Springer: New York, NY, USA, 2008.

- Golubiewski, N. Is there a metabolism of an urban ecosystem? An ecological critique. Ambio 2012, 41, 751–764.

Urban Observatory Modalities and Urban Science Drivers

| Urban Science Domain | Observational Cadence | Spatial Resolution | Image Modality | Current Status |
|---|---|---|---|---|
| Remote Energy Monitoring | 0.1 Hz | 1 m | BB Vis & IR | in progress |
| Lighting Technology | 1 mHz | 1 m | VNIR HSI | [76,94] |
| Grid Stability & Phase | 10 Hz | 1 m | BB Vis | [95,96] |
| Building Thermography | 1 Hz | 1 m | BB IR | in progress |
| Soot Plumes and Steam Venting | 0.1 Hz | 10 m | BB Vis | [97] |
| Remote Speciation of Pollution Plumes | 3 mHz | 10 m | LWIR HSI | [80] |
| Urban Vegetative Health | 1 mHz | 10 m | VNIR HSI | in progress |
| Ecological Impacts of Light Pollution | 0.1 Hz | 10 m | BB Vis | in progress |
| Patterns of Lighting Activity | 0.1 Hz | 1 m | BB Vis | [75] |
| Technology Adoption and Rebound | 0.1 Hz | 1 m | BB Vis & VNIR HSI | in progress |

BB: broadband; Vis: visible; IR: infrared; VNIR: visible and near infrared; LWIR: long wave infrared; HSI: hyperspectral imaging.
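The cadences in the table can be read directly as sampling intervals and frame counts. The short Python sketch below does that arithmetic. It assumes uninterrupted acquisition at the listed cadence, which the table does not state, and the names `CADENCE_HZ`, `sampling_interval_s`, and `frames_per_span` are hypothetical helpers introduced only for illustration.

```python
"""Turn the observational cadences from the table into sampling intervals
and frame counts (illustrative arithmetic only, not the UO pipeline)."""

# Cadences in Hz, transcribed from the table (hypothetical helper data).
CADENCE_HZ = {
    "Remote Energy Monitoring": 0.1,
    "Lighting Technology": 1e-3,
    "Grid Stability & Phase": 10.0,
    "Building Thermography": 1.0,
    "Soot Plumes and Steam Venting": 0.1,
    "Remote Speciation of Pollution Plumes": 3e-3,
    "Urban Vegetative Health": 1e-3,
    "Ecological Impacts of Light Pollution": 0.1,
    "Patterns of Lighting Activity": 0.1,
    "Technology Adoption and Rebound": 0.1,
}

SECONDS_PER_HOUR = 3600


def sampling_interval_s(cadence_hz: float) -> float:
    """Seconds between consecutive frames at the given cadence."""
    if cadence_hz <= 0:
        raise ValueError("cadence must be positive")
    return 1.0 / cadence_hz


def frames_per_span(cadence_hz: float, hours: float) -> int:
    """Frames acquired over `hours`, ASSUMING acquisition never pauses."""
    return round(cadence_hz * hours * SECONDS_PER_HOUR)


if __name__ == "__main__":
    for domain, hz in CADENCE_HZ.items():
        print(f"{domain:40s} every {sampling_interval_s(hz):8.1f} s, "
              f"{frames_per_span(hz, 24):7d} frames/day")
```

For example, 0.1 Hz corresponds to one frame every 10 s, or 8640 frames over 24 h, while the 10 Hz grid-phase cadence yields 864,000 frames over the same span. Actual counts depend on each instrument's duty cycle, which this sketch does not model.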

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Dobler, G.; Bianco, F.B.; Sharma, M.S.; Karpf, A.; Baur, J.; Ghandehari, M.; Wurtele, J.; Koonin, S.E. The Urban Observatory: A Multi-Modal Imaging Platform for the Study of Dynamics in Complex Urban Systems. Remote Sens. 2021, 13, 1426. https://doi.org/10.3390/rs13081426
