A Practical Method for High-Resolution Burned Area Monitoring Using Sentinel-2 and VIIRS

Abstract

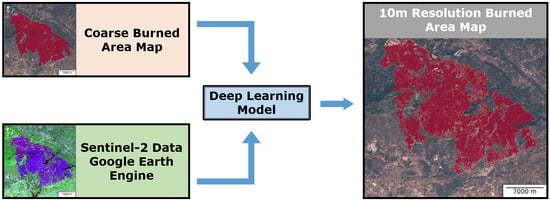

1. Introduction

2. Data and Methods

2.1. Daily Burned Areas Using BA-Net

2.2. Split Individual Fire Events

1. Create a buffer around the burned pixels using kernel convolution with kernel size pixels;
2. Use the ndimage.label function to split the fires spatially, without taking the temporal component into account;
3. Remove fire events smaller than 25 pixels;
4. For each retained event, look at the burn dates and split the event wherever the gap between consecutive burn dates is greater than 2 days;
5. For each newly separated event, run ndimage.label again to separate regions that may previously have been connected only through a third one;
6. Again remove any fire events smaller than 25 pixels that result from the temporal split.
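The six steps above can be sketched in Python with SciPy's `ndimage` module, which provides the `label` function named in steps 2 and 5. This is a minimal illustration under stated assumptions, not the authors' code. The input is assumed to be a 2D array of burn dates (day of year, 0 for unburned) derived from the BA-Net daily product. The buffer is implemented as a convolution with a square kernel of ones whose side, `buffer_size`, has a hypothetical default because the kernel size is not given here. The 25-pixel threshold is counted on burned pixels only, and step 5 reuses the same buffer before relabelling; both choices are assumptions.

```python
import numpy as np
from scipy import ndimage


def _buffer(mask, buffer_size):
    """Dilate a boolean mask by convolving it with a square kernel of ones."""
    kernel = np.ones((buffer_size, buffer_size), dtype=int)
    return ndimage.convolve(mask.astype(int), kernel, mode="constant", cval=0) > 0


def _label_buffered(mask, buffer_size):
    """Label connected regions of the buffered mask, keeping labels only on the original pixels."""
    labels, n = ndimage.label(_buffer(mask, buffer_size))
    labels[~mask] = 0
    return labels, n


def split_fire_events(burn_date, buffer_size=3, min_size=25, max_gap=2):
    """Split a daily burned-area map into individual fire events (hypothetical helper).

    burn_date: 2D integer array with the day of burning (0 = unburned).
    Returns a 2D integer array of event ids (0 = no event).
    """
    burned = burn_date > 0
    events = np.zeros(burn_date.shape, dtype=int)
    next_id = 1
    # Steps 1-2: buffer the burned pixels, then split them spatially with ndimage.label
    labels, n = _label_buffered(burned, buffer_size)
    for i in range(1, n + 1):
        event = labels == i
        # Step 3: drop events smaller than min_size burned pixels
        if event.sum() < min_size:
            continue
        # Step 4: break the sorted burn dates wherever consecutive dates differ by more than max_gap days
        dates = np.unique(burn_date[event])
        breaks = np.where(np.diff(dates) > max_gap)[0] + 1
        for group in np.split(dates, breaks):
            sub = event & np.isin(burn_date, group)
            # Step 5: relabel, because the temporal split may disconnect previously joined regions
            sub_labels, m = _label_buffered(sub, buffer_size)
            for j in range(1, m + 1):
                piece = sub_labels == j
                # Step 6: drop small events produced by the temporal split
                if piece.sum() >= min_size:
                    events[piece] = next_id
                    next_id += 1
    return events
```

Looping over `labels == i` scans the whole image once per event, which is acceptable for a sketch; for large scenes, `ndimage.find_objects` can restrict each step to the bounding box of the event.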

2.3. Sentinel-2 Composites Using Google Earth Engine

2.4. Model and Training

2.4.1. Create Dataset

1. NBR was computed for each event using the expression NBR = (NIR − SWIR) / (NIR + SWIR);
2. The difference between prefire and postfire NBR (dNBR = NBR_prefire − NBR_postfire) was computed;
3. The median dNBR was then computed inside and outside the coarse burned mask given by the BA-Net product;
4. For each event, the dNBR threshold defining the burned region was set to the midpoint between the two medians from step 3;
5. The resulting mask was cleaned using the method described in Section 2.2, with a spatial buffer of 10 pixels and a minimum event size of 100 pixels, keeping only the burned regions that account for at least 10% of the total burned area of each event. The buffer size, minimum size and 10% criterion were chosen by visual interpretation, with the goal of removing noise around the main burned patch;
6. Finally, each mask was inspected visually together with the dNBR data, and events that looked “unnatural” were discarded.
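Steps 1 to 5 can be sketched as follows. This is an illustration under assumptions, not the authors' implementation, and the function names are hypothetical. It assumes NIR and SWIR reflectance arrays for the prefire and postfire composites, plus a boolean BA-Net burned mask already resampled to the Sentinel-2 grid. dNBR is taken as prefire minus postfire NBR, so burned pixels have positive values. Only the spatial part of the Section 2.2 procedure is applied in the cleaning step, since a single postfire composite carries no burn dates, and the 10% criterion is measured against all burned pixels in the thresholded mask.

```python
import numpy as np
from scipy import ndimage


def nbr(nir, swir):
    """Normalized Burn Ratio: (NIR - SWIR) / (NIR + SWIR)."""
    nir = nir.astype(float)
    swir = swir.astype(float)
    return (nir - swir) / (nir + swir)


def dnbr_burned_mask(nir_pre, swir_pre, nir_post, swir_post, coarse_burned):
    """Threshold dNBR at the midpoint between its medians inside and outside a coarse burned mask."""
    dnbr = nbr(nir_pre, swir_pre) - nbr(nir_post, swir_post)  # prefire minus postfire
    med_in = np.nanmedian(dnbr[coarse_burned])
    med_out = np.nanmedian(dnbr[~coarse_burned])
    threshold = (med_in + med_out) / 2  # per-event midpoint (step 4)
    return dnbr > threshold, threshold


def keep_major_regions(mask, buffer_size=10, min_size=100, min_fraction=0.10):
    """Spatial cleaning: drop regions below min_size pixels or below min_fraction of the burned total."""
    kernel = np.ones((buffer_size, buffer_size), dtype=int)
    buffered = ndimage.convolve(mask.astype(int), kernel, mode="constant", cval=0) > 0
    labels, n = ndimage.label(buffered)
    labels[~mask] = 0  # count only burned pixels in each region
    sizes = np.bincount(labels.ravel(), minlength=n + 1)
    total = mask.sum()
    keep = np.zeros(n + 1, dtype=bool)  # index 0 is the background
    keep[1:] = (sizes[1:] >= min_size) & (sizes[1:] >= min_fraction * total)
    return keep[labels]
```

Because the threshold is recomputed from the medians of each event, it adapts to the dNBR contrast of that event instead of relying on one fixed dNBR cut-off.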

2.4.2. Define Model

2.4.3. Train Model

2.5. Validation of the Six Case Studies

3. Results

3.1. Computation Benchmark

3.2. Feature Importance

3.3. Case Studies

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Pausas, J.G.; Llovet, J.; Rodrigo, A.; Vallejo, R. Are wildfires a disaster in the Mediterranean basin?—A review. Int. J. Wildland Fire 2008, 17, 713.

- Bowman, D.M.J.S.; Williamson, G.J.; Abatzoglou, J.T.; Kolden, C.A.; Cochrane, M.A.; Smith, A.M.S. Human exposure and sensitivity to globally extreme wildfire events. Nat. Ecol. Evol. 2017, 1.

- Turco, M.; Rosa-Cánovas, J.J.; Bedia, J.; Jerez, S.; Montávez, J.P.; Llasat, M.C.; Provenzale, A. Exacerbated fires in Mediterranean Europe due to anthropogenic warming projected with non-stationary climate-fire models. Nat. Commun. 2018, 9.

- Ruffault, J.; Curt, T.; Moron, V.; Trigo, R.M.; Mouillot, F.; Koutsias, N.; Pimont, F.; Martin-StPaul, N.; Barbero, R.; Dupuy, J.L.; et al. Increased likelihood of heat-induced large wildfires in the Mediterranean Basin. Sci. Rep. 2020, 10.

- Fernandez-Manso, A.; Quintano, C.; Roberts, D.A. Burn severity influence on post-fire vegetation cover resilience from Landsat MESMA fraction images time series in Mediterranean forest ecosystems. Remote Sens. Environ. 2016, 184, 112–123.

- Hislop, S.; Jones, S.; Soto-Berelov, M.; Skidmore, A.; Haywood, A.; Nguyen, T. Using Landsat Spectral Indices in Time-Series to Assess Wildfire Disturbance and Recovery. Remote Sens. 2018, 10, 460.

- Freire, J.G.; DaCamara, C.C. Using cellular automata to simulate wildfire propagation and to assist in fire management. Nat. Hazards Earth Syst. Sci. 2019, 19, 169–179.

- Loepfe, L.; Lloret, F.; Román-Cuesta, R.M. Comparison of burnt area estimates derived from satellite products and national statistics in Europe. Int. J. Remote Sens. 2011, 33, 3653–3671.

- Mangeon, S.; Field, R.; Fromm, M.; McHugh, C.; Voulgarakis, A. Satellite versus ground-based estimates of burned area: A comparison between MODIS based burned area and fire agency reports over North America in 2007. Anthr. Rev. 2015, 3, 76–92.

- Chuvieco, E.; Mouillot, F.; van der Werf, G.R.; Miguel, J.S.; Tanase, M.; Koutsias, N.; García, M.; Yebra, M.; Padilla, M.; Gitas, I.; et al. Historical background and current developments for mapping burned area from satellite Earth observation. Remote Sens. Environ. 2019, 225, 45–64.

- Chuvieco, E.; Lizundia-Loiola, J.; Pettinari, M.L.; Ramo, R.; Padilla, M.; Tansey, K.; Mouillot, F.; Laurent, P.; Storm, T.; Heil, A.; et al. Generation and analysis of a new global burned area product based on MODIS 250 m reflectance bands and thermal anomalies. Earth Syst. Sci. Data 2018, 10, 2015–2031.

- Giglio, L.; Boschetti, L.; Roy, D.P.; Humber, M.L.; Justice, C.O. The Collection 6 MODIS burned area mapping algorithm and product. Remote Sens. Environ. 2018, 217, 72–85.

- Pinto, M.M.; Libonati, R.; Trigo, R.M.; Trigo, I.F.; DaCamara, C.C. A deep learning approach for mapping and dating burned areas using temporal sequences of satellite images. ISPRS J. Photogramm. Remote Sens. 2020, 160, 260–274.

- Humber, M.L.; Boschetti, L.; Giglio, L.; Justice, C.O. Spatial and temporal intercomparison of four global burned area products. Int. J. Digit. Earth 2018, 12, 460–484.

- Roteta, E.; Bastarrika, A.; Padilla, M.; Storm, T.; Chuvieco, E. Development of a Sentinel-2 burned area algorithm: Generation of a small fire database for sub-Saharan Africa. Remote Sens. Environ. 2019, 222, 1–17.

- Bastarrika, A.; Chuvieco, E.; Martín, M.P. Mapping burned areas from Landsat TM/ETM+ data with a two-phase algorithm: Balancing omission and commission errors. Remote Sens. Environ. 2011, 115, 1003–1012.

- Stroppiana, D.; Bordogna, G.; Carrara, P.; Boschetti, M.; Boschetti, L.; Brivio, P. A method for extracting burned areas from Landsat TM/ETM+ images by soft aggregation of multiple Spectral Indices and a region growing algorithm. ISPRS J. Photogramm. Remote Sens. 2012, 69, 88–102.

- Hawbaker, T.J.; Vanderhoof, M.K.; Beal, Y.J.; Takacs, J.D.; Schmidt, G.L.; Falgout, J.T.; Williams, B.; Fairaux, N.M.; Caldwell, M.K.; Picotte, J.J.; et al. Mapping burned areas using dense time-series of Landsat data. Remote Sens. Environ. 2017, 198, 504–522.

- Drusch, M.; Del Bello, U.; Carlier, S.; Colin, O.; Fernandez, V.; Gascon, F.; Hoersch, B.; Isola, C.; Laberinti, P.; Martimort, P.; et al. Sentinel-2: ESA’s optical high-resolution mission for GMES operational services. Remote Sens. Environ. 2012, 120, 25–36.

- Filipponi, F. Exploitation of Sentinel-2 Time Series to Map Burned Areas at the National Level: A Case Study on the 2017 Italy Wildfires. Remote Sens. 2019, 11, 622.

- Ban, Y.; Zhang, P.; Nascetti, A.; Bevington, A.R.; Wulder, M.A. Near Real-Time Wildfire Progression Monitoring with Sentinel-1 SAR Time Series and Deep Learning. Sci. Rep. 2020, 10.

- Knopp, L.; Wieland, M.; Rättich, M.; Martinis, S. A Deep Learning Approach for Burned Area Segmentation with Sentinel-2 Data. Remote Sens. 2020, 12, 2422.

- Gorelick, N.; Hancher, M.; Dixon, M.; Ilyushchenko, S.; Thau, D.; Moore, R. Google Earth Engine: Planetary-scale geospatial analysis for everyone. Remote Sens. Environ. 2017, 202, 18–27.

- San-Miguel-Ayanz, J.; Durrant, T.; Boca, R.; Libertà, G.; Branco, A.; de Rigo, D.; Ferrari, D.; Maianti, P.; Vivancos, T.A.; Costa, H.; et al. Forest fires in Europe. Middle East N. Afr. 2017, 2018.

- Turco, M.; Jerez, S.; Augusto, S.; Tarín-Carrasco, P.; Ratola, N.; Jiménez-Guerrero, P.; Trigo, R.M. Climate drivers of the 2017 devastating fires in Portugal. Sci. Rep. 2019, 9.

- Archibald, S.; Roy, D.P. Identifying individual fires from satellite-derived burned area data. In Proceedings of the 2009 IEEE International Geoscience and Remote Sensing Symposium, Cape Town, South Africa, 12–17 July 2009.

- Nogueira, J.; Ruffault, J.; Chuvieco, E.; Mouillot, F. Can We Go Beyond Burned Area in the Assessment of Global Remote Sensing Products with Fire Patch Metrics? Remote Sens. 2016, 9, 7.

- Laurent, P.; Mouillot, F.; Yue, C.; Ciais, P.; Moreno, M.V.; Nogueira, J.M.P. FRY, a global database of fire patch functional traits derived from space-borne burned area products. Sci. Data 2018, 5.

- Virtanen, P.; Gommers, R.; Oliphant, T.E.; Haberland, M.; Reddy, T.; Cournapeau, D.; Burovski, E.; Peterson, P.; Weckesser, W.; Bright, J.; et al. SciPy 1.0: Fundamental algorithms for scientific computing in Python. Nat. Methods 2020, 17, 261–272.

- Long, T.; Zhang, Z.; He, G.; Jiao, W.; Tang, C.; Wu, B.; Zhang, X.; Wang, G.; Yin, R. 30 m Resolution Global Annual Burned Area Mapping Based on Landsat Images and Google Earth Engine. Remote Sens. 2019, 11, 489.

- Cocke, A.E.; Fulé, P.Z.; Crouse, J.E. Comparison of burn severity assessments using Differenced Normalized Burn Ratio and ground data. Int. J. Wildland Fire 2005, 14, 189.

- Miller, J.D.; Thode, A.E. Quantifying burn severity in a heterogeneous landscape with a relative version of the delta Normalized Burn Ratio (dNBR). Remote Sens. Environ. 2007, 109, 66–80.

- Quintano, C.; Fernández-Manso, A.; Fernández-Manso, O. Combination of Landsat and Sentinel-2 MSI data for initial assessing of burn severity. Int. J. Appl. Earth Obs. Geoinf. 2018, 64, 221–225.

- Rolnick, D.; Veit, A.; Belongie, S.; Shavit, N. Deep learning is robust to massive label noise. arXiv 2017, arXiv:1705.10694.

- Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. In Proceedings of the International Conference on Machine Learning, PMLR, Lille, France, 7–9 July 2015; pp. 448–456.

- Nair, V.; Hinton, G.E. Rectified linear units improve restricted boltzmann machines. In Proceedings of the 27th International Conference on International Conference on Machine Learning, Haifa, Israel, 21–24 June 2010.

- Micikevicius, P.; Narang, S.; Alben, J.; Diamos, G.; Elsen, E.; Garcia, D.; Ginsburg, B.; Houston, M.; Kuchaiev, O.; Venkatesh, G.; et al. Mixed precision training. arXiv 2017, arXiv:1710.03740.

- Wu, Y.; He, K. Group Normalization. In Computer Vision—ECCV 2018; Springer: Munich, Germany, 2018; pp. 3–19.

- Smith, L.N. A disciplined approach to neural network hyper-parameters: Part 1–learning rate, batch size, momentum, and weight decay. arXiv 2018, arXiv:1803.09820.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Shorten, C.; Khoshgoftaar, T.M. A survey on Image Data Augmentation for Deep Learning. J. Big Data 2019, 6.

- Howard, J.; Gugger, S. Fastai: A Layered API for Deep Learning. Information 2020, 11, 108.

- Padilla, M.; Stehman, S.V.; Ramo, R.; Corti, D.; Hantson, S.; Oliva, P.; Alonso-Canas, I.; Bradley, A.V.; Tansey, K.; Mota, B.; et al. Comparing the accuracies of remote sensing global burned area products using stratified random sampling and estimation. Remote Sens. Environ. 2015, 160, 114–121.

- Pinto, M.M.; DaCamara, C.C.; Trigo, I.F.; Trigo, R.M.; Turkman, K.F. Fire danger rating over Mediterranean Europe based on fire radiative power derived from Meteosat. Nat. Hazards Earth Syst. Sci. 2018, 18, 515–529.

- DaCamara, C.C.; Libonati, R.; Pinto, M.M.; Hurduc, A. Near- and Middle-Infrared Monitoring of Burned Areas from Space. In Satellite Information Classification and Interpretation; IntechOpen: London, UK, 2019.

- Lagouvardos, K.; Kotroni, V.; Giannaros, T.M.; Dafis, S. Meteorological Conditions Conducive to the Rapid Spread of the Deadly Wildfire in Eastern Attica, Greece. Bull. Am. Meteorol. Soc. 2019, 100, 2137–2145.

- Boschetti, L.; Roy, D.; Justice, C. International Global Burned Area Satellite Product Validation Protocol Part I–Production and Standardization of Validation Reference Data; Committee on Earth Observation Satellites: Maryland, MD, USA, 2009; pp. 1–11.

- Pulvirenti, L.; Squicciarino, G.; Fiori, E.; Fiorucci, P.; Ferraris, L.; Negro, D.; Gollini, A.; Severino, M.; Puca, S. An Automatic Processing Chain for Near Real-Time Mapping of Burned Forest Areas Using Sentinel-2 Data. Remote Sens. 2020, 12, 674.

- Escuin, S.; Navarro, R.; Fernández, P. Fire severity assessment by using NBR (Normalized Burn Ratio) and NDVI (Normalized Difference Vegetation Index) derived from LANDSAT TM/ETM images. Int. J. Remote Sens. 2007, 29, 1053–1073.

- Libonati, R.; DaCamara, C.C.; Pereira, J.M.C.; Peres, L.F. On a new coordinate system for improved discrimination of vegetation and burned areas using MIR/NIR information. Remote Sens. Environ. 2011, 115, 1464–1477.

| Region Name | Bounding Box | Time | Use |
|---|---|---|---|
| Western Iberia | 36.0° N to 44.0° N, 10.0° W to 6.0° W | June to October 2017 and August 2018 | Train/Test |
| French Riviera | 42.8° N to 44.0° N, 4.8° E to 7.0° E | July 2017 | Test |
| Attica Greece | 37.5° N to 38.5° N, 22.5° E to 24.4° E | July 2018 | Test |

| FireID | CEMS ID | Source Name | Source Resolution | Published Time (UTC) |
|---|---|---|---|---|
| Portugal 1 | EMSR207 | SPOT-6-7/Other | 1.5 m/Other | 2017-06-22 19:56:12 |
| Portugal 2 | EMSR303 | SPOT-6-7 | 1.5 m | 2018-08-10 17:00:48 |
| French Riviera 1 | EMSR214 | SPOT-6 | 1.5 m | 2017-07-31 14:58:03 |
| French Riviera 2 | EMSR214 | SPOT-6 | 1.5 m | 2017-07-28 19:16:39 |
| Attica Greece 1 | EMSR300 | SPOT-6-7 | 1.5 m | 2018-07-30 17:28:17 |
| Attica Greece 2 | EMSR300 | Pleiades-1A-1B | 0.5 m | 2018-07-26 16:38:00 |

| FireID | Sentinel-2 Image Size (pixels) | Sentinel-2 Data Size on Disk | GEE Download Time | Inference Time (CPU) | Inference Time (GPU) | Burned Area (ha) |
|---|---|---|---|---|---|---|
| Portugal 1 | 4733 × 4732 | 300 MB | 51 min | 152 s | 50 s | 42,333 |
| Portugal 2 | 3419 × 3418 | 161 MB | 25 min | 76 s | 21 s | 23,868 |
| French Riviera 1 | 870 × 881 | 9 MB | 3 min | 5 s | 1 s | 489 |
| French Riviera 2 | 1315 × 1327 | 19 MB | 4 min | 11 s | 2 s | 1344 |
| Attica Greece 1 | 2262 × 2260 | 62 MB | 13 min | 32 s | 7 s | 4363 |
| Attica Greece 2 | 1093 × 1081 | 16 MB | 4 min | 7 s | 1 s | 1232 |

| FireID | Commission Error | Omission Error | Dice |
|---|---|---|---|
| Portugal 1 | 0.034 | 0.097 | 0.933 |
| Portugal 2 | 0.016 | 0.122 | 0.928 |
| French Riviera 1 | 0.074 | 0.065 | 0.931 |
| French Riviera 2 | 0.072 | 0.047 | 0.941 |
| Attica Greece 1 | 0.007 | 0.225 | 0.870 |
| Attica Greece 2 | 0.059 | 0.093 | 0.924 |
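The accuracy metrics in the table above can be computed from a predicted and a reference burned mask as sketched below. The sketch assumes the standard pixel-count definitions: commission error FP/(TP + FP), omission error FN/(TP + FN), and Dice 2TP/(2TP + FP + FN); the function names are hypothetical. Under these definitions Dice is the harmonic mean of (1 − commission error) and (1 − omission error), and the tabulated Dice values agree with that relation to within rounding (for Portugal 1, errors of 0.034 and 0.097 give a Dice of about 0.933).

```python
import numpy as np


def accuracy_metrics(predicted, reference):
    """Commission error, omission error and Dice coefficient for two boolean burned masks."""
    predicted = np.asarray(predicted, dtype=bool)
    reference = np.asarray(reference, dtype=bool)
    tp = np.sum(predicted & reference)   # burned in both
    fp = np.sum(predicted & ~reference)  # mapped as burned, not burned in the reference
    fn = np.sum(~predicted & reference)  # burned in the reference, missed by the map
    commission = fp / (tp + fp)
    omission = fn / (tp + fn)
    dice = 2 * tp / (2 * tp + fp + fn)
    return commission, omission, dice


def dice_from_errors(commission, omission):
    """Dice as the harmonic mean of precision (1 - CE) and recall (1 - OE)."""
    precision, recall = 1 - commission, 1 - omission
    return 2 * precision * recall / (precision + recall)
```

The second function offers a quick consistency check on reported results, since any pair of commission and omission errors fully determines the Dice coefficient.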

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Pinto, M.M.; Trigo, R.M.; Trigo, I.F.; DaCamara, C.C. A Practical Method for High-Resolution Burned Area Monitoring Using Sentinel-2 and VIIRS. Remote Sens. 2021, 13, 1608. https://doi.org/10.3390/rs13091608