Hyperspectral Pansharpening in the Reflective Domain with a Second Panchromatic Channel in the SWIR II Spectral Domain

Abstract

1. Introduction

- Component Substitution (CS) methods efficiently preserve the spatial information but cause spectral distortions [21]. Among the best-suited methods for HS pansharpening, we can cite Gram–Schmidt (GS) adaptive (GSA) [22], Brovey transform (also called Gain) [23,24,25], and Spatially Organized Spectral Unmixing (SOSU) [25].

- In contrast, MultiResolution Analysis (MRA) methods better preserve spectral information, at the expense of the spatial information [21]. The Modulation Transfer Function-Generalized Laplacian Pyramid (MTF-GLP) [26], MTF-GLP with high pass modulation (MTF-GLP-HPM) [27], and the Optimized Injection Model (OIM) [28] are among the best MRA options for HS pansharpening.

- To balance the preservation of the spatial and spectral information, hybrid methods aim to compensate for the shortcomings of the previous two classes, but they require more parameters to be set [8]. They include recent methods adapted to HS pansharpening, mostly based on Guided Filters (GF), such as GF Pansharpening (GFP) [29,30], Average Filter and GF (AFGF) [31], and Adaptive Weighted Regression and GF (AWRGF) [32].

- Bayesian and Matrix Factorization (MF) approaches provide high spatial and spectral performance but need prior knowledge about the degradation model, and they incur a higher computational cost [8]. Among Bayesian approaches, efficient methods for HS pansharpening are Bayesian Sparse [33,34], a two-step generalisation of Hyperspectral Superresolution (HySure) [35], and a recent variational approach called Spectral Difference Minimization (SDM) [36]. Among MF approaches, they are Coupled Nonnegative MF (CNMF) [37] and Joint-Criterion Nonnegative MF (JCNMF) [38].

- A recently emerged class based on deep-learning (neural-network) supervised models has led to significant advances in image fusion. However, its performance greatly depends on the training data [39], which is why these methods generally need a large amount of data to perform well. Among deep-learning methods adapted to HS pansharpening, one can cite Detail-based Deep Laplacian PanSharpening (DDLPS) [40], HS Pansharpening with Deep Priors (HPDP) [41], and HS Pansharpening using 3-D Generative Adversarial Networks (HPGAN) [42].

- Shadowed pixels, which represent a significant proportion of urban scenes and are potential error sources [46];

- Transition areas, to assess if edges between materials are preserved in fusion results;

- Pixel groups of varying heterogeneity levels, to evaluate methods according to the scene spatial complexity.

2. Methodology

- All images are spectral radiances.

- The HS image covers the reflective (0.4–2.5 μm) spectral domain. The two PAN channels are inside the visible domain and the SWIR II (2.0–2.5 μm) spectral domain, respectively.

- The HS and PAN images are fully registered, and the HS/PAN spatial resolution ratio, 𝓇, is an integer. Thus, each HS pixel covers the same area as 𝓇 × 𝓇 PAN pixels at the higher spatial resolution. We call these PAN-resolution pixels subpixels with respect to the HS data.
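The registration hypothesis above can be illustrated with a minimal Python sketch. It assumes a PAN image stored as a 2-D NumPy array whose dimensions are multiples of the integer ratio; the function names `pan_to_hs_grid` and `hs_pixel_of` are hypothetical and are not taken from the article.

```python
import numpy as np


def pan_to_hs_grid(pan: np.ndarray, r: int) -> np.ndarray:
    """Average each r x r block of PAN subpixels onto the HS pixel grid."""
    rows, cols = pan.shape
    if rows % r or cols % r:
        raise ValueError("PAN dimensions must be multiples of the ratio r")
    # After reshaping, axes 1 and 3 index the subpixels inside one HS pixel.
    blocks = pan.reshape(rows // r, r, cols // r, r)
    return blocks.mean(axis=(1, 3))


def hs_pixel_of(sub_row: int, sub_col: int, r: int) -> tuple[int, int]:
    """Return the (row, col) of the HS pixel that contains a PAN subpixel."""
    return sub_row // r, sub_col // r
```

Block averaging is only one possible way of relating the two grids; it is used here because it makes the subpixel-to-pixel correspondence explicit.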

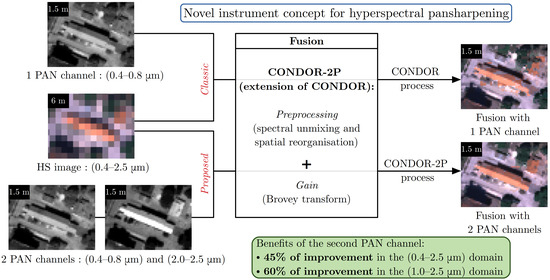

2.1. Description of Gain-2P

2.2. Description of CONDOR-2P

2.2.1. CONDOR Principle

- The segmentation step splits the high-spatial-resolution PAN image into several regions with low spectral variability.

- Separately for each segmented region consisting of a set of HS pixels, the endmember extraction step aims to estimate the associated endmembers.

- The mixed pixel detection step identifies the mixed HS pixels by referring to the segmentation map (step 1).

- For each mixed HS pixel (step 3), the endmember selection step gathers a list of possible endmembers depending on the corresponding segmented regions (steps 1 and 2) and the neighbouring pure pixels.

- For each mixed HS pixel, the spatial reorganisation step assigns the right endmembers to the right subpixels (following hypothesis 4 in the introduction of Section 2), to preserve as much as possible the spatial and spectral information of the PAN and HS images.

- The Gain process applies a scale factor derived from the PAN image and independent of the spectral band to all pixels of the reorganised image.
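The final Gain step can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the scale factor is taken to be the ratio between the PAN image and a PAN-like image synthesised from the HS data at the same grid (`pan_from_hs`, a hypothetical input), and `eps` is an illustrative guard against division by zero.

```python
import numpy as np


def gain_fusion(hs_up: np.ndarray, pan: np.ndarray,
                pan_from_hs: np.ndarray, eps: float = 1e-12) -> np.ndarray:
    """Apply one band-independent scale factor per pixel.

    hs_up       : (bands, H, W) HS data resampled on the PAN grid
    pan         : (H, W) PAN image
    pan_from_hs : (H, W) PAN-like image derived from the HS data (assumption)
    """
    # The scale factor depends on the pixel only, not on the spectral band.
    scale = pan / np.maximum(pan_from_hs, eps)
    # Broadcasting applies the same factor to every band of a given pixel.
    return hs_up * scale[np.newaxis, :, :]
```

Because every band of a pixel is multiplied by the same factor, the shape of the spectrum (and hence its spectral angle) is unchanged; only its magnitude follows the PAN image.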

2.2.2. CONDOR-2P: Method Description

- The spatial reorganisation step (step 5), to minimize a cost function composed of two PAN criteria (Section 2.2.3);

- The fusion process (step 6), by using Gain-2P (Section 2.1).
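A possible reading of the two-channel Gain process is sketched below. It assumes, as the "Gain-2P limit" studied in Section 4.2 suggests, that bands below a limit wavelength are scaled with the visible PAN channel and bands at or above it with the SWIR II PAN channel. All names (`gain_2p`, `pan_vis_ref`, `pan_swir_ref`, `limit_um`) are hypothetical, and the reference images are assumed to be PAN-like images derived from the HS data.

```python
import numpy as np


def gain_2p(hs_up, wavelengths_um, pan_vis, pan_vis_ref,
            pan_swir, pan_swir_ref, limit_um, eps=1e-12):
    """Scale each band with the PAN channel selected by a wavelength limit.

    hs_up          : (bands, H, W) HS data resampled on the PAN grid
    wavelengths_um : (bands,) band-centre wavelengths in micrometres
    pan_*          : (H, W) PAN images; *_ref are PAN-like images derived
                     from the HS data (assumption of this sketch)
    limit_um       : limit wavelength separating the two scale factors
    """
    scale_vis = pan_vis / np.maximum(pan_vis_ref, eps)
    scale_swir = pan_swir / np.maximum(pan_swir_ref, eps)
    # Boolean vector: True for bands handled by the SWIR II PAN channel.
    use_swir = np.asarray(wavelengths_um) >= limit_um
    # Broadcast to (bands, H, W): one of the two factors per band and pixel.
    scale = np.where(use_swir[:, None, None], scale_swir[None], scale_vis[None])
    return hs_up * scale
```

With this formulation, bands on either side of the limit receive different scale factors, so the choice of the limit directly affects colour composites that mix bands from both sides.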

2.2.3. CONDOR-2P: Spatial Reorganisation Improvement

Defining the PAN Reconstruction Errors

Properties

- P-1 One and only one endmember is assigned to each region, i.e.,:

- P-2 Each subpixel belongs to one and only one region, i.e.,:

- P-3 The Boolean and terms verify:

Development of the PAN Reconstruction Errors

Modelling the Whole Optimisation Problem

2.3. Selected Spectral Domains

2.3.1. SWIR Band Selection

- The whole (2.0–2.5 μm) SWIR II spectral interval, partially identified in brown in Figure 4;

- The (2.025–2.35 μm) spectral interval, identified in green in Figure 4, corresponding to the SWIR II spectral band of Sentinel-2 [51] (more precisely: (2.027–2.377 μm) for S2A and (2.001–2.371 μm) for S2B). It also corresponds to the HS spectral range retained in the SWIR II domain after removing the non-exploitable HS spectral bands (see Section 3.1.1) for the three tested datasets, as shown in Figure 4;

2.3.2. Gain-2P Limit Selection

- ;

- ;

- .

2.4. Performance-Assessment Protocol

2.4.1. Wald’s Protocol and Quality Criteria

- The whole images (global analysis);

- Various spatial regions (refined analysis): pixel groups built according to specific similarities (transition zones, shadowed areas, pixels of similar variance ranges, as described in Section 2.4.2);

- Each pixel of the scene (local analysis) for the global and spectral criteria: this evaluation is used to analyse the spatial variation of the error (Section 2.4.3).
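The three analysis scales can share the same criterion code by restricting the computation to a pixel mask. The sketch below uses the standard definitions of RMSE and SAM; the function names and the optional boolean `mask` argument are illustrative choices, not the article's implementation.

```python
import numpy as np


def rmse(ref, fused, mask=None):
    """RMSE between two (bands, H, W) images, optionally on a pixel group."""
    diff = ref - fused
    if mask is not None:
        diff = diff[:, mask]  # keep only the pixels of the group
    return float(np.sqrt(np.mean(diff ** 2)))


def sam_map_deg(ref, fused, eps=1e-12):
    """Per-pixel spectral angle in degrees (local analysis)."""
    dot = np.sum(ref * fused, axis=0)
    norms = np.linalg.norm(ref, axis=0) * np.linalg.norm(fused, axis=0)
    cos = np.clip(dot / np.maximum(norms, eps), -1.0, 1.0)
    return np.degrees(np.arccos(cos))


def mean_sam_deg(ref, fused, mask=None):
    """Mean spectral angle over the whole image or over a pixel group."""
    sam = sam_map_deg(ref, fused)
    return float(sam[mask].mean() if mask is not None else sam.mean())
```

Calling the functions without a mask gives the global analysis, passing a boolean mask (for instance a shadow or transition mask) gives the refined analysis, and `sam_map_deg` alone gives the per-pixel map used for the local analysis.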

2.4.2. Refined Analysis: Pixel Group Location

Transition Areas

- The first test focuses on spectral-radiance variations and is useful to detect an irradiance change for one single material. It is applied to the PAN image and is based on the Canny edge detector [55], except that a simple threshold is used to identify the pixels associated with transitions (to ensure thick edges and thus complete transitions): a pixel is regarded as part of a transition if and only if the corresponding value in the edge-detection map is greater than the mean value of this map.

- The second test aims to identify distinct materials, even if their spectral radiances in the PAN image are similar. It is applied to the REF image (high-spatial-resolution HS image, see Section 3.1) and computes, for each pixel, the SAM between its spectrum and those of the four closest neighbouring pixels (left, right, top, and bottom), to deduce a single summed SAM value. A pixel is regarded as part of a transition if and only if the corresponding value of the SAM variation map is greater than a fixed threshold. Here, a threshold of times the averaged value of the SAM variation map was empirically set. Then, to ensure thick edges (and thus complete transitions), we applied two morphological operations to this mask: the first one removing isolated points, followed by a dilation.
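The second test can be sketched as follows. This is a minimal illustration that builds the summed SAM variation map and applies the threshold; the multiplying `factor` is left as a parameter because its value is not given here, border pixels are handled by edge padding (an assumption of this sketch), and the two morphological operations are omitted.

```python
import numpy as np


def angle(a, b, eps=1e-12):
    """Spectral angle (radians) between spectra stacked along axis 0."""
    dot = np.sum(a * b, axis=0)
    norms = np.linalg.norm(a, axis=0) * np.linalg.norm(b, axis=0)
    return np.arccos(np.clip(dot / np.maximum(norms, eps), -1.0, 1.0))


def sam_variation_map(ref):
    """Sum the SAM between each pixel and its four closest neighbours."""
    # Edge padding duplicates border spectra, so a missing neighbour
    # contributes a (near-)zero angle instead of raising an index error.
    padded = np.pad(ref, ((0, 0), (1, 1), (1, 1)), mode="edge")
    rows, cols = ref.shape[1:]
    centre = padded[:, 1:rows + 1, 1:cols + 1]
    total = np.zeros((rows, cols))
    for dr, dc in ((0, -1), (0, 1), (-1, 0), (1, 0)):  # left, right, top, bottom
        neighbour = padded[:, 1 + dr:rows + 1 + dr, 1 + dc:cols + 1 + dc]
        total += angle(centre, neighbour)
    return total


def transition_mask(ref, factor):
    """Flag pixels whose summed SAM exceeds factor times the map average."""
    sam = sam_variation_map(ref)
    return sam > factor * sam.mean()
```

The first test follows the same thresholding pattern on an edge-detection map of the PAN image, comparing each value with the mean of that map.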

Shadowed and Sunlit Pixels

Variance Ranges

2.4.3. Local Analysis: Spatial Error Variation

2.4.4. Generation of the Classification Map

Supervised Classification: Training and Validation of the Model

Land-Cover Maps

3. Datasets

3.1. Image Simulation

3.1.1. REF Image

3.1.2. HS Image

3.1.3. PAN Images

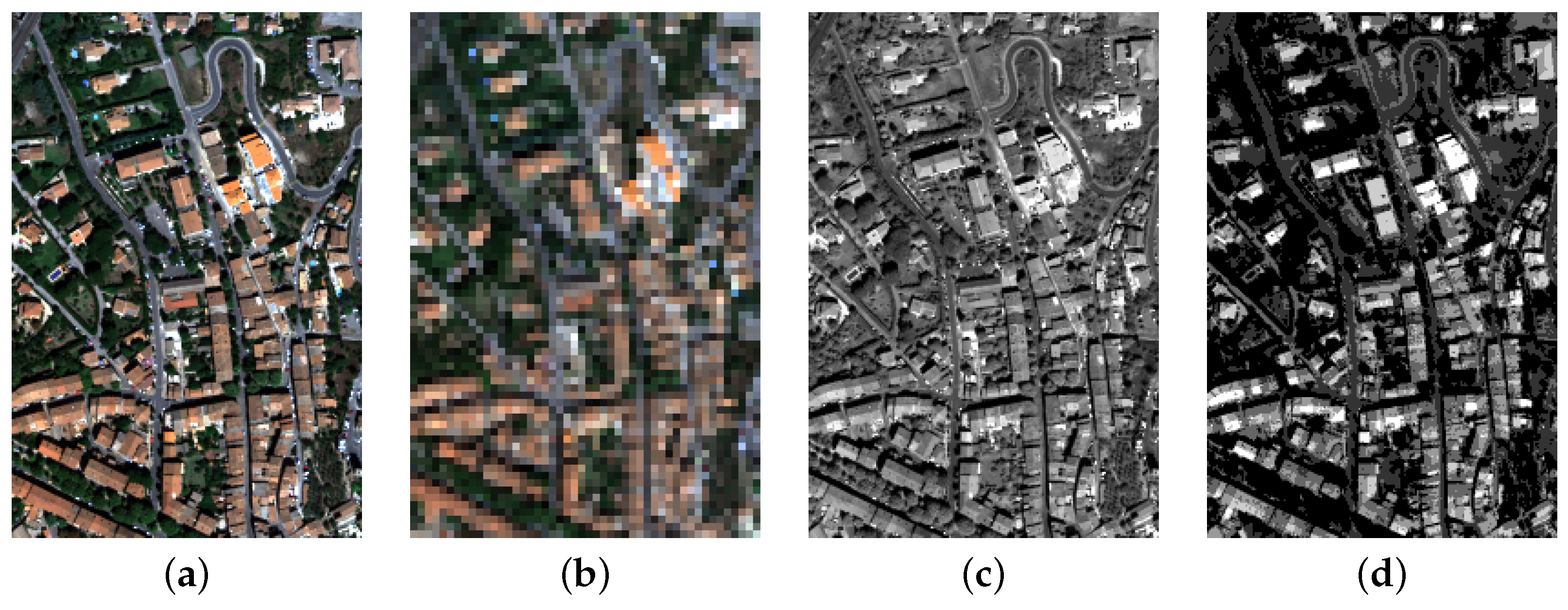

3.2. Dataset Description

- Peri-urban dataset (“Stadium”, Figure 5): The extracted REF image represents a part of a sports complex from Canjuers (France). It covers a small scene ( pixels), which includes a stadium, buildings, and vegetation. This dataset was used to test different spectral intervals for the second PAN channel, before performing complete analyses with the following two datasets.

- Peri-urban dataset (“Toulon”, Figure 6): The extracted REF image covers a part of the airport of Toulon-Hyères (France). This image, which contains pixels, is larger and more complex than “Stadium” as it includes buildings, natural (fields, wastelands, trees, and a park) and man-made (roads, car and aircraft parking areas, and a landing strip) soils, a stadium, and very reflective structures (greenhouses and cars).

- Urban dataset (“Gardanne”, Figure 7): The extracted REF image represents a part of Gardanne (France). It contains pixels and covers residential areas (suburbs with spaced houses and gardens) and a compact city centre.

4. Results

- Section 4.1: selecting the optimal SWIR band by testing the three intervals defined in Section 2.3.1;

- Section 4.2: selecting the optimal Gain-2P limit, by testing the two values defined in Section 2.3.2;

- Section 4.3: comparing the fusion methods using one PAN channel with their extended versions using two PAN channels, to demonstrate the benefit of this instrument concept;

- Section 4.4: comparing CONDOR-2P with Gain-2P, to determine the most appropriate method in the case of two PAN channels.

- Number of endmembers extracted per region: 2;

- Pure pixel selection neighbourhood: 2;

- Weighting of cost functions for the spatial reorganisation optimisation problem: for CONDOR (PAN criterion only, no HS criterion), for CONDOR-2P (see Section 2.2.3).

4.1. Sensitivity Study: SWIR Band of the Second PAN Image

4.2. Sensitivity Study: Gain-2P Limit

4.2.1. Visual Analysis

- Isolated artefacts, for example, on the tree edges (see red frame in Figure 8), with the limit;

- Spectral distortions (colours non-representative of the REF image) with the limit. These spectral distortions are due to the fact that the scale factor is not the same for all three displayed spectral bands ( and ) with the limit, contrary to the one. Therefore, this degradation only depends on representation choices.

4.2.2. Global and Refined Analyses

4.2.3. Local Analysis

- In the peri-urban case, the error values from outliers in the SWIR domain were relatively low (i.e., close to the ones in the VNIR domain) with the first scale factor, and the second scale factor increased these error values with the spectral band. It is therefore preferable to set the limit to .

- In the urban case, the error values from outliers in the SWIR spectral domain were much higher with the first scale factor, and the second one decreased them. The limit therefore provides better local results.

4.3. Method Performance Assessment with One and Two PAN Channels

4.3.1. Global and Refined Analyses

4.3.2. Land-Cover-Classification Maps

4.4. Comparison of Gain-2P and CONDOR-2P

4.4.1. Global and Refined Analyses

4.4.2. Local Visual Analysis

4.5. Synthesis

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| CC | Cross Correlation (quality criterion) |
| CONDOR | Combinatorial Optimisation for 2D ORganisation (fusion method) |
| ERGAS | Erreur Relative Globale Adimensionnelle de Synthèse (quality criterion) |
| GS | Gram–Schmidt (fusion method) |
| GSA | Gram–Schmidt adaptive (fusion method) |
| HS | HyperSpectral (image) |
| MILP | Mixed Integer Linear Programming (optimisation problem) |
| MNG | Mean Normalised Gap (quality criterion) |
| MS | MultiSpectral (image) |
| NG | Normalised Gap (quality criterion) |
| NMSE | Normalised Mean Square Error (quality criterion) |
| NRMSE | Normalised Root Mean Square Error (quality criterion) |
| PAN | PANchromatic (image) |
| PRISMA | PRecursore IperSpettrale della Missione Applicativa (instrument) |
| REF | REFerence (image) |
| RGB | Red-Green-Blue (colour composite) |
| RMSE | Root Mean Square Error (quality criterion) |
| SAM | Spectral Angle Mapper (quality criterion) |
| SLSTR | Sea and Land Surface Temperature Radiometer (instrument) |
| SOSU | Spatially Organized Spectral Unmixing (fusion method) |
| SWIR | Short-Wave InfraRed (1.0–2.5 μm) spectral domain |
| VIS | VISible (0.4–0.8 μm) spectral domain |
| VNIR | Visible and Near-InfraRed (0.4–1.0 μm) spectral domain |

References

- Shalaby, A.; Tateishi, R. Remote sensing and GIS for mapping and monitoring land cover and land-use changes in the Northwestern coastal zone of Egypt. Appl. Geogr. 2007, 27, 28–41.

- Miraglio, T.; Adeline, K.; Huesca, M.; Ustin, S.; Briottet, X. Monitoring LAI, chlorophylls, and carotenoids content of a woodland savanna using hyperspectral imagery and 3D radiative transfer modeling. Remote Sens. 2020, 12, 28.

- Hu, S.; Wang, L. Automated urban land-use classification with remote sensing. Int. J. Remote Sens. 2013, 34, 790–803.

- Donnay, J.P.; Barnsley, M.J.; Longley, P.A. Remote Sensing and Urban Analysis; CRC Press: Boca Raton, FL, USA, 2000.

- Sabins, F.F. Remote Sensing: Principles and Applications; Waveland Press: Long Grove, IL, USA, 2007.

- Benediktsson, J.A.; Ghamisi, P. Spectral-Spatial Classification of Hyperspectral Remote Sensing Images; Artech House: Norwood, MA, USA, 2015.

- Lier, P.; Valorge, C.; Briottet, X. Satellite Imagery from Acquisition Principle to Processing of Optical Images for Observing the Earth; CEPADUES Editions: Toulouse, France, 2012.

- Loncan, L.; De Almeida, L.B.; Bioucas-Dias, J.M.; Briottet, X.; Chanussot, J.; Dobigeon, N.; Fabre, S.; Liao, W.; Licciardi, G.A.; Simoes, M.; et al. Hyperspectral pansharpening: A review. IEEE Geosci. Remote Sens. Mag. 2015, 3, 27–46.

- Zhang, Y. Understanding image fusion. Photogramm. Eng. Remote Sens. 2004, 70, 657–661.

- Gleyzes, M.A.; Perret, L.; Kubik, P. Pleiades system architecture and main performances. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2012, 39, 537–542.

- Bicknell, W.E.; Digenis, C.J.; Forman, S.E.; Lencioni, D.E. EO-1 advanced land imager. In Earth Observing Systems IV; International Society for Optics and Photonics: Bellingham, WA, USA, 1999; Volume 3750, pp. 80–88.

- Porter, W.M.; Enmark, H.T. A system overview of the airborne visible/infrared imaging spectrometer (AVIRIS). In Imaging Spectroscopy I; International Society for Optics and Photonics: Bellingham, WA, USA, 1987; Volume 834, pp. 22–31.

- Cocks, T.; Jenssen, R.; Stewart, A.; Wilson, I.; Shields, T. The HyMap airborne hyperspectral sensor: The system, calibration and performance. In Proceedings of the 1st EARSeL workshop on Imaging Spectroscopy, Zurich, Switzerland, 6–8 October 1998; pp. 37–42.

- Briottet, X.; Feret, J.B.; Jacquemoud, S.; Lelong, C.; Rocchini, D.; Schaepman, M.E.; Sheeren, D.; Skidmore, A.; Somers, B.; Gomez, C.; et al. European Hyperspectral Explorer: HYPEX-2—A new space mission for vegetation biodiversity, bare continental surfaces, coastal zones and urban area ecosystems. In Proceedings of the 10th EARSeL SIG Imaging Spectroscopy Workshop, Zurich, Switzerland, 19–21 April 2017.

- Stuffler, T.; Kaufmann, C.; Hofer, S.; Förster, K.; Schreier, G.; Mueller, A.; Eckardt, A.; Bach, H.; Penné, B.; Benz, U.; et al. The EnMAP hyperspectral imager—An advanced optical payload for future applications in Earth observation programmes. Acta Astronaut. 2007, 61, 115–120.

- Pearlman, J.; Carman, S.; Segal, C.; Jarecke, P.; Clancy, P.; Browne, W. Overview of the Hyperion imaging spectrometer for the NASA EO-1 mission. IGARSS 2001. Scanning the Present and Resolving the Future. In Proceedings of the IEEE 2001 International Geoscience and Remote Sensing Symposium (Cat. No. 01CH37217), Sydney, NSW, Australia, 9–13 July 2001; IEEE: Piscataway, NJ, USA, 2001; Volume 7, pp. 3036–3038.

- Galeazzi, C.; Sacchetti, A.; Cisbani, A.; Babini, G. The PRISMA program. In Proceedings of the IGARSS 2008—2008 IEEE International Geoscience and Remote Sensing Symposium, Boston, MA, USA, 8–11 July 2008; IEEE: Piscataway, NJ, USA, 2008; Volume 4, pp. IV-105–IV-108.

- Michel, S.; Gamet, P.; Lefevre-Fonollosa, M.J. HYPXIM—A hyperspectral satellite defined for science, security and defence users. In Proceedings of the 2011 3rd Workshop on Hyperspectral Image and Signal Processing: Evolution in Remote Sensing (WHISPERS), Lisbon, Portugal, 6–9 June 2011; IEEE: Piscataway, NJ, USA, 2011; pp. 1–4.

- Briottet, X.; Marion, R.; Carrere, V.; Jacquemoud, S.; Chevrel, S.; Prastault, P.; D’oria, M.; Gilouppe, P.; Hosford, S.; Lubac, B.; et al. HYPXIM: A new hyperspectral sensor combining science/defence applications. In Proceedings of the 2011 3rd Workshop on Hyperspectral Image and Signal Processing: Evolution in Remote Sensing (WHISPERS), Lisbon, Portugal, 6–9 June 2011; IEEE: Piscataway, NJ, USA, 2011; pp. 1–4.

- Ungar, S.G.; Pearlman, J.S.; Mendenhall, J.A.; Reuter, D. Overview of the earth observing one (EO-1) mission. IEEE Trans. Geosci. Remote Sens. 2003, 41, 1149–1159.

- Cetin, M.; Musaoglu, N. Merging hyperspectral and panchromatic image data: Qualitative and quantitative analysis. Int. J. Remote Sens. 2009, 30, 1779–1804.

- Aiazzi, B.; Baronti, S.; Selva, M. Improving component substitution pansharpening through multivariate regression of MS + Pan data. IEEE Trans. Geosci. Remote Sens. 2007, 45, 3230–3239.

- Saroglu, E.; Bektas, F.; Musaoglu, N.; Goksel, C. Fusion of multisensor remote sensing data: Assessing the quality of resulting images. Int. Arch. Photogram. Remote Sens. Spatial. Inform. Sci. 2004, 35, 575–579.

- Vivone, G.; Alparone, L.; Chanussot, J.; Dalla Mura, M.; Garzelli, A.; Licciardi, G.A.; Restaino, R.; Wald, L. A critical comparison among pansharpening algorithms. IEEE Trans. Geosci. Remote Sens. 2014, 53, 2565–2586.

- Loncan, L. Fusion of Hyperspectral and Panchromatic Images with very High Spatial Resolution. Ph.D. Thesis, Université Grenoble Alpes, Grenoble, France, 2016.

- Aiazzi, B.; Alparone, L.; Baronti, S.; Garzelli, A.; Selva, M. MTF-tailored multiscale fusion of high-resolution MS and Pan imagery. Photogramm. Eng. Remote Sens. 2006, 72, 591–596.

- Vivone, G.; Restaino, R.; Dalla Mura, M.; Licciardi, G.; Chanussot, J. Contrast and error-based fusion schemes for multispectral image pansharpening. IEEE Geosci. Remote Sens. Lett. 2013, 11, 930–934.

- Dong, W.; Xiao, S.; Xue, X.; Qu, J. An improved hyperspectral pansharpening algorithm based on optimized injection model. IEEE Access 2019, 7, 16718–16729.

- Qu, J.; Li, Y.; Dong, W. A new hyperspectral pansharpening method based on guided filter. In Proceedings of the 2017 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Fort Worth, TX, USA, 23–28 July 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 5125–5128.

- Qu, J.; Li, Y.; Dong, W. Hyperspectral pansharpening with guided filter. IEEE Geosci. Remote Sens. Lett. 2017, 14, 2152–2156.

- Qu, J.; Li, Y.; Dong, W. Fusion of hyperspectral and panchromatic images using an average filter and a guided filter. J. Vis. Commun. Image Represent. 2018, 52, 151–158.

- Dong, W.; Xiao, S. An Adaptive Weighted Regression and Guided Filter Hybrid Method for Hyperspectral Pansharpening. TIIS 2019, 13, 327–346.

- Elad, M.; Aharon, M. Image denoising via sparse and redundant representations over learned dictionaries. IEEE Trans. Image Process. 2006, 15, 3736–3745.

- Wei, Q.; Bioucas-Dias, J.; Dobigeon, N.; Tourneret, J.Y. Hyperspectral and multispectral image fusion based on a sparse representation. IEEE Trans. Geosci. Remote Sens. 2015, 53, 3658–3668.

- Lin, H.; Zhang, A. Fusion of hyperspectral and panchromatic images using improved HySure method. In Proceedings of the 2017 2nd International Conference on Image, Vision and Computing (ICIVC), Chengdu, China, 2–4 June 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 489–493.

- Huang, Z.; Chen, Q.; Shen, Y.; Chen, Q.; Liu, X. An improved variational method for hyperspectral image pansharpening with the constraint of Spectral Difference Minimization. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, 42, 753–760.

- Yokoya, N.; Yairi, T.; Iwasaki, A. Coupled nonnegative matrix factorization unmixing for hyperspectral and multispectral data fusion. IEEE Trans. Geosci. Remote Sens. 2011, 50, 528–537.

- Karoui, M.S.; Deville, Y.; Benhalouche, F.Z.; Boukerch, I. Hypersharpening by joint-criterion nonnegative matrix factorization. IEEE Trans. Geosci. Remote Sens. 2016, 55, 1660–1670.

- Kaur, G.; Saini, K.S.; Singh, D.; Kaur, M. A Comprehensive Study on Computational Pansharpening Techniques for Remote Sensing Images. In Archives of Computational Methods in Engineering; Springer: Berlin/Heidelberg, Germany, 2021; pp. 1–18.

- Li, K.; Xie, W.; Du, Q.; Li, Y. DDLPS: Detail-based deep Laplacian pansharpening for hyperspectral imagery. IEEE Trans. Geosci. Remote Sens. 2019, 57, 8011–8025.

- Xie, W.; Lei, J.; Cui, Y.; Li, Y.; Du, Q. Hyperspectral pansharpening with deep priors. IEEE Trans. Neural Netw. Learn. Syst. 2019, 31, 1529–1543.

- Xie, W.; Cui, Y.; Li, Y.; Lei, J.; Du, Q.; Li, J. HPGAN: Hyperspectral Pansharpening Using 3-D Generative Adversarial Networks. IEEE Trans. Geosci. Remote Sens. 2021, 59, 463–477.

- Sun, L.; Wu, F.; He, C.; Zhan, T.; Liu, W.; Zhang, D. Weighted Collaborative Sparse and L1/2 Low-Rank Regularizations with Superpixel Segmentation for Hyperspectral Unmixing. IEEE Geosci. Remote Sens. Lett. 2020, 1–5.

- Constans, Y.; Fabre, S.; Seymour, M.; Crombez, V.; Briottet, X.; Deville, Y. Fusion of hyperspectral and panchromatic data by spectral unmixing in the reflective domain. ISPRS-Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, XLIII-B3-2020, 567–574.

- Constans, Y.; Fabre, S.; Carfantan, H.; Seymour, M.; Crombez, V.; Briottet, X.; Deville, Y. Fusion of panchromatic and hyperspectral images in the reflective domain by a combinatorial approach and application to urban landscape. In Proceedings of the 2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS, Brussels, Belgium, 11–16 July 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 2648–2651.

- Lu, D.; Hetrick, S.; Moran, E. Land cover classification in a complex urban-rural landscape with QuickBird imagery. Photogramm. Eng. Remote Sens. 2010, 76, 1159–1168.

- Liao, W.; Huang, X.; Van Coillie, F.; Gautama, S.; Pižurica, A.; Philips, W.; Liu, H.; Zhu, T.; Shimoni, M.; Moser, G.; et al. Processing of multiresolution thermal hyperspectral and digital color data: Outcome of the 2014 IEEE GRSS data fusion contest. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 2984–2996.

- Liao, W.; Huang, X.; Van Coillie, F.; Thoonen, G.; Pižurica, A.; Scheunders, P.; Philips, W. Two-stage fusion of thermal hyperspectral and visible RGB image by PCA and Guided filter. In Proceedings of the 2015 7th Workshop on Hyperspectral Image and Signal Processing: Evolution in Remote Sensing (WHISPERS), Tokyo, Japan, 2–5 June 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 1–4.

- Forrest, J.J.H. COIN Branch and Cut. COIN-OR. Available online: http://www.coin-or.org (accessed on 5 November 2021).

- Makhorin, A. GLPK (GNU Linear Programming Kit). 2008. Available online: http://www.gnu.org/s/glpk/glpk.html (accessed on 5 November 2021).

- Drusch, M.; Del Bello, U.; Carlier, S.; Colin, O.; Fernandez, V.; Gascon, F.; Hoersch, B.; Isola, C.; Laberinti, P.; Martimort, P.; et al. Sentinel-2: ESA’s optical high-resolution mission for GMES operational services. Remote Sens. Environ. 2012, 120, 25–36.

- Donlon, C.; Berruti, B.; Buongiorno, A.; Ferreira, M.H.; Féménias, P.; Frerick, J.; Goryl, P.; Klein, U.; Laur, H.; Mavrocordatos, C.; et al. The global monitoring for environment and security (GMES) sentinel-3 mission. Remote Sens. Environ. 2012, 120, 37–57.

- Wald, L.; Ranchin, T.; Mangolini, M. Fusion of satellite images of different spatial resolutions: Assessing the quality of resulting images. Photogramm. Eng. Remote Sens. 1997, 63, 691–699.

- Pei, W.; Wang, G.; Yu, X. Performance evaluation of different references based image fusion quality metrics for quality assessment of remote sensing Image fusion. In Proceedings of the 2012 IEEE International Geoscience and Remote Sensing Symposium, Munich, Germany, 22–27 July 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 2280–2283.

- Canny, J. A computational approach to edge detection. IEEE Trans. Pattern Anal. Mach. Intell. 1986, 6, 679–698.

- Nagao, M.; Matsuyama, T.; Ikeda, Y. Region extraction and shape analysis in aerial photographs. Comput. Graph. Image Process. 1979, 10, 195–223.

- Hayslett, H.T. Statistics; Elsevier: Amsterdam, The Netherlands, 2014.

- Williamson, D.F.; Parker, R.A.; Kendrick, J.S. The box plot: A simple visual method to interpret data. Ann. Intern. Med. 1989, 110, 916–921.

- Chutia, D.; Bhattacharyya, D.; Sarma, K.K.; Kalita, R.; Sudhakar, S. Hyperspectral remote sensing classifications: A perspective survey. Trans. GIS 2016, 20, 463–490.

- Belgiu, M.; Drăguţ, L. Random forest in remote sensing: A review of applications and future directions. ISPRS J. Photogramm. Remote Sens. 2016, 114, 24–31.

- Browne, M.W. Cross-validation methods. J. Math. Psychol. 2000, 44, 108–132.

- Purushotham, S.; Tripathy, B. Evaluation of classifier models using stratified tenfold cross validation techniques. In International Conference on Computing and Communication Systems; Springer: Berlin/Heidelberg, Germany, 2011; pp. 680–690.

- Stehman, S.V. Selecting and interpreting measures of thematic classification accuracy. Remote Sens. Environ. 1997, 62, 77–89.

- Rousset-Rouviere, L.; Coudrain, C.; Fabre, S.; Baarstad, I.; Fridman, A.; Løke, T.; Blaaberg, S.; Skauli, T. Sysiphe, an airborne hyperspectral imaging system for the VNIR-SWIR-MWIR-LWIR region. In Proceedings of the 7th EARSeL Workshop on Imaging Spectroscopy, Edinburgh, UK, 11–13 April 2011; pp. 1–12.

- Rousset-Rouviere, L.; Coudrain, C.; Fabre, S.; Ferrec, Y.; Poutier, L.; Viallefont, F.; Rivière, T.; Ceamanos, X.; Loke, T.; Fridman, A.; et al. SYSIPHE, an airborne hyperspectral imaging system from visible to thermal infrared. Results from the 2015 airborne campaign. In Proceedings of the 10th EARSEL SIG Imaging Spectroscopy, Zurich, Switzerland, 19–21 April 2017.

- Comaniciu, D.; Meer, P. Mean shift: A robust approach toward feature space analysis. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 603–619.

- Madani, A.A. Selection of the optimum Landsat Thematic Mapper bands for automatic lineaments extraction, Wadi Natash area, south eastern desert, Egypt. Asian J. Geoinform. 2001, 3, 71–76.

- Yuan, Y.; Zhang, L.; Su, L.; Ye, Z. Study on shortwave infrared multispectral horizontal imaging performance under haze weather condition. In AOPC 2019: Optical Spectroscopy and Imaging; International Society for Optics and Photonics: Bellingham, WA, USA, 2019; Volume 11337, p. 113370M.

- HySpex SWIR-640. Available online: https://www.hyspex.com/hyspex-products/hyspex-classic/hyspex-swir-640/ (accessed on 27 September 2021).

- Aiazzi, B.; Alparone, L.; Baronti, S.; Garzelli, A.; Selva, M. Pansharpening of hyperspectral images: A critical analysis of requirements and assessment on simulated PRISMA data. In Image and Signal Processing for Remote Sensing XIX; International Society for Optics and Photonics: Bellingham, WA, USA, 2013; Volume 8892, p. 889203.

| SWIR II PAN Domain (μm) | Method | Spectral Domain | MNG (%) | SAM (°) | RMSE | ERGAS | CC |
|---|---|---|---|---|---|---|---|
| (2.0–2.5) | Gain-2P | VNIR | 4.2 | 2.5 | 4.9 | 2.0 | 0.96 |
| | | SWIR | 3.2 | 0.9 | 1.9 | 1.3 | 0.96 |
| | | Reflective | 3.7 | 2.6 | 3.8 | 1.7 | 0.96 |
| | CONDOR-2P | VNIR | 3.7 | 2.2 | 4.7 | 1.9 | 0.96 |
| | | SWIR | 3.0 | 0.9 | 2.0 | 1.3 | 0.96 |
| | | Reflective | 3.4 | 2.3 | 3.7 | 1.6 | 0.96 |
| (2.025–2.35) | Gain-2P | VNIR | 4.2 | 2.5 | 4.9 | 2.0 | 0.96 |
| | | SWIR | 3.2 | 0.9 | 1.9 | 1.3 | 0.96 |
| | | Reflective | 3.7 | 2.6 | 3.8 | 1.7 | 0.96 |
| | CONDOR-2P | VNIR | 3.7 | 2.2 | 4.7 | 1.9 | 0.96 |
| | | SWIR | 3.0 | 0.9 | 1.9 | 1.3 | 0.96 |
| | | Reflective | 3.3 | 2.3 | 3.6 | 1.6 | 0.96 |
| (2.2–2.3) | Gain-2P | VNIR | 4.2 | 2.5 | 4.9 | 2.0 | 0.96 |
| | | SWIR | 3.1 | 0.9 | 1.9 | 1.3 | 0.96 |
| | | Reflective | 3.7 | 2.6 | 3.8 | 1.7 | 0.96 |
| | CONDOR-2P | VNIR | 3.7 | 2.2 | 4.7 | 1.9 | 0.96 |
| | | SWIR | 2.9 | 0.9 | 1.9 | 1.3 | 0.96 |
| | | Reflective | 3.3 | 2.3 | 3.7 | 1.6 | 0.96 |

| PAN Channels | Method | Spectral Domain | “Toulon” MNG (%) | “Toulon” SAM (°) | “Toulon” RMSE | “Gardanne” MNG (%) | “Gardanne” SAM (°) | “Gardanne” RMSE |
|---|---|---|---|---|---|---|---|---|
| 1 PAN channel | Gain | VNIR | 6.8 | 3.7 | 7.2 | 13.2 | 6.2 | 9.1 |
| | | SWIR | 11.1 | 1.7 | 3.6 | 19.6 | 2.8 | 5.3 |
| | | Reflective | 9.0 | 3.8 | 5.7 | 16.4 | 6.5 | 7.5 |
| | CONDOR | VNIR | 8.6 | 4.5 | 8.6 | 17.0 | 7.8 | 11.7 |
| | | SWIR | 13.9 | 2.2 | 4.0 | 24.1 | 3.5 | 6.3 |
| | | Reflective | 11.2 | 4.7 | 6.7 | 20.5 | 8.1 | 9.4 |
| 2 PAN channels, limit | Gain-2P | VNIR | 6.9 | 3.7 | 7.3 | 13.3 | 6.3 | 9.2 |
| | | SWIR | 7.5 | 1.7 | 6.0 | 12.8 | 2.8 | 8.6 |
| | | Reflective | 7.2 | 4.4 | 6.6 | 13.0 | 7.4 | 8.9 |
| | CONDOR-2P | VNIR | 6.5 | 3.5 | 6.7 | 13.0 | 6.2 | 8.7 |
| | | SWIR | 7.0 | 1.6 | 5.2 | 12.2 | 2.6 | 7.6 |
| | | Reflective | 6.8 | 4.1 | 6.0 | 12.5 | 7.1 | 8.1 |
| 2 PAN channels, limit | Gain-2P | VNIR | 6.8 | 3.7 | 7.2 | 13.2 | 6.2 | 9.1 |
| | | SWIR | 5.8 | 1.8 | 3.4 | 9.7 | 3.1 | 4.6 |
| | | Reflective | 6.3 | 3.8 | 5.7 | 11.5 | 6.4 | 7.2 |
| | CONDOR-2P | VNIR | 6.5 | 3.5 | 6.6 | 12.9 | 6.1 | 8.6 |
| | | SWIR | 5.5 | 1.7 | 3.2 | 9.6 | 2.9 | 4.6 |
| | | Reflective | 6.0 | 3.6 | 5.2 | 11.3 | 6.4 | 6.9 |

| PAN Channels | Method | Spectral Domain | “Toulon” MNG (%) | “Toulon” SAM (°) | “Toulon” RMSE | “Gardanne” MNG (%) | “Gardanne” SAM (°) | “Gardanne” RMSE |
|---|---|---|---|---|---|---|---|---|
| 1 PAN channel | Gain | VNIR | 10.1 | 5.4 | 9.7 | 16.4 | 7.8 | 10.7 |
| | | SWIR | 16.7 | 2.4 | 4.7 | 23.1 | 3.4 | 6.4 |
| | | Reflective | 13.4 | 5.7 | 7.7 | 19.7 | 8.0 | 8.8 |
| | CONDOR | VNIR | 11.9 | 6.3 | 11.0 | 19.9 | 9.3 | 13.0 |
| | | SWIR | 20.1 | 3.0 | 5.1 | 27.4 | 4.1 | 7.3 |
| | | Reflective | 16.0 | 6.5 | 8.6 | 23.7 | 9.5 | 10.6 |
| 2 PAN channels, limit | Gain-2P | VNIR | 10.2 | 5.4 | 9.8 | 16.5 | 7.8 | 10.8 |
| | | SWIR | 11.0 | 2.4 | 7.8 | 15.5 | 3.4 | 10.0 |
| | | Reflective | 10.5 | 6.4 | 8.8 | 16.0 | 9.0 | 10.3 |
| | CONDOR-2P | VNIR | 9.6 | 5.1 | 8.9 | 15.9 | 7.6 | 10.0 |
| | | SWIR | 10.3 | 2.2 | 6.8 | 14.8 | 3.2 | 8.7 |
| | | Reflective | 9.9 | 6.0 | 7.9 | 15.3 | 8.6 | 9.4 |
| 2 PAN channels, 1.35 μm limit | Gain-2P | VNIR | 10.1 | 5.4 | 9.7 | 16.4 | 7.8 | 10.7 |
| | | SWIR | 8.8 | 2.8 | 4.6 | 11.8 | 3.6 | 5.4 |
| | | Reflective | 9.4 | 5.6 | 7.6 | 14.1 | 8.0 | 8.5 |
| | CONDOR-2P | VNIR | 9.6 | 5.1 | 8.8 | 15.8 | 7.5 | 10.0 |
| | | SWIR | 8.2 | 2.5 | 4.3 | 11.5 | 3.4 | 5.4 |
| | | Reflective | 8.9 | 5.3 | 7.0 | 13.7 | 7.8 | 8.0 |

| Pixel Group | Proportion | Gain | CONDOR | Gain-2P | CONDOR-2P |
|---|---|---|---|---|---|
| Whole images | 100% | 85 | 82 | 91 | 92 |
| Sunlit pixels | 96% | 85 | 82 | 91 | 92 |
| Shadowed pixels | 4% | 89 | 87 | 91 | 92 |
| HS pixels with variance < 5 | 64% | 91 | 88 | 95 | 95 |
| HS pixels with variance | 14% | 77 | 73 | 87 | 89 |
| HS pixels with variance | 7% | 72 | 71 | 84 | 85 |
| HS pixels with variance > 15 | 16% | 74 | 71 | 84 | 85 |
| Pixels in transition areas | 50% | 76 | 73 | 86 | 87 |
| Pixels out of transition areas | 50% | 94 | 91 | 97 | 97 |

| Pixel Group | Proportion | Gain | CONDOR | Gain-2P | CONDOR-2P |
|---|---|---|---|---|---|
| Whole images | 100% | 78 | 73 | 87 | 86 |
| Sunlit pixels | 92% | 77 | 71 | 86 | 86 |
| Shadowed pixels | 8% | 94 | 94 | 95 | 95 |
| HS pixels with variance < 5 | 30% | 84 | 77 | 90 | 90 |
| HS pixels with variance | 23% | 78 | 72 | 87 | 87 |
| HS pixels with variance | 15% | 76 | 70 | 86 | 86 |
| HS pixels with variance > 15 | 32% | 74 | 71 | 83 | 83 |
| Pixels in transition areas | 62% | 74 | 70 | 84 | 84 |
| Pixels out of transition areas | 38% | 85 | 77 | 91 | 90 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Constans, Y.; Fabre, S.; Seymour, M.; Crombez, V.; Deville, Y.; Briottet, X. Hyperspectral Pansharpening in the Reflective Domain with a Second Panchromatic Channel in the SWIR II Spectral Domain. Remote Sens. 2022, 14, 113. https://doi.org/10.3390/rs14010113
