Shadow Removal from UAV Images Based on Color and Texture Equalization Compensation of Local Homogeneous Regions

Abstract

1. Introduction

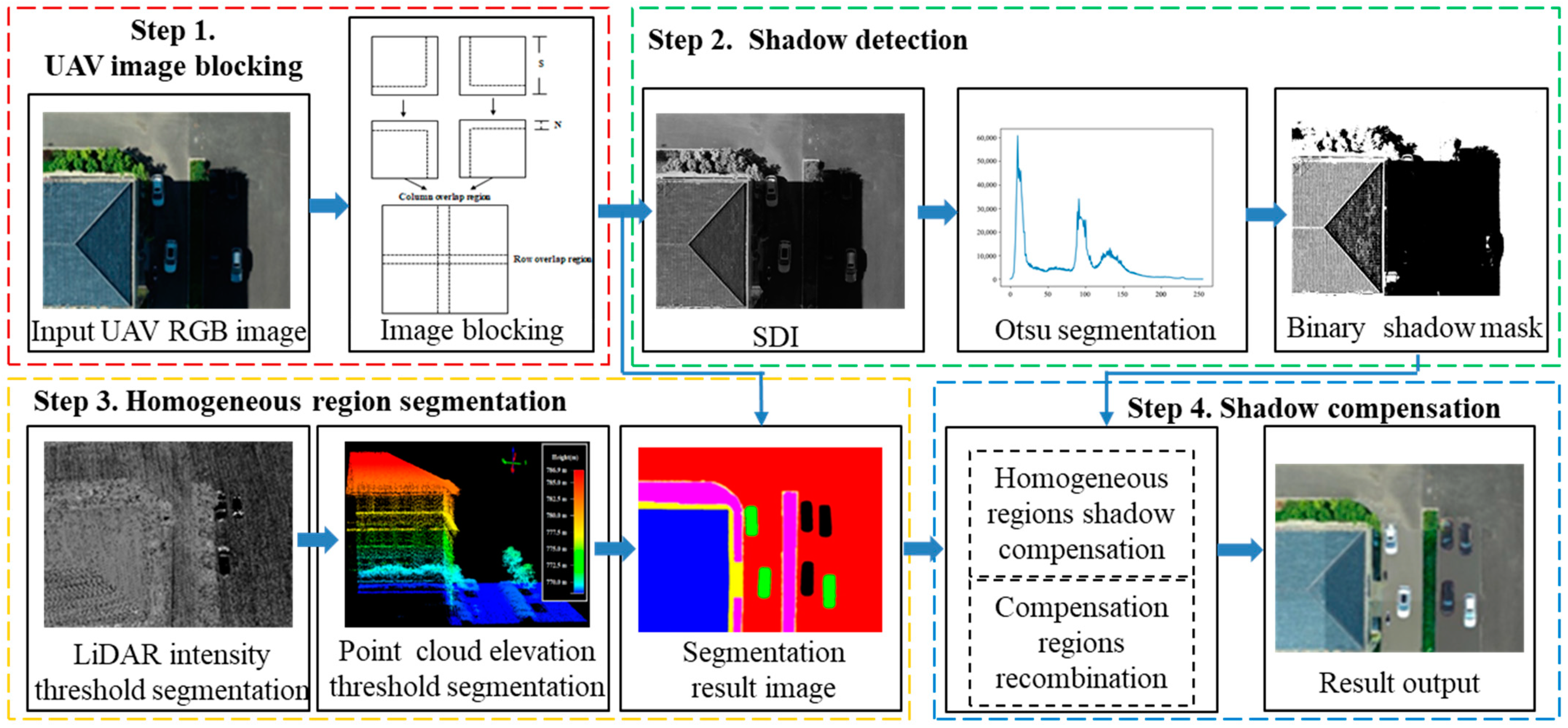

2. Methodology

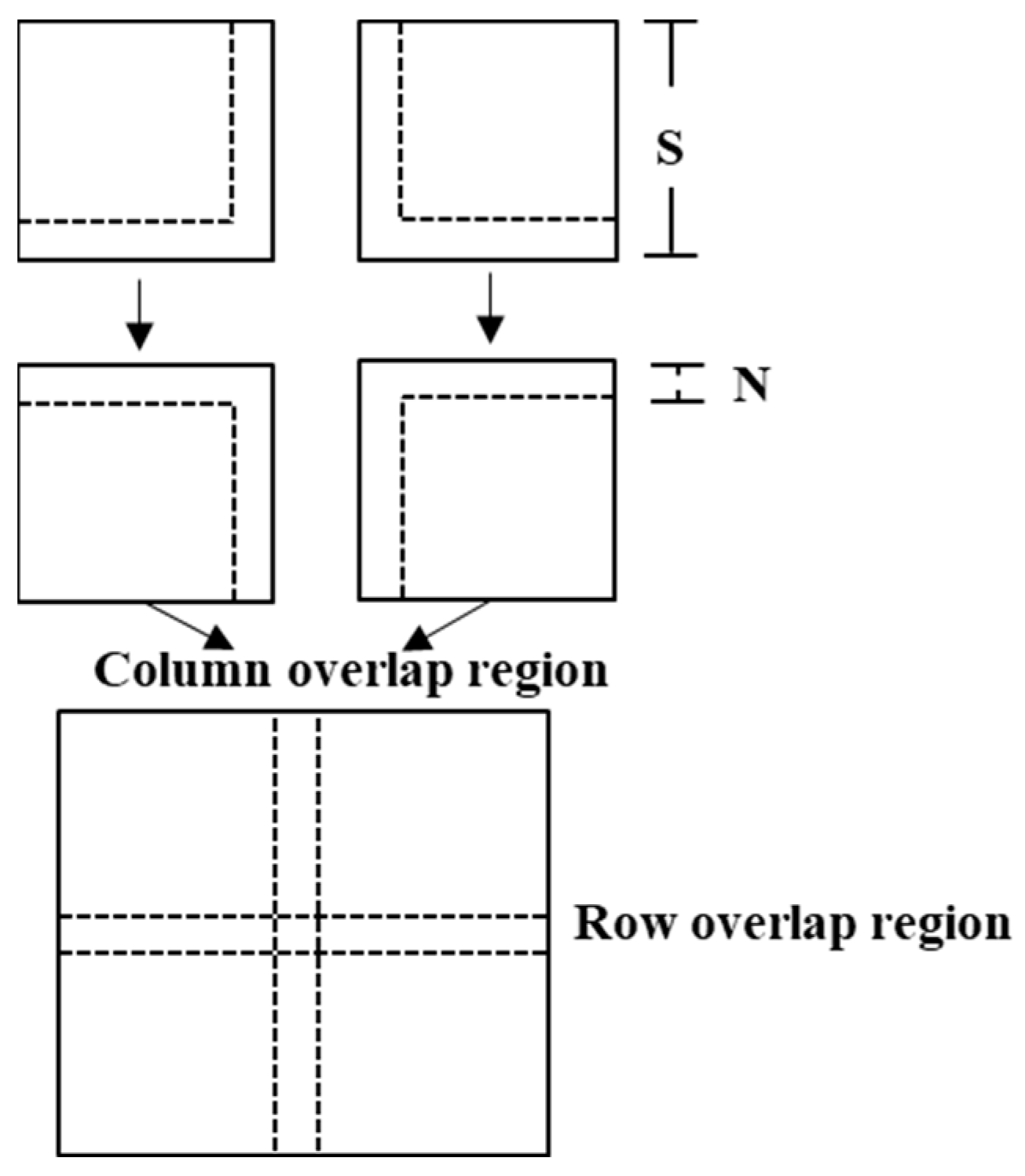

2.1. UAV Image Blocking

2.2. Shadow Detection

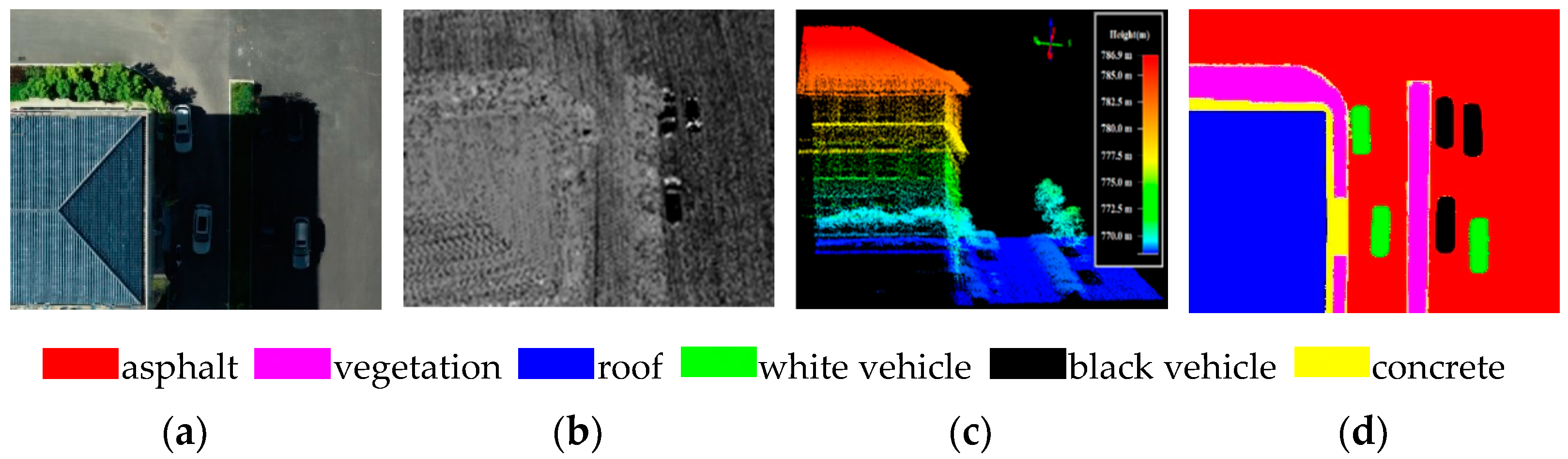

2.3. Homogeneous Region Segmentation

- (1) Enhance the contrast of the intensity data using a histogram equalization method.

- (2) Calculate the statistical characteristics of the LiDAR intensity and elevation for each image region (each block).

- (3) Select the homogeneous regions using threshold segmentation.
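The three steps above can be sketched as follows. This is a minimal illustration rather than the authors' implementation: it assumes the LiDAR intensity has been rasterized to an 8-bit grid aligned with an elevation raster, that the "statistical characteristics" are the per-block mean and standard deviation, and that a block counts as homogeneous when both standard deviations fall below thresholds. The function names and the parameters `block`, `max_int_std`, and `max_elev_std` are hypothetical.

```python
import numpy as np

def equalize_hist(intensity, levels=256):
    """Step 1: histogram-equalize an 8-bit intensity raster."""
    hist = np.bincount(intensity.ravel(), minlength=levels)
    cdf = hist.cumsum()
    cdf_min = cdf[cdf > 0][0]              # CDF value at the lowest occupied level
    denom = max(cdf[-1] - cdf_min, 1)      # avoid division by zero on flat rasters
    lut = np.clip(np.round((cdf - cdf_min) / denom * (levels - 1)), 0, levels - 1)
    return lut.astype(np.uint8)[intensity]

def block_stats(raster, block):
    """Step 2: mean and standard deviation of each block x block tile."""
    h, w = raster.shape
    rows, cols = h // block, w // block
    tiles = raster[:rows * block, :cols * block].astype(np.float64)
    tiles = tiles.reshape(rows, block, cols, block).swapaxes(1, 2)
    return tiles.mean(axis=(2, 3)), tiles.std(axis=(2, 3))

def homogeneous_mask(intensity, elevation, block, max_int_std, max_elev_std):
    """Step 3: flag blocks whose intensity and elevation both vary little.

    ASSUMPTION: homogeneity is judged by per-block standard deviation;
    the thresholds are illustrative, not values from the paper.
    """
    eq = equalize_hist(intensity)
    _, int_std = block_stats(eq, block)
    _, elev_std = block_stats(elevation, block)
    return (int_std <= max_int_std) & (elev_std <= max_elev_std)
```

The block-wise reshape avoids an explicit Python loop over tiles; trailing rows and columns that do not fill a whole block are ignored in this sketch.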

2.4. Shadow Compensation

Algorithm 1. Shadow compensation algorithm

Input: UAV RGB image; the number of homogeneous regions, n; the threshold, t.

Output: the result of shadow compensation.

1. All shadow regions are represented by S and all non-shadow regions are represented by U;

2. Find the non-shadow region corresponding to each homogeneous shadow region;

3. for (j = 1; j ≤ m; j++) do

4. for (i = 1; i ≤ n; i++) do

5. compute the average value of the shadow region in the q-band;

6. compute the average value of the corresponding non-shadow region in the q-band;

7. compute ent; // the entropy value

8. if ent meets the threshold condition on t then

9. compute the ratio of direct light to ambient light;

10. apply shadow compensation in the q-band;

11. else

12. compute the difference between the non-shadow and shadow regions;

13. apply shadow compensation in the q-band;

14. end if

15. store the shadow compensation result of the region;

16. end for

17. end for

18. return the shadow compensation result;
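The branch structure of Algorithm 1 can be illustrated with a short sketch. The exact expressions of the algorithm are not recoverable from the text above, so everything specific here is an assumption: the entropy is taken as the Shannon entropy of the shadow region's grey-level histogram, the low-entropy branch rescales the shadow pixels by the ratio of the two band means (a stand-in for the direct-to-ambient light ratio), the other branch adds the difference of the band means, and the comparison direction `ent < t` is a guess. The function names are hypothetical.

```python
import numpy as np

def region_entropy(values, bins=256):
    """Shannon entropy (in bits) of the grey-level histogram of a region."""
    hist, _ = np.histogram(values, bins=bins, range=(0, 256))
    p = hist[hist > 0] / hist.sum()
    return float(-(p * np.log2(p)).sum())

def compensate_region(image, shadow_mask, lit_mask, t):
    """Compensate one homogeneous shadow region, band by band.

    image: H x W x 3 array with values in [0, 255].
    shadow_mask, lit_mask: boolean H x W masks of the shadow region and of
    its corresponding non-shadow region (both assumed non-empty).
    """
    out = image.astype(np.float64).copy()
    for q in range(out.shape[2]):
        band = out[..., q]                      # view into `out`
        mean_s = band[shadow_mask].mean()       # average of the shadow region
        mean_u = band[lit_mask].mean()          # average of the non-shadow region
        ent = region_entropy(band[shadow_mask])
        if ent < t:                             # ASSUMPTION: direction of the test
            # ASSUMPTION: ratio-based (multiplicative) compensation
            band[shadow_mask] *= mean_u / max(mean_s, 1e-6)
        else:
            # ASSUMPTION: difference-based (additive) compensation
            band[shadow_mask] += mean_u - mean_s
        np.clip(band, 0, 255, out=band)
    return out
```

Looping this function over every homogeneous region in every image block would mirror the two nested loops of the algorithm. In this sketch, the multiplicative branch scales whatever variation the shadow region has together with its mean, while the additive branch shifts brightness and leaves local differences unchanged.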

3. Experiments

3.1. Experiment Data

3.2. Experiment Design

3.2.1. Experiment Design of Shadow Detection

3.2.2. Experiment Design of Shadow Compensation

- (1) Color difference (CD)

- (2) Shadow standard deviation index (SSDI)

- (3) Gradient similarity (GS)

3.3. Experimental Result

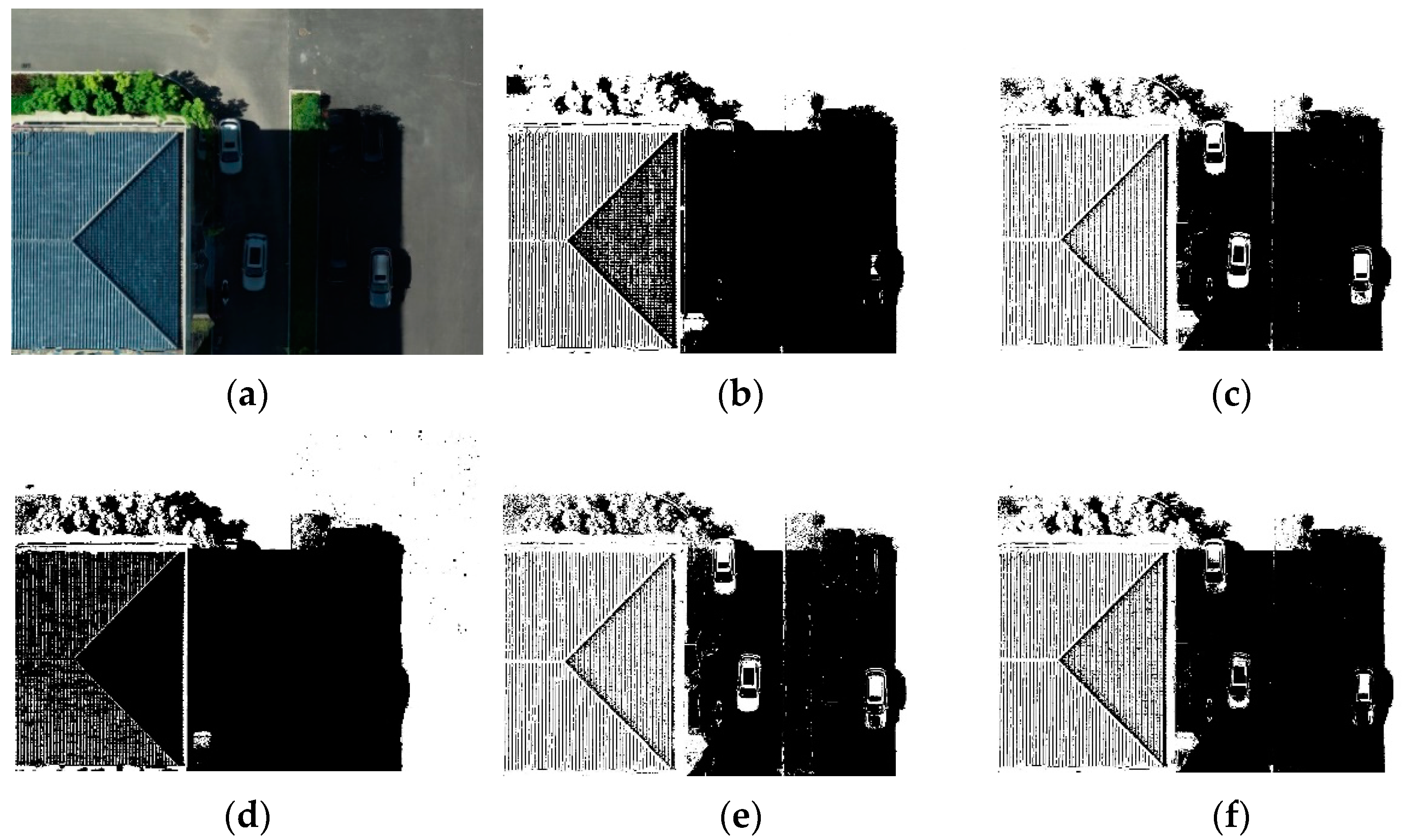

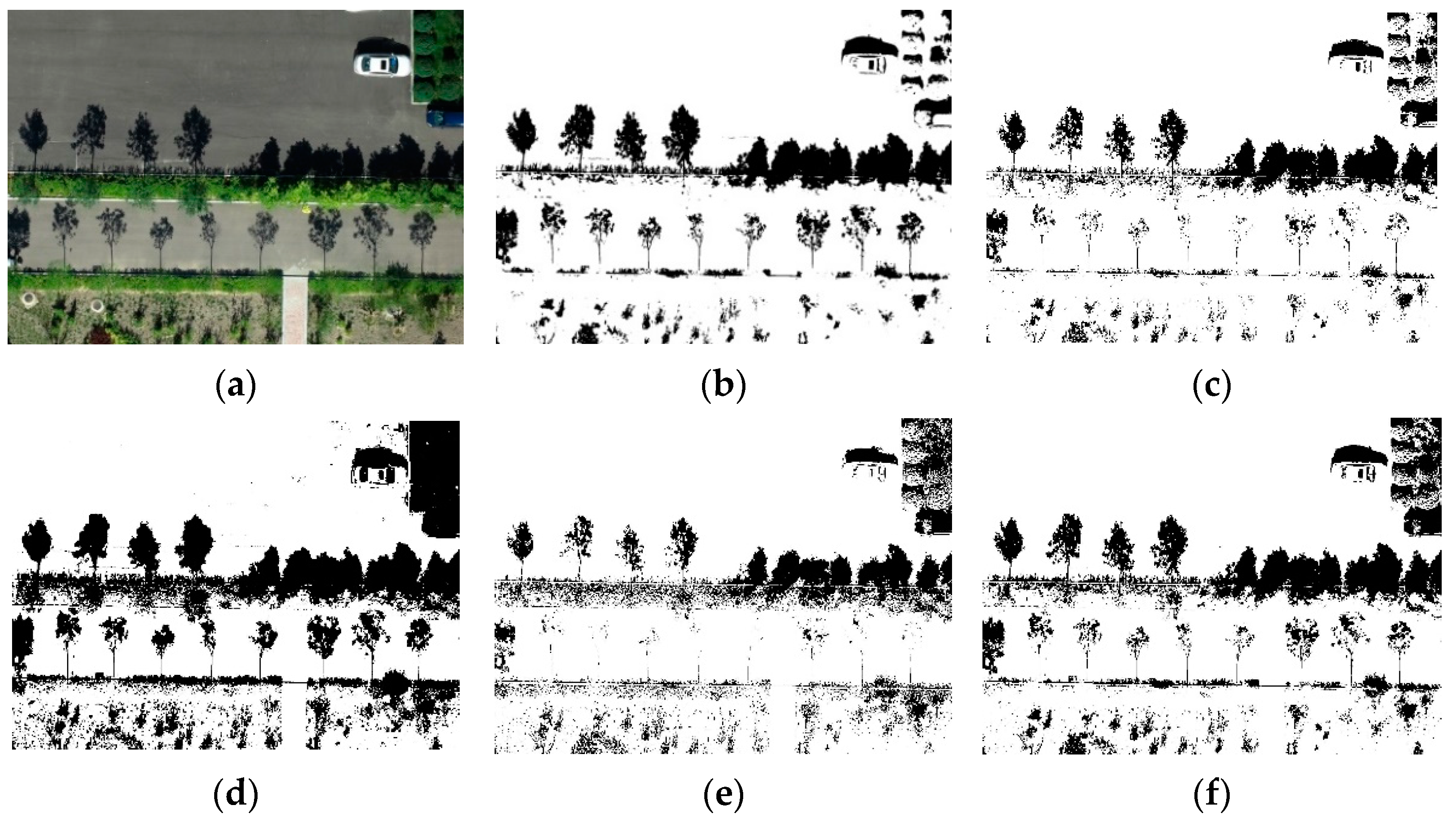

3.3.1. Experiment Result of Shadow Detection

3.3.2. Experiment Result of Shadow Compensation

4. Discussion

4.1. Sensitivity of Parameter Settings

4.2. Analysis of Experimental Results of Shadow Detection

4.2.1. Subjective Evaluation and Discussion

4.2.2. Objective Evaluation and Discussion

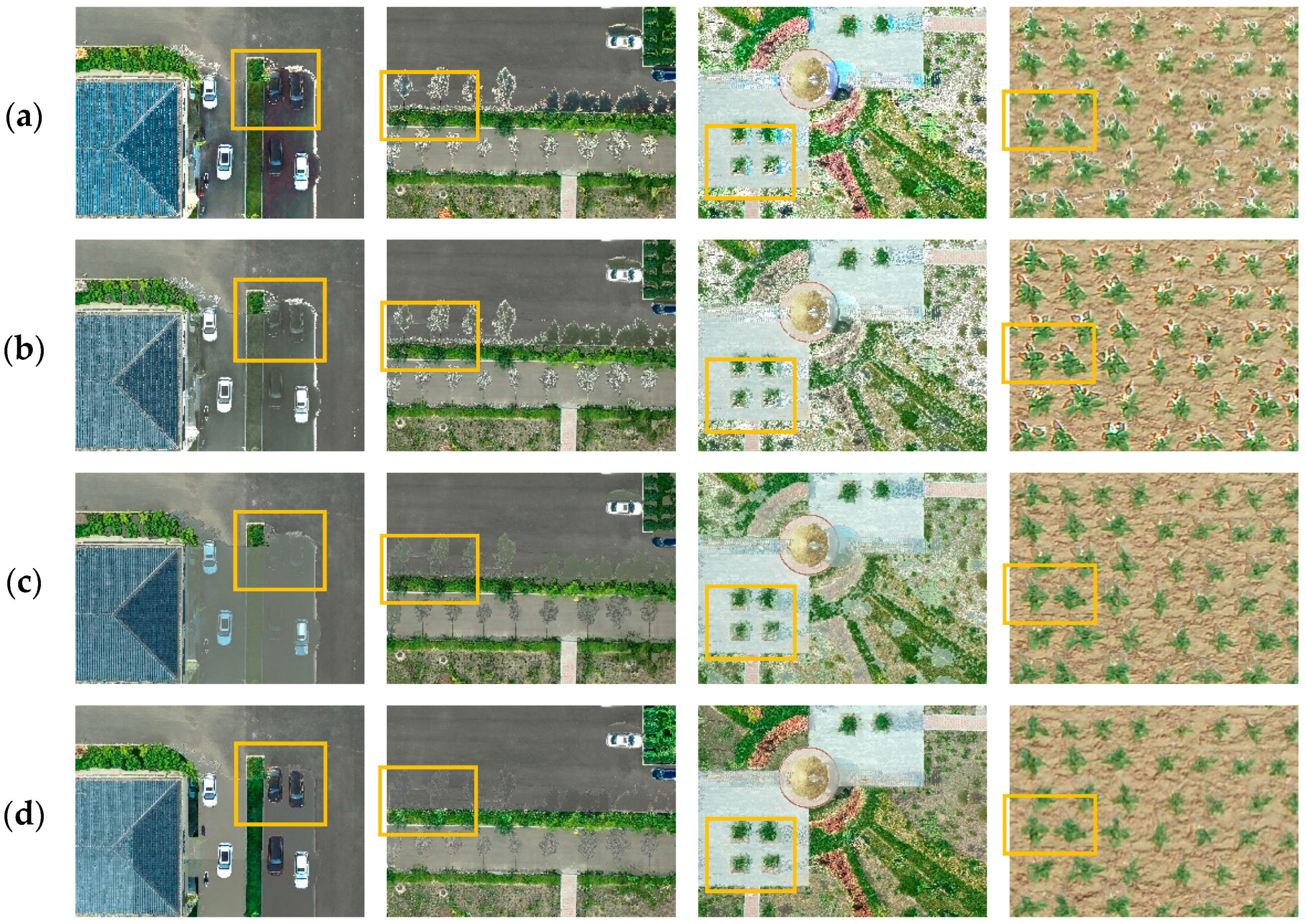

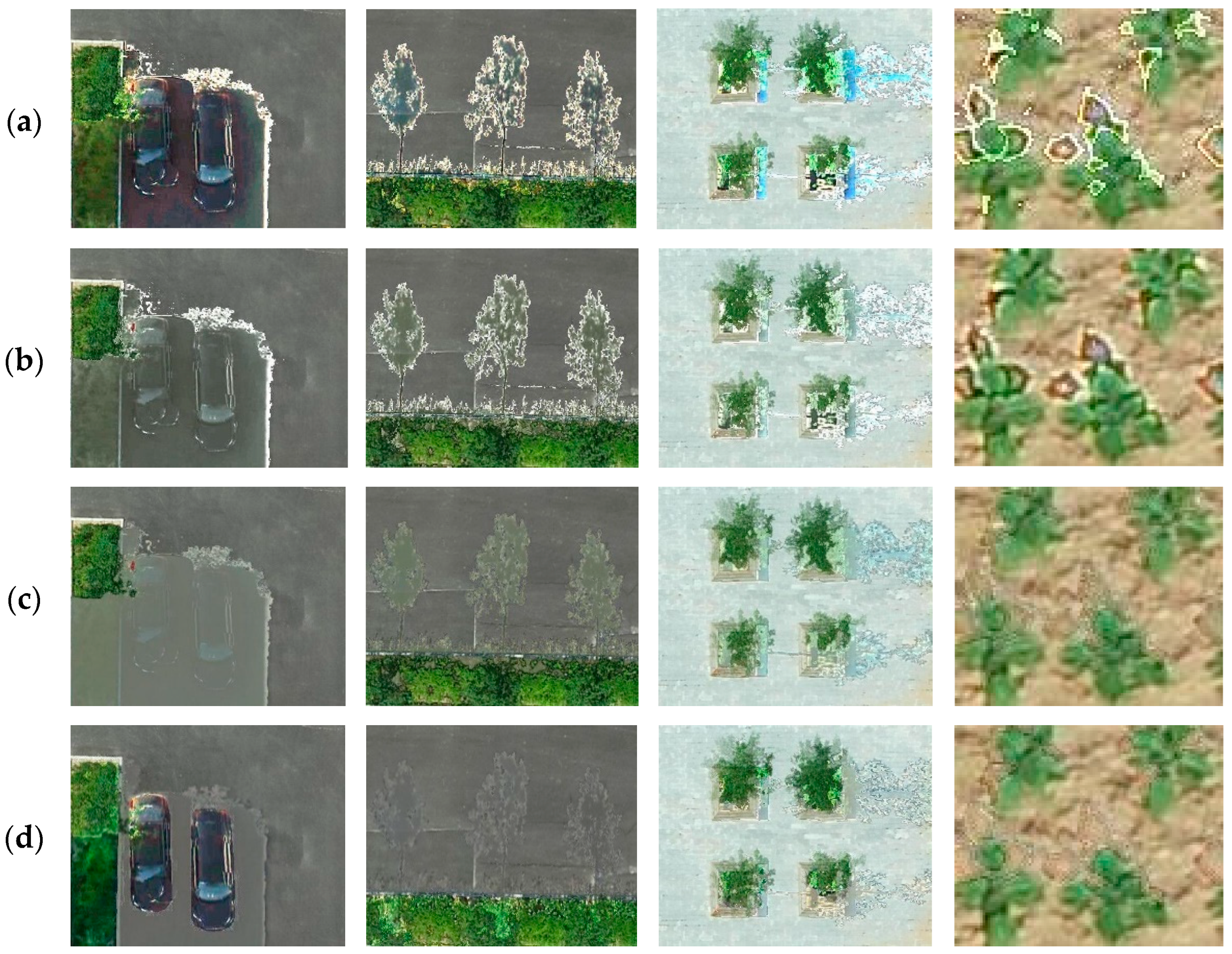

4.3. Analysis of Experimental Results of Shadow Compensation

4.3.1. Subjective Evaluation and Discussion

4.3.2. Objective Evaluation and Discussion

5. Conclusions

- (1) The new shadow detection index, defined on the R, G, and B bands of a UAV image, effectively enhances shadows and supports accurate shadow extraction from UAV RGB remote sensing images. The average overall accuracy of shadow detection is 98.23%, and the average F1 score is 95.84%.

- (2) The proposed method performed well in scenes containing complex surface features and a wide variety of objects. Visually, the color and texture details of the shadow regions are effectively compensated, the shadow borders are not obvious, and the compensated images are highly consistent with the real scenes. Quantitatively, the average color difference is 1.891, the average shadow standard deviation index is 15.419, and the average gradient similarity is 0.726. These are the best results among the compared methods, which demonstrates the effectiveness of the proposed method.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Shadow detection accuracy of the compared indices in the two test cases.

| Index | Ps (%) | Pn (%) | Us (%) | Un (%) | OA (%) | F1 (%) |
|---|---|---|---|---|---|---|
| SDI | 98.09 | 99.50 | 99.22 | 98.75 | 98.94 | 98.65 |
| TYCbCr | 81.40 | 99.60 | 99.40 | 87.00 | 91.51 | 89.50 |
| THSV | 99.69 | 79.28 | 79.38 | 99.68 | 88.35 | 88.38 |
| NSVDI | 80.33 | 98.54 | 97.77 | 86.23 | 90.45 | 88.20 |
| SI | 86.84 | 99.47 | 99.24 | 90.43 | 93.85 | 92.62 |

| Index | Ps (%) | Pn (%) | Us (%) | Un (%) | OA (%) | F1 (%) |
|---|---|---|---|---|---|---|
| SDI | 94.00 | 98.27 | 92.08 | 98.71 | 97.51 | 93.03 |
| TYCbCr | 75.51 | 98.45 | 90.16 | 95.53 | 94.82 | 82.19 |
| THSV | 98.13 | 84.62 | 54.56 | 99.59 | 86.76 | 70.13 |
| NSVDI | 63.13 | 93.82 | 65.28 | 93.12 | 88.96 | 64.43 |
| SI | 90.55 | 95.73 | 79.98 | 98.18 | 94.91 | 84.94 |
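As a quick consistency check on the SDI rows, the short sketch below recomputes the F1 score from the shadow-class accuracies and averages the two test cases. It assumes that Ps and Us denote the producer's and user's accuracy of the shadow class and that F1 is their harmonic mean. That reading is an interpretation of the column headers, not a definition stated here. The function name `f1_score` is hypothetical.

```python
def f1_score(producer_acc, user_acc):
    """Harmonic mean of producer's and user's accuracy (both in percent)."""
    return 2.0 * producer_acc * user_acc / (producer_acc + user_acc)

# SDI rows from the two shadow detection tables: (Ps, Us, OA, reported F1).
sdi_rows = [
    (98.09, 99.22, 98.94, 98.65),
    (94.00, 92.08, 97.51, 93.03),
]

# Recompute F1 for each test case from Ps and Us.
recomputed_f1 = [f1_score(ps, us) for ps, us, _, _ in sdi_rows]

# Average overall accuracy and average reported F1 across the two cases.
avg_oa = sum(oa for _, _, oa, _ in sdi_rows) / len(sdi_rows)
avg_f1 = sum(f1 for _, _, _, f1 in sdi_rows) / len(sdi_rows)

for (ps, us, _, reported), value in zip(sdi_rows, recomputed_f1):
    print(f"Ps={ps:.2f} Us={us:.2f} reported F1={reported:.2f} recomputed F1={value:.3f}")
print(f"average OA={avg_oa:.3f} average F1={avg_f1:.3f}")
```

Under this assumption, the recomputed values agree with the tabulated SDI F1 scores to within rounding, and the two-case averages agree with the 98.23% overall accuracy and 95.84% F1 score reported in the conclusions.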

Color difference (CD) of the compared shadow compensation methods.

| Case | Illumination Correction | Color Transfer | Shadow Synthesis | Proposed Work |
|---|---|---|---|---|
| 1 | 4.863 | 4.352 | 2.936 | 1.792 |
| 2 | 4.340 | 4.053 | 3.902 | 1.943 |
| 3 | 5.654 | 4.924 | 4.761 | 1.831 |
| 4 | 5.207 | 3.896 | 3.632 | 1.998 |
| AVG | 5.016 | 4.306 | 3.807 | 1.891 |

Shadow standard deviation index (SSDI) of the compared shadow compensation methods.

| Case | Illumination Correction | Color Transfer | Shadow Synthesis | Proposed Work |
|---|---|---|---|---|
| 1 | 23.326 | 22.013 | 16.877 | 16.509 |
| 2 | 19.872 | 20.362 | 19.843 | 17.182 |
| 3 | 18.439 | 19.394 | 18.160 | 13.731 |
| 4 | 18.310 | 19.212 | 15.485 | 14.253 |
| AVG | 19.896 | 20.245 | 17.591 | 15.419 |

Gradient similarity (GS) of the compared shadow compensation methods.

| Case | Illumination Correction | Color Transfer | Shadow Synthesis | Proposed Work |
|---|---|---|---|---|
| 1 | 0.491 | 0.574 | 0.718 | 0.769 |
| 2 | 0.503 | 0.519 | 0.582 | 0.673 |
| 3 | 0.464 | 0.483 | 0.603 | 0.721 |
| 4 | 0.510 | 0.521 | 0.596 | 0.740 |
| AVG | 0.492 | 0.524 | 0.625 | 0.726 |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Liu, X.; Yang, F.; Wei, H.; Gao, M. Shadow Removal from UAV Images Based on Color and Texture Equalization Compensation of Local Homogeneous Regions. Remote Sens. 2022, 14, 2616. https://doi.org/10.3390/rs14112616