PlanetScope, Sentinel-2, and Sentinel-1 Data Integration for Object-Based Land Cover Classification in Google Earth Engine

Abstract

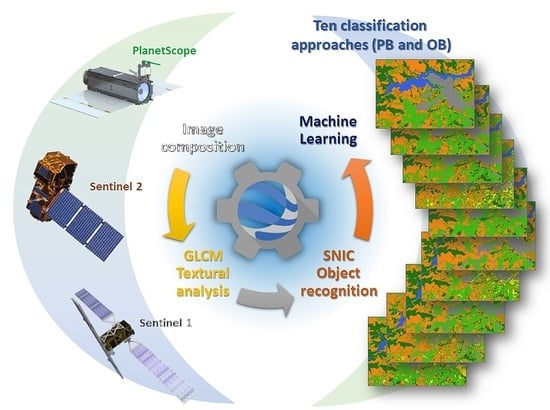

1. Introduction

2. Materials and Methods

2.1. Study Area

2.2. Training and Validation Sample Data

2.3. Methodology

2.3.1. Satellite Data: Sentinel-2, Sentinel-1, PlanetScope

2.3.2. Dataset Composition

2.3.3. Textural Analysis and Segmentation

2.3.4. LULC Classification

2.3.5. Accuracy Assessment

3. Results

4. Discussion

4.1. GEE and other LULC Analysis Platforms

4.2. The GEE Map Composition

4.3. The Object-Based Approaches in GEE

4.4. Data Integration for Improving Classification Accuracy

4.5. Possible Future Improvements

5. Conclusions

Supplementary Materials

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Foody, G.M. Remote sensing of tropical forest environments: Towards the monitoring of environmental resources for sustainable development. Int. J. Remote Sens. 2003, 24, 4035–4046. [Google Scholar] [CrossRef]

- Noss, R.F. Last stand: Protected areas and the defense of tropical diversity. Trends Ecol. Evol. 1997, 12, 450–451. [Google Scholar] [CrossRef]

- Shalaby, A.; Tateishi, R. Remote sensing and GIS for mapping and monitoring land cover and land-use changes in the Northwestern coastal zone of Egypt. Appl. Geogr. 2007, 27, 28–41. [Google Scholar] [CrossRef]

- Vizzari, M.; Antognelli, S.; Hilal, M.; Sigura, M.; Joly, D. Ecosystem Services Along the Urban—Rural—Natural Gradient: An Approach for a Wide Area Assessment and Mapping. In Proceedings of the Part III, Computational Science and Its Applications—ICCSA 2015: 15th International Conference, Banff, AB, Canada, 22–25 June 2015; Gervasi, O., Murgante, B., Misra, S., Gavrilova, L.M., Rocha, C.A.M.A., Torre, C., Taniar, D., Apduhan, O.B., Eds.; Springer International Publishing: Cham, Switzerland, 2015; pp. 745–757. ISBN 978-3-319-21470-2. [Google Scholar]

- Vizzari, M.; Sigura, M. Urban-rural gradient detection using multivariate spatial analysis and landscape metrics. J. Agric. Eng. 2013, 44, 453–459. [Google Scholar] [CrossRef]

- Grecchi, R.C.; Gwyn, Q.H.J.; Bénié, G.B.; Formaggio, A.R.; Fahl, F.C. Land use and land cover changes in the Brazilian Cerrado: A multidisciplinary approach to assess the impacts of agricultural expansion. Appl. Geogr. 2014, 55, 300–312. [Google Scholar] [CrossRef]

- Pelorosso, R.; Apollonio, C.; Rocchini, D.; Petroselli, A. Effects of Land Use-Land Cover Thematic Resolution on Environmental Evaluations. Remote Sens. 2021, 13, 1232. [Google Scholar] [CrossRef]

- Shelestov, A.; Lavreniuk, M.; Kussul, N.; Novikov, A.; Skakun, S. Exploring Google Earth Engine Platform for Big Data Processing: Classification of Multi-Temporal Satellite Imagery for Crop Mapping. Front. Earth Sci. 2017, 5, 1–10. [Google Scholar] [CrossRef] [Green Version]

- Gorelick, N.; Hancher, M.; Dixon, M.; Ilyushchenko, S.; Thau, D.; Moore, R. Google Earth Engine: Planetary-scale geospatial analysis for everyone. Remote Sens. Environ. 2017, 202, 18–27. [Google Scholar] [CrossRef]

- Sullivan, B. NICFI’s Satellite Imagery of the Global Tropics Now Available in Earth Engine for Analysis. Google Earth and Earth Engine, Medium. Available online: https://medium.com/google-earth/nicfis-satellite-imagery-of-the-global-tropics-now-available-in-earth-engine-for-analysis-1016df52a63d (accessed on 7 February 2022).

- NICFI Tropical Forest Basemaps Now Available in Google Earth Engine. Available online: https://www.planet.com/pulse/nicfi-tropical-forest-basemaps-now-available-in-google-earth-engine/ (accessed on 7 February 2022).

- Pfeifer, M.; Disney, M.; Quaife, T.; Marchant, R. Terrestrial ecosystems from space: A review of earth observation products for macroecology applications. Glob. Ecol. Biogeogr. 2011, 21, 603–624. [Google Scholar] [CrossRef] [Green Version]

- Mather, P.; Tso, B. Classification Methods for Remotely Sensed Data, 2nd ed.; CRC Press: Boca Raton, FL, USA, 2016; ISBN 9781420090741. [Google Scholar]

- Blaschke, T. Object based image analysis for remote sensing. ISPRS J. Photogramm. Remote Sens. 2010, 65, 2–16. [Google Scholar] [CrossRef] [Green Version]

- Torres-Sánchez, J.; López-Granados, F.; Peña, J.M. An automatic object-based method for optimal thresholding in UAV images: Application for vegetation detection in herbaceous crops. Comput. Electron. Agric. 2015, 114, 43–52. [Google Scholar] [CrossRef]

- Tassi, A.; Gigante, D.; Modica, G.; Di Martino, L.; Vizzari, M. Pixel vs. Object-Based Landsat 8 Data Classification in Google Earth Engine Using Random Forest: The Case Study of Maiella National Park. Remote Sens. 2021, 13, 2299. [Google Scholar] [CrossRef]

- Ren, X.; Malik, J. Learning a classification model for segmentation. In Proceedings of the IEEE International Conference on Computer Vision, Nice, France, 13–16 October 2003. [Google Scholar]

- Ghorbanian, A.; Kakooei, M.; Amani, M.; Mahdavi, S.; Mohammadzadeh, A.; Hasanlou, M. Improved land cover map of Iran using Sentinel imagery within Google Earth Engine and a novel automatic workflow for land cover classification using migrated training samples. ISPRS J. Photogramm. Remote Sens. 2020, 167, 276–288. [Google Scholar] [CrossRef]

- Aggarwal, N.; Srivastava, M.; Dutta, M. Comparative Analysis of Pixel-Based and Object-Based Classification of High Resolution Remote Sensing Images—A Review. Int. J. Eng. Trends Technol. 2016, 38, 5–11. [Google Scholar] [CrossRef]

- Messina, G.; Peña, J.M.; Vizzari, M.; Modica, G. A Comparison of UAV and Satellites Multispectral Imagery in Monitoring Onion Crop. An Application in the ‘Cipolla Rossa di Tropea’ (Italy). Remote Sens. 2020, 12, 3424. [Google Scholar] [CrossRef]

- Tassi, A.; Vizzari, M. Object-Oriented LULC Classification in Google Earth Engine Combining SNIC, GLCM, and Machine Learning Algorithms. Remote Sens. 2020, 12, 3776. [Google Scholar] [CrossRef]

- Hall-Beyer, M. Practical guidelines for choosing GLCM textures to use in landscape classification tasks over a range of moderate spatial scales. Int. J. Remote Sens. 2017, 38, 1312–1338. [Google Scholar] [CrossRef]

- Flanders, D.; Hall-Beyer, M.; Pereverzoff, J. Preliminary evaluation of eCognition object-based software for cut block delineation and feature extraction. Can. J. Remote Sens. 2003, 29, 441–452. [Google Scholar] [CrossRef]

- Singh, M.; Evans, D.; Friess, D.A.; Tan, B.S.; Nin, C.S. Mapping Above-Ground Biomass in a Tropical Forest in Cambodia Using Canopy Textures Derived from Google Earth. Remote Sens. 2015, 7, 5057–5076. [Google Scholar] [CrossRef] [Green Version]

- Li, M.; Zhang, R.; Luo, H.; Gu, S.; Qin, Z. Crop Mapping in the Sanjiang Plain Using an Improved Object-Oriented Method Based on Google Earth Engine and Combined Growth Period Attributes. Remote Sens. 2022, 14, 273. [Google Scholar] [CrossRef]

- Loukika, K.N.; Keesara, V.R.; Sridhar, V. Analysis of Land Use and Land Cover Using Machine Learning Algorithms on Google Earth Engine for Munneru River Basin, India. Sustainability 2021, 13, 13758. [Google Scholar] [CrossRef]

- Luo, C.; Qi, B.; Liu, H.; Guo, D.; Lu, L.; Fu, Q.; Shao, Y. Using Time Series Sentinel-1 Images for Object-Oriented Crop Classification in Google Earth Engine. Remote Sens. 2021, 13, 561. [Google Scholar] [CrossRef]

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32. [Google Scholar] [CrossRef] [Green Version]

- Gislason, P.O.; Benediktsson, J.A.; Sveinsson, J.R. Random forests for land cover classification. Pattern Recognit. Lett. 2006, 27, 294–300. [Google Scholar] [CrossRef]

- Mountrakis, G.; Im, J.; Ogole, C. Support vector machines in remote sensing: A review. ISPRS J. Photogramm. Remote Sens. 2011, 66, 247–259. [Google Scholar] [CrossRef]

- Amani, M.; Ghorbanian, A.; Ahmadi, S.A.; Kakooei, M.; Moghimi, A.; Mirmazloumi, S.M.; Moghaddam, S.H.A.; Mahdavi, S.; Ghahremanloo, M.; Parsian, S.; et al. Google Earth Engine Cloud Computing Platform for Remote Sensing Big Data Applications: A Comprehensive Review. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 5326–5350. [Google Scholar] [CrossRef]

- Praticò, S.; Solano, F.; Di Fazio, S.; Modica, G. Machine Learning Classification of Mediterranean Forest Habitats in Google Earth Engine Based on Seasonal Sentinel-2 Time-Series and Input Image Composition Optimisation. Remote Sens. 2021, 13, 586. [Google Scholar] [CrossRef]

- Nery, T.; Sadler, R.; Solis-Aulestia, M.; White, B.; Polyakov, M.; Chalak, M. Comparing supervised algorithms in Land Use and Land Cover classification of a Landsat time-series. In Proceedings of the International Geoscience and Remote Sensing Symposium (IGARSS), Beijing, China, 10–15 July 2016. [Google Scholar]

- Rodriguez-Galiano, V.F.; Ghimire, B.; Rogan, J.; Chica-Olmo, M.; Rigol-Sanchez, J.P. An assessment of the effectiveness of a random forest classifier for land-cover classification. ISPRS J. Photogramm. Remote Sens. 2012, 67, 93–104. [Google Scholar] [CrossRef]

- Costa, J. da S.; Liesenberg, V.; Schimalski, M.B.; de Sousa, R.V.; Biffi, L.J.; Gomes, A.R.; Neto, S.L.R.; Mitishita, E.; Bispo, P. da C. Benefits of Combining ALOS/PALSAR-2 and Sentinel-2A Data in the Classification of Land Cover Classes in the Santa Catarina Southern Plateau. Remote Sens. 2021, 13, 229. [Google Scholar] [CrossRef]

- Mizuochi, H.; Iijima, Y.; Nagano, H.; Kotani, A.; Hiyama, T. Dynamic Mapping of Subarctic Surface Water by Fusion of Microwave and Optical Satellite Data Using Conditional Adversarial Networks. Remote Sens. 2021, 13, 175. [Google Scholar] [CrossRef]

- De Luca, G.; Silva, J.M.N.; Di Fazio, S.; Modica, G. Integrated use of Sentinel-1 and Sentinel-2 data and open-source machine learning algorithms for land cover mapping in a Mediterranean region. Eur. J. Remote Sens. 2022, 55, 52–70. [Google Scholar] [CrossRef]

- Tavus, B.; Kocaman, S.; Gokceoglu, C. Flood damage assessment with Sentinel-1 and Sentinel-2 data after Sardoba dam break with GLCM features and Random Forest method. Sci. Total Environ. 2022, 816, 151585. [Google Scholar] [CrossRef]

- Tavares, P.A.; Beltrão, N.E.S.; Guimarães, U.S.; Teodoro, A.C. Integration of Sentinel-1 and Sentinel-2 for Classification and LULC Mapping in the Urban Area of Belém, Eastern Brazilian Amazon. Sensors 2019, 19, 1140. [Google Scholar] [CrossRef] [Green Version]

- Carrasco, L.; O’Neil, A.W.; Daniel Morton, R.; Rowland, C.S. Evaluating Combinations of Temporally Aggregated Sentinel-1, Sentinel-2 and Landsat 8 for Land Cover Mapping with Google Earth Engine. Remote Sens. 2019, 11, 288. [Google Scholar] [CrossRef] [Green Version]

- Javhar, A.; Chen, X.; Bao, A.; Jamshed, A.; Yunus, M.; Jovid, A.; Latipa, T. Comparison of Multi-Resolution Optical Landsat-8, Sentinel-2 and Radar Sentinel-1 Data for Automatic Lineament Extraction: A Case Study of Alichur Area, SE Pamir. Remote Sens. 2019, 11, 778. [Google Scholar] [CrossRef] [Green Version]

- Rao, P.; Zhou, W.; Bhattarai, N.; Srivastava, A.K.; Singh, B.; Poonia, S.; Lobell, D.B.; Jain, M. Using Sentinel-1, Sentinel-2, and Planet Imagery to Map Crop Type of Smallholder Farms. Remote Sens. 2021, 13, 1870. [Google Scholar] [CrossRef]

- Kpienbaareh, D.; Sun, X.; Wang, J.; Luginaah, I.; Kerr, R.B.; Lupafya, E.; Dakishoni, L. Crop Type and Land Cover Mapping in Northern Malawi Using the Integration of Sentinel-1, Sentinel-2, and PlanetScope Satellite Data. Remote Sens. 2021, 13, 700. [Google Scholar] [CrossRef]

- Brazil Data Cube—Plataforma para Análise e Visualização de Grandes Volumes de Dados Geoespaciais. Available online: http://brazildatacube.org/en/home-page-2/ (accessed on 25 March 2022).

- Carta della Natura—Italiano. Available online: https://www.isprambiente.gov.it/it/servizi/sistema-carta-della-natura (accessed on 25 March 2021).

- Hansen, M.C.; Roy, D.P.; Lindquist, E.; Adusei, B.; Justice, C.O.; Altstatt, A. A method for integrating MODIS and Landsat data for systematic monitoring of forest cover and change in the Congo Basin. Remote Sens. Environ. 2008, 112, 2495–2513. [Google Scholar] [CrossRef]

- Llano, X.C. SMByC-IDEAM. AcATaMa—QGIS Plugin for Accuracy Assessment of Thematic Maps. Available online: https://github.com/SMByC/AcATaMa (accessed on 27 May 2022).

- ESA. User Guides—Sentinel-2—Sentinel Online. Available online: https://sentinel.esa.int/web/sentinel/user-guides/sentinel-2-msi/overview (accessed on 12 October 2020).

- Santaga, F.S.; Agnelli, A.; Leccese, A.; Vizzari, M. Using Sentinel-2 for Simplifying Soil Sampling and Mapping: Two Case Studies in Umbria, Italy. Remote Sens. 2021, 13, 3379. [Google Scholar] [CrossRef]

- Planet GEE Delivery Overview. Available online: https://developers.planet.com/docs/integrations/gee/ (accessed on 8 February 2022).

- Use NICFI—Planet Lab Data—SEPAL Documentation. Available online: https://docs.sepal.io/en/latest/setup/nicfi.html (accessed on 8 February 2022).

- Phan, T.N.; Kuch, V.; Lehnert, L.W. Land Cover Classification using Google Earth Engine and Random Forest Classifier—The Role of Image Composition. Remote Sens. 2020, 12, 2411. [Google Scholar] [CrossRef]

- Lunetta, R.S.; Knight, J.F.; Ediriwickrema, J.; Lyon, J.G.; Worthy, L.D. Land-cover change detection using multi-temporal MODIS NDVI data. Remote Sens. Environ. 2006, 105, 142–154. [Google Scholar] [CrossRef]

- Woodcock, C.E.; Macomber, S.A.; Kumar, L. Vegetation mapping and monitoring. In Environmental Modelling with GIS and Remote Sensing; Taylor & Francis: London, UK, 2010. [Google Scholar]

- Singh, R.P.; Singh, N.; Singh, S.; Mukherjee, S. Normalized Difference Vegetation Index (NDVI) Based Classification to Assess the Change in Land Use/Land Cover (LULC) in Lower Assam, India. Int. J. Adv. Remote Sens. GIS 2016, 5, 1963–1970. [Google Scholar] [CrossRef]

- Moges, S.M.; Raun, W.R.; Mullen, R.W.; Freeman, K.W.; Johnson, G.V.; Solie, J.B. Evaluation of green, red, and near infrared bands for predicting winter wheat biomass, nitrogen uptake, and final grain yield. J. Plant Nutr. 2004, 27, 1431–1441. [Google Scholar] [CrossRef]

- Yang, X.; Zhao, S.; Qin, X.; Zhao, N.; Liang, L. Mapping of urban surface water bodies from Sentinel-2 MSI imagery at 10 m resolution via NDWI-based image sharpening. Remote Sens. 2017, 9, 596. [Google Scholar] [CrossRef] [Green Version]

- Diek, S.; Fornallaz, F.; Schaepman, M.E.; de Jong, R. Barest Pixel Composite for agricultural areas using Landsat time series. Remote Sens. 2017, 9, 1245. [Google Scholar] [CrossRef] [Green Version]

- Chen, W.; Liu, L.; Zhang, C.; Wang, J.; Wang, J.; Pan, Y. Monitoring the seasonal bare soil areas in Beijing using multi-temporal TM images. In Proceedings of the International Geoscience and Remote Sensing Symposium (IGARSS), Anchorage, AK, USA, 20–24 September 2004. [Google Scholar]

- Rouse, J.W., Jr.; Haas, R.H.; Schell, J.A.; Deering, D.W. Monitoring vegetation systems in the Great Plains with ERTS. In Proceedings of the 3rd ERTS-1 Symposium (NASA SP-351), Washington, DC, USA, 10–14 December 1973; pp. 309–317. [Google Scholar]

- Gitelson, A.A.; Kaufman, Y.J.; Merzlyak, M.N. Use of a green channel in remote sensing of global vegetation from EOS-MODIS. Remote Sens. Environ. 1996, 58, 289–298. [Google Scholar] [CrossRef]

- Hardisky, M.A.; Klemas, V.; Smart, R.M. The influence of soil salinity, growth form, and leaf moisture on the spectral radiance of Spartina alterniflora canopies. Photogramm. Eng. Remote Sens. 1983, 49, 77–83. [Google Scholar]

- Rikimaru, A.; Roy, P.S.; Miyatake, S. Tropical forest cover density mapping. Trop. Ecol. 2002, 43, 39–47. [Google Scholar]

- Keys, R.G. Cubic Convolution Interpolation for Digital Image Processing. IEEE Trans. Acoust. 1981, 29, 1153–1160. [Google Scholar] [CrossRef] [Green Version]

- Achanta, R.; Süsstrunk, S. Superpixels and polygons using simple non-iterative clustering. In Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2017, Honolulu, HI, USA, 21–26 July 2017. [Google Scholar]

- Chen, D.M.; Stow, D. The effect of training strategies on supervised classification at different spatial resolutions. Photogramm. Eng. Remote Sens. 2002, 68, 1155–1161. [Google Scholar]

- Mueller, J.P.; Massaron, L. Training, Validating, and Testing in Machine Learning. Available online: https://www.dummies.com/programming/big-data/data-science/training-validating-testing-machine-learning/ (accessed on 7 June 2021).

- Adelabu, S.; Mutanga, O.; Adam, E. Testing the reliability and stability of the internal accuracy assessment of random forest for classifying tree defoliation levels using different validation methods. Geocarto Int. 2015, 30, 810–821. [Google Scholar] [CrossRef]

- Chen, W.; Xie, X.; Wang, J.; Pradhan, B.; Hong, H.; Bui, D.T.; Duan, Z.; Ma, J. A comparative study of logistic model tree, random forest, and classification and regression tree models for spatial prediction of landslide susceptibility. Catena 2017, 151, 147–160. [Google Scholar] [CrossRef] [Green Version]

- Congalton, R.G. A review of assessing the accuracy of classifications of remotely sensed data. Remote Sens. Environ. 1991, 37, 35–46. [Google Scholar] [CrossRef]

- Liu, C.; Frazier, P.; Kumar, L. Comparative assessment of the measures of thematic classification accuracy. Remote Sens. Environ. 2007, 107, 606–616. [Google Scholar] [CrossRef]

- Al-Saady, Y.; Merkel, B.; Al-Tawash, B.; Al-Suhail, Q. Land use and land cover (LULC) mapping and change detection in the Little Zab River Basin (LZRB), Kurdistan Region, NE Iraq and NW Iran. FOG—Freib. Online Geosci. 2015, 43, 1–32. [Google Scholar]

- Sokolova, M.; Japkowicz, N.; Szpakowicz, S. Beyond accuracy, F-score and ROC: A family of discriminant measures for performance evaluation. In Proceedings of the AAAI Workshop; Technical Report; AAAI Press: Palo Alto, CA, USA, 2006. [Google Scholar]

- Taubenböck, H.; Esch, T.; Felbier, A.; Roth, A.; Dech, S. Pattern-based accuracy assessment of an urban footprint classification using TerraSAR-X data. IEEE Geosci. Remote Sens. Lett. 2011, 8, 278–282. [Google Scholar] [CrossRef]

- Stehman, S.V. Statistical rigor and practical utility in thematic map accuracy assessment. Photogramm. Eng. Remote Sens. 2001, 67, 727–734. [Google Scholar]

- Weaver, J.; Moore, B.; Reith, A.; McKee, J.; Lunga, D. A comparison of machine learning techniques to extract human settlements from high resolution imagery. In Proceedings of the International Geoscience and Remote Sensing Symposium (IGARSS), Valencia, Spain, 22–27 July 2018. [Google Scholar]

- Hurskainen, P.; Adhikari, H.; Siljander, M.; Pellikka, P.K.E.; Hemp, A. Auxiliary datasets improve accuracy of object-based land use/land cover classification in heterogeneous savanna landscapes. Remote Sens. Environ. 2019, 233, 111354. [Google Scholar] [CrossRef]

- Foody, G.M. Thematic map comparison: Evaluating the statistical significance of differences in classification accuracy. Photogramm. Eng. Remote Sens. 2004, 70, 627–633. [Google Scholar] [CrossRef]

- Lunetta, R.S.; Congalton, R.G.; Fenstermaker, L.K.; Jensen, J.R.; McGwire, K.C.; Tinney, L.R. Remote sensing and geographic information system data integration: Error sources and research issues. Photogramm. Eng. Remote Sens. 1991, 57, 677–687. [Google Scholar]

- Foody, G.M.; Mathur, A. A relative evaluation of multiclass image classification by support vector machines. IEEE Trans. Geosci. Remote Sens. 2004, 42, 1335–1343. [Google Scholar] [CrossRef] [Green Version]

- Foody, G.M. Explaining the unsuitability of the kappa coefficient in the assessment and comparison of the accuracy of thematic maps obtained by image classification. Remote Sens. Environ. 2020, 239, 111630. [Google Scholar] [CrossRef]

- Olofsson, P.; Foody, G.M.; Herold, M.; Stehman, S.V.; Woodcock, C.E.; Wulder, M.A. Good practices for estimating area and assessing accuracy of land change. Remote Sens. Environ. 2014, 148, 42–57. [Google Scholar] [CrossRef]

- Mapbiomas Brasil. Available online: https://mapbiomas.org/en (accessed on 29 April 2022).

- Fernando, L.; Assis, F.G.; Ferreira, K.R.; Vinhas, L.; Maurano, L.; Almeida, C.; Carvalho, A.; Rodrigues, J.; Maciel, A.; Camargo, C. TerraBrasilis: A Spatial Data Analytics Infrastructure for Large-Scale Thematic Mapping. ISPRS Int. J. Geo-Inf. 2019, 8, 513. [Google Scholar] [CrossRef] [Green Version]

- PRODES—Coordenação-Geral de Observação da Terra. Available online: http://www.obt.inpe.br/OBT/assuntos/programas/amazonia/prodes (accessed on 29 April 2022).

- European Space Agency WorldCover. Available online: https://esa-worldcover.org/en (accessed on 27 May 2022).

- Karra, K.; Kontgis, C.; Statman-Weil, Z.; Mazzariello, J.C.; Mathis, M.; Brumby, S.P. Global land use/land cover with Sentinel 2 and deep learning. In Proceedings of the International Geoscience and Remote Sensing Symposium (IGARSS), Brussels, Belgium, 11–16 July 2021; Volume 2021. [Google Scholar]

- Maas, M.D. 5 Things to Consider about Google Earth Engine. Available online: https://www.matecdev.com/posts/disadvantages-earth-engine.html (accessed on 29 April 2022).

- Chang, N.B.; Bai, K. Multisensor Data Fusion and Machine Learning for Environmental Remote Sensing; CRC Press: Boca Raton, FL, USA, 2018. [Google Scholar]

- Thanh Noi, P.; Kappas, M. Comparison of Random Forest, k-Nearest Neighbor, and Support Vector Machine Classifiers for Land Cover Classification Using Sentinel-2 Imagery. Sensors 2018, 18, 18. [Google Scholar] [CrossRef] [Green Version]

- Yang, Y.; Yang, D.; Wang, X.; Zhang, Z.; Nawaz, Z. Testing accuracy of land cover classification algorithms in the Qilian Mountains based on GEE cloud platform. Remote Sens. 2021, 13, 5064. [Google Scholar] [CrossRef]

- Caballero, G.R.; Platzeck, G.; Pezzola, A.; Casella, A.; Winschel, C.; Silva, S.S.; Ludueña, E.; Pasqualotto, N.; Delegido, J. Assessment of Multi-Date Sentinel-1 Polarizations and GLCM Texture Features Capacity for Onion and Sunflower Classification in an Irrigated Valley: An Object Level Approach. Agronomy 2020, 10, 845. [Google Scholar] [CrossRef]

- Stromann, O.; Nascetti, A.; Yousif, O.; Ban, Y. Dimensionality Reduction and Feature Selection for Object-Based Land Cover Classification based on Sentinel-1 and Sentinel-2 Time Series Using Google Earth Engine. Remote Sens. 2020, 12, 76. [Google Scholar] [CrossRef] [Green Version]

- Mathieu, R.; Aryal, J.; Chong, A.K. Object-Based Classification of Ikonos Imagery for Mapping Large-Scale Vegetation Communities in Urban Areas. Sensors 2007, 7, 2860–2880. [Google Scholar] [CrossRef] [Green Version]

- Cai, L.; Shi, W.; Miao, Z.; Hao, M. Accuracy Assessment Measures for Object Extraction from Remote Sensing Images. Remote Sens. 2018, 10, 303. [Google Scholar] [CrossRef] [Green Version]

- Radoux, J.; Bogaert, P.; Kerle, N.; Gerke, M.; Lefèvre, S.; Gloaguen, R.; Thenkabail, P.S. Good Practices for Object-Based Accuracy Assessment. Remote Sens. 2017, 9, 646. [Google Scholar] [CrossRef] [Green Version]

| Land Cover Classes | Number of Sample Points |
|---|---|
| Woodlands | 341 |
| Shrublands | 457 |
| Grasslands | 424 |
| Croplands | 422 |
| Built-up | 225 |
| Bare soil | 286 |
| Water bodies | 245 |
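The per-class sample counts above can be divided into training and validation subsets class by class. The following is a minimal sketch of such a stratified split; the 70% training fraction, the random seed, and the function name `stratified_split` are illustrative assumptions and are not taken from the article.

```python
import random

# Sample points per land cover class, copied from the table above.
SAMPLE_COUNTS = {
    "Woodlands": 341,
    "Shrublands": 457,
    "Grasslands": 424,
    "Croplands": 422,
    "Built-up": 225,
    "Bare soil": 286,
    "Water bodies": 245,
}


def stratified_split(counts, train_fraction=0.7, seed=0):
    """Split point indices into training and validation sets per class.

    train_fraction and seed are assumed values chosen for illustration.
    """
    rng = random.Random(seed)
    split = {}
    for name, n in counts.items():
        ids = list(range(n))
        rng.shuffle(ids)
        n_train = int(n * train_fraction)  # floor, so validation is never empty
        split[name] = {"train": ids[:n_train], "validation": ids[n_train:]}
    return split


split = stratified_split(SAMPLE_COUNTS)
total = sum(SAMPLE_COUNTS.values())
n_train = sum(len(parts["train"]) for parts in split.values())
print("total points:", total)
print("training points:", n_train, "validation points:", total - n_train)
```

Splitting within each class keeps the class proportions of the table in both subsets, which matters here because Built-up and Water bodies have roughly half as many points as Shrublands.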

| Approach Type | Approach Code | Multispectral Information | Textural Information | Object Recognition |
|---|---|---|---|---|
| PB | PB_S2 | S2 | - | - |
| PB | PB_S2S1 | S2, S1 | - | - |
| PB | PB_PL | PL | - | - |
| PB | PB_S2S1PL | S2, S1, PL | - | - |
| OB | OB_S2 | S2 | S2 | S2 |
| OB | OB_S2S1 | S2, S1 | S2 | S2 |
| OB | OB_S2S1T | S2, S1 | S2, S1 | S2 |
| OB | OB_PL | PL | PL | PL |
| OB | OB_S2S1PL | S2, S1 | PL | PL |
| OB | OB_PLS2S1 | PL, S2, S1 | PL | PL |

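| Index | Formula | Author |
|---|---|---|
| NDVI | (NIR - Red) / (NIR + Red) | Rouse et al., 1973 [60] |
| GNDVI | (NIR - Green) / (NIR + Green) | Gitelson et al., 1996 [61] |
| NDMI | (NIR - SWIR1) / (NIR + SWIR1) | Hardisky et al., 1983 [62] |
| BSI | ((SWIR1 + Red) - (NIR + Blue)) / ((SWIR1 + Red) + (NIR + Blue)) | Rikimaru et al., 2002 [63] |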
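The four indices in the table above are commonly defined as normalized band differences: NDVI from near-infrared and red, GNDVI from near-infrared and green, NDMI from near-infrared and the first shortwave-infrared band, and BSI from the sums SWIR1 + Red and NIR + Blue. The sketch below computes them for a single pixel. The function names and the reflectance values are hypothetical and chosen only for illustration; for Sentinel-2 the corresponding bands would be B2 (blue), B3 (green), B4 (red), B8 (NIR), and B11 (SWIR1).

```python
def normalized_difference(a, b):
    """Return (a - b) / (a + b), or 0.0 when the denominator is zero."""
    denom = a + b
    return (a - b) / denom if denom != 0 else 0.0


def ndvi(nir, red):
    """Normalized Difference Vegetation Index."""
    return normalized_difference(nir, red)


def gndvi(nir, green):
    """Green Normalized Difference Vegetation Index."""
    return normalized_difference(nir, green)


def ndmi(nir, swir1):
    """Normalized Difference Moisture Index."""
    return normalized_difference(nir, swir1)


def bsi(blue, red, nir, swir1):
    """Bare Soil Index: ((SWIR1 + Red) - (NIR + Blue)) / ((SWIR1 + Red) + (NIR + Blue))."""
    return normalized_difference(swir1 + red, nir + blue)


# Hypothetical surface reflectances for one vegetated pixel.
pixel = {"blue": 0.04, "green": 0.07, "red": 0.05, "nir": 0.45, "swir1": 0.20}

print("NDVI ", round(ndvi(pixel["nir"], pixel["red"]), 3))
print("GNDVI", round(gndvi(pixel["nir"], pixel["green"]), 3))
print("NDMI ", round(ndmi(pixel["nir"], pixel["swir1"]), 3))
print("BSI  ", round(bsi(pixel["blue"], pixel["red"], pixel["nir"], pixel["swir1"]), 3))
```

For a densely vegetated pixel such as this one, NDVI and GNDVI are strongly positive while BSI is negative; bare soil tends to show the opposite pattern, which is why the indices complement each other as classification features.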

| CODE | Textural Index Description |
|---|---|
| ASM | Angular Second Moment: measures the number of repeated pairs in the image |
| CONTR | Contrast: measures the local contrast of an image |
| CORR | Correlation: measures the correlation between pairs of pixels in the image |
| VAR | Variance: measures how spread out the distribution of gray-levels is in the image |
| IDM | Inverse Difference Moment: measures the homogeneity of the image |
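The five textural indices above are all derived from a gray-level co-occurrence matrix (GLCM), which records how often pairs of gray levels occur at a given pixel offset. The following pure-Python sketch builds a symmetric, normalized GLCM for a single offset and evaluates the standard Haralick-style formulas. It is an illustration of the definitions only and not the Earth Engine implementation; the function names, the one-pixel horizontal offset, and the tiny example image are assumptions.

```python
def glcm(image, levels, dx=1, dy=0):
    """Symmetric, normalized co-occurrence matrix for one pixel offset.

    image is a list of rows of integer gray levels in [0, levels).
    Assumes the image contains at least one valid pixel pair.
    """
    counts = [[0.0] * levels for _ in range(levels)]
    rows, cols = len(image), len(image[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                i, j = image[r][c], image[r2][c2]
                counts[i][j] += 1.0
                counts[j][i] += 1.0  # count the pair in both directions
    total = sum(sum(row) for row in counts)
    return [[v / total for v in row] for row in counts]


def texture_features(p):
    """Compute ASM, CONTR, CORR, VAR, and IDM from a normalized symmetric GLCM."""
    n = len(p)
    cells = [(i, j, p[i][j]) for i in range(n) for j in range(n)]
    mean = sum(i * v for i, _, v in cells)  # row mean equals column mean
    var = sum((i - mean) ** 2 * v for i, _, v in cells)
    cov = sum((i - mean) * (j - mean) * v for i, j, v in cells)
    return {
        "ASM": sum(v * v for _, _, v in cells),
        "CONTR": sum((i - j) ** 2 * v for i, j, v in cells),
        # Correlation is undefined for a constant window; 1.0 is used here.
        "CORR": cov / var if var > 0 else 1.0,
        "VAR": var,
        "IDM": sum(v / (1 + (i - j) ** 2) for i, j, v in cells),
    }


# Hypothetical 4 x 4 window quantized to 4 gray levels.
window = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 2, 2, 2],
    [2, 2, 3, 3],
]
for code, value in texture_features(glcm(window, levels=4)).items():
    print(code, round(value, 3))
```

A perfectly uniform window gives ASM = 1 and CONTR = 0, while a checkerboard gives high contrast and a correlation of -1, which matches the qualitative descriptions in the table.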

| CODE | Number of Selected Features | Selected Spectral Bands and Indices | Textural Indices | Output Resolution (m) | OA |
|---|---|---|---|---|---|
| PB_S2 | 9 | B2, B4, B8, B11, B12, NDVI, GNDVI, NDMI, BSI | - | 10 | 0.744 |
| PB_S2S1 | 11 | B2, B4, B8, B11, B12, NDVI, GNDVI, NDMI, BSI, VV, VH | - | 10 | 0.812 |
| PB_PL | 6 | b, g, r, n, NDVI, GNDVI | - | 4.77 | 0.667 |
| PB_S2S1PL | 12 | b, g, r, n, B11, B12, NDVI, GNDVI, NDMI, BSI, VV, VH | - | 4.77 | 0.816 |
| OB_S2 | 14 | B2, B4, B8, B11, B12, NDVI, GNDVI, NDMI, BSI | ASM, CONTR, CORR, VAR, IDM | 10 | 0.816 |
| OB_S2S1 | 16 | B2, B4, B8, B11, B12, NDVI, GNDVI, NDMI, BSI, VV, VH | ASM, CONTR, CORR, VAR, IDM | 10 | 0.835 |
| OB_S2S1T | 19 | B2, B4, B8, B11, B12, NDVI, GNDVI, NDMI, BSI, VV, VH | ASM, CONTR, CORR, VAR, IDM, ASM, CONTR, IDM | 10 | 0.863 |
| OB_PL | 11 | b, g, r, n, ndvi, gndvi | asm, contr, corr, var, idm | 4.77 | 0.822 |
| OB_S2S1PL | 16 | B2, B4, B8, B11, B12, NDVI, GNDVI, NDMI, BSI, VV, VH | asm, contr, corr, var, idm | 4.77 | 0.883 |
| OB_PLS2S1 | 16 | b, r, n, B11, B12, NDVI, GNDVI, NDMI, BSI, VV, VH | asm, contr, corr, var, idm | 4.77 | 0.906 |
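The OA column above reports overall accuracy, which is the share of validation samples whose predicted class matches the reference class. The sketch below shows how OA, together with per-class producer's accuracy, user's accuracy, and F-score, can be derived from a confusion matrix. The 3 x 3 matrix is hypothetical and does not come from the article, and the row and column convention (rows as reference, columns as predicted) is an assumption.

```python
def overall_accuracy(matrix):
    """Fraction of samples on the diagonal of a square confusion matrix."""
    correct = sum(matrix[k][k] for k in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total


def per_class_scores(matrix):
    """Producer's accuracy, user's accuracy, and F-score for each class.

    Assumed convention: rows are reference classes, columns are predictions.
    """
    n = len(matrix)
    scores = []
    for k in range(n):
        reference_total = sum(matrix[k])
        predicted_total = sum(matrix[i][k] for i in range(n))
        pa = matrix[k][k] / reference_total if reference_total else 0.0
        ua = matrix[k][k] / predicted_total if predicted_total else 0.0
        f = 2 * pa * ua / (pa + ua) if (pa + ua) else 0.0
        scores.append({"PA": pa, "UA": ua, "F": f})
    return scores


# Hypothetical confusion matrix for three classes (not data from the article).
confusion = [
    [45, 3, 2],
    [4, 40, 6],
    [1, 7, 42],
]

print("OA:", round(overall_accuracy(confusion), 3))
for k, s in enumerate(per_class_scores(confusion)):
    print("class", k, {name: round(value, 3) for name, value in s.items()})
```

Per-class scores are worth reading alongside OA because a single overall figure can hide weak classes when the number of samples per class is uneven.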

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Vizzari, M. PlanetScope, Sentinel-2, and Sentinel-1 Data Integration for Object-Based Land Cover Classification in Google Earth Engine. Remote Sens. 2022, 14, 2628. https://doi.org/10.3390/rs14112628
