SlideSim: 3D Landslide Displacement Monitoring through a Physics-Based Simulation Approach to Self-Supervised Learning

Abstract

1. Introduction

2. Materials and Methods

- Generation and simulation of synthetic training data through deterministic modeling of the landslide surface using a conservation of mass (COM) approach for many different input scenarios;

- End-to-end training of an optical flow predictor network using the RAFT architecture, with transfer learning followed by training on the simulated dataset;

- Inference and calculation of the 3D landslide displacement vector map by first feeding sequential DEM rasters through the trained model to generate 2D horizontal displacement vectors followed by deterministic computation of the vertical component of displacement.
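
The third step above can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes the predicted flow is given in pixels as `u_px` (column direction) and `v_px` (row direction) from the first DEM to the second, and it uses a hand-written bilinear sampler. The function names, the sign convention, and the interpolation choice are all assumptions made for this example.

```python
import numpy as np

def bilinear_sample(grid, rows, cols):
    """Sample `grid` at fractional (rows, cols), clamping to the raster edges."""
    h, w = grid.shape
    rows = np.clip(rows, 0, h - 1)
    cols = np.clip(cols, 0, w - 1)
    r0 = np.floor(rows).astype(int)
    c0 = np.floor(cols).astype(int)
    r1 = np.minimum(r0 + 1, h - 1)
    c1 = np.minimum(c0 + 1, w - 1)
    fr = rows - r0
    fc = cols - c0
    top = grid[r0, c0] * (1 - fc) + grid[r0, c1] * fc
    bottom = grid[r1, c0] * (1 - fc) + grid[r1, c1] * fc
    return top * (1 - fr) + bottom * fr

def displacement_3d(dem_t0, dem_t1, u_px, v_px, cell_size):
    """Return (d_col_m, d_row_m, dz_m) for every cell of the first DEM.

    Assumed convention: a surface point at (row, col) in dem_t0 is found at
    (row + v_px, col + u_px) in dem_t1.
    """
    h, w = dem_t0.shape
    rows, cols = np.mgrid[0:h, 0:w].astype(float)
    # Elevation of the same surface point after it has moved horizontally.
    z_after = bilinear_sample(dem_t1, rows + v_px, cols + u_px)
    dz = z_after - dem_t0
    return u_px * cell_size, v_px * cell_size, dz
```

Sampling the second DEM at the displaced location, rather than at the same cell, is what separates this vertical component from a plain DEM difference.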

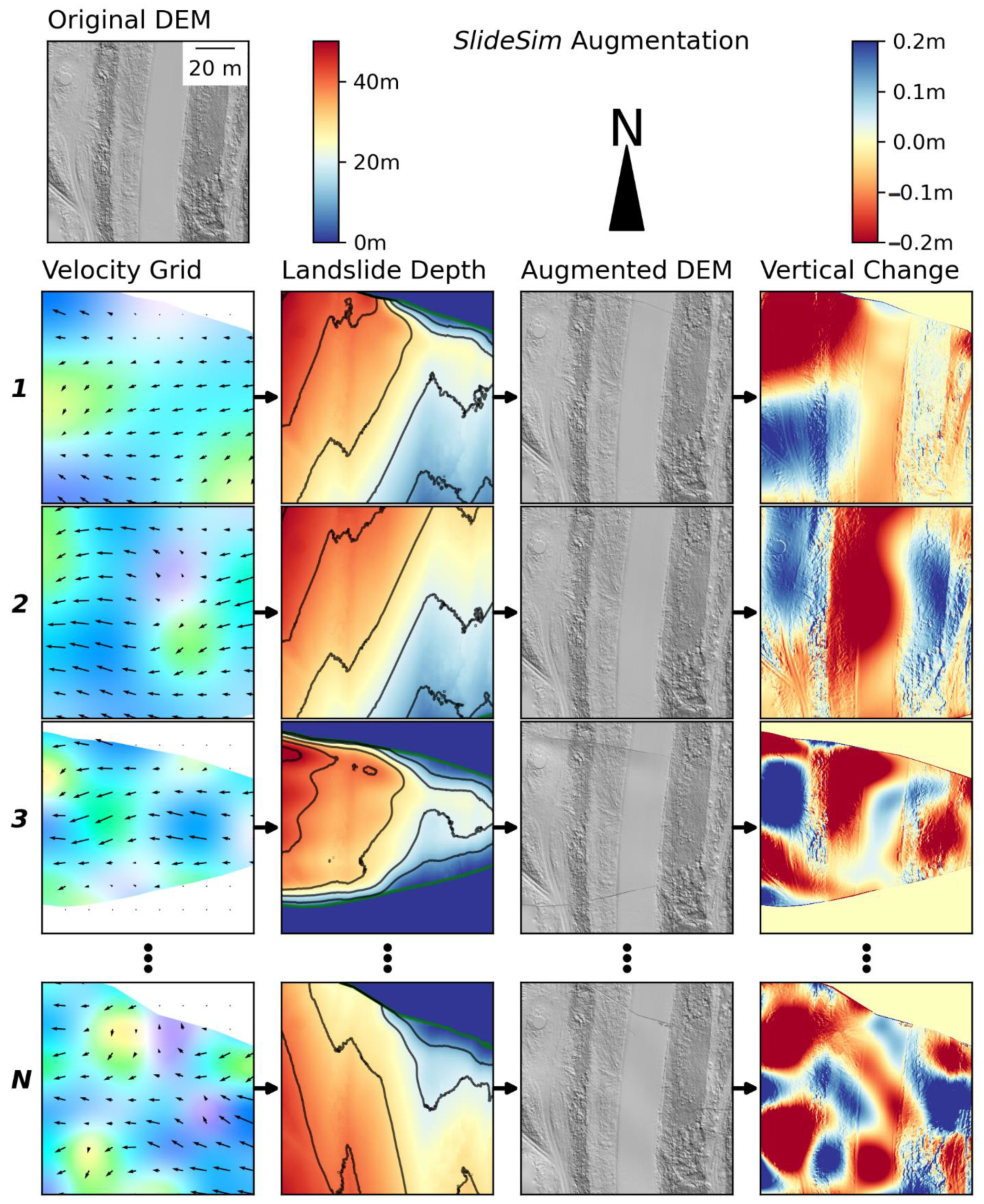

2.1. Simulation and Generation of Synthetic Training Data

2.1.1. Augmenting the Landslide Boundary

2.1.2. Augmenting the Landslide Slip Surface

2.1.3. Generating Landslide Velocity Vectors

2.2. Training of an End-to-End Optical Flow Predictor Network

2.3. Inference and Calculation of the 3D Landslide Displacement Vector Map

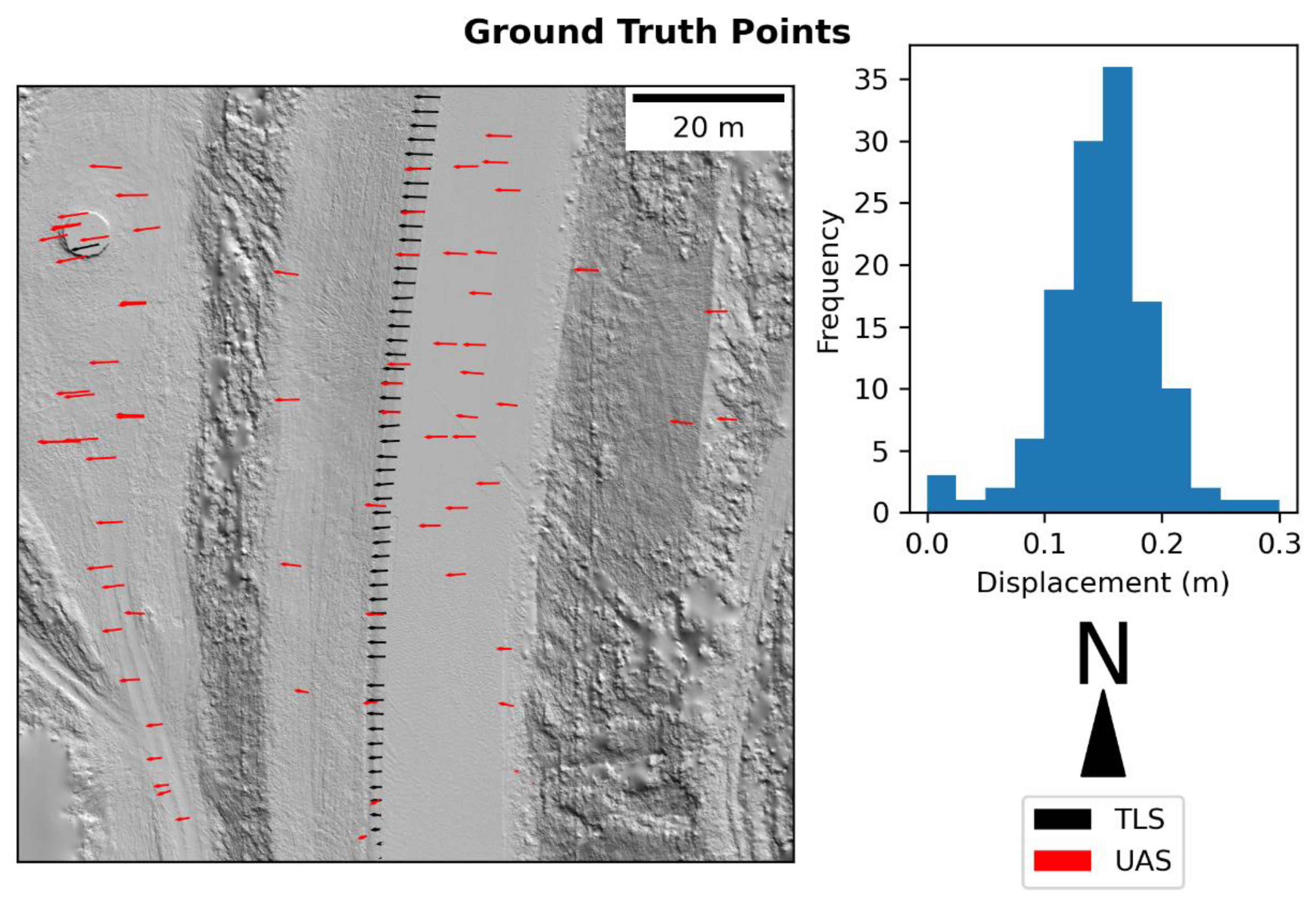

2.4. Test Dataset

- The highest point density of the TLS scans, which enables DEMs of several cell sizes to be created in order to properly assess the impact of cell size on the quality of the output displacement vectors and enables the generation of high-quality ground truth points;

- A wide range of displacement magnitudes, as it extends over a lateral scarp of one of the nested failures within the active portion of the landslide complex; and

- A wide variety of land cover types, ranging from west to east through grass, sparse vegetation, paved road, and a patch of dense vegetation in the southeast (Figure 2).

2.5. Assessment of Accuracy

2.6. Experiments

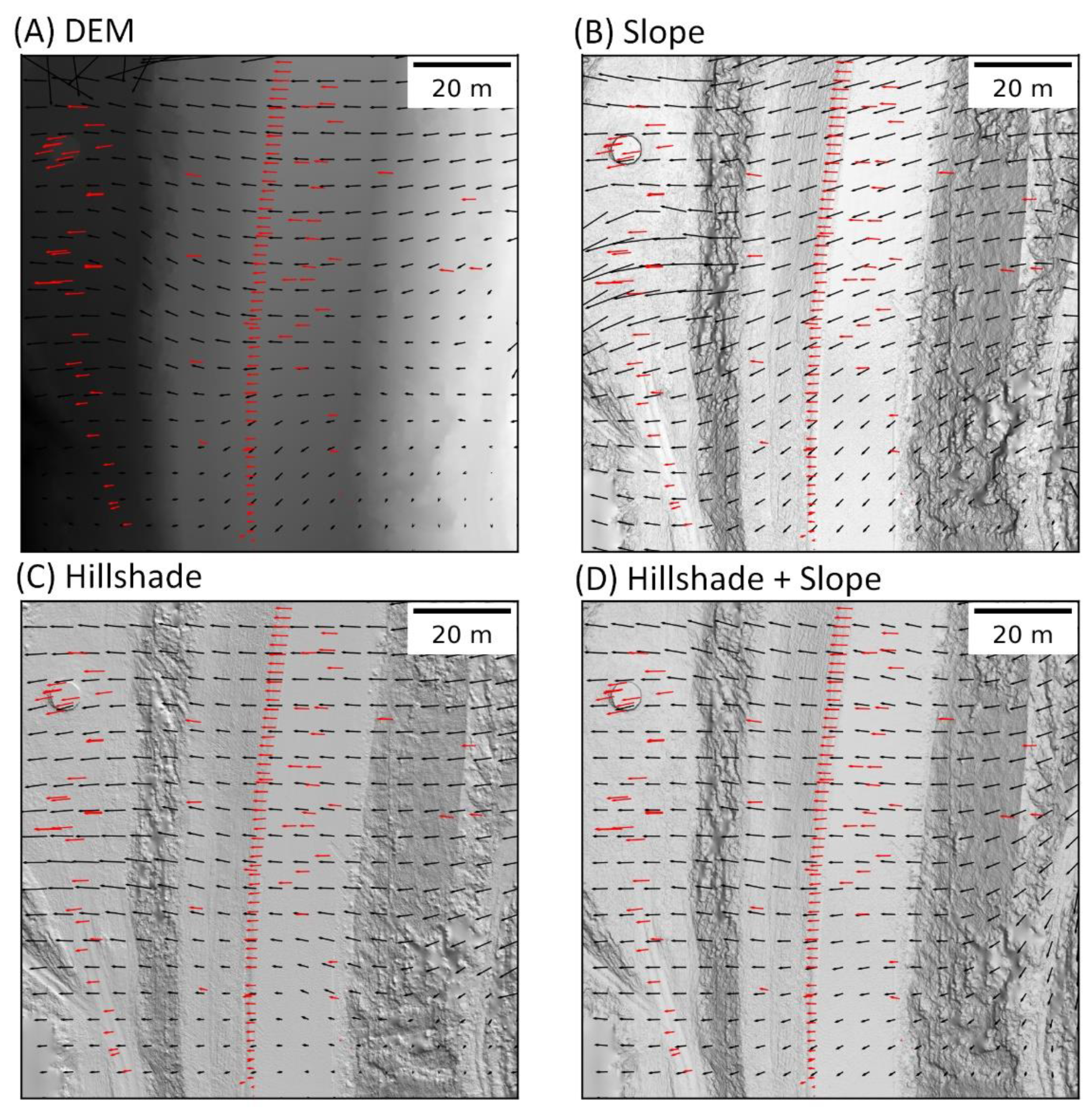

2.6.1. Experiment #1: Representation of DEM

2.6.2. Experiment #2: Cell Size of Input Model

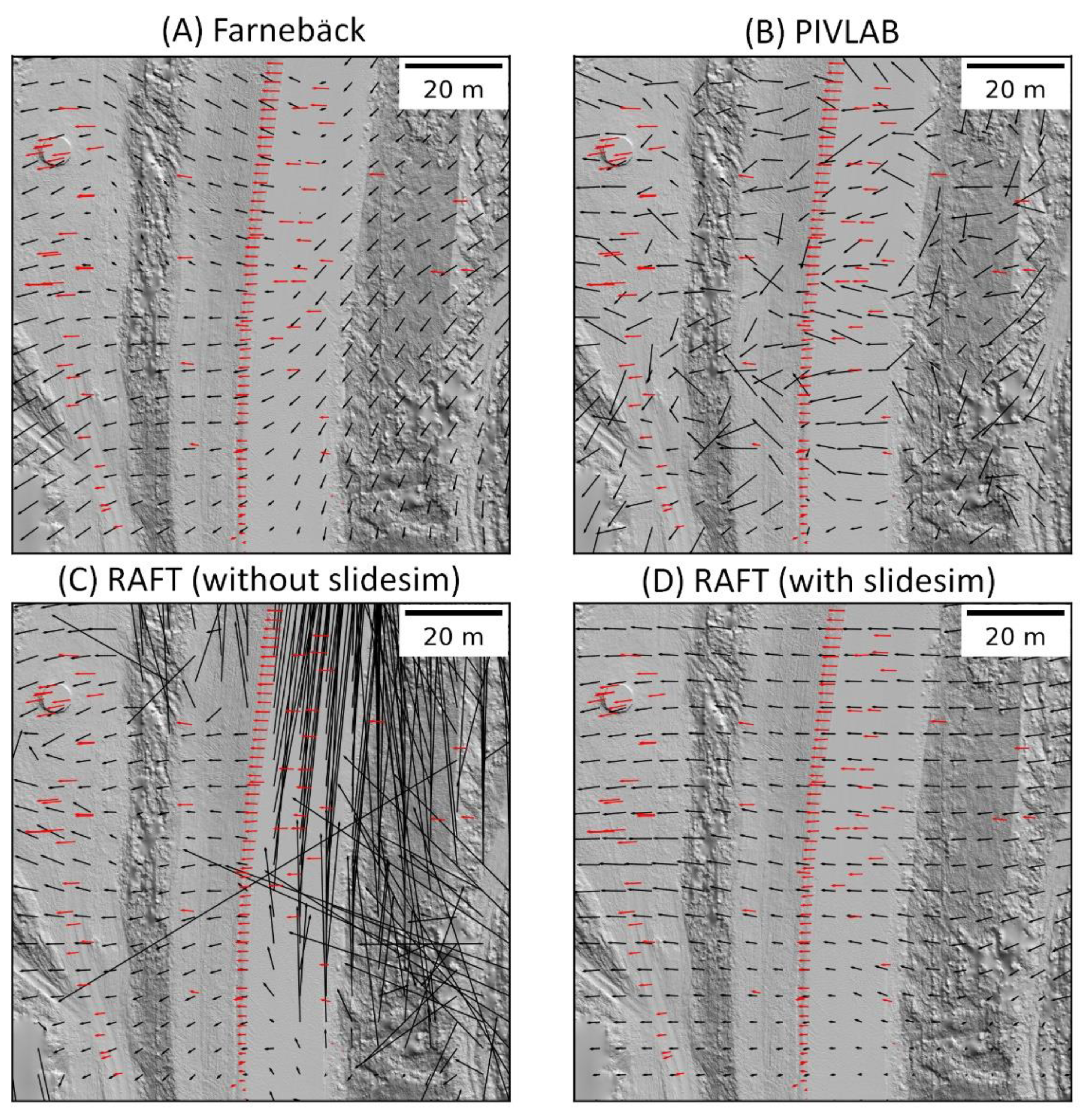

2.6.3. Experiment #3: Comparison to Other Methods

- The OpenCV implementation of the Farnebäck optical flow algorithm [53], which is widely used to compute dense optical flow.

- The RAFT deep learning optical flow approach [10] in its typical implementation, without additional training using SlideSim. This provides a comparison to one of the most widely used deep-learning-based optical flow approaches, trained solely on RGB images without additional landslide context.

2.6.4. Experiment #4: Vertical Component

2.6.5. Experiment #5: Data Source Flexibility

3. Results

3.1. Experiment #1: Representation of DEM

3.2. Experiment #2: Cell Size of Input Model

3.3. Experiment #3: Comparison to Other Methods

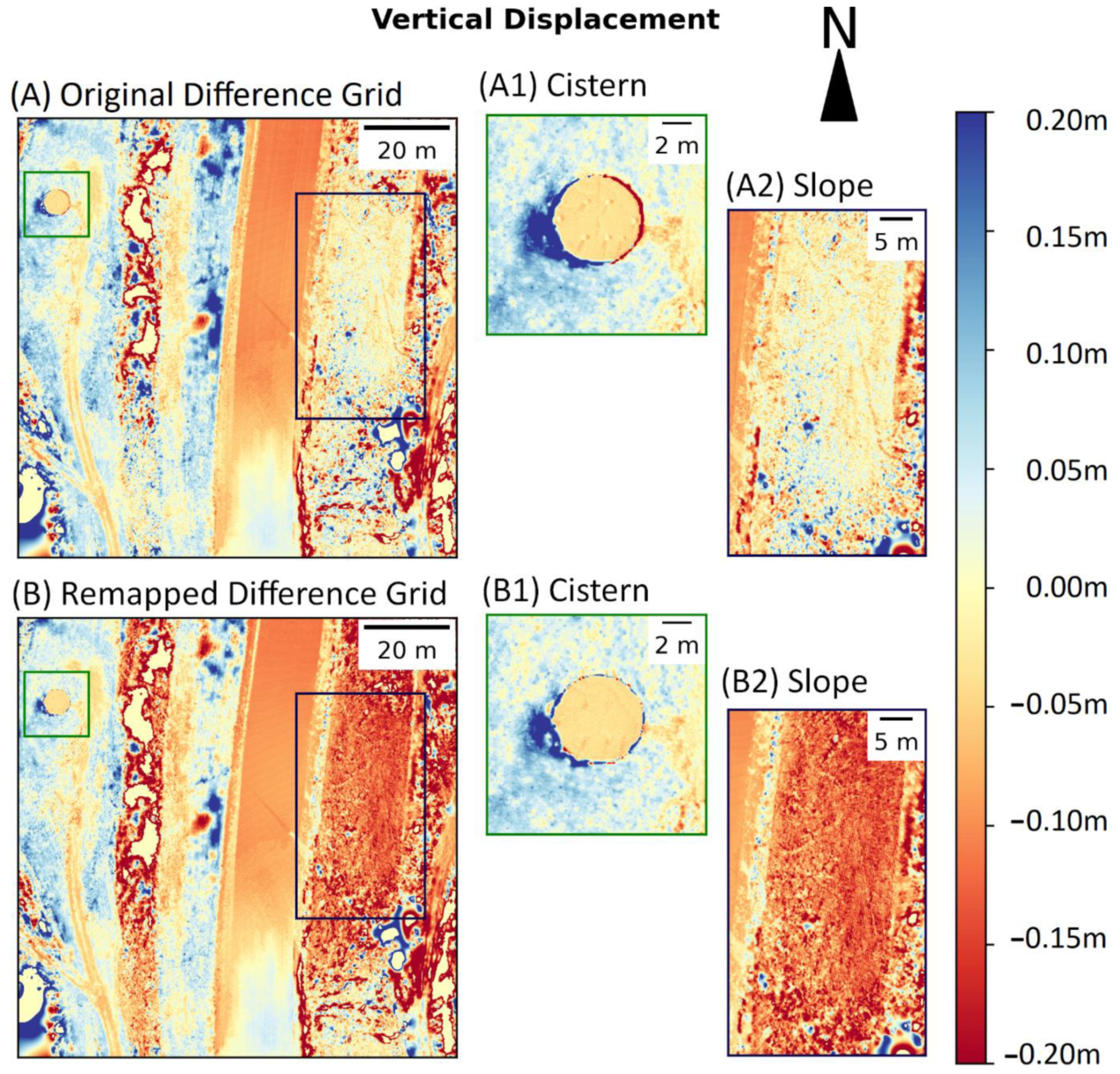

3.4. Experiment #4: Vertical Component

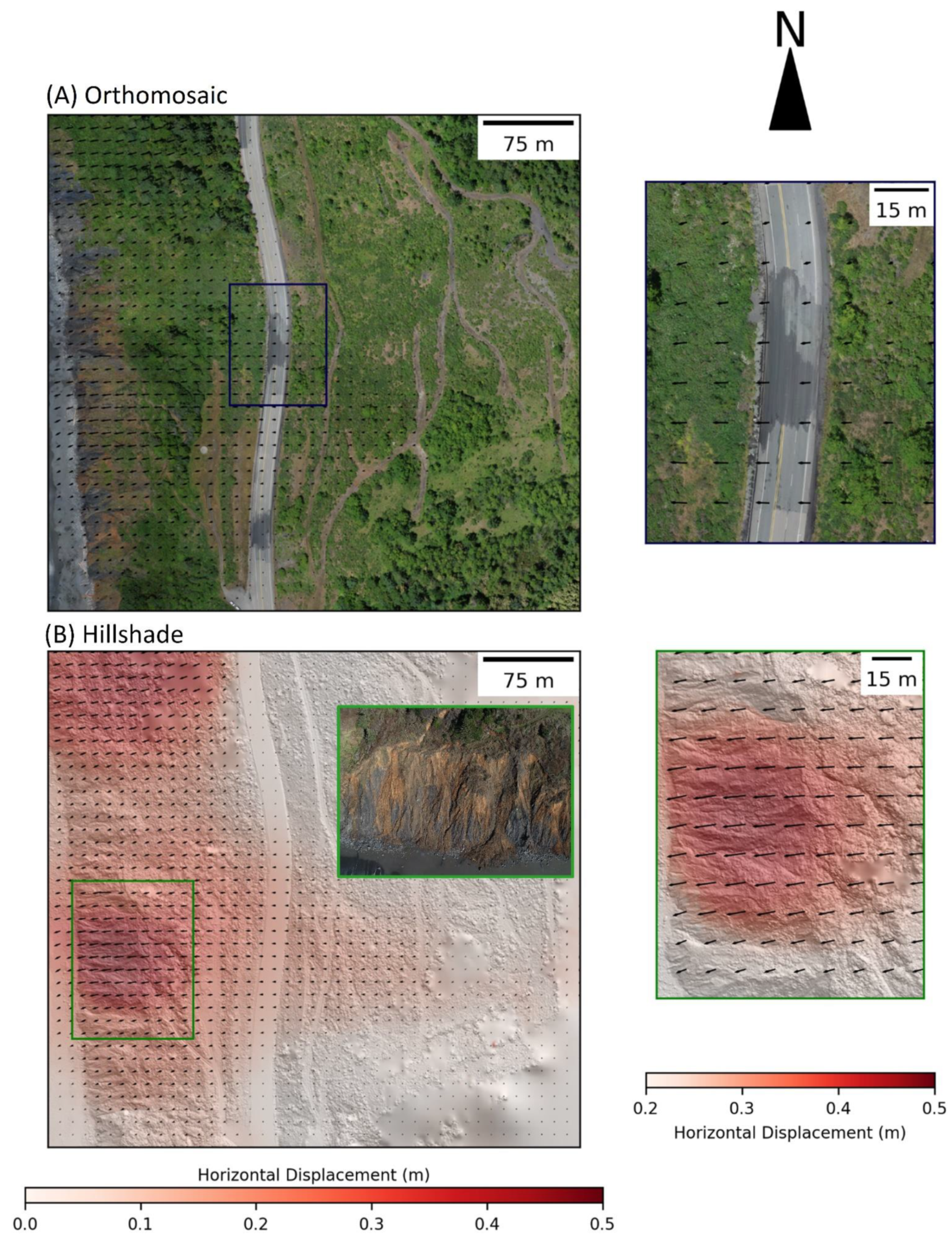

3.5. Experiment #5: Data Source Flexibility

4. Discussion

4.1. Experiment #1: Representation of DEM

4.2. Experiment #2: Cell Size of Input Model

4.3. Experiment #3: Comparison to Other Methods

4.4. Experiment #4: Vertical Component

4.5. Experiment #5: Data Source Flexibility

4.6. Limitations and Future Work

5. Conclusions

- Real world landslide displacements can be accurately measured across a set of DEMs using a deep learning model trained on synthetically generated data, demonstrating that the proposed method is capable of training a model to identify displacements that have occurred without signs of overtraining.

- SlideSim can be completed with relatively few intuitive parameters and requires no direct supervision or tuning of hyperparameters when performing inference with the model.

- A variety of representations of the DEM can be used during both training and inference of the model; however, the hillshade representation produced the highest quality and most consistent results.

- Production of an accurate and dense 2D horizontal displacement grid enables remapping of the elevation values within the DEM to compute the actual vertical displacement that has occurred, producing significantly more accurate results than conventional DEM differencing, which does not account for horizontal displacement.

- The method is robust to the input data source used to generate the DEMs, and the presence of vegetation artifacts within the DEM did not appear to degrade its displacement measurements.

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Höfle, B.; Rutzinger, M. Topographic Airborne LiDAR in Geomorphology: A Technological Perspective. Z. Fur Geomorphol. Suppl. 2011, 55, 1–29.

- Zhang, J.; Lin, X. Advances in Fusion of Optical Imagery and LiDAR Point Cloud Applied to Photogrammetry and Remote Sensing. Int. J. Image Data Fusion 2017, 8, 1–31.

- Iglhaut, J.; Cabo, C.; Puliti, S.; Piermattei, L.; O’Connor, J.; Rosette, J. Structure from Motion Photogrammetry in Forestry: A Review. Curr. For. Rep. 2019, 5, 155–168.

- Jaboyedoff, M.; Oppikofer, T.; Abellán, A.; Derron, M.-H.; Loye, A.; Metzger, R.; Pedrazzini, A. Use of LIDAR in Landslide Investigations: A Review. Nat. Hazards 2012, 61, 5–28.

- Lucieer, A.; de Jong, S.M.; Turner, D. Mapping Landslide Displacements Using Structure from Motion (SfM) and Image Correlation of Multi-Temporal UAV Photography. Prog. Phys. Geogr. 2014, 38, 97–116.

- Jaboyedoff, M.; Derron, M.-H. Landslide Analysis Using Laser Scanners. In Developments in Earth Surface Processes; Elsevier: Amsterdam, The Netherlands, 2020; Volume 23, pp. 207–230.

- Ilg, E.; Mayer, N.; Saikia, T.; Keuper, M.; Dosovitskiy, A.; Brox, T. Flownet 2.0: Evolution of Optical Flow Estimation with Deep Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2462–2470.

- Ren, Z.; Yan, J.; Ni, B.; Liu, B.; Yang, X.; Zha, H. Unsupervised Deep Learning for Optical Flow Estimation. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017.

- Hur, J.; Roth, S. Optical Flow Estimation in the Deep Learning Age. In Modelling Human Motion; Springer: Berlin/Heidelberg, Germany, 2020; pp. 119–140.

- Teed, Z.; Deng, J. Raft: Recurrent All-Pairs Field Transforms for Optical Flow. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer: Berlin/Heidelberg, Germany; pp. 402–419.

- Gordon, S.; Lichti, D.; Stewart, M. Application of a High-Resolution, Ground-Based Laser Scanner for Deformation Measurements. In Proceedings of the 10th International FIG Symposium on Deformation Measurements, Orange, CA, USA, 19–22 March 2001; pp. 23–32.

- Abellán, A.; Oppikofer, T.; Jaboyedoff, M.; Rosser, N.J.; Lim, M.; Lato, M.J. Terrestrial Laser Scanning of Rock Slope Instabilities. Earth Surf. Processes Landf. 2014, 39, 80–97.

- Telling, J.; Lyda, A.; Hartzell, P.; Glennie, C. Review of Earth Science Research Using Terrestrial Laser Scanning. Earth-Sci. Rev. 2017, 169, 35–68.

- Babbel, B.J.; Olsen, M.J.; Che, E.; Leshchinsky, B.A.; Simpson, C.; Dafni, J. Evaluation of Uncrewed Aircraft Systems’ Lidar Data Quality. ISPRS Int. J. Geo-Inf. 2019, 8, 532.

- Westoby, M.J.; Brasington, J.; Glasser, N.F.; Hambrey, M.J.; Reynolds, J.M. ‘Structure-from-Motion’ Photogrammetry: A Low-Cost, Effective Tool for Geoscience Applications. Geomorphology 2012, 179, 300–314.

- Simpson, C.H. A Multivariate Comparison of Drone-Based Structure from Motion and Drone-Based Lidar for Dense Topographic Mapping Applications. 2018. Available online: https://ir.library.oregonstate.edu/concern/graduate_thesis_or_dissertations/q524jv207 (accessed on 4 April 2022).

- Olsen, M.J.; Johnstone, E.; Driscoll, N.; Ashford, S.A.; Kuester, F. Terrestrial Laser Scanning of Extended Cliff Sections in Dynamic Environments: Parameter Analysis. J. Surv. Eng. 2009, 135, 161–169.

- Kumar Mishra, R.; Zhang, Y. A Review of Optical Imagery and Airborne Lidar Data Registration Methods. Open Remote Sens. J. 2012, 5, 54–63.

- El-Sheimy, N. Georeferencing Component of LiDAR Systems. In Topographic Laser Ranging and Scanning; CRC Press: Boca Raton, FL, USA, 2017; pp. 195–214.

- Meng, X.; Currit, N.; Zhao, K. Ground Filtering Algorithms for Airborne LiDAR Data: A Review of Critical Issues. Remote Sens. 2010, 2, 833–860.

- Che, E.; Senogles, A.; Olsen, M.J. Vo-SmoG: A Versatile, Smooth Segment-Based Ground Filter for Point Clouds via Multi-Scale Voxelization. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2021, 8, 59–66.

- Olsen, M.J.; Wartman, J.; McAlister, M.; Mahmoudabadi, H.; O’Banion, M.S.; Dunham, L.; Cunningham, K. To Fill or Not to Fill: Sensitivity Analysis of the Influence of Resolution and Hole Filling on Point Cloud Surface Modeling and Individual Rockfall Event Detection. Remote Sens. 2015, 7, 12103–12134.

- Bitelli, G.; Dubbini, M.; Zanutta, A. Terrestrial Laser Scanning and Digital Photogrammetry Techniques to Monitor Landslide Bodies. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2004, 35, 246–251.

- Conner, J.C.; Olsen, M.J. Automated Quantification of Distributed Landslide Movement Using Circular Tree Trunks Extracted from Terrestrial Laser Scan Data. Comput. Geosci. 2014, 67, 31–39.

- Pfeiffer, J.; Zieher, T.; Bremer, M.; Wichmann, V.; Rutzinger, M. Derivation of Three-Dimensional Displacement Vectors from Multi-Temporal Long-Range Terrestrial Laser Scanning at the Reissenschuh Landslide (Tyrol, Austria). Remote Sens. 2018, 10, 1688.

- Teza, G.; Galgaro, A.; Zaltron, N.; Genevois, R. Terrestrial Laser Scanner to Detect Landslide Displacement Fields: A New Approach. Int. J. Remote Sens. 2007, 28, 3425–3446.

- Telling, J.W.; Glennie, C.; Fountain, A.G.; Finnegan, D.C. Analyzing Glacier Surface Motion Using LiDAR Data. Remote Sens. 2017, 9, 283.

- Khan, M.W.; Dunning, S.; Bainbridge, R.; Martin, J.; Diaz-Moreno, A.; Torun, H.; Jin, N.; Woodward, J.; Lim, M. Low-Cost Automatic Slope Monitoring Using Vector Tracking Analyses on Live-Streamed Time-Lapse Imagery. Remote Sens. 2021, 13, 893.

- Antonello, M.; Gabrieli, F.; Cola, S.; Menegatti, E. Automated Landslide Monitoring through a Low-Cost Stereo Vision System. In Proceedings of the Workshop Popularize Artificial Intelligence at the 13th Conference of the Italian Association for Artificial Intelligence AIxIA, Turin, Italy, 5 December 2013; pp. 37–41.

- Leprince, S.; Ayoub, F.; Klinger, Y.; Avouac, J.-P. Co-Registration of Optically Sensed Images and Correlation (COSI-Corr): An Operational Methodology for Ground Deformation Measurements. In Proceedings of the 2007 IEEE International Geoscience and Remote Sensing Symposium, Barcelona, Spain, 23–28 July 2007; pp. 1943–1946.

- Suncar, O.E.; Rathje, E.M.; Buckley, S.M. Deformations of a Rapidly Moving Landslide from High-Resolution Optical Satellite Imagery. In Proceedings of the Geo-Congress 2013: Stability and Performance of Slopes and Embankments III, San Diego, CA, USA, 3–7 March 2013; pp. 269–278.

- Martin, J.G.; Rathje, E.M. Lateral Spread Deformations from the 2010–2011 New Zealand Earthquakes Measured from Satellite Images and Optical Image Correlation. In Proceedings of the 10th National Conference in Earthquake Engineering, Anchorage, AK, USA, 21–25 July 2014.

- Bickel, V.T.; Manconi, A.; Amann, F. Quantitative Assessment of Digital Image Correlation Methods to Detect and Monitor Surface Displacements of Large Slope Instabilities. Remote Sens. 2018, 10, 865.

- Butler, D.J.; Wulff, J.; Stanley, G.B.; Black, M.J. A Naturalistic Open Source Movie for Optical Flow Evaluation. In Proceedings of the European Conference on Computer Vision, Florence, Italy, 7–13 October 2012; Springer: Berlin/Heidelberg, Germany, 2012; pp. 611–625.

- Dosovitskiy, A.; Fischer, P.; Ilg, E.; Hausser, P.; Hazirbas, C.; Golkov, V.; Van Der Smagt, P.; Cremers, D.; Brox, T. Flownet: Learning Optical Flow with Convolutional Networks. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 2758–2766.

- Booth, A.M.; Lamb, M.P.; Avouac, J.-P.; Delacourt, C. Landslide Velocity, Thickness, and Rheology from Remote Sensing: La Clapière Landslide, France. Geophys. Res. Lett. 2013, 40, 4299–4304.

- Bunn, M.; Leshchinsky, B.; Olsen, M.J. Estimates of Three-Dimensional Rupture Surface Geometry of Deep-Seated Landslides Using Landslide Inventories and High-Resolution Topographic Data. Geomorphology 2020, 367, 107332.

- Mayer, N.; Ilg, E.; Hausser, P.; Fischer, P.; Cremers, D.; Dosovitskiy, A.; Brox, T. A Large Dataset to Train Convolutional Networks for Disparity, Optical Flow, and Scene Flow Estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 4040–4048.

- Senogles, A. Slidepy: A Fast Multi-Threaded Python Library for 3D Landslide Modeling with SIMD Support. 2022. Available online: https://zenodo.org/record/6350744#.Ypgrv-xByMo (accessed on 4 April 2022).

- Senogles, A. Fasterraster: A Fast Multi-Threaded Python Library for Performing Raster Operations with Simple IO. 2022. Available online: https://zenodo.org/record/6350746#.Ypgr6exByMo (accessed on 4 April 2022).

- Smith, L.N.; Topin, N. Super-Convergence: Very Fast Training of Neural Networks Using Large Learning Rates. In International Society for Optics and Photonics, Proceedings of the Artificial Intelligence and Machine Learning for Multi-Domain Operations Applications, Bellingham, WA, USA, 10 May 2019; International Society for Optics and Photonics, SPIE: Bellingham, WA, USA, 2019; Volume 11006, p. 1100612.

- Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. Pytorch: An Imperative Style, High-Performance Deep Learning Library. In Proceedings of the 33rd Conference on Neural Information Processing Systems (NeurIPS2019), Vancouver, Canada, 8–14 December 2019; Volume 32, pp. 8024–8035. Available online: https://proceedings.neurips.cc/paper/2019/file/bdbca288fee7f92f2bfa9f7012727740-Paper.pdf (accessed on 4 April 2022).

- Olsen, M.J.; Leshchinsky, B.; Senogles, A.; Allan, J. Coastal Landslide and Sea Cliff Retreat Monitoring for Climate Change Adaptation and Targeted Risk Assessment. SPR807 Interim Report; Oregon Department of Transportation: Salem, OR, USA, 2020.

- Olsen, M.J.; Johnstone, E.; Kuester, F.; Driscoll, N.; Ashford, S.A. New Automated Point-Cloud Alignment for Ground-Based Light Detection and Ranging Data of Long Coastal Sections. J. Surv. Eng. 2011, 137, 14–25.

- AgiSoft Metashape Professional, Version 1.7.2. 2021. Available online: https://www.agisoft.com/ (accessed on 4 April 2022).

- Takasu, T.; Yasuda, A. Development of the Low-Cost RTK-GPS Receiver with an Open Source Program Package RTKLIB. In Proceedings of the International Symposium on GPS/GNSS, Jeju, Korea, 4–6 November 2009; International Convention Center: Jeju, Korea, 2009; Volume 1.

- Olsen, M.; Massey, C.; Leschinsky, B.; Senogles, A.; Wartman, J. Predicting Seismic-Induced Rockfall Hazard for Targeted Site Mitigation; FHWA-OR-RD-21-06; Oregon Department of Transportation: Salem, OR, USA, 2020. Available online: https://www.oregon.gov/odot/Programs/ResearchDocuments/SPR809RockFall.pdf (accessed on 4 April 2022).

- Nissen, E.; Krishnan, A.K.; Arrowsmith, J.R.; Saripalli, S. Three-Dimensional Surface Displacements and Rotations from Differencing Pre- and Post-Earthquake LiDAR Point Clouds. Geophys. Res. Lett. 2012, 39.

- Otte, M.; Nagel, H.-H. Optical Flow Estimation: Advances and Comparisons. In Proceedings of the European Conference on Computer Vision, Stockholm, Sweden, 2–6 May 1994; Springer: Berlin/Heidelberg, Germany, 1994; pp. 49–60.

- Zevenbergen, L.W.; Thorne, C.R. Quantitative Analysis of Land Surface Topography. Earth Surf. Processes Landf. 1987, 12, 47–56.

- Ziadat, F.M. Effect of Contour Intervals and Grid Cell Size on the Accuracy of DEMs and Slope Derivatives. Trans. GIS 2007, 11, 67–81.

- Yang, P.; Ames, D.P.; Fonseca, A.; Anderson, D.; Shrestha, R.; Glenn, N.F.; Cao, Y. What Is the Effect of LiDAR-Derived DEM Resolution on Large-Scale Watershed Model Results? Environ. Model. Softw. 2014, 58, 48–57.

- Farnebäck, G. Two-Frame Motion Estimation Based on Polynomial Expansion. In Proceedings of the Scandinavian Conference on Image Analysis, Norrköping, Sweden, 11–13 June 2003; Springer: Berlin/Heidelberg, Germany; pp. 363–370.

- Thielicke, W.; Stamhuis, E. PIVlab–towards User-Friendly, Affordable and Accurate Digital Particle Image Velocimetry in MATLAB. J. Open Res. Softw. 2014, 2, e30.

- Reinoso, J.F.; León, C.; Mataix, J. Estimating Horizontal Displacement between DEMs by Means of Particle Image Velocimetry Techniques. Remote Sens. 2016, 8, 14.

- Eltner, A.; Kaiser, A.; Castillo, C.; Rock, G.; Neugirg, F.; Abellán, A. Image-Based Surface Reconstruction in Geomorphometry–Merits, Limits and Developments. Earth Surf. Dyn. 2016, 4, 359–389.

| Grid | Parameter | Value(s) | Description |
|---|---|---|---|
| Landslide Surface Elevation (DEM) | # of DEMs | 2 | Number of unique DEMs used in training |
| Landslide Boundary | # of boundaries | 10 | Number of unique boundaries used in training |
| Landslide Boundary | Scale Factor | 0.95 to 1.05 | Range of scale factors used to randomly resize landslide boundary |
| Landslide Slip Surface (SSEM) | # of Slope rasters | 10 | Number of unique SSEMs used in training |
| Landslide Slip Surface (SSEM) | Scale Factor (DS) | 0.8 to 1.2 | Range of scale factors used to randomly scale landslide depth |
| 2D Horizontal Velocity | # of velocities | 1000 | Number of unique velocity grid files generated for training |
| 2D Horizontal Velocity | u | 0 to −0.25 px/epoch | Range of u component velocities |
| 2D Horizontal Velocity | v | −0.1 to 0.1 px/epoch | Range of v component velocities |
| 2D Horizontal Velocity | # coarse pts | 9 to 64 | Range of coarse grid pts used to initialize velocity grid |
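
The velocity rows of the table above suggest a coarse-to-fine construction: draw a small grid of random velocities, then interpolate it to the raster size. The sketch below is one plausible reading, not the authors' generator. It assumes that "9 to 64 coarse pts" means square grids from 3 × 3 to 8 × 8, that values are drawn uniformly within the listed ranges, and that bilinear interpolation is used for upsampling. The function names are hypothetical.

```python
import numpy as np

def upsample_bilinear(coarse, out_shape):
    """Bilinearly interpolate a small 2D grid to `out_shape` (rows, cols)."""
    n_r, n_c = coarse.shape
    h, w = out_shape
    r = np.linspace(0, n_r - 1, h)
    c = np.linspace(0, n_c - 1, w)
    # Interpolate along columns for each coarse row, then along rows.
    tmp = np.stack([np.interp(c, np.arange(n_c), row) for row in coarse])
    return np.stack([np.interp(r, np.arange(n_r), col) for col in tmp.T], axis=1)

def random_velocity_grid(shape, rng, u_range=(-0.25, 0.0),
                         v_range=(-0.1, 0.1), side_range=(3, 8)):
    """Draw one smooth (u, v) velocity field in px/epoch.

    Assumption: the coarse grid is square, with side length drawn from
    `side_range` (3..8 gives 9..64 coarse points).
    """
    side = int(rng.integers(side_range[0], side_range[1] + 1))
    u_coarse = rng.uniform(u_range[0], u_range[1], size=(side, side))
    v_coarse = rng.uniform(v_range[0], v_range[1], size=(side, side))
    return upsample_bilinear(u_coarse, shape), upsample_bilinear(v_coarse, shape)

rng = np.random.default_rng(0)
u, v = random_velocity_grid((256, 256), rng)
```

Because bilinear interpolation only forms weighted averages of the coarse values, the upsampled field stays inside the sampled velocity ranges.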

| Collection Date | Data Source | Cell Sizes (Δcell, m) | Extent Area (m²) | # of pts (million) | Mean pt Density (pts/0.01 m²) | Std. Dev. pt Density (pts/0.01 m²) |
|---|---|---|---|---|---|---|
| 06/14/2020 | TLS | 0.025, 0.05, 0.1, 0.2 | 10,485.76 | 30 | 29.3 | 176 |
| 06/14/2020 | UAS | 0.1 | 167,772.16 | 278 | 20.5 | 12.9 |
| 06/14/2021 | TLS | 0.025, 0.05, 0.1, 0.2 | 10,485.76 | 26 | 21 | 49.2 |
| 06/14/2021 | UAS | 0.1 | 167,772.16 | 176 | 15.8 | 10.3 |
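
The extent areas in the table above correspond to power-of-two raster sizes if the extents are square, which is an assumption here since the table lists only areas. A short check of the arithmetic, using a hypothetical helper name:

```python
import math

def grid_side_cells(extent_area_m2, cell_size_m):
    """Number of cells along one side of a square extent (assumed square)."""
    side_m = math.sqrt(extent_area_m2)
    return round(side_m / cell_size_m)

# TLS extent: 10,485.76 m^2 is a 102.4 m x 102.4 m square.
for cell in (0.025, 0.05, 0.1, 0.2):
    print(f"TLS, cell = {cell} m: {grid_side_cells(10_485.76, cell)} cells per side")

# UAS extent: 167,772.16 m^2 is a 409.6 m x 409.6 m square.
print(f"UAS, cell = 0.1 m: {grid_side_cells(167_772.16, 0.1)} cells per side")
```

Under that assumption the TLS rasters are 4096, 2048, 1024, and 512 cells per side for the four cell sizes, and the UAS raster is 4096 cells per side at 0.1 m.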

| EPE Statistic | DEM | Slope | Hillshade | Hillshade + Slope |
|---|---|---|---|---|
| Min (m) | 0.003 | 0.016 | 0.001 | 0.002 |
| Max (m) | 0.088 | 0.250 | 0.099 | 0.195 |
| Mean (m) | 0.035 | 0.086 | 0.021 | 0.029 |
| Std. Dev. (m) | 0.023 | 0.036 | 0.015 | 0.025 |
| RMSE (m) | 0.042 | 0.095 | 0.026 | 0.038 |
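
For readers unfamiliar with the hillshade representation compared in the table above, the sketch below computes a simple Lambertian hillshade from a DEM with NumPy. It is illustrative only: the illumination azimuth (315°) and altitude (45°) are common defaults assumed for this example, and the shading parameters used in the study are not stated in this section.

```python
import numpy as np

def hillshade(dem, cell_size, azimuth_deg=315.0, altitude_deg=45.0):
    """Lambertian hillshade in [0, 1] for a north-up DEM.

    Azimuth is measured clockwise from north; rows are assumed to increase
    southward, so the northward gradient is the negated row gradient.
    """
    az = np.radians(azimuth_deg)
    alt = np.radians(altitude_deg)
    d_row, d_col = np.gradient(dem, cell_size)
    dz_dx = d_col       # eastward slope
    dz_dy = -d_row      # northward slope
    norm = np.sqrt(dz_dx**2 + dz_dy**2 + 1.0)
    # Unit surface normal.
    nx, ny, nz = -dz_dx / norm, -dz_dy / norm, 1.0 / norm
    # Unit vector pointing toward the light source.
    lx = np.sin(az) * np.cos(alt)
    ly = np.cos(az) * np.cos(alt)
    lz = np.sin(alt)
    return np.clip(nx * lx + ny * ly + nz * lz, 0.0, 1.0)
```

Because the shade depends on surface gradients rather than absolute elevation, a hillshade raster emphasizes local texture such as scarps and cracks, which is the kind of pattern an optical flow network can track between epochs.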

| EPE Statistic | Δcell = 0.025 m | Δcell = 0.05 m | Δcell = 0.1 m | Δcell = 0.2 m |
|---|---|---|---|---|
| Min (m) | 0.005 | 0.001 | 0.004 | 0.039 |
| Max (m) | 0.128 | 0.099 | 0.110 | 0.139 |
| Mean (m) | 0.036 | 0.021 | 0.046 | 0.079 |
| Std. Dev. (m) | 0.019 | 0.015 | 0.023 | 0.019 |
| RMSE (m) | 0.041 | 0.026 | 0.052 | 0.081 |

| EPE Statistic | Farnebäck Optical Flow | PIVlab | RAFT (Without SlideSim) | RAFT (With SlideSim) |
|---|---|---|---|---|
| Min (m) | 0.009 | 0.005 | 0.003 | 0.001 |
| Max (m) | 0.182 | 0.509 | 12.422 | 0.099 |
| Mean (m) | 0.070 | 0.112 | 3.071 | 0.021 |
| Std. Dev. (m) | 0.040 | 0.088 | 4.536 | 0.015 |
| RMSE (m) | 0.080 | 0.142 | 5.463 | 0.026 |
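
The rows of the table above are summary statistics of the endpoint error (EPE), the Euclidean distance between a predicted displacement vector and its ground truth counterpart. The sketch below shows how such a summary could be computed. It assumes 2D horizontal vectors in metres and a population standard deviation; the exact conventions used in the study are not stated in this section, and `epe_statistics` is a name chosen for this example.

```python
import numpy as np

def epe_statistics(predicted, truth):
    """Summarize endpoint error for (N, 2) arrays of displacement vectors."""
    predicted = np.asarray(predicted, dtype=float)
    truth = np.asarray(truth, dtype=float)
    epe = np.linalg.norm(predicted - truth, axis=1)
    return {
        "min": float(epe.min()),
        "max": float(epe.max()),
        "mean": float(epe.mean()),
        "std": float(epe.std()),              # population std (assumption)
        "rmse": float(np.sqrt(np.mean(epe**2))),
    }

# Two check points: one off by a 3-4-5 triangle (0.05 m), one exact.
stats = epe_statistics([[0.03, 0.04], [0.10, 0.00]],
                       [[0.00, 0.00], [0.10, 0.00]])
```

With a population standard deviation, RMSE² = mean² + std², which gives a quick consistency check on the table: the RAFT (With SlideSim) column has a mean of 0.021 m and a standard deviation of 0.015 m, which combine to about 0.026 m, matching the reported RMSE.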

| EPE Statistic | Original Difference Grid | Remapped Difference Grid |
|---|---|---|
| Min (m) | −0.124 | −0.031 |
| Max (m) | 0.692 | 0.038 |
| Mean (m) | −0.001 | 0.001 |
| Std. Dev. (m) | 0.068 | 0.007 |
| RMSE (m) | 0.068 | 0.007 |

| EPE Statistic | UAS |
|---|---|
| Min (m) | 0.002 |
| Max (m) | 0.084 |
| Mean (m) | 0.027 |
| Std. Dev. (m) | 0.015 |
| RMSE (m) | 0.030 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Senogles, A.; Olsen, M.J.; Leshchinsky, B. SlideSim: 3D Landslide Displacement Monitoring through a Physics-Based Simulation Approach to Self-Supervised Learning. Remote Sens. 2022, 14, 2644. https://doi.org/10.3390/rs14112644
