A Superpixel-by-Superpixel Clustering Framework for Hyperspectral Change Detection

Abstract

1. Introduction

1.1. Background of Hyperspectral Change Detection

1.1.1. Image Algorithm-Based Methods

1.1.2. Image Transform-Based Methods

1.1.3. HSI-CD Specified Methods

1.1.4. Deep Learning-Based Methods

1.2. Problem Statements

1.3. Contributions of the Paper

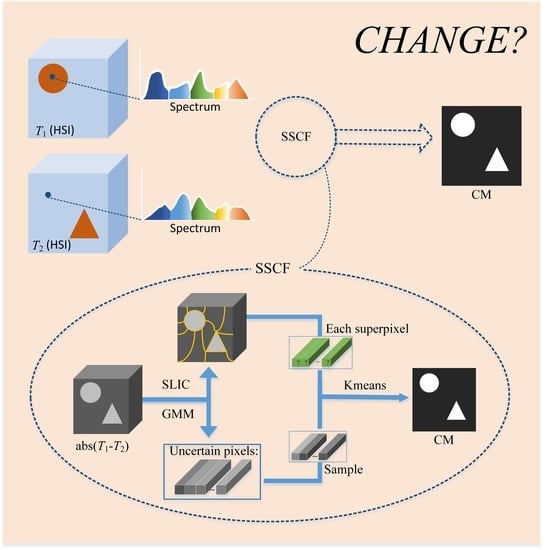

- We propose strategies aimed specifically at detecting subtle changes. In SSCF, SLIC spatially segments changes of different intensities into separate superpixels, which greatly increases the chance that subtle changes are detected. GMM in SSCF is applied to the whole image as a rough threshold that separates uncertain pixels from the rest, highlighting changes so that subtle ones can be captured accurately. The experimental results show that SSCF detects subtle changes without increasing the false alarm rate.

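- Compared with existing traditional methods (CVA, SSIM, ISFA, IRMAD, and MaxtreeCD in the experiments), SSCF achieves more accurate detection. Compared with deep learning methods (Unet and SGCL), SSCF achieves both higher accuracy and shorter detection time. On both the China and USA datasets it obtains the highest OA, F1, and Kappa of all compared methods, and it needs roughly half the detection time of SGCL.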
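The first contribution combines two ingredients: SLIC superpixels and a GMM used as a rough global threshold. The following is a minimal sketch of how such a pipeline could be wired together under explicit assumptions. It is not the authors' SSCF implementation.

The assumptions are:
- The change feature is the per-pixel difference image, summarized by a CVA-style magnitude.
- `simple_slic` is a simplified, NumPy-only SLIC, with no Lab conversion and no connectivity enforcement.
- `gmm_change_posterior` fits a 1-D two-component GMM by EM.
- The thresholds `low` and `high`, and the per-superpixel nearest-centroid rule in `sketch_change_map`, are hypothetical choices made for illustration.

```python
import numpy as np


def simple_slic(features, n_segments=16, compactness=0.5, n_iter=5):
    """Simplified SLIC on an (H, W, C) feature image (no Lab conversion,
    no connectivity post-processing)."""
    h, w, _ = features.shape
    step = max(1, int(np.sqrt(h * w / n_segments)))  # grid interval S
    ys, xs = np.mgrid[step // 2:h:step, step // 2:w:step]
    centers_yx = np.stack([ys.ravel(), xs.ravel()], axis=1).astype(float)
    centers_f = features[ys.ravel(), xs.ravel()].astype(float)
    yy, xx = np.mgrid[0:h, 0:w]
    labels = np.zeros((h, w), dtype=int)
    for _ in range(n_iter):
        dist = np.full((h, w), np.inf)
        for k, ((cy, cx), cf) in enumerate(zip(centers_yx, centers_f)):
            # Assignment: compare only pixels in a 2S x 2S window around the center.
            y0, y1 = max(int(cy) - step, 0), min(int(cy) + step + 1, h)
            x0, x1 = max(int(cx) - step, 0), min(int(cx) + step + 1, w)
            d_feat = np.sum((features[y0:y1, x0:x1] - cf) ** 2, axis=-1)
            d_xy = (yy[y0:y1, x0:x1] - cy) ** 2 + (xx[y0:y1, x0:x1] - cx) ** 2
            d = d_feat + (compactness / step) ** 2 * d_xy  # squared SLIC distance
            closer = d < dist[y0:y1, x0:x1]
            dist[y0:y1, x0:x1][closer] = d[closer]
            labels[y0:y1, x0:x1][closer] = k
        # Update: move each center to the mean position and feature of its pixels.
        for k in range(len(centers_yx)):
            mask = labels == k
            if mask.any():
                centers_yx[k] = [yy[mask].mean(), xx[mask].mean()]
                centers_f[k] = features[mask].mean(axis=0)
    return labels


def gmm_change_posterior(x, n_iter=50):
    """Fit a 1-D two-component Gaussian mixture by EM; return each sample's
    posterior probability of the higher-mean ("changed") component."""
    x = np.asarray(x, dtype=float).ravel()
    hi = x > x.mean()
    if not hi.any() or hi.all():  # (near-)constant input: nothing to separate
        return np.zeros_like(x)
    mu = np.array([x[~hi].mean(), x[hi].mean()])  # split-at-the-mean initialization
    var = np.full(2, x.var())
    wts = np.array([0.5, 0.5])
    for _ in range(n_iter):
        # E-step: responsibilities, computed in log space for stability.
        log_p = np.log(wts) - 0.5 * (np.log(2 * np.pi * var) + (x[:, None] - mu) ** 2 / var)
        log_p -= log_p.max(axis=1, keepdims=True)
        resp = np.exp(log_p)
        resp /= resp.sum(axis=1, keepdims=True)
        # M-step: re-estimate weights, means and floored variances.
        nk = resp.sum(axis=0) + 1e-12
        wts = nk / x.size
        mu = (resp * x[:, None]).sum(axis=0) / nk
        var = (resp * (x[:, None] - mu) ** 2).sum(axis=0) / nk + 1e-6
    return resp[:, np.argmax(mu)]


def sketch_change_map(img1, img2, n_segments=16, low=0.2, high=0.8):
    """Hypothetical GMM + superpixel change map; not the authors' SSCF."""
    diff = img2.astype(float) - img1.astype(float)  # (H, W, B) difference image
    magnitude = np.linalg.norm(diff, axis=-1)  # CVA-style change magnitude
    p = gmm_change_posterior(magnitude).reshape(magnitude.shape)
    change, unchanged = p >= high, p <= low  # confidently labelled pixels
    uncertain = ~change & ~unchanged
    labels = simple_slic(diff, n_segments=n_segments)
    # Global class centroids, used when a superpixel lacks confident pixels of a class.
    g_hi = magnitude[change].mean() if change.any() else magnitude.max()
    g_lo = magnitude[unchanged].mean() if unchanged.any() else magnitude.min()
    result = change.copy()
    for k in np.unique(labels):
        sp = labels == k
        unc = sp & uncertain
        if not unc.any():
            continue
        c_hi = magnitude[sp & change].mean() if (sp & change).any() else g_hi
        c_lo = magnitude[sp & unchanged].mean() if (sp & unchanged).any() else g_lo
        m = magnitude[unc]
        result[unc] = np.abs(m - c_hi) < np.abs(m - c_lo)  # nearest local centroid
    return result


# Synthetic example: a 12 x 12 square shifted by 1.0 in every band.
rng = np.random.default_rng(0)
img1 = rng.normal(0.0, 0.05, size=(32, 32, 4))
img2 = img1 + rng.normal(0.0, 0.05, size=(32, 32, 4))
img2[8:20, 8:20] += 1.0
cmap = sketch_change_map(img1, img2)
```

In this sketch, pixels that the global GMM labels confidently keep their global decision. Only the uncertain pixels are decided locally, inside their own superpixel. This is one possible way for a region containing only subtle changes to keep them, rather than having them suppressed by a single image-wide threshold.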

2. Methodology

2.1. SLIC

2.2. GMM

2.3. Proposed SSCF Method

3. Experiment

3.1. Datasets

3.2. Parameter Setup

3.3. Evaluation Measures

3.4. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Ground truth (rows) / Change map (columns) | 1 | 0 |
|---|---|---|
| 1 | TP | FN |
| 0 | FP | TN |
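The confusion matrix above underlies the OA, F1, and Kappa values reported in the accuracy table. This extract does not reproduce the paper's formulas, so the following sketch assumes the standard definitions:
- OA = (TP + TN)/N.
- F1 = 2TP/(2TP + FP + FN).
- Cohen's Kappa = (OA − P_e)/(1 − P_e), where the chance agreement P_e comes from the row and column totals.

The function names are hypothetical.

```python
import numpy as np


def confusion_counts(change_map, ground_truth):
    """Count TP, FN, FP, TN for binary maps (1 = changed, 0 = unchanged)."""
    cm = np.asarray(change_map).astype(bool)
    gt = np.asarray(ground_truth).astype(bool)
    tp = int(np.sum(cm & gt))
    fn = int(np.sum(~cm & gt))
    fp = int(np.sum(cm & ~gt))
    tn = int(np.sum(~cm & ~gt))
    return tp, fn, fp, tn


def oa_f1_kappa(tp, fn, fp, tn):
    """Overall accuracy, F1 score and Cohen's Kappa from confusion counts."""
    n = tp + fn + fp + tn
    oa = (tp + tn) / n
    f1 = 2 * tp / (2 * tp + fp + fn)  # harmonic mean of precision and recall
    # Chance agreement: ground-truth totals (rows) times change-map totals (columns).
    pe = ((tp + fn) * (tp + fp) + (fp + tn) * (fn + tn)) / n ** 2
    kappa = (oa - pe) / (1 - pe)
    return oa, f1, kappa


gt = np.array([[1, 1, 0, 0], [1, 0, 0, 0]])
cm = np.array([[1, 0, 0, 0], [1, 1, 0, 0]])
counts = confusion_counts(cm, gt)  # (2, 1, 1, 4)
metrics = oa_f1_kappa(*counts)     # OA = 0.75, F1 ≈ 0.667, Kappa ≈ 0.467
```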

| Methods | China Dataset (OA/F1/Kappa) | USA Dataset (OA/F1/Kappa) |
|---|---|---|
| CVA | 0.9689/0.9470/0.9250 | 0.9245/0.8022/0.7574 |
| SSIM | 0.9673/0.9424/0.9196 | 0.9040/0.7372/0.6824 |
| ISFA | 0.9596/0.9323/0.9035 | 0.8344/0.6920/0.5827 |
| IRMAD | 0.9652/0.9417/0.9169 | 0.9180/0.7830/0.7347 |
| MaxtreeCD | 0.9683/0.9453/0.9214 | 0.9255/0.8437/0.7940 |
| Unet | 0.9655/0.9420/0.9175 | 0.8920/0.7553/0.6861 |
| SGCL | 0.9746/0.9562/0.9383 | 0.9130/0.7655/0.7151 |
| SSCF | 0.9770/0.9597/0.9435 | 0.9383/0.8577/0.8184 |

| Methods | China Dataset | USA Dataset |
|---|---|---|
| Unet | 556.35 | 692.74 |
| SGCL | 125.21 | 141.70 |
| SSCF | 61.50 | 73.23 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, Q.; Mu, T.; Gong, H.; Dai, H.; Li, C.; He, Z.; Wang, W.; Han, F.; Tuniyazi, A.; Li, H.; et al. A Superpixel-by-Superpixel Clustering Framework for Hyperspectral Change Detection. Remote Sens. 2022, 14, 2838. https://doi.org/10.3390/rs14122838


Chicago/Turabian Style: Li, Qiuxia, Tingkui Mu, Hang Gong, Haishan Dai, Chunlai Li, Zhiping He, Wenjing Wang, Feng Han, Abudusalamu Tuniyazi, Haoyang Li, et al. 2022. "A Superpixel-by-Superpixel Clustering Framework for Hyperspectral Change Detection." Remote Sensing 14, no. 12: 2838. https://doi.org/10.3390/rs14122838

APA Style: Li, Q., Mu, T., Gong, H., Dai, H., Li, C., He, Z., Wang, W., Han, F., Tuniyazi, A., Li, H., Lang, X., Li, Z., & Wang, B. (2022). A Superpixel-by-Superpixel Clustering Framework for Hyperspectral Change Detection. Remote Sensing, 14(12), 2838. https://doi.org/10.3390/rs14122838