Machine-Learning-Based Change Detection of Newly Constructed Areas from GF-2 Imagery in Nanjing, China

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Area

2.2. Data

2.3. Methods

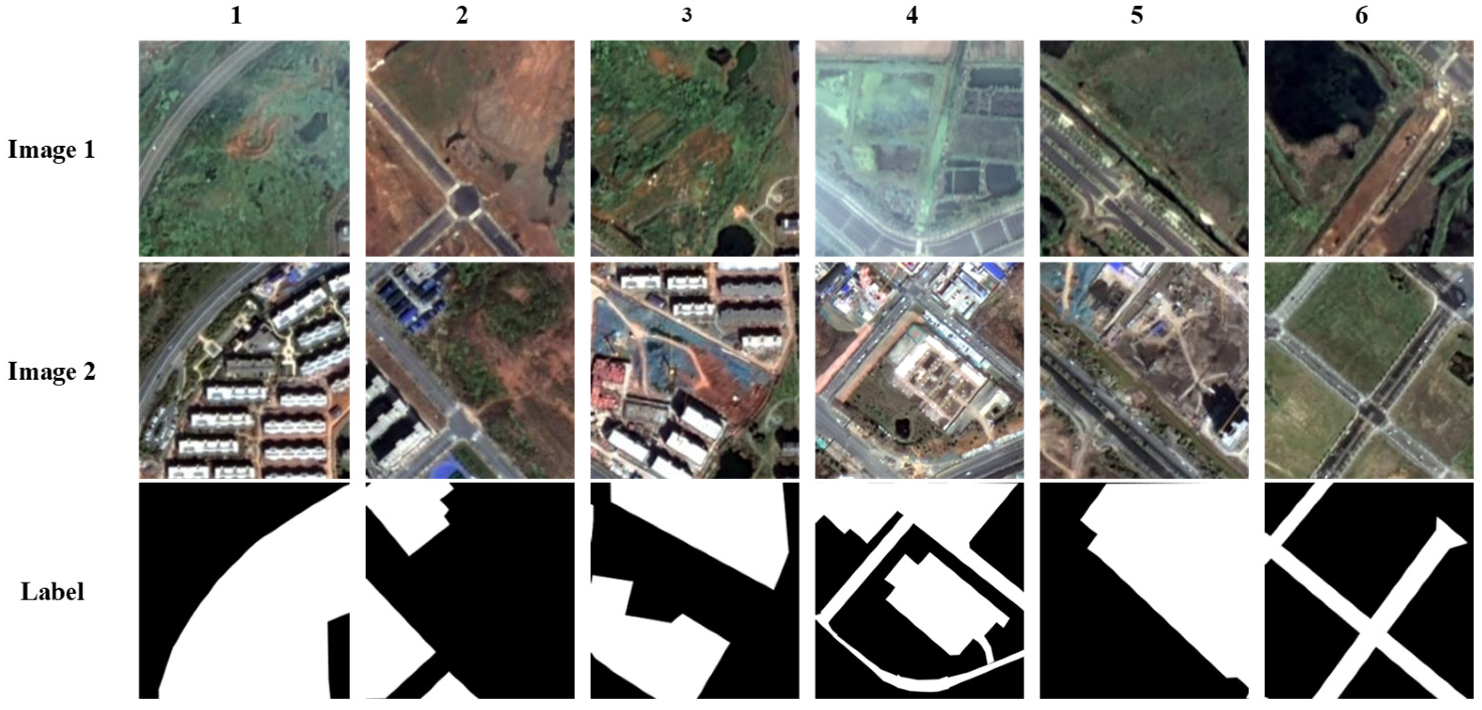

2.3.1. Dataset Preprocessing and Annotation

2.3.2. The STANet-BASE, STANet-BAM, STANet-PAM, SNUNet, and BiT Models

2.4. Evaluation Metrics

3. Results

3.1. Overall Performance

3.2. Change Detection

3.3. Change Detection of the Core Region of Jiangbei New Area in 2021

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

| Method | Precision | Recall | F1 Score |
|---|---|---|---|
| STANet-BASE | 0.725 | 0.847 | 0.781 |
| STANet-BAM | 0.770 | 0.816 | 0.795 |
| STANet-PAM | 0.807 | 0.810 | 0.809 |
| SNUNet | 0.821 | 0.734 | 0.775 |
| BiT | 0.719 | 0.719 | 0.745 |
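The table reports precision, recall, and F1 score for each model. The definitions are not reproduced in this excerpt, so the following is an assumption: it uses the standard pixel-level definitions with "changed" as the positive class, where precision = TP/(TP+FP), recall = TP/(TP+FN), and F1 = 2PR/(P+R). The helpers `change_metrics` and `f1_from_pr` and the `REPORTED` dictionary are hypothetical names used for illustration. This sketch is not the authors' evaluation code. It computes the metrics from flattened binary change masks and recomputes F1 from each table row's precision and recall.

```python
"""Sketch: pixel-level precision, recall and F1 for binary change maps.

Assumes 'changed' (1) is the positive class; hypothetical, not the authors' code.
"""
from typing import Dict, Sequence, Tuple


def f1_from_pr(precision: float, recall: float) -> float:
    """F1 is the harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def change_metrics(pred: Sequence[int], truth: Sequence[int]) -> Tuple[float, float, float]:
    """Return (precision, recall, F1) for flattened 0/1 change masks."""
    if len(pred) != len(truth):
        raise ValueError("prediction and reference masks must have the same size")
    tp = fp = fn = 0
    for p, t in zip(pred, truth):
        if p and t:
            tp += 1  # changed pixel correctly detected
        elif p:
            fp += 1  # false alarm: change predicted where none occurred
        elif t:
            fn += 1  # missed change
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall, f1_from_pr(precision, recall)


# (precision, recall, F1) exactly as listed in the results table.
REPORTED: Dict[str, Tuple[float, float, float]] = {
    "STANet-BASE": (0.725, 0.847, 0.781),
    "STANet-BAM": (0.770, 0.816, 0.795),
    "STANet-PAM": (0.807, 0.810, 0.809),
    "SNUNet": (0.821, 0.734, 0.775),
    "BiT": (0.719, 0.719, 0.745),
}

if __name__ == "__main__":
    for name, (p, r, f1) in REPORTED.items():
        print(f"{name:<12} reported F1 = {f1:.3f}, recomputed = {f1_from_pr(p, r):.3f}")
```

Under these assumed definitions, the recomputed F1 matches the reported value to within about 0.003 for the three STANet variants and SNUNet. For BiT, a precision and recall of 0.719 would give F1 = 0.719 instead of the reported 0.745. Either one of those values was transcribed incorrectly, or the paper computed F1 in a different way (for example, by averaging over image tiles). The table alone does not show which explanation applies.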

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhou, S.; Dong, Z.; Wang, G. Machine-Learning-Based Change Detection of Newly Constructed Areas from GF-2 Imagery in Nanjing, China. Remote Sens. 2022, 14, 2874. https://doi.org/10.3390/rs14122874

Chicago/Turabian Style: Zhou, Shuting, Zhen Dong, and Guojie Wang. 2022. "Machine-Learning-Based Change Detection of Newly Constructed Areas from GF-2 Imagery in Nanjing, China." Remote Sensing 14, no. 12: 2874. https://doi.org/10.3390/rs14122874