CAISOV: Collinear Affine Invariance and Scale-Orientation Voting for Reliable Feature Matching

Abstract

1. Introduction

- (1) A reliable geometric constraint, namely CAI (Collinear Affine Invariance), has been designed, which has two advantages. On the one hand, the mathematical model of CAI is simple: it uses the collinearity of feature points as support to separate outliers from initial matches. Unlike RANSAC-like methods, which fit a single parametric transformation model, this enables CAI to process both rigid and non-rigid images. On the other hand, CAI exploits collinear features across the whole image space and thus observes the initial matches globally, in contrast to methods based on local constraints.

- (2)

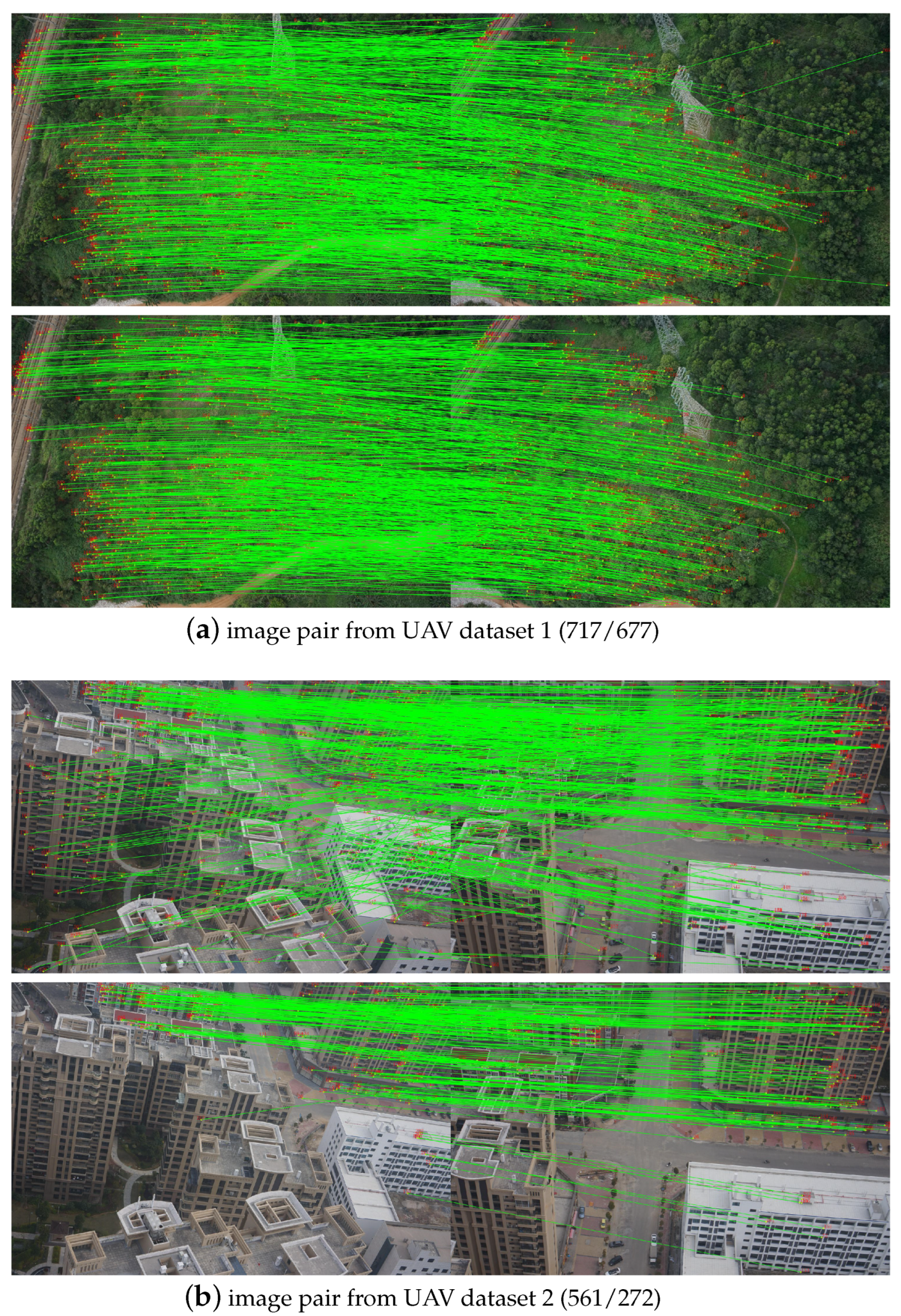

- Based on CAI and SOV (Scale-Orientation Voting) constraints, a hierarchical outlier-removal algorithm has been designed and implemented, which is reliable to high outlier ratios. By using both rigid and non-rigid images, the performance of the proposed algorithm has been verified and compared with state-of-the-art methods. Furthermore, the proposed algorithm achieves good performance in UAV image orientation.
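The invariance that CAI relies on is a standard property of affine maps. In the notation below (ours, not the paper's Equation (1)), a point $\mathbf{x}_i$ lying on the line through $\mathbf{x}_j$ and $\mathbf{x}_k$ at parameter $t$ keeps both its collinearity and its parameter under any affine map $\mathbf{x}' = \mathbf{A}\mathbf{x} + \mathbf{b}$:

```latex
\mathbf{x}_i = \mathbf{x}_j + t\,(\mathbf{x}_k - \mathbf{x}_j)
\;\Longrightarrow\;
\mathbf{x}'_i = \mathbf{A}\mathbf{x}_j + t\,\mathbf{A}(\mathbf{x}_k - \mathbf{x}_j) + \mathbf{b}
= \mathbf{x}'_j + t\,(\mathbf{x}'_k - \mathbf{x}'_j)
```

Hence, for correct matches related by an affine map, the second-image points of a collinear first-image triplet must also be collinear with the same $t$; a violated triplet signals that at least one of its three matches is an outlier.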

2. Methodology

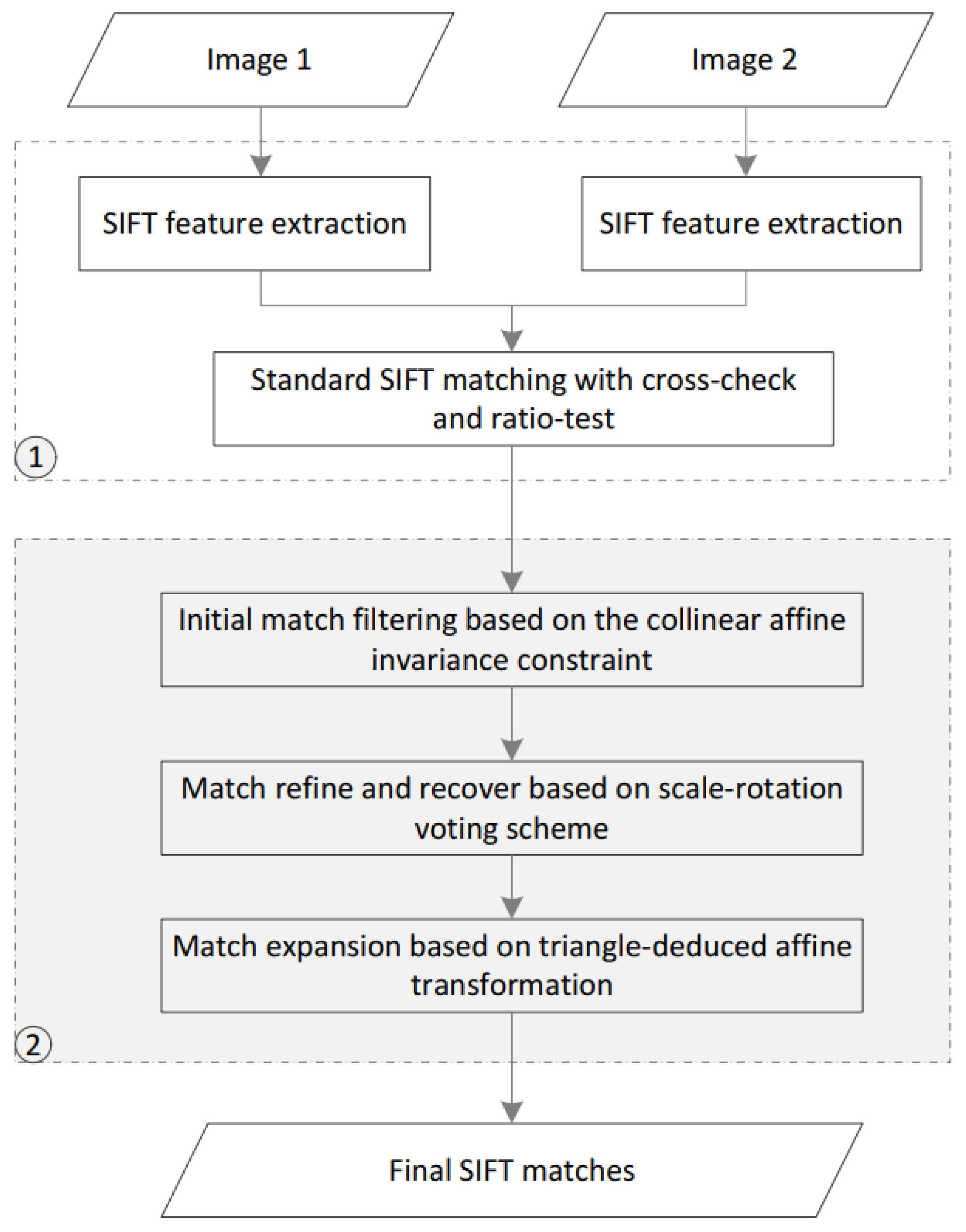

2.1. Overview of the Proposed Algorithm

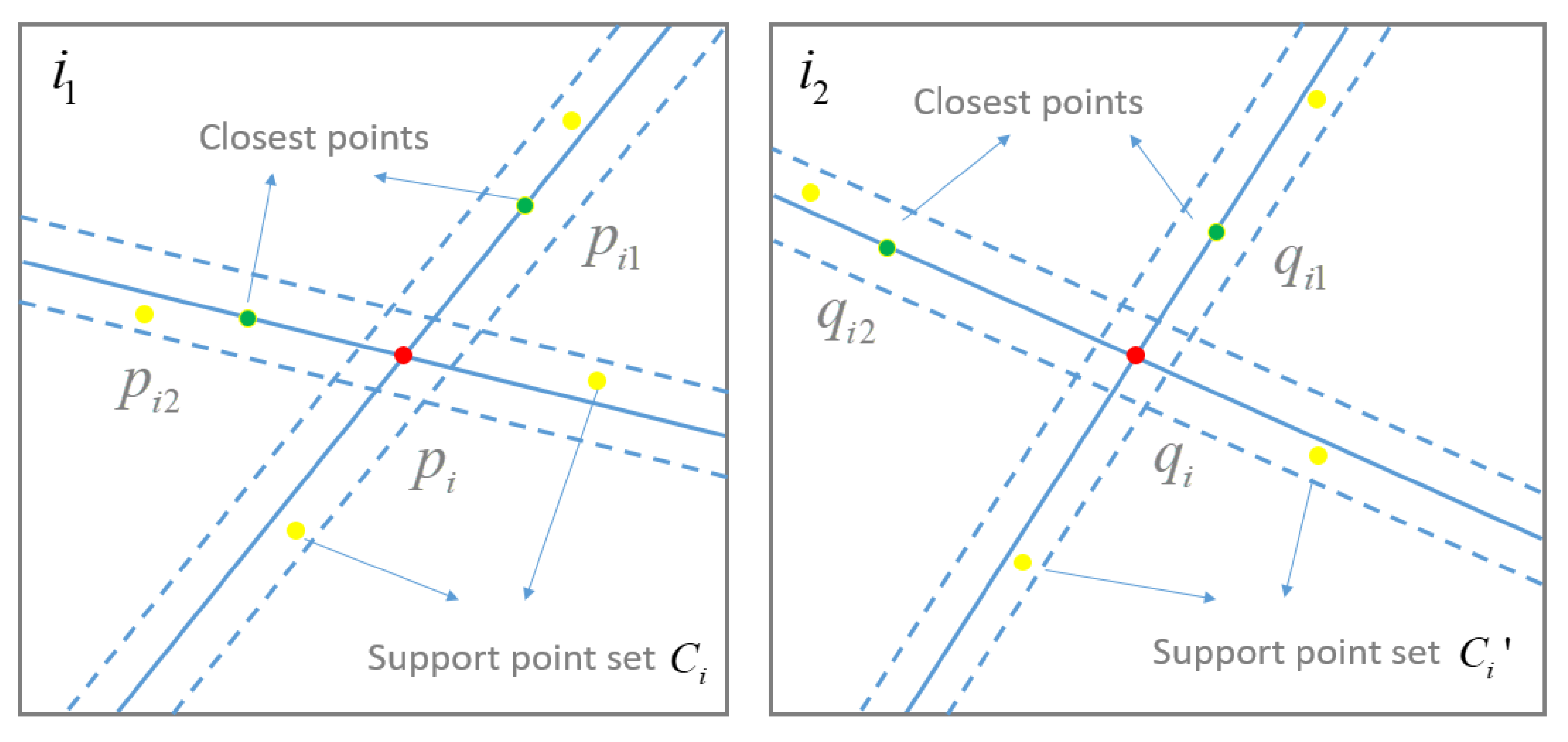

2.2. Collinear Affine Invariance-Based Geometric Constraint

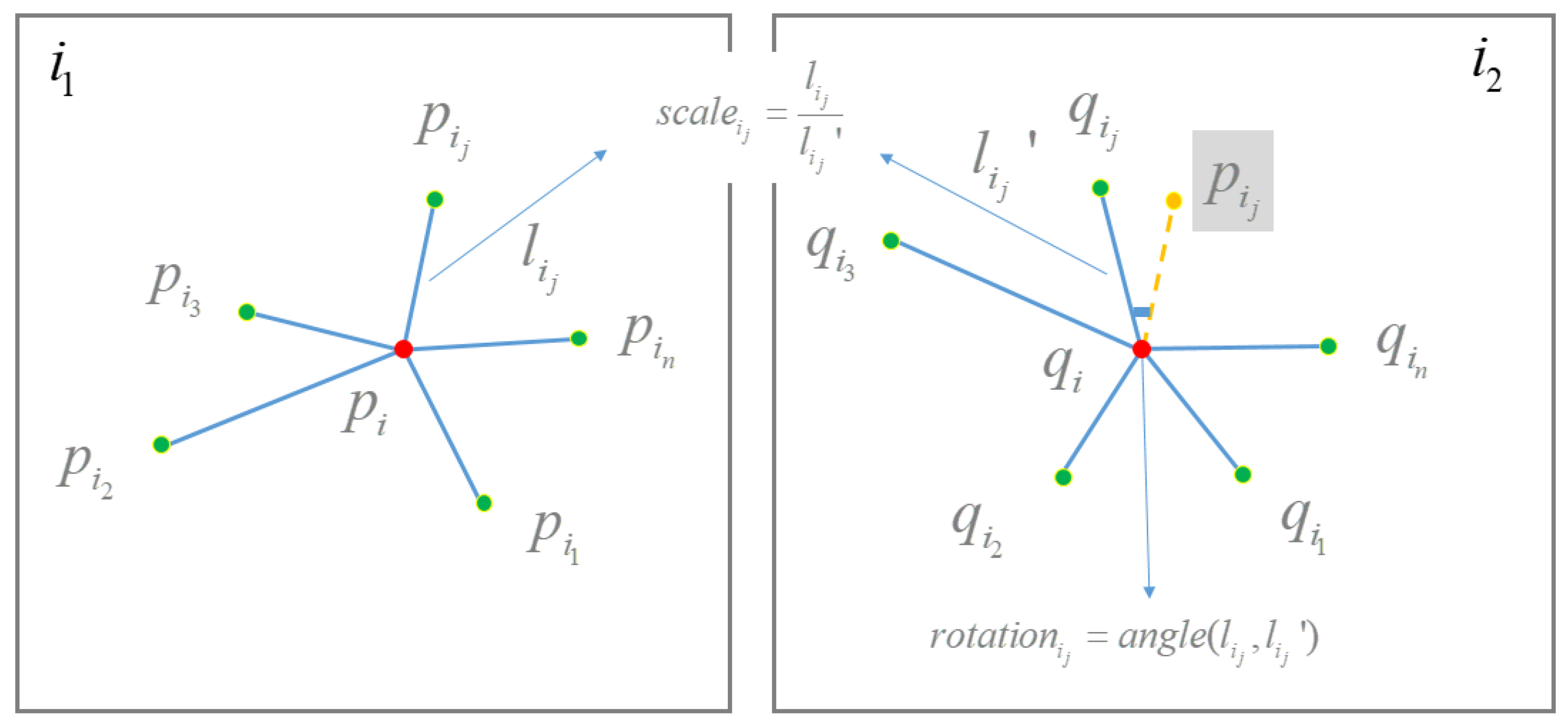

2.3. Scale-Orientation Voting-Based Geometric Constraint

2.4. Implementation of the Proposed Algorithm

- (1) Outlier removal based on the CAI geometric constraint. The similarity score of each match is calculated according to Equation (1). To cope with high outlier ratios, initial matches whose similarity score equals zero are first eliminated directly. The similarity scores of the remaining matches are then recalculated, and matches with a score less than a pre-defined threshold are removed. These operations are repeated until no remaining match has a similarity score below the threshold, which is set to 0.1 in this study.

- (2) Outlier removal based on the SOV geometric constraint. After step (1), the retained matches, which now have a higher inlier ratio, are used to search for the correct scale and rotation parameters between the two images of the pair. Matches whose scale and rotation parameters fall into the correct voting bins are labeled as inliers; the others are labeled as outliers. To recover falsely removed matches, match expansion is executed simultaneously in this step: for each removed match, the scale and rotation parameters are calculated again, and if they fall into the correct voting bin, the match is added to the inliers.

- (3) Match expansion based on the triangle constraint. After the CAI and SOV constraints have been applied, refined matches with a high inlier ratio are obtained. To recover further inliers, match expansion is conducted again based on the transformation deduced from corresponding triangles, as presented in [29]. During this expansion, the refined matches are used to construct a Delaunay triangulation and its corresponding graph. For each feature point, candidate feature points are found using the transformation deduced from a pair of corresponding triangles, and classical local feature matching is then executed between the feature point and its candidates. The workflow of the proposed CAISOV algorithm is presented in Algorithm 1.
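Step (1) can be sketched in Python as follows. Equation (1) is not reproduced in this section, so `collinear_score` is a hypothetical stand-in for it: a match scores the fraction of its collinear first-image triplets whose collinearity and position ratio t survive in the second image (the affine-invariant property CAI is built on). The tolerances `tol` and `rtol`, and all function names, are assumptions; only the zero-score first pass, the iteration, and the 0.1 threshold come from the text.

```python
import math
from typing import Callable, List, Sequence, Tuple

Point = Tuple[float, float]
Match = Tuple[Point, Point]  # (location in image 1, location in image 2)


def _line_param(p: Point, a: Point, b: Point) -> Tuple[float, float]:
    """Position t of p along a->b (p ~ a + t*(b - a)) and its distance from that line."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    length2 = dx * dx + dy * dy
    px, py = p[0] - a[0], p[1] - a[1]
    t = (px * dx + py * dy) / length2
    dist = abs(px * dy - py * dx) / math.sqrt(length2)
    return t, dist


def collinear_score(matches: Sequence[Match], tol: float = 0.5, rtol: float = 0.05) -> List[float]:
    """Hypothetical stand-in for Equation (1): for each match, the fraction of triplets that are
    collinear in image 1 and stay collinear, with the same t, in image 2."""
    scores = []
    n = len(matches)
    for i in range(n):
        p_i, q_i = matches[i]
        support = total = 0
        for j in range(n):
            for k in range(j + 1, n):
                if i == j or i == k:
                    continue
                (p_j, q_j), (p_k, q_k) = matches[j], matches[k]
                if math.dist(p_j, p_k) < 1e-9 or math.dist(q_j, q_k) < 1e-9:
                    continue  # degenerate line, skip
                t1, d1 = _line_param(p_i, p_j, p_k)
                if d1 > tol:
                    continue  # the three points are not collinear in image 1
                total += 1
                t2, d2 = _line_param(q_i, q_j, q_k)
                if d2 <= tol and abs(t1 - t2) <= rtol:
                    support += 1  # collinearity and the ratio t survived the mapping
        scores.append(support / total if total else 0.0)
    return scores


def cai_filter(matches: Sequence[Match],
               score_fn: Callable[[Sequence[Match]], List[float]] = collinear_score,
               threshold: float = 0.1, max_iter: int = 50) -> List[Match]:
    """Iterative elimination following step (1)."""
    scores = score_fn(matches)
    kept = [m for m, s in zip(matches, scores) if s > 0.0]  # drop zero-score matches first
    for _ in range(max_iter):
        scores = score_fn(kept)
        survivors = [m for m, s in zip(kept, scores) if s >= threshold]
        if len(survivors) == len(kept):
            break  # no remaining match is below the threshold
        kept = survivors
    return kept


def shear(p: Point) -> Point:
    """A known affine map used only to build the toy example."""
    return (p[0] + 0.5 * p[1] + 10.0, p[1] + 20.0)


grid = [(float(x), float(y)) for x in (0, 10, 20, 30) for y in (0, 10, 20, 30)]
inliers = [(p, shear(p)) for p in grid]
outliers = [((15.0, 0.0), (100.0, 100.0)), ((5.0, 25.0), (-40.0, 60.0))]
result = cai_filter(inliers + outliers)
print(len(result))  # 16
```

The triple loop is cubic in the number of matches and only illustrates the control flow; a practical implementation would need a cheaper way to enumerate collinear supports.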

Algorithm 1 CAISOV

Input: initial candidate matches M

Output: final matches
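Steps (2) and (3) of Section 2.4 can be sketched in the same spirit. The keypoint layout (x, y, scale, orientation in degrees), the bin widths (0.5 in log2 scale, 30° in rotation), the 3-pixel search radius, and the descriptor-distance limit are all assumptions, as are the function names. For step (3), the affine map is solved exactly from one pair of corresponding triangles with Cramer's rule, which may differ in detail from the transformation of [29]; the Delaunay triangulation that supplies the triangles is assumed to be given (for example by `scipy.spatial.Delaunay`).

```python
import math
from collections import Counter
from typing import List, Optional, Sequence, Tuple

# Assumed keypoint layout (x, y, scale, orientation in degrees), as SIFT-like detectors provide.
Keypoint = Tuple[float, float, float, float]
KpMatch = Tuple[Keypoint, Keypoint]
Point = Tuple[float, float]
Affine = Tuple[Tuple[float, float, float], Tuple[float, float, float]]


def sov_bin(kp1: Keypoint, kp2: Keypoint, scale_bin: float = 0.5, rot_bin: float = 30.0) -> Tuple[int, int]:
    """Voting bin of one match from its log2 scale ratio and orientation difference."""
    log_scale = math.log2(kp2[2] / kp1[2])
    rotation = (kp2[3] - kp1[3]) % 360.0  # wrap into [0, 360)
    return math.floor(log_scale / scale_bin), int(rotation // rot_bin)


def sov_filter(retained: Sequence[KpMatch], removed: Sequence[KpMatch]) -> Tuple[List[KpMatch], Tuple[int, int]]:
    """Vote with the CAI-retained matches, keep those in the winning bin, re-admit removed ones in it."""
    votes = Counter(sov_bin(a, b) for a, b in retained)
    peak = votes.most_common(1)[0][0]
    inliers = [m for m in retained if sov_bin(*m) == peak]
    recovered = [m for m in removed if sov_bin(*m) == peak]  # match expansion
    return inliers + recovered, peak


def affine_from_triangle(src: Sequence[Point], dst: Sequence[Point]) -> Affine:
    """Affine map taking the three vertices of src onto those of dst (Cramer's rule)."""
    (x1, y1), (x2, y2), (x3, y3) = src
    det = x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)
    if abs(det) < 1e-12:
        raise ValueError("degenerate triangle")

    def solve(v1: float, v2: float, v3: float) -> Tuple[float, float, float]:
        # Coefficients (a, b, c) with a*x + b*y + c = v at each vertex.
        a = (v1 * (y2 - y3) + v2 * (y3 - y1) + v3 * (y1 - y2)) / det
        b = (v1 * (x3 - x2) + v2 * (x1 - x3) + v3 * (x2 - x1)) / det
        c = (v1 * (x2 * y3 - x3 * y2) + v2 * (x3 * y1 - x1 * y3) + v3 * (x1 * y2 - x2 * y1)) / det
        return a, b, c

    return solve(*(p[0] for p in dst)), solve(*(p[1] for p in dst))


def triangle_guided_match(p: Point, desc: Sequence[float], T: Affine, points2: Sequence[Point],
                          descs2: Sequence[Sequence[float]], radius: float = 3.0,
                          max_desc_dist: float = 0.5) -> Optional[int]:
    """Predict p in image 2 with T, then pick the closest descriptor inside the search window."""
    (a, b, c), (d, e, f) = T
    px, py = a * p[0] + b * p[1] + c, d * p[0] + e * p[1] + f
    best, best_dist = None, max_desc_dist
    for idx, (q, dq) in enumerate(zip(points2, descs2)):
        if math.hypot(q[0] - px, q[1] - py) > radius:
            continue  # candidate lies outside the predicted window
        dist = math.dist(desc, dq)
        if dist < best_dist:
            best, best_dist = idx, dist
    return best


good = [((float(x), float(x), 2.0, 10.0), (x + 1.0, x + 1.0, 4.2, 55.0)) for x in range(5)]
retained = good + [((0.0, 0.0, 2.0, 10.0), (9.0, 9.0, 1.0, 210.0)),
                   ((1.0, 1.0, 3.0, 0.0), (5.0, 5.0, 3.0, 300.0))]
removed = [((7.0, 7.0, 1.5, 20.0), (3.0, 3.0, 3.2, 64.0)),
           ((8.0, 8.0, 1.0, 0.0), (2.0, 2.0, 1.0, 0.0))]
sov_result, peak = sov_filter(retained, removed)
print(peak, len(sov_result))  # (2, 1) 6

T = affine_from_triangle([(0, 0), (10, 0), (0, 10)], [(3, 7), (23, 2), (8, 22)])
best = triangle_guided_match((4, 6), (1.0, 0.0, 0.0), T,
                             [(14.5, 14.0), (30.0, 30.0), (13.0, 16.0)],
                             [(0.9, 0.1, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)])
print(best)  # 0
```

Accepting only the single winning bin is the simplest choice; matches near a bin boundary can be lost, which is why voting schemes often also accept neighboring bins.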

3. Experimental Results

3.1. Datasets and Evaluation Metrics

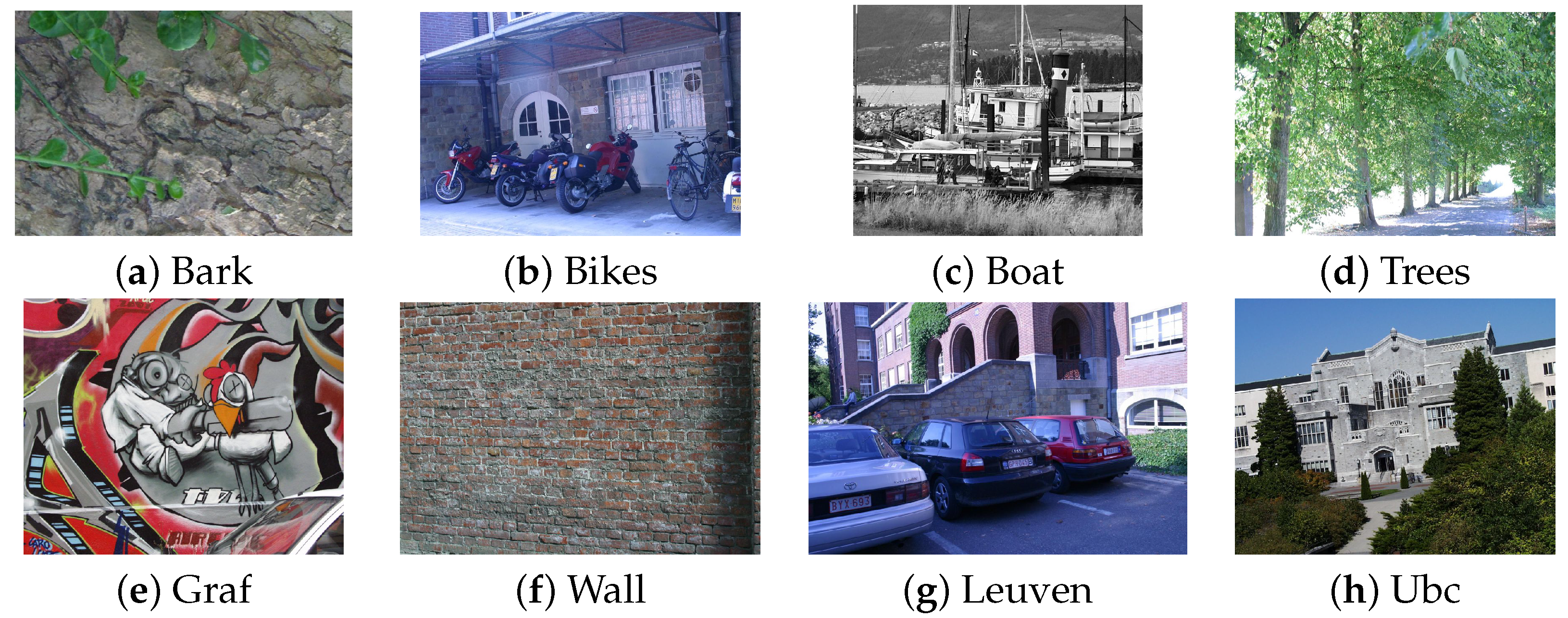

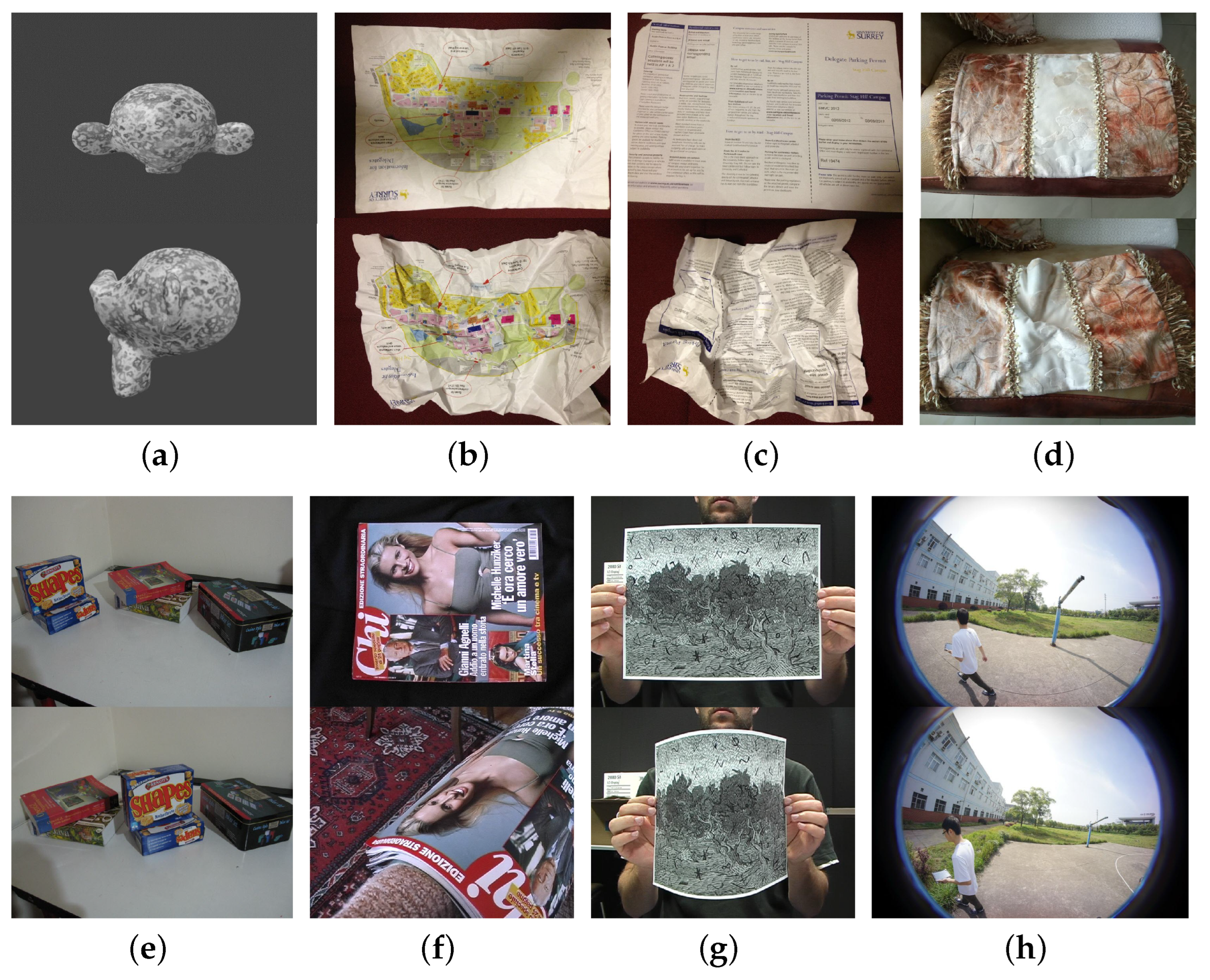

- The first dataset is the well-known Oxford benchmark, which has been widely used to evaluate feature detectors and descriptors [36], as presented in Figure 4. It consists of eight image sequences, each with six images that exhibit photometric and geometric deformations, e.g., changes of viewpoint and illumination, motion blur, and scale and rotation changes.

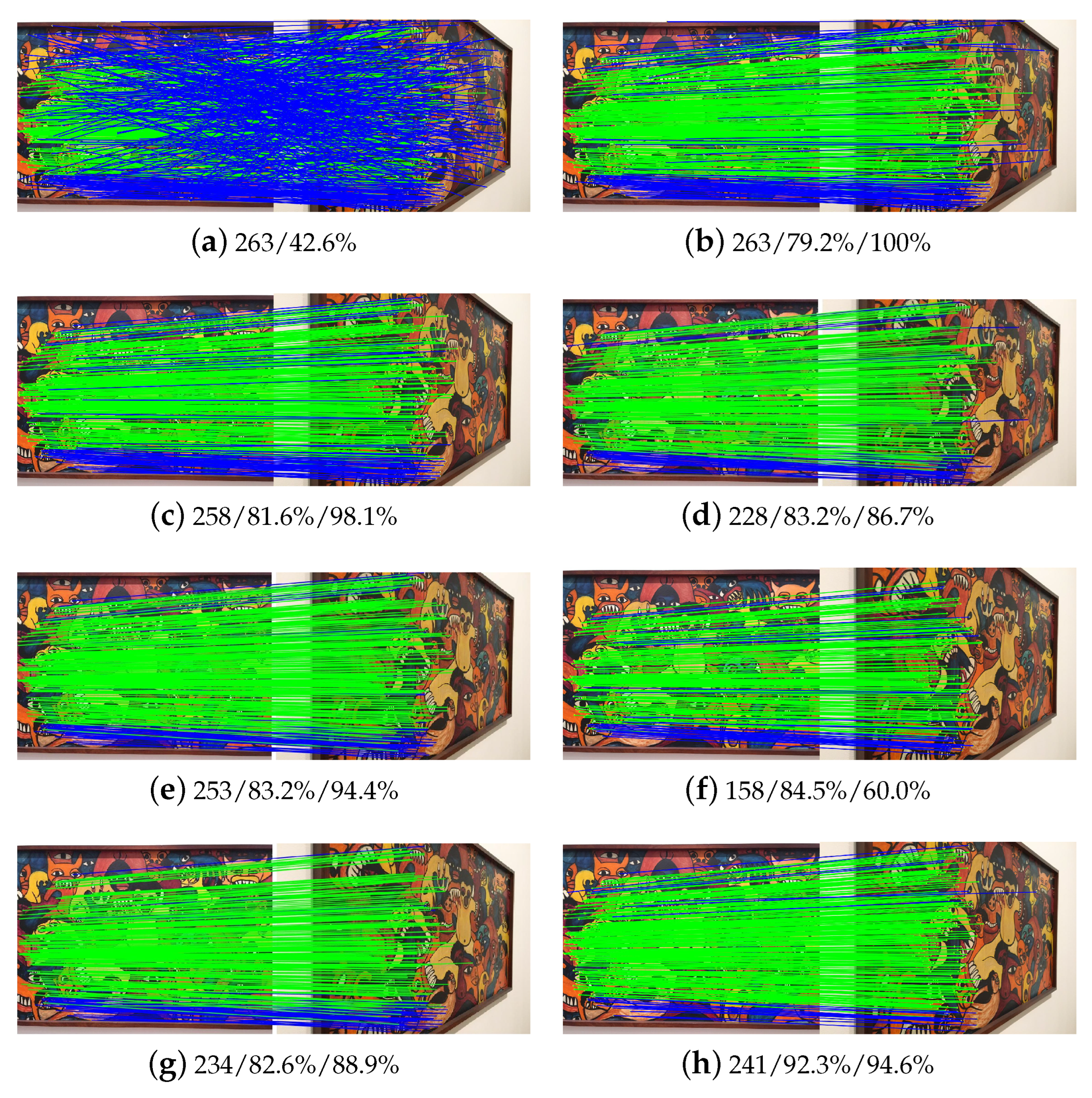

- The second dataset is the well-known HPatches benchmark [37], which has been widely used to train and test CNN-based feature descriptors. Like the Oxford dataset, it is organized into sequences of six images; of its 117 image sequences, 8 have been selected to evaluate rigid image matching, as shown in Figure 5.

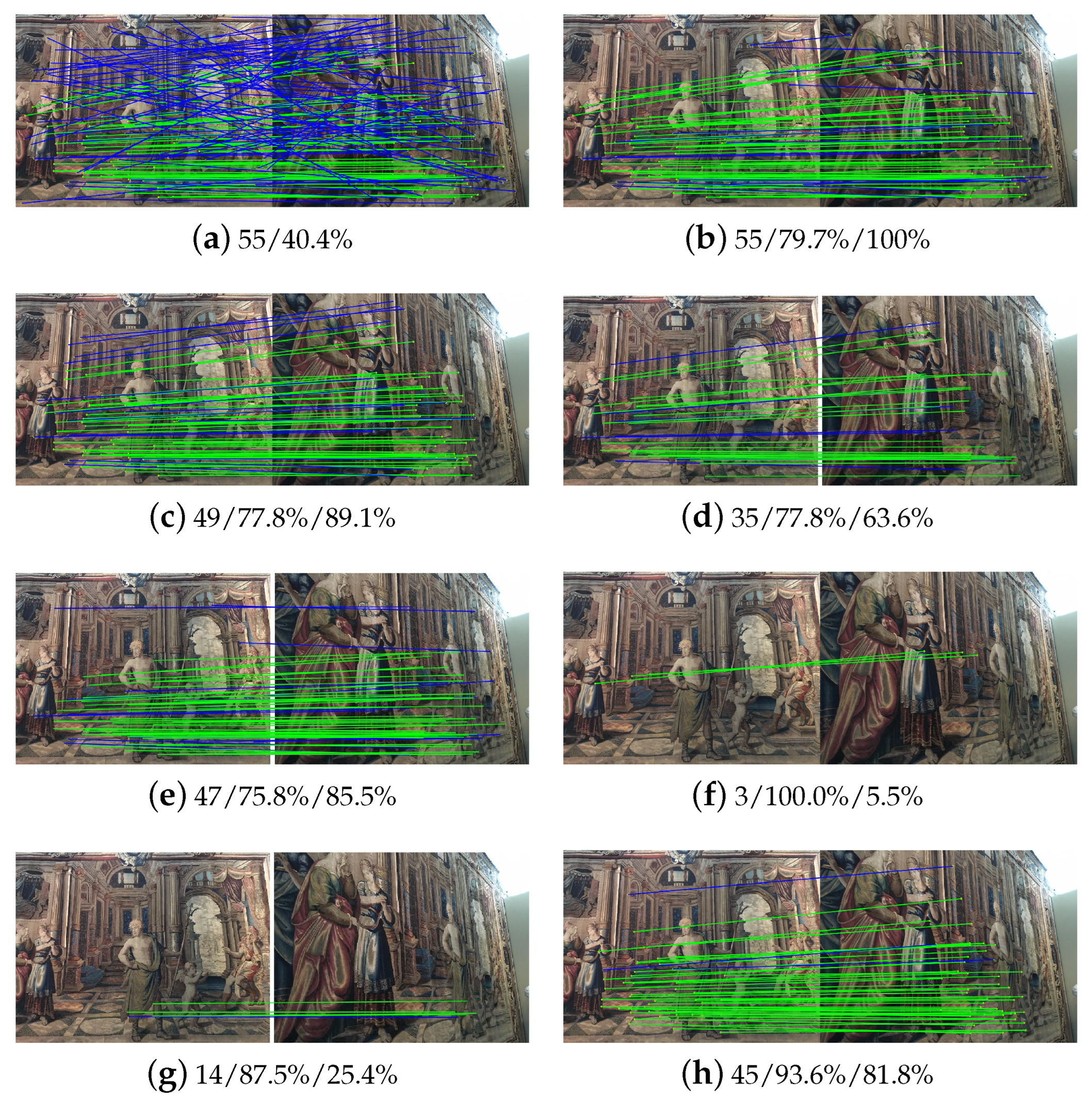

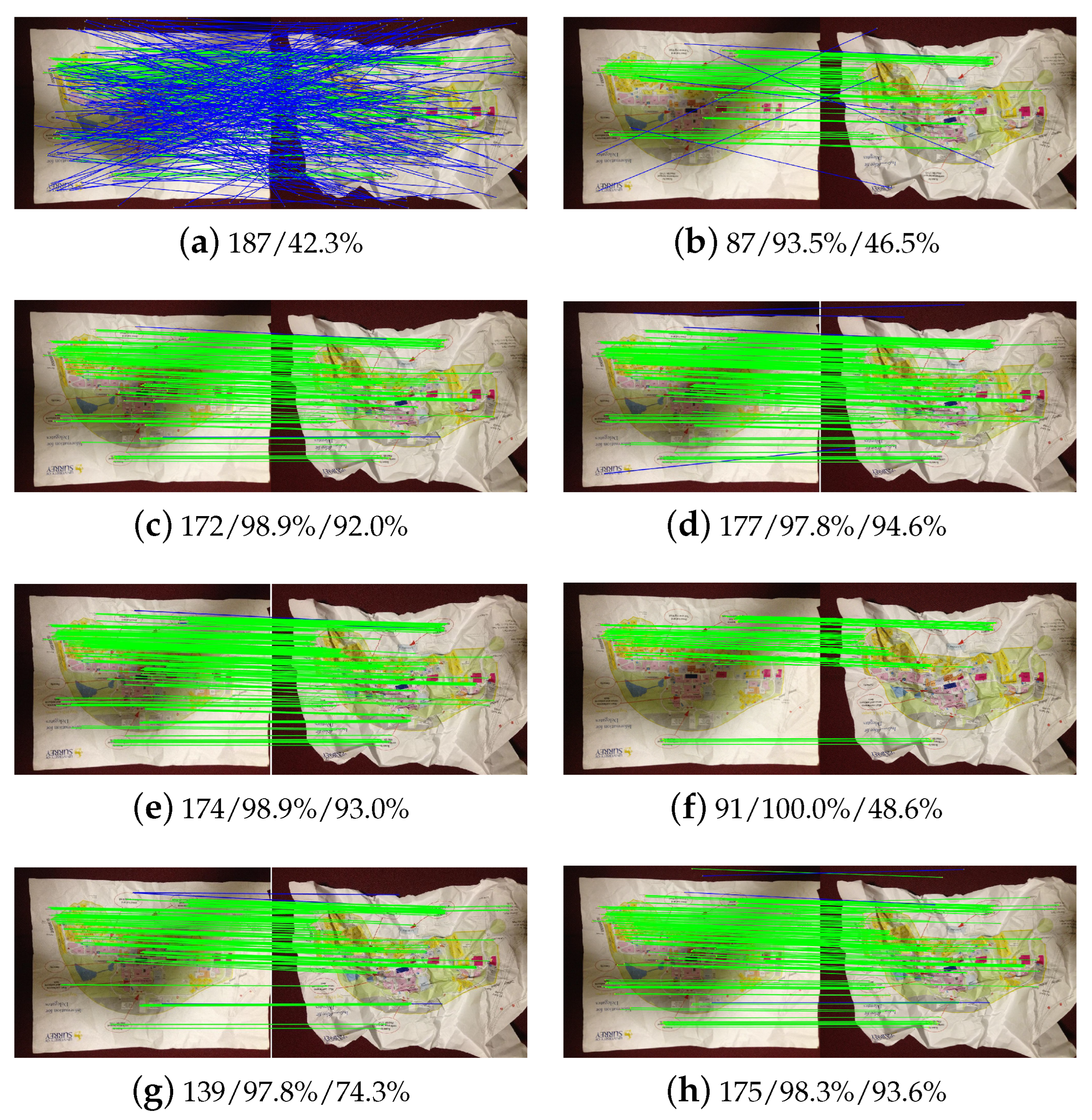

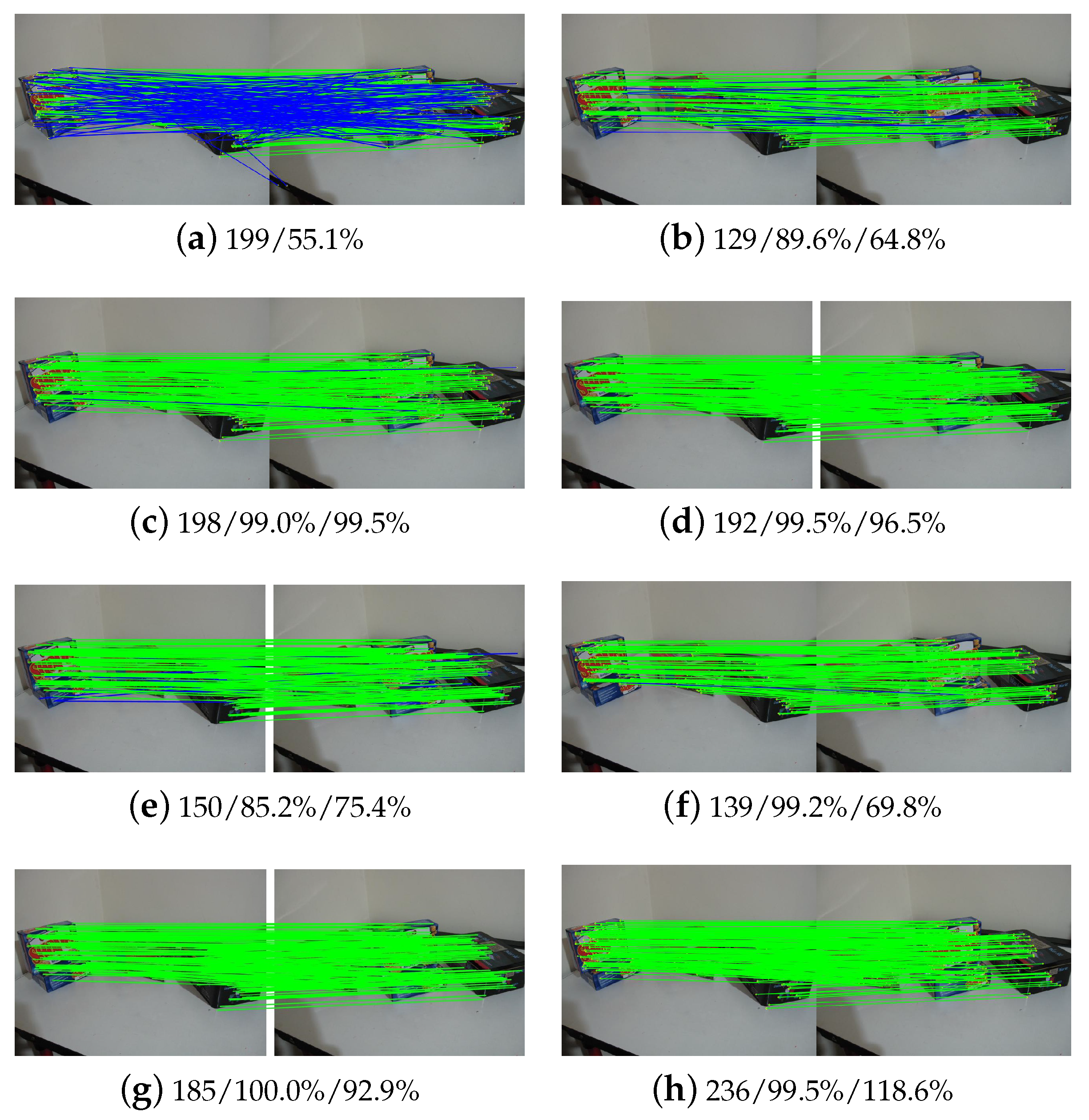

- The third dataset includes 8 image pairs with non-rigid deformations, such as bending and extrusion, as shown in Figure 6. Of the three datasets, the first two provide ground-truth transformations between image pairs, namely the homography matrices between the first image and each of the other images in a sequence. For these two datasets, the provided model parameters have been used to separate inliers from initial matches: in this study, matches whose transformation error is less than 5 pixels are defined as inliers. For the third dataset, we have prepared ground-truth data through manual inspection.
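The inlier labeling used for the first two datasets can be sketched as follows: each match's first-image point is transferred by the ground-truth homography and compared with its second-image point using the 5-pixel threshold. The precision and recall definitions (correct matches among those retained, and retained correct matches among all correct initial matches) are the commonly used ones and are assumed here; they cover only matches drawn from the initial set, so matches added by expansion are not accounted for. The function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Homography = Sequence[Sequence[float]]  # 3x3, row-major


def transfer(H: Homography, p: Point) -> Point:
    """Map a first-image point into the second image with homography H."""
    x, y = p
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return ((H[0][0] * x + H[0][1] * y + H[0][2]) / w,
            (H[1][0] * x + H[1][1] * y + H[1][2]) / w)


def label_inliers(matches: Sequence[Tuple[Point, Point]], H: Homography,
                  max_error: float = 5.0) -> List[bool]:
    """True where the transfer error is below max_error pixels."""
    labels = []
    for p1, p2 in matches:
        u, v = transfer(H, p1)
        labels.append(math.hypot(u - p2[0], v - p2[1]) < max_error)
    return labels


def precision_recall(initial_labels: Sequence[bool], kept_indices: Sequence[int]) -> Tuple[float, float]:
    """Assumed definitions: precision over retained matches, recall over correct initial matches."""
    kept_correct = sum(1 for i in kept_indices if initial_labels[i])
    total_correct = sum(initial_labels)
    precision = kept_correct / len(kept_indices) if kept_indices else 0.0
    recall = kept_correct / total_correct if total_correct else 0.0
    return precision, recall


H = [[1.0, 0.0, 10.0], [0.0, 1.0, -5.0], [0.0, 0.0, 1.0]]  # toy translation
matches = [((0.0, 0.0), (10.0, -5.0)), ((5.0, 5.0), (15.0, 1.0)),
           ((3.0, 3.0), (30.0, 30.0)), ((1.0, 1.0), (14.0, -4.0))]
labels = label_inliers(matches, H)
print(labels)  # [True, True, False, True]
p, r = precision_recall(labels, kept_indices=[0, 1, 2])  # both equal 2/3 here
```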

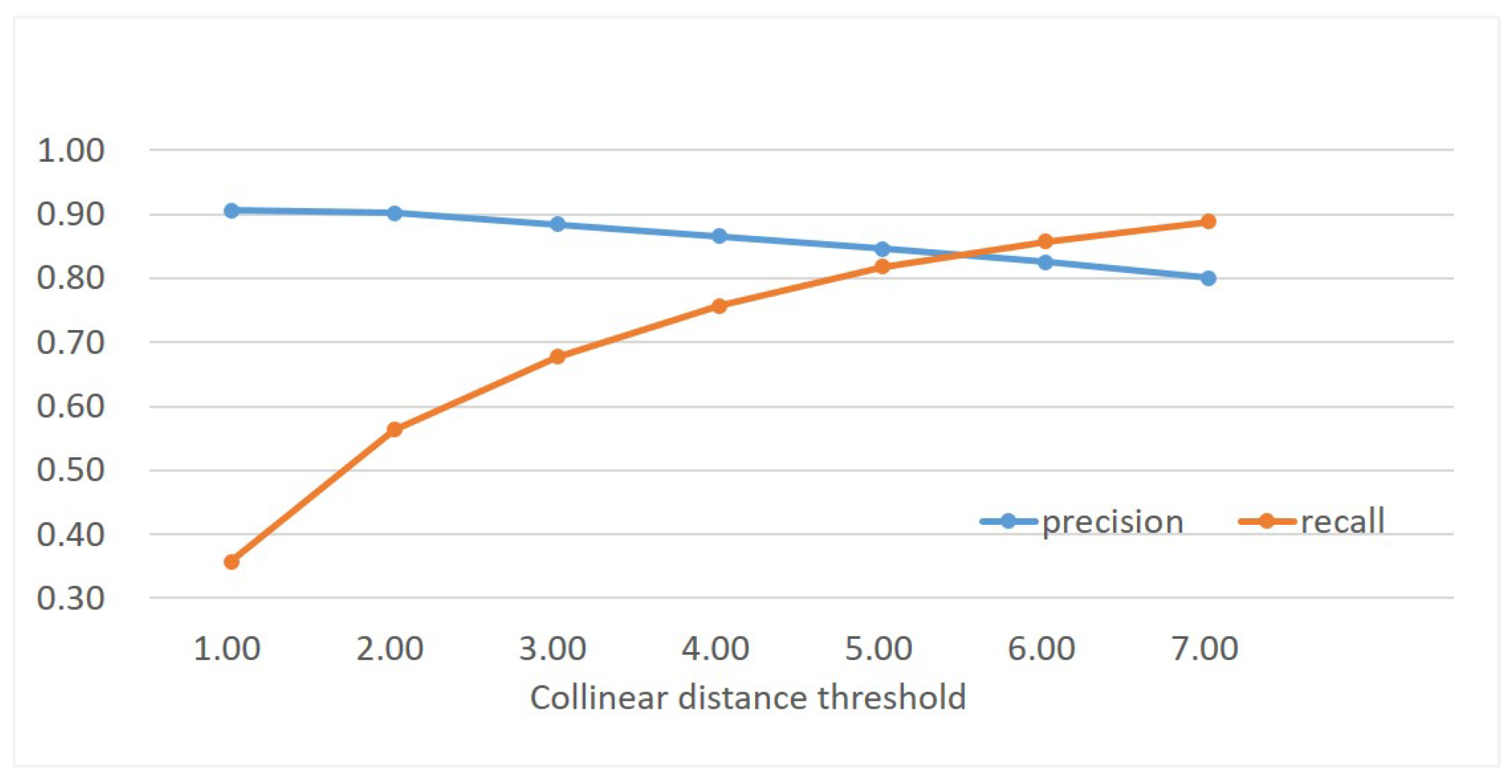

3.2. The Influence of the Collinear Distance Threshold on Outlier Removal

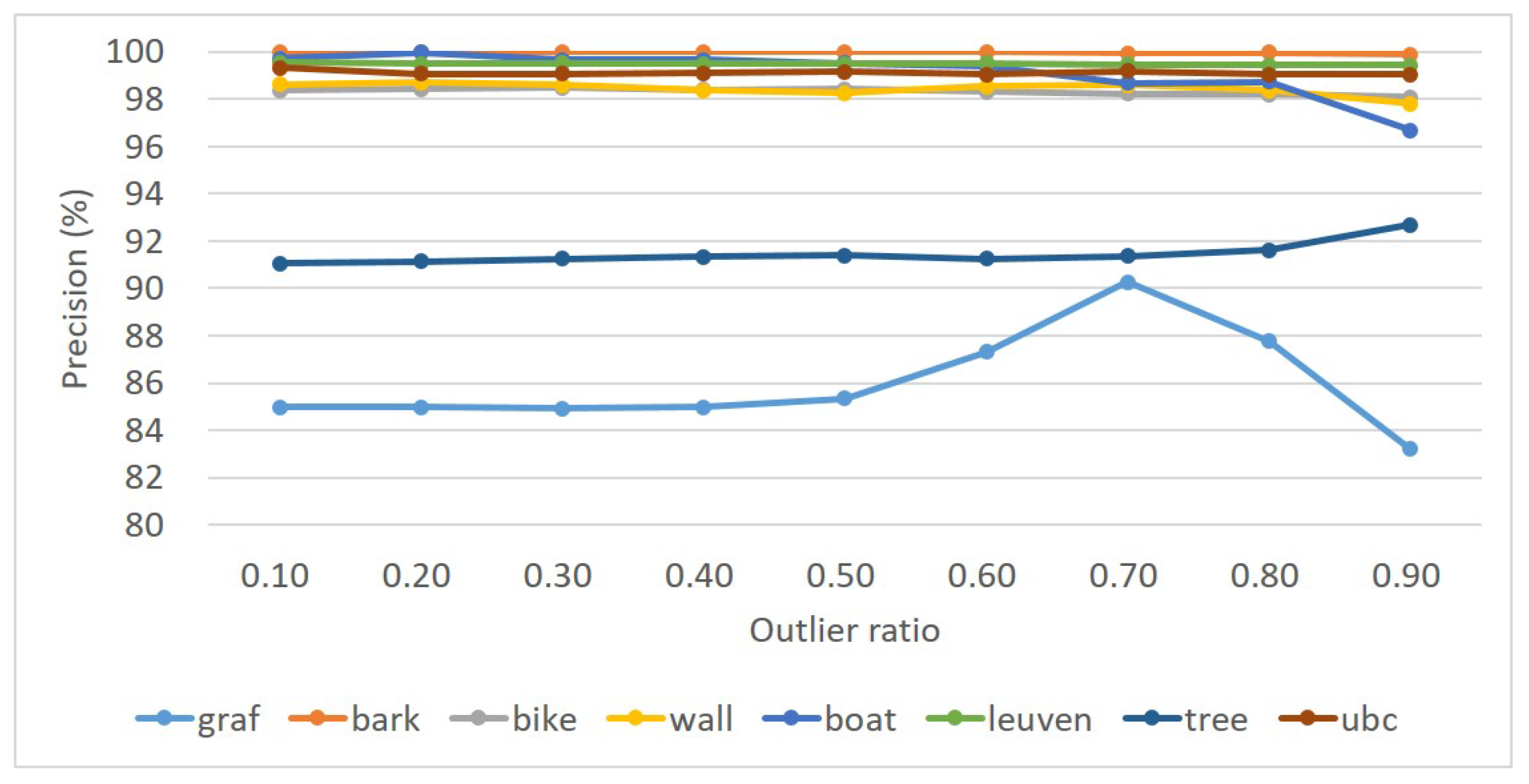

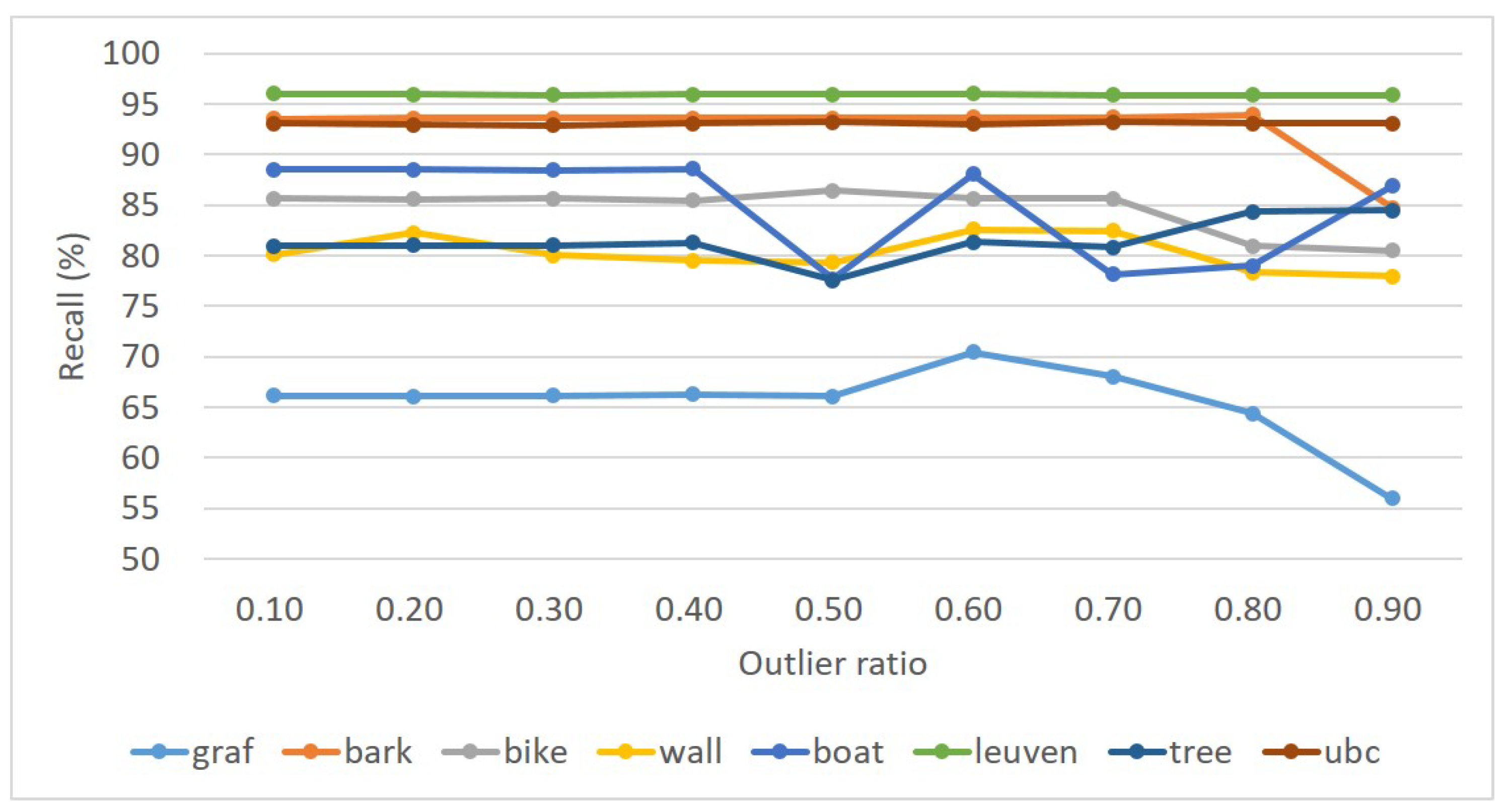

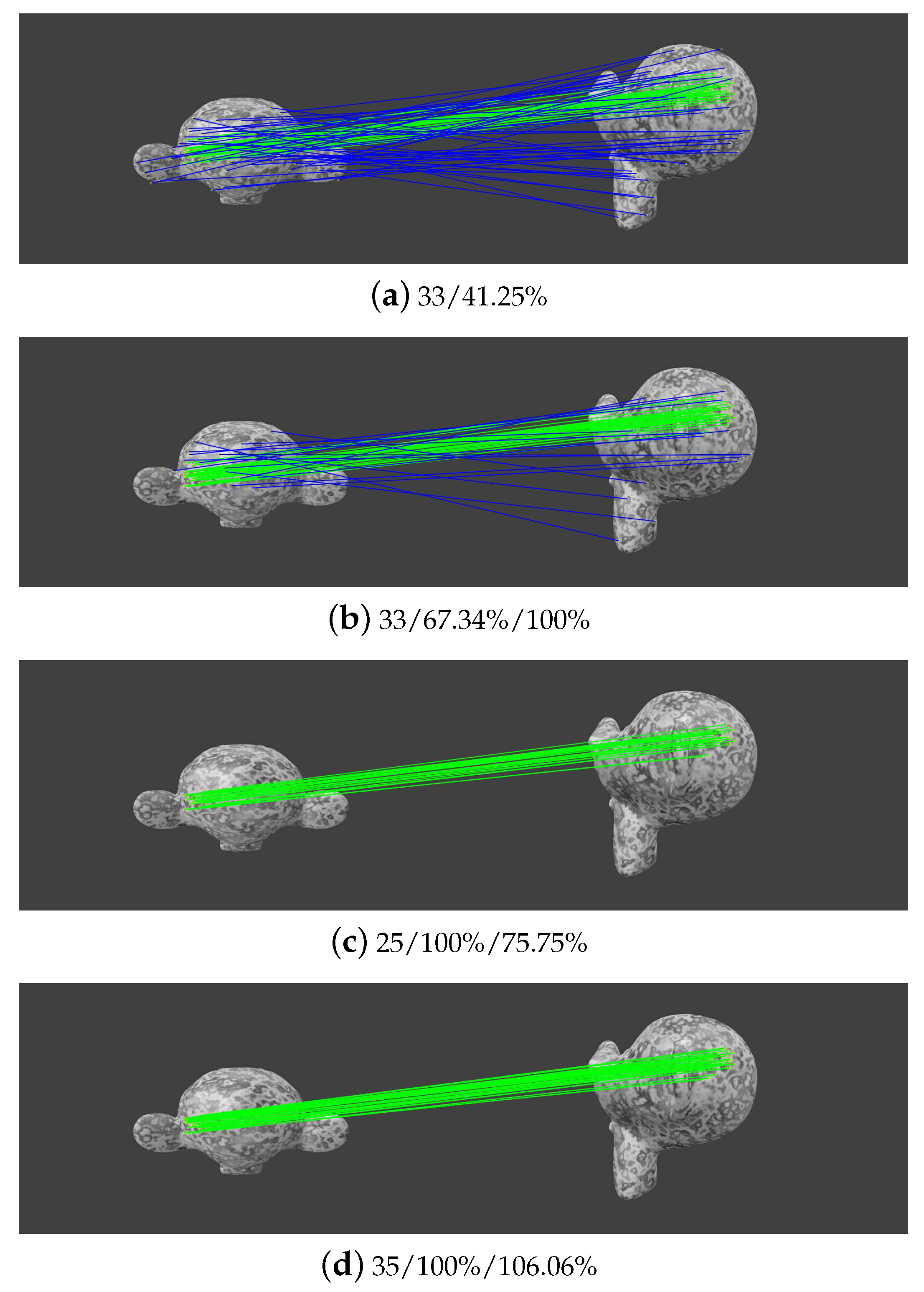

3.3. Analysis of the Robustness of the Algorithm to Outliers

3.4. Outlier Elimination Based on the Proposed Algorithm

3.5. Comparison with State-of-the-Art Methods

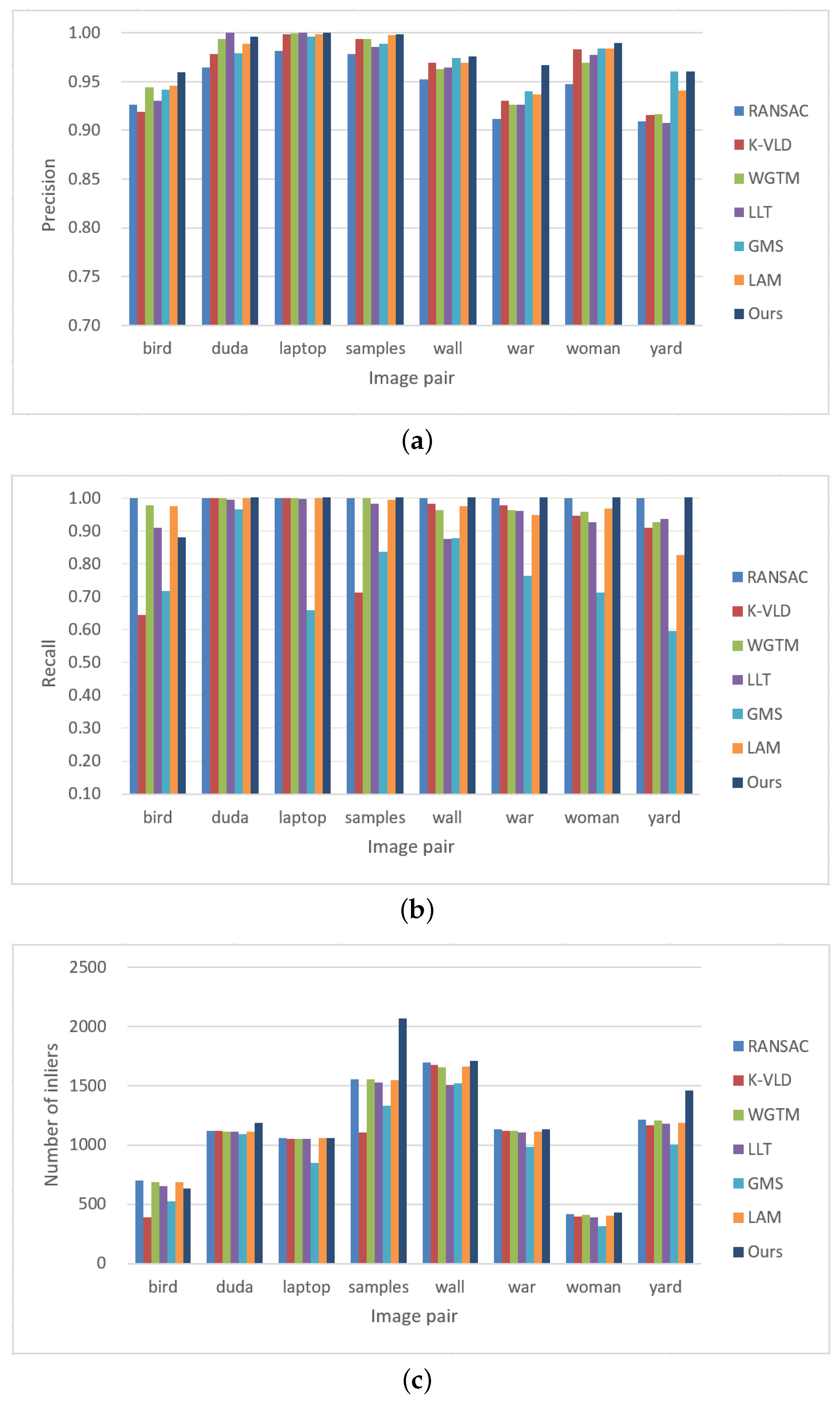

3.5.1. Performance Comparison Using the Rigid Dataset

3.5.2. Performance Comparison Using the Non-Rigid Dataset

3.6. Application of the Proposed Algorithm for SfM-Based UAV Image Orientation

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Habib, A.; Han, Y.; Xiong, W.; He, F.; Zhang, Z.; Crawford, M. Automated Ortho-Rectification of UAV-Based Hyperspectral Data over an Agricultural Field Using Frame RGB Imagery. Remote Sens. 2016, 8, 796.

- Jiang, S.; Jiang, W.; Guo, B. Leveraging vocabulary tree for simultaneous match pair selection and guided feature matching of UAV images. ISPRS J. Photogramm. Remote Sens. 2022, 187, 273–293.

- Ye, Y.; Shan, J. A local descriptor based registration method for multispectral remote sensing images with non-linear intensity differences. ISPRS J. Photogramm. Remote Sens. 2014, 90, 83–95.

- Sattler, T.; Leibe, B.; Kobbelt, L. Efficient & effective prioritized matching for large-scale image-based localization. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1744–1756.

- Jian, M.; Wang, J.; Yu, H.; Wang, G.; Meng, X.; Yang, L.; Dong, J.; Yin, Y. Visual saliency detection by integrating spatial position prior of object with background cues. Expert Syst. Appl. 2021, 168, 114219.

- Jiang, S.; Jiang, C.; Jiang, W. Efficient structure from motion for large-scale UAV images: A review and a comparison of SfM tools. ISPRS J. Photogramm. Remote Sens. 2020, 167, 230–251.

- Fan, B.; Kong, Q.; Wang, X.; Wang, Z.; Xiang, S.; Pan, C.; Fua, P. A performance evaluation of local features for image-based 3D reconstruction. IEEE Trans. Image Process. 2019, 28, 4774–4789.

- Jiang, S.; Jiang, W.; Guo, B.; Li, L.; Wang, L. Learned Local Features for Structure From Motion of UAV Images: A Comparative Evaluation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 10583–10597.

- Harris, C.; Stephens, M. A combined corner and edge detector. In Proceedings of the Alvey Vision Conference, Manchester, UK, 31 August–2 September 1988; Volume 15, pp. 147–151.

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Dong, J.; Soatto, S. Domain-size pooling in local descriptors: DSP-SIFT. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 5097–5106.

- Morel, J.M.; Yu, G. ASIFT: A new framework for fully affine invariant image comparison. SIAM J. Imaging Sci. 2009, 2, 438–469.

- Sun, Y.; Zhao, L.; Huang, S.; Yan, L.; Dissanayake, G. L2-SIFT: SIFT feature extraction and matching for large images in large-scale aerial photogrammetry. ISPRS J. Photogramm. Remote Sens. 2014, 91, 1–16.

- Han, X.; Leung, T.; Jia, Y.; Sukthankar, R.; Berg, A.C. Matchnet: Unifying feature and metric learning for patch-based matching. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3279–3286.

- Simo-Serra, E.; Trulls, E.; Ferraz, L.; Kokkinos, I.; Fua, P.; Moreno-Noguer, F. Discriminative learning of deep convolutional feature point descriptors. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 118–126.

- Mishchuk, A.; Mishkin, D.; Radenovic, F.; Matas, J. Working hard to know your neighbor’s margins: Local descriptor learning loss. In Proceedings of the 31st International Conference on Neural Information Processing Systems NIPS’17, Long Beach, CA, USA, 4–9 December 2017; pp. 4829–4840.

- Tian, Y.; Fan, B.; Wu, F. L2-net: Deep learning of discriminative patch descriptor in euclidean space. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 661–669.

- Luo, Z.; Shen, T.; Zhou, L.; Zhang, J.; Yao, Y.; Li, S.; Fang, T.; Quan, L. Contextdesc: Local descriptor augmentation with cross-modality context. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 2527–2536.

- DeTone, D.; Malisiewicz, T.; Rabinovich, A. Superpoint: Self-supervised interest point detection and description. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 224–236.

- Dusmanu, M.; Rocco, I.; Pajdla, T.; Pollefeys, M.; Sivic, J.; Torii, A.; Sattler, T. D2-net: A trainable cnn for joint description and detection of local features. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 8092–8101.

- Jiang, S.; Jiang, W.; Wang, L. Unmanned Aerial Vehicle-Based Photogrammetric 3D Mapping: A Survey of Techniques, Applications, and Challenges. IEEE Geosci. Remote Sens. Mag. 2021.

- Fischler, M.A.; Bolles, R.C. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 1981, 24, 381–395.

- Chum, O.; Matas, J. Optimal randomized RANSAC. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 30, 1472–1482.

- Raguram, R.; Chum, O.; Pollefeys, M.; Matas, J.; Frahm, J.M. USAC: A universal framework for random sample consensus. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 2022–2038.

- Lu, L.; Zhang, Y.; Tao, P. Geometrical Consistency Voting Strategy for Outlier Detection in Image Matching. Photogramm. Eng. Remote Sens. 2016, 82, 559–570.

- Jiang, S.; Jiang, W. Hierarchical motion consistency constraint for efficient geometrical verification in UAV stereo image matching. ISPRS J. Photogramm. Remote Sens. 2018, 142, 222–242.

- Jiang, S.; Jiang, W. Reliable image matching via photometric and geometric constraints structured by Delaunay triangulation. ISPRS J. Photogramm. Remote Sens. 2019, 153, 1–20.

- Li, J.; Hu, Q.; Ai, M. 4FP-structure: A robust local region feature descriptor. Photogramm. Eng. Remote Sens. 2017, 83, 813–826.

- Jiang, S.; Jiang, W.; Li, L.; Wang, L.; Huang, W. Reliable and Efficient UAV Image Matching via Geometric Constraints Structured by Delaunay Triangulation. Remote Sens. 2020, 12, 3390.

- Hu, H.; Zhu, Q.; Du, Z.; Zhang, Y.; Ding, Y. Reliable spatial relationship constrained feature point matching of oblique aerial images. Photogramm. Eng. Remote Sens. 2015, 81, 49–58.

- Aguilar, W.; Frauel, Y.; Escolano, F.; Martinez-Perez, M.E.; Espinosa-Romero, A.; Lozano, M.A. A robust graph transformation matching for non-rigid registration. Image Vis. Comput. 2009, 27, 897–910.

- Izadi, M.; Saeedi, P. Robust weighted graph transformation matching for rigid and nonrigid image registration. IEEE Trans. Image Process. 2012, 21, 4369–4382.

- Ma, J.; Zhao, J.; Tian, J.; Yuille, A.L.; Tu, Z. Robust point matching via vector field consensus. IEEE Trans. Image Process. 2014, 23, 1706–1721.

- Bian, J.; Lin, W.Y.; Matsushita, Y.; Yeung, S.K.; Nguyen, T.D.; Cheng, M.M. Gms: Grid-based motion statistics for fast, ultra-robust feature correspondence. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4181–4190.

- Wu, C. A GPU Implementation of Scale Invariant Feature Transform (SIFT). Available online: http://www.cs.unc.edu/~ccwu/siftgpu/ (accessed on 20 May 2022).

- Mikolajczyk, K.; Schmid, C. A performance evaluation of local descriptors. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 1615–1630.

- Balntas, V.; Lenc, K.; Vedaldi, A.; Mikolajczyk, K. HPatches: A benchmark and evaluation of handcrafted and learned local descriptors. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 5173–5182.

- Liu, Z.; Marlet, R. Virtual line descriptor and semi-local matching method for reliable feature correspondence. In Proceedings of the British Machine Vision Conference 2012, Surrey, UK, 3–7 September 2012; p. 16.

- Li, J.; Hu, Q.; Ai, M. LAM: Locality affine-invariant feature matching. ISPRS J. Photogramm. Remote Sens. 2019, 154, 28–40.

- Jiang, S.; Jiang, W. Efficient structure from motion for oblique UAV images based on maximal spanning tree expansion. ISPRS J. Photogramm. Remote Sens. 2017, 132, 140–161.

| Item | RANSAC | K-VLD | WGTM | LLT | GMS | LAM | Ours |
|---|---|---|---|---|---|---|---|
| Precision (%) | 94.62 | 96.08 | 96.31 | 96.13 | 96.93 | 97.02 | (97.27) 98.06 |
| Recall (%) | 99.99 | 89.65 | 97.36 | 94.76 | 78.36 | 96.12 | (81.21) 106.21 |
| No. inliers | 1101 | 1003 | 1099 | 1065 | 952 | 1096 | (951) 1210 |
| Time (s) | 0.043 | 0.833 | 1276.081 | 1.261 | 0.122 | 0.107 | 1.054 |

| Item | RANSAC | K-VLD | WGTM | LLT | GMS | LAM | Ours |
|---|---|---|---|---|---|---|---|
| Precision (%) | 90.83 | 98.77 | 96.83 | 92.00 | 99.23 | 98.57 | (99.19) 99.43 |
| Recall (%) | 70.23 | 93.75 | 95.36 | 91.43 | 53.60 | 77.14 | (74.59) 117.84 |
| No. inliers | 108 | 150 | 151 | 142 | 82 | 122 | (120) 195 |
| Time (s) | 0.146 | 0.842 | 55.711 | 0.705 | 0.250 | 0.031 | 0.062 |

| Dataset | Efficiency (min), RANSAC | Efficiency (min), Ours | Precision (pixel), RANSAC | Precision (pixel), Ours | Completeness, RANSAC | Completeness, Ours |
|---|---|---|---|---|---|---|
| 1 | 12.9 | 14.5 | 0.632 | 0.646 | 209,514 (320) | 236,800 (320) |
| 2 | 37.5 | 40.7 | 0.725 | 0.731 | 370,055 (750) | 379,989 (750) |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Luo, H.; Liu, K.; Jiang, S.; Li, Q.; Wang, L.; Jiang, W. CAISOV: Collinear Affine Invariance and Scale-Orientation Voting for Reliable Feature Matching. Remote Sens. 2022, 14, 3175. https://doi.org/10.3390/rs14133175


Chicago/Turabian Style: Luo, Haihan, Kai Liu, San Jiang, Qingquan Li, Lizhe Wang, and Wanshou Jiang. 2022. "CAISOV: Collinear Affine Invariance and Scale-Orientation Voting for Reliable Feature Matching" Remote Sensing 14, no. 13: 3175. https://doi.org/10.3390/rs14133175
