Robust Correlation Tracking for UAV with Feature Integration and Response Map Enhancement

Abstract

1. Introduction

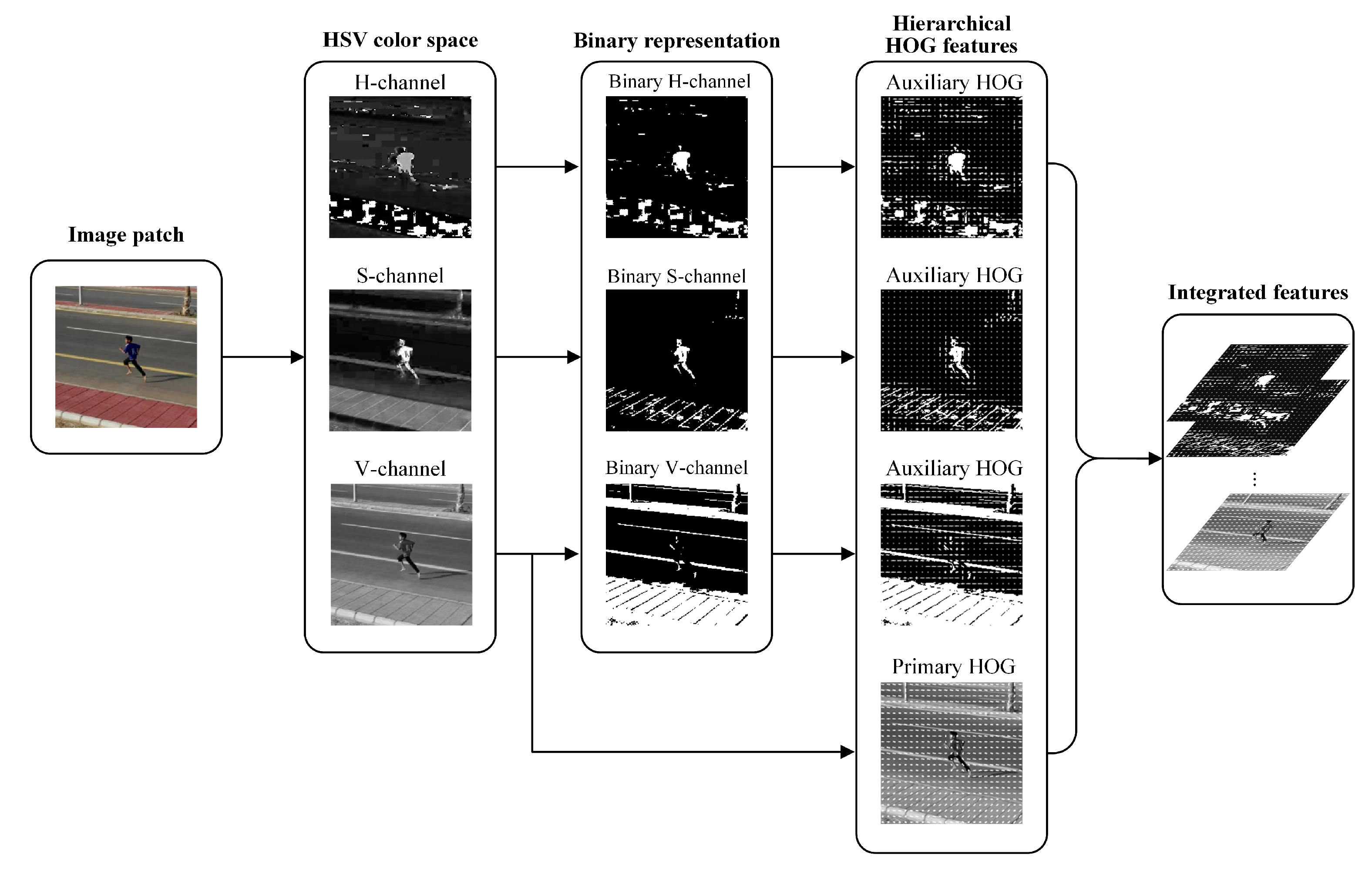

- A new feature integration method combines distinct gradient features extracted in the HSV color space with their binary representation at a hierarchical level, building a discriminative appearance model of the tracked aerial object. This feature-level fusion exploits gradient and color information jointly, and the binary representation improves robustness to interference.

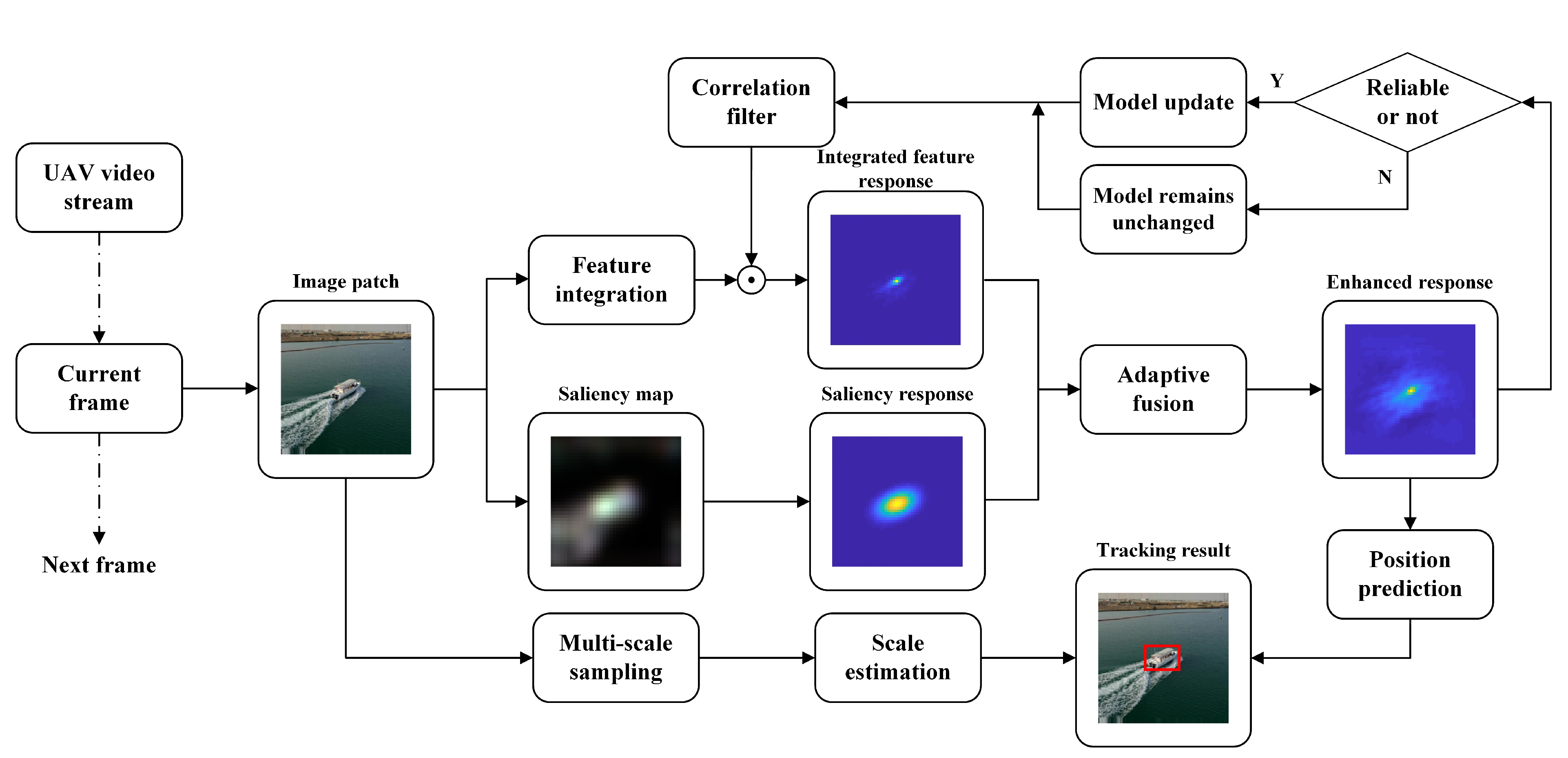

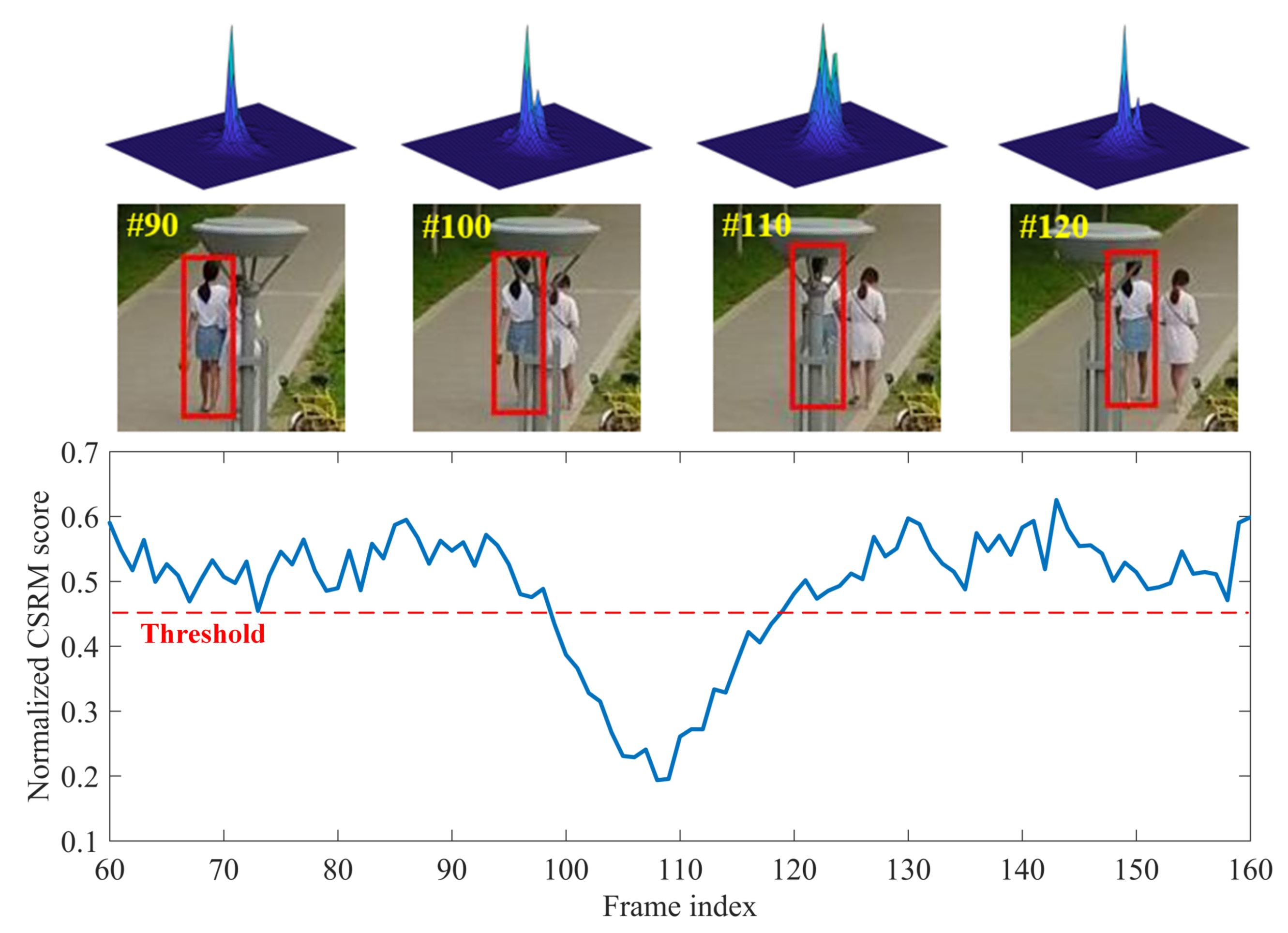

- Saliency awareness is introduced into the correlation filter framework so that the tracker focuses on the more salient object regions, which enhances model discrimination and mitigates model drift. Furthermore, a consistent criterion that reveals the quality of the response map underlies both an adaptive response fusion strategy, which yields a more reliable decision-level fusion result, and a dynamic model update mechanism, which avoids model corruption.

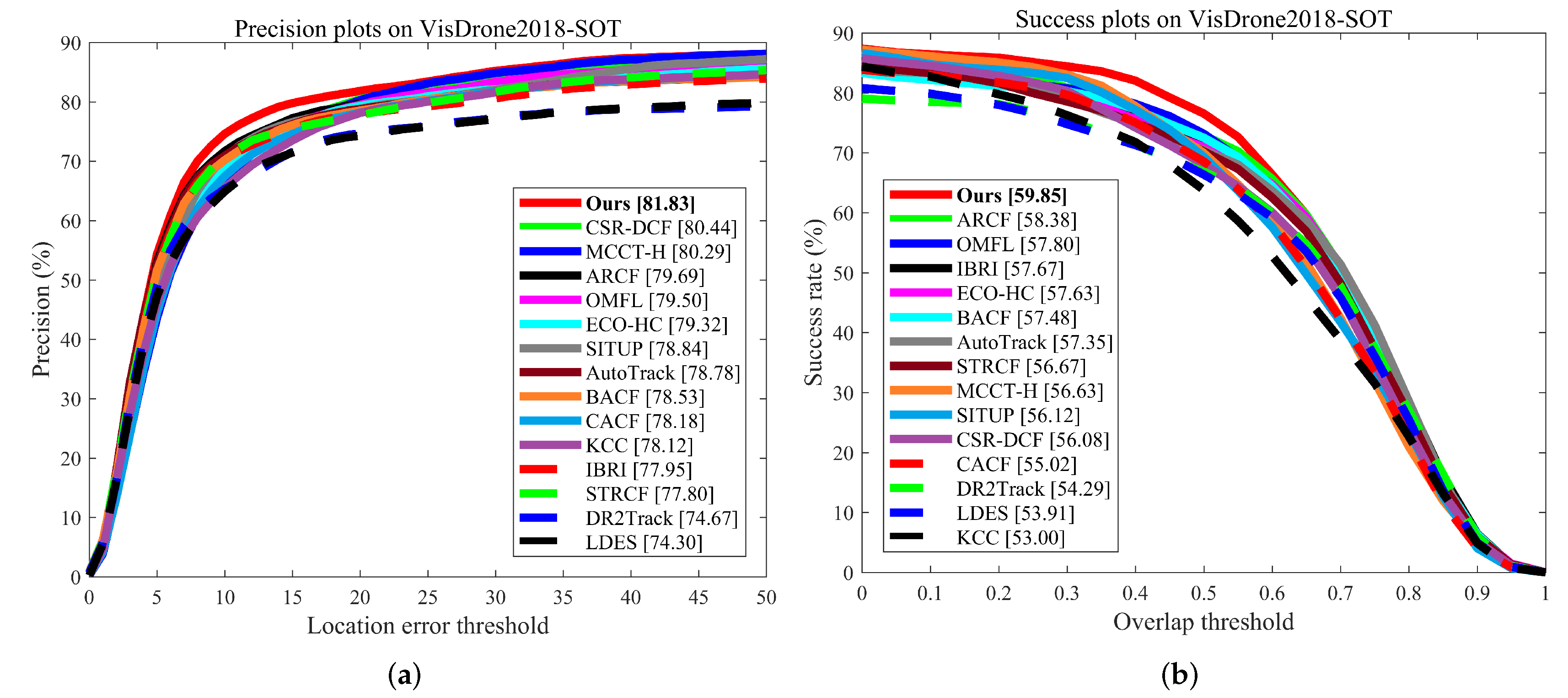

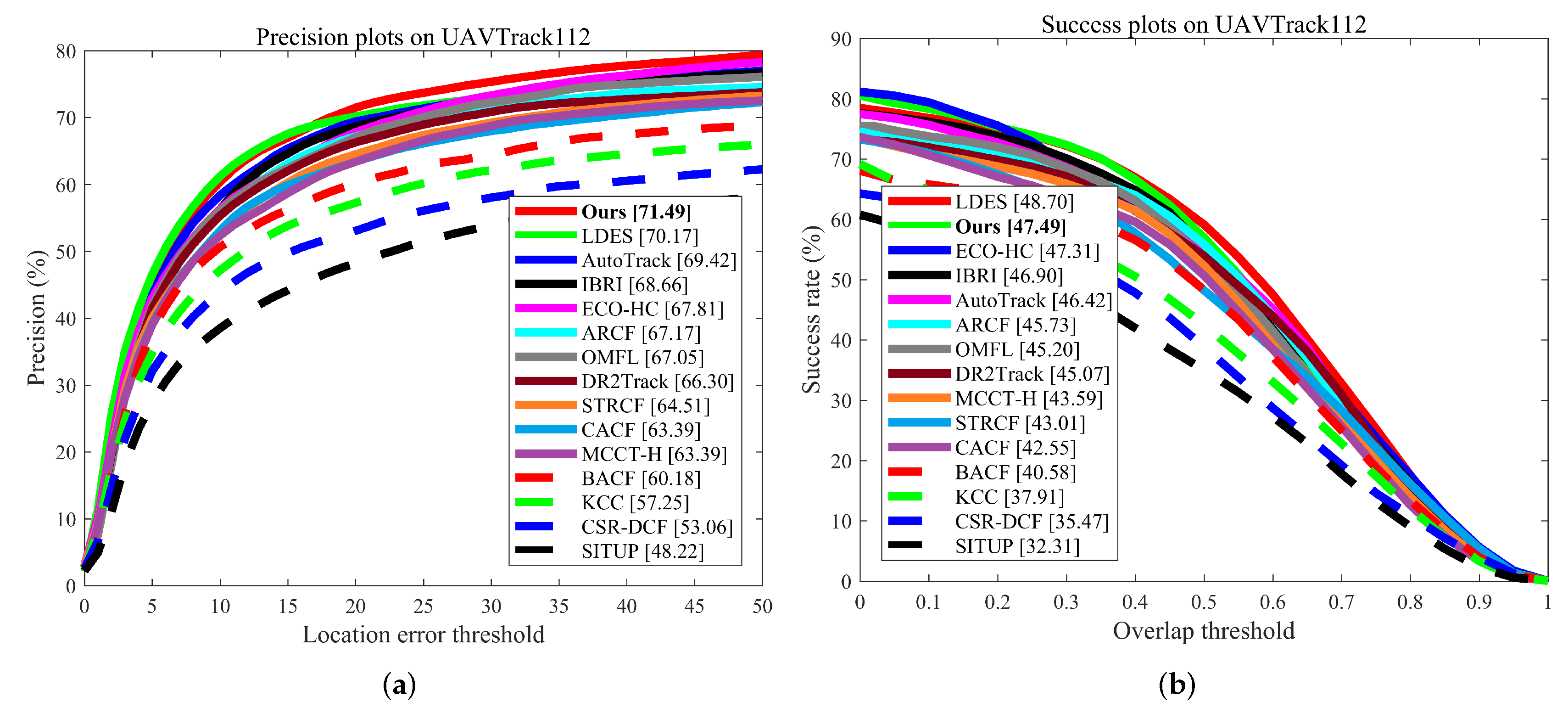

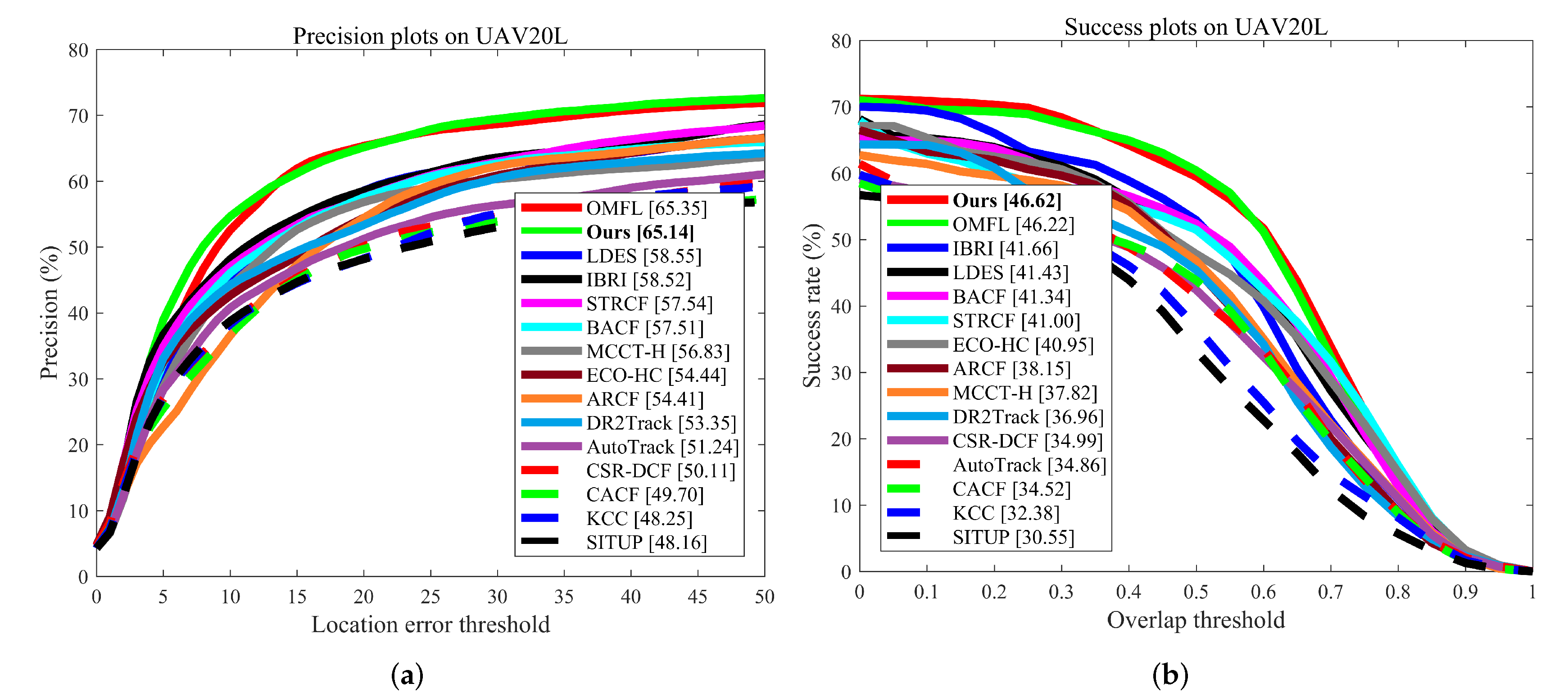

- Extensive evaluations are performed on four popular UAV tracking benchmarks: UAV123@10fps [45], VisDrone2018-SOT [46], UAVTrack112 [25], and UAV20L [45]. The experimental results show that our approach is competitive with state-of-the-art trackers while running in real time at 26.7 frames per second (FPS) on a single CPU.

2. Proposed Approach

2.1. Feature Integration Method
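
As a rough illustration of feature-level fusion of HSV gradients with a binary representation, the sketch below converts an RGB patch to HSV, takes a gradient magnitude per channel, and stacks each gradient map with a thresholded copy. It is an assumed simplification and not the tracker's actual feature extractor: the central-difference gradient, the mean threshold, the treatment of hue as a linear quantity, and all function names (`hsv_channels`, `gradient_magnitude`, `binarize`, `integrate_features`) are illustrative choices.

```python
import colorsys


def hsv_channels(rgb_image):
    """Split an RGB image (rows of (r, g, b) floats in [0, 1]) into H, S, V planes."""
    h_plane, s_plane, v_plane = [], [], []
    for row in rgb_image:
        hsv_row = [colorsys.rgb_to_hsv(r, g, b) for (r, g, b) in row]
        h_plane.append([p[0] for p in hsv_row])
        s_plane.append([p[1] for p in hsv_row])
        v_plane.append([p[2] for p in hsv_row])
    return h_plane, s_plane, v_plane


def gradient_magnitude(plane):
    """Central-difference gradient magnitude with replicated borders.

    Assumption: hue is treated as a linear value, ignoring its circular wrap.
    """
    rows, cols = len(plane), len(plane[0])
    mag = [[0.0] * cols for _ in range(rows)]
    for y in range(rows):
        for x in range(cols):
            gx = plane[y][min(x + 1, cols - 1)] - plane[y][max(x - 1, 0)]
            gy = plane[min(y + 1, rows - 1)][x] - plane[max(y - 1, 0)][x]
            mag[y][x] = (gx * gx + gy * gy) ** 0.5
    return mag


def binarize(plane):
    """Binary map: 1 where a value exceeds the plane mean (assumed threshold), else 0."""
    flat = [v for row in plane for v in row]
    mean = sum(flat) / len(flat)
    return [[1 if v > mean else 0 for v in row] for row in plane]


def integrate_features(rgb_image):
    """Stack, for each HSV channel, its gradient map followed by its binary map."""
    features = []
    for plane in hsv_channels(rgb_image):
        grad = gradient_magnitude(plane)
        features.append(grad)
        features.append(binarize(grad))
    return features  # six channels: [H grad, H bin, S grad, S bin, V grad, V bin]
```

On a patch whose left half is black and whose right half is white, only the value channel produces a nonzero gradient, and its binary map marks the two columns adjacent to the edge.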

2.2. Filter Learning and Detection

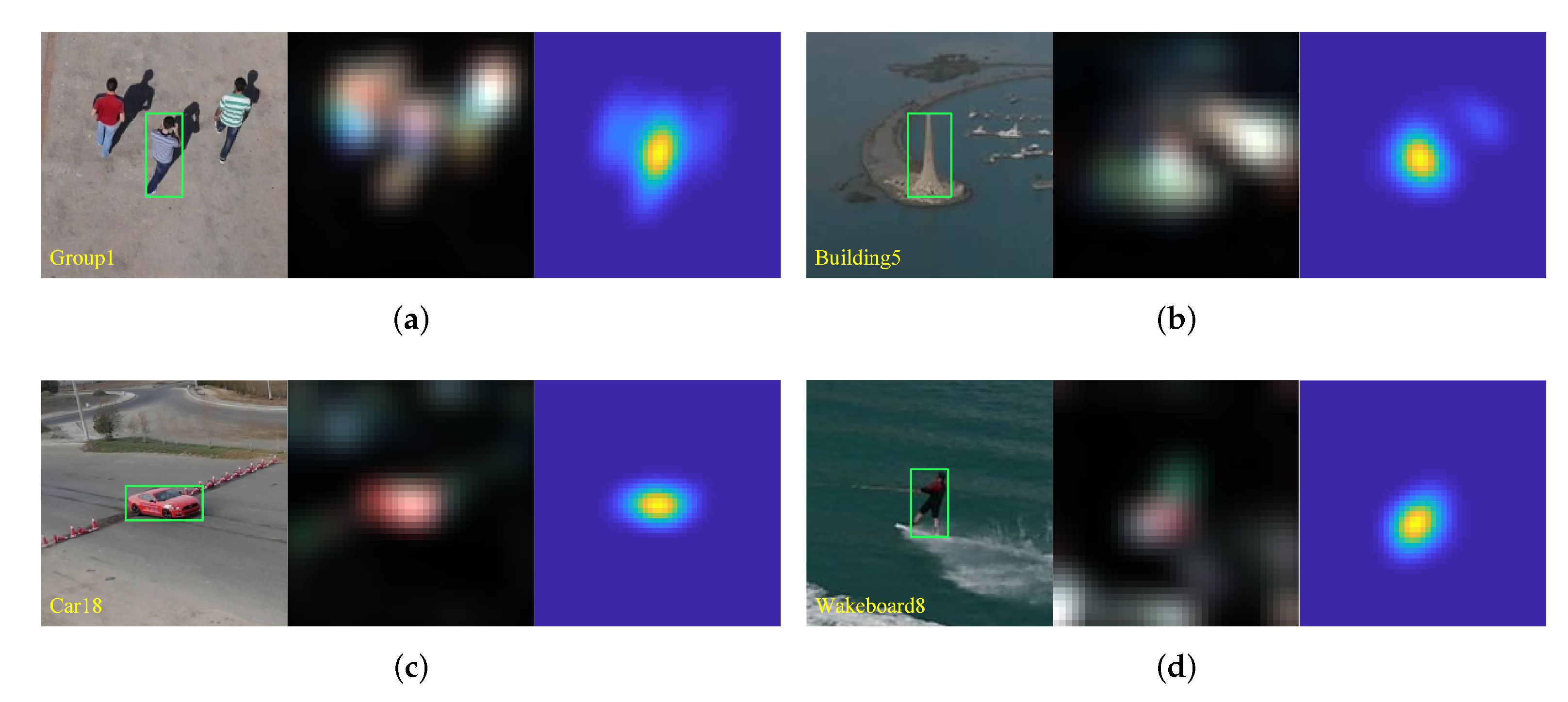

2.3. Tracking Incorporating Saliency

2.4. Model Update and Scale Estimation
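
Quality-driven response fusion and model updating can be pictured with a small sketch. It assumes average peak-to-correlation energy (APCE, the measure named in the title of [62]) as the response-map quality score; the tracker's own criterion may differ. The weighting rule, the update gate, and the parameters `base_rate` and `ratio` are hypothetical values chosen for illustration.

```python
def apce(response):
    """Average peak-to-correlation energy of a 2-D response map (list of rows)."""
    flat = [v for row in response for v in row]
    r_max, r_min = max(flat), min(flat)
    energy = sum((v - r_min) ** 2 for v in flat) / len(flat)
    if energy == 0.0:
        return 0.0  # a flat map carries no localization information
    return (r_max - r_min) ** 2 / energy


def fuse_responses(resp_a, resp_b):
    """Weight two equally sized response maps by their share of the total quality."""
    q_a, q_b = apce(resp_a), apce(resp_b)
    total = q_a + q_b
    w_a = 0.5 if total == 0.0 else q_a / total
    w_b = 1.0 - w_a
    fused = [[w_a * a + w_b * b for a, b in zip(row_a, row_b)]
             for row_a, row_b in zip(resp_a, resp_b)]
    return fused, (w_a, w_b)


def update_model(model, new_model, quality, history, base_rate=0.02, ratio=0.5):
    """Linear-interpolation update, skipped when quality is unreliable.

    Assumption: a frame is reliable if its quality is at least `ratio` times
    the mean quality of previously accepted frames stored in `history`.
    """
    reliable = not history or quality >= ratio * (sum(history) / len(history))
    if reliable:
        model = [(1.0 - base_rate) * m + base_rate * n
                 for m, n in zip(model, new_model)]
        history.append(quality)
    return model, reliable
```

A sharply peaked map receives a larger fusion weight than a multi-peaked one, and a frame whose quality falls well below the running average leaves the model untouched, which is one simple way to keep occluded or distracted frames out of the template.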

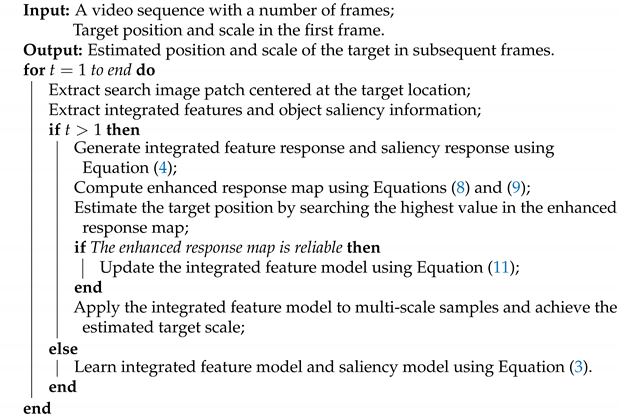

Algorithm 1: The proposed tracker.

3. Experiments

3.1. Implementation Details

3.2. Quantitative Evaluation

3.2.1. Overall Performance Evaluation

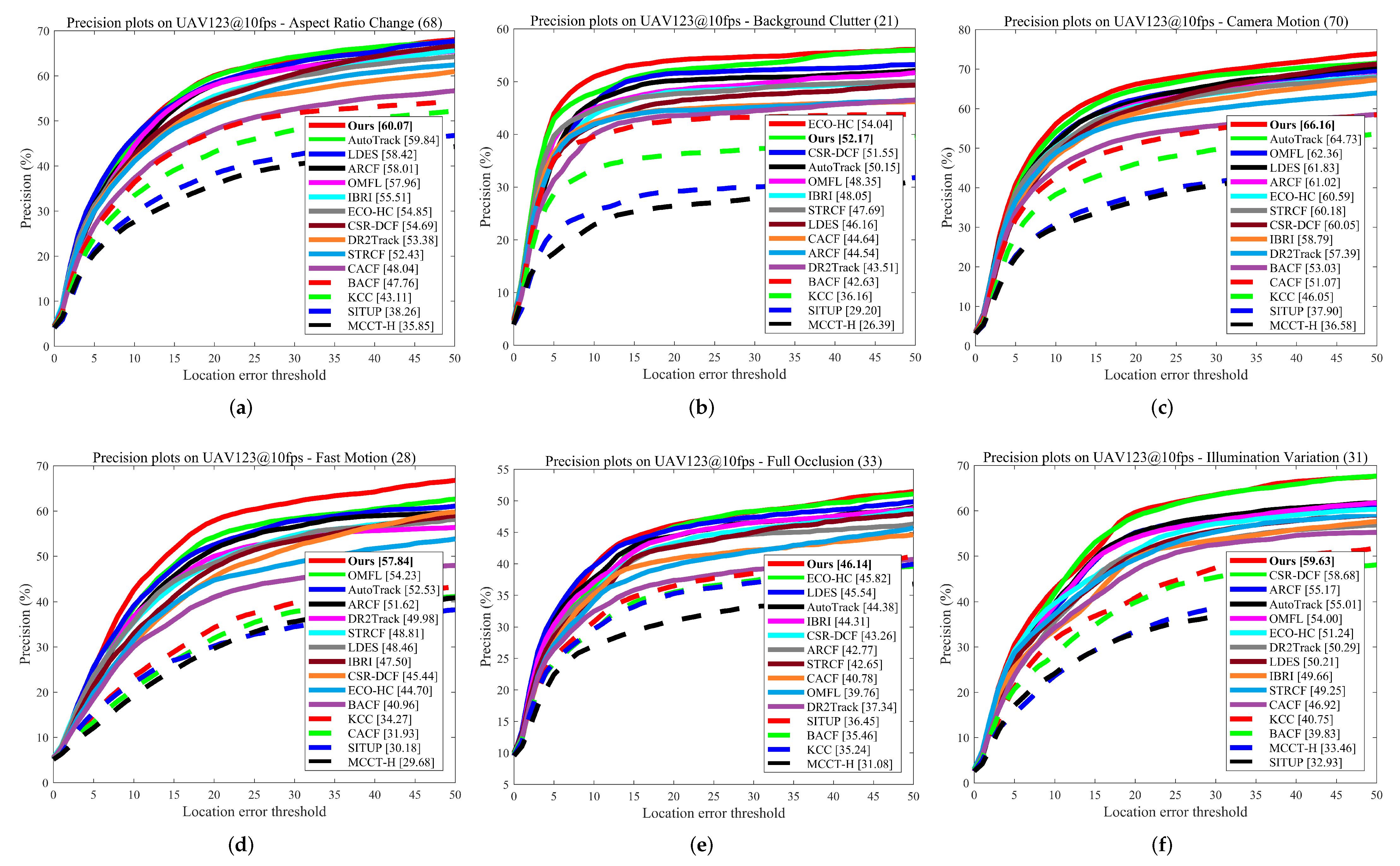

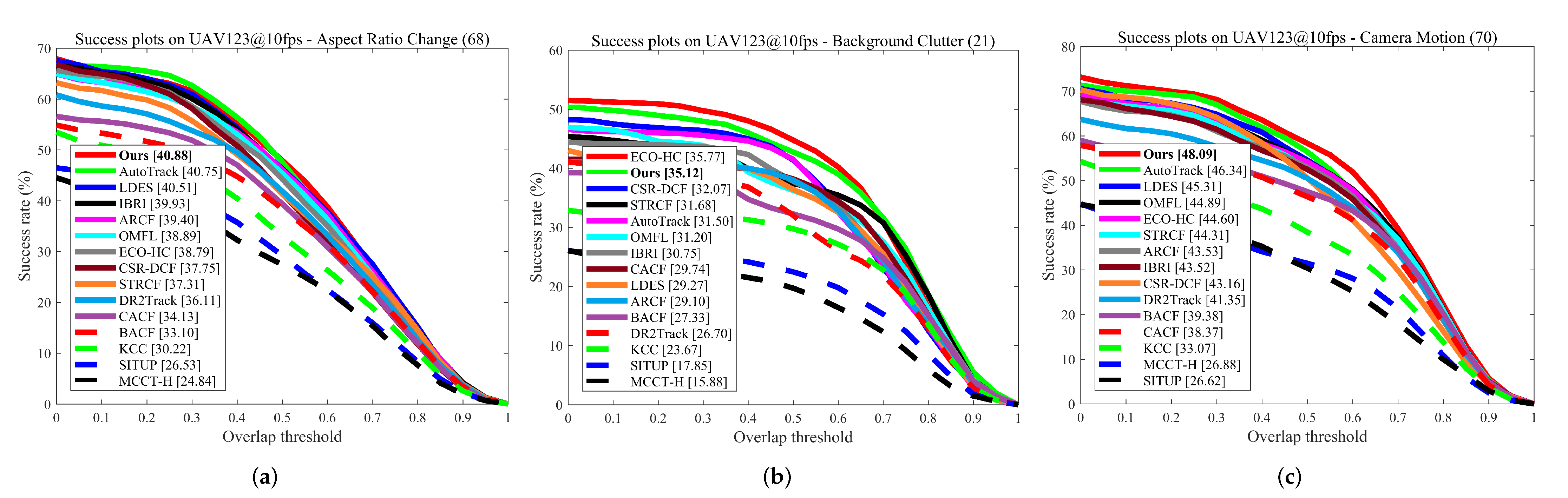

3.2.2. Attribute-Based Evaluation

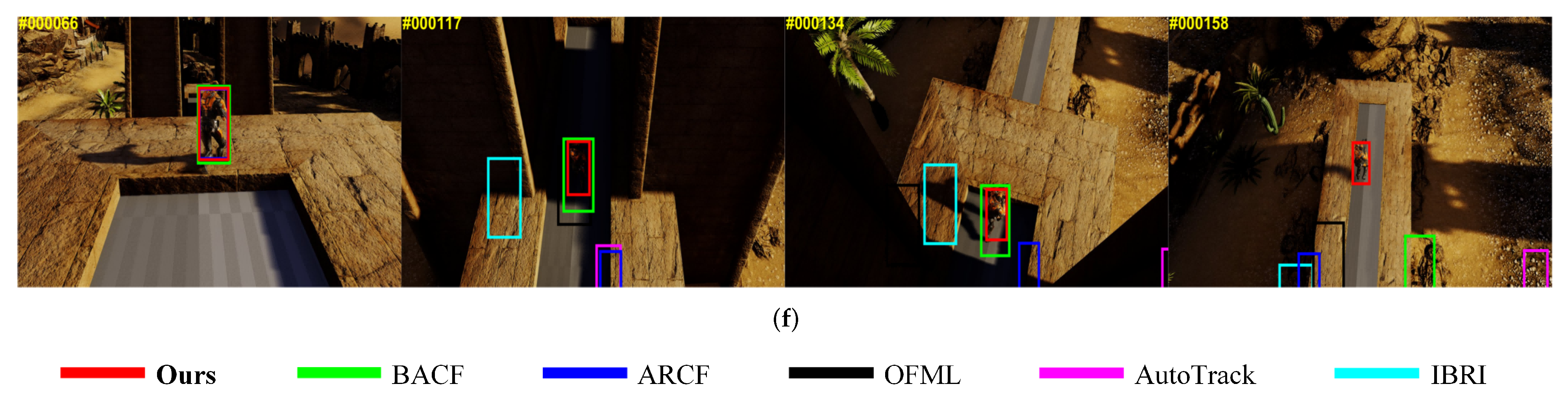

3.3. Qualitative Evaluation

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Kumar, A.; Walia, G.S.; Sharma, K. Recent Trends in Multicue Based Visual Tracking: A Review. Expert Syst. Appl. 2020, 162, 113711.

- Huang, Z.; Fu, C.; Li, Y.; Lin, F.; Lu, P. Learning Aberrance Repressed Correlation Filters for Real-Time UAV Tracking. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019; pp. 2891–2900.

- Li, Y.; Fu, C.; Ding, F.; Huang, Z.; Lu, G. AutoTrack: Towards High-Performance Visual Tracking for UAV With Automatic Spatio-Temporal Regularization. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 11920–11929.

- Fu, C.; Ding, F.; Li, Y.; Jin, J.; Feng, C. DR2Track: Towards Real-Time Visual Tracking for UAV via Distractor Repressed Dynamic Regression. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October 2020–24 January 2021; pp. 1597–1604.

- Specht, M.; Stateczny, A.; Specht, C.; Widźgowski, S.; Lewicka, O.; Wiśniewska, M. Concept of an Innovative Autonomous Unmanned System for Bathymetric Monitoring of Shallow Waterbodies (INNOBAT System). Energies 2021, 14, 5370.

- Wang, D.; Xing, S.; He, Y.; Yu, J.; Xu, Q.; Li, P. Evaluation of a New Lightweight UAV-Borne Topo-Bathymetric LiDAR for Shallow Water Bathymetry and Object Detection. Sensors 2022, 22, 1379.

- Burdziakowski, P. Increasing the Geometrical and Interpretation Quality of Unmanned Aerial Vehicle Photogrammetry Products using Super-Resolution Algorithms. Remote Sens. 2020, 12, 810.

- Yuan, C.; Liu, Z.; Zhang, Y. Aerial Images-Based Forest Fire Detection for Firefighting Using Optical Remote Sensing Techniques and Unmanned Aerial Vehicles. J. Intell. Robot. Syst. 2017, 88, 635–654.

- Nikolakopoulos, K.G.; Lampropoulou, P.; Fakiris, E.; Sardelianos, D.; Papatheodorou, G. Synergistic Use of UAV and USV Data and Petrographic Analyses for the Investigation of Beachrock Formations: A Case Study from Syros Island, Aegean Sea, Greece. Minerals 2018, 8, 534.

- Fu, C.; Xu, J.; Lin, F.; Guo, F.; Liu, T.; Zhang, Z. Object Saliency-Aware Dual Regularized Correlation Filter for Real-Time Aerial Tracking. IEEE Trans. Geosci. Remote Sens. 2020, 58, 8940–8951.

- Fu, C.; Li, B.; Ding, F.; Lin, F.; Lu, G. Correlation Filters for Unmanned Aerial Vehicle-Based Aerial Tracking: A Review and Experimental Evaluation. IEEE Geosci. Remote Sens. Mag. 2022, 10, 125–160.

- Jia, X.; Lu, H.; Yang, M.H. Visual Tracking via Adaptive Structural Local Sparse Appearance Model. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 1822–1829.

- Sevilla-Lara, L.; Learned-Miller, E. Distribution Fields for Tracking. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 1910–1917.

- He, S.; Yang, Q.; Lau, R.W.; Wang, J.; Yang, M.H. Visual Tracking via Locality Sensitive Histograms. In Proceedings of the 2013 IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 2427–2434.

- Babenko, B.; Yang, M.H.; Belongie, S. Robust Object Tracking with Online Multiple Instance Learning. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 1619–1632.

- Kalal, Z.; Mikolajczyk, K.; Matas, J. Tracking-Learning-Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 1409–1422.

- Zhang, J.; Ma, S.; Sclaroff, S. MEEM: Robust Tracking via Multiple Experts Using Entropy Minimization. In ECCV 2014: Proceedings of the Computer Vision, Zurich, Switzerland, 6–12 September 2014; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Springer International Publishing: Cham, Switzerland, 2014; pp. 188–203.

- Hare, S.; Golodetz, S.; Saffari, A.; Vineet, V.; Cheng, M.M.; Hicks, S.L.; Torr, P.H.S. Struck: Structured Output Tracking with Kernels. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 2096–2109.

- Ma, C.; Huang, J.B.; Yang, X.; Yang, M.H. Hierarchical Convolutional Features for Visual Tracking. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 3074–3082.

- Li, Y.; Fu, C.; Huang, Z.; Zhang, Y.; Pan, J. Intermittent Contextual Learning for Keyfilter-Aware UAV Object Tracking Using Deep Convolutional Feature. IEEE Trans. Multimed. 2021, 23, 810–822.

- Bertinetto, L.; Valmadre, J.; Henriques, J.F.; Vedaldi, A.; Torr, P.H.S. Fully-Convolutional Siamese Networks for Object Tracking. In ECCV 2016 Workshops: Proceedings of the Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; Hua, G., Jégou, H., Eds.; Springer International Publishing: Cham, Switzerland, 2016; pp. 850–865.

- Li, B.; Yan, J.; Wu, W.; Zhu, Z.; Hu, X. High Performance Visual Tracking with Siamese Region Proposal Network. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8971–8980.

- Wang, Q.; Zhang, L.; Bertinetto, L.; Hu, W.; Torr, P.H. Fast Online Object Tracking and Segmentation: A Unifying Approach. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 1328–1338.

- Chen, X.; Yan, B.; Zhu, J.; Wang, D.; Yang, X.; Lu, H. Transformer Tracking. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 8122–8131.

- Fu, C.; Cao, Z.; Li, Y.; Ye, J.; Feng, C. Onboard Real-Time Aerial Tracking with Efficient Siamese Anchor Proposal Network. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–13.

- Bolme, D.S.; Beveridge, J.R.; Draper, B.A.; Lui, Y.M. Visual Object Tracking Using Adaptive Correlation Filters. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 2544–2550.

- Henriques, J.F.; Caseiro, R.; Martins, P.; Batista, J. High-Speed Tracking with Kernelized Correlation Filters. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 583–596.

- Li, Y.; Zhu, J. A Scale Adaptive Kernel Correlation Filter Tracker with Feature Integration. In ECCV 2014 Workshops: Proceedings of the Computer Vision, Zurich, Switzerland, 6–12 September 2014; Agapito, L., Bronstein, M.M., Rother, C., Eds.; Springer International Publishing: Cham, Switzerland, 2015; pp. 254–265.

- Danelljan, M.; Khan, F.S.; Felsberg, M.; Van De Weijer, J. Adaptive Color Attributes for Real-Time Visual Tracking. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1090–1097.

- Fu, C.; Lin, F.; Li, Y.; Chen, G. Correlation Filter-Based Visual Tracking for UAV with Online Multi-Feature Learning. Remote Sens. 2019, 11, 549.

- Fu, C.; Ye, J.; Xu, J.; He, Y.; Lin, F. Disruptor-Aware Interval-Based Response Inconsistency for Correlation Filters in Real-Time Aerial Tracking. IEEE Trans. Geosci. Remote Sens. 2021, 59, 6301–6313.

- Zhang, F.; Ma, S.; Yu, L.; Zhang, Y.; Qiu, Z.; Li, Z. Learning Future-Aware Correlation Filters for Efficient UAV Tracking. Remote Sens. 2021, 13, 4111.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is All You Need. In Proceedings of the 31st International Conference on Neural Information Processing Systems—NIPS’17, Long Beach, CA, USA, 4–9 December 2017; Curran Associates Inc.: Red Hook, NY, USA, 2017; pp. 6000–6010.

- Dalal, N.; Triggs, B. Histograms of Oriented Gradients for Human Detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), San Diego, CA, USA, 20–25 June 2005; Volume 1, pp. 886–893.

- Zhu, G.; Wang, J.; Wu, Y.; Zhang, X.; Lu, H. MC-HOG Correlation Tracking with Saliency Proposal. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2016.

- Wang, N.; Zhou, W.; Tian, Q.; Hong, R.; Wang, M.; Li, H. Multi-cue Correlation Filters for Robust Visual Tracking. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4844–4853.

- Wang, C.; Zhang, L.; Xie, L.; Yuan, J. Kernel Cross-Correlator. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; pp. 4179–4186.

- Zhang, T.; Xu, C.; Yang, M.H. Learning Multi-Task Correlation Particle Filters for Visual Tracking. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 41, 365–378.

- Li, Y.; Zhu, J.; Hoi, S.C.; Song, W.; Wang, Z.; Liu, H. Robust Estimation of Similarity Transformation for Visual Object Tracking. In AAAI’19/IAAI’19/EAAI’19: Proceedings of the Thirty-Third AAAI Conference on Artificial Intelligence and Thirty-First Innovative Applications of Artificial Intelligence Conference and Ninth AAAI Symposium on Educational Advances in Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; AAAI Press: Menlo Park, CA, USA, 2019.

- Galoogahi, H.K.; Sim, T.; Lucey, S. Correlation filters with limited boundaries. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 4630–4638.

- Danelljan, M.; Häger, G.; Khan, F.S.; Felsberg, M. Learning Spatially Regularized Correlation Filters for Visual Tracking. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 4310–4318.

- Galoogahi, H.K.; Fagg, A.; Lucey, S. Learning Background-Aware Correlation Filters for Visual Tracking. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 1144–1152.

- Lukežic, A.; Vojír, T.; Zajc, L.C.; Matas, J.; Kristan, M. Discriminative Correlation Filter with Channel and Spatial Reliability. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 4847–4856.

- Mueller, M.; Smith, N.; Ghanem, B. A Benchmark and Simulator for UAV Tracking. In ECCV 2016: Proceedings of the Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Springer International Publishing: Cham, Switzerland, 2016; pp. 445–461.

- Wen, L.; Zhu, P.; Du, D.; Bian, X.; Ling, H.; Hu, Q.; Liu, C.; Cheng, H.; Liu, X.; Ma, W.; et al. VisDrone-SOT2018: The Vision Meets Drone Single-Object Tracking Challenge Results. In ECCV 2018 Workshops: Proceedings of the Computer Vision, Munich, Germany, 8–14 September 2018; Leal-Taixé, L., Roth, S., Eds.; Springer International Publishing: Cham, Switzerland, 2019; pp. 469–495.

- Wang, N.; Shi, J.; Yeung, D.Y.; Jia, J. Understanding and Diagnosing Visual Tracking Systems. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 3101–3109.

- Danelljan, M.; Bhat, G.; Khan, F.S.; Felsberg, M. ECO: Efficient Convolution Operators for Tracking. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6931–6939.

- Bertinetto, L.; Valmadre, J.; Golodetz, S.; Miksik, O.; Torr, P.H.S. Staple: Complementary Learners for Real-Time Tracking. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 1401–1409.

- Dai, K.; Wang, D.; Lu, H.; Sun, C.; Li, J. Visual Tracking via Adaptive Spatially-Regularized Correlation Filters. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 4665–4674.

- Bhat, G.; Johnander, J.; Danelljan, M.; Khan, F.S.; Felsberg, M. Unveiling the Power of Deep Tracking. In ECCV 2018: Proceedings of the Computer Vision, Munich, Germany, 8–14 September 2018; Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 493–509.

- Xu, T.; Feng, Z.H.; Wu, X.J.; Kittler, J. Joint Group Feature Selection and Discriminative Filter Learning for Robust Visual Object Tracking. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019; pp. 7949–7959.

- Boyd, S.; Parikh, N.; Chu, E.; Peleato, B.; Eckstein, J. Distributed Optimization and Statistical Learning via the Alternating Direction Method of Multipliers; Now Foundations and Trends: Hanover, MA, USA, 2011.

- Huang, R.; Feng, W.; Sun, J. Color Feature Reinforcement for Cosaliency Detection Without Single Saliency Residuals. IEEE Signal Process. Lett. 2017, 24, 569–573.

- Tang, Y.; Zou, W.; Jin, Z.; Chen, Y.; Hua, Y.; Li, X. Weakly Supervised Salient Object Detection With Spatiotemporal Cascade Neural Networks. IEEE Trans. Circuits Syst. Video Technol. 2019, 29, 1973–1984.

- Wei, Y.; Feng, J.; Liang, X.; Cheng, M.M.; Zhao, Y.; Yan, S. Object Region Mining with Adversarial Erasing: A Simple Classification to Semantic Segmentation Approach. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6488–6496.

- Wang, X.; You, S.; Li, X.; Ma, H. Weakly-Supervised Semantic Segmentation by Iteratively Mining Common Object Features. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 1354–1362.

- Hou, X.; Zhang, L. Saliency Detection: A Spectral Residual Approach. In Proceedings of the 2007 IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007; pp. 1–8.

- Zhang, Y.; Yang, Y.; Zhou, W.; Shi, L.; Li, D. Motion-Aware Correlation Filters for Online Visual Tracking. Sensors 2018, 18, 3937.

- Mueller, M.; Smith, N.; Ghanem, B. Context-Aware Correlation Filter Tracking. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1387–1395.

- Li, F.; Tian, C.; Zuo, W.; Zhang, L.; Yang, M.H. Learning Spatial-Temporal Regularized Correlation Filters for Visual Tracking. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4904–4913.

- Ma, H.; Acton, S.T.; Lin, Z. SITUP: Scale Invariant Tracking Using Average Peak-to-Correlation Energy. IEEE Trans. Image Process. 2020, 29, 3546–3557.

- Wu, Y.; Lim, J.; Yang, M.H. Object Tracking Benchmark. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1834–1848.

| Tracker | Average FPS | Real-Time | Venue |
|---|---|---|---|
| ECO-HC [48] | 52.0 | Yes | 2017’CVPR |
| CSR-DCF [44] | 14.2 | No | 2017’CVPR |
| BACF [43] | 52.0 | Yes | 2017’ICCV |
| CACF [60] | 26.2 | Yes | 2017’CVPR |
| STRCF [61] | 25.9 | Yes | 2018’CVPR |
| MCCT-H [37] | 57.6 | Yes | 2018’CVPR |
| KCC [38] | 35.0 | Yes | 2018’AAAI |
| LDES [40] | 7.0 | No | 2019’AAAI |
| ARCF [2] | 28.1 | Yes | 2019’ICCV |
| OMFL [30] | 14.4 | No | 2019’Remote Sensing |
| AutoTrack [3] | 57.7 | Yes | 2020’CVPR |
| DR2Track [4] | 44.0 | Yes | 2020’IROS |
| SITUP [62] | 12.9 | No | 2020’TIP |
| IBRI [31] | 25.7 | Yes | 2021’TGARS |
| Ours | 26.7 | Yes | This work |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lin, B.; Bai, Y.; Bai, B.; Li, Y. Robust Correlation Tracking for UAV with Feature Integration and Response Map Enhancement. Remote Sens. 2022, 14, 4073. https://doi.org/10.3390/rs14164073
