Satellite Fog Detection at Dawn and Dusk Based on the Deep Learning Algorithm under Terrain-Restriction

Abstract

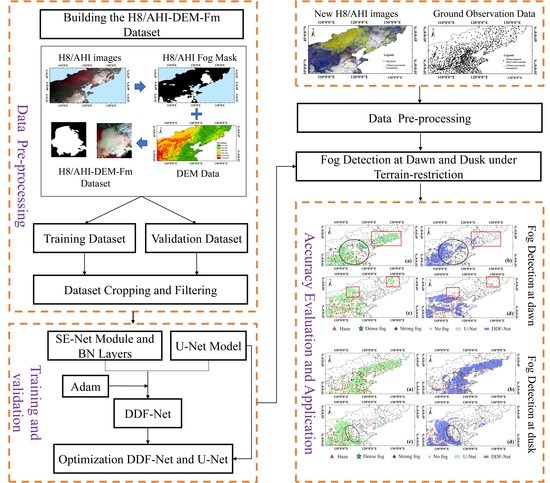

1. Introduction

2. Materials and Methods

2.1. Study Area and Data Sources

- (1) Satellite data

- (2) Ground observation data

- (3) Terrain data

2.2. Deep Learning Algorithm

2.3. The SE-Net Module
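The section title refers to the squeeze-and-excitation (SE) module of Hu et al. A generic SE block can be sketched in NumPy. It has three steps: global average pooling squeezes each channel to one number, a bottleneck of two fully connected layers (ReLU, then sigmoid) produces one gate per channel, and each channel is rescaled by its gate. The function name `se_block`, the random weights, and the reduction ratio r = 4 are illustrative assumptions. The sketch does not reproduce where or how DDF-Net places the block in its network.

```python
import numpy as np

def se_block(x, w1, b1, w2, b2):
    """Generic squeeze-and-excitation block (after Hu et al.).

    x:  feature maps, shape (N, C, H, W)
    w1: (C // r, C), b1: (C // r,)  -- bottleneck FC layer
    w2: (C, C // r), b2: (C,)       -- expansion FC layer
    Returns the recalibrated feature maps and the channel gates.
    """
    # Squeeze: global average pooling over the spatial axes -> (N, C)
    z = x.mean(axis=(2, 3))
    # Excitation: FC -> ReLU -> FC -> sigmoid gives one gate per channel
    s = np.maximum(0.0, z @ w1.T + b1)
    gates = 1.0 / (1.0 + np.exp(-(s @ w2.T + b2)))
    # Scale: multiply every spatial position of channel c by gates[:, c]
    return x * gates[:, :, None, None], gates

# Toy usage with random weights; C = 16 and reduction ratio r = 4 are arbitrary
rng = np.random.default_rng(0)
N, C, H, W, r = 2, 16, 8, 8, 4
x = rng.standard_normal((N, C, H, W))
w1, b1 = rng.standard_normal((C // r, C)), np.zeros(C // r)
w2, b2 = rng.standard_normal((C, C // r)), np.zeros(C)
y, gates = se_block(x, w1, b1, w2, b2)
print(y.shape, gates.shape)  # (2, 16, 8, 8) (2, 16)
```

The block keeps the feature-map shape. That is why it can be dropped into an existing encoder-decoder such as U-Net without changing the surrounding layers.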

2.4. Deep Learning-Based Algorithm of Fog Detection under Terrain Restriction

2.4.1. Building the H8/AHI-DEM-Fm Fog Mask Dataset

- (1) Building the AHI-DEM dataset at dawn and dusk.

- (2) Building the fog mask dataset Fm.

- (3) Building the H8/AHI-DEM-Fm fog mask dataset.
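Only the titles of these three steps survive. One plausible reading of "AHI-DEM" paired with a fog mask Fm is sketched below in NumPy: a normalized DEM is appended as an extra input channel to the AHI bands, and co-registered, non-overlapping patches are cut from the stacked inputs and the binary fog mask. Everything here is a hypothetical illustration rather than the authors' procedure. That includes the function name `build_samples`, the band set, the min-max scaling of elevation, and the 64-pixel patch size.

```python
import numpy as np

def build_samples(ahi, dem, fog_mask, patch=64):
    """Pair AHI + DEM input stacks with fog-mask labels as non-overlapping patches.

    ahi:      (B, H, W) AHI channels on a common grid (band choice is hypothetical)
    dem:      (H, W) elevation on the same grid
    fog_mask: (H, W) binary labels, 1 = fog, 0 = non-fog
    """
    if ahi.shape[1:] != dem.shape or dem.shape != fog_mask.shape:
        raise ValueError("AHI, DEM and fog mask must share one grid")
    h, w = dem.shape
    if h < patch or w < patch:
        raise ValueError("grid is smaller than one patch")
    # Min-max scale elevation to [0, 1]; fall back to 1.0 if the DEM is flat
    span = float(dem.max() - dem.min()) or 1.0
    dem_scaled = (dem - dem.min()) / span
    # Append the DEM as one extra input channel: (B + 1, H, W)
    x = np.concatenate([ahi, dem_scaled[None]], axis=0)
    inputs, labels = [], []
    for i in range(0, h - patch + 1, patch):
        for j in range(0, w - patch + 1, patch):
            inputs.append(x[:, i:i + patch, j:j + patch])
            labels.append(fog_mask[i:i + patch, j:j + patch])
    return np.stack(inputs), np.stack(labels)

rng = np.random.default_rng(1)
ahi = rng.random((3, 128, 128))                  # three hypothetical AHI channels
dem = rng.uniform(0, 3000, (128, 128))           # elevation in metres
fog = (rng.random((128, 128)) > 0.8).astype(np.uint8)
X, Y = build_samples(ahi, dem, fog)
print(X.shape, Y.shape)  # (4, 4, 64, 64) (4, 64, 64)
```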

2.4.2. Integration of the SE-Net Module

2.4.3. Adjusting the Training Strategy of the Model

2.5. Evaluation Metrics

- (1) Model evaluation metrics

- (2) Quantitative evaluation metrics of fog detection accuracy
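The validation tables in this document report POD, FAR and CSI. The values are reproduced by the standard contingency-table definitions. Here $H$ (hits) counts stations where both the satellite and the ground observation report fog, $M$ (misses) counts ground-observed fog that the satellite classifies as nonfog, and $F$ (false alarms) counts satellite-detected fog where the ground reports nonfog. For example, $H = 34$, $M = 12$, $F = 7$ (18 November 2016) gives POD = 0.739, FAR = 0.171 and CSI = 0.642.

$$
\mathrm{POD}=\frac{H}{H+M},\qquad
\mathrm{FAR}=\frac{F}{H+F},\qquad
\mathrm{CSI}=\frac{H}{H+M+F}
$$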

3. Results

4. Discussion

5. Conclusions

- (1) DDF-Net can detect large-scale fog and delineates the edges of fog areas more precisely. Both DDF-Net and U-Net have difficulty detecting fog while it is dissipating and when clouds are present, but DDF-Net has a lower FAR than U-Net.

- (2) Fog near the terminator line can be effectively separated from the land surface, which makes the method effective for distinguishing fog from the surface at dawn and dusk. In particular, DDF-Net effectively reduces the misdetection of medium/low clouds both near and far from the terminator line. The fog detection results are relatively stable, with fewer false detections.

- (3) The algorithm is simple and efficient and requires little human intervention. As a result, fog detection results can be obtained in near real time, which suits the requirements of real-time detection of dawn and dusk fog over large land areas.

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- World Meteorological Organization (WMO). International Meteorological Vocabulary; Secretariat of the WMO: Geneva, Switzerland, 1992; p. 782.

- Ma, H.; Li, Y.; Wu, X.; Feng, H.; Ran, Y.; Jiang, B.; Wang, W. A large-region fog detection algorithm at dawn and dusk for high-frequency Himawari-8 satellite data. Int. J. Remote Sens. 2022, 43, 2620–2637.

- Nilo, S.T.; Romano, F.; Cermak, J.; Cimini, D.; Ricciardelli, E.; Cersosimo, A.; Di Paola, F.; Gallucci, D.; Gentile, S.; Geraldi, E. Fog detection based on meteosat second generation-spinning enhanced visible and infrared imager high resolution visible channel. Remote Sens. 2018, 10, 541.

- Kang, L.; Miao, Y.Y.; Miao, M.S.; Hu, Z.Y. The Harm of Fog and Thick Haze to the Body and the Prevention of Chinese Medicine. In Proceedings of the 2nd International Conference on Biomedical and Biological Engineering 2017 (BBE 2017), Guilin, China, 26–28 May 2017; Atlantis Press: Amsterdam, The Netherlands, 2017; pp. 119–124.

- Yoo, J.M.; Choo, G.H.; Lee, K.H.; Wu, D.L.; Yang, J.H.; Park, J.D.; Choi, Y.S.; Shin, D.B.; Jeong, J.H.; Yoo, J.M. Improved detection of low stratus and fog at dawn from dual geostationary (COMS and FY-2D) satellites. Remote Sens. Environ. 2018, 211, 292–306.

- Lee, J.R.; Chung, C.Y.; Ou, M.L. Fog detection using geostationary satellite data: Temporally continuous algorithm. Asia-Pac. J. Atmos. Sci. 2011, 47, 113–122.

- Hunt, G.E. Radiative properties of terrestrial clouds at visible and infra-red thermal window wavelengths. Q. J. R. Meteorol. Soc. 1973, 99, 346–369.

- Eyre, J. Detection of fog at night using Advanced Very High Resolution Radiometer (AVHRR) imagery. Meteorol. Mag. 1984, 113, 266–271.

- Turner, J.; Allam, R.; Maine, D. A case-study of the detection of fog at night using channels 3 and 4 on the Advanced Very High-Resolution Radiometer (AVHRR). Meteorol. Mag. 1986, 115, 285–290.

- Bendix, J. Fog climatology of the Po Valley. Riv. Meteorol. Aeronaut. 1994, 54, 25–36.

- Bendix, J. A case study on the determination of fog optical depth and liquid water path using AVHRR data and relations to fog liquid water content and horizontal visibility. Int. J. Remote Sens. 1995, 16, 515–530.

- Cermak, J.; Bendix, J. Dynamical Nighttime Fog/Low Stratus Detection Based on Meteosat SEVIRI Data: A Feasibility Study. Pure Appl. Geophys. 2007, 164, 1179–1192.

- Cermak, J.; Bendix, J. A novel approach to fog/low stratus detection using Meteosat 8 data. Atmos. Res. 2008, 87, 279–292.

- Chaurasia, S.; Sathiyamoorthy, V.; Paul Shukla, B.; Simon, B.; Joshi, P.C.; Pal, P.K. Night time fog detection using MODIS data over Northern India. Meteorol. Appl. 2011, 18, 483–494.

- Weston, M.; Temimi, M. Application of a Nighttime Fog Detection Method Using SEVIRI Over an Arid Environment. Remote Sens. 2020, 12, 2281.

- Gurka, J.J. The Role of Inward Mixing in the Dissipation of Fog and Stratus. Mon. Weather Rev. 1978, 106, 1633–1635.

- Rao, P.K.; Holmes, S.J.; Anderson, R.K.; Winston, J.S.; Lehr, P.E. Weather Satellites: Systems, Data, and Environmental Applications; American Meteorological Society: Boston, MA, USA, 1990; p. 516.

- Chaurasia, S.; Gohil, B.S. Detection of Day Time Fog Over India Using INSAT-3D Data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 4524–4530.

- Drönner, J.; Egli, S.; Thies, B.; Bendix, J.; Seeger, B. FFLSD—Fast Fog and Low Stratus Detection tool for large satellite time-series. Comput. Geosci. 2019, 128, 51–59.

- Yang, J.H.; Yoo, J.M.; Choi, Y.S. Advanced Dual-Satellite Method for Detection of Low Stratus and Fog near Japan at Dawn from FY-4A and Himawari-8. Remote Sens. 2021, 13, 1042.

- Hůnová, I.; Brabec, M.; Malý, M.; Dumitrescu, A.; Geletič, J. Terrain and its effects on fog occurrence. Sci. Total Environ. 2021, 768, 144359.

- Shang, H.; Chen, L.; Letu, H.; Zhao, M.; Li, S.; Bao, S. Development of a daytime cloud and haze detection algorithm for Himawari-8 satellite measurements over central and eastern China. J. Geophys. Res. Atmos. 2017, 122, 3528–3543.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Jeppesen, J.H.; Jacobsen, R.H.; Inceoglu, F.; Toftegaard, T.S. A cloud detection algorithm for satellite imagery based on deep learning. Remote Sens. Environ. 2019, 229, 247–259.

- Wu, X.; Shi, Z.; Zou, Z. A geographic information-driven method and a new large scale dataset for remote sensing cloud/snow detection. ISPRS J. Photogramm. Remote Sens. 2021, 174, 87–104.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Ding, Y.; Liu, Y. Analysis of long-term variations of fog and haze in China in recent 50 years and their relations with atmospheric humidity. Sci. China Earth Sci. 2014, 57, 36–46.

- Chen, H.; Wang, H. Haze Days in North China and the associated atmospheric circulations based on daily visibility data from 1960 to 2012. J. Geophys. Res. Atmos. 2015, 120, 5895–5909.

- Bessho, K.; Date, K.; Hayashi, M.; Ikeda, A.; Imai, T.; Inoue, H.; Kumagai, Y.; Miyakawa, T.; Murata, H.; Ohno, T. An introduction to Himawari-8/9—Japan’s new-generation geostationary meteorological satellites. J. Meteorol. Soc. Jpn. 2016, 94, 151–183.

- Ishida, H.; Nakajima, T.Y. Development of an unbiased cloud detection algorithm for a spaceborne multispectral imager. J. Geophys. Res. 2009, 114.

- Knudby, A.; Latifovic, R.; Pouliot, D. A cloud detection algorithm for AATSR data, optimized for daytime observations in Canada. Remote Sens. Environ. 2011, 115, 3153–3164.

- Cermak, J.; Bendix, J. Detecting ground fog from space—A microphysics-based approach. Int. J. Remote Sens. 2011, 32, 3345–3371.

- GB/T 27964-2011; Grade of Fog Forecast. China Meteorological Administration (CMA): Beijing, China, 2011. (In Chinese)

- Zeiler, M.; Fergus, R. Visualizing and understanding convolutional networks. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 818–833.

- Rumelhart, D.E.; Hinton, G.E.; Williams, R.J. Learning representations by back-propagating errors. Nature 1986, 323, 533–536.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015, Munich, Germany, 5–9 October 2015; Springer International Publishing: Cham, Switzerland, 2015; pp. 234–241.

- Shao, Z.; Pan, Y.; Diao, C.; Cai, J. Cloud Detection in Remote Sensing Images Based on Multiscale Features-Convolutional Neural Network. IEEE Trans. Geosci. Remote Sens. 2019, 57, 4062–4076.

- Mohajerani, S.; Krammer, T.A.; Saeedi, P. Cloud detection algorithm for remote sensing images using fully convolutional neural networks. arXiv 2018, arXiv:1810.05782.

- Ioffe, S.; Szegedy, C. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. In Proceedings of the 32nd International Conference on Machine Learning, Lille, France, 6–11 July 2015; Bach, F., Blei, D., Eds.; PMLR: San Diego, CA, USA, 2015; pp. 448–456.

| Parameter | Configuration |
|---|---|
| GPU | NVIDIA GeForce RTX 2080Ti (NVIDIA Corporation, Santa Clara, CA, USA) |
| Memory | 11 GB |
| Operating system | Windows 10 |
| Programming language | Python 3.6.6 |
| Framework | PyTorch 1.5.1 |
| CUDA | 10.0 |
| Batch size | 8 |
| Epochs | 1500 |
| Decaying epoch | 1440 |
| | 0.0035 |
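The configuration lists 1500 training epochs and a decaying epoch of 1440. It also has an unlabeled value of 0.0035. The sketch below rests on two assumptions that the source does not state. The first is that 0.0035 is the initial Adam learning rate. The second is that the rate stays constant until epoch 1440 and then decays linearly over the last 60 epochs. The function name `learning_rate` and its parameters are hypothetical.

```python
def learning_rate(epoch, base_lr=0.0035, total_epochs=1500, decay_start=1440):
    """Hypothetical schedule: constant base_lr, then linear decay (0-based epochs).

    The rate falls by base_lr / (total_epochs - decay_start) per epoch after
    decay_start, reaching base_lr / 60 in the final epoch with the defaults.
    """
    if not 0 <= epoch < total_epochs:
        raise ValueError("epoch out of range")
    if epoch < decay_start:
        return base_lr
    decay_epochs = total_epochs - decay_start      # 60 with the defaults
    fraction_done = (epoch - decay_start) / decay_epochs
    return base_lr * (1.0 - fraction_done)

for e in (0, 1439, 1440, 1470, 1499):
    print(e, round(learning_rate(e), 6))
```

In PyTorch, a schedule like this could be attached to an Adam optimizer through `torch.optim.lr_scheduler.LambdaLR`, passing `lambda e: learning_rate(e) / 0.0035` as the multiplicative factor.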

| Date | Satellite Detection | Ground Observation (Fog) | Ground Observation (Nonfog) | POD | FAR | CSI |
|---|---|---|---|---|---|---|
| 18 November 2016 | Fog | 34 | 7 | 0.739 | 0.171 | 0.642 |
| | Nonfog | 12 | 210 | | | |
| 12 December 2016 | Fog | 44 | 6 | 0.936 | 0.120 | 0.830 |
| | Nonfog | 3 | 255 | | | |
| 18 December 2016 | Fog | 16 | 3 | 0.842 | 0.158 | 0.727 |
| | Nonfog | 3 | 276 | | | |
| 20 December 2016 | Fog | 19 | 2 | 0.826 | 0.095 | 0.760 |
| | Nonfog | 4 | 322 | | | |
| 3 January 2017 | Fog | 18 | 7 | 0.857 | 0.280 | 0.643 |
| | Nonfog | 3 | 381 | | | |
| Mean | | | | 0.840 | 0.164 | 0.720 |
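The POD, FAR and CSI columns can be recomputed from the two-by-two counts of each date. The Fog row under Ground Observation (Fog) gives the hits. The Nonfog row under Ground Observation (Fog) gives the misses, and the Fog row under Ground Observation (Nonfog) gives the false alarms. This Python sketch checks the first row of the table above, 18 November 2016. The names `Contingency` and `scores` are illustrative and do not come from the source. A score is returned as `None` when its denominator is zero.

```python
from dataclasses import dataclass

@dataclass
class Contingency:
    hits: int               # satellite fog, ground fog
    misses: int             # satellite nonfog, ground fog
    false_alarms: int       # satellite fog, ground nonfog
    correct_negatives: int  # satellite nonfog, ground nonfog (unused by POD/FAR/CSI)

def scores(c):
    """Return (POD, FAR, CSI); a score is None when its denominator is zero."""
    def ratio(num, den):
        return num / den if den else None
    pod = ratio(c.hits, c.hits + c.misses)
    far = ratio(c.false_alarms, c.hits + c.false_alarms)
    csi = ratio(c.hits, c.hits + c.misses + c.false_alarms)
    return pod, far, csi

pod, far, csi = scores(Contingency(34, 12, 7, 210))
print(f"POD={pod:.3f} FAR={far:.3f} CSI={csi:.3f}")  # POD=0.739 FAR=0.171 CSI=0.642
```

Correct negatives do not enter any of the three scores. The large nonfog counts therefore leave POD, FAR and CSI unaffected.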

| Date | Satellite Detection | Ground Observation (Fog) | Ground Observation (Nonfog) | POD | FAR | CSI |
|---|---|---|---|---|---|---|
| 17 November 2016 | Fog | 11 | 2 | 0.846 | 0.154 | 0.733 |
| | Nonfog | 2 | 209 | | | |
| 18 November 2016 | Fog | 42 | 3 | 0.933 | 0.067 | 0.875 |
| | Nonfog | 3 | 238 | | | |
| 11 December 2016 | Fog | 44 | 10 | 0.846 | 0.185 | 0.710 |
| | Nonfog | 8 | 372 | | | |
| 18 December 2016 | Fog | 12 | 4 | 0.800 | 0.250 | 0.632 |
| | Nonfog | 3 | 277 | | | |
| 28 December 2016 | Fog | 19 | 3 | 0.760 | 0.136 | 0.679 |
| | Nonfog | 6 | 307 | | | |
| Mean | | | | 0.837 | 0.158 | 0.726 |

| Ground Observation Time | Cases | Satellite Detection | Ground Observation (Fog) | Ground Observation (Nonfog) | POD | FAR | CSI |
|---|---|---|---|---|---|---|---|
| 8:00 | 5 February 2017, 8:00 | Fog | 21 | 9 | 0.875 | 0.300 | 0.636 |
| | | Nonfog | 3 | 402 | | | |
| 8:00 | 17 March 2017, 7:00 | Fog | 5 | 4 | 0.714 | 0.444 | 0.455 |
| | | Nonfog | 2 | 413 | | | |
| 5:00 | 5 May 2017, 6:00 | Fog | 8 | 15 | 0.615 | 0.652 | 0.286 |
| | | Nonfog | 5 | 409 | | | |
| 5:00 | 27 June 2017, 6:00 | Fog | 24 | 13 | 0.686 | 0.351 | 0.500 |
| | | Nonfog | 11 | 392 | | | |
| 5:00 | 29 July 2017, 6:00 | Fog | 6 | 13 | 0.545 | 0.684 | 0.250 |
| | | Nonfog | 5 | 426 | | | |
| 5:00 | 31 August 2017, 5:00 | Fog | 12 | 14 | 0.571 | 0.538 | 0.343 |
| | | Nonfog | 9 | 399 | | | |
| 5:00 | 17 September 2017, 5:00 | Fog | 5 | 3 | 0.625 | 0.375 | 0.455 |
| | | Nonfog | 3 | 377 | | | |
| 5:00 | 12 October 2017, 6:00 | Fog | 22 | 8 | 0.710 | 0.267 | 0.564 |
| | | Nonfog | 9 | 415 | | | |
| Mean | | | | | 0.668 | 0.451 | 0.436 |

| Ground Observation Time | Cases | Satellite Detection | Ground Observation (Fog) | Ground Observation (Nonfog) | POD | FAR | CSI |
|---|---|---|---|---|---|---|---|
| 17:00 | 21 February 2017, 17:00 | Fog | 12 | 3 | 0.462 | 0.200 | 0.414 |
| | | Nonfog | 14 | 395 | | | |
| 17:00 | 13 March 2017, 17:00 | Fog | 24 | 11 | 0.774 | 0.314 | 0.571 |
| | | Nonfog | 7 | 417 | | | |
| 20:00 | 22 August 2017, 19:00 | Fog | 0 | 0 | -- | -- | -- |
| | | Nonfog | 7 | 487 | | | |
| 17:00 | 15 September 2017, 18:00 | Fog | 19 | 12 | 0.704 | 0.387 | 0.487 |
| | | Nonfog | 8 | 377 | | | |
| 17:00 | 5 October 2017, 18:00 | Fog | 14 | 9 | 0.778 | 0.391 | 0.519 |
| | | Nonfog | 4 | 437 | | | |
| Mean | | | | | 0.680 | 0.323 | 0.498 |
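For 22 August 2017 in the table above, the satellite detects no fog at any station, so there are no hits and no false alarms. FAR is therefore 0/0, and the case is reported as "--". The tabulated means (0.680, 0.323, 0.498) agree to within 0.001 with averages taken over the other four cases only. The Python sketch below applies that reading, which is inferred from the numbers rather than stated in the source. The helper `scores` is illustrative.

```python
from statistics import mean

def scores(hits, misses, false_alarms):
    """Return (POD, FAR, CSI), with None where a denominator is zero."""
    def ratio(num, den):
        return num / den if den else None
    return (ratio(hits, hits + misses),
            ratio(false_alarms, hits + false_alarms),
            ratio(hits, hits + misses + false_alarms))

# (hits, misses, false alarms) per case, read from the table above
cases = {
    "21 February 2017": (12, 14, 3),
    "13 March 2017": (24, 7, 11),
    "22 August 2017": (0, 7, 0),      # FAR = 0/0, reported as "--"
    "15 September 2017": (19, 8, 12),
    "5 October 2017": (14, 4, 9),
}
per_case = {date: scores(*counts) for date, counts in cases.items()}
# Assumption: average only the cases where all three scores are defined
valid = [s for s in per_case.values() if None not in s]
mean_pod, mean_far, mean_csi = (mean(col) for col in zip(*valid))
print(f"{mean_pod:.3f} {mean_far:.3f} {mean_csi:.3f}")  # 0.679 0.323 0.498
```

The mean POD computed from unrounded ratios is 0.679, against 0.680 in the table. The table plausibly averaged per-case values that had already been rounded to three decimals.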

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ran, Y.; Ma, H.; Liu, Z.; Wu, X.; Li, Y.; Feng, H. Satellite Fog Detection at Dawn and Dusk Based on the Deep Learning Algorithm under Terrain-Restriction. Remote Sens. 2022, 14, 4328. https://doi.org/10.3390/rs14174328
