1. Introduction

Autonomous driving, unmanned aerial vehicles (UAVs), and the Internet of Things (IoT) have developed rapidly in recent years, and precise navigation and positioning in complex environments are receiving increasing attention. However, no single sensor can provide position solutions with high accuracy, availability, reliability, and continuity at all times and in all environments [1]. The integration of different sensors, for example, GNSS, INS, and vision, has therefore become a trend [2,3,4].

GNSS (global navigation satellite system) is an efficient tool for providing precise positioning regardless of time and location, and it is widely used in transportation. Zumberge et al. [5] proposed the precise point positioning (PPP) technique, which has received much attention in recent years for its low cost, global coverage, and high accuracy [6,7]. Although PPP can provide centimeter-level positioning for real-time kinematic applications, its convergence time of nearly 30 min has limited its application in UAVs and other technologies. Thus, great efforts have been made to improve PPP performance, especially to accelerate its convergence, and various methods have been proposed, e.g., multi-GNSS combination, ambiguity resolution, and atmospheric augmentation. Lou et al. [8] presented a comprehensive analysis of quad-constellation PPP. The results showed that, compared with the GPS-only solution, four-system combined PPP can reduce the convergence time by more than 60% on average in kinematic mode. For ambiguity resolution, Ge et al. [9] proposed the uncalibrated phase delay (UPD) method. Subsequently, Laurichesse et al. [10] proposed the integer phase clock method, and Collins et al. [11] proposed the decoupled clock model to facilitate PPP ambiguity resolution (PPP-AR). These three PPP-AR methods have been shown to dramatically accelerate convergence and improve the positioning accuracy of PPP [9,10,11,12]. The undifferenced and uncombined data processing strategy has also received increasing interest [13,14,15,16,17,18]. First proposed by Gerhard Wübbena et al. [19], PPP with ambiguity resolution and atmospheric augmentation is nowadays widely known as PPP-RTK (real-time kinematic). Because PPP-RTK mitigates both the long convergence time of PPP and the limited regional service coverage of RTK, it is regarded as a promising technique for high-precision navigation in mass-market applications, including vehicle platforms. As a result, some regional authorities have developed their own PPP-RTK augmentation services; e.g., the QZSS centimeter-level accuracy service (QZSS CLAS) began offering PPP-RTK services in 2018 [20]. Chinese BDS also intends to provide a satellite-based PPP-RTK service in the future.

However, GNSS performance is limited by non-line-of-sight (NLOS) conditions, which means that PPP-RTK does not work well in challenging environments such as urban areas [21]. When satellite signals are blocked by buildings or other structures, PPP-RTK cannot provide a position solution if fewer than four satellites are available, and its performance degrades severely due to frequent re-convergence and gross errors. Inertial navigation systems (INS) are immune to external interference and can output navigation states continuously without external information. However, their accuracy degrades rapidly over time due to accumulated errors. Integrating GNSS with INS can compensate for their respective drawbacks and outperform either GNSS or INS alone. There are two common integration strategies for PPP with INS: tightly coupled integration and loosely coupled integration [22]. It has been shown that tightly coupled PPP/INS integration performs better than loosely coupled integration, especially in GNSS-challenged environments [23]. Rabbou [24] studied the integration of GPS PPP and a MEMS (micro-electro-mechanical system)-based inertial system, and the results suggested that decimeter-level positioning accuracy was achievable during GPS outages of up to 30 s. Gao et al. [23] analyzed the integration of multi-GNSS PPP with a MEMS IMU. The results showed that the position RMS improved from 23.3 cm, 19.8 cm, and 14.9 cm for GPS PPP/INS tightly coupled integration to 7.9 cm, 3.3 cm, and 5.1 cm for multi-GNSS PPP/INS in the north, east, and up components, respectively. PPP-AR/INS tightly coupled integration can realize stable centimeter-level positioning after the first ambiguity resolution and achieve fast re-convergence and re-resolution after a short GNSS outage [25]. Han et al. [26] analyzed the performance of tightly coupled RTK/INS integration constrained with ionospheric and tropospheric models. Gu et al. [27] realized the tightly coupled integration of multi-GNSS PPP/INS with atmospheric augmentation. Considering the advantages of PPP-RTK over PPP and RTK, the integration of multi-GNSS PPP-RTK and a MEMS IMU still needs further research.
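To make the tightly versus loosely coupled distinction concrete: a loosely coupled filter needs a GNSS position fix (at least four satellites) before it can update the INS, whereas a tightly coupled filter can update with raw measurements from any number of satellites. The sketch below is a simplified illustration under stated assumptions, not the model used in this paper: the state is only position plus receiver clock (in metres), the position is assumed to be predicted by the INS, only pseudoranges are used (PPP-RTK would add carrier phases, ambiguities, and atmospheric corrections), and the function name `pseudorange_update` and noise value are hypothetical.

```python
import numpy as np

def pseudorange_update(x, P, sat_pos, rho_meas, sigma=1.0):
    """One EKF update with raw pseudoranges (hypothetical tightly coupled sketch).

    x:        state [x, y, z, c*dt] in metres (position assumed predicted by the INS)
    P:        4x4 state covariance
    sat_pos:  (m, 3) satellite positions in the same frame; m may be smaller than 4
    rho_meas: (m,) measured pseudoranges in metres
    """
    diff = x[:3] - sat_pos                       # receiver minus satellite
    ranges = np.linalg.norm(diff, axis=1)
    rho_pred = ranges + x[3]                     # predicted pseudorange incl. clock
    # Jacobian: d|x - s|/dx = (x - s)/|x - s|, and 1 for the clock term
    H = np.hstack([diff / ranges[:, None], np.ones((len(ranges), 1))])
    R = sigma**2 * np.eye(len(ranges))           # measurement noise covariance
    S = H @ P @ H.T + R                          # innovation covariance
    K = P @ H.T @ np.linalg.inv(S)               # Kalman gain
    x_new = x + K @ (rho_meas - rho_pred)
    P_new = (np.eye(len(x)) - K @ H) @ P
    return x_new, P_new
```

With only two satellites in view, where a loosely coupled scheme would get no GNSS position at all, this update still pulls the INS-predicted state toward the measurements along the observable line-of-sight directions and shrinks the covariance.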

The performance of tightly coupled GNSS/INS integration can deteriorate even during short GNSS signal outages, and the positioning accuracy becomes poor during long outages because the INS drift accumulates rapidly. Therefore, other aiding sensors are required to limit INS drift errors when GNSS signals are blocked. A camera is well suited to this task, since visual odometry (VO) can estimate vehicle motion with slow drift. Moreover, the monocular camera model is relatively simple; although a monocular camera lacks metric scale, the scale can be recovered with an IMU. Consequently, a monocular camera is usually integrated with an IMU to achieve accurate pose estimation. IMU and vision fusion algorithms are usually based on either an extended Kalman filter (EKF) [28,29,30] or nonlinear optimization [31,32]. The former usually performs linearization only once, so linearization errors in vision data processing may be significant. The latter uses iterative linearization, which can achieve higher estimation accuracy at the cost of an increased computational burden. The multi-state constraint Kalman filter (MSCKF) is a popular EKF-based visual–inertial odometry (VIO) approach capable of high-precision pose estimation in large-scale real-world environments [28]. MSCKF maintains several previous camera poses in the state vector using a sliding window and forms constraints among multiple camera poses from visual measurements of the same feature point across multiple camera views. Accordingly, its computational complexity is linear in the number of feature points.
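The sliding-window bookkeeping that MSCKF relies on can be sketched as covariance augmentation (cloning the current pose into the state, P ← J P Jᵀ) and removal of the oldest clone. This is a simplified sketch, not this paper's implementation: the 15-dimensional IMU error-state layout (position, velocity, attitude, gyro bias, accelerometer bias), the zero camera-IMU extrinsics, and the class name `SlidingWindowCovariance` are all assumptions.

```python
import numpy as np

IMU_DIM = 15   # assumed layout: pos 0:3, vel 3:6, att 6:9, gyro bias 9:12, accel bias 12:15
POSE_DIM = 6   # each cloned camera pose: position (3) + attitude (3)

class SlidingWindowCovariance:
    """Covariance bookkeeping for MSCKF-style camera-pose clones (hypothetical sketch)."""

    def __init__(self, P_imu, max_clones=10):
        self.P = np.array(P_imu, dtype=float)
        self.max_clones = max_clones
        self.num_clones = 0

    def augment(self):
        """Append a clone of the current pose: P <- J P J^T.

        Simplification: camera pose == IMU pose (zero extrinsics), so the clone
        Jacobian just copies the position (0:3) and attitude (6:9) error blocks.
        """
        n = self.P.shape[0]
        J_clone = np.zeros((POSE_DIM, n))
        J_clone[0:3, 0:3] = np.eye(3)   # position block
        J_clone[3:6, 6:9] = np.eye(3)   # attitude block
        J = np.vstack([np.eye(n), J_clone])
        self.P = J @ self.P @ J.T
        self.num_clones += 1
        if self.num_clones > self.max_clones:
            self.drop_oldest()

    def drop_oldest(self):
        """Remove the oldest clone (stored right after the IMU block)."""
        idx = np.arange(IMU_DIM, IMU_DIM + POSE_DIM)
        self.P = np.delete(np.delete(self.P, idx, axis=0), idx, axis=1)
        self.num_clones -= 1
```

Because the window size is bounded, the state dimension stays constant once the window is full. In a full MSCKF, features tracked across the window are used for a measurement update before a clone is removed, and the feature positions themselves are never added to the state.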

On the one hand, VIO can provide accurate pose estimates when GNSS is unavailable. On the other hand, VIO or VI-SLAM (simultaneous localization and mapping) can only estimate motion, providing relative position and attitude with unavoidable drift that accumulates over time. Consequently, the integration of GNSS, INS, and vision is receiving increasing interest [33,34,35,36]. Kim et al. (2005) used six-degrees-of-freedom (DOF) SLAM to aid GNSS/INS navigation, providing reliable navigation solutions in GNSS-denied and unknown environments. Won et al. then integrated GNSS with vision for low GNSS visibility [34] and proposed the selective integration of GNSS, INS, and vision in GNSS-challenged environments [2], which improved positioning accuracy compared with nonselective integration. However, most of these studies used only the GNSS-derived position or pseudo-range measurements in the fusion of GNSS, INS, and vision; the use of the carrier phase in multi-sensor fusion has been studied less. More recently, Liu [35] proposed the tightly coupled integration of a GNSS/INS/stereo vision/map matching system for land vehicle navigation, but only the PPP positioning results were integrated with the INS, stereo vision, and map matching system. Li et al. [36] further conducted the tightly coupled integration of RTK, a MEMS IMU, and monocular cameras. Clearly, more effort should be devoted to PPP-RTK/INS/vision integration to fully exploit the potential of GNSS.

Urban areas contain many dynamic objects that interfere with VIO. Such objects produce dynamic feature points, whereas mainstream SLAM relies on static feature points to recover motion. There has been much research on dynamic object removal in VIO or SLAM, but most of it relies mainly on visual information [37,38]. Thus, this paper proposes a simple position-based dynamic feature point removal model aided by GNSS. Because VIO accumulates errors, such a position-based model does not work well without GNSS.
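The model itself is specified in Section 2, which is not reproduced here. As a rough illustration of how externally supplied motion can expose dynamic features, the sketch below uses a different, commonly used technique: an epipolar-consistency check. Assuming the relative camera motion (R, t) between two frames is known from the GNSS-aided solution, a static feature must satisfy x2ᵀ E x1 = 0 with E = [t]× R, so a match that lies far from its epipolar line is flagged as dynamic. The function name, the normalized-coordinate inputs, and the threshold are hypothetical.

```python
import numpy as np

def skew(v):
    """Return [v]_x such that skew(v) @ w == np.cross(v, w)."""
    return np.array([[0.0, -v[2], v[1]],
                     [v[2], 0.0, -v[0]],
                     [-v[1], v[0], 0.0]])

def flag_dynamic_points(x1, x2, R, t, threshold=1e-3):
    """Flag feature matches that are inconsistent with the known camera motion.

    x1, x2: (N, 2) normalized image coordinates of the same features in frames 1 and 2.
    R, t:   motion mapping frame-1 camera coordinates into frame 2 (X2 = R @ X1 + t),
            assumed to come from the GNSS/INS solution.
    Returns a boolean mask, True where the match looks dynamic.
    """
    E = skew(t) @ R                               # essential matrix from the known motion
    h1 = np.hstack([x1, np.ones((len(x1), 1))])   # homogeneous coordinates
    h2 = np.hstack([x2, np.ones((len(x2), 1))])
    lines = h1 @ E.T                              # epipolar lines in frame 2: l = E x1
    num = np.abs(np.sum(h2 * lines, axis=1))      # |x2^T E x1|
    den = np.hypot(lines[:, 0], lines[:, 1])      # scale to point-to-line distance
    return num / den > threshold
```

One limitation of this particular check is that an object moving along its own epipolar line (for example, straight toward the camera) is not detected, which is one reason practical systems combine several cues.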

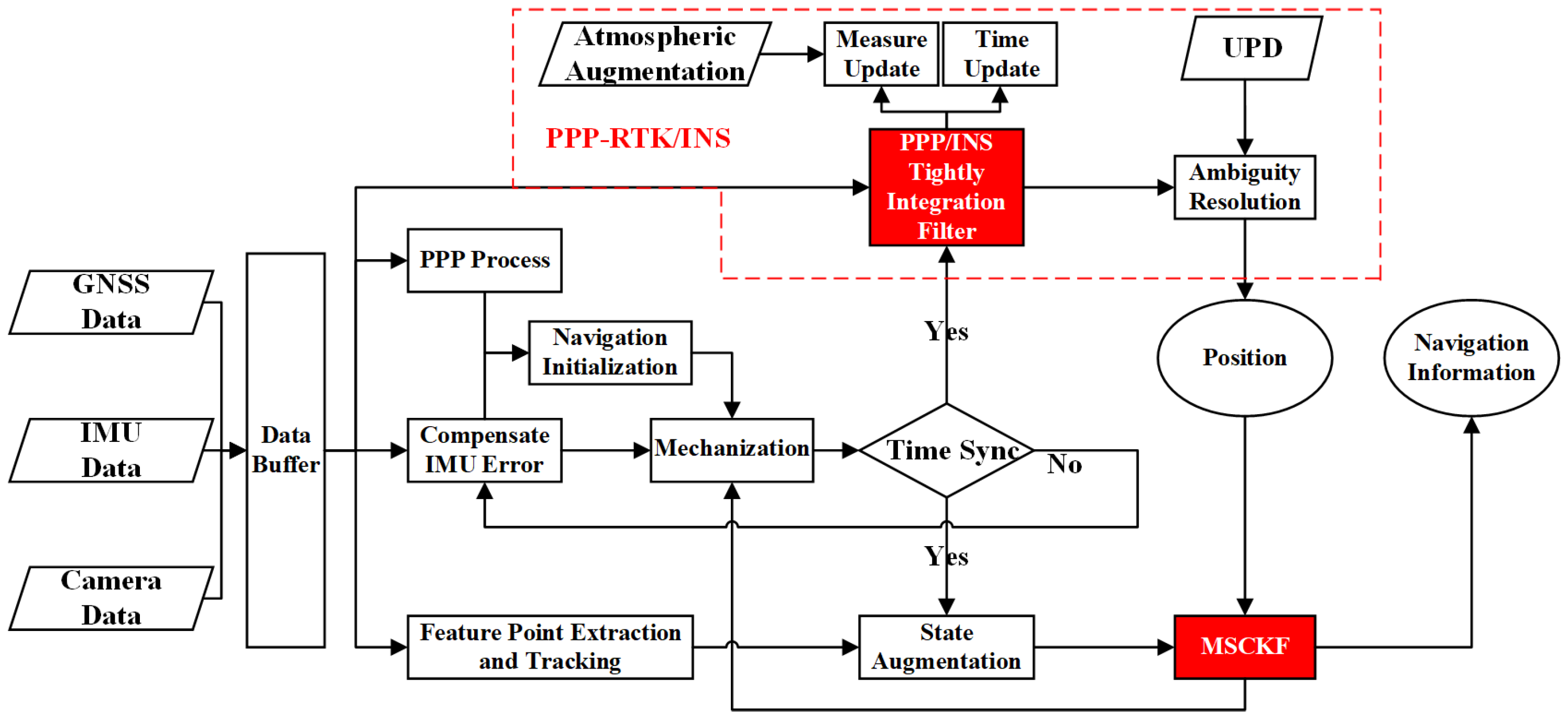

This paper aims to evaluate the navigation performance of the integration of multi-GNSS PPP-RTK, a MEMS IMU, and monocular cameras with a cascading filter and a dynamic object removal algorithm in urban areas. The remainder of this paper is organized as follows. Section 2 presents the mathematical models of PPP-RTK, MEMS-IMU and monocular camera integration based on the MSCKF, the integration of multi-GNSS PPP-RTK, INS, and vision, and the dynamic object removal model, and introduces the structure of the proposed model. Section 3 describes the details of the tests, and Section 4 analyzes the effectiveness of different techniques in urban vehicle navigation. Finally, Section 5 presents the conclusions.

3. Experiment

To evaluate the positioning accuracy and performance of the proposed multi-GNSS PPP-RTK/INS/vision integration model with a cascading filter in urban areas, we further developed the algorithm on the basis of the FUSING (FUSing IN Gnss) software [7,40,41]. FUSING has since grown into an integrated software platform capable of real-time multi-GNSS precise orbit determination, atmospheric delay modeling and monitoring, satellite clock estimation, and multi-sensor fusion navigation.

Two datasets were collected with a vehicle platform, as shown in Figure 2. One was collected in the suburban area of Wuhan City on 1 January 2020, and the other on the Second Ring Road of Wuhan City on 2 January 2020. For simplicity, the two vehicle tests are denoted T01 and T02, respectively, according to the DOY (day of year).

As shown in Figure 2, raw data were collected by two IMUs of different grades. A MEMS-grade IMU was integrated with PPP-RTK and vision to evaluate the performance of the proposed model. The high-accuracy navigation-grade IMU was integrated with RTK to calculate the reference solution, which was regarded as the true value. Both IMUs collected data at a sampling rate of 200 Hz, and their performance parameters are listed in Table 1. A grayscale Basler acA640-90gm camera collected raw images at a sampling rate of 10 Hz with a resolution of 659 × 494. The UBLOX-M8T was used to generate the pulse-per-second (PPS) signals that triggered camera exposure, and it also recorded the time of each pulse. GNSS data were collected by a Trimble Net R9 receiver at a sampling rate of 1 Hz. The camera-IMU extrinsic parameters were calibrated offline using the Kalibr tool (https://github.com/ethz-asl/kalibr/ (accessed on 3 January 2020)). The lever-arm correction vector was measured manually.
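Because the IMU (200 Hz) and camera (10 Hz) sample at different rates, the inertial data must be aligned with the time-tagged images before fusion. The sketch below shows one simple way to do this, linear interpolation of IMU readings to the image timestamps; it is an illustrative assumption, not the synchronization procedure used in this work, and the function name is hypothetical.

```python
import numpy as np

def imu_at_image_times(imu_t, imu_data, img_t):
    """Linearly interpolate IMU samples (e.g. 200 Hz) to image timestamps (e.g. 10 Hz).

    imu_t:    (N,) increasing IMU timestamps in seconds (a common GNSS time scale assumed)
    imu_data: (N, k) gyroscope/accelerometer readings
    img_t:    (M,) image timestamps, e.g. recorded from the trigger pulses
    """
    imu_t = np.asarray(imu_t, dtype=float)
    imu_data = np.asarray(imu_data, dtype=float)
    img_t = np.asarray(img_t, dtype=float)
    if img_t.min() < imu_t[0] or img_t.max() > imu_t[-1]:
        raise ValueError("image timestamps must lie within the IMU time span")
    # np.interp handles one signal at a time, so interpolate each sensor axis separately
    return np.column_stack([np.interp(img_t, imu_t, imu_data[:, j])
                            for j in range(imu_data.shape[1])])
```

In a filter, the IMU mechanization is more commonly propagated up to the image time step by step, but the interpolation above captures the same alignment requirement.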

The test trajectories and the real-world scenes of the two tests are shown in the left and right panels of Figure 3, respectively. Dataset T01 was collected under relatively open sky, with only a few obstacles blocking the GNSS signals. T02 was collected in a GNSS-challenged environment, with many tall buildings on both sides of a narrow road, including some viaducts and tunnels that could block the GNSS signals completely. The vehicle speeds in T01 and T02 were about 10 m/s and 15 m/s, respectively, as shown in Figure 4, with significant changes in velocity and direction. The number of visible satellites and the PDOP (position dilution of precision) with a cutoff angle of 10° are shown in Figure 5. Taking GPS as an example, the average numbers of tracked satellites in T01 and T02 were 9 and 7, respectively, and the average PDOP values were 1.8 and 10.9, respectively, which demonstrates the difference in observation environment between T01 and T02.
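PDOP summarizes how satellite geometry amplifies ranging errors into position errors, which is why the blocked, narrow-sky geometry of T02 yields much larger values than T01. The sketch below computes single-system PDOP from elevations and azimuths with a 10° cutoff, using the standard geometry matrix with one receiver-clock column; the function name and the example geometries are illustrative, and a multi-GNSS version would need one clock column per system.

```python
import numpy as np

def pdop(elev_deg, azim_deg, cutoff_deg=10.0):
    """Position dilution of precision from satellite elevations/azimuths in degrees."""
    el = np.radians(np.asarray(elev_deg, dtype=float))
    az = np.radians(np.asarray(azim_deg, dtype=float))
    keep = el >= np.radians(cutoff_deg)            # apply the elevation cutoff
    el, az = el[keep], az[keep]
    if el.size < 4:
        raise ValueError("at least four satellites above the cutoff are needed")
    # unit line-of-sight vectors from receiver to satellite (east, north, up)
    los = np.column_stack([np.cos(el) * np.sin(az),
                           np.cos(el) * np.cos(az),
                           np.sin(el)])
    G = np.hstack([-los, np.ones((el.size, 1))])   # geometry matrix incl. receiver clock
    Q = np.linalg.inv(G.T @ G)                     # cofactor matrix
    return float(np.sqrt(np.trace(Q[:3, :3])))     # position part only
```

For instance, one satellite at the zenith plus three at 15° elevation spread 120° apart in azimuth gives a PDOP of about 2, whereas four satellites clustered within a 30° azimuth sector, as in an urban canyon, give a far larger value.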

To support PPP-RTK with high-precision ionospheric and tropospheric delay augmentation and ambiguity resolution, measurement data from seven reference stations, distributed as shown in Figure 6, were also collected and processed to generate high-precision atmospheric delay corrections and UPD products. The average distance between the seven reference stations is 40 km, and the green lines are the trajectories of the two tests shown in Figure 3.

The details of the GNSS data processing strategy are presented in Table 2. The positioning performance was evaluated by RMS (root mean square) error. The reference solution was calculated as a loosely coupled RTK/INS solution with a bi-directional smoothing algorithm, using the navigation-grade IMU and the GNSS data collected by the Trimble R9. This ground truth was computed with the commercial software GINS (http://www.whmpst.com/cn/ (accessed on 15 April 2021)). The nominal positioning accuracy of the loosely coupled RTK/INS solution provided by GINS is about 2 cm horizontally and 3 cm vertically.
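As an illustration of how horizontal and vertical RMS values can be obtained from the differences between a solution and the reference, the sketch below combines the north and east components into one horizontal error. This convention is an assumption; the exact definition used for the tables is not restated in this section.

```python
import numpy as np

def position_rms(err_neu):
    """Horizontal and vertical RMS of position errors.

    err_neu: (N, 3) differences (solution minus reference) in north, east, up, metres.
    Horizontal RMS combines north and east: sqrt(mean(dN^2 + dE^2)).
    """
    err = np.asarray(err_neu, dtype=float)
    h = np.sqrt(np.mean(err[:, 0] ** 2 + err[:, 1] ** 2))
    v = np.sqrt(np.mean(err[:, 2] ** 2))
    return float(h), float(v)
```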

4. Results

All results were output at 1 Hz. In the following analysis, PPP-RTK/INS denotes the tightly coupled integration of PPP-RTK and INS, and PPP-RTK/INS/vision denotes the integration of PPP-RTK, INS, and vision with a cascading filter. Before analyzing the performance of the proposed integration system in urban areas, the effect of the position-aided dynamic feature point removal algorithm is shown in Figure 7. The feature points on the car (in the red box) were removed. Some points extracted from indistinct regions were also removed, while most of the distinct static feature points were retained for visual localization.

Figure 8 shows the PPP-RTK/INS/vision positioning results for T02 before and after dynamic feature point removal. The removal improved positioning accuracy, as shown in the green box in Figure 8. When PPP-RTK/INS provided stable, high-accuracy position information, the error caused by the dynamic feature points was constrained. However, positioning performance was clearly affected by the dynamic feature points when the GNSS signals were disturbed. Figure 5 and Figure 8 together show that the GNSS observation conditions were poor and PPP-RTK/INS performed poorly around 362,900 s, 364,000 s, and 364,600 s, so PPP-RTK/INS could not suppress the interference from the dynamic feature points. Therefore, the position-based dynamic feature point removal algorithm improved positioning accuracy when the GNSS signals were severely disturbed. Overall, the positioning accuracy improved by 3 cm and 1 cm in the horizontal and vertical directions, respectively.

Figure 9 and Figure 10 compare the performance of the different positioning solutions for T01 and T02, respectively. The left part of Figure 9 shows that PPP-RTK converged much faster than PPP, although the ambiguities sometimes failed to fix: PPP-RTK took about 30 s to converge, whereas PPP took more than 10 min. Moreover, PPP-RTK was more accurate than PPP after convergence. The inclusion of BDS made the series more stable, especially in the vertical direction. Although the contribution of the INS in the horizontal direction was rather limited, as shown by the GC-PPP-RTK/INS solution, outliers appear to have been suppressed, e.g., around 28,400 s. In the vertical direction, the INS contributed significantly to positioning accuracy, helping the GNSS solution converge to and maintain a higher accuracy level. The introduction of vision further reduced fluctuations, although overall there was no large difference. For T02, no clear convergence is visible in Figure 10 because the observation environment was complicated and the positioning accuracy was relatively low. Nevertheless, the left part of Figure 10 shows that PPP-RTK still performed better than PPP. To further demonstrate the convergence and re-convergence behavior of PPP and PPP-RTK, enlarged views of parts of Figure 10 are shown in Figure 11, where the correspondence can be identified from the time of week. PPP-RTK converged and re-converged much faster than PPP and achieved higher positioning accuracy, even in the challenging observation environment.

Figure 10 shows that the G-PPP and G-PPP-RTK series were interrupted many times because the GNSS signals were blocked, which is consistent with the number of visible satellites in Figure 5. Including BDS improved both the continuity and the accuracy of positioning. However, GNSS still could not provide positioning results from 362,906 s to 362,965 s because the vehicle was in a tunnel and no GNSS signal was received. GC-PPP-RTK/INS provided continuous and more stable positioning through integration with the INS, but obvious fluctuations and epochs with large errors remained. The positioning errors diverged to 21.74 m, 15.82 m, and 3.82 m in the north, east, and down directions, respectively, and the three-dimensional error at 362,965 s was 27.16 m, which is unacceptable for vehicle navigation. When vision was included, the positioning error was clearly reduced, especially around 362,900 s, 363,600 s, and 364,000 s (time of week), because vision effectively constrained the cumulative errors of the INS. In the period from 362,906 s to 362,965 s, the maximum INS/vision errors were −1.43 m, −3.94 m, and 2.50 m in the north, east, and down directions, respectively. The corresponding three-dimensional error was 4.88 m, or 0.49% of the distance traveled. It can be concluded that the multi-GNSS PPP-RTK/INS/vision integration with a cascading filter performed best among the five solutions.
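The three-dimensional errors quoted above follow directly from the north, east, and down components. The short check below reproduces them; `drift_percent` is a hypothetical helper showing how an error is expressed as a share of distance traveled (the traveled distance itself is not reported in this section, so the 0.49% figure is not recomputed here).

```python
import math

def error_3d(dn, de, dd):
    """Three-dimensional position error from north/east/down components (metres)."""
    return math.sqrt(dn**2 + de**2 + dd**2)

def drift_percent(err_3d, distance_m):
    """Error expressed as a percentage of the distance traveled."""
    return 100.0 * err_3d / distance_m

print(round(error_3d(21.74, 15.82, 3.82), 2))   # GC-PPP-RTK/INS at 362,965 s -> 27.16
print(round(error_3d(-1.43, -3.94, 2.50), 2))   # INS/vision maximum in tunnel -> 4.88
```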

To further verify this conclusion, the statistics of the position errors for T01 and T02 are presented in Table 3 and Table 4. If A denotes the number of epochs at which a position is obtained and B the total number of epochs, the positioning availability is defined as A/B; it is also included in the tables. Improvements are computed relative to the G-PPP solution. The statistics show that PPP-RTK performed better than PPP in both tests. In T02, the ambiguities could not be fixed at many epochs because of frequent GPS signal interruptions and disturbances, so the improvement brought by PPP-RTK was less pronounced than in T01. The horizontal and vertical RMS of the GC-PPP-RTK/INS/vision solution were 0.08 m and 0.09 m for T01, and 0.83 m and 0.91 m for T02. GC-PPP-RTK/INS/vision thus improved significantly on the other four solutions. The actual improvements are likely larger than these statistics indicate, because the interrupted epochs of G-PPP and G-PPP-RTK do not enter their statistics. The positioning availability of both G-PPP and G-PPP-RTK was 90.5%; it increased to 95.1% with the inclusion of BDS, which means that more epochs with poorer positioning were included in the statistics. Because of the accumulated INS errors, GC-PPP-RTK/INS had worse horizontal statistics than all the other solutions. To better show the improvements that the INS and vision bring to GC-PPP-RTK, Table 5 lists the statistics of GC-PPP-RTK, GC-PPP-RTK/INS, and GC-PPP-RTK/INS/vision with the positioning results derived solely from the INS and vision excluded. Here, improvements are computed relative to the GC-PPP-RTK solution. The INS improved GC-PPP-RTK by 31.4% and 37.1% in the horizontal and vertical directions, respectively, and the inclusion of vision increased these improvements to 37.1% and 42.2%, respectively.
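The availability and improvement figures can be expressed as two small formulas. Availability is A/B as defined above; for the improvement, the sketch assumes the usual relative RMS reduction with respect to the reference solution, since the exact formula is not restated in this section. The epoch counts in the usage line are hypothetical.

```python
def availability(num_epochs_with_solution, num_total_epochs):
    """Positioning availability A/B, returned as a percentage."""
    return 100.0 * num_epochs_with_solution / num_total_epochs

def improvement(rms_reference, rms_new):
    """Relative RMS improvement (%) of a solution over a reference (assumed definition)."""
    return 100.0 * (rms_reference - rms_new) / rms_reference

# hypothetical epoch counts: 905 of 1000 epochs with a solution -> 90.5 % availability
print(availability(905, 1000))
```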

Position, velocity, and attitude are all important for vehicle navigation in urban areas. The velocity error series of GC-PPP-RTK/INS and GC-PPP-RTK/INS/vision for T01 and T02 are shown in Figure 12 and Figure 13, respectively. Because dataset T01 was collected in a relatively open-sky environment, its error series was very stable, with no obvious difference between GC-PPP-RTK/INS and GC-PPP-RTK/INS/vision. Because T02 was collected in a GNSS-challenged environment, the velocity estimates diverged noticeably around 362,900 s, 363,600 s, and 364,000 s. Including vision weakened this effect and improved the velocity accuracy in all three directions. The statistics in Table 6 and Table 7 show that vision brought no obvious improvement in the open-sky environment but greatly improved velocity accuracy in the GNSS-challenged environment. In T02, including vision reduced the velocity error from 0.12 m/s, 0.07 m/s, and 0.07 m/s to 0.03 m/s, 0.05 m/s, and 0.05 m/s in the north, east, and down directions, respectively, an improvement of more than 20% in each direction.

The attitude error series of GC-PPP-RTK/INS and GC-PPP-RTK/INS/vision for T01 and T02 are shown in Figure 14 and Figure 15, respectively. In T01, there was no obvious difference between GC-PPP-RTK/INS and GC-PPP-RTK/INS/vision in the roll and pitch angles. In T02, the pitch and yaw errors of GC-PPP-RTK/INS accumulated when the GNSS signals were blocked or disturbed. Figure 15 shows that vision helped constrain the error divergence around 363,000 s when the GNSS signals were blocked, but there was no obvious difference in roll and pitch elsewhere in T02. However, vision aiding significantly improved the yaw estimation accuracy in both tests. The attitude error statistics are listed in Table 8 and Table 9. Including vision reduced the yaw error from 0.24° to 0.11° for T01 and from 0.39° to 0.26° for T02, improvements of more than 30% in both tests.
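When computing yaw error statistics, the difference between the estimated and reference heading must be wrapped, otherwise a 359° versus 1° comparison would count as a 358° error instead of −2°. The sketch below shows this wrapping before the RMS; the function names are illustrative and not taken from the processing software.

```python
import numpy as np

def wrap_deg(angle):
    """Wrap angles in degrees to the interval [-180, 180)."""
    return (np.asarray(angle, dtype=float) + 180.0) % 360.0 - 180.0

def yaw_rms(yaw_est_deg, yaw_ref_deg):
    """RMS yaw error in degrees, with differences wrapped before squaring."""
    err = wrap_deg(np.asarray(yaw_est_deg, dtype=float) - np.asarray(yaw_ref_deg, dtype=float))
    return float(np.sqrt(np.mean(err ** 2)))
```

Applying the relative reduction (old − new)/old to the reported yaw errors gives about 54% for T01 (0.24° to 0.11°) and about 33% for T02 (0.39° to 0.26°), consistent with the improvements of more than 30% stated above.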

6. Conclusions

To improve position, velocity, and attitude estimation for vehicle navigation in urban areas, a multi-GNSS PPP-RTK/INS/vision integration model with a cascading filter was developed and validated with two vehicle tests, T01 and T02, in urban areas. T01 was conducted in the suburban area of Wuhan City and T02 on the Second Ring Road of Wuhan City; T02 can be regarded as a typical GNSS-challenged environment. To obtain the atmospheric corrections and UPD products for PPP-RTK, observations from seven reference stations were also collected and processed.

A dynamic object removal model was also proposed and validated with T02. The position-based model worked well in the GNSS-challenged environment and improved the positioning performance of multi-GNSS PPP-RTK/INS/vision.

PPP-RTK achieved centimeter-level positioning horizontally and decimeter-level positioning vertically under relatively open sky, as in T01. Vertical performance improved noticeably when BDS was added to G-PPP-RTK. Moreover, PPP-RTK took only about 30 s to converge, owing to atmospheric augmentation and ambiguity resolution. However, incorrect ambiguity fixes still occurred, in which case positioning performance became significantly worse. Introducing the INS weakened the influence of incorrect ambiguity resolution and improved positioning accuracy. The positioning errors of GC-PPP-RTK/INS were 0.08 m and 0.09 m in the horizontal and vertical directions, improvements of 11.1% and 59.1%, respectively, compared with GC-PPP-RTK.

The performance of PPP-RTK degraded rapidly when the GNSS observation environment became complicated and challenging, as in T02, where G-PPP-RTK could achieve only meter-level positioning. Compared with G-PPP-RTK, GC-PPP-RTK improved positioning availability from 90.5% to 95.1%. Compared with GC-PPP-RTK, the GC-PPP-RTK/INS solution significantly improved horizontal and vertical positioning accuracy as well as positioning availability, which increased to 100%. After excluding the positioning results derived solely from the INS, the positioning errors of GC-PPP-RTK/INS were 0.48 m and 0.73 m in the horizontal and vertical directions, respectively, improvements of 31.4% and 37.1% over GC-PPP-RTK.

The two vehicle tests showed that GC-PPP-RTK/INS could achieve high-precision continuous positioning in a relatively open-sky environment, but its positioning errors diverged to more than 20 m in a GNSS-challenged environment, so other sensors such as vision are needed to restrict the error divergence. For T01, vision did not improve positioning accuracy statistically but slightly reduced vertical fluctuations. The position RMS of the GC-PPP-RTK/INS/vision integration was 0.08 m and 0.09 m in the horizontal and vertical directions, respectively, which fully meets the demands of vehicle navigation in urban areas. For T02, in contrast, vision significantly improved positioning performance in both directions: the RMS of GC-PPP-RTK/INS/vision reached 0.83 m and 0.91 m in the horizontal and vertical directions, improvements of 54.9% and 7.1%, respectively, over GC-PPP-RTK/INS. The velocity and attitude estimation performance were also analyzed. In the GNSS-challenged environment, vision improved velocity accuracy by more than 25% in the north, east, and down directions. For attitude, vision made no obvious difference in the roll and pitch angles, but GC-PPP-RTK/INS/vision estimated the yaw angle much better, with improvements of more than 30% in both tests.

The results show that GC-PPP-RTK/INS/vision integration with a cascading filter performs best in position, velocity, and attitude estimation compared with the other solutions. Multi-GNSS, INS, and vision each play their own role and complement one another in vehicle navigation in urban areas. However, real-time navigation performance still deserves further study.