Abstract

Artificial reef detection in multibeam sonar images is an important means of monitoring and assessing biological resources in marine ranching. To detect artificial reefs accurately in multibeam sonar images, this paper proposes an artificial reef detection framework based on convolutional neural networks (CNNs). First, a large-scale multibeam sonar image artificial reef detection dataset, FIO-AR, was established and made public to promote the development of artificial reef detection in multibeam sonar images. Then, a CNN-based artificial reef detection framework was designed to detect the various artificial reefs in multibeam sonar images. Using the FIO-AR dataset, the proposed method is compared with several state-of-the-art semantic segmentation methods. The experimental results show that the proposed method achieves an 86.86% F1-score and a 76.74% intersection-over-union (IOU) and outperforms the compared methods.

1. Introduction

Marine ranching is an artificial fishery that is formed based on the principles of marine ecology and modern marine engineering technology, making full use of natural productivity while scientifically cultivating and managing fishery resources in specific sea areas [1,2,3,4,5]. The construction and deployment of artificial reefs are important technical means used in the construction of marine ranching [6,7]. Large-scale marine ranching construction usually requires thousands or even tens of thousands of artificial reefs in specific sea areas [8,9,10]. Accurately detecting artificial reefs has an important impact on the monitoring and assessment of the biological resources of constructed marine ranching [11]. The traditional methods of detecting artificial reefs, diving cameras and manual exploration, have low efficiency and high cost and are poorly suited to the macroscopic monitoring of large-scale artificial reefs [12,13]. With the development of modern sonar technology, detectors that are based on acoustic methods have become the most effective and efficient technical means for seabed detection. The multibeam sonar detector, which is an advanced acoustic detector, has become an important technical means for the monitoring of artificial reefs on the seabed [14,15,16]. Artificial reef detection in multibeam sonar images usually relies on visual interpretation, which involves a large workload and high labor costs. How to accurately and automatically detect artificial reefs from multibeam sonar images has become a research hotspot.

2. Related Work

Some research has been carried out on detecting artificial reefs using multibeam sonar images. The convolutional neural network (CNN) is the most popular deep learning model [17,18,19,20,21,22,23,24,25]. A CNN does not require hand-designed image features. It learns and extracts image features through its network structure and a large amount of labeled data. When the training data are sufficient, the image features extracted by a CNN are robust and generalize well to different complex situations [26,27]. Therefore, some scholars have applied CNN-based object detectors to artificial reef detection in multibeam sonar images. For example, Xiong et al. used the Faster-RCNN [23] and single-shot multibox detector (SSD) [21] to realize artificial reef detection in multibeam sonar images [28]. Feldens et al. applied the You Only Look Once, version 4 (YOLOv4) [29] object detector to detect artificial reefs using multibeam sonar images [30]. However, in the detection results of Faster-RCNN, SSD and YOLOv4, the detected artificial reefs are rectangular areas, which makes it difficult to accurately delineate the boundaries of artificial reefs. In short, there are currently few studies on the automatic detection of artificial reefs in multibeam sonar images.

A CNN-based semantic segmentation framework can assign a class label to each pixel in an image, which makes it suitable for the detection and extraction of irregularly shaped objects. Since the success of fully convolutional networks (FCN) [31] for semantic image segmentation, different CNN-based semantic segmentation frameworks have been developed rapidly. Semantic segmentation architectures (e.g., U-Net [32], SegNet [33], and the Deeplab series [34,35,36,37]) have been successively proposed and successfully applied to the semantic segmentation of different types of images.

For the semantic segmentation of natural images, Long et al. proposed the FCN to detect multi-class objects in the PASCAL VOC 2011 segmentation dataset [31]. Badrinarayanan et al. proposed the SegNet to detect various objects in the PASCAL VOC 2012 and MS-COCO segmentation datasets [33]. Chen et al. developed the Deeplab series of semantic segmentation frameworks to detect various objects in the PASCAL VOC 2012 and MS-COCO segmentation datasets [34,35,36,37]. Han et al. proposed the edge constraint-based U-Net for salient object detection in natural images [38].

For the semantic segmentation of medical images, Ronneberger et al. proposed the U-Net for biomedical image segmentation and obtained good cell detection results [32]. Wang et al. used the Deeplabv3+ to detect the pathological slices of gastric cancer in medical images [39]. Bi et al. combined a generative adversarial network (GAN) with the FCN to detect blood vessels, cells and lung regions in medical images [40]. Zhou et al. proposed the U-Net++ to detect cells, liver and lung regions in medical images [41].

For the semantic segmentation of high spatial resolution remote sensing images (HSRIs), Guo et al. combined U-Net with an attention block and multiple losses to detect buildings in HSRIs [42]. Zhang et al. proposed a deep residual U-Net to detect roads in HSRIs [43]. Diakogiannis et al. proposed the ResUNet-a to realize the semantic segmentation of various geographic objects in HSRIs. The ResUNet-a can clearly detect buildings, trees, low vegetation and cars in the ISPRS 2D semantic dataset [44]. Lin et al. proposed a nested SE-Deeplab model to detect and extract roads in HSRIs [45]. Jiao et al. proposed the refined U-Net framework to detect clouds and shadow regions in HSRIs. Different types of clouds and shadow regions in the HSRIs can be accurately detected using the refined U-Net framework [46].

CNN-based semantic segmentation frameworks have thus been successfully applied to the detection of various objects in natural images, medical images and HSRIs. However, they have not yet been applied to the semantic segmentation of multibeam sonar images.

To detect artificial reefs accurately in multibeam sonar images using CNN-based semantic segmentation, this paper proposes an artificial reef detection framework for multibeam sonar images based on a CNN. First, a large-scale multibeam sonar image artificial reef detection dataset, FIO-AR, was established and made public to promote the development of artificial reef detection in multibeam sonar images. Then, an artificial reef detection framework based on a CNN (AR-Net) is designed to detect various artificial reefs in multibeam sonar images.

The main contributions of this paper are summarized as follows.

- A large-scale multibeam sonar image artificial reef detection dataset, FIO-AR, is established and published for the first time to facilitate the development of multibeam sonar image artificial reef detection.

- A semantic segmentation framework based on a CNN, the AR-Net, is designed for artificial reef detection in multibeam sonar images.

- Various artificial reefs at different water depths can be accurately detected using the proposed method.

The remainder of this paper is organized as follows. Section 3 describes how the artificial reef detection dataset was built and introduces the AR-Net and how it is trained and tested. Section 4 and Section 5 present the experimental data and analyze and discuss the experimental results. Section 6 summarizes the experimental results and presents the conclusions.

3. Materials and Methods

In this section, the FIO-AR dataset is first introduced in detail. Then, the design and the essential stages of the AR-Net framework are described.

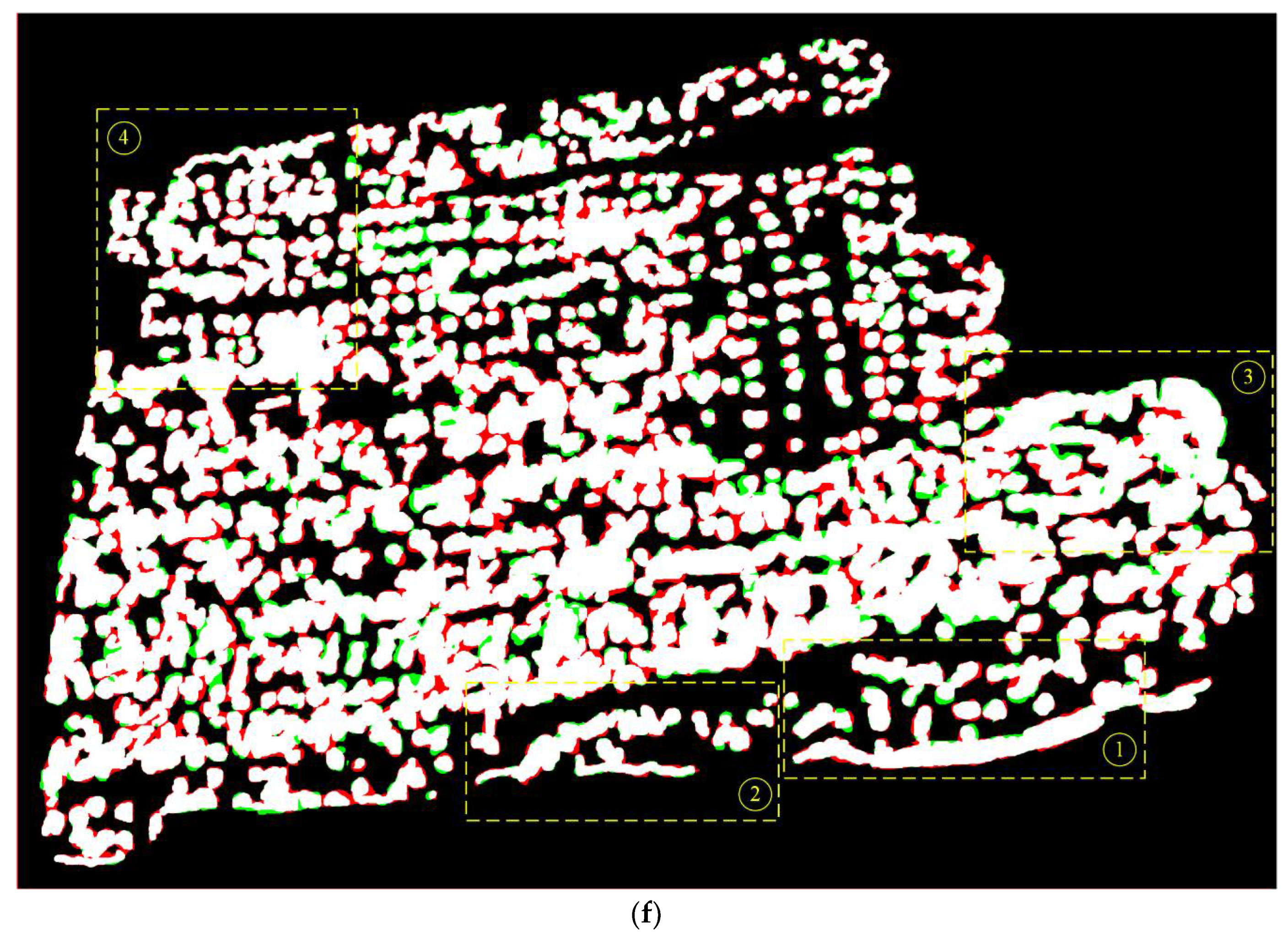

3.1. Artificial Reef Detection Dataset for the Multibeam Sonar Images

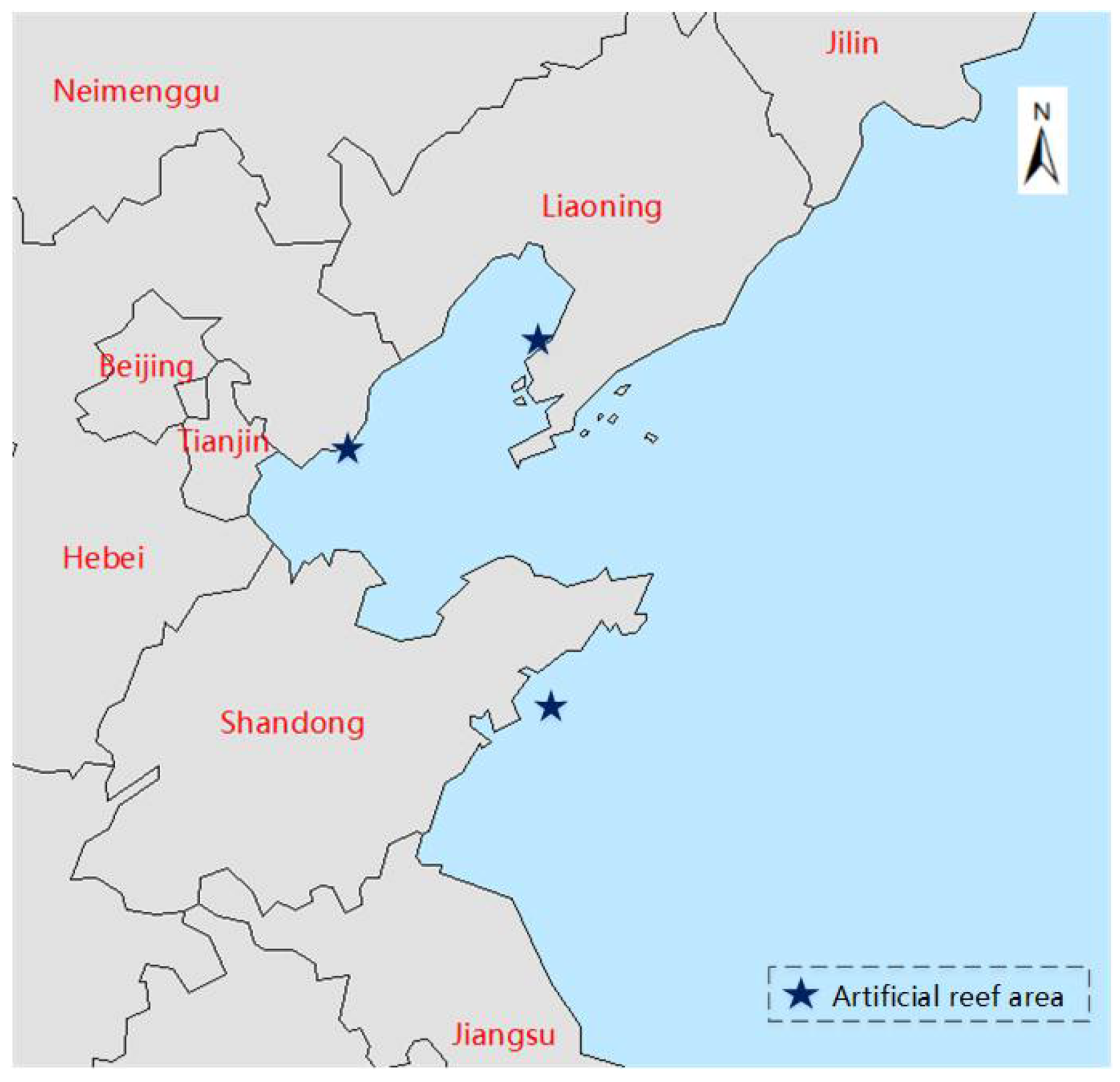

Large-scale semantic segmentation datasets are the basis of, and the key to, the high performance of CNN-based semantic segmentation frameworks. However, there are currently no publicly available multibeam sonar image artificial reef detection datasets. To solve this problem, a large-scale multibeam sonar image artificial reef detection dataset, FIO-AR, was established and published for the first time to facilitate the development of multibeam sonar image artificial reef detection. In this paper, the NORBIT-iWBMS and Teledyne Reson SeaBat T50P multibeam echosounding (MBES) bathymeters were used to survey three artificial reef areas in China. The NORBIT-iWBMS and Teledyne Reson SeaBat T50P MBES bathymeters are shown in Figure 1, and their detailed parameters are presented in Table 1. The three artificial reef areas are located in Dalian, Tangshan and Qianliyan, respectively, as shown in Figure 2. Their approximate coordinates are 121°50′41″ E and 40°00′25″ N, 118°58′50″ E and 39°10′40″ N, and 121°14′11″ E and 36°13′40″ N, respectively. The three artificial reef areas cover approximately 0.46 km², 3 km² and 0.7 km², respectively. The water depths of the three artificial reef areas are about 2 to 4 m, 5 to 12 m and 30 m, respectively. The three artificial reef areas were built mainly from dumped stones and reinforced concrete components.

Figure 1. (a) NORBIT-iWBMS, and (b) Teledyne Reson SeaBat T50P.

Table 1. Detailed information for the NORBIT-iWBMS and Teledyne Reson SeaBat T50P.

Figure 2. Locations of the three artificial reef areas.

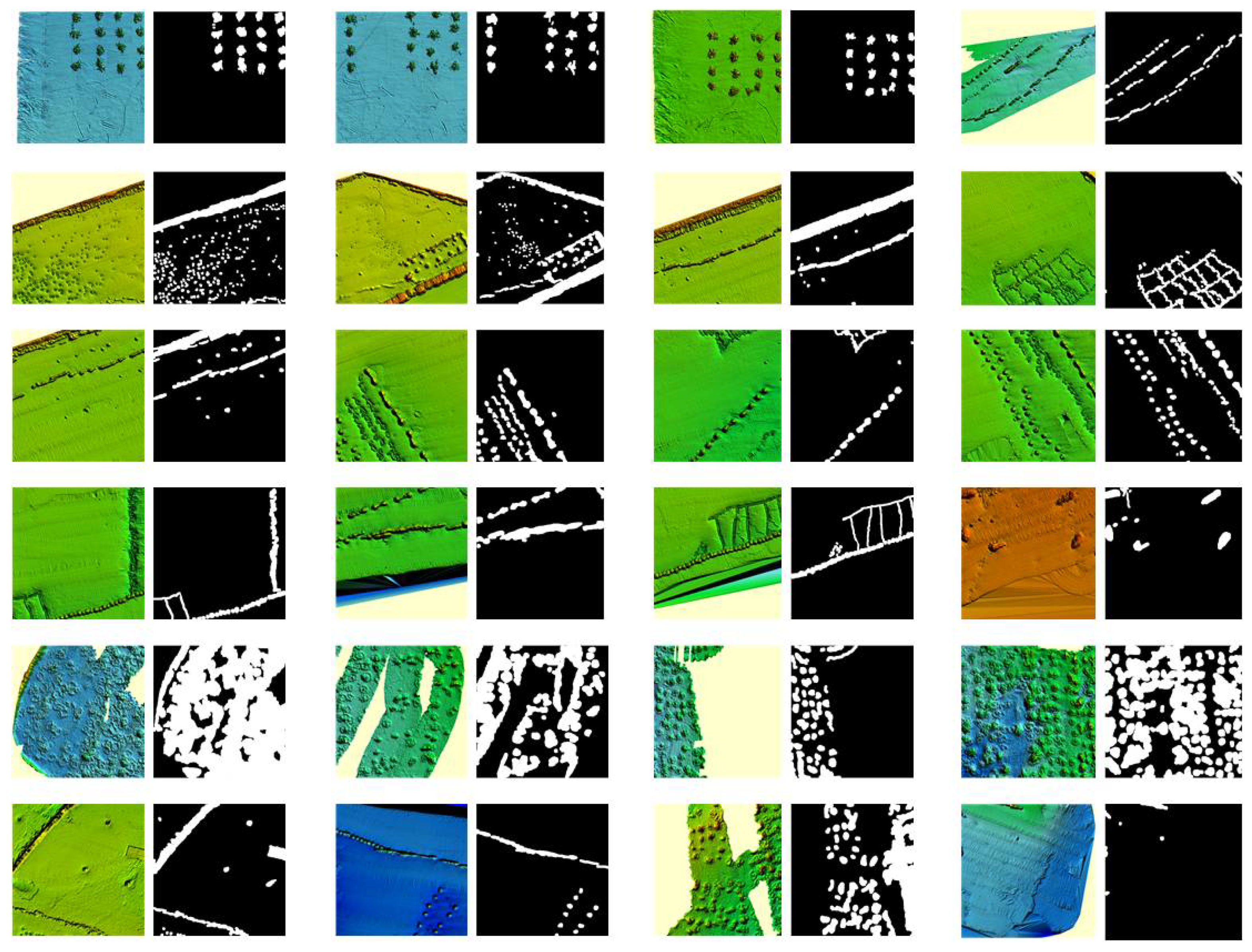

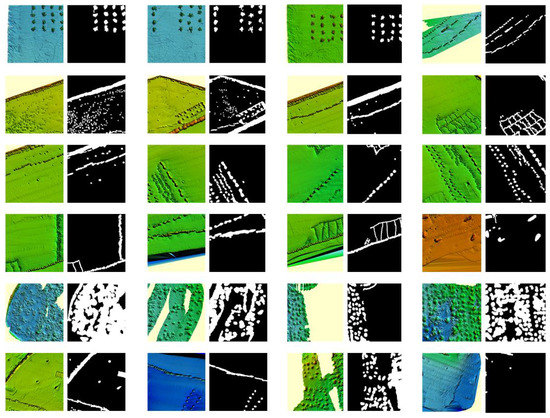

For this paper, a rich variety of multibeam sonar artificial reef images was obtained by using two different MBES bathymeters to survey the three artificial reef areas at different water depths. The obtained multibeam sonar artificial reef images were cropped into 1576 image blocks with a size of 512 × 512 pixels. The artificial reef areas in the 1576 image blocks were accurately annotated using a professional semantic segmentation annotation tool to establish the artificial reef detection dataset, FIO-AR. Some images and annotation samples from the FIO-AR dataset are shown in Figure 3. To keep the distributions of the training data and test data consistent, we randomly selected 3/5 of the images in the FIO-AR dataset as the training dataset, 1/5 as the verification dataset, and 1/5 as the test dataset. The FIO-AR dataset lays a good data foundation for artificial reef detection using CNN-based semantic segmentation frameworks.
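The random split described above can be sketched as follows. This is a minimal illustration, not the authors' tooling: the file names, the fixed seed and the helper `split_dataset` are hypothetical, and the partition sizes (944, 316 and 316) are the counts reported in the quantitative evaluation for the 1576 image blocks.

```python
import random


def split_dataset(items, n_train, n_val, seed=0):
    """Shuffle once, then cut the list into training / verification / test parts."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # seeded so the split is reproducible
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test


# Hypothetical file names standing in for the 1576 annotated 512 x 512 blocks.
blocks = [f"block_{i:04d}.png" for i in range(1576)]
train, val, test = split_dataset(blocks, n_train=944, n_val=316)
print(len(train), len(val), len(test))
```

Shuffling once and slicing guarantees that the three partitions are disjoint and together cover every block.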

Figure 3. Samples of the images and annotations in the FIO-AR dataset.

3.2. The AR-Net Framework Design

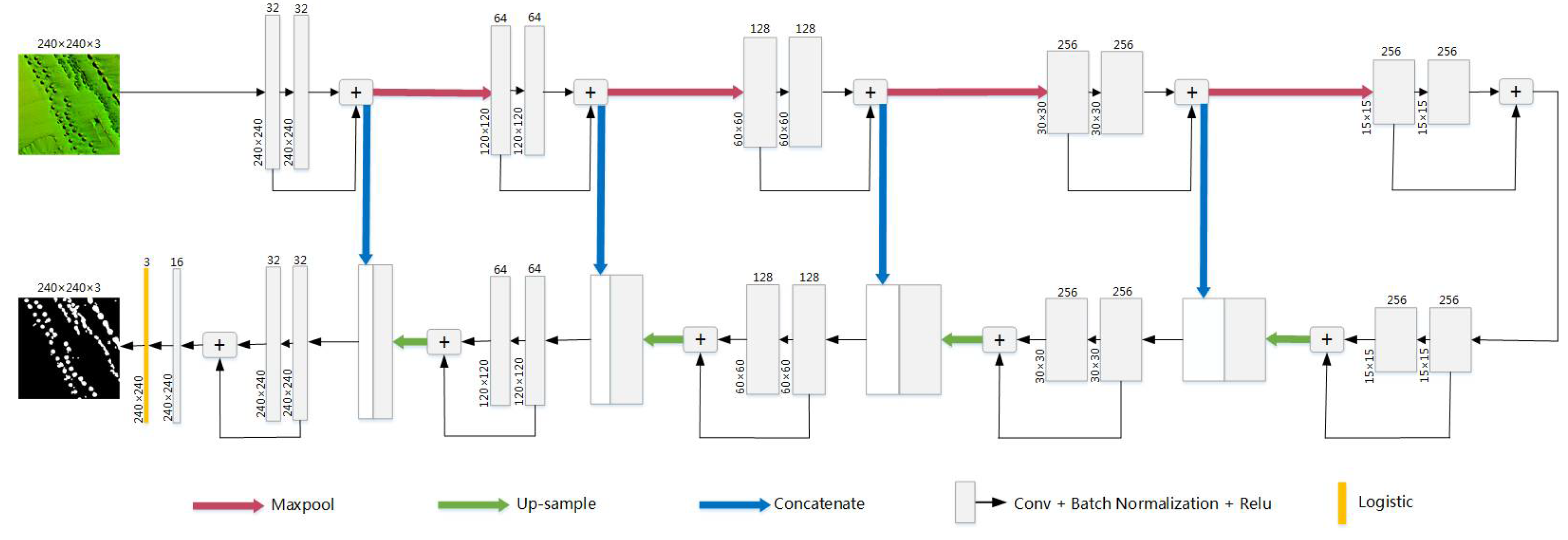

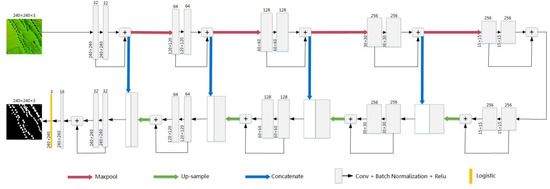

A CNN-based semantic segmentation framework can assign a class label to each pixel in the image. It is suitable for the detection and extraction of irregularly shaped objects. CNN-based semantic segmentation frameworks have been successfully applied to the detection of various objects in natural images, medical images and HSRIs. However, they have not yet been applied to the semantic segmentation of multibeam sonar images. To address this problem, a CNN-based artificial reef semantic segmentation framework, the AR-Net, is designed to realize artificial reef detection and extraction in multibeam sonar images. The SegNet network structure is symmetrical in the encoding and decoding stages, which achieves scale consistency between the input image and the semantic segmentation result [33]. The U-Net network structure combines high-level semantic information with low-level location information to achieve accurate object region extraction [32]. The residual module makes the network parameters converge faster and better [47]. In this paper, the AR-Net integrates these three advantages to detect artificial reefs in multibeam sonar images: the structural symmetry of SegNet, the U-Net combination of high-level semantic information with low-level location information, and the residual module for optimizing the training parameters. The AR-Net framework is an efficient and lightweight network architecture, as shown in Figure 4.

Figure 4. The AR-Net framework.

In the AR-Net framework, five levels of features are used for image feature extraction in the encoding stage, and each level has two feature maps. In the decoding stage, six levels of features are used for image feature extraction. Each of the first five levels has two feature maps, and the sixth level has only one feature map. Moreover, the feature maps obtained at each level of the encoding stage are fused into the image feature extraction of the decoding stage. In the decoding stage, the logistic function normalizes the sixth-level feature output values to the range from 0 to 1. The logistic function is shown in Formula (1). The output values of the logistic function are used to calculate the network loss function and to predict the network semantic segmentation result. In the AR-Net framework, the width, height and number of bands of the input image are 240, 240 and 3, respectively. The size and stride of the kernel in the max-pool layers are both set to 2. In the convolution layers, the size, stride and pad of the convolution kernel are set to 3, 1 and 1, respectively.

During the training of the AR-Net framework, stochastic gradient descent (SGD) [48] with a mini-batch size of 12 is applied to train the network parameters. The learning rate is set to 0.0001 for the first 10,000 mini-batches, to 0.00001 for mini-batches 10,000 to 50,000, and to 0.000001 for mini-batches 50,000 to 100,000. The momentum and weight decay are set to 0.9 and 0.0001, respectively. In the ground-truth annotation, the pixel RGB values of the artificial reef area and the background are labeled as (255, 255, 255) and (0, 0, 0), respectively. When calculating the loss function, the pixel values of the ground-truth annotation result are divided by 255 to normalize them to 0~1. In this paper, the root mean squared error (RMSE) is used to calculate the AR-Net loss value between the normalized ground-truth annotation results and the output values of the logistic function. The RMSE is calculated as shown in Formula (2).

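$$f(x) = \frac{1}{1 + e^{-x}} \tag{1}$$

$$Loss = \sqrt{\frac{1}{3Nmn} \sum_{r=1}^{N} \sum_{j=1}^{m} \sum_{i=1}^{n} \sum_{k=1}^{3} \left( f(x_{r,j,i,k}) - y_{r,j,i,k} \right)^{2}} \tag{2}$$

where $x$ is the sixth-level feature output value, $Loss$ is the training loss value of the AR-Net framework for a mini-batch, $N$ is the number of images contained in a mini-batch, and $m$ and $n$ are the height and width of the last feature map, respectively. $f(x_{r,j,i,k})$ is the output value of the logistic function at position $(r, j, i, k)$, and $y_{r,j,i,k}$ is the truth value at position $(r, j, i, k)$ of the normalized ground-truth annotation results.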
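The logistic normalization and the RMSE loss described above can be sketched in NumPy. This is an illustrative sketch rather than the darknet implementation used in the paper: the function names are hypothetical, and the mean is assumed to run over every image, pixel and channel of the mini-batch.

```python
import numpy as np


def logistic(x):
    """Logistic function: maps any real value into the interval (0, 1)."""
    return 1.0 / (1.0 + np.exp(-x))


def rmse_loss(raw_output, annotation_rgb):
    """RMSE between logistic outputs and ground truth normalized to 0~1.

    raw_output:     float array (N, m, n, 3), last-level feature values.
    annotation_rgb: uint8 array (N, m, n, 3), 255 for reef and 0 for background.
    Assumption: the mean is taken over all N * m * n * 3 entries.
    """
    pred = logistic(raw_output.astype(np.float64))
    target = annotation_rgb.astype(np.float64) / 255.0
    return float(np.sqrt(np.mean((pred - target) ** 2)))


# Toy mini-batch of 12 images of 240 x 240 x 3, matching the stated settings.
rng = np.random.default_rng(0)
raw = rng.normal(size=(12, 240, 240, 3))
labels = np.zeros((12, 240, 240, 3), dtype=np.uint8)
labels[:, 60:180, 60:180, :] = 255  # a square "reef" in each annotation
loss = rmse_loss(raw, labels)
print(round(loss, 4))
```

Because both the prediction and the normalized annotation lie in 0~1, the loss is also bounded by 0 and 1, which makes values comparable across mini-batches.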

In the AR-Net framework testing phase, the output values of the logistic function are multiplied by 255 to map them to 0~255. A gray image is then obtained from these values using Formula (3) [49], which is the most widely used formula for converting a color image to a gray image. If a pixel value in the gray image is greater than or equal to the threshold, the corresponding pixel in the predicted image is labeled as artificial reef; otherwise, it is labeled as background. The threshold is set to 200 in this paper. In the prediction image, the artificial reef area and background are labeled as (255, 255, 255) and (0, 0, 0), respectively.

$$Gray = 0.299R + 0.587G + 0.114B \tag{3}$$

where $Gray$ is the pixel value of the gray image, and $(R, G, B)$ are the output values of the three feature maps of the logistic function.
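The test-time post-processing can be sketched as below. The function name `predict_mask` is hypothetical, and the gray conversion is assumed to use the common luminance weights 0.299, 0.587 and 0.114; only the scaling by 255 and the threshold of 200 are taken from the text.

```python
import numpy as np


def predict_mask(prob_rgb, threshold=200):
    """Turn logistic outputs into a black-and-white prediction image.

    prob_rgb: float array (H, W, 3) with values in 0~1.
    Returns a uint8 array (H, W, 3): 255 for artificial reef, 0 for background.
    """
    scaled = prob_rgb * 255.0  # map 0~1 to 0~255
    # Assumed weights: the widely used luminance conversion.
    gray = (0.299 * scaled[..., 0]
            + 0.587 * scaled[..., 1]
            + 0.114 * scaled[..., 2])
    reef = gray >= threshold  # boolean (H, W) map of reef pixels
    mask = np.zeros(prob_rgb.shape[:2] + (3,), dtype=np.uint8)
    mask[reef] = 255  # sets all three channels of every reef pixel
    return mask


confident = np.full((4, 4, 3), 0.9)  # gray value 229.5, above the threshold
uncertain = np.full((4, 4, 3), 0.5)  # gray value 127.5, below the threshold
print(predict_mask(confident).max(), predict_mask(uncertain).max())
```

A threshold of 200 out of 255 corresponds to a logistic output of roughly 0.78 when all three channels agree, so only fairly confident pixels are labeled as reef.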

4. Results

To verify the effectiveness of the AR-Net framework, it is compared with some state-of-the-art semantic segmentation algorithms (e.g., U-Net, SegNet and Deeplab). The AR-Net is comprehensively tested and evaluated in terms of its accuracy, efficiency, model complexity and generality. The AR-Net is implemented based on the darknet framework and written in C++. The experiments are run on a workstation with Windows 10, an Intel E5-2667 v4 CPU @ 3.20 GHz, 16 GB of RAM and an NVIDIA Quadro M4000 graphics card (8 GB GPU memory).

4.1. The Evaluation Criteria

In this paper, the F1-score, overall accuracy (OA) and intersection-over-union (IOU) are used as the evaluation criteria for the artificial reef detection results of different semantic segmentation algorithms [50]. The value range of the F1-score is [0, 1]. The larger the F1-score is, the higher the accuracy of the semantic segmentation algorithm detection result, and vice versa. The value range of the OA is [0, 1]. The larger the OA is, the better the classification results of the semantic segmentation algorithm are, and vice versa. The value range of the IOU is [0, 1]. The larger the IOU is, the more consistent the semantic segmentation algorithm detection result is with the ground truth, and vice versa. The F1-score, OA and IOU are calculated as follows, respectively.

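$$Precision = \frac{TP}{TP + FP}, \qquad Recall = \frac{TP}{TP + FN}$$

$$F1 = \frac{2 \times Precision \times Recall}{Precision + Recall}$$

$$OA = \frac{TP + TN}{TP + FP + TN + FN}$$

$$IOU = \frac{|P_{p} \cap P_{gt}|}{|P_{p} \cup P_{gt}|}$$

where $TP$, $FP$, $TN$ and $FN$ represent the pixel numbers of true positives, false positives, true negatives and false negatives, respectively. In this paper, the artificial reef area pixels are positive, whereas the background pixels are negative. $P_{p}$ represents the set of pixels predicted as artificial reefs, $P_{gt}$ represents the set of artificial reef pixels in the ground truth, and $|\cdot|$ denotes the number of pixels in a set.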
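As a worked illustration of these criteria, the sketch below computes them from a pair of binary masks. The function name and the toy masks are hypothetical; the definitions are the standard pixel-count forms of precision, recall, F1-score, OA and IOU.

```python
import numpy as np


def segmentation_metrics(pred, truth):
    """Pixel-wise metrics for binary masks (nonzero = artificial reef)."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    tp = int(np.sum(pred & truth))    # reef predicted, reef in ground truth
    fp = int(np.sum(pred & ~truth))   # reef predicted, background in ground truth
    fn = int(np.sum(~pred & truth))   # background predicted, reef in ground truth
    tn = int(np.sum(~pred & ~truth))  # background in both
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    oa = (tp + tn) / (tp + fp + tn + fn)
    # Intersection = TP; union = TP + FP + FN.
    iou = tp / (tp + fp + fn) if tp + fp + fn else 0.0
    return {"precision": precision, "recall": recall,
            "f1": f1, "oa": oa, "iou": iou}


pred = np.array([[1, 1, 0, 0],
                 [1, 0, 0, 0]])
truth = np.array([[1, 0, 0, 0],
                  [1, 1, 0, 0]])
m = segmentation_metrics(pred, truth)
print(m)
```

For binary masks the two overlap measures are linked by F1 = 2 × IOU / (1 + IOU), so an IOU of 0.7674 corresponds to an F1-score of about 0.8684.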

4.2. Quantitative Evaluation for FIO-AR Dataset

Large-scale semantic segmentation datasets are the basis and key to the high performance of the semantic segmentation frameworks based on CNNs. This paper compares the AR-Net framework to some state-of-the-art semantic segmentation algorithms (e.g., U-Net, SegNet and Deeplab) using the FIO-AR dataset. In the FIO-AR dataset, there are 1576 multibeam sonar artificial reef image blocks with a size of 512 × 512 pixels. Among them, 944 images are randomly selected as the training set, 316 images as the verification set and 316 images as the test set. The quantitative evaluation results of the artificial reef detection results of the different semantic segmentation algorithms for the FIO-AR dataset are shown in Table 2.

Table 2. Performance comparisons of the six semantic segmentation algorithms for the FIO-AR dataset. The bold numbers represent the maximum value in each column.

In Table 2, the precision of the AR-Net is slightly lower than that of the SegNet and ResUNet-a but is higher than that of the other three semantic segmentation algorithms, indicating that the AR-Net can distinguish well between artificial reefs and the background in multibeam sonar images. The recalls of the six semantic segmentation algorithms are 0.5312, 0.7425, 0.8559, 0.8191, 0.8184 and 0.8638, respectively. The recall of the AR-Net is the largest, which shows that the AR-Net can clearly identify the artificial reef pixels in the multibeam sonar images. The F1-scores of the six semantic segmentation algorithms are 0.6477, 0.7829, 0.8640, 0.8557, 0.8455 and 0.8684, respectively. The AR-Net has the largest F1-score, indicating that the AR-Net has the highest accuracy in the artificial reef detection results for the multibeam sonar images. The IOUs of the six semantic segmentation algorithms are 0.4789, 0.6432, 0.7605, 0.7477, 0.7324 and 0.7674, respectively. The IOU of the AR-Net is the largest among the six semantic segmentation algorithms, which shows that the semantic segmentation results of the AR-Net are the most consistent with the ground-truth annotations for the FIO-AR dataset. The OAs of the six semantic segmentation algorithms are 0.9046, 0.9320, 0.9555, 0.9544, 0.9506 and 0.9568, respectively. The AR-Net has the largest OA, showing that the AR-Net has the best classification results for the multibeam sonar images. The quantitative evaluation results show that the AR-Net outperforms the other five semantic segmentation algorithms and obtains more accurate artificial reef detection results for the FIO-AR dataset.

4.3. The Time Consumptions

Table 3 shows the average time that each of the six semantic segmentation algorithms takes to detect artificial reefs in one image of the FIO-AR dataset. In Table 3, the average time consumptions of the six semantic segmentation algorithms are 0.08 s, 0.1 s, 0.16 s, 0.12 s, 0.12 s and 0.08 s, respectively. The time consumption of both the AR-Net and Deeplab is 0.08 s, which is the lowest among the six algorithms. The experimental result shows that the AR-Net is more efficient than the other four algorithms and can efficiently detect the artificial reefs in the multibeam sonar images of the FIO-AR dataset.

Table 3. The average time consumption of the six semantic segmentation algorithms for the FIO-AR dataset. The bold numbers represent the minimum value in the column.

4.4. The Model Parameter Evaluation

Table 4 shows the model parameter size and FLOPS of the six semantic segmentation algorithms. Here, FLOPS denotes the number of multiply-adds of the semantic segmentation framework, which is calculated from the input and output of each layer in the framework. In Table 4, the model parameter sizes of the six semantic segmentation algorithms are 78.2 MB, 704 MB, 131 MB, 124 MB, 84.3 MB and 23.2 MB, respectively. The model parameter size of the AR-Net framework is the smallest among the six semantic segmentation algorithms. The FLOPS of the six semantic segmentation algorithms are 47.365 B, 48.081 B, 114.89 B, 79.735 B, 52.676 B and 23.466 B, respectively. The FLOPS of the AR-Net framework is also the smallest among the six semantic segmentation algorithms. The evaluation results show that the AR-Net framework involves less computation and requires fewer computing resources. Overall, the AR-Net framework obtains the best artificial reef detection results among the six semantic segmentation algorithms with the least computation, which indicates that it is effective for artificial reef detection in the multibeam sonar images of the FIO-AR dataset.
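To illustrate how a multiply-add count follows from the input and output of a layer, the sketch below counts the multiply-adds and parameters of a single convolution layer. The helper names and the filter count of 64 are hypothetical, since the per-layer channel widths are not listed here, and counting one bias per filter is an assumption; the kernel size, stride and pad are those stated for the convolution and max-pool layers.

```python
def conv_out_size(size, kernel, stride, pad):
    """Spatial output size of a convolution or pooling layer."""
    return (size + 2 * pad - kernel) // stride + 1


def conv_multiply_adds(in_h, in_w, in_c, out_c, kernel=3, stride=1, pad=1):
    """Multiply-adds of one convolution layer.

    Every output value needs kernel * kernel * in_c multiply-adds, and there
    are out_h * out_w * out_c output values.
    """
    out_h = conv_out_size(in_h, kernel, stride, pad)
    out_w = conv_out_size(in_w, kernel, stride, pad)
    return out_h * out_w * out_c * kernel * kernel * in_c


def conv_parameters(in_c, out_c, kernel=3):
    """Weights plus one bias per filter (assumption: biases are counted)."""
    return out_c * (kernel * kernel * in_c + 1)


# First layer on a 240 x 240 x 3 input with a hypothetical 64 filters.
print(conv_out_size(240, kernel=3, stride=1, pad=1))  # convolution keeps the size
print(conv_out_size(240, kernel=2, stride=2, pad=0))  # max-pool halves the size
print(conv_multiply_adds(240, 240, 3, 64))
print(conv_parameters(3, 64))
```

Summing such counts over all layers gives the totals of the kind reported in billions (B), which is why a network with fewer and narrower layers ends up with both fewer parameters and fewer multiply-adds.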

Table 4. The model parameter size and FLOPS of the six semantic segmentation algorithms. The bold numbers represent the minimum value in each column.

4.5. Quantitative Evaluation for Artificial Reef Detection Results of Large-Scale Multibeam Sonar Image

To further verify the artificial reef detection effectiveness and generalizability of the AR-Net framework, two large-scale multibeam sonar images are used to compare the AR-Net framework with some state-of-the-art semantic segmentation algorithms. The two large-scale multibeam sonar images show artificial reef areas in Dongtou. They are roughly located at 121°7′40″ E and 27°50′30″ N, and 121°11′20″ E and 27°53′40″ N, respectively. One image covers an area of approximately 1.05 km² and has a size of 4259 × 1785 pixels. The other image covers an area of approximately 0.47 km² and has a size of 2296 × 1597 pixels. The artificial reef areas in Dongtou were built mainly from dumped stones and reinforced concrete components. Using the models trained on the FIO-AR dataset, the different semantic segmentation algorithms are tested and compared on the two large-scale multibeam sonar images. The two images are divided into 512 × 512-pixel image blocks, which are input into the semantic segmentation algorithms for artificial reef detection. After the image blocks have been processed, the detection results are mapped back to the original large-scale images.
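The block-wise processing of a large image can be sketched as follows. This is an assumption-laden illustration: `detect_large_image` and `dummy_predict` are hypothetical names, the stand-in predictor is a simple threshold rather than a trained network, and zero-padding the right and bottom edges is one possible way to handle image sizes that are not multiples of 512, which the text does not specify.

```python
import numpy as np


def detect_large_image(image, predict_block, tile=512):
    """Run a block-wise detector over a large image and stitch the results.

    image:         uint8 array (H, W, 3).
    predict_block: callable mapping a (tile, tile, 3) block to a (tile, tile)
                   uint8 mask (hypothetical stand-in for a trained network).
    """
    h, w = image.shape[:2]
    pad_h = (-h) % tile  # rows needed to reach a multiple of the tile size
    pad_w = (-w) % tile  # columns needed to reach a multiple of the tile size
    padded = np.pad(image, ((0, pad_h), (0, pad_w), (0, 0)), mode="constant")
    out = np.zeros(padded.shape[:2], dtype=np.uint8)
    for r in range(0, padded.shape[0], tile):
        for c in range(0, padded.shape[1], tile):
            block = padded[r:r + tile, c:c + tile]
            out[r:r + tile, c:c + tile] = predict_block(block)
    return out[:h, :w]  # crop the padding away: back to the original extent


def dummy_predict(block):
    """Stand-in detector: bright pixels in the first band count as reef."""
    return np.where(block[..., 0] >= 200, 255, 0).astype(np.uint8)


# Random image with the 4259 x 1785 size of the larger Dongtou image.
rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(1785, 4259, 3), dtype=np.uint8)
mask = detect_large_image(image, dummy_predict)
print(mask.shape)
```

With this padding scheme, the 4259 × 1785 image is covered by 9 × 4 = 36 blocks, and cropping after stitching keeps the mask aligned pixel for pixel with the original image.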

Table 5 shows the quantitative evaluation results of six semantic segmentation algorithms for the artificial reef detection of the two large-scale multibeam images. In Table 5, the F1-score values of the six semantic segmentation algorithms are 0.5394, 0.7729, 0.7853, 0.8163, 0.8049 and 0.8974, respectively. The F1-score value of the AR-Net is the largest, indicating that the AR-Net has the highest accuracy in the artificial reef detection results for the large-scale multibeam sonar images. The IOU values of the six semantic segmentation algorithms are 0.3693, 0.6299, 0.6465, 0.6897, 0.6735 and 0.8139, respectively. The OAs of the six semantic segmentation algorithms are 0.9025, 0.9336, 0.9451, 0.9514, 0.9486 and 0.9693, respectively. The AR-Net has the largest OA, showing that the AR-Net has the best classification results for large-scale multibeam sonar images. The IOU of the AR-Net is the largest among the six semantic segmentation algorithms, which shows that the semantic segmentation results of the AR-Net are the most consistent with the annotation results of the ground truth for the large-scale multibeam sonar images. The experimental results show that the AR-Net is superior to the other five semantic segmentation algorithms and can obtain more accurate artificial reef detection results for large-scale multibeam sonar images.

Table 5. Performance comparisons of the six semantic segmentation algorithms for the large-scale multibeam images. The bold numbers represent the maximum value in each column.

5. Discussion

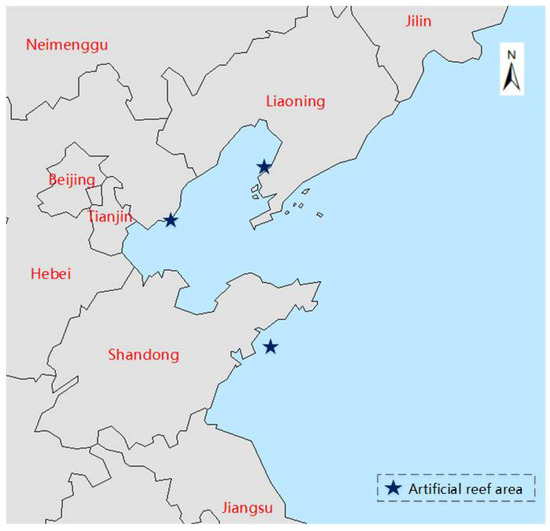

5.1. Visual Evaluation for the FIO-AR Dataset

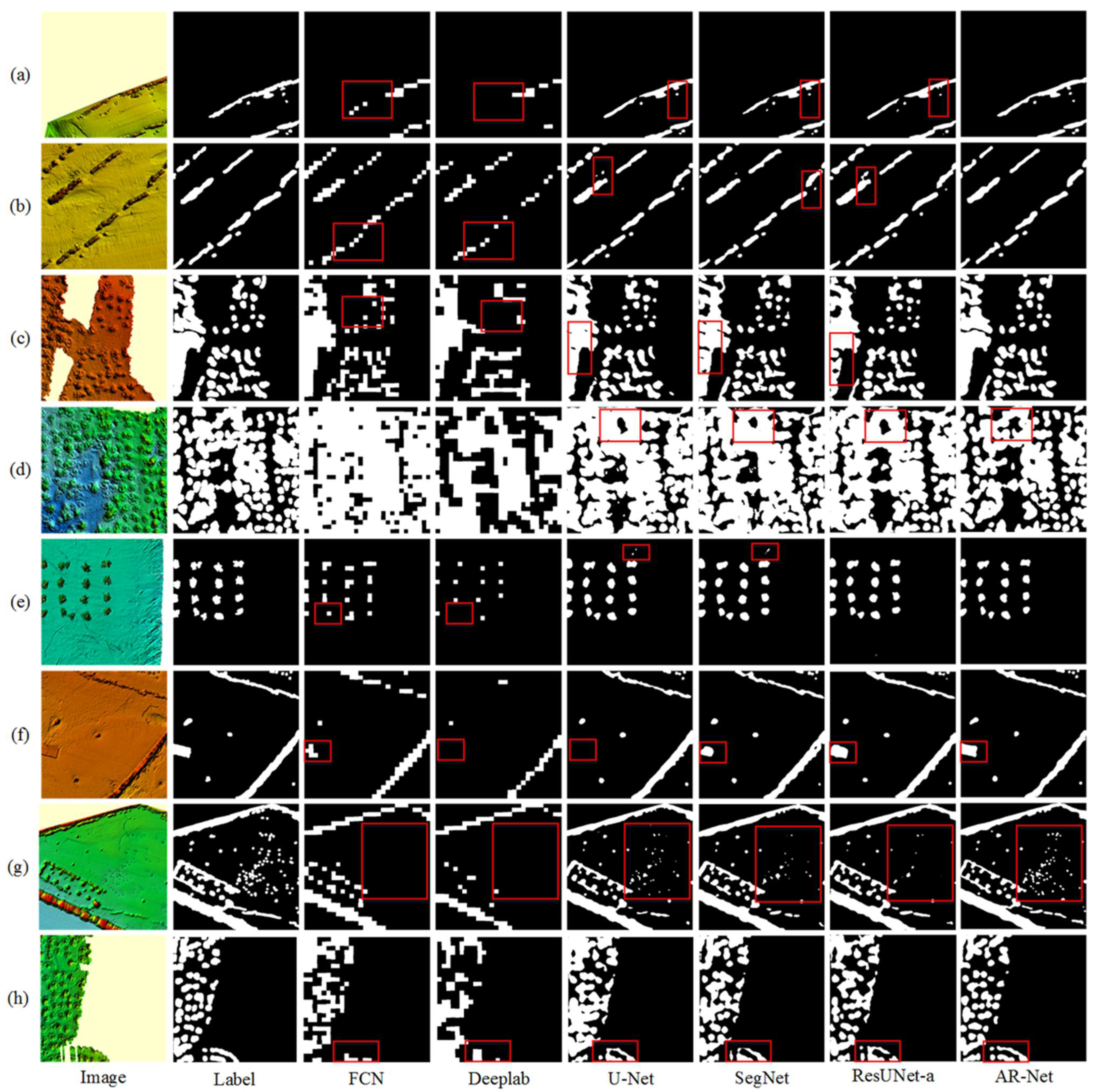

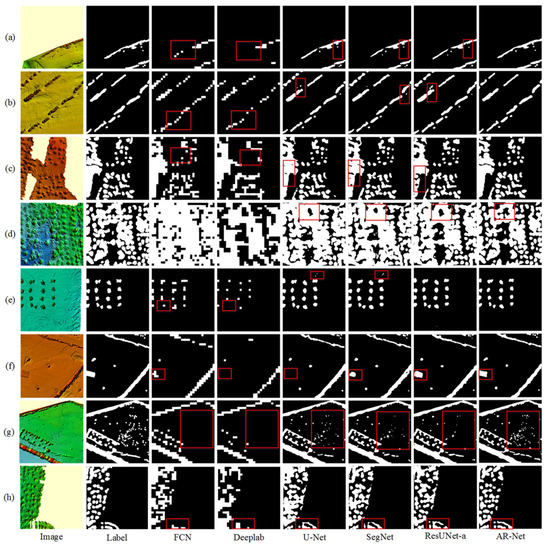

In this section, the AR-Net is compared with the other five semantic segmentation algorithms by using visual evaluation. Figure 5 shows some artificial reef detection result samples of the six semantic segmentation algorithms for the FIO-AR dataset. In Figure 5, the first two columns are the multibeam sonar images and ground-truth labels in the FIO-AR dataset, respectively. The next six columns are the artificial reef detection results of the FCN, Deeplab, U-Net, SegNet, ResUNet-a and AR-Net, respectively.

Figure 5. The artificial reef detection results of the six semantic segmentation algorithms for the multibeam sonar images in the FIO-AR dataset. (a–h) The first two columns are the multibeam sonar images and ground-truth labels in the FIO-AR dataset, respectively. The next six columns are the artificial reef detection results of the FCN, Deeplab, U-Net, SegNet, ResUNet-a and AR-Net, respectively. The red rectangles represent the key comparison areas of the algorithm results.

In Figure 5a, the FCN and Deeplab fail to detect the larger artificial reef areas in the red rectangle. The U-Net, SegNet and ResUNet-a cannot detect the smaller-scale artificial reef area in the red rectangle. The artificial reef detection results of the AR-Net are basically consistent with the ground-truth labels, and the AR-Net accurately detects the artificial reef areas in the multibeam sonar images. In Figure 5b, the FCN and Deeplab miss the artificial reef areas in the red rectangle. The U-Net, SegNet and ResUNet-a produce false detections for the artificial reef area in the red rectangle. The AR-Net can accurately detect artificial reef areas in the multibeam sonar images. In Figure 5c, the FCN, Deeplab, U-Net, SegNet and ResUNet-a have missed detections for the artificial reef areas in the red rectangle. The artificial reef detection results of the AR-Net are basically consistent with the ground-truth labels. In Figure 5d, the artificial reef detection results of the FCN and Deeplab are quite different from the ground-truth labels, and there are a large number of missed and false detections. For the artificial reef area in the red rectangle, the U-Net, SegNet and ResUNet-a have false detections, while the AR-Net can accurately detect the artificial reef in this area.

In Figure 5e, the FCN and Deeplab miss the artificial reefs in the red rectangles. The U-Net and SegNet mistakenly detect the gully areas in the red rectangles as artificial reefs. The ResUNet-a and AR-Net can accurately detect discrete artificial reefs in the multibeam sonar images. In Figure 5f, the FCN, Deeplab, U-Net, SegNet and ResUNet-a cannot correctly detect the artificial reef area in the red rectangle. However, the AR-Net can accurately detect the artificial reef area in the red rectangle, and its artificial reef detection results are basically consistent with the ground-truth labels. In Figure 5g, the FCN, Deeplab, U-Net, SegNet and ResUNet-a cannot accurately detect discrete artificial reef areas in the red rectangle. The AR-Net can accurately detect discrete artificial reef areas in the red rectangle, and its artificial reef detection results are the closest to the ground-truth labels. In Figure 5h, the FCN, Deeplab, U-Net, SegNet and ResUNet-a cannot accurately detect the artificial reef areas in the red rectangle. The AR-Net can accurately detect the artificial reef areas in the red rectangle, and its artificial reef detection results are most similar to the ground-truth labels.

The visual evaluation results show that the artificial reef detection results of the AR-Net are superior to those of the other five semantic segmentation algorithms for the FIO-AR dataset. The AR-Net framework can accurately detect artificial reef areas with different shapes in multibeam sonar images at different depths.

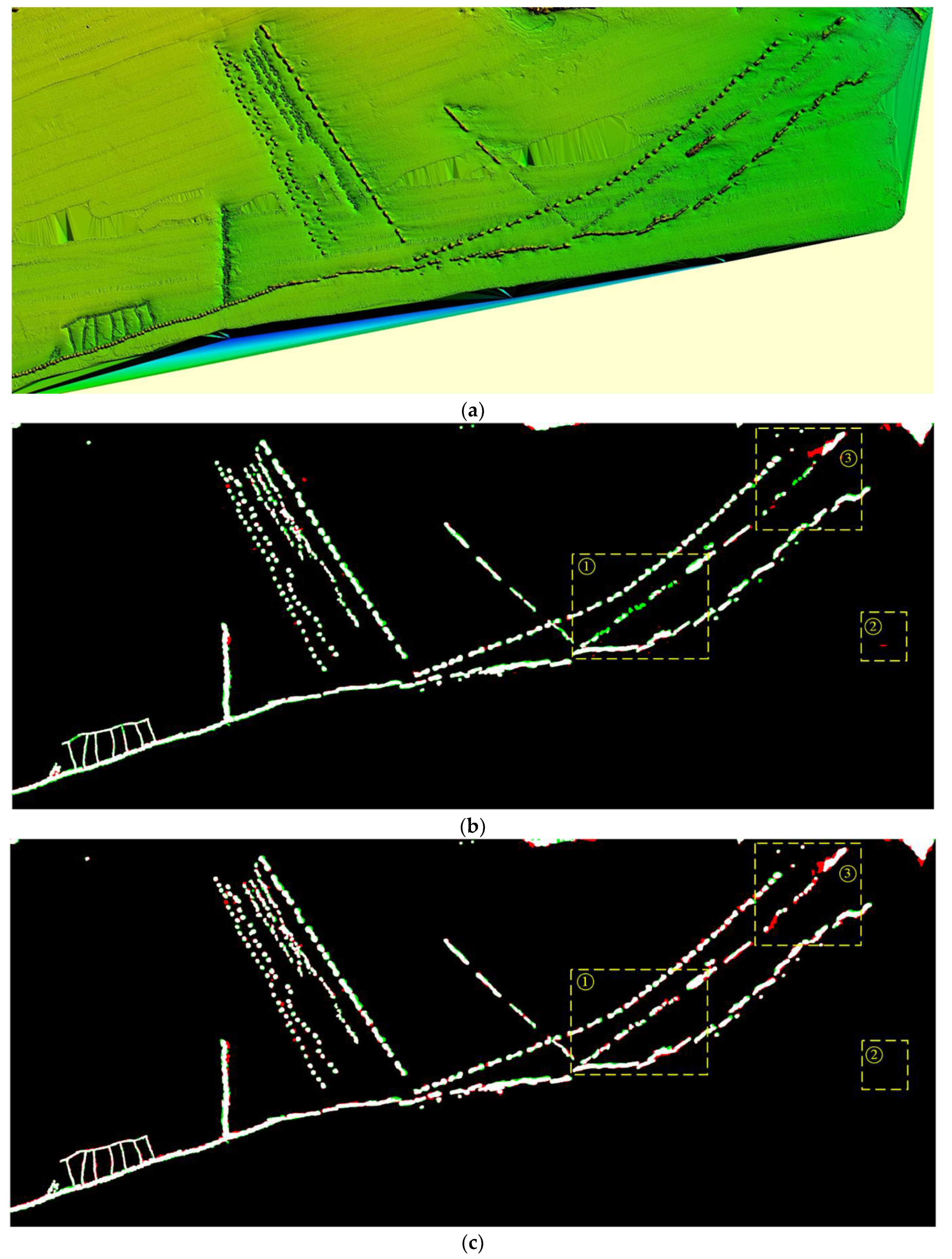

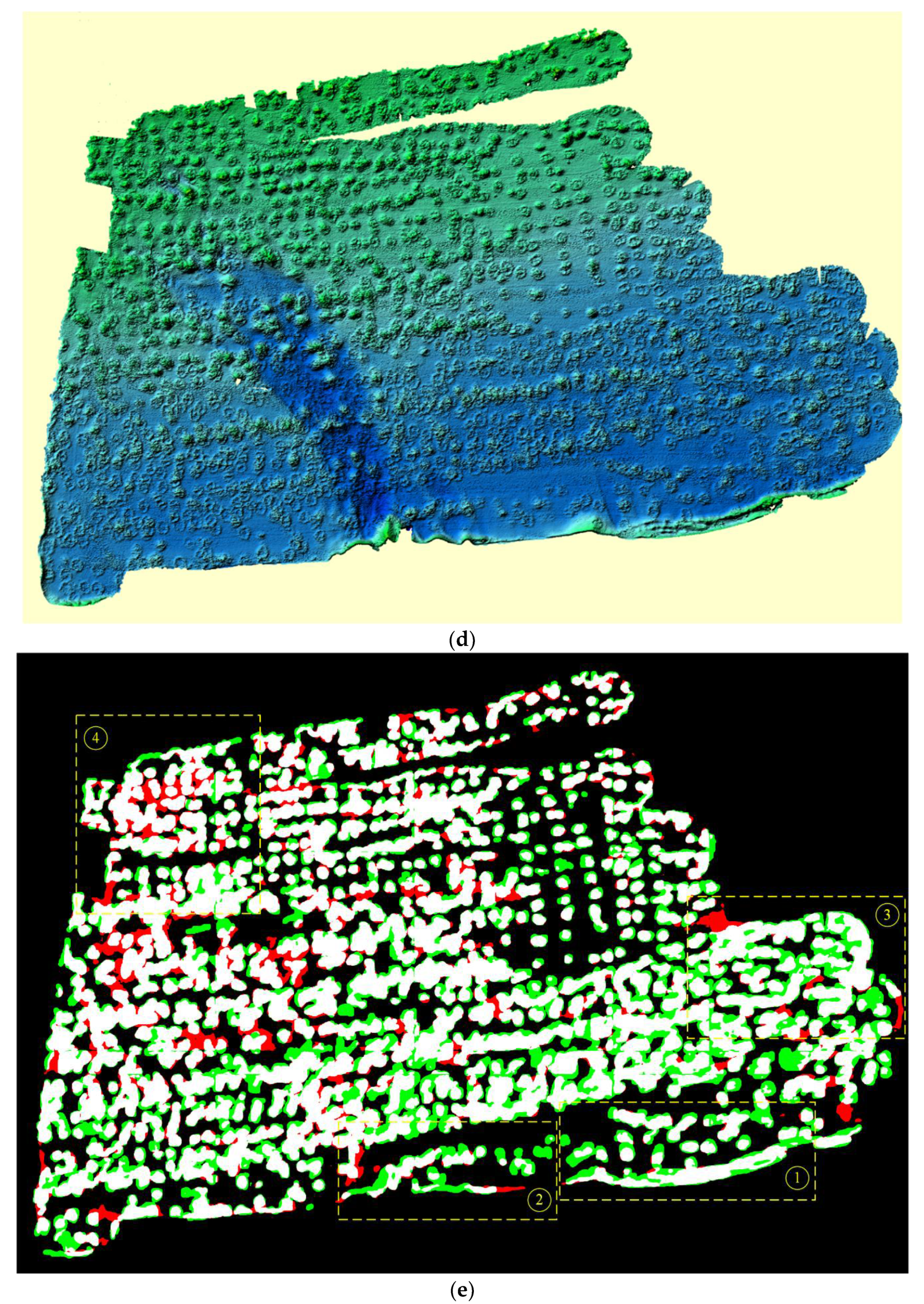

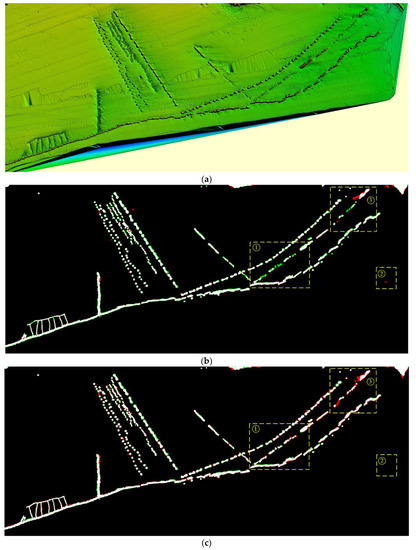

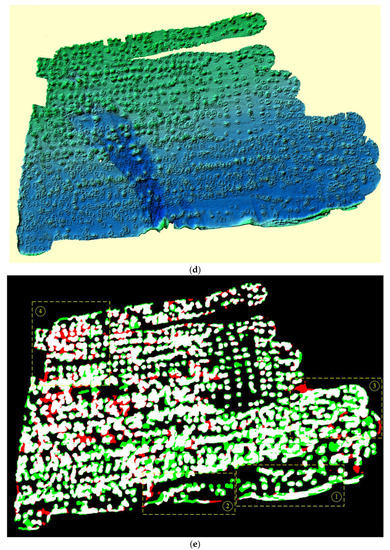

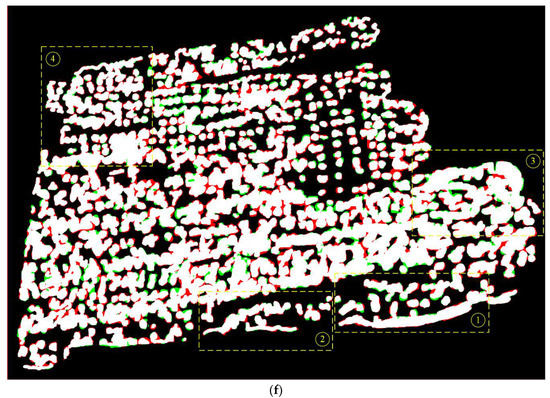

5.2. Visual Evaluation for the Large-Scale Multibeam Sonar Image

To verify the effectiveness of the AR-Net in detecting artificial reefs in actual large-scale multibeam sonar images, the artificial reef detection results for two large-scale multibeam sonar images in Dongtou are visually evaluated and discussed in this section. One large-scale multibeam sonar image covers an area of approximately 1.05 km² and has a size of 4259 × 1785 pixels. The other large-scale multibeam sonar image covers an area of approximately 0.47 km² and has a size of 2296 × 1597 pixels. In Table 5, the SegNet and AR-Net have the best artificial reef detection results for large-scale multibeam sonar images. Therefore, the detection results of the SegNet and AR-Net for the large-scale multibeam sonar images are visually compared and evaluated. Figure 6a,d are the two large-scale multibeam sonar images. Figure 6b,c are the artificial reef detection results of Figure 6a using the SegNet and AR-Net, respectively. Figure 6e,f are the artificial reef detection results of Figure 6d using the SegNet and AR-Net, respectively. In the artificial reef detection results, the white pixels, the red pixels, the green pixels and the black pixels represent the correctly detected artificial reef areas, the falsely detected artificial reef areas, the missed artificial reef areas and the background area, respectively.

Figure 6. (a,d) are the large-scale multibeam sonar images. (b,e) are the artificial reef detection results using SegNet. (c,f) are the artificial reef detection results using AR-Net. The yellow rectangles with the numbers represent the key comparison areas of the algorithm results.

In Figure 6b—area ①, the SegNet misses detecting the artificial reef areas with small height differences from the seafloor. In Figure 6c—area ①, the AR-Net can basically accurately detect the artificial reef area, except for a small amount of false detection in the artificial reef boundary area. In Figure 6b—area ②, the SegNet falsely detects an undulating seafloor area as an artificial reef. In Figure 6c—area ②, the AR-Net can accurately identify the seafloor area. In Figure 6b—area ③, the SegNet misses the detection of artificial reef areas with small height differences from the seafloor and falsely detects undulating seafloor areas as artificial reefs. In Figure 6c—area ③, the AR-Net falsely detects undulating seafloor areas as artificial reefs. From the overall comparative evaluation, the AR-Net is better than the SegNet for the detection results of artificial reefs in Figure 6a.

In area ① of Figure 6e, the SegNet misses a large number of artificial reef areas. In area ① of Figure 6f, the AR-Net detects the artificial reef area accurately, apart from a small number of false detections along the reef boundary. In area ② of Figure 6e, the SegNet produces a large number of missed and false detections in the artificial reef areas. In area ② of Figure 6f, the AR-Net accurately detects the artificial reef areas. In area ③ of Figure 6e, the SegNet again produces a large number of missed and false detections. In area ③ of Figure 6f, the AR-Net detects the artificial reef area accurately, apart from a small number of false detections along the reef boundary. In area ④ of Figure 6e, the SegNet result contains a large number of missed and false detections, whereas in area ④ of Figure 6f, the AR-Net accurately detects the artificial reefs. Overall, in Figure 6e the SegNet produces a large number of missed and false detections for densely distributed artificial reefs, while in Figure 6f the AR-Net detects densely distributed artificial reefs accurately, apart from a small number of missed and false detections at the reef boundaries.

The analysis results show that the AR-Net can accurately detect various types of artificial reefs from actual large-scale multibeam sonar images, except for a small number of false detections and missed detections in the boundary areas of artificial reefs. Therefore, the AR-Net can be effectively applied to artificial reef detection in actual large-scale multibeam sonar images.
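The missed and false detections discussed above correspond to the false negative (FN) and false positive (FP) pixel counts behind the F1-score and IOU. The following minimal sketch uses the standard pixel-wise definitions of these metrics, which are assumed here to match the ones used in the quantitative evaluation; the function name `segmentation_scores` and the toy masks are hypothetical.

```python
# Minimal sketch (assumed helper): pixel-wise precision, recall, F1-score and
# IOU for a binary reef mask, using the standard definitions
#   precision = TP / (TP + FP),  recall = TP / (TP + FN),
#   F1 = 2 * precision * recall / (precision + recall),
#   IOU = TP / (TP + FP + FN).


def segmentation_scores(pred, truth):
    """Compute scores from two equally sized 2D lists of 0/1 values."""
    tp = fp = fn = 0
    for p_row, t_row in zip(pred, truth):
        for p, t in zip(p_row, t_row):
            if p and t:
                tp += 1  # reef pixel correctly detected
            elif p and not t:
                fp += 1  # background pixel falsely detected as reef
            elif t and not p:
                fn += 1  # reef pixel missed
    # Guard against empty denominators (e.g. no reef pixels at all).
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if precision + recall
        else 0.0
    )
    iou = tp / (tp + fp + fn) if tp + fp + fn else 0.0
    return {"precision": precision, "recall": recall, "f1": f1, "iou": iou}


# Toy example: 3 true positives, 1 false positive, 1 false negative.
pred = [[1, 1, 0, 0], [1, 1, 0, 0]]
truth = [[1, 1, 1, 0], [1, 0, 0, 0]]
scores = segmentation_scores(pred, truth)
```

For the toy masks, precision and recall are both 3/4, so the F1-score is 0.75, and the IOU is 3/5 = 0.6. A single boundary error of each kind is enough to lower the IOU noticeably more than the F1-score.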

6. Conclusions

In this paper, an artificial reef semantic segmentation framework based on a CNN (AR-Net) is designed for artificial reef detection in multibeam sonar images. The AR-Net combines the symmetric network structure of the SegNet, the U-Net's fusion of high-level semantic feature information with low-level location feature information, and a residual module that optimizes the training parameters, in order to detect artificial reefs in multibeam sonar images. Furthermore, a large-scale multibeam sonar image artificial reef detection dataset, FIO-AR, was established and published for the first time to facilitate the development of artificial reef detection in multibeam sonar images. To verify the effectiveness of the AR-Net, the FIO-AR dataset and two large-scale multibeam sonar images were used to compare the AR-Net qualitatively and quantitatively with some state-of-the-art semantic segmentation algorithms. The experimental results show that the AR-Net outperforms the other state-of-the-art semantic segmentation algorithms and can accurately and efficiently detect artificial reef areas of different shapes in multibeam sonar images at different depths, except for a small number of false and missed detections in the boundary areas of artificial reefs. In future work, boundary constraints will be added to the semantic segmentation framework to eliminate the missed and false detections in the artificial reef boundary areas and further improve the accuracy of the artificial reef detection results.

Author Contributions

Methodology, software, writing—original draft, writing—review and editing, Z.D.; funding acquisition, supervision, writing—review and editing, L.Y.; writing—review and editing, Y.L. and Y.F.; data collection and annotation, J.D. and F.J. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Key Laboratory of Ocean Geomatics, Ministry of Natural Resources, China, under grant [No. 2021A01] and the Fundamental Research Funds for the Central Universities, under grant [No. 2042021kf1030].

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The FIO-AR dataset can be downloaded at https://pan.baidu.com/s/1i0VJ20zyar361wNvJudxQA (password: 1234).

Acknowledgments

The authors would like to thank the editors and anonymous reviewers for their valuable comments, which helped improve this paper.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| CNN | Convolutional Neural Networks |
| SSD | Single Shot Multibox Detector |
| YOLO | You Only Look Once |
| FCN | Fully Convolutional Networks |
| HSRIs | High Spatial Resolution Remote Sensing Images |
| R-CNN | Regional Convolutional Neural Networks |
| GAN | Generative Adversarial Network |
| AR-Net | Artificial Reefs Detection Framework based on Convolutional Neural Networks |
| MBES | Multibeam Echosounding |
| SGD | Stochastic Gradient Descent |
| IOU | Intersection-Over-Union |
| TP | True Positive |
| FP | False Positive |
| FN | False Negative |

References

1. Yang, H. Construction of marine ranching in China: Reviews and prospects. J. Fish. China 2016, 40, 1133–1140.

2. Yang, H.; Zhang, S.; Zhang, X.; Chen, P.; Tian, T.; Zhang, T. Strategic thinking on the construction of modern marine ranching in China. J. Fish. China 2019, 43, 1255–1262.

3. Zhou, X.; Zhao, X.; Zhang, S.; Lin, J. Marine ranching construction and management in East China Sea: Programs for sustainable fishery and aquaculture. Water 2019, 11, 1237.

4. Yu, J.; Zhang, L. Evolution of marine ranching policies in China: Review, performance and prospects. Sci. Total Environ. 2020, 737, 139782.

5. Qin, M.; Wang, X.; Du, Y.; Wan, X. Influencing factors of spatial variation of national marine ranching in China. Ocean Coast. Manag. 2021, 199, 105407.

6. Kang, M.; Nakamura, T.; Hamano, A. A methodology for acoustic and geospatial analysis of diverse artificial-reef datasets. ICES J. Mar. Sci. 2011, 68, 2210–2221.

7. Zhang, D.; Cui, Y.; Zhou, H.; Jin, C.; Zhang, C. Microplastic pollution in water, sediment, and fish from artificial reefs around the Ma’an Archipelago, Shengsi, China. Sci. Total Environ. 2020, 703, 134768.

8. Yu, J.; Wang, Y. Exploring the goals and objectives of policies for marine ranching management: Performance and prospects for China. Mar. Pol. 2020, 122, 104255.

9. Castro, K.L.; Battini, N.; Giachetti, C.B.; Trovant, B.; Abelando, M.; Basso, N.G.; Schwindt, E. Early detection of marine invasive species following the deployment of an artificial reef: Integrating tools to assist the decision-making process. J. Environ. Manag. 2021, 297, 113333.

10. Whitmarsh, S.K.; Barbara, G.M.; Brook, J.; Colella, D.; Fairweather, P.G.; Kildea, T.; Huveneers, C. No detrimental effects of desalination waste on temperate fish assemblages. ICES J. Mar. Sci. 2021, 78, 45–54.

11. Becker, A.; Taylor, M.D.; Lowry, M.B. Monitoring of reef associated and pelagic fish communities on Australia’s first purpose built offshore artificial reef. ICES J. Mar. Sci. 2016, 74, 277–285.

12. Lowry, M.; Folpp, H.; Gregson, M.; Suthers, I. Comparison of baited remote underwater video (BRUV) and underwater visual census (UVC) for assessment of artificial reefs in estuaries. J. Exp. Mar. Biol. Ecol. 2012, 416, 243–253.

13. Becker, A.; Taylor, M.D.; Mcleod, J.; Lowry, M.B. Application of a long-range camera to monitor fishing effort on an offshore artificial reef. Fish. Res. 2020, 228, 105589.

14. Trzcinska, K.; Tegowski, J.; Pocwiardowski, P.; Janowski, L.; Zdroik, J.; Kruss, A.; Rucinska, M.; Lubniewski, Z.; Schneider von Deimling, J. Measurement of seafloor acoustic backscatter angular dependence at 150 kHz using a multibeam echosounder. Remote Sens. 2021, 13, 4771.

15. Tassetti, A.N.; Malaspina, S.; Fabi, G. Using a multibeam echosounder to monitor an artificial reef. In Proceedings of the International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Piano di Sorrento, Italy, 16–17 April 2015.

16. Wan, J.; Qin, Z.; Cui, X.; Yang, F.; Yasir, M.; Ma, B.; Liu, X. MBES seabed sediment classification based on a decision fusion method using deep learning model. Remote Sens. 2022, 14, 3708.

17. He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial pyramid pooling in deep convolutional networks for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 37, 1904–1916.

18. Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision (CVPR), Boston, MA, USA, 8–12 June 2015; pp. 1440–1448.

19. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Region-based convolutional networks for accurate object detection and segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 142–158.

20. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE International Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 779–788.

21. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the IEEE International Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 21–37.

22. Dai, J.; Li, Y.; He, K.; Sun, J. R-FCN: Object detection via region based fully convolutional networks. In Proceedings of the Neural Information Processing Systems (NIPS), Barcelona, Spain, 4–9 December 2016; pp. 379–387.

23. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

24. Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE International Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6517–6525.

25. Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

26. Dong, Z.; Wang, M.; Wang, Y.; Liu, Y.; Feng, Y.; Xu, W. Multi-oriented object detection in high-resolution remote sensing imagery based on convolutional neural networks with adaptive object orientation features. Remote Sens. 2022, 14, 950.

27. Dong, Z.; Wang, M.; Wang, Y.; Zhu, Y.; Zhang, Z. Object detection in high resolution remote sensing imagery based on convolutional neural networks with suitable object scale features. IEEE Trans. Geosci. Remote Sens. 2020, 58, 2104–2114.

28. Xiong, H.; Liu, L.; Lu, Y. Artificial reef detection and recognition based on Faster-RCNN. In Proceedings of the IEEE 2nd International Conference on Information Technology, Big Data and Artificial Intelligence (ICIBA), Chongqing, China, 17–19 December 2021; Volume 2, pp. 1181–1184.

29. Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

30. Feldens, P.; Westfeld, P.; Valerius, J.; Feldens, A.; Papenmeier, S. Automatic detection of boulders by neural networks. In Hydrographische Nachrichten 119; Deutsche Hydrographische Gesellschaft E.V.: Rostock, Germany, 2021; pp. 6–17.

31. Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE International Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

32. Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-assisted Intervention, Munich, Germany, 5–9 October 2015; Springer: Cham, Switzerland, 2015; pp. 234–241.

33. Badrinarayanan, V.; Kendall, A.; Cipolla, R. Segnet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

34. Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. Semantic image segmentation with deep convolutional nets and fully connected CRFs. Comput. Sci. 2014, 357–361.

35. Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 834–848.

36. Chen, L.C.; Papandreou, G.; Schroff, F.; Adam, H. Rethinking atrous convolution for semantic image segmentation. arXiv 2017, arXiv:1706.05587.

37. Chen, L.C.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 801–818.

38. Han, L.; Li, X.; Dong, Y. Convolutional edge constraint-based U-Net for salient object detection. IEEE Access 2019, 7, 48890–48900.

39. Wang, J.; Liu, X. Medical image recognition and segmentation of pathological slices of gastric cancer based on Deeplab v3+ neural network. Comput. Met. Prog. Biomed. 2021, 207, 106210.

40. Bi, L.; Feng, D.; Kim, J. Dual-path adversarial learning for fully convolutional network (FCN)-based medical image segmentation. Vis. Comput. 2018, 34, 1043–1052.

41. Zhou, Z.; Siddiquee, M.M.R.; Tajbakhsh, N.; Liang, J. UNet++: A nested U-Net architecture for medical image segmentation. In Proceedings of the 4th Deep Learning in Medical Image Analysis (DLMIA) Workshop, Granada, Spain, 20 September 2018.

42. Guo, M.; Liu, H.; Xu, Y.; Huang, Y. Building extraction based on U-Net with an attention block and multiple losses. Remote Sens. 2020, 12, 1400.

43. Zhang, Z.; Liu, Q.; Wang, Y. Road extraction by deep residual U-Net. IEEE Geosci. Remote Sens. Lett. 2018, 15, 749–753.

44. Diakogiannis, F.I.; Waldner, F.; Caccetta, P.; Wu, C. ResUNet-a: A deep learning framework for semantic segmentation of remotely sensed data. ISPRS J. Photogramm. Remote Sens. 2020, 162, 94–114.

45. Lin, Y.; Xu, D.; Wang, N.; Shi, Z.; Chen, Q. Road extraction from very-high-resolution remote sensing images via a nested SE-Deeplab model. Remote Sens. 2020, 12, 2985.

46. Jiao, L.; Huo, L.; Hu, C.; Tang, P. Refined unet: Unet-based refinement network for cloud and shadow precise segmentation. Remote Sens. 2020, 12, 2001.

47. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

48. LeCun, Y.; Boser, B.; Denker, J.; Henderson, D.; Howard, R.; Hubbard, W.; Jackel, L. Backpropagation applied to handwritten zip code recognition. Neural Comput. 1989, 1, 541–551.

49. Dong, Z.; Liu, Y.; Xu, W.; Feng, Y.; Chen, Y.; Tang, Q. A cloud detection method for GaoFen-6 wide field of view imagery based on the spectrum and variance of superpixels. Int. J. Remote Sens. 2021, 42, 6315–6332.

50. He, S.; Jiang, W. Boundary-assisted learning for building extraction from optical remote sensing imagery. Remote Sens. 2021, 13, 760.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).