1. Introduction

Indoor positioning capability is regarded as an important part of smart city infrastructure. In complex and diversified urban indoor environments, providing autonomous and low-cost indoor location-based services has become an urgent task. Existing indoor positioning systems (IPS) based on Wi-Fi [1], BLE [2], UWB [3], acoustic sensors [4], and inertial sensors [5] provide indoor positioning with different levels of precision. Among these, the Wi-Fi positioning system (WPS) has proven to be an efficient approach for realizing universal localization in large-scale indoor spaces without installing additional facilities. It usually relies on crowdsourced spatiotemporal data mining applied to mobile sensor data collected during people’s daily lives [6].

At this stage, the performance of crowd-sensing-based WPS is limited by the poor accuracy of collected daily-life trajectory data, mainly due to the diversified handheld modes of smartphones [7], the cumulative error of built-in sensors [8], the difficulty of efficiently generating and updating crowdsourced navigation databases [9], and a lack of efficient combinations of different location sources with existing indoor maps or floorplans [10].

To address these problems, previous researchers have produced a number of meaningful works. Yan et al. [11] proposed RIDI, which provides robust smartphone-based walking speed estimation and localization under changeable handheld modes and achieves results comparable with traditional Visual Inertial Odometry (VIO). They further enhanced the accuracy and stability of inertial odometry by developing the RoNIN network, which achieves a significant improvement using a new dataset containing over 40 h of built-in sensor data contributed from different people’s daily lives [12]. Klein et al. [13] used a machine learning (ML) algorithm to detect the daily-life handheld modes of the pedestrian and proposed an adaptive gain value selection method to improve the accuracy of step-length estimation. To enhance the algorithm efficiency, only a single tester is needed for model training. Guo et al. [14] proposed a handheld mode awareness strategy for walking speed estimation under pedestrians’ complex motion modes using a machine learning-based classification approach. Comprehensive mobile sensor data were collected by different users for model training and for evaluating the accuracy of the proposed speed estimator, which effectively decreases the cumulative error originating from low-cost micro-electromechanical systems (MEMS) sensors.

For efficient crowdsourced navigation database generation, Yang et al. [9] used a transfer learning approach to improve the efficiency and accuracy of wireless database updates, which can autonomously recognize outlier features and search for a suitable mapping space between the generated database and the collected crowd-sensing data. Li et al. [15] proposed the RITA localization system, which models the rotation and translation of crowdsourced trajectories as optimization problems under the distance constraints of Wi-Fi APs and further applies a particle filter (PF) for robust multi-source fusion. Zhang et al. [16] proposed novel quality evaluation criteria for autonomously selecting eligible crowdsourced trajectories for the construction of the final navigation database, in which the motion modes, sensor biases, and time duration of each trajectory are adopted as the essential features. The final experimental results are comparable with those of the map-aimed approach.

Indoor map information is also essential for generating and updating the crowdsourced database with high accuracy. Wu et al. [17] proposed the HTrack system for more accurate map matching, which takes the pedestrian heading and geospatial data into consideration and can effectively reduce the computational complexity. Xia et al. [18] combined the models of pedestrian dead reckoning (PDR), BLE-based received signal strength indicator (RSSI) ranging, and map constraints using a unified PF. In addition, accessible and inaccessible spaces are defined to further enhance the positioning continuity and accuracy, and an RMSE of 1.48 m is finally achieved. Li et al. [19] proposed fingerprinting accuracy indicators that autonomously predict the accuracy of Wi-Fi and magnetic fingerprinting results by combining signal, indoor map, and database-based features, which effectively improves the performance of the final integrated localization using crowd-sensing data.

In addition, indoor floor detection plays an important part in enhancing the efficiency and accuracy of crowdsourced trajectory classification and database generation. Zhao et al. [20] developed the HYFI system, in which the distribution of local Wi-Fi APs is adopted to provide an initial floor estimate that is further combined with pressure information to decrease environmental effects; an overall accuracy of more than 96.1% is realized compared with a single source. Shao et al. [21] proposed an adaptive wireless floor detection algorithm for large-scale, multi-floor indoor areas by extracting Wi-Fi RSSI and spatial similarity features and dividing the local environments using a block model, which achieved an average accuracy of 97.24%.

To further enhance the performance of crowd-sensing-based database construction and multi-source fusion-based 3D indoor localization, this paper proposes the ML-CTEF structure. It uses a deep-learning framework for crowdsourced trajectory data modeling, accurate walking speed estimation, and floor detection, and applies indoor network information for trajectory matching and calibration during crowdsourced database generation. Finally, the error ellipse is adopted to enhance the performance of the traditional PF in the multi-source fusion phase. With the proposed ML-CTEF framework, sub-meter-level optimized indoor trajectories can be acquired to improve crowdsourced navigation database construction, and meter-level indoor positioning precision can be realized. The main contributions of this work are summarized as follows:

- (1) This paper proposes novel inertial odometry which contains the combination of a deep-learning-based walking speed estimator (DLSE) and the non-holonomic constraint, which takes the handheld modes, lateral error, and step-length constraint into consideration, and updates the location based on a period of observations instead of just considering the last moment.

- (2) A novel Bi-LSTM-based floor detection algorithm is applied to provide floor indexes reference for crowdsourced trajectories by extracting the hybrid wireless and sensor-related features to enhance the recognition precision and further improve the efficiency of crowdsourced database generation.

- (3) This paper simplifies the indoor network and represents it in the form of a matrix and proposes the grid search approach for crowdsourced trajectory matching and calibration. The calibrated trajectories effectively improve the precision of crowdsourced database generation.

- (4) Based on the results of walking speed estimation, floor detection, and crowdsourced database generation, an error ellipse-assisted particle filter (EE-PF) is proposed for the robust integration of Wi-Fi fingerprinting, inertial sensors data, and indoor map information, and meter-level positioning accuracy can be realized.

The remainder of this article is organized as follows. Section 2 presents the related work. Section 3 introduces the deep-learning-based speed estimator and inertial odometry. Section 4 presents Bi-LSTM-based floor detection, crowdsourced trajectory matching and calibration, and error ellipse-assisted particle filter-based multi-source fusion. Section 5 describes the experimental results of the proposed ML-CTEF. Finally, Section 6 concludes this article.

2. Related Work

Crowdsourced wireless positioning has proven to be an effective and labor-saving approach for providing indoor location-based services in large-scale indoor spaces, since it can autonomously generate an indoor navigation database from the massive daily-life trajectories provided by the public. Normally, crowdsourced wireless positioning systems follow one of two main approaches: map-assisted positioning algorithms and non-map-assisted positioning algorithms.

Zee [22] and UnLoc [23] are two early crowdsourced indoor localization systems, which adopt indoor map information and deployed landmark points to provide absolute locations for pedestrians indoors. LIFS [24] constructs the indoor navigation database from modeled PDR-originated trajectories and indoor floor plans with little manual effort. Li et al. [19] use geospatial big data technology to collect massive indoor pedestrian trajectories with an enhanced PDR algorithm and propose a precision indicator for different location sources, which effectively increases indoor positioning performance during the multi-source fusion phase. FineLoc [25] further applies BLE nodes in the generation and merging of crowdsourced trajectories. Its disadvantages are that prior location information of the BLE nodes is required to obtain the absolute position of the pedestrian, and that the accuracy of BLE-node landmark detection decreases in open indoor areas.

The non-map-assisted indoor positioning algorithm aims at generating a navigation database without the help of indoor map information and deployed local facilities. Walkie-Markie [26] is a classical crowdsourced indoor mapping and positioning system, which models the RSSI vectors acquired from local Wi-Fi access points (APs) as signal-marks, and the locations of the APs are defined as the nearest distance of RSSI ranging results. PiLoc [27] clusters crowdsourced indoor trajectories using the combination of Wi-Fi RSSI similarity detection results and the shape of each collected trajectory. The disadvantage of the Walkie-Markie and PiLoc systems is that they require high-precision heading information from the collected crowdsourced trajectories, which is not always available in real-world indoor scenes with complex interference. Li et al. [8] realize crowdsourced trajectory merging using a loop closure detection algorithm based on acquired Wi-Fi RSSI similarity information. To improve the algorithm efficiency, they further propose the Wi-Fi-RITA system [15], which applies rotation and scale parameters as the optimization model for trace merging and is more efficient when merging a large number of crowdsourced trajectories.

In addition, multi-source fusion-based indoor positioning also provides an effective method for enhancing the accuracy and adaptability of large-scale indoor location-based services (iLBS), in which the MEMS sensor-based localization approach is usually applied as the basic positioning model [28], and different technologies are used for indoor navigation, including Wi-Fi [1], Bluetooth [2], computer cameras [29,30], and so on. The different indoor location sources are integrated by the classical Kalman filter (KF), including the extended Kalman filter (EKF) and unscented Kalman filter (UKF), or by the particle filter (PF). However, the traditional KF and PF only provide fixed weights for the different location sources, which decreases the localization performance in complex indoor environments.

Lee and Kim [31] propose a hybrid marker-based indoor positioning system (HMIPS), which uses quick response markers and augmented reality to enhance the localization ability in large-scale indoor areas. The Viterbi tracking algorithm combines image information and inertial sensor data to correct the cumulative error originating from the single sensor-based approach. Wang et al. [32] propose a tightly coupled multi-source fusion structure combining UWB, floorplans, and MEMS sensors. The inertial navigation system (INS) mechanization is applied to eliminate the non-line-of-sight (NLOS) effect of UWB-ranging measurements, and a map line segment matching algorithm is applied to further eliminate abnormal observations. Wang et al. [33] develop a novel heading estimation algorithm that can be applied under complex smartphone handheld modes and propose an online trajectory calibration method using a magnetic fingerprinting-based matching approach, which realizes meter-level accuracy in the tested indoor environments.

Different from existing crowdsourced indoor positioning and multi-source fusion algorithms, the proposed ML-CTEF uses a deep-learning framework for crowdsourced trajectory data modeling, accurate walking speed estimation, and floor detection, and needs only indoor network information for trajectory matching and calibration to generate the final navigation database. In addition, the error ellipse is applied in the traditional PF algorithm to increase the multi-source fusion accuracy.

3. Deep-Learning Based Speed Estimator and Inertial Odometry

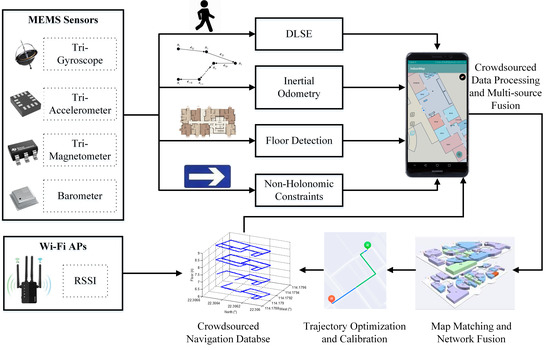

This work proposes the ML-CTEF framework, which combines inertial odometry with multi-level observations and can autonomously generate a crowdsourced navigation database and realize accurate multi-source fusion. First, the sensor data acquired from the tri-gyroscope, tri-accelerometer, tri-magnetometer, and barometer are integrated by the proposed inertial odometry to obtain the raw 3D position, velocity, and attitude information. Next, the deep-learning-based speed estimator (DLSE), the Bi-LSTM-based floor detection, and the non-holonomic constraints are modeled as the multi-level observations and integrated with inertial odometry using EE-PF. In addition, map-matching and network fusion algorithms are proposed to optimize and calibrate the crowdsourced trajectories and generate an accurate navigation database, which is further applied in the online multi-source fusion phase. The overall description of the proposed ML-CTEF framework is shown in Figure 1. This section proposes a novel deep-learning-based speed estimator for pedestrian walking speed estimation under complex handheld modes. The estimated walking speed is further combined with the pedestrian dead reckoning (PDR) mechanization and magnetic observation to form the novel inertial odometry for the robust reconstruction of crowdsourced indoor trajectories.

3.1. Deep-Learning Based Speed Estimator

For mobile terminal-based pedestrian indoor localization, INS and PDR mechanizations are regarded as two effective approaches for realizing inertial odometry. However, the accuracy of traditional INS/PDR mechanizations is limited by the diversified handheld modes of the terminals and the cumulative error of inertial sensors. In addition, the location updates provided by the INS/PDR mechanizations are always based only on the previous moment, which may miss useful motion information during the selected walking period.

To solve the problems of traditional dead reckoning, this work proposes a novel deep-learning-based speed estimator (DLSE) that provides an accurate reference for PDR mechanization. To recognize the changeable handheld modes of mobile terminals, a 1D-CNN model is applied to detect the different handheld modes from a period of acceleration and angular velocity data and the corresponding extracted features. Afterward, a Bi-LSTM network predicts the pedestrian’s continuous walking speed, also using a period of acceleration and angular velocity data and the corresponding extracted features. The overall structure of the proposed deep-learning-based speed estimator is shown in Figure 2.

Figure 2 describes the main structure of the proposed deep-learning-based speed estimator. The 1D-CNN is applied as the first part for handheld mode detection. The recognized handheld modes and extracted features are then fed into the Bi-LSTM network for the accurate estimation of the pedestrian’s walking speed-related features, and a fully connected layer is finally adopted as the output layer, which provides the real-time estimated walking speed.

To realize handheld mode detection and the corresponding walking speed estimation, the smoothed data collected from the accelerometer and gyroscope are first used as the input features of the 1D-CNN for handheld mode detection. The detected results are then applied as an enhanced input feature for training the corresponding Bi-LSTM network, which estimates the final speed under different handheld modes. To improve the final performance of speed estimation, a 3 s window of data sampled at 50 Hz is applied as the input vector.
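The 3 s input window at 50 Hz corresponds to 150 samples per window. The following minimal sketch shows one way such windows could be cut from a continuous sensor stream. The function name `sliding_windows`, the 1 s hop between windows, and the dummy six-axis samples are illustrative assumptions and are not taken from the paper.

```python
def sliding_windows(samples, rate_hz=50, window_s=3.0, step_s=1.0):
    """Split a list of sensor samples into fixed-length, overlapping windows.

    rate_hz and window_s follow the text (50 Hz, 3 s); step_s is an assumed hop.
    """
    size = int(round(rate_hz * window_s))  # 150 samples for 3 s at 50 Hz
    step = int(round(rate_hz * step_s))    # 50 samples between window starts
    return [samples[i:i + size]
            for i in range(0, len(samples) - size + 1, step)]


# 10 s of dummy six-axis samples (ax, ay, az, gx, gy, gz) at 50 Hz
stream = [(0.0, 0.0, 9.8, 0.0, 0.0, 0.0)] * 500
windows = sliding_windows(stream)
print(len(windows), len(windows[0]))
```

Overlapping windows give one prediction per hop while each prediction still sees a full 3 s of motion; a non-overlapping layout is obtained by setting `step_s` equal to `window_s`.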

In the convolution layer, the relationship between the input value and the output value is described as:

$$
\mathbf{y} = f\left(\mathbf{W} * \mathbf{x} + \mathbf{b}\right)
$$

where $\mathbf{x}$ indicates the input vector, $\mathbf{W}$ indicates the kernel weights, $\mathbf{b}$ indicates the biases, $f(\cdot)$ represents the activation function, and $\mathbf{y}$ is the output vector of the convolution layer.
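To make the convolution-layer relationship concrete, the sketch below applies a single one-dimensional kernel to a short input sequence and passes the result through an activation function. It is a single-channel, pure-Python illustration; the ReLU activation, the kernel values, and the names `conv1d` and `relu` are assumptions chosen for the example, not details reported by the authors.

```python
def relu(v):
    """Rectified linear unit, used here as an assumed activation function."""
    return v if v > 0.0 else 0.0


def conv1d(x, w, b, activation=relu):
    """Valid-mode 1D convolution of input x with kernel w and scalar bias b.

    Implemented as cross-correlation, which is the convention used by most
    deep-learning libraries.
    """
    k = len(w)
    return [activation(sum(w[j] * x[i + j] for j in range(k)) + b)
            for i in range(len(x) - k + 1)]


x = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0]  # toy input sequence
w = [0.2] * 5                            # kernel of size 5 (averaging kernel)
y = conv1d(x, w, b=-1.0)
print(y)
```

With an input of length 7 and a kernel of size 5, the valid-mode output has 7 - 5 + 1 = 3 elements.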

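In the Bi-LSTM layer, the update model of Bi-LSTM parameters is described as [34]:

$$
\begin{aligned}
i_t &= \sigma\left(W_i x_t + U_i h_{t-1} + b_i\right) \\
f_t &= \sigma\left(W_f x_t + U_f h_{t-1} + b_f\right) \\
o_t &= \sigma\left(W_o x_t + U_o h_{t-1} + b_o\right) \\
\tilde{c}_t &= \tanh\left(W_c x_t + U_c h_{t-1} + b_c\right) \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t \\
h_t &= o_t \odot \tanh\left(c_t\right)
\end{aligned}
$$

where $i_t$, $f_t$, and $o_t$ represent the input, forget and output units, $x_t$ indicates the input vector of the Bi-LSTM model at the timestamp $t$, and $h_t$ represents the hidden state vector, which is regarded as the output of the Bi-LSTM model at that moment. $\sigma$ indicates the sigmoid function, and $\tilde{c}_t$ is the candidate vector which is combined with the output vector as the memorized state $c_t$ at timestamp $t$. $W$, $U$, and $b$ denote the corresponding weight matrices and biases.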

Finally, the output layer of the Bi-LSTM units is modeled as the input vector of a fully connected network function MLP(), and the predicted uncertainty error is presented as:

We adopt the walking speed as the expected output value during the training phase of the deep-learning-based speed estimator framework. The initial predicted walking speed does not contain the absolute coordinate reference, and we can just regard it as the forward speed, which is described as:

where

is calculated by a step-length-based method.

In order to get the pedestrian’s forward speed, the estimated walking speed

should be transformed based on the results of the handheld mode recognition. The forward speed in the navigation coordinate system is calculated as [

7]:

where

is the converted NHC-based speed,

represents the calculated attitude matrix from the carrier coordinate system to the ENU coordinate system;

indicates the handheld mode-related translation matrix, which converts the heading-related axis into the reading mode-based heading-related axis based on the results of handheld mode recognition.

indicates the translation matrix from the ENU coordinate system to the NED coordinate system.

The final estimated location of the pedestrian is the combination of both the heading and walking speed information:

where

indicates the converted walking speed information estimated during one detected step period, which has been transferred into the navigation coordinate system, and the real-time location

is updated based on the previous result.
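The location update described above, in which the previous position is advanced using the heading and the converted walking speed, can be illustrated with a simple planar dead-reckoning step. This is a minimal sketch under stated assumptions: the heading is measured clockwise from north, positions are (east, north) pairs in meters, and the function name `dr_update` and the sample values are hypothetical.

```python
import math


def dr_update(pos, speed, heading_rad, dt):
    """Advance an (east, north) position by speed * dt along the heading.

    The heading is assumed to be measured clockwise from north.
    """
    east, north = pos
    return (east + speed * dt * math.sin(heading_rad),
            north + speed * dt * math.cos(heading_rad))


pos = (0.0, 0.0)
# (speed in m/s, heading in degrees, duration in s) for three walking periods
for speed, heading_deg, dt in [(1.2, 0.0, 0.5), (1.2, 90.0, 0.5), (1.0, 90.0, 1.0)]:
    pos = dr_update(pos, speed, math.radians(heading_deg), dt)
print(pos)
```

Because each update builds on the previous result, any error in speed or heading accumulates over time, which is why the additional observations and constraints discussed in this work are needed.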

3.2. Integrated Model of Inertial Odometry

In a complex indoor environment, the initial location of pedestrians is usually unknown due to the lack of absolute reference. To model the real-time three-dimensional (3D) trajectory of pedestrians indoors, the state vector of the trajectory estimator is constructed as follows:

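$$
\mathbf{X} = \begin{bmatrix} x & y & z & \theta & b_{\theta} \end{bmatrix}^{T}
$$

where $x$, $y$, and $z$ indicate the real-time estimated 3D position, $\theta$ is the corrected heading information, and $b_{\theta}$ represents the heading bias caused by the random walking error of the gyroscope. The state update equation is described as: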

where the heading bias

is modeled as the first-order Markov process,

indicates the estimated time period of each gait,

Tc indicates the correlation time, and

is the estimated gait-length information provided in [

7].
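The first-order Markov model used for the heading bias can be sketched in its common discrete-time form, in which the bias decays with the correlation time and is driven by white noise. The discretization below, the steady-state standard deviation `sigma`, and the numerical values are assumptions for illustration; the paper's exact noise parameters are not given in this section.

```python
import math
import random


def propagate_bias(bias, dt, tc, sigma, rng):
    """One step of a first-order Gauss-Markov process.

    bias  : current heading bias (rad)
    dt    : time period of the current gait (s)
    tc    : correlation time (s)
    sigma : assumed steady-state standard deviation of the bias (rad)
    """
    phi = math.exp(-dt / tc)                # decay factor over one gait
    q = sigma * math.sqrt(1.0 - phi * phi)  # keeps the steady-state std at sigma
    return phi * bias + rng.gauss(0.0, q)


rng = random.Random(0)  # fixed seed so the sketch is repeatable
bias = 0.05
history = []
for _ in range(5):
    bias = propagate_bias(bias, dt=0.5, tc=100.0, sigma=0.01, rng=rng)
    history.append(bias)
print(history)
```

A long correlation time makes the bias change slowly between gaits, so it can be estimated and removed by the filter rather than treated as white noise.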

Considering the interference caused by the indoor artificial magnetic field, the heading difference during the straightforward motion mode under quasi-static magnetic field (QSMF) periods [

35] is extracted as the pseudo-observation to constrain the heading divergence error:

where

and

represent the reference heading acquired from the first epoch of the recognized QSMF period under the straightforward walking modes and other epochs, and

indicates the Gaussian noise.

The observation model aiming at the deep-learning model-estimated velocity under the navigation coordinate is modeled as the observation value:

where

indicates the walking speed calculated in (11);

is the deep-learning-based speed estimation results. The observation equation for location increment observation in the

n-frame can also be given by:

where

indicates the location updated by the state value, and

indicates the deep-learning model-based location update results.

4. Deep-Learning Based Database Generation and Intelligent Integration

In order to improve the efficiency and accuracy of crowdsourced Wi-Fi fingerprinting database generation and multi-source fusion, a deep-learning framework is proposed for autonomous floor detection, crowdsourced trajectories matching and calibration, and Bi-LSTM-based Wi-Fi and MEMS sensor integration.

4.1. Bi-LSTM Based Floor Detection

In order to fully use all the observations acquired from the crowdsourced navigation data and obtain the complementary effects of the observation sources, in this work, a time-continuous method of floor detection using the Bi-LSTM network is applied by taking a period of crowdsourced data into consideration to improve the final accuracy of floor detection. The extracted features include the combination of Wi-Fi, barometer, and magnetic sources, which are constructed as the input vector of Bi-LSTM:

- (1) The most representative collected RSSI values: To cover the wireless characteristics of a specific indoor floor, the RSSI vector collected from several of the most characteristic local Wi-Fi APs is used as an input during the training phase of Bi-LSTM:

where

is the scanned RSSI value of the specific Wi-Fi AP, and

is the threshold for the RSSI filter.

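- (2) Average RSSI index of the representative collected RSSI vector: To describe the universal feature of the RSSI vector, the average signal strength is also calculated as one of the input values of the proposed Bi-LSTM:

$$
\overline{RSSI} = \frac{1}{N}\sum_{i=1}^{N} RSSI_i
$$

where $\overline{RSSI}$ represents the estimated average RSSI index, $RSSI_i$ is the $i$-th element of the representative RSSI vector, and $N$ is its length.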

- (3) Differences of the representative collected RSSI vector: The real-time difference of the scanned RSSI vector can effectively present the changing characteristics of the environment:

where

indicates the RSSI difference index.

- (4) Norm of collected local magnetic vector:

$$
\left\| \mathbf{m} \right\| = \sqrt{m_x^2 + m_y^2 + m_z^2}
$$

where $m_x$, $m_y$, and $m_z$ indicate the real-time collected local magnetic field data.

- (5) Barometric pressure at specific floors at different time periods of the same day:

where

,

, and

indicate the real-time collected barometer data during different time periods of the same day. Because of the effects of wind, humidity, and fine dust indoors, the measured pressure may vary even within a single day. Thus, pressure data from three different time periods are collected to avoid the time deviation.

- (6) Barometric pressure difference index:

$$
\Delta P_k = P_k - P_{k-1}
$$

where $P_k$ and $P_{k-1}$ are the acquired pressure data at two adjacent timestamps. The barometric pressure difference index can effectively indicate a floor change while the pedestrian is walking.
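The feature list above can be summarized with a small sketch that computes several of the listed quantities for one epoch: the thresholded RSSI vector, its average, the per-AP RSSI change, the magnetic norm, and the pressure difference. The function name `floor_features`, the -85 dBm threshold, the per-AP form of the RSSI difference, and all sample values are illustrative assumptions rather than the authors' settings.

```python
import math


def floor_features(rssi_now, rssi_prev, mag, p_now, p_prev, rssi_threshold=-85.0):
    """Build one feature dictionary for a single epoch.

    rssi_now, rssi_prev : dicts mapping AP identifier to RSSI in dBm
    mag                 : (mx, my, mz) magnetic field sample
    p_now, p_prev       : barometric pressure at two adjacent timestamps (hPa)
    rssi_threshold      : assumed cut-off below which an AP is ignored
    """
    strong = {ap: v for ap, v in rssi_now.items() if v > rssi_threshold}
    mean_rssi = sum(strong.values()) / len(strong) if strong else None
    # Change of RSSI between two scans, for APs seen in both scans (assumed form)
    rssi_diff = {ap: v - rssi_prev[ap] for ap, v in strong.items() if ap in rssi_prev}
    mag_norm = math.sqrt(sum(c * c for c in mag))
    return {
        "rssi": strong,
        "mean_rssi": mean_rssi,
        "rssi_diff": rssi_diff,
        "mag_norm": mag_norm,
        "pressure_diff": p_now - p_prev,
    }


features = floor_features(
    rssi_now={"ap1": -60.0, "ap2": -70.0, "ap3": -95.0},
    rssi_prev={"ap1": -62.0, "ap2": -65.0},
    mag=(3.0, 4.0, 12.0),
    p_now=1012.5,
    p_prev=1012.9,
)
print(features)
```

A sequence of such dictionaries, flattened into fixed-order vectors, would form the time-continuous input that a recurrent model such as Bi-LSTM expects.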

4.2. Crowdsourced Trajectory Matching and Calibration

In this work, the indoor pedestrian network extracted from each floor is represented in the form of a matrix. Each intersection point is marked as an element of the matrix, which contains the heading and length information between every two intersection points. The extracted indoor network and the corresponding network matrix are described in Figure 3.

In Figure 3, the overall indoor pedestrian network is divided into a combination of straight lines and intersection points. Each straight line has two features, heading and length, which are applied as the feature-matching parameters for comparison with the real-time collected trajectories. In the generated network matrix, two adjacent intersection points are marked as 1, and disconnected intersection points are marked as 0:

The dimension of the constructed network matrix equals the number of intersection points in the extracted indoor network. For every two adjacent points, the detailed features contain the heading and length of the pedestrian’s straight forward walking period, which can be described as:

where

and

indicate the calculated heading and overall length information of each two adjacent intersection points when the pedestrian walks straight forward from one point to the other point.
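The network matrix and the per-segment heading and length features can be sketched as follows. The example builds a 0/1 adjacency matrix over intersection points and stores a heading and a length for each walking direction along a segment. The coordinates, the heading convention (degrees clockwise from the y-axis), and the name `build_network` are assumptions made for the example.

```python
import math


def build_network(points, edges):
    """Build the adjacency matrix and segment features of an indoor network.

    points : list of (x, y) intersection coordinates in meters
    edges  : list of (i, j) index pairs for directly connected intersections
    Returns (adj, feat), where adj[i][j] is 1 for adjacent points and 0
    otherwise, and feat[(i, j)] is (heading_deg, length) when walking i -> j.
    """
    n = len(points)
    adj = [[0] * n for _ in range(n)]
    feat = {}
    for i, j in edges:
        for a, b in ((i, j), (j, i)):  # store both walking directions
            dx = points[b][0] - points[a][0]
            dy = points[b][1] - points[a][1]
            adj[a][b] = 1
            heading = math.degrees(math.atan2(dx, dy)) % 360.0
            feat[(a, b)] = (heading, math.hypot(dx, dy))
    return adj, feat


points = [(0.0, 0.0), (0.0, 10.0), (5.0, 10.0)]
adj, feat = build_network(points, [(0, 1), (1, 2)])
print(adj)
print(feat[(0, 1)], feat[(1, 2)])
```

Storing both directions keeps the lookup simple when a trajectory traverses a corridor in either direction, at the cost of duplicating the length value.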

In this work, the grid search approach is proposed for realizing trajectory matching and calibration between collected crowdsourced trajectories and the extracted indoor pedestrian network using the constructed network matrix information, which is described as follows:

- (1) Turning points detection: In the case of complex pedestrian handheld modes, the proposed hybrid deep-learning model is applied to classify the four different handheld modes and find the forward axis during the pedestrian’s walking procedure. The turning points are further extracted using a peak detection algorithm based on the smoothed angular velocity data, similar to the step detection approach [7];

- (2) Trajectory selection: Crowdsourced trajectories with more than three turning points are selected for comparison purposes, which can effectively decrease the false matching rate;

- (3) Trajectory matching: To match the trajectories with the existing indoor network, the correlation coefficient index and the dynamic time warping (DTW) index are applied to find similar trajectories using the information of the detected turning points in each trajectory:

where

presents the cumulative distance between two turning point distributions,

indicates the Euclidean distance between each two points of distribution.

where

and

indicate the results of the correlation coefficient on the

x- and

y-axis, respectively.

- (4) Crowdsourced trajectory calibration: After the map-matching phase, the matched turning points on the existing indoor network are applied as the absolute reference for the smoothing procedure of the trajectory segmentations:

where

and

represent the state value and the measured value, which are represented in Equation (8).
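The two similarity indexes used in the matching step can be illustrated with textbook implementations: a cumulative DTW distance with Euclidean point-to-point cost, and a Pearson correlation coefficient computed separately on the x- and y-coordinates of the turning points. This is a generic sketch; the turning-point coordinates are invented, and the zero fallback for constant sequences is an assumed convention.

```python
import math


def dtw_distance(a, b):
    """Cumulative DTW distance between two sequences of (x, y) points."""
    inf = float("inf")
    d = [[inf] * (len(b) + 1) for _ in range(len(a) + 1)]
    d[0][0] = 0.0
    for i in range(1, len(a) + 1):
        for j in range(1, len(b) + 1):
            cost = math.dist(a[i - 1], b[j - 1])  # Euclidean distance
            d[i][j] = cost + min(d[i - 1][j], d[i][j - 1], d[i - 1][j - 1])
    return d[len(a)][len(b)]


def pearson(u, v):
    """Pearson correlation coefficient of two equal-length sequences."""
    mu, mv = sum(u) / len(u), sum(v) / len(v)
    num = sum((p - mu) * (q - mv) for p, q in zip(u, v))
    den = math.sqrt(sum((p - mu) ** 2 for p in u) * sum((q - mv) ** 2 for q in v))
    return num / den if den else 0.0  # assumed fallback for constant input


# Turning points of a collected trajectory and of a candidate network path
traj = [(0.0, 0.0), (0.0, 10.0), (5.0, 10.0), (5.0, 0.0)]
ref = [(1.0, 0.0), (1.0, 10.0), (6.0, 10.0), (6.0, 0.0)]

dtw = dtw_distance(traj, ref)
corr_x = pearson([p[0] for p in traj], [p[0] for p in ref])
corr_y = pearson([p[1] for p in traj], [p[1] for p in ref])
print(dtw, corr_x, corr_y)
```

In this toy case the trajectory is the reference path shifted by 1 m, so the correlation on both axes is high while the DTW distance reflects the constant offset. A small DTW distance together with high correlations would indicate a good match.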

4.3. Error Ellipse Assisted Particle Filter for Multi-Source Integration

In this work, the error ellipse-assisted particle filter (EE-PF) is applied to integrate the results provided by crowdsourced Wi-Fi fingerprinting, built-in sensors, indoor network observation, and map constraints to realize meter-level indoor positioning performance.

The state model is the same as Equation (7), and the observed model of crowdsourced Wi-Fi RSSI fingerprinting can be described as:

where

and

represent the received Wi-Fi RSSI fingerprinting-based position and speed results, and

and

represent the MEMS sensor-based navigation results.

In addition, the indoor network and map constraint are also applied for localization performance improvement. The first step is to search the nearest two adjacent reference turning points from the extracted indoor pedestrian network according to the current locations provided by the integration of the crowdsourced Wi-Fi fingerprinting and built-in sensors. The modeled indoor network segment is described as:

Thus, the nearest observation point on the indoor network can be calculated from the current location (x1, y1). The final observed model of the indoor network can then be described as:
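One common way to obtain the nearest point on a network segment from the current location is an orthogonal projection clamped to the segment end points. The sketch below shows this geometric step only; it assumes the segment is given by its two turning points, and the name `project_to_segment` and the coordinates are hypothetical.

```python
def project_to_segment(p, a, b):
    """Return the point on segment a-b that is closest to p.

    p, a, b are (x, y) tuples; a and b are the two adjacent turning points.
    """
    ax, ay = a
    bx, by = b
    px, py = p
    dx, dy = bx - ax, by - ay
    seg2 = dx * dx + dy * dy
    if seg2 == 0.0:          # degenerate segment: both end points coincide
        return a
    t = ((px - ax) * dx + (py - ay) * dy) / seg2
    t = max(0.0, min(1.0, t))  # clamp so the result stays on the segment
    return (ax + t * dx, ay + t * dy)


nearest = project_to_segment((3.0, 2.0), (0.0, 0.0), (10.0, 0.0))
print(nearest)
```

The clamping matters near corridor ends: without it, a location beyond a turning point would be projected onto the extension of the segment rather than onto the network itself.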

where

and

represent the received Wi-Fi RSSI fingerprinting-based position and speed results, and

and

represent the Wi-Fi and MEMS sensor-integrated localization results.

Finally, the indoor map information is applied to provide outlier constraints for the PF-based weight update phase. In this case, an error ellipse is built for particle constraint during the overall PF update procedure, which is summarized as:

According to the definition of the confidence ellipse in the engineering field [36], the central point of the error ellipse is set as the location of the major semi-axis of the ellipse, and is presented as:

The minor semi-axis can be described as:

The azimuth of the major semi-axis can be calculated by:

The generated error ellipse is firstly applied for the error constraint during the particle state update phase of the whole PF procedure, and the generated new particle, which is out of the existing error ellipse, is autonomously discarded. Secondly, the matched points on the existing indoor network and the matched reference points within the range of the error ellipse are further fused with the other location sources to realize the meter-level indoor positioning accuracy in complex and multi-floor contained indoor environments. Thus, the updated weights of particles are described as:

in which case1 indicates that the location of the particle is within the boundary of the error ellipse, and case2 indicates that the location of the particle is outside the boundary of the error ellipse. Thus, after the error ellipse-assisted map constraint check, M eligible particles remain for the next PF update procedure.
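The error ellipse constraint can be sketched with the standard covariance-based formulas for the semi-axes and orientation of a confidence ellipse, followed by a point-in-ellipse test that discards particles outside the boundary. This is an illustration under stated assumptions: the orientation is measured from the x-axis, discarded particles simply receive no weight, and the names `error_ellipse`, `inside`, and `constrain` as well as the sample covariance are hypothetical rather than the paper's exact formulation.

```python
import math


def error_ellipse(sxx, syy, sxy, scale=1.0):
    """Semi-axes and major-axis orientation from a 2x2 position covariance.

    sxx, syy are variances, sxy is the covariance, and scale is an assumed
    confidence factor. The angle is measured from the x-axis in radians.
    """
    mean = 0.5 * (sxx + syy)
    root = math.sqrt((0.5 * (sxx - syy)) ** 2 + sxy ** 2)
    a = scale * math.sqrt(mean + root)              # major semi-axis
    b = scale * math.sqrt(max(mean - root, 1e-12))  # minor semi-axis
    theta = 0.5 * math.atan2(2.0 * sxy, sxx - syy)
    return a, b, theta


def inside(p, center, a, b, theta):
    """True if point p lies within or on the ellipse boundary."""
    dx, dy = p[0] - center[0], p[1] - center[1]
    u = dx * math.cos(theta) + dy * math.sin(theta)   # along the major axis
    v = -dx * math.sin(theta) + dy * math.cos(theta)  # along the minor axis
    return (u / a) ** 2 + (v / b) ** 2 <= 1.0


def constrain(particles, weights, center, a, b, theta):
    """Drop particles outside the ellipse and renormalize the rest."""
    kept = [(p, w) for p, w in zip(particles, weights)
            if inside(p, center, a, b, theta)]
    total = sum(w for _, w in kept)
    return [(p, w / total) for p, w in kept] if total > 0 else []


a, b, theta = error_ellipse(sxx=4.0, syy=1.0, sxy=0.0)
particles = [(1.0, 0.0), (0.0, 0.5), (3.0, 0.0), (0.0, 2.0)]
kept = constrain(particles, [0.25] * 4, (0.0, 0.0), a, b, theta)
print(a, b, theta, kept)
```

Renormalizing after the check keeps the surviving weights a valid distribution, so the remaining particles can be passed directly to the next update step.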

5. Experimental Results of ML-CTEF

In this section, comprehensive experiments are designed to evaluate the performance of the proposed ML-CTEF. A multi-floor 3D indoor environment is selected as the experimental site, in which the proposed inertial odometry, Bi-LSTM-based floor detection, map matching and crowdsourced database generation, and error ellipse-assisted particle filter are each evaluated and compared with existing algorithms. For the model setting, Adam is applied as the optimizer because of its efficiency with a large amount of training data, with a learning rate of 0.002. For the deep-learning-based speed estimator module, the dimension of the input vector of the 1D-CNN is set as 10, the same as the dimension of the sensor data, and the kernel size of the 1D-CNN is set as 5. The dimension of the output hidden state of the LSTM unit is set as 30, and the dimension of its input vector is set as 11.

5.1. Performance Evaluation of Inertial Odometry

To evaluate the performance of the proposed inertial odometry, a long-term experiment is designed for accuracy estimation under different handheld modes. A comprehensive experimental site containing indoor and outdoor scenes is selected for evaluation purposes, which is shown in Figure 4. The tester started at point A, passed points B to K, and returned to point A, and this walking route was repeated 10 times in succession to test the long-term performance.

The long-term performance of inertial odometry is firstly compared with the single PDR mechanization [13] using the same walking route and smartphone data. In this case, the absolute control points are deployed at each turning point to calculate the positioning error, and the comparison of the estimated trajectories provided by two different algorithms is shown in Figure 5:

It can be found in Figure 5 that the proposed inertial odometry realizes much more stable and precise long-term localization performance compared to single-PDR mechanization. To further estimate the positioning accuracy of the two algorithms, ten users repeated the same walking route, and the estimated positioning errors of the two algorithms are described in Figure 6:

Figure 6 shows that the long-term error of the proposed inertial odometry is within 3.62 m in 75% of cases, compared with 5.76 m in 75% of cases for the single-PDR approach, owing to the contributions of hybrid observations and constraints.
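A statistic of the form "within X m in 75%" can be read off the empirical distribution of the control-point errors. The sketch below assumes a nearest-rank convention for the 75% level; the exact convention behind Figure 6 is not stated in the text. The function names and the sample coordinates are hypothetical and are not measured data.

```python
import math


def positioning_errors(estimated, reference):
    """Euclidean distance between each estimated position and its control point."""
    return [
        math.hypot(ex - rx, ey - ry)
        for (ex, ey), (rx, ry) in zip(estimated, reference)
    ]


def error_at_fraction(errors, fraction=0.75):
    """Smallest error e such that at least `fraction` of the samples are <= e."""
    if not errors:
        raise ValueError("errors must not be empty")
    ordered = sorted(errors)
    k = math.ceil(fraction * len(ordered))  # number of samples to cover
    return ordered[max(k, 1) - 1]


# Illustrative coordinates only (metres).
reference = [(0.0, 0.0), (10.0, 0.0), (10.0, 10.0), (0.0, 10.0)]
estimated = [(0.0, 1.0), (10.0, 2.0), (13.0, 14.0), (0.0, 10.0)]

errors = positioning_errors(estimated, reference)
p75 = error_at_fraction(errors, 0.75)
```

With these four illustrative points the errors are 1, 2, 5 and 0 m, so three of the four samples lie within 2 m.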

To further evaluate the performance of the proposed DLSE-assisted inertial odometry under different handheld modes, a state-of-the-art pose awareness estimator (PAE) [14] is adopted for comparison. We compared the average positioning errors under four different handheld modes using the same walking route as for Figure 6, and the resulting average positioning errors are compared in Figure 7.

Figure 7 shows that the proposed DLSE-assisted inertial odometry achieves higher positioning accuracy under all four handheld modes. Over the long-term test route, its average positioning errors are within 2.91 m (reading mode), 3.56 m (calling mode), 5.79 m (swaying mode), and 3.89 m (pocket mode), compared with 3.47 m (reading mode), 4.13 m (calling mode), 7.22 m (swaying mode), and 4.34 m (pocket mode) for the PAE algorithm.
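The per-mode gap can also be expressed as a relative error reduction with PAE as the baseline. The sketch below uses only the average errors quoted above; the helper name `relative_reduction` is hypothetical, and the resulting percentages are derived here rather than reported in the text.

```python
# Average positioning errors in metres, as quoted in the text.
proposed = {"reading": 2.91, "calling": 3.56, "swaying": 5.79, "pocket": 3.89}
pae = {"reading": 3.47, "calling": 4.13, "swaying": 7.22, "pocket": 4.34}


def relative_reduction(baseline, improved):
    """Error reduction relative to the baseline, in percent."""
    return (baseline - improved) / baseline * 100.0


reductions = {
    mode: round(relative_reduction(pae[mode], proposed[mode]), 1)
    for mode in proposed
}

for mode, value in reductions.items():
    print(f"{mode}: {value}% lower average error than PAE")
```

The reduction is largest in swaying mode (about 20%) and smallest in pocket mode (about 10%).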

5.2. Performance Evaluation of Floor Detection

In this paper, the initial floor information is missing from the collected crowdsourced raw trajectories. To assign a floor index to each crowdsourced trajectory before the map-matching phase, the Bi-LSTM network is adopted to integrate local wireless signals and sensor-related features, which enhances floor detection performance in multi-floor indoor environments. To cover the required indoor scenes, a 2.5 h trajectory dataset is collected from four different floors for training, and a 0.5 h trajectory dataset is used for accuracy evaluation. The proposed Bi-LSTM-based floor detection algorithm is compared with the previous LSTM method [37] and the classical K-Nearest Neighbor (KNN) method [38], and the accuracy comparison is shown in Figure 8.

Figure 8 shows that the proposed Bi-LSTM-based floor detection algorithm achieves higher accuracy than the LSTM and KNN algorithms: it reaches an average accuracy of more than 98.93% on the test dataset, compared with 97.28% for the LSTM-based approach and 93.7% for the KNN-based approach.

5.3. Performance Evaluation of Map Matching and Crowdsourced Database Generation

To evaluate the performance of map matching and crowdsourced database generation, a multi-floor 3D indoor environment covering four different floors is selected, in which the daily-life trajectories are collected, as shown in Figure 9. The crowdsourced smartphone data collected from the different floors are first processed by the proposed multi-level observations and constraints-assisted inertial odometry to obtain initial trajectory estimates. These modeled trajectories contain only relative location information and share the same origin, as shown in Figure 10.

Figure 10 shows that the raw modeled crowdsourced trajectories are irregular and do not directly reveal the true walking routes because they lack an absolute reference. This work therefore proposes the indoor network-matching algorithm, which provides absolute turning-point references for optimizing and calibrating the raw modeled crowdsourced trajectories, while the floor detection algorithm provides their floor indexes. The final generated crowdsourced indoor network is shown in Figure 11.

Figure 11 shows that the matched and calibrated crowdsourced trajectories effectively reconstruct the indoor pedestrian network, and that sub-meter accuracy can be achieved with the assistance of an indoor map. To further evaluate the proposed map-assisted crowdsourced trajectories matching and calibration (M-CTMC), it is compared with the state-of-the-art Walkie-Markie [27] algorithm. As shown in Figure 12, the proposed M-CTMC algorithm achieves a much higher trajectory reconstruction accuracy: the overall trajectory error is within 0.51 m in 75% of cases, compared with 1.2 m in 75% of cases for the Walkie-Markie algorithm.

5.4. Performance Evaluation of Error Ellipse Enhanced Fusion Approach

To evaluate the performance of the final ML-CTII approach, four adjacent floors within the 3D indoor environment were selected. The tester walked continuously from the sixth floor to the ninth floor, and the detailed walking route is described in Figure 8. The EEPF-based fusion algorithm is proposed to integrate the different location sources, including crowdsourced Wi-Fi fingerprinting, MEMS sensors, and indoor network information. The error ellipse is applied to constrain the gross errors of the different location sources and to match the useful indoor network information, which improves the integrated results of Wi-Fi fingerprinting and inertial odometry. The trajectories estimated by inertial odometry (IO), Wi-Fi fingerprinting/inertial odometry (W-IO) integration, and indoor map/Wi-Fi fingerprinting/inertial odometry (MW-IO) integration are compared in Figure 13.

Figure 13 shows that the inertial odometry is still affected by cumulative errors even after the multi-level constraints and observations are applied. Integrating crowdsourced Wi-Fi fingerprinting with inertial odometry effectively improves on IO alone, and the assistance of indoor map information further reduces the positioning error of W-IO, bringing the result closer to the ground-truth trajectory. The positioning errors of the three combinations of location sources are compared in Figure 14.

Figure 14 shows that adding indoor map information achieves meter-level indoor positioning accuracy: the positioning error is within 1.22 m in 75% of cases, compared with 2.2 m in 75% of cases for the combination of Wi-Fi fingerprinting and inertial odometry.
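The idea of using an error ellipse to reject gross errors can be illustrated with a generic gating test: a candidate 2D position is accepted only if it falls inside the confidence ellipse defined by a predicted position and its covariance. This is a minimal sketch of that general technique, not the particle update strategy evaluated in this section. The function names, the 95% confidence level, and the example covariance are all assumptions made for illustration.

```python
import math


def mahalanobis_sq(point, mean, cov):
    """Squared Mahalanobis distance of a 2D point from `mean` under a 2x2 `cov`."""
    dx, dy = point[0] - mean[0], point[1] - mean[1]
    (a, b), (c, d) = cov
    det = a * d - b * c
    if det <= 0.0:
        raise ValueError("covariance must be positive definite")
    # Inverse of [[a, b], [c, d]] is (1 / det) * [[d, -b], [-c, a]].
    return (dx * (d * dx - b * dy) + dy * (-c * dx + a * dy)) / det


def inside_error_ellipse(point, mean, cov, confidence=0.95):
    """True if `point` lies inside the `confidence` error ellipse."""
    # For 2 degrees of freedom the chi-square quantile has the closed form
    # -2 * ln(1 - p), about 5.99 for p = 0.95.
    threshold = -2.0 * math.log(1.0 - confidence)
    return mahalanobis_sq(point, mean, cov) <= threshold


# Illustrative values: predicted position at the origin, with a larger
# uncertainty along x (variance 4 m^2) than along y (variance 1 m^2).
mean = (0.0, 0.0)
cov = ((4.0, 0.0), (0.0, 1.0))

accept_near = inside_error_ellipse((2.0, 0.0), mean, cov)  # distance^2 = 1
accept_far = inside_error_ellipse((0.0, 3.0), mean, cov)   # distance^2 = 9
```

In this example a 3 m offset along the well-constrained y axis is rejected, while a 2 m offset along the less certain x axis is accepted, which is the behavior that distinguishes an elliptical gate from a fixed circular threshold.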

Finally, the proposed EE-PF-based multi-source fusion algorithm is compared with the state-of-the-art map-aided particle filter (MA-PF) approach [39]. The same walking route and the same generated crowdsourced Wi-Fi fingerprinting database are used for both algorithms to make the comparison fair. The 3D trajectories and positioning errors of the two algorithms are compared in Figure 15 and Table 1.

Table 1 shows that the proposed EE-PF achieves better multi-source integration performance than the MA-PF approach because of the error ellipse-based particle update strategy. The average positioning error is within 1.01 m, an improvement of 21.7% over the MA-PF approach (average within 1.29 m).
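The stated improvement follows directly from the two average errors, taking the MA-PF result as the baseline:

```latex
\frac{1.29\,\mathrm{m} - 1.01\,\mathrm{m}}{1.29\,\mathrm{m}} \times 100\%
  = \frac{0.28}{1.29} \times 100\%
  \approx 21.7\%
```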