Jitter Detection Method Based on Sequence CMOS Images Captured by Rolling Shutter Mode for High-Resolution Remote Sensing Satellite

Abstract

1. Introduction

2. Materials and Methods

2.1. Jitter Detection Principle of CMOS Image by Rolling Shutter

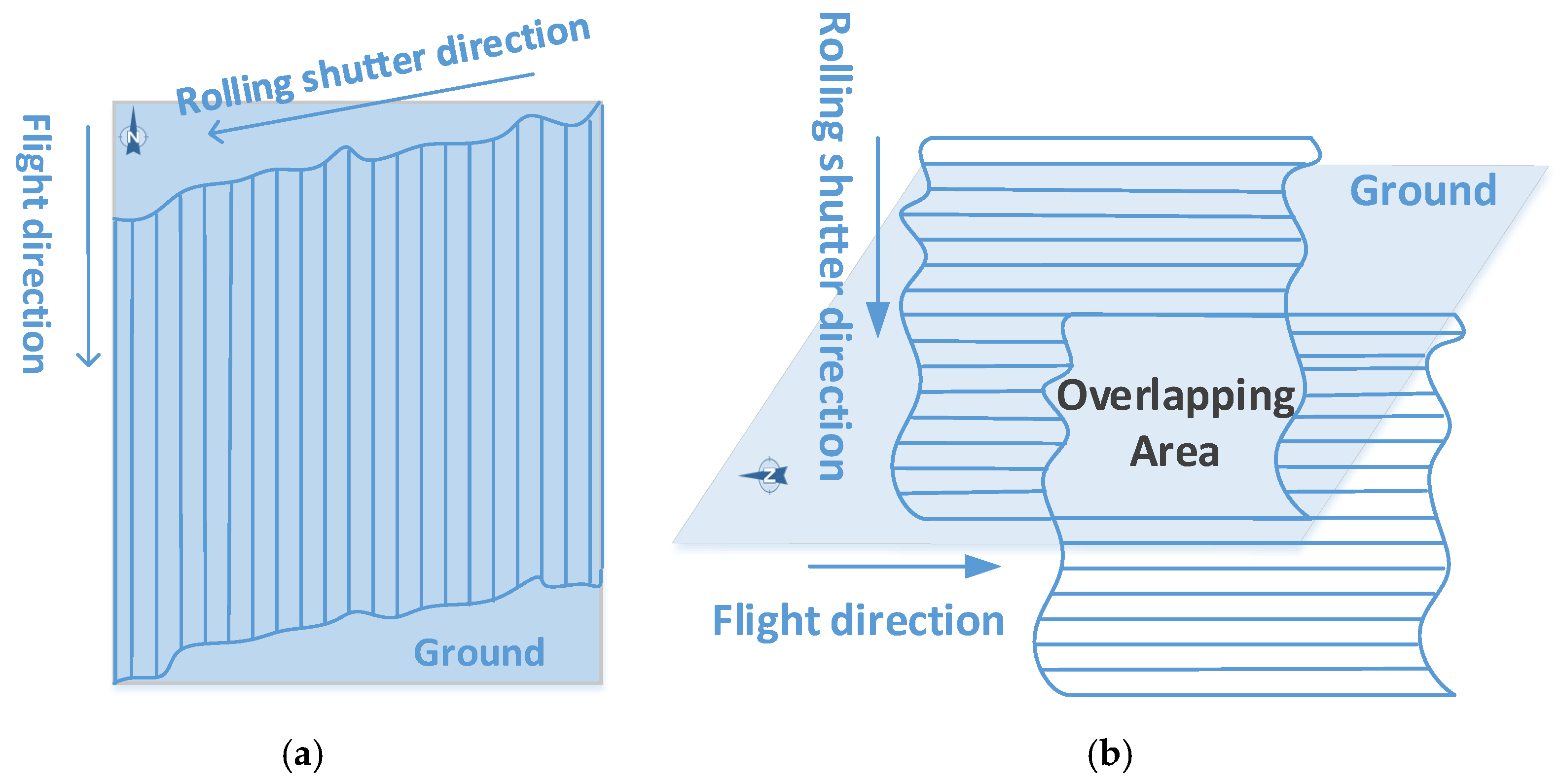

2.1.1. Imaging Characteristics of CMOS Sensor with Rolling Shutter

2.1.2. The Principle of Jitter Detection Using CMOS Images by Rolling Shutter

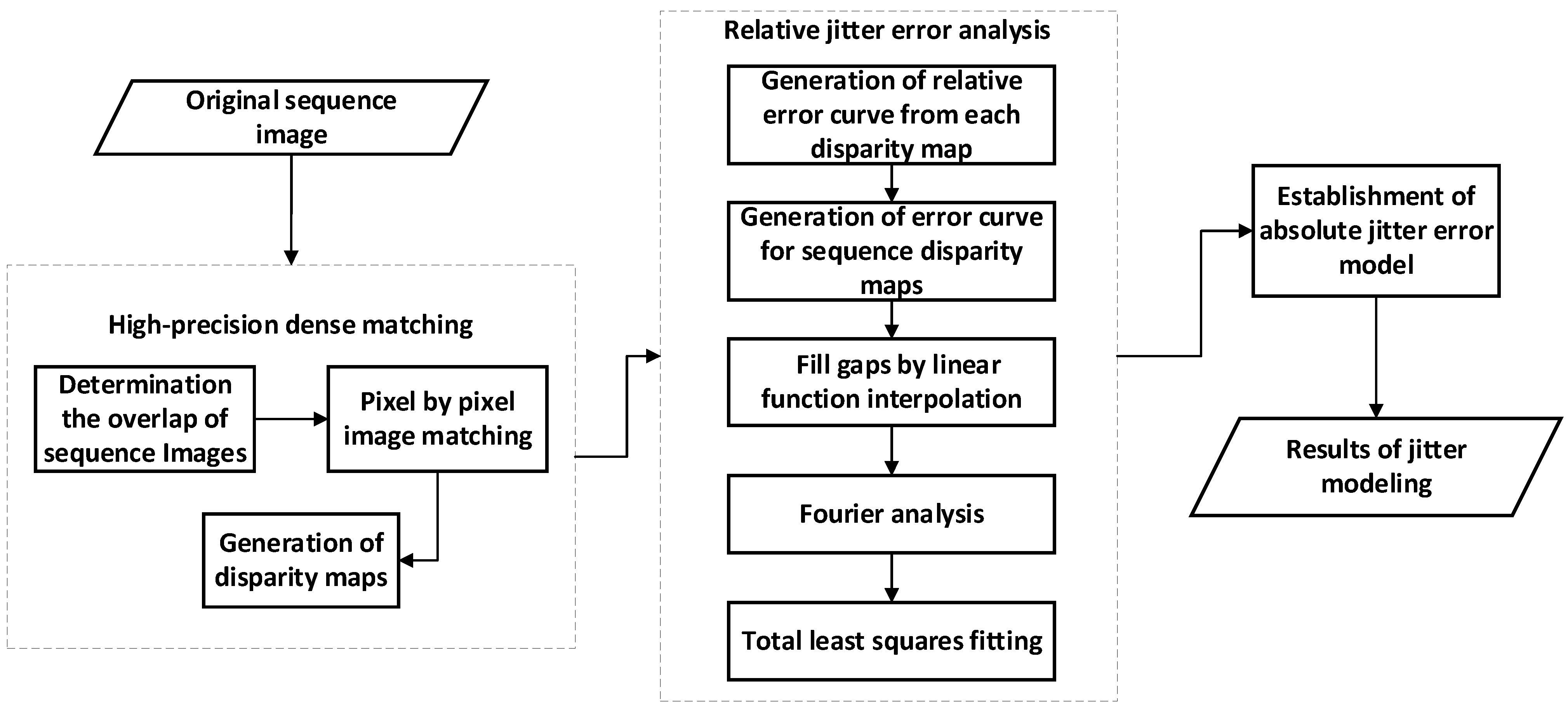

2.2. Detection of Jitter Based on CMOS Sequence Images by Rolling Shutter

2.2.1. Dense Matching of Sequence CMOS Images

2.2.2. Relative Jitter Error Analysis of Sequence CMOS Images

2.2.3. Absolute Jitter Error Modeling

3. Results

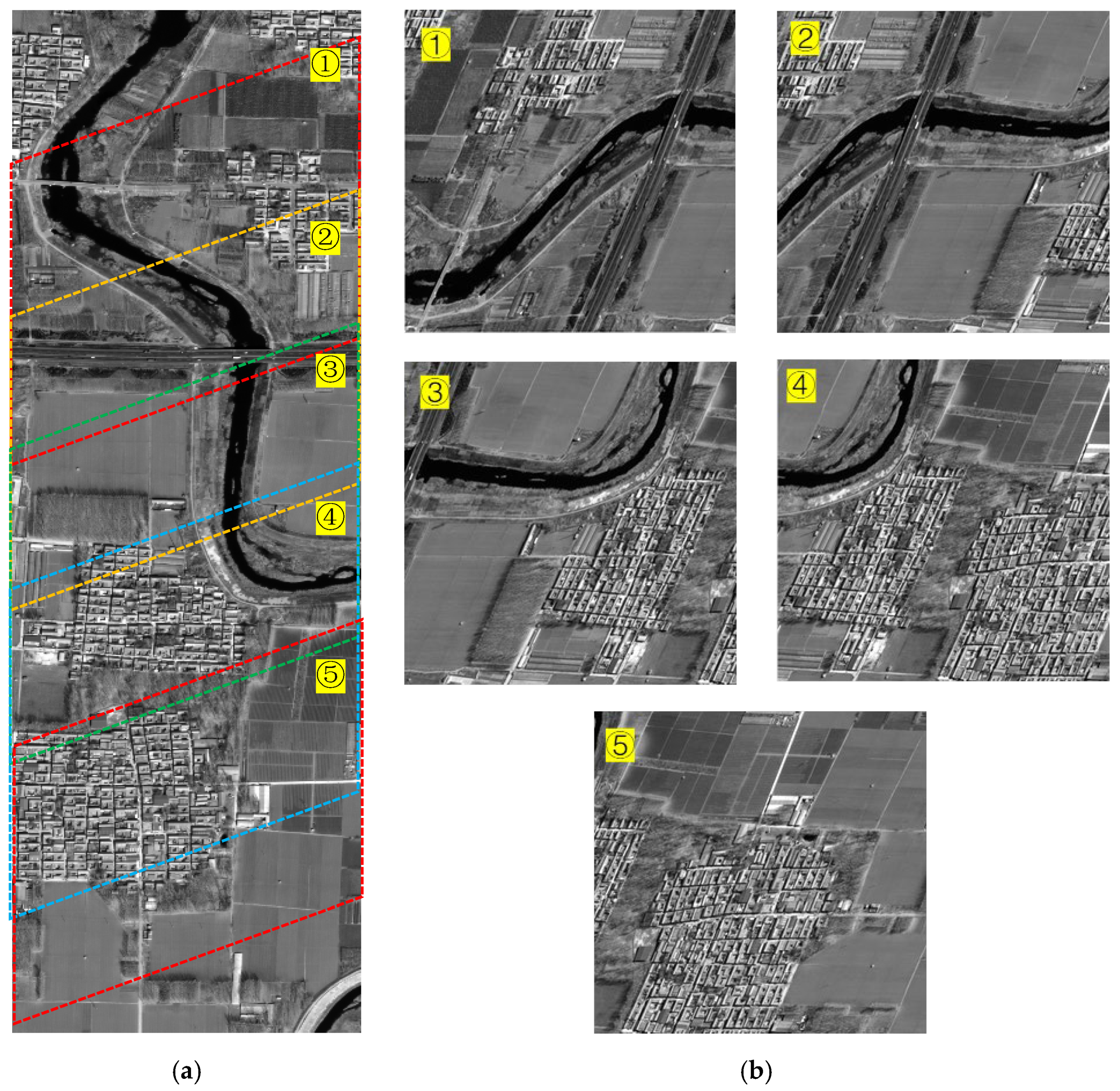

3.1. Data Description

3.2. Experimental Result

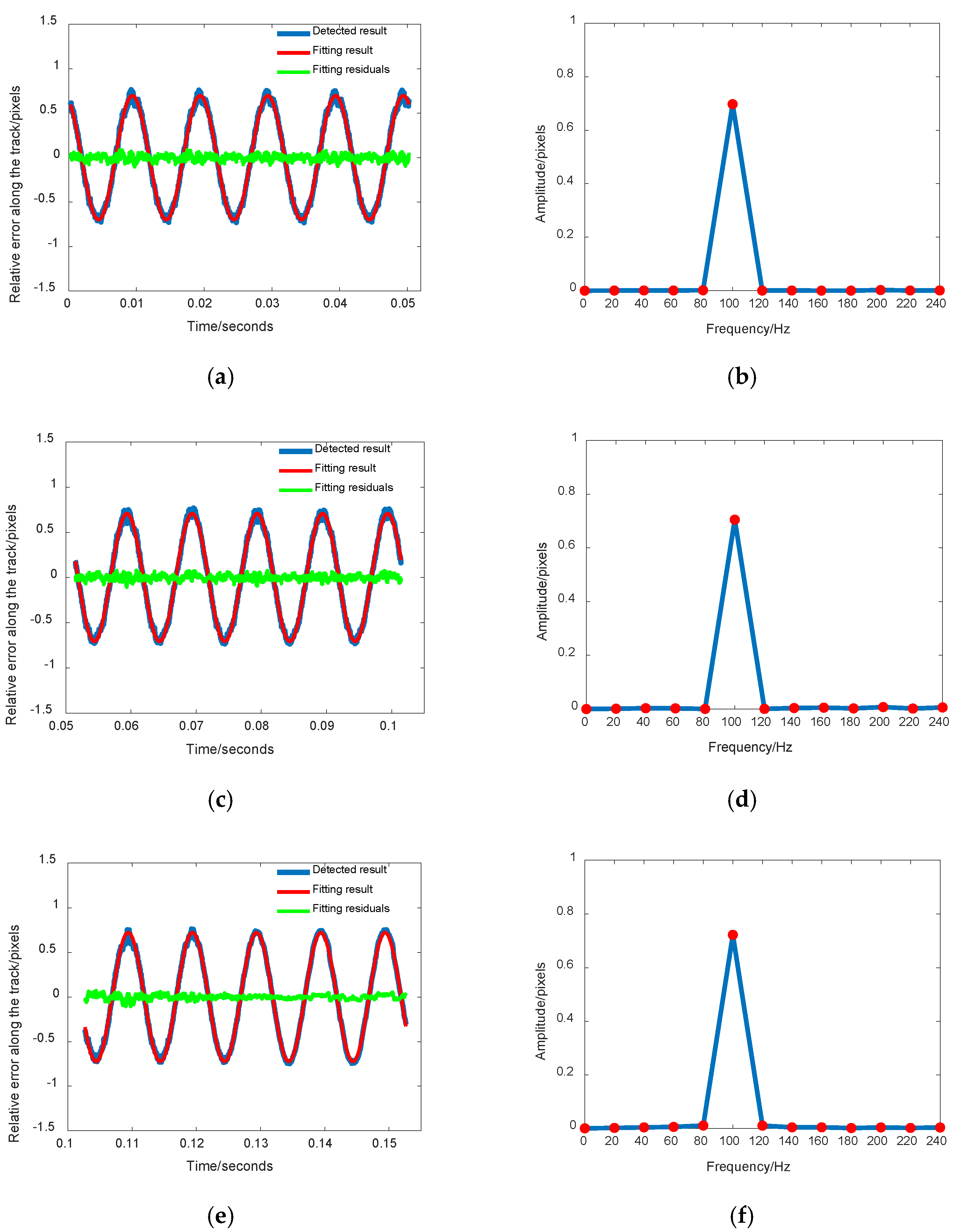

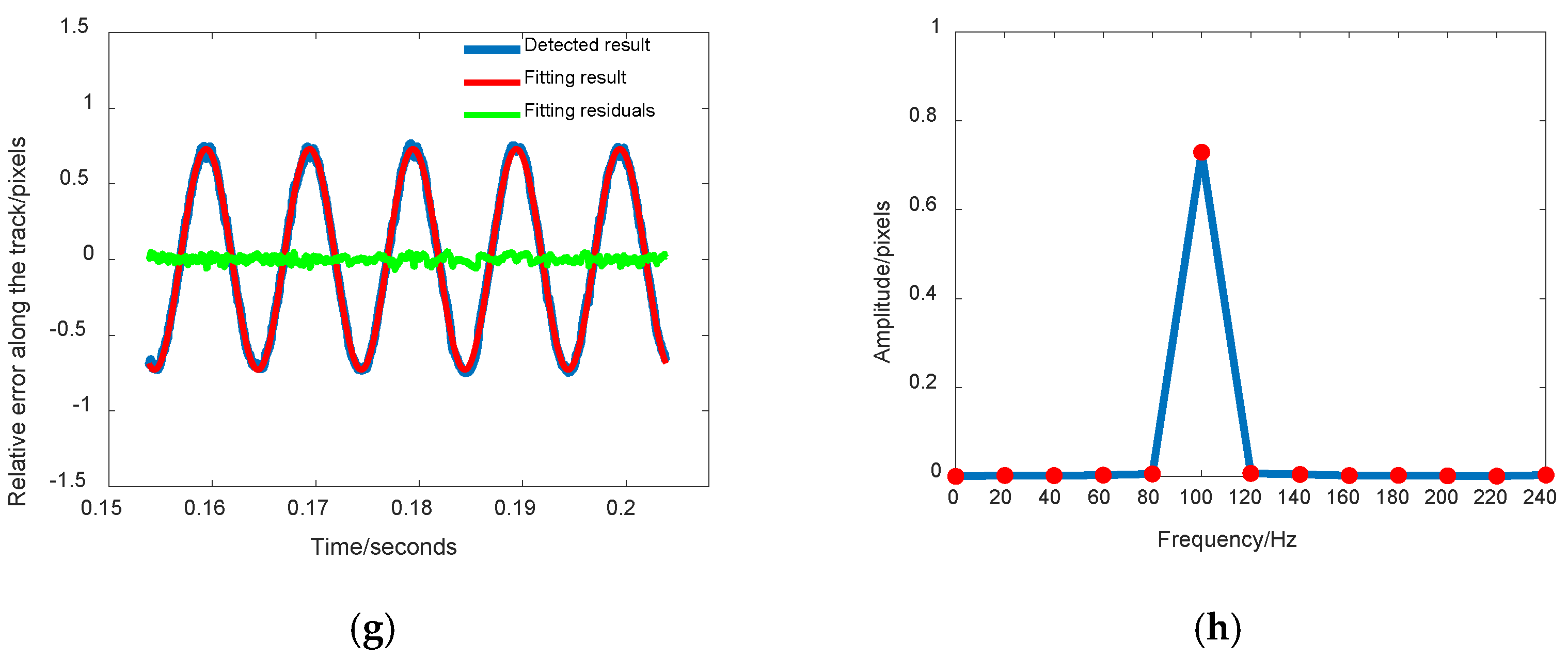

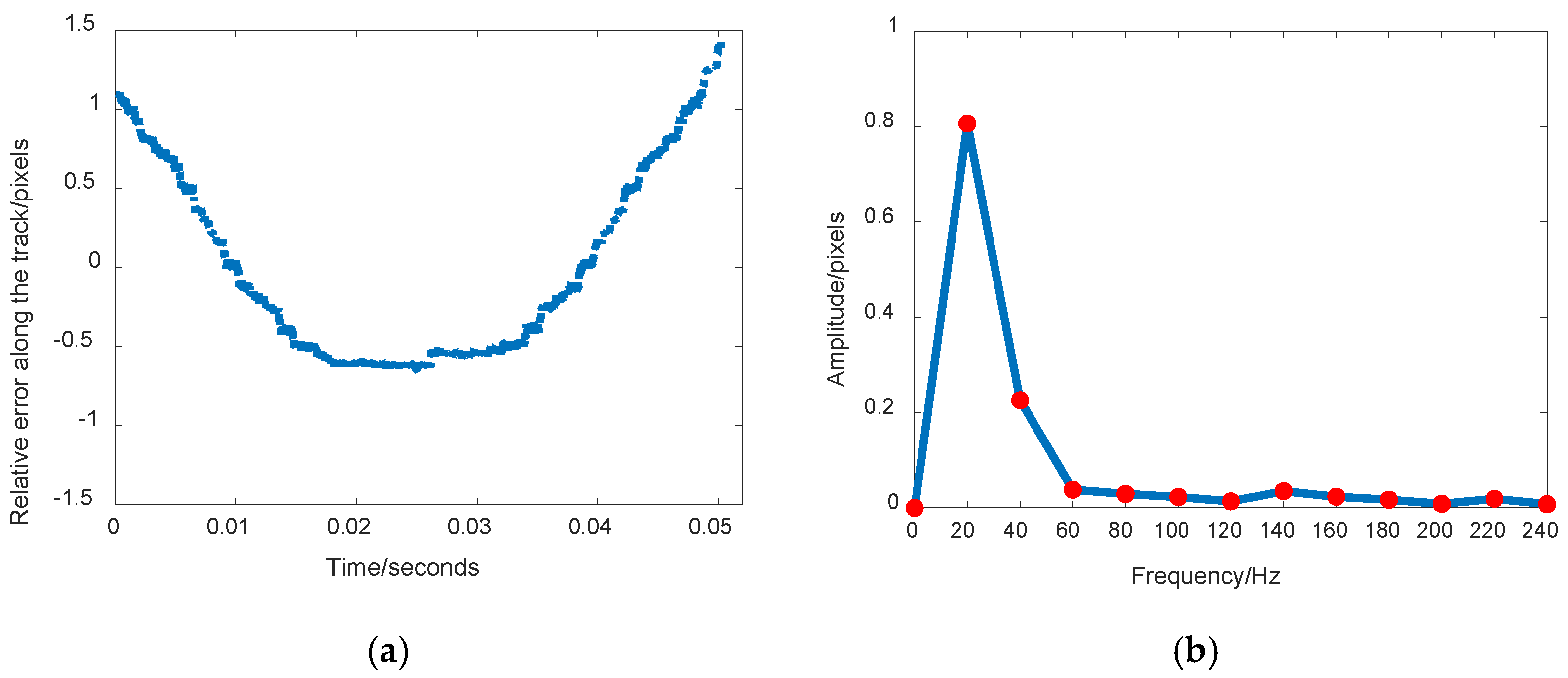

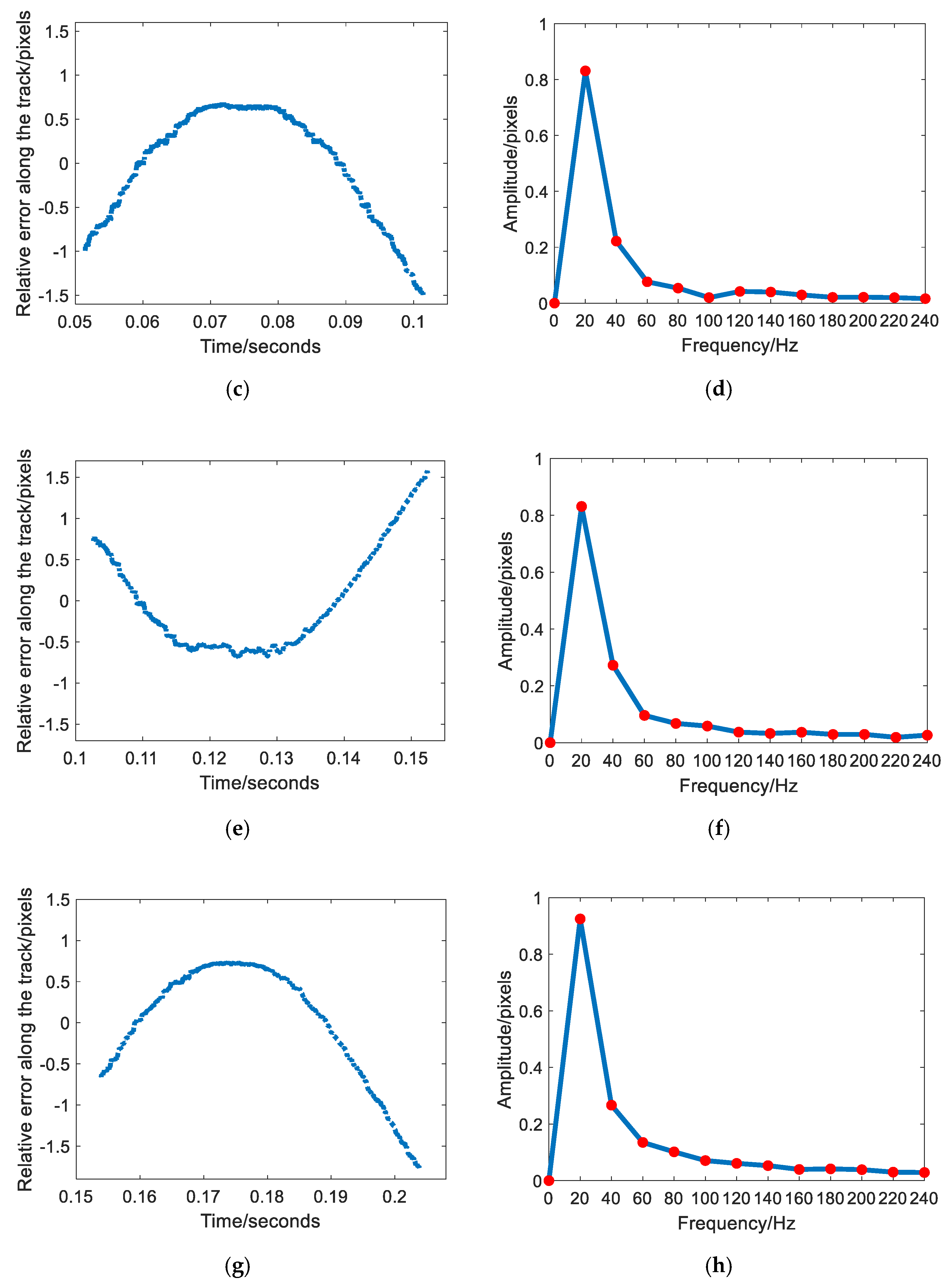

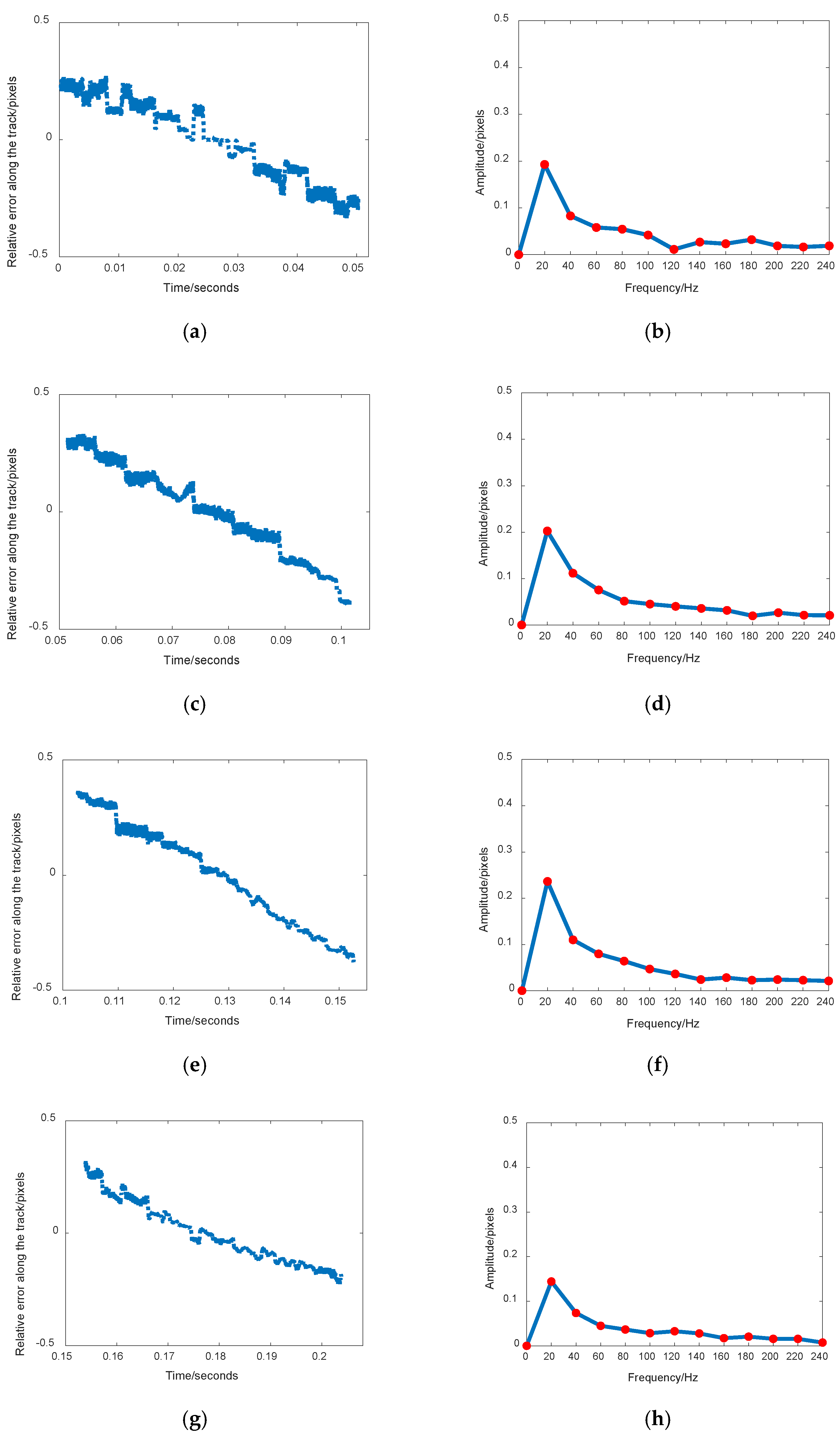

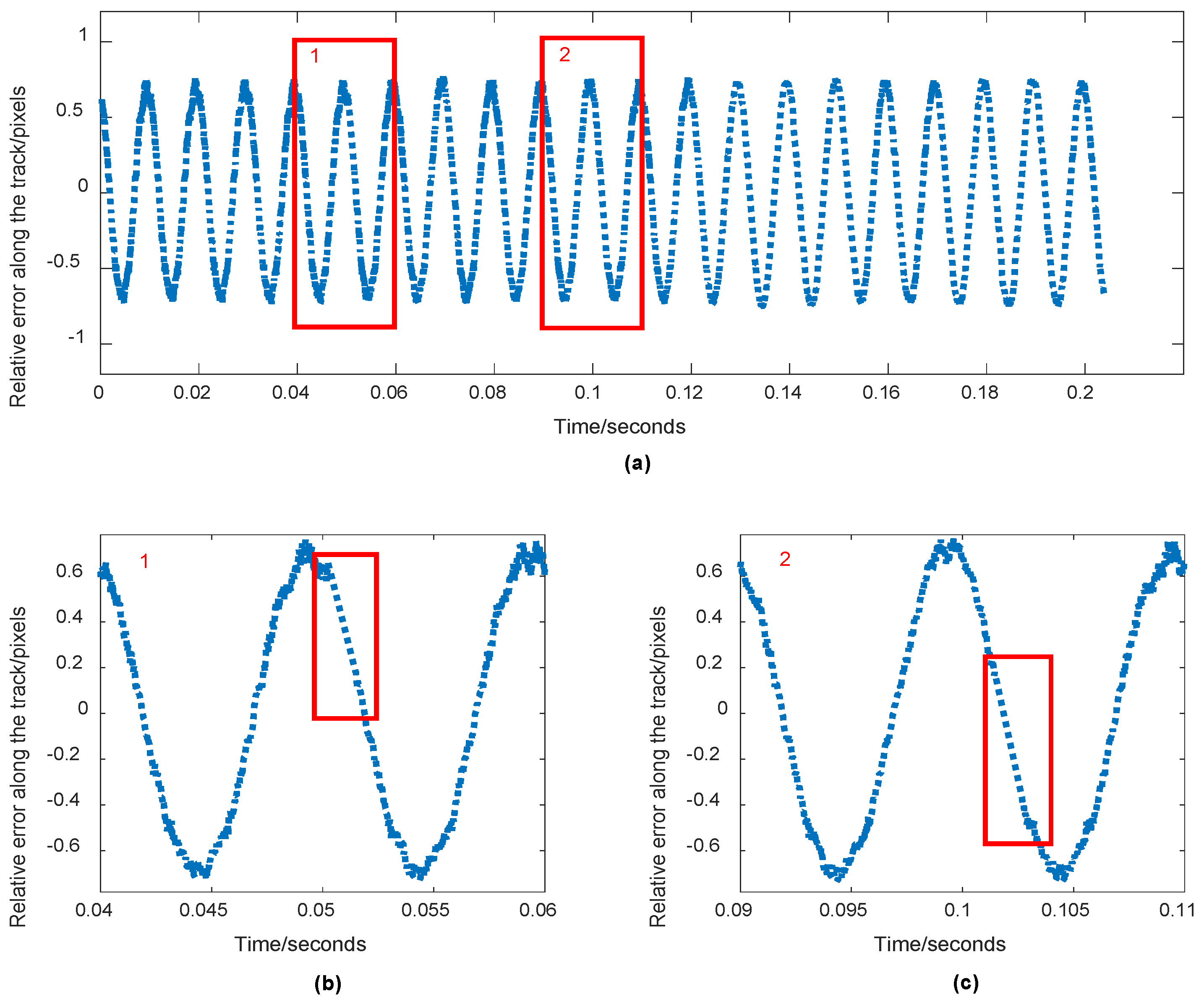

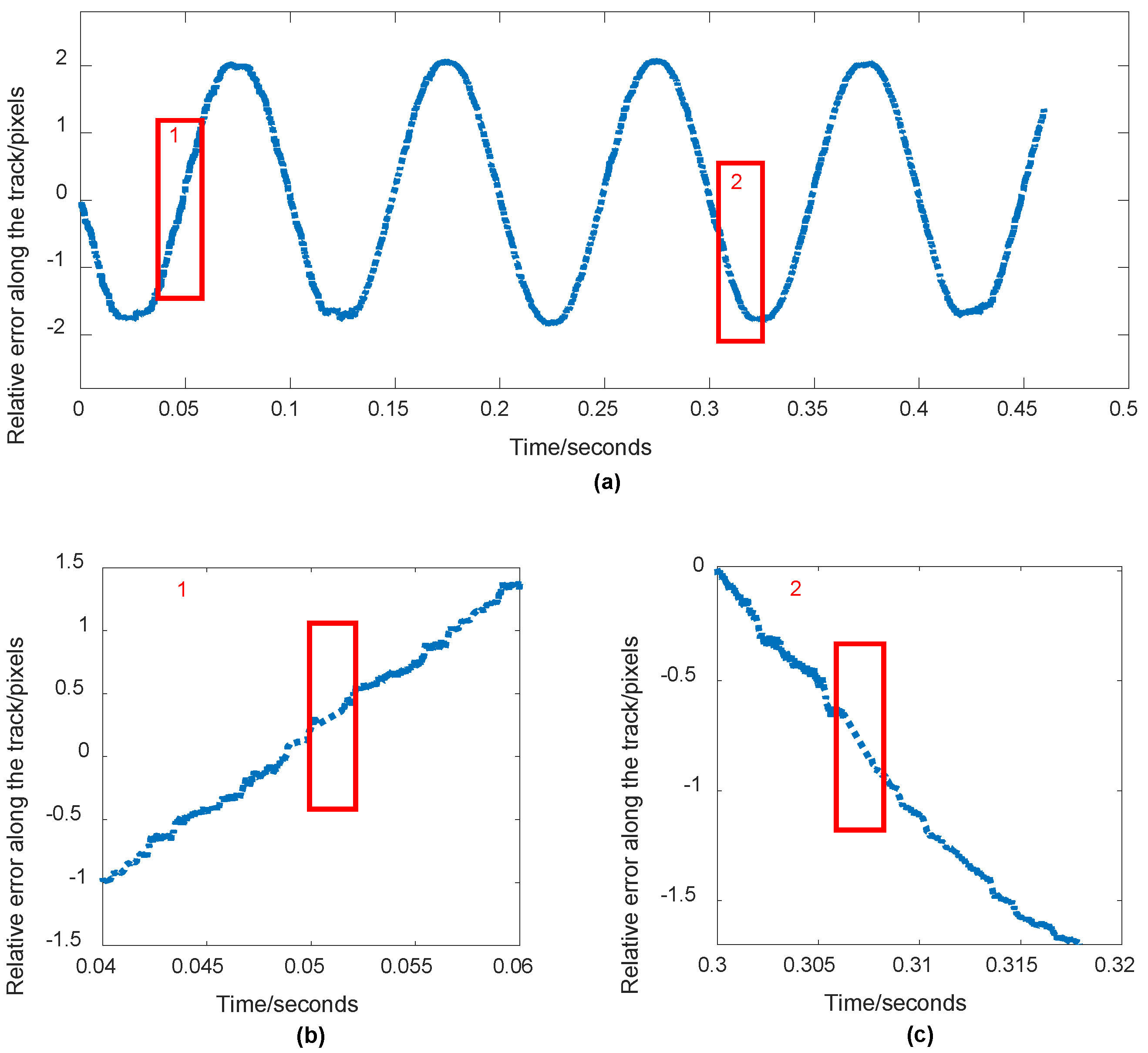

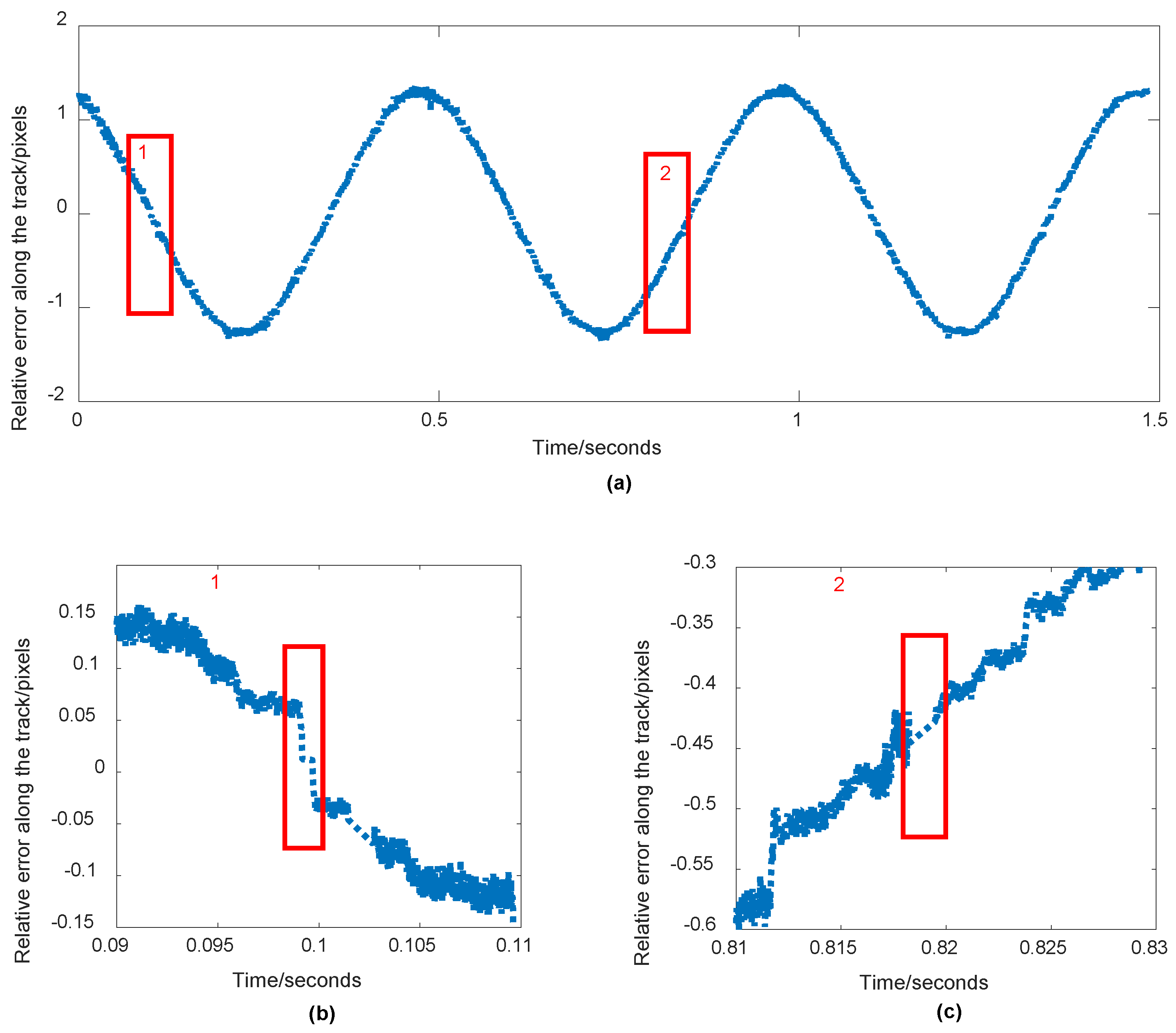

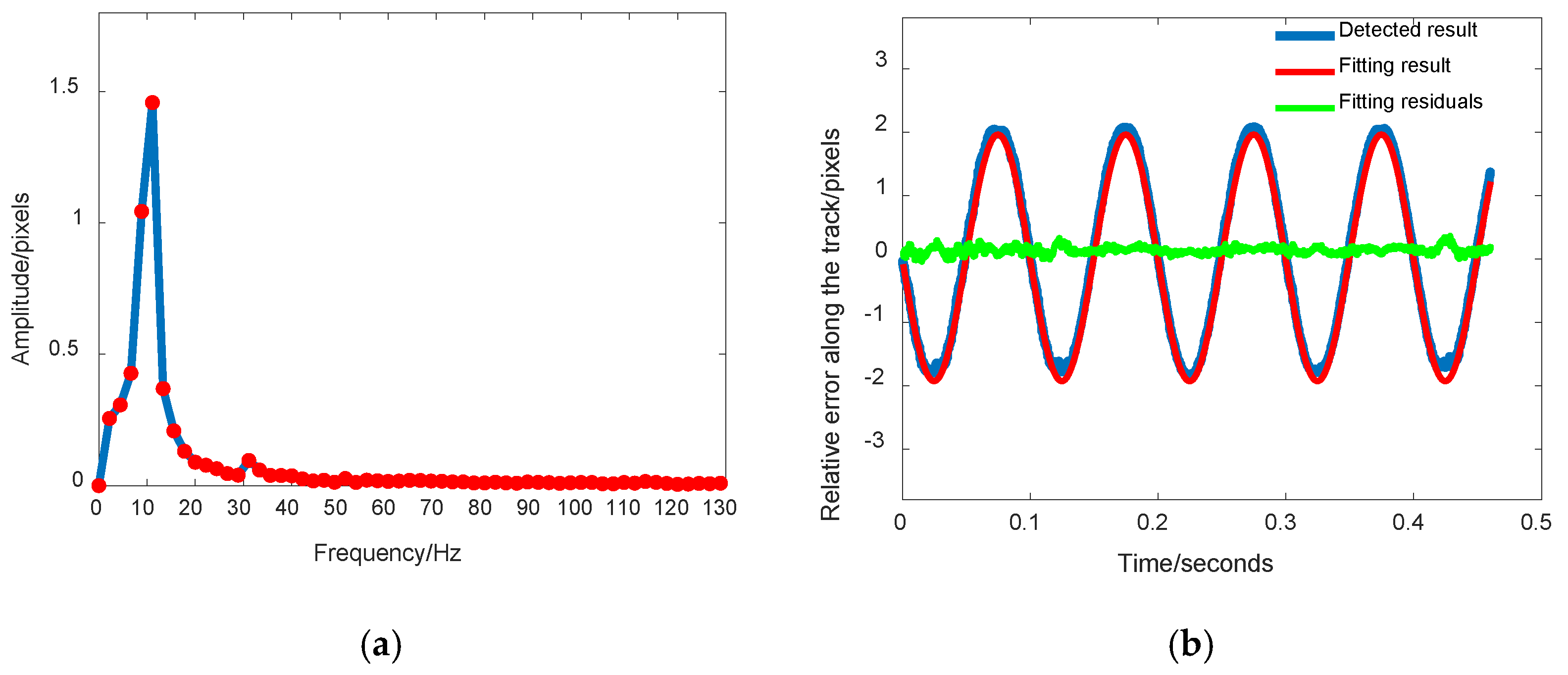

3.2.1. Jitter Detection Results by Single Disparity Map

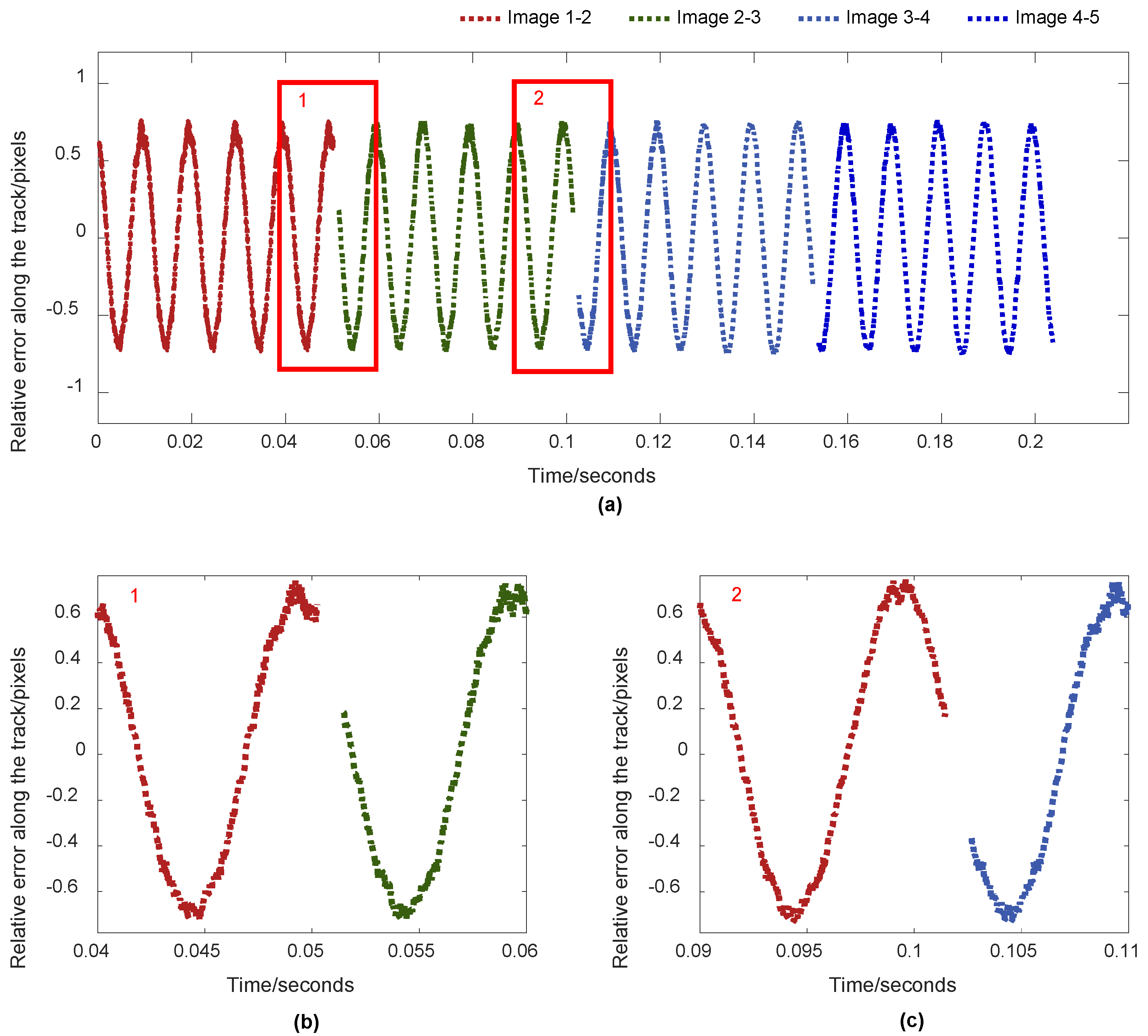

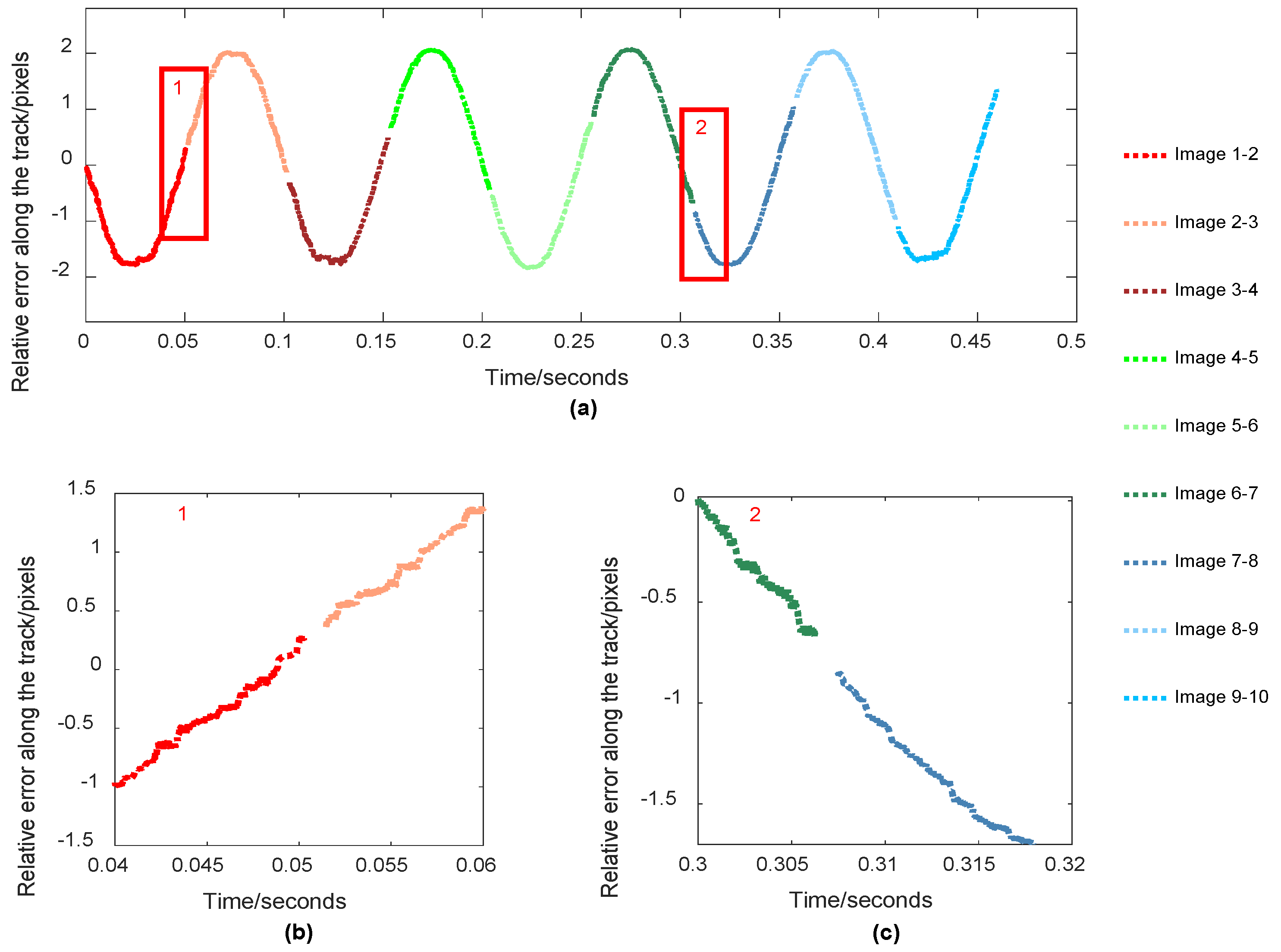

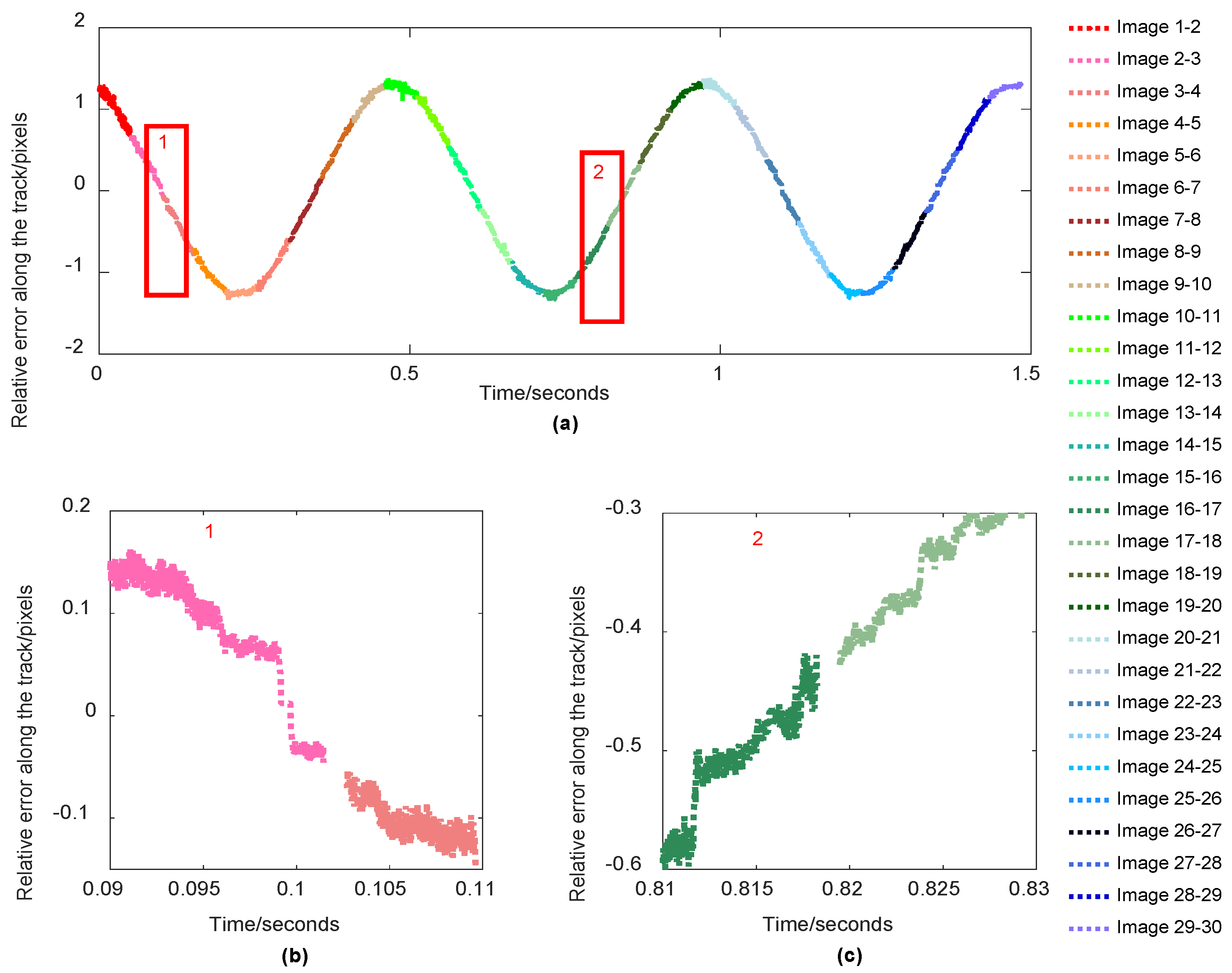

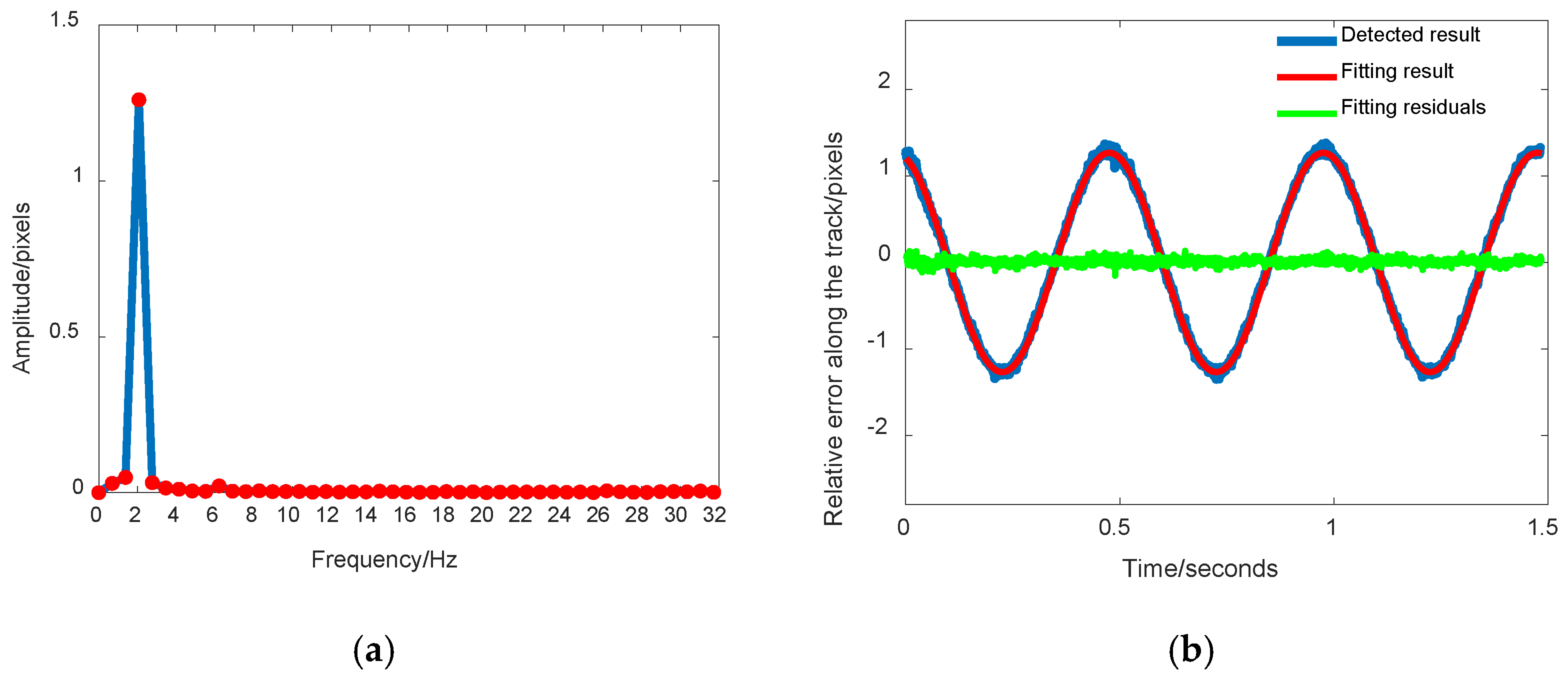

3.2.2. Jitter Detection Results by Sequential Disparity Maps

4. Discussion

4.1. Jitter Detection Accuracy by Single Disparity Map

4.2. Jitter Detection Accuracy by Sequential Disparity Maps

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Dataset ID | Frequency/Hz | Amplitude/Pixel | Integration Time/s | Imaging Duration/s | Image Size | Number of Images |
|---|---|---|---|---|---|---|
| 1 | 100.0 | 1.0 | 0.000025 | 0.256 | 2048 × 2048 | 5 |
| 2 | 10.0 | 1.0 | 0.000025 | 0.512 | 2048 × 2048 | 10 |
| 3 | 2.0 | 2.0 | 0.000025 | 1.536 | 2048 × 2048 | 30 |
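The imaging durations in the table above are consistent with a simple rolling-shutter timing model. If each of the 2048 rows starts one integration time (0.000025 s) after the previous row and consecutive frames are read out without a gap, one frame takes 2048 × 0.000025 s = 0.0512 s, so 5, 10 and 30 frames take 0.256 s, 0.512 s and 1.536 s. The sketch below encodes that model. The gap-free readout and the equality of row interval and integration time are assumptions inferred from the table rather than stated in it, and the helper names are hypothetical. The sketch also samples a sinusoidal jitter A sin(2πft + φ) at each row's start time: because a rolling shutter exposes rows at different instants, temporal jitter shows up as a row-dependent geometric displacement within a single frame.

```python
import math

ROWS = 2048          # image height from the dataset table (rows per frame)
T_LINE = 0.000025    # integration time (s); assumed here to equal the row-to-row interval


def row_time(frame: int, row: int, rows: int = ROWS, t_line: float = T_LINE) -> float:
    """Start time (s) of one row, assuming frames are read out back to back with no gap."""
    return (frame * rows + row) * t_line


def imaging_duration(n_images: int, rows: int = ROWS, t_line: float = T_LINE) -> float:
    """Total readout time (s) of n_images full frames under the same assumption."""
    return n_images * rows * t_line


def row_jitter(frame: int, row: int, freq: float, amp: float, phase: float = 0.0) -> float:
    """Sinusoidal image-space displacement (pixels) seen by one row at its start time."""
    t = row_time(frame, row)
    return amp * math.sin(2 * math.pi * freq * t + phase)


if __name__ == "__main__":
    for n in (5, 10, 30):
        print(n, round(imaging_duration(n), 6))  # 0.256, 0.512, 1.536 s
```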

| Image Combination | Frequency/Hz | Amplitude/Pixel | Phase/Rad |
|---|---|---|---|
| 1–2 | 100.005825 | 0.697309 | 1.945412 |
| 2–3 | 99.992349 | 0.705260 | 1.958776 |
| 3–4 | 99.998652 | 0.722530 | 1.949275 |
| 4–5 | 100.001128 | 0.729211 | 1.945773 |
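Each row of the table above appears to report the frequency, amplitude and phase of a sinusoid fitted to the disparity curve of one image pair. This section does not say how the fit is done. One common approach, sketched here as an assumption, is a grid search over frequency: for every trial frequency f the model y(t) = A sin(2πft + φ) + c is fitted by linear least squares on the basis sin(2πft), cos(2πft), 1, and the frequency with the smallest residual is kept. The names `fit_at` and `fit_sinusoid` are hypothetical.

```python
import math


def _solve3(m, v):
    """Solve the 3x3 linear system m @ x = v with Cramer's rule."""
    def det(a):
        return (a[0][0] * (a[1][1] * a[2][2] - a[1][2] * a[2][1])
                - a[0][1] * (a[1][0] * a[2][2] - a[1][2] * a[2][0])
                + a[0][2] * (a[1][0] * a[2][1] - a[1][1] * a[2][0]))
    d = det(m)
    sol = []
    for k in range(3):
        mk = [row[:] for row in m]
        for i in range(3):
            mk[i][k] = v[i]          # replace column k by the right-hand side
        sol.append(det(mk) / d)
    return sol


def fit_at(t, y, freq):
    """Least-squares fit of y ~ a*sin(wt) + b*cos(wt) + c at a fixed frequency."""
    w = 2 * math.pi * freq
    basis = [(math.sin(w * ti), math.cos(w * ti), 1.0) for ti in t]
    # Normal equations (B^T B) x = B^T y for the three basis functions.
    m = [[sum(r[i] * r[j] for r in basis) for j in range(3)] for i in range(3)]
    v = [sum(r[i] * yi for r, yi in zip(basis, y)) for i in range(3)]
    a, b, c = _solve3(m, v)
    sse = sum((yi - (a * r[0] + b * r[1] + c)) ** 2 for r, yi in zip(basis, y))
    # A sin(wt + phi) = A cos(phi) sin(wt) + A sin(phi) cos(wt), so a = A cos(phi), b = A sin(phi).
    return sse, math.hypot(a, b), math.atan2(b, a) % (2 * math.pi), c


def fit_sinusoid(t, y, f_min, f_max, step):
    """Grid search over frequency; returns (freq, amplitude, phase) with the smallest residual."""
    best = None
    n_steps = int(round((f_max - f_min) / step))
    for k in range(n_steps + 1):
        f = f_min + k * step
        sse, amp, phase, _ = fit_at(t, y, f)
        if best is None or sse < best[0]:
            best = (sse, f, amp, phase)
    return best[1], best[2], best[3]
```

The 0.1 Hz grid used in a quick check is only illustrative; reaching the six-decimal frequencies in the table would need a finer grid or a local refinement (for example a golden-section search) around the best grid point.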

| Data | Frequency/Hz | Amplitude/Pixel | Phase/Rad |
|---|---|---|---|
| 1 | 99.997711 | 0.713395 | 1.940706 |
| 2 | 10.003774 | 1.942974 | 3.177410 |
| 3 | 2.000533 | 1.268048 | 1.891298 |

| Image Combination | Frequency/Hz | Amplitude/Pixel | Phase/Rad |
|---|---|---|---|
| 1–2 | 100.005825 | 0.944875 | 0.003312 |
| 2–3 | 99.992349 | 0.960897 | 0.001704 |
| 3–4 | 99.998652 | 0.981904 | 0.002196 |
| 4–5 | 100.001128 | 0.969415 | 0.004857 |
| Average value | 99.999488 | 0.969415 | 0.004857 |

| Image Combination | Average Error/Pixel | RMSE/Pixel | Maximum Error/Pixel | Minimum Error/Pixel |
|---|---|---|---|---|
| 1–2 | 0.000009 | 0.039118 | 0.055342 | −0.055328 |
| 2–3 | −0.000059 | 0.028290 | 0.040181 | −0.040237 |
| 3–4 | −0.000013 | 0.012812 | 0.018113 | −0.018111 |
| 4–5 | 0.000007 | 0.007489 | 0.010634 | −0.010646 |
| Average value | −0.000014 | 0.021927 | 0.031067 | −0.003417 |
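The error columns can be read as summary statistics of the sample-by-sample difference between the detected jitter curve and the simulated one. That definition is an assumption (it is not spelled out in this section), and `error_stats` is a hypothetical helper that computes the four columns under it. The demo uses made-up data: a detected curve whose amplitude is 0.04 pixel too large, sampled over exactly one period.

```python
import math


def error_stats(detected, reference):
    """Mean, RMSE, maximum and minimum of the per-sample error (detected - reference), in pixels."""
    if len(detected) != len(reference) or not detected:
        raise ValueError("need two non-empty sequences of equal length")
    err = [d - r for d, r in zip(detected, reference)]
    mean = sum(err) / len(err)
    rmse = math.sqrt(sum(e * e for e in err) / len(err))
    return mean, rmse, max(err), min(err)


# Hypothetical demo: amplitude error of 0.04 pixel over one full period.
N = 1000
ref = [math.sin(2 * math.pi * k / N) for k in range(N)]
det = [1.04 * r for r in ref]
mean, rmse, e_max, e_min = error_stats(det, ref)
print(f"mean={mean:.6f} rmse={rmse:.6f} max={e_max:.6f} min={e_min:.6f}")
```

For a residual that is itself sinusoidal, the mean is close to zero and the peak error is about √2 times the RMSE. In every row of the table above the ratio of maximum error to RMSE is close to 1.41 and the average error is near zero, which is consistent with such a residual.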

| Data | Frequency/Hz | Amplitude/Pixel | Phase/Rad |
|---|---|---|---|
| 1 | 99.997711 | 0.969811 | 0.003287 |
| 2 | 10.003774 | 0.972187 | 0.002489 |
| 3 | 2.000533 | 2.004754 | 0.001283 |
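If the jitter is a single sinusoid x(t) = A sin(2πft + φ) and consecutive frames image the same row a frame period T apart, the disparity between them is x(t + T) − x(t) = 2A sin(πfT) cos(2πft + φ + πfT). This is a sinusoid at the same frequency with amplitude 2A|sin(πfT)|. With T = 2048 × 0.000025 s = 0.0512 s, dividing the per-dataset relative amplitudes (0.713395, 1.942974 and 1.268048 pixels) by 2|sin(πfT)| gives values within about 1e-4 pixel of the absolute amplitudes in the table above. The identity is therefore consistent with the reported numbers, but treating it as the authors' absolute-jitter model is an assumption. The function name `absolute_amplitude` is hypothetical.

```python
import math


def absolute_amplitude(rel_amp: float, freq: float, frame_period: float) -> float:
    """Recover the jitter amplitude A from the amplitude of the frame-to-frame disparity.

    Based on x(t + T) - x(t) = 2 A sin(pi f T) cos(2 pi f t + phi + pi f T)
    for x(t) = A sin(2 pi f t + phi).
    """
    gain = 2.0 * abs(math.sin(math.pi * freq * frame_period))
    if gain < 1e-6:
        # f is (close to) a multiple of 1/T: both frames see the same displacement,
        # so the disparity carries no information about A.
        raise ValueError("frequency is unobservable at this frame period")
    return rel_amp / gain


T = 2048 * 0.000025  # frame period (s), assuming gap-free rolling-shutter readout

# (frequency/Hz, relative amplitude/pixel) per dataset, taken from the relative-jitter table
for f, rel in [(99.997711, 0.713395), (10.003774, 1.942974), (2.000533, 1.268048)]:
    print(f, round(absolute_amplitude(rel, f, T), 6))
```

Under this model, jitter at multiples of 1/T = 19.53125 Hz produces no frame-to-frame disparity at all, and amplitudes near those frequencies are poorly conditioned. Any such correction factor therefore depends on how the frame period compares with the jitter period.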

| Data | Average Error/Pixel | RMSE/Pixel | Maximum Error/Pixel | Minimum Error/Pixel |
|---|---|---|---|---|
| 1 | −0.000413 | 0.021436 | 0.030350 | −0.00357 |
| 2 | −0.002036 | 0.020352 | 0.030045 | −0.030512 |
| 3 | −0.000129 | 0.006487 | 0.011325 | −0.012143 |
| Average value | −0.000856 | 0.016091 | 0.023907 | −0.015408 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhu, Y.; Yang, T.; Wang, M.; Hong, H.; Zhang, Y.; Wang, L.; Rao, Q. Jitter Detection Method Based on Sequence CMOS Images Captured by Rolling Shutter Mode for High-Resolution Remote Sensing Satellite. Remote Sens. 2022, 14, 342. https://doi.org/10.3390/rs14020342
