An Investigation of Winter Wheat Leaf Area Index Fitting Model Using Spectral and Canopy Height Model Data from Unmanned Aerial Vehicle Imagery

Abstract

1. Introduction

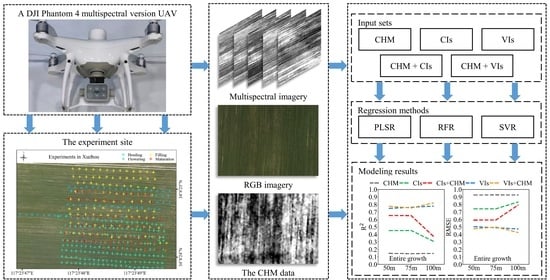

2. Materials and Methods

2.1. Study Area

2.2. Acquisition and Pre-Processing of Images

2.3. Field Collection for LAI

2.4. Image Processing and Data Extraction

2.4.1. CIs and VIs

2.4.2. Extraction of CHM Data

2.5. Data Analyses

2.5.1. Correlation Analysis

2.5.2. Modeling Methods

2.5.3. Accuracy Assessment

3. Results

3.1. Data Range of Wheat LAI

3.2. Data Size Per Hectare

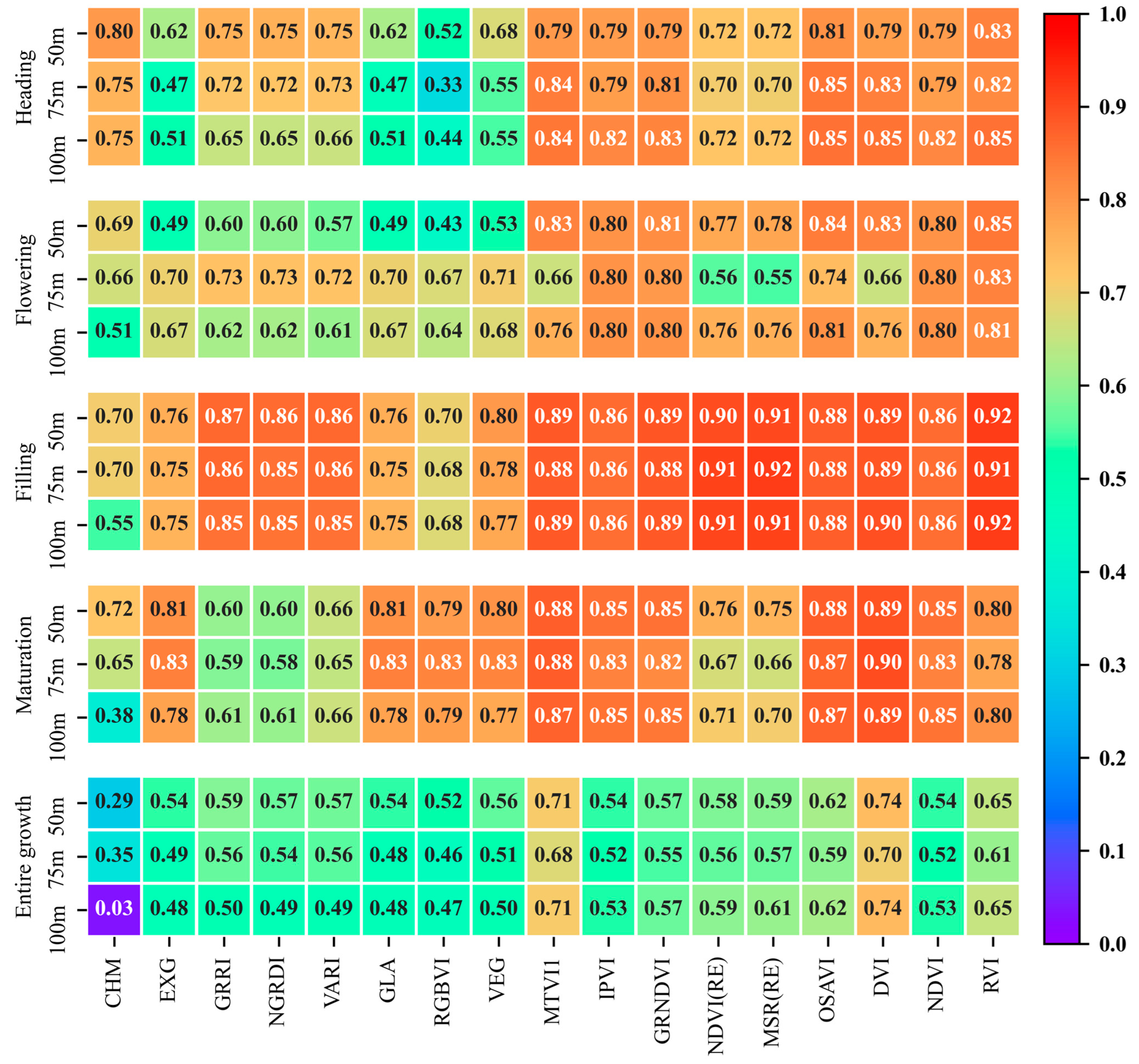

3.3. Correlation between LAI and CHM Data, CIs and VIs

3.4. LAI Prediction Accuracy from PLSR, RFR, and SVR over the Entire Growth Period

3.5. LAI Prediction Accuracy for Individual Growth Stages

3.6. Effect of Spatial Resolution on LAI Prediction

4. Discussion

4.1. Using CHM Data Combined with Either CIs or VIs in LAI Modeling

4.2. The Optimal Spatial Resolution of the CHM Data for LAI Prediction

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Watson, D.J. Comparative Physiological Studies on the Growth of Field Crops: II. The Effect of Varying Nutrient Supply on Net Assimilation Rate and Leaf Area. Ann. Bot. 1947, 11, 375–407.

2. Qu, Y.H.; Wang, Z.X.; Shang, J.L.; Liu, J.G.; Zou, J. Estimation of leaf area index using inclined smartphone camera. Comput. Electron. Agric. 2021, 191, 106514.

3. Yan, G.J.; Hu, R.H.; Luo, J.H.; Weiss, M.; Jiang, H.L.; Mu, X.H.; Xie, D.H.; Zhang, W.M. Review of indirect optical measurements of leaf area index: Recent advances, challenges, and perspectives. Agric. For. Meteorol. 2019, 265, 390–411.

4. Lan, Y.B.; Huang, Z.X.; Deng, X.L.; Zhu, Z.H.; Huang, H.S.; Zheng, Z.; Lian, B.Z.; Zeng, G.L.; Tong, Z.J. Comparison of machine learning methods for citrus greening detection on UAV multispectral images. Comput. Electron. Agric. 2020, 171, 105234.

5. Behera, S.K.; Srivastava, P.; Pathre, U.V.; Tuli, R. An indirect method of estimating leaf area index in Jatropha curcas L. using LAI-2000 Plant Canopy Analyzer. Agric. For. Meteorol. 2010, 150, 307–311.

6. dela Torre, D.M.G.; Gao, J.; Macinnis-Ng, C. Remote sensing-based estimation of rice yields using various models: A critical review. Geo-Spat. Inf. Sci. 2021, 24, 580–603.

7. Jonckheere, I.; Fleck, S.; Nackaerts, K.; Muys, B.; Coppin, P.; Weiss, M.; Baret, F. Review of methods for in situ leaf area index determination. Agric. For. Meteorol. 2004, 121, 19–35.

8. Zheng, H.; Cheng, T.; Li, D.; Yao, X.; Tian, Y.; Cao, W.; Zhu, Y. Combining Unmanned Aerial Vehicle (UAV)-Based Multispectral Imagery and Ground-Based Hyperspectral Data for Plant Nitrogen Concentration Estimation in Rice. Front. Plant Sci. 2018, 9, 936.

9. Yu, L.; Shang, J.; Cheng, Z.; Gao, Z.; Wang, Z.; Tian, L.; Wang, D.; Che, T.; Jin, R.; Liu, J.; et al. Assessment of Cornfield LAI Retrieved from Multi-Source Satellite Data Using Continuous Field LAI Measurements Based on a Wireless Sensor Network. Remote Sens. 2020, 12, 3304.

10. Liu, S.; Jin, X.; Nie, C.; Wang, S.; Yu, X.; Cheng, M.; Shao, M.; Wang, Z.; Tuohuti, N.; Bai, Y.; et al. Estimating leaf area index using unmanned aerial vehicle data: Shallow vs. deep machine learning algorithms. Plant Physiol. 2021, 187, 1551–1576.

11. Arroyo-Mora, J.P.; Kalacska, M.; Loke, T.; Schlapfer, D.; Coops, N.C.; Lucanus, O.; Leblanc, G. Assessing the impact of illumination on UAV pushbroom hyperspectral imagery collected under various cloud cover conditions. Remote Sens. Environ. 2021, 258, 112396.

12. Xu, W.C.; Yang, W.G.; Chen, S.D.; Wu, C.S.; Chen, P.C.; Lan, Y.B. Establishing a model to predict the single boll weight of cotton in northern Xinjiang by using high resolution UAV remote sensing data. Comput. Electron. Agric. 2020, 179, 105762.

13. Tetila, E.C.; Machado, B.B.; Menezes, G.K.; Oliveira, A.D.; Alvarez, M.; Amorim, W.P.; Belete, N.A.D.; da Silva, G.G.; Pistori, H. Automatic Recognition of Soybean Leaf Diseases Using UAV Images and Deep Convolutional Neural Networks. IEEE Geosci. Remote Sens. Lett. 2020, 17, 903–907.

14. Maes, W.H.; Steppe, K. Perspectives for Remote Sensing with Unmanned Aerial Vehicles in Precision Agriculture. Trends Plant Sci. 2019, 24, 152–164.

15. Niu, Y.X.; Zhang, L.Y.; Zhang, H.H.; Han, W.T.; Peng, X.S. Estimating Above-Ground Biomass of Maize Using Features Derived from UAV-Based RGB Imagery. Remote Sens. 2019, 11, 1261.

16. Cao, Y.; Li, G.L.; Luo, Y.K.; Pan, Q.; Zhang, S.Y. Monitoring of sugar beet growth indicators using wide-dynamic-range vegetation index (WDRVI) derived from UAV multispectral images. Comput. Electron. Agric. 2020, 171, 105331.

17. Wang, F.M.; Yi, Q.X.; Hu, J.H.; Xie, L.L.; Yao, X.P.; Xu, T.Y.; Zheng, J.Y. Combining spectral and textural information in UAV hyperspectral images to estimate rice grain yield. Int. J. Appl. Earth Obs. Geoinf. 2021, 102, 102397.

18. Feng, L.; Chen, S.S.; Zhang, C.; Zhang, Y.C.; He, Y. A comprehensive review on recent applications of unmanned aerial vehicle remote sensing with various sensors for high-throughput plant phenotyping. Comput. Electron. Agric. 2021, 182, 106033.

19. Zheng, H.B.; Cheng, T.; Zhou, M.; Li, D.; Yao, X.; Tian, Y.C.; Cao, W.X.; Zhu, Y. Improved estimation of rice aboveground biomass combining textural and spectral analysis of UAV imagery. Precis. Agric. 2019, 20, 611–629.

20. Maimaitijiang, M.; Sagan, V.; Sidike, P.; Hartling, S.; Esposito, F.; Fritschi, F.B. Soybean yield prediction from UAV using multimodal data fusion and deep learning. Remote Sens. Environ. 2020, 237, 111599.

21. Bendig, J.; Yu, K.; Aasen, H.; Bolten, A.; Bennertz, S.; Broscheit, J.; Gnyp, M.L.; Bareth, G. Combining UAV-based plant height from crop surface models, visible, and near infrared vegetation indices for biomass monitoring in barley. Int. J. Appl. Earth Obs. Geoinf. 2015, 39, 79–87.

22. Li, B.; Xu, X.M.; Zhang, L.; Han, J.W.; Bian, C.S.; Li, G.C.; Liu, J.G.; Jin, L.P. Above-ground biomass estimation and yield prediction in potato by using UAV-based RGB and hyperspectral imaging. ISPRS J. Photogramm. Remote Sens. 2020, 162, 161–172.

23. Li, W.; Niu, Z.; Chen, H.Y.; Li, D.; Wu, M.Q.; Zhao, W. Remote estimation of canopy height and aboveground biomass of maize using high-resolution stereo images from a low-cost unmanned aerial vehicle system. Ecol. Indic. 2016, 67, 637–648.

24. Peprah, C.O.; Yamashita, M.; Yamaguchi, T.; Sekino, R.; Takano, K.; Katsura, K. Spatio-Temporal Estimation of Biomass Growth in Rice Using Canopy Surface Model from Unmanned Aerial Vehicle Images. Remote Sens. 2021, 13, 2388.

25. Maimaitijiang, M.; Ghulam, A.; Sidike, P.; Hartling, S.; Maimaitiyiming, M.; Peterson, K.; Shavers, E.; Fishman, J.; Peterson, J.; Kadam, S.; et al. Unmanned Aerial System (UAS)-based phenotyping of soybean using multi-sensor data fusion and extreme learning machine. ISPRS J. Photogramm. Remote Sens. 2017, 134, 43–58.

26. Reisi Gahrouei, O.; McNairn, H.; Hosseini, M.; Homayouni, S. Estimation of Crop Biomass and Leaf Area Index from Multitemporal and Multispectral Imagery Using Machine Learning Approaches. Can. J. Remote Sens. 2020, 46, 84–99.

27. Li, S.Y.; Yuan, F.; Ata-Ul-Karim, S.T.; Zheng, H.B.; Cheng, T.; Liu, X.J.; Tian, Y.C.; Zhu, Y.; Cao, W.X.; Cao, Q. Combining Color Indices and Textures of UAV-Based Digital Imagery for Rice LAI Estimation. Remote Sens. 2019, 11, 1763.

28. Wang, L.A.; Zhou, X.D.; Zhu, X.K.; Dong, Z.D.; Guo, W.S. Estimation of biomass in wheat using random forest regression algorithm and remote sensing data. Crop J. 2016, 4, 212–219.

29. Maimaitijiang, M.; Sagan, V.; Sidike, P.; Daloye, A.M.; Erkbol, H.; Fritschi, F.B. Crop Monitoring Using Satellite/UAV Data Fusion and Machine Learning. Remote Sens. 2020, 12, 1357.

30. Dong, T.F.; Liu, J.G.; Shang, J.L.; Qian, B.D.; Ma, B.L.; Kovacs, J.M.; Walters, D.; Jiao, X.F.; Geng, X.Y.; Shi, Y.C. Assessment of red-edge vegetation indices for crop leaf area index estimation. Remote Sens. Environ. 2019, 222, 133–143.

31. Meyer, G.E.; Neto, J.C. Verification of color vegetation indices for automated crop imaging applications. Comput. Electron. Agric. 2008, 63, 282–293.

32. Woebbecke, D.M.; Meyer, G.E.; Bargen, K.V.; Mortensen, D.A. Color Indices for Weed Identification Under Various Soil, Residue, and Lighting Conditions. Trans. ASAE 1995, 38, 259–269.

33. Verrelst, J.; Schaepman, M.E.; Koetz, B.; Kneubuhler, M. Angular sensitivity analysis of vegetation indices derived from CHRIS/PROBA data. Remote Sens. Environ. 2008, 112, 2341–2353.

34. Tucker, C.J. Red and photographic infrared linear combinations for monitoring vegetation. Remote Sens. Environ. 1979, 8, 127–150.

35. Gitelson, A.A.; Kaufman, Y.J.; Stark, R.; Rundquist, D. Novel algorithms for remote estimation of vegetation fraction. Remote Sens. Environ. 2002, 80, 76–87.

36. Louhaichi, M.; Borman, M.M.; Johnson, D.E. Spatially Located Platform and Aerial Photography for Documentation of Grazing Impacts on Wheat. Geocarto Int. 2008, 16, 65–70.

37. Hague, T.; Tillett, N.D.; Wheeler, H. Automated crop and weed monitoring in widely spaced cereals. Precis. Agric. 2006, 7, 21–32.

38. Becker, F.; Choudhury, B.J. Relative sensitivity of normalized difference vegetation Index (NDVI) and microwave polarization difference Index (MPDI) for vegetation and desertification monitoring. Remote Sens. Environ. 1988, 24, 297–311.

39. Gitelson, A.; Merzlyak, M.N. Spectral Reflectance Changes Associated with Autumn Senescence of Aesculus hippocastanum L. and Acer platanoides L. Leaves. Spectral Features and Relation to Chlorophyll Estimation. J. Plant Physiol. 1994, 143, 286–292.

40. Wu, C.Y.; Niu, Z.; Tang, Q.; Huang, W.J. Estimating chlorophyll content from hyperspectral vegetation indices: Modeling and validation. Agric. For. Meteorol. 2008, 148, 1230–1241.

41. Rondeaux, G.; Steven, M.; Baret, F. Optimization of soil-adjusted vegetation indices. Remote Sens. Environ. 1996, 55, 95–107.

42. Peñuelas, J.; Isla, R.; Filella, I.; Araus, J.L. Visible and Near-Infrared Reflectance Assessment of Salinity Effects on Barley. Crop Sci. 1997, 37, 198–202.

43. Jordan, C.F. Derivation of Leaf-Area Index from Quality of Light on the Forest Floor. Ecology 1969, 50, 663–666.

44. Haboudane, D.; Miller, J.R.; Pattey, E.; Zarco-Tejada, P.J.; Strachan, I.B. Hyperspectral vegetation indices and novel algorithms for predicting green LAI of crop canopies: Modeling and validation in the context of precision agriculture. Remote Sens. Environ. 2004, 90, 337–352.

45. Crippen, R. Calculating the vegetation index faster. Remote Sens. Environ. 1990, 34, 71–73.

46. Wang, F.-M.; Huang, J.-F.; Tang, Y.-L.; Wang, X.-Z. New Vegetation Index and Its Application in Estimating Leaf Area Index of Rice. Rice Sci. 2007, 14, 195–203.

47. Zhao, K.G.; Suarez, J.C.; Garcia, M.; Hu, T.X.; Wang, C.; Londo, A. Utility of multitemporal lidar for forest and carbon monitoring: Tree growth, biomass dynamics, and carbon flux. Remote Sens. Environ. 2018, 204, 883–897.

48. Yuan, H.H.; Yang, G.J.; Li, C.C.; Wang, Y.J.; Liu, J.G.; Yu, H.Y.; Feng, H.K.; Xu, B.; Zhao, X.Q.; Yang, X.D. Retrieving Soybean Leaf Area Index from Unmanned Aerial Vehicle Hyperspectral Remote Sensing: Analysis of RF, ANN, and SVM Regression Models. Remote Sens. 2017, 9, 309.

49. Qu, Y.H.; Gao, Z.B.; Shang, J.L.; Liu, J.G.; Casa, R. Simultaneous measurements of corn leaf area index and mean tilt angle from multi-directional sunlit and shaded fractions using downward-looking photography. Comput. Electron. Agric. 2021, 180, 105881.

50. Kayad, A.; Sozzi, M.; Paraforos, D.S.; Rodrigues, F.A.; Cohen, Y.; Fountas, S.; Francisco, M.-J.; Pezzuolo, A.; Grigolato, S.; Marinello, F. How many gigabytes per hectare are available in the digital agriculture era? A digitization footprint estimation. Comput. Electron. Agric. 2022, 198, 107080.

51. Volpato, L.; Pinto, F.; Gonzalez-Perez, L.; Thompson, I.G.; Borem, A.; Reynolds, M.; Gerard, B.; Molero, G.; Rodrigues, F.A., Jr. High Throughput Field Phenotyping for Plant Height Using UAV-Based RGB Imagery in Wheat Breeding Lines: Feasibility and Validation. Front. Plant Sci. 2021, 12, 591587.

52. Ballesteros, R.; Ortega, J.F.; Hernandez, D.; Moreno, M.A. Onion biomass monitoring using UAV-based RGB imaging. Precis. Agric. 2018, 19, 840–857.

53. Yamaguchi, T.; Tanaka, Y.; Imachi, Y.; Yamashita, M.; Katsura, K. Feasibility of Combining Deep Learning and RGB Images Obtained by Unmanned Aerial Vehicle for Leaf Area Index Estimation in Rice. Remote Sens. 2020, 13, 84.

54. Zhang, J.Y.; Qiu, X.L.; Wu, Y.T.; Zhu, Y.; Cao, Q.; Liu, X.J.; Cao, W.X. Combining texture, color, and vegetation indices from fixed-wing UAS imagery to estimate wheat growth parameters using multivariate regression methods. Comput. Electron. Agric. 2021, 185, 106138.

55. Lu, N.; Zhou, J.; Han, Z.; Li, D.; Cao, Q.; Yao, X.; Tian, Y.; Zhu, Y.; Cao, W.; Cheng, T. Improved estimation of aboveground biomass in wheat from RGB imagery and point cloud data acquired with a low-cost unmanned aerial vehicle system. Plant Methods 2019, 15, 17.

56. Gong, Y.; Yang, K.; Lin, Z.; Fang, S.; Wu, X.; Zhu, R.; Peng, Y. Remote estimation of leaf area index (LAI) with unmanned aerial vehicle (UAV) imaging for different rice cultivars throughout the entire growing season. Plant Methods 2021, 17, 88.

57. Yue, J.; Yang, G.; Tian, Q.; Feng, H.; Xu, K.; Zhou, C. Estimate of winter-wheat above-ground biomass based on UAV ultrahigh-ground-resolution image textures and vegetation indices. ISPRS J. Photogramm. Remote Sens. 2019, 150, 226–244.

| Band Name | Central Wavelength (nm) | Bandwidth (nm) |
|---|---|---|
| Blue | 450 | 16 |
| Green | 560 | 16 |
| Red | 650 | 16 |
| Red Edge (RE) | 730 | 16 |
| Near Infrared (NIR) | 840 | 26 |
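
The five bands listed above are the inputs to the multispectral vegetation indices (VIs), such as NDVI, NDVIRE, and OSAVI. The sketch below computes these indices from band reflectance using textbook forms from the cited sources. The article's exact variants are not reproduced here, so treat the formulas, the `Bands` class, and the `vegetation_indices` function as illustrative assumptions. For example, OSAVI is written in the Rondeaux et al. form, without the 1.16 multiplier that some implementations add.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Bands:
    """Reflectance (0 to 1) of the five multispectral bands (hypothetical container)."""

    blue: float
    green: float
    red: float
    red_edge: float
    nir: float


def vegetation_indices(b: Bands) -> dict[str, float]:
    """Textbook forms of the multispectral VIs (assumed, not the authors' code)."""
    re_ratio = b.nir / b.red_edge  # NIR/RE simple ratio used by MSRRE
    return {
        "DVI": b.nir - b.red,
        "NDVI": (b.nir - b.red) / (b.nir + b.red),
        "NDVIRE": (b.nir - b.red_edge) / (b.nir + b.red_edge),
        "MSRRE": (re_ratio - 1) / math.sqrt(re_ratio + 1),
        # Rondeaux et al. form: soil factor 0.16, no 1.16 multiplier
        "OSAVI": (b.nir - b.red) / (b.nir + b.red + 0.16),
        "RVI": b.nir / b.red,
        # Green, red and NIR stand in for the 550, 670 and 800 nm bands
        "MTVI1": 1.2 * (1.2 * (b.nir - b.green) - 2.5 * (b.red - b.green)),
        "IPVI": b.nir / (b.nir + b.red),
        "GRNDVI": (b.nir - (b.green + b.red)) / (b.nir + b.green + b.red),
    }
```

For example, a pixel with NIR = 0.5 and red = 0.1 gives NDVI = 0.4 / 0.6 ≈ 0.667 and RVI = 5.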

| Growth Stage | UAV Flight Date | Image Capture Altitude | Sampling Date | Number of Samples |
|---|---|---|---|---|
| Heading | 19 April | 50, 75, 100 m | 18 April, 19 April | 179 |
| Flowering | 29 April | 50, 75, 100 m | 28 April | 130 |
| Filling | 20 May | 50, 75, 100 m | 19 May, 20 May | 141 |
| Maturation | 27 May | 50, 75, 100 m | 26 May, 27 May | 107 |
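
The samples from each growth stage are divided into training and testing sets at roughly 2:1. Rounding two-thirds of each stage's count reproduces the 119/60, 87/43, 94/47, and 71/36 training/testing sizes reported with the LAI statistics. The article does not state how individual samples were assigned, so the random shuffle below is an assumption rather than the authors' procedure. `split_by_stage` is a hypothetical helper.

```python
import random

# Samples per growth stage, from the flight and sampling table above.
SAMPLES = {"Heading": 179, "Flowering": 130, "Filling": 141, "Maturation": 107}


def split_by_stage(samples, train_fraction=2 / 3, seed=0):
    """Randomly split each stage's sample indices into (train, test) lists.

    Hypothetical helper: random assignment is assumed; only the roughly
    2:1 ratio is implied by the reported counts.
    """
    rng = random.Random(seed)  # fixed seed for a reproducible split
    splits = {}
    for stage, n in samples.items():
        ids = list(range(n))
        rng.shuffle(ids)
        n_train = round(n * train_fraction)
        splits[stage] = (sorted(ids[:n_train]), sorted(ids[n_train:]))
    return splits


for stage, (train, test) in split_by_stage(SAMPLES).items():
    print(f"{stage}: {len(train)} training, {len(test)} testing")
```

Splitting within each stage, rather than over the pooled data, keeps every stage represented in both sets in the same proportion.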

| Index | Formula | Reference |
|---|---|---|
| Excess Green Index (EXG) | | [32] |
| Green Red Ratio Index (GRRI) | | [33] |
| Normalized Green-Red Difference Index (NGRDI) | | [34] |
| Visible Atmospherically Resistant Index (VARI) | | [35] |
| Green Leaf Algorithm (GLA) | | [36] |
| Red Green Blue Vegetation Index (RGBVI) | | [21] |
| Vegetativen (VEG) | | [37] |
| Difference Vegetation Index (DVI) | | [38] |
| Normalized Difference Red-Edge Vegetation Index (NDVIRE) | | [39] |
| Modified Red-Edge Simple Ratio Index (MSRRE) | | [40] |
| Optimized Soil-Adjusted Vegetation Index (OSAVI) | | [41] |
| Normalized Difference Vegetation Index (NDVI) | | [42] |
| Ratio Vegetation Index (RVI) | | [43] |
| Modified Triangular Vegetation Index-1 (MTVI1) | | [44] |
| Infrared Percentage Vegetation Index (IPVI) | | [45] |
| Green Red Normalized Difference Vegetation Index (GRNDVI) | | [46] |
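
The first seven entries in the table are color indices (CIs) that use only the RGB bands. The table gives only names and sources, so the sketch below uses commonly cited forms. It computes EXG on chromatic coordinates (each band divided by R + G + B) and applies VEG with the exponent a = 0.667. Both choices, and the function name `color_indices`, are assumptions. The study may have used raw digital numbers or other variants.

```python
def color_indices(red: float, green: float, blue: float) -> dict[str, float]:
    """Commonly cited forms of the RGB color indices (assumed variants)."""
    total = red + green + blue
    r, g, b = red / total, green / total, blue / total  # chromatic coordinates
    a = 0.667  # VEG exponent as commonly used after Hague et al. (assumed)
    return {
        "EXG": 2 * g - r - b,
        "GRRI": green / red,
        "NGRDI": (green - red) / (green + red),
        "VARI": (green - red) / (green + red - blue),
        "GLA": (2 * green - red - blue) / (2 * green + red + blue),
        "RGBVI": (green**2 - blue * red) / (green**2 + blue * red),
        "VEG": green / (red**a * blue ** (1 - a)),
    }
```

For example, a pixel with R = 0.2, G = 0.4, B = 0.2 gives EXG = 0.5, NGRDI = 1/3, and RGBVI = 0.6.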

| Growth Stage | Training Number | Training Range | Training Mean | Training STD | Training CV (%) | Testing Number | Testing Range | Testing Mean | Testing STD | Testing CV (%) |
|---|---|---|---|---|---|---|---|---|---|---|
| Heading | 119 | 2.22–7.37 | 4.06 | 1.01 | 24.91 | 60 | 2.47–6.62 | 4.31 | 1.08 | 25.14 |
| Flowering | 87 | 1.84–5.45 | 3.45 | 0.84 | 24.26 | 43 | 2.14–5.19 | 3.36 | 0.72 | 21.52 |
| Filling | 94 | 1.43–6.20 | 3.25 | 1.07 | 33.05 | 47 | 1.59–5.76 | 3.31 | 1.02 | 30.86 |
| Maturation | 71 | 1.71–4.97 | 3.20 | 0.79 | 24.67 | 36 | 1.74–5.47 | 3.30 | 0.86 | 25.98 |
| Entire growth | 371 | 1.43–7.37 | 3.56 | 1.05 | 29.38 | 186 | 1.59–6.09 | 3.61 | 1.00 | 27.78 |
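
The range, mean, STD, and CV columns can be recomputed from the raw LAI samples of each split as shown below. CV is STD divided by the mean, expressed in percent. For the heading-stage training set, 1.01 / 4.06 × 100 ≈ 24.9, which agrees with the tabulated 24.91 once you allow for the rounding of the mean and STD. The article does not say whether a sample (n − 1) or population STD was used, so the sketch assumes the sample form. `describe` is a hypothetical helper name.

```python
import statistics


def describe(lai: list[float]) -> dict:
    """Summary statistics as tabulated: number, range, mean, STD and CV (%)."""
    mean = statistics.mean(lai)
    std = statistics.stdev(lai)  # sample STD (n - 1); an assumption
    return {
        "number": len(lai),
        "range": (min(lai), max(lai)),
        "mean": mean,
        "std": std,
        "cv_percent": 100 * std / mean,
    }
```

CV is unitless, so it allows stages with different mean LAI to be compared directly. The filling stage, for example, is the most variable in both splits.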

| Data Type | Flight Altitude (m) | Spatial Resolution (cm) | Data Size (MB ha⁻¹) |
|---|---|---|---|
| Multispectral | 100 | 5.4 | 77.148 |
| Multispectral | 75 | 4.0 | 120.215 |
| Multispectral | 50 | 2.7 | 306.738 |
| RGB | 100 | 5.4 | 11.621 |
| RGB | 75 | 4.0 | 18.066 |
| RGB | 50 | 2.7 | 46.094 |
| CHM | 100 | 10.8 | 3.906 |
| CHM | 75 | 8.0 | 5.566 |
| CHM | 50 | 5.4 | 12.400 |
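
Two patterns in the table follow from simple geometry. First, the image resolution scales linearly with altitude: 2.7, 4.0, and 5.4 cm at 50, 75, and 100 m, or about 0.054 cm per meter. The CHM cells are twice as coarse. Second, the pixel count per hectare scales with the inverse square of the cell size, so halving the cell size roughly quadruples the data volume. The multispectral data grow from 77.148 to 306.738 MB ha⁻¹, about 3.98 times. The sketch below estimates uncompressed raster size under these assumptions. The `bands` and `bytes_per_sample` arguments and the 5-band float32 layout are hypothetical, and real files (headers, compression, tiling) will not reproduce the measured values exactly.

```python
CM2_PER_HECTARE = 1e8  # 1 ha = 10,000 m^2 = 10^8 cm^2


def gsd_cm(altitude_m: float, cm_per_m: float = 0.054) -> float:
    """Ground resolution, assuming linear scaling with flight altitude.

    The default 0.054 cm per meter is inferred from 2.7 cm at 50 m.
    """
    return altitude_m * cm_per_m


def raw_size_mb_per_ha(gsd: float, bands: int, bytes_per_sample: int) -> float:
    """Uncompressed raster size in MB (10**6 bytes) per hectare."""
    pixels = CM2_PER_HECTARE / gsd**2
    return pixels * bands * bytes_per_sample / 1e6


# Hypothetical layout: 5 bands stored as 32-bit floats (4 bytes each).
for altitude in (100, 75, 50):
    gsd = gsd_cm(altitude)
    print(altitude, round(gsd, 2), round(raw_size_mb_per_ha(gsd, 5, 4), 1))
```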

| Growth Period (50 m) | Method | Metric | CHM | CIs | CIs + CHM | VIs | VIs + CHM |
|---|---|---|---|---|---|---|---|
| Entire growth | PLSR | R² | 0.097 | 0.430 | 0.588 | 0.707 | 0.753 |
| | | RMSE | 0.952 | 0.757 | 0.644 | 0.542 | 0.498 |
| | RFR | R² | 0.045 | 0.358 | 0.644 | 0.711 | 0.776 |
| | | RMSE | 0.980 | 0.803 | 0.598 | 0.539 | 0.475 |
| | SVR | R² | 0.147 | 0.451 | 0.652 | 0.748 | 0.768 |
| | | RMSE | 0.926 | 0.742 | 0.591 | 0.503 | 0.483 |
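
The table compares feature sets by R² and RMSE, where RMSE is in LAI units. A minimal sketch of both metrics follows. It assumes R² is the coefficient of determination, 1 − SS_res/SS_tot, which is how scikit-learn's `r2_score` defines it. Some studies instead report the squared Pearson correlation between observed and predicted values, and the article's choice is not stated. `rmse` and `r_squared` are hypothetical helper names.

```python
import math


def _check(observed, predicted):
    """Reject empty or mismatched inputs."""
    if len(observed) != len(predicted) or not observed:
        raise ValueError("observed and predicted must be non-empty and equal length")


def rmse(observed: list[float], predicted: list[float]) -> float:
    """Root-mean-square error, in the units of LAI."""
    _check(observed, predicted)
    sse = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    return math.sqrt(sse / len(observed))


def r_squared(observed: list[float], predicted: list[float]) -> float:
    """Coefficient of determination, 1 - SS_res / SS_tot (assumed definition)."""
    _check(observed, predicted)
    mean_obs = sum(observed) / len(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    return 1 - ss_res / ss_tot
```

Under this definition, R² becomes negative for a model that predicts worse than the observed mean, whereas a squared correlation never does. This matters when reading low values such as those for CHM alone.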

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, X.; Zhang, K.; Wu, S.; Shi, H.; Sun, Y.; Zhao, Y.; Fu, E.; Chen, S.; Bian, C.; Ban, W. An Investigation of Winter Wheat Leaf Area Index Fitting Model Using Spectral and Canopy Height Model Data from Unmanned Aerial Vehicle Imagery. Remote Sens. 2022, 14, 5087. https://doi.org/10.3390/rs14205087

Zhang X, Zhang K, Wu S, Shi H, Sun Y, Zhao Y, Fu E, Chen S, Bian C, Ban W. An Investigation of Winter Wheat Leaf Area Index Fitting Model Using Spectral and Canopy Height Model Data from Unmanned Aerial Vehicle Imagery. Remote Sensing. 2022; 14(20):5087. https://doi.org/10.3390/rs14205087

Chicago/Turabian Style: Zhang, Xuewei, Kefei Zhang, Suqin Wu, Hongtao Shi, Yaqin Sun, Yindi Zhao, Erjiang Fu, Shuo Chen, Chaofa Bian, and Wei Ban. 2022. "An Investigation of Winter Wheat Leaf Area Index Fitting Model Using Spectral and Canopy Height Model Data from Unmanned Aerial Vehicle Imagery" Remote Sensing 14, no. 20: 5087. https://doi.org/10.3390/rs14205087

APA Style: Zhang, X., Zhang, K., Wu, S., Shi, H., Sun, Y., Zhao, Y., Fu, E., Chen, S., Bian, C., & Ban, W. (2022). An Investigation of Winter Wheat Leaf Area Index Fitting Model Using Spectral and Canopy Height Model Data from Unmanned Aerial Vehicle Imagery. Remote Sensing, 14(20), 5087. https://doi.org/10.3390/rs14205087