Spiral Search Grasshopper Features Selection with VGG19-ResNet50 for Remote Sensing Object Detection

Abstract

1. Introduction

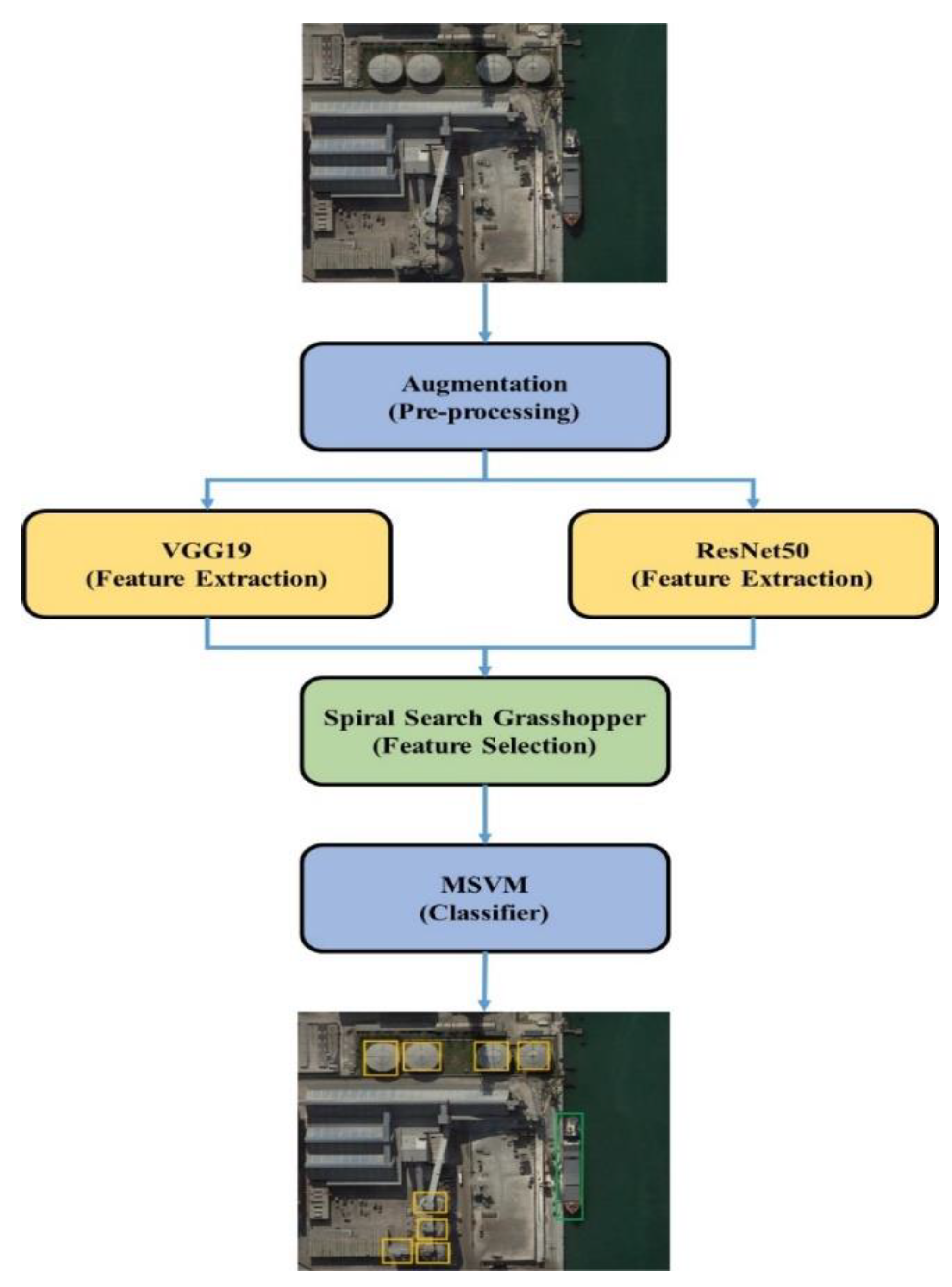

- The SSG method uses a spiral search technique to strengthen exploitation during feature selection for object detection. By selecting unique features, it helps mitigate data imbalance and overfitting.

- The VGG-19 and ResNet50 models are applied for feature extraction to obtain a better representation of the objects in the images. The SSG method then selects the relevant features, which helps classify small objects in the dataset.

- The SSG method is evaluated on two datasets (DOTA and DIOR) and compared with various feature selection and deep learning techniques. It achieves higher performance than existing methods in remote sensing object classification.
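The pipeline outlined in the contributions above (deep features from two backbones, followed by feature selection) can be sketched in a few lines. The sketch assumes that the VGG-19 and ResNet50 descriptors are fused by simple concatenation and that the selector outputs a binary mask. The function names `fuse_features` and `apply_mask` and the toy values are illustrative, not taken from the paper.

```python
from typing import List, Sequence


def fuse_features(vgg_feats: Sequence[float], resnet_feats: Sequence[float]) -> List[float]:
    """Concatenate two per-image descriptors (assumed fusion strategy)."""
    return list(vgg_feats) + list(resnet_feats)


def apply_mask(features: Sequence[float], mask: Sequence[int]) -> List[float]:
    """Keep only the features whose mask bit is 1."""
    if len(features) != len(mask):
        raise ValueError("mask and feature vector must have the same length")
    return [f for f, keep in zip(features, mask) if keep]


# Toy descriptors standing in for real network outputs (hypothetical values).
vgg = [0.2, 0.9, 0.1]
resnet = [0.7, 0.4]

fused = fuse_features(vgg, resnet)
mask = [1, 0, 0, 1, 1]  # a mask such as a feature selector might produce
selected = apply_mask(fused, mask)
print(selected)
```

In a full system the selected features would then be passed to the classifier; here the mask is fixed by hand only to show the data flow.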

2. Literature Survey

3. Proposed Method

3.1. Data Pre-Processing

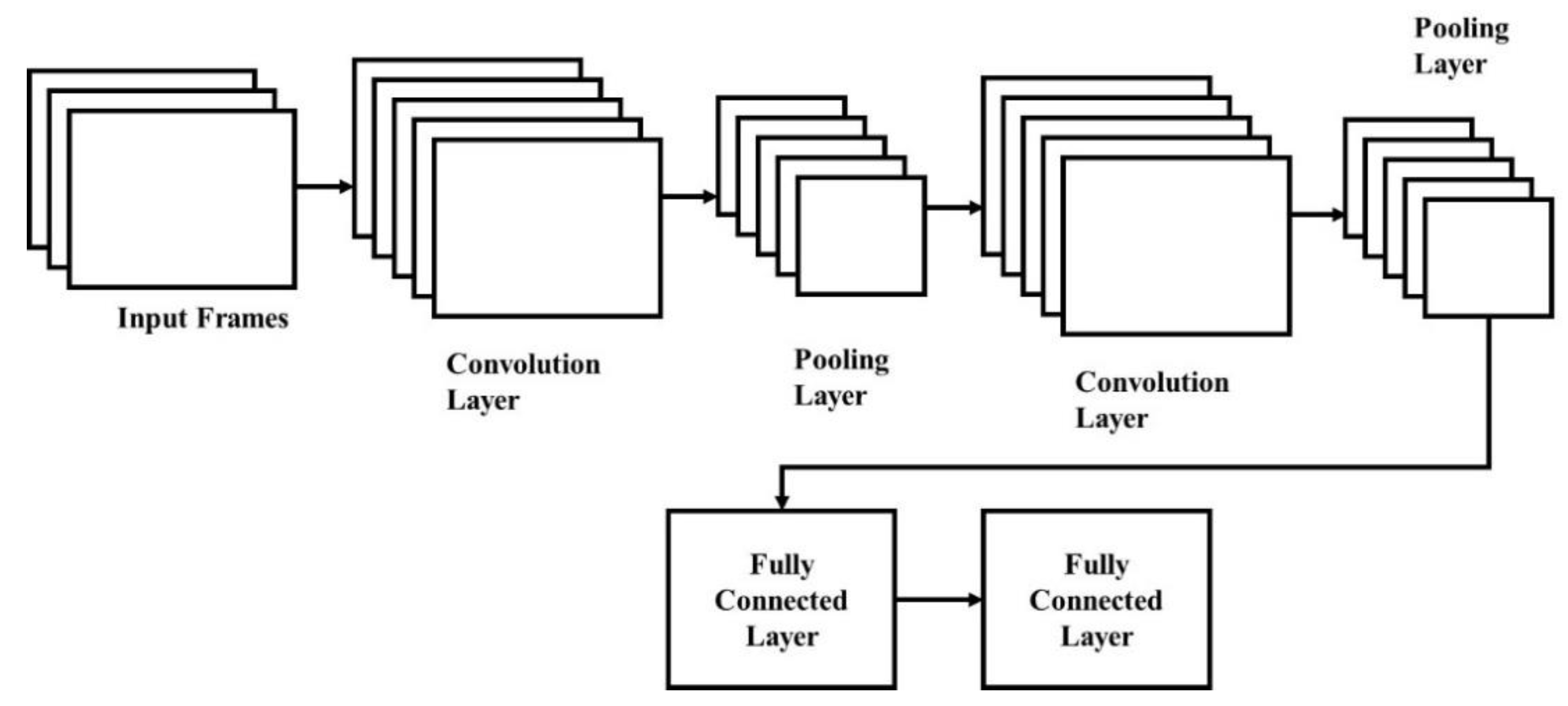

3.2. CNN Models in Feature Extraction

3.2.1. VGG19

3.2.2. ResNet

3.3. Spiral Search Grasshopper Optimization

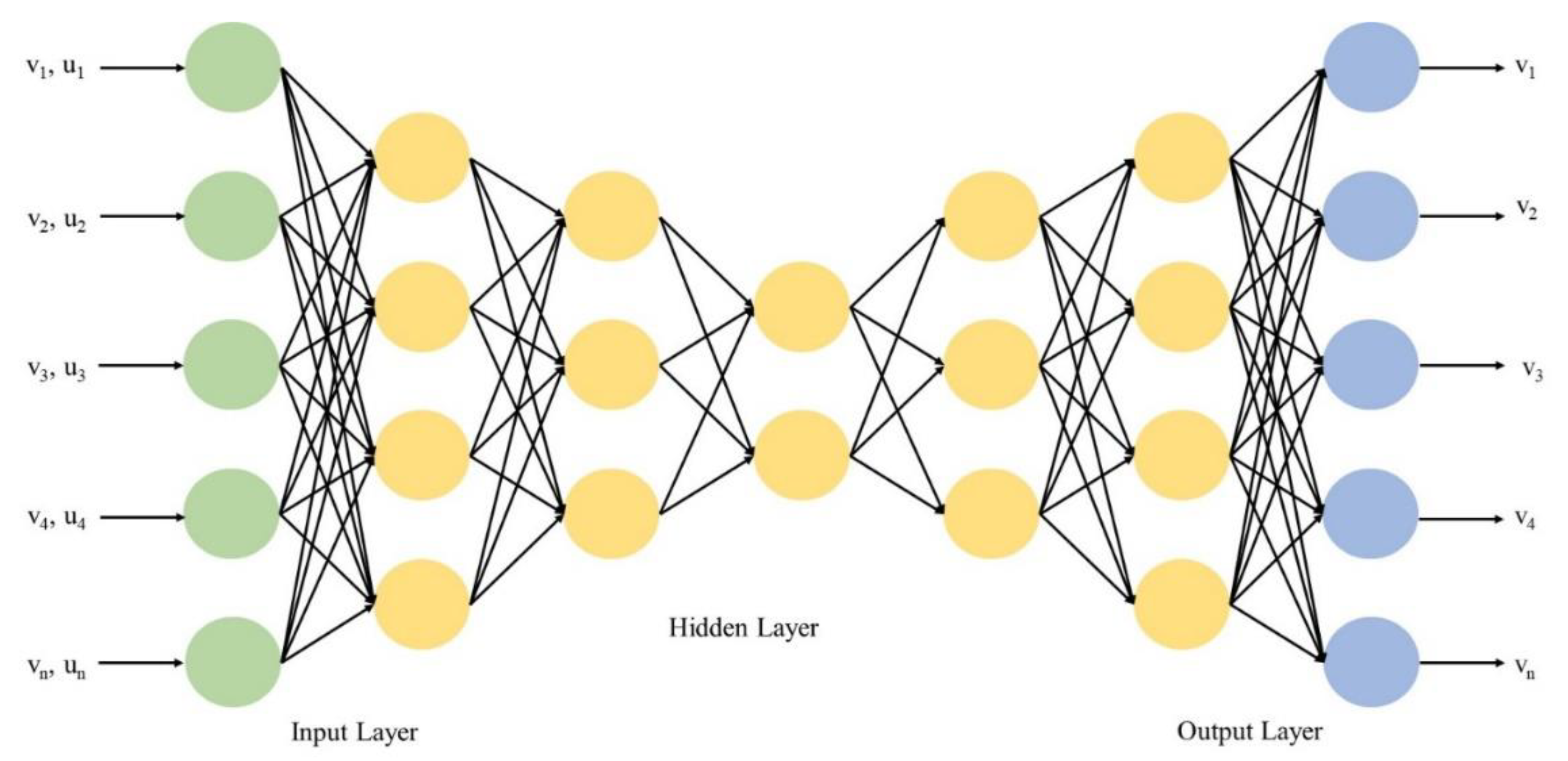
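A minimal sketch of one spiral search step, assuming the logarithmic-spiral update commonly used in swarm optimizers (a candidate moves around the best solution found so far) and a sigmoid threshold that turns the continuous position into a feature mask. The exact update equations of the SSG method may differ. `spiral_step`, `to_mask`, and the parameters `b` and `l` are hypothetical names chosen for illustration.

```python
import math
import random
from typing import List, Optional, Sequence


def spiral_step(position: Sequence[float], best: Sequence[float],
                l: Optional[float] = None, b: float = 1.0) -> List[float]:
    """Move a candidate along a logarithmic spiral around the best solution.

    l is the spiral parameter in [-1, 1]; it is drawn at random when omitted.
    b controls the spiral shape. Both are assumptions of this sketch.
    """
    if l is None:
        l = random.uniform(-1.0, 1.0)
    scale = math.exp(b * l) * math.cos(2.0 * math.pi * l)
    # Distance to the best solution, scaled by the spiral factor, then
    # re-centred on the best solution.
    return [abs(g - x) * scale + g for x, g in zip(position, best)]


def to_mask(position: Sequence[float]) -> List[int]:
    """Select a feature when the sigmoid of its coordinate exceeds 0.5."""
    mask = []
    for x in position:
        x = max(-60.0, min(60.0, x))  # clamp to avoid overflow in exp
        prob = 1.0 / (1.0 + math.exp(-x))
        mask.append(1 if prob > 0.5 else 0)
    return mask


best = [1.0, 1.0, -0.5]
candidate = [0.0, 2.0, 0.5]
moved = spiral_step(candidate, best, l=0.0)
print(moved, to_mask(moved))
```

Because the step is centred on the best solution, a candidate that already coincides with it does not move, which is the exploitation behaviour a spiral search is meant to provide.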

3.4. Multi-Class Support Vector Machine

4. Simulation Setup

5. Results

5.1. DOTA Dataset

5.2. DIOR Dataset

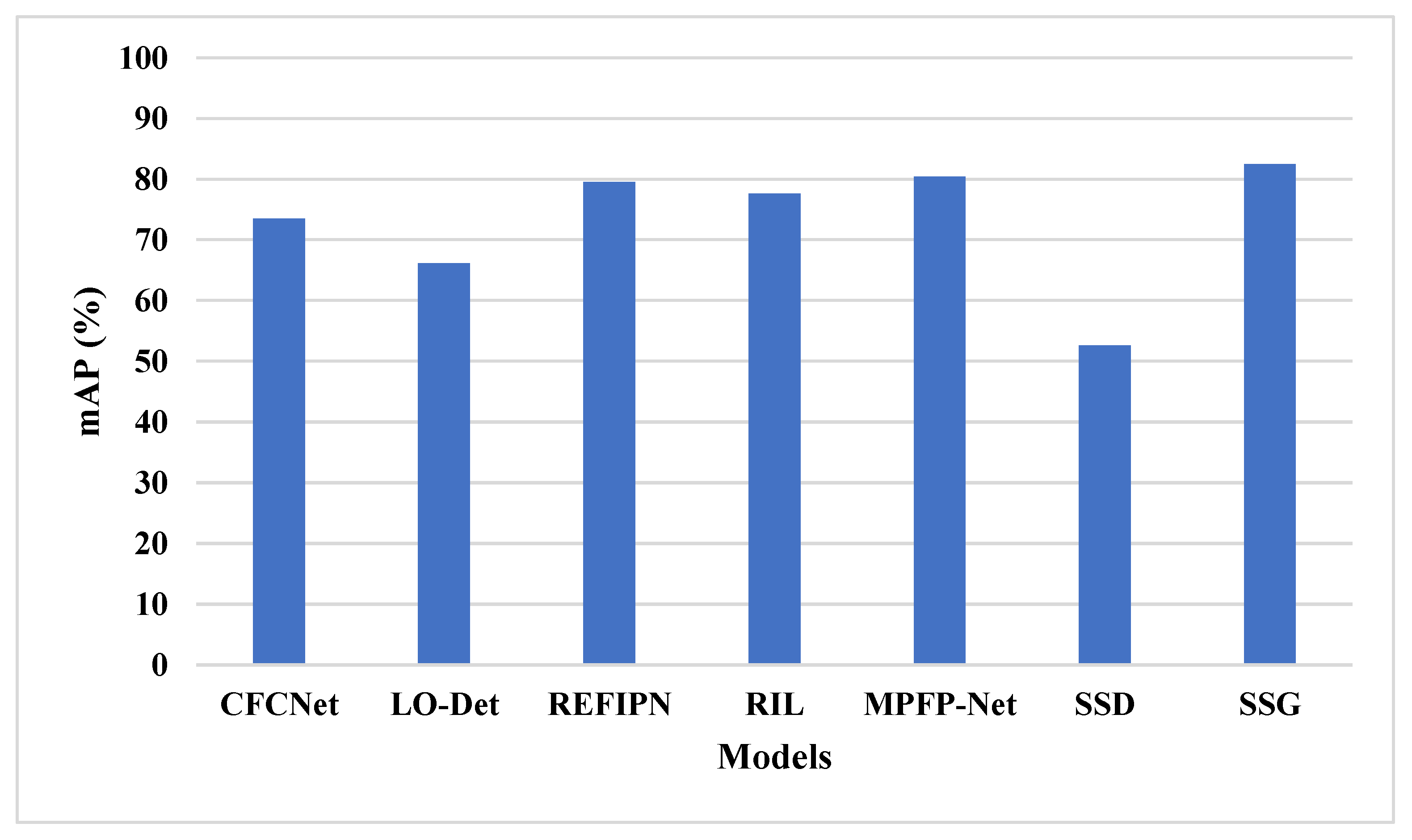

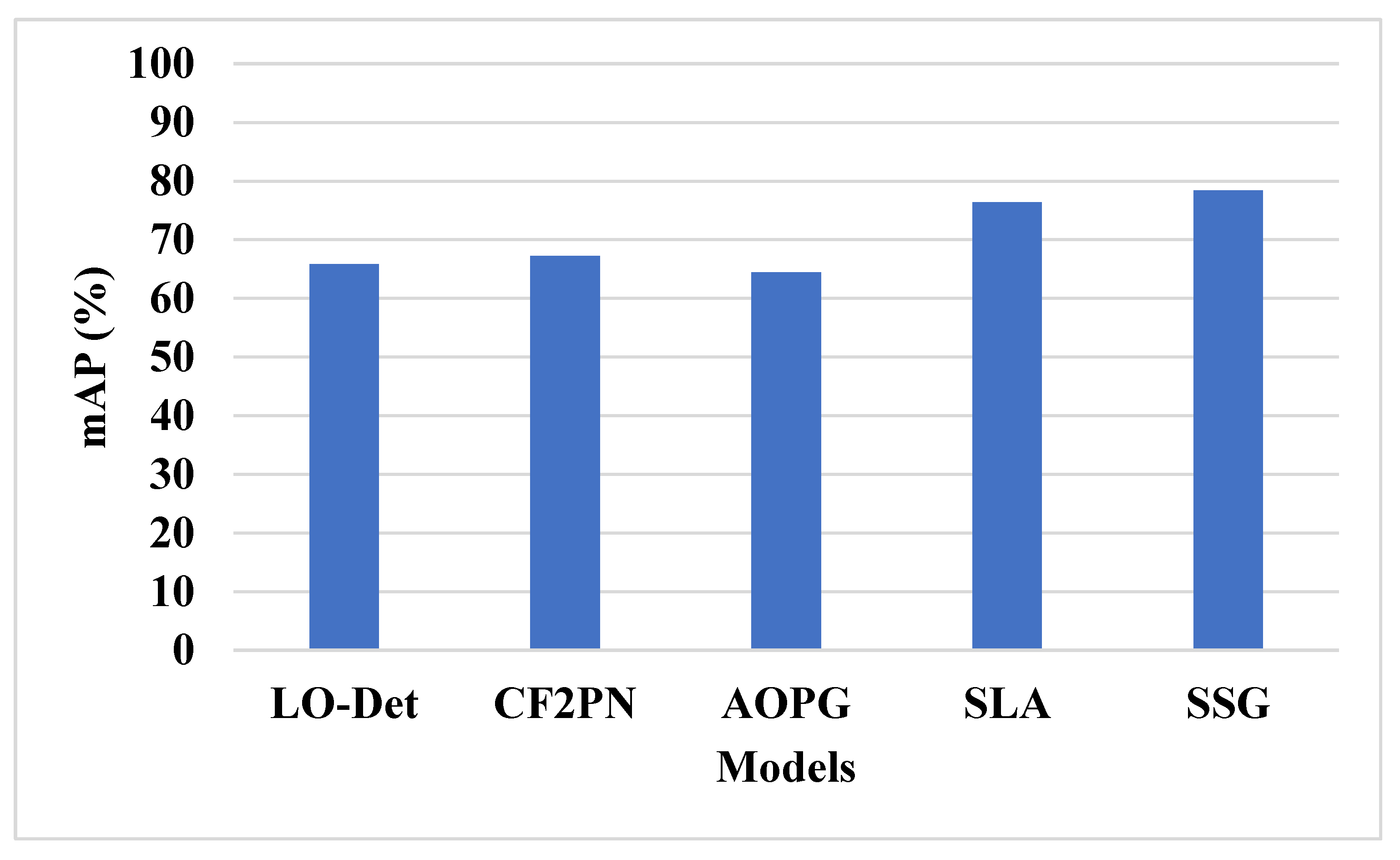

5.3. Comparative Analysis

5.4. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


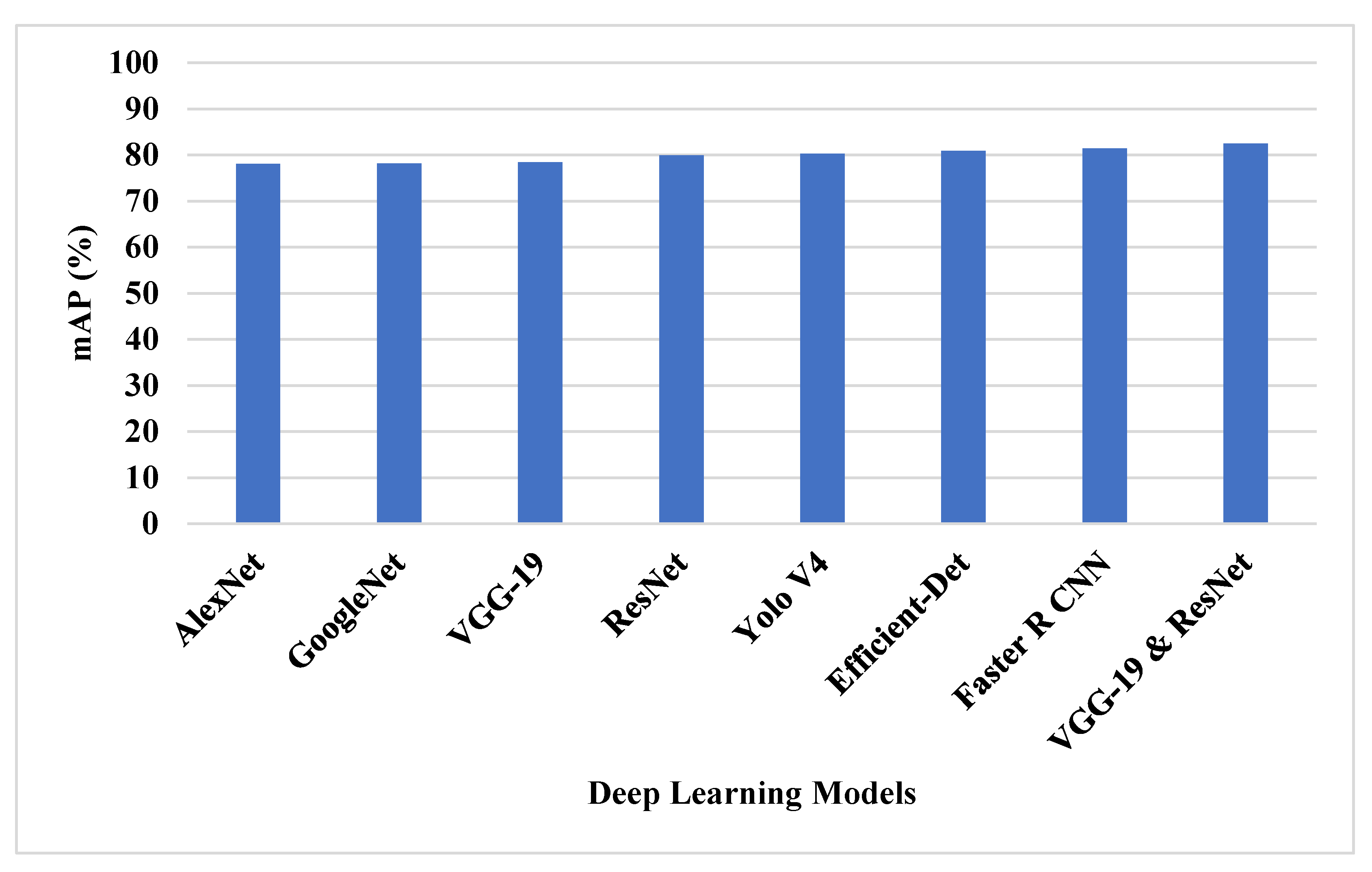

| Methods | mAP (%) |
|---|---|
| AlexNet | 78.09 |
| GoogLeNet | 78.2 |
| VGG-19 | 78.41 |
| ResNet | 79.97 |
| YOLOv4 | 80.27 |
| EfficientDet | 80.93 |
| Faster R-CNN | 81.47 |
| VGG-19 and ResNet | 82.45 |

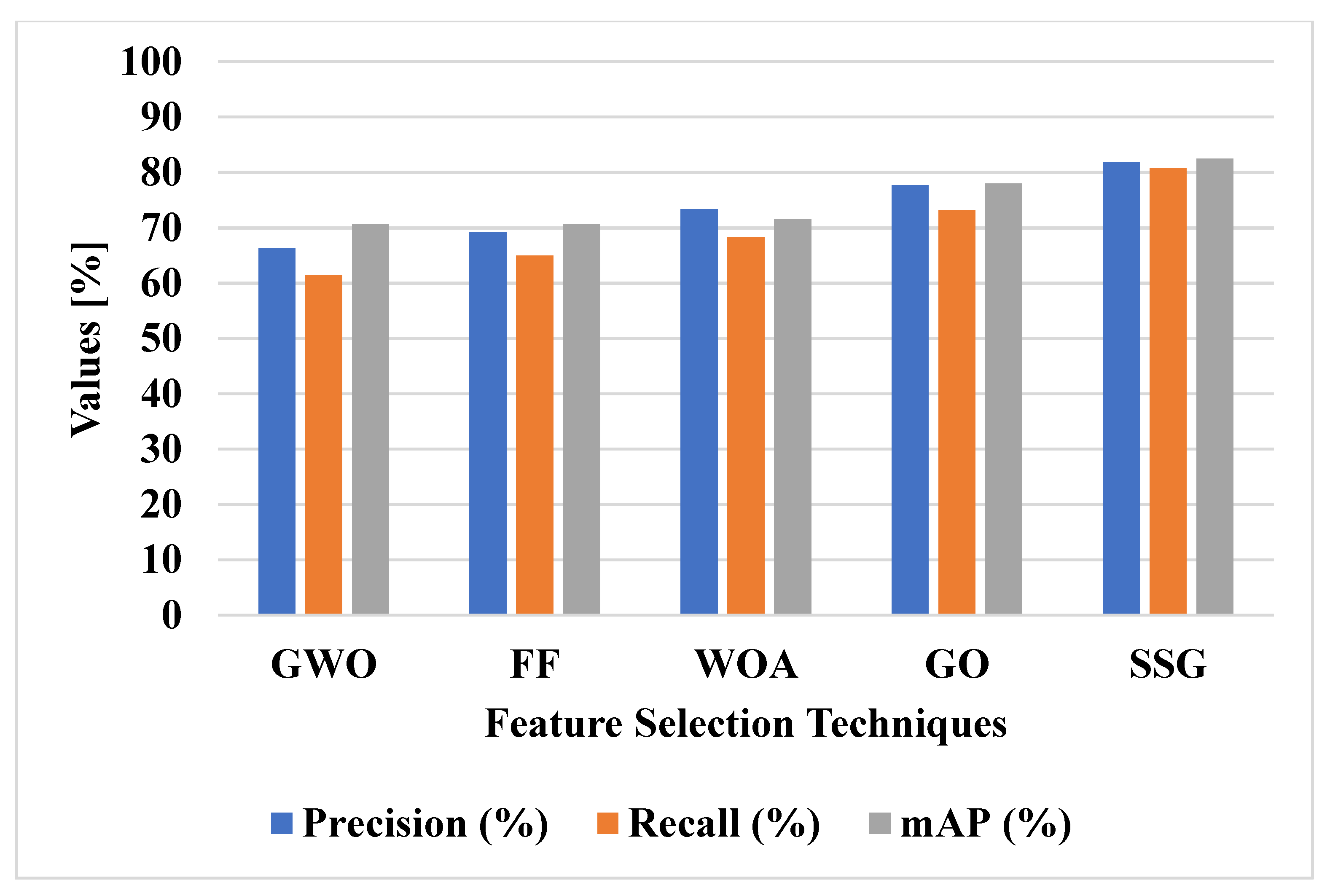

| Methods | Precision (%) | Recall (%) | mAP (%) |
|---|---|---|---|
| GWO | 66.33 | 61.52 | 70.6 |
| FF | 69.12 | 64.94 | 70.62 |
| WOA | 73.31 | 68.28 | 71.57 |
| GO | 77.64 | 73.14 | 77.95 |
| SSG | 81.88 | 80.77 | 82.45 |

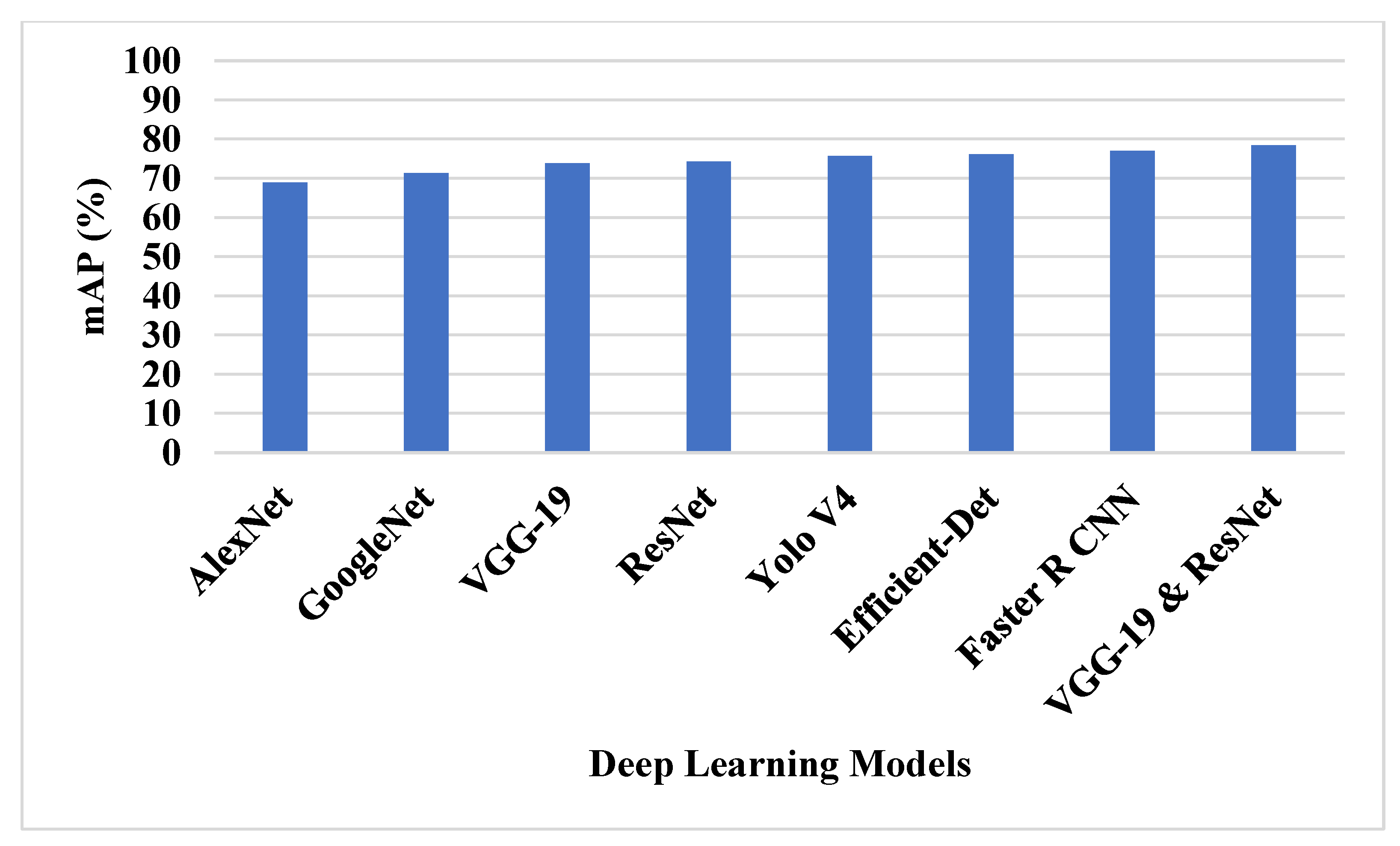

| Methods | mAP (%) |
|---|---|
| AlexNet | 68.91 |
| GoogLeNet | 71.33 |
| VGG-19 | 73.81 |
| ResNet | 74.29 |
| YOLOv4 | 75.71 |
| EfficientDet | 76.13 |
| Faster R-CNN | 76.99 |
| VGG-19 and ResNet | 78.42 |

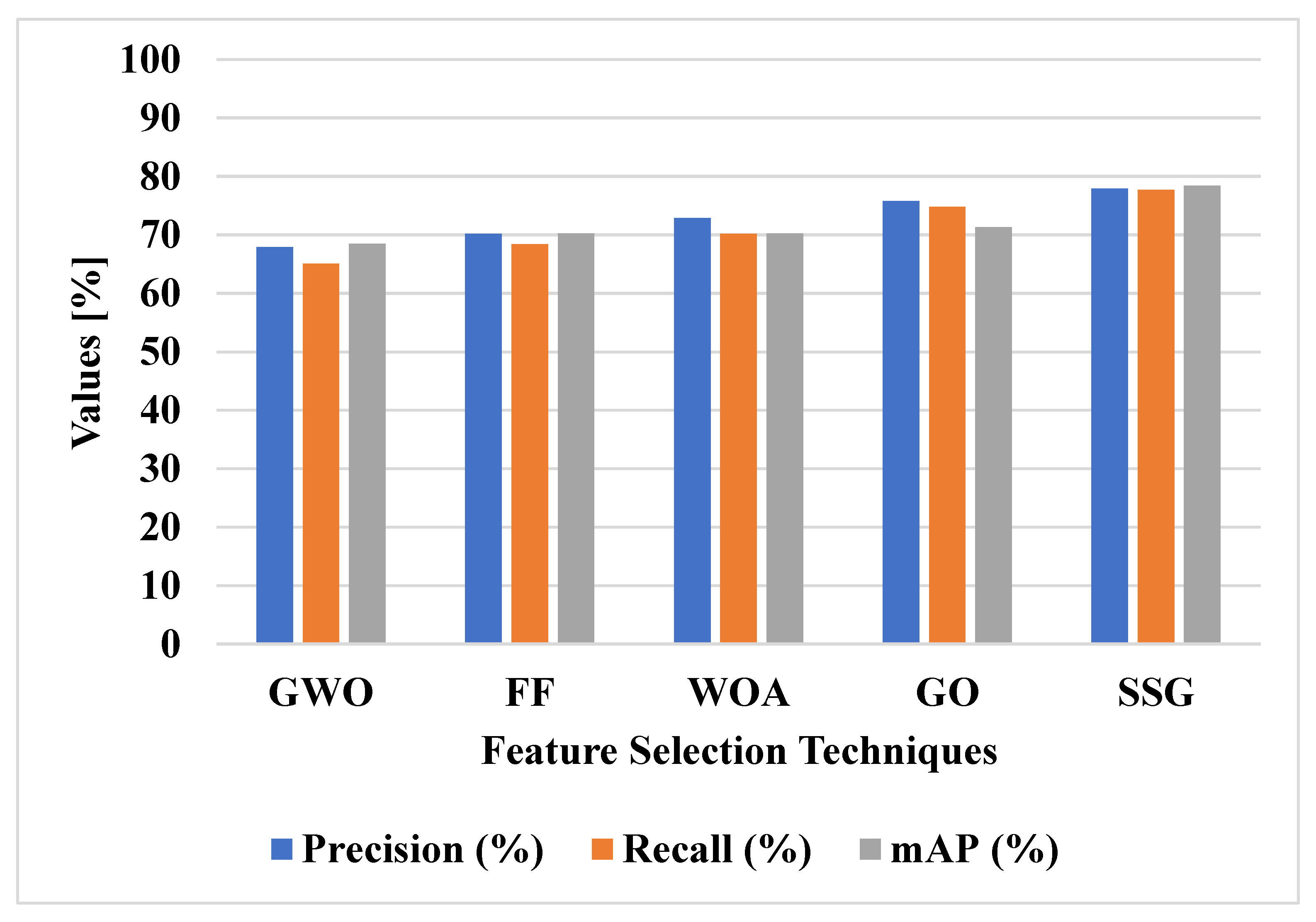

| Methods | Precision (%) | Recall (%) | mAP (%) |
|---|---|---|---|
| GWO | 67.91 | 65.08 | 68.49 |
| FF | 70.22 | 68.44 | 70.25 |
| WOA | 72.93 | 70.23 | 70.3 |
| GO | 75.82 | 74.85 | 71.33 |
| SSG | 77.98 | 77.74 | 78.42 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Stateczny, A.; Uday Kiran, G.; Bindu, G.; Ravi Chythanya, K.; Ayyappa Swamy, K. Spiral Search Grasshopper Features Selection with VGG19-ResNet50 for Remote Sensing Object Detection. Remote Sens. 2022, 14, 5398. https://doi.org/10.3390/rs14215398
