1. Introduction

Owing to advances in unmanned aerial vehicle (UAV) technologies, images captured by UAVs are cost- and time-effective. In addition, they can provide high-quality geographic data and information from a low flight altitude. These advantages make UAV images an increasingly popular medium for many applications [1,2,3,4,5,6], such as disaster assessment, construction site monitoring, building change detection, military applications, and heating requirement determination for frost management. However, the area that one UAV image can cover is limited by the flight altitude. Therefore, to extend the area that UAV images can cover, the stitching of UAV images has received extensive attention.

To construct a stitched image, several seam cutting-based methods [7,8,9,10,11,12] have been developed. They typically comprise an image registration step, a seam searching step, and a color blending step. In the image registration step, feature points [13] are first extracted from the source image and the target image to establish the correspondence [14,15,16,17] between the two images; then, according to the established correspondence, a proper perspective transform is applied to the target image so that the source image and the transformed target image are aligned as well as possible. After that, the overlapping area of the two images can be determined. The seam searching step determines the best stitching line, which separates the overlapping area of the source image and the target image into two disjoint parts.

The stitching line thus yields two disjoint pixel sets, one on the source-image side and one on the target-image side. The color inconsistency problem is usually caused by differences in exposure time, atmospheric illumination, and capture time between the source image and the target image, and it makes the stitched image visually unpleasant. In this study, we focus on the color consistency correction problem for the target pixels on the target-image side of the stitching line, so that the stitched image has good subjective and objective quality. Note that each stitching target pixel on the stitching line itself is updated simply by adding the corresponding stitching color difference to its color. In the next subsection, the classical representative works in the color consistency correction area, as well as their advantages and shortcomings, are introduced.

1.1. Related Works

Brown and Lowe [18] proposed a multi-band blending (MBB) method that corrects low-frequency color over a large spatial range and high-frequency color over a short spatial range. Their method can produce a smooth color transition across the warped overlapping area of the source image and the target image, but it cannot effectively solve the color inconsistency problem in the part of the target image outside this overlapping area, that is, in the set difference between the target image and the overlapping area. Fecker et al. [19] proposed a histogram matching-based (HM-based) method to correct color between the source image and the target image. They first built the cumulative histograms of the two images, and then derived a mapping function to correct the color of each target pixel. Although the HM-based method is very fast, the cumulative histograms it uses ignore pixel positions, which limits the color correction effect.
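The histogram matching idea described above can be sketched for one color channel as follows. This is a minimal illustration of generic cumulative-histogram matching, not the implementation of [19]; the function names and the 256-level assumption are ours.

```python
def cumulative_histogram(values, levels=256):
    """Return the normalized cumulative histogram of integer values in [0, levels)."""
    hist = [0] * levels
    for v in values:
        hist[v] += 1
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running / len(values))
    return cdf


def build_mapping(source_values, target_values, levels=256):
    """Map each target level to the smallest source level whose cumulative
    frequency is at least that of the target level."""
    src_cdf = cumulative_histogram(source_values, levels)
    tgt_cdf = cumulative_histogram(target_values, levels)
    mapping, s = [], 0
    for t in range(levels):
        # Both cumulative histograms are non-decreasing, so s never moves back.
        while s < levels - 1 and src_cdf[s] < tgt_cdf[t]:
            s += 1
        mapping.append(s)
    return mapping


def match_histogram(source_values, target_values, levels=256):
    """Correct every target value with the level mapping (positions are ignored)."""
    mapping = build_mapping(source_values, target_values, levels)
    return [mapping[v] for v in target_values]
```

Because the mapping depends only on intensity levels, two target pixels with the same value always receive the same correction regardless of where they lie, which is the limitation noted above.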

Xiong and Pulli [20] proposed a two-step approach to correct color. They first applied a gamma function to modify the luminance component of the target pixels, and then applied a linear correction function to modify the chrominance components. Based on a parameterized spline curve for each image, Xia et al. [21] proposed a gradient preservation-based (GP-based) color correction method. Using the convex quadratic programming technique, they derived a closed form to model the color correction problem by considering the visual quality of the source image and the target image as well as the global color consistency. To enhance the accuracy of the extracted color correspondence, the gradient and color features of the possibly altered objects were utilized. However, the single-channel optimization strategy used in the GP-based method is unable to solve the white balance problem. Later, based on a spline curve remapping function and the structure from motion technique, Yang et al. [22] proposed a global color correction method for large-scale image sets in three-dimensional reconstruction applications.

Fang et al. [23] found that, in the stitched image, the stitching pixel set information on the stitching line is useful for color blending, but this information is ignored in the above-mentioned color blending methods. Utilizing all color difference values on the stitching line globally for each target pixel, Fang et al. proposed a global joint bilateral interpolation-based (GJBI-based) color blending method. Based on several testing stitched images, each with well-aligned stitching pixels, their experimental results demonstrated the visual quality superiority of their method over the MBB method, the HM-based method, Xiong and Pulli’s method, and the GP-based method.

In parallel with the above color correction methods for stitched images, several color correction methods for multiple images have been developed, including the joint global and local color consistency approach [24], the combined model color correction approach [25], and the contrast-aware color consistency approach [26].

1.2. Motivation

The GJBI-based method [23] achieved good visual quality for well-stitched images, in which nearly all stitching pixels are aligned and free of parallax distortion. In practical situations, a stitched image often contains not only aligned stitching pixels but also misaligned ones. From the experimental data, we found that the GJBI-based method tends to suffer from a perceptual artifact near the misaligned stitching pixel set, in which each stitching color difference between the source pixel and the target pixel becomes conspicuous due to parallax distortion.

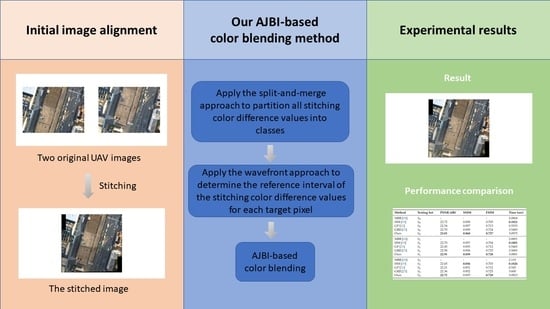

The above perceptual artifact occurring in the GJBI-based method motivated us to propose a new adaptive joint bilateral interpolation-based (AJBI-based) color blending method, such that instead of referring to all stitching color difference values on the stitching line globally, each target pixel only adaptively refers to an adequate interval of stitching color difference values locally, achieving a better subjective quality effect near the misaligned stitching pixels and a higher objective quality benefit.

1.3. Contributions

In this paper, we propose an AJBI-based color blending method to correct the color for the stitched UAV images. The contributions of the proposed method are clarified below.

We first propose a split-and-merge approach to classify all stitching color difference values into one aligned class or two classes, namely the aligned class corresponding to aligned stitching pixels and the misaligned class corresponding to misaligned stitching pixels. Next, we propose a wavefront approach to determine the adequate reference interval of the stitching color difference values, which will be used for correcting the color of each target pixel;

To remedy the perceptual artifact near the misaligned stitching pixels, instead of using all stitching color difference values globally, we propose an AJBI-based method to correct the color of each target pixel by using the determined reference stitching color difference values locally. It is notable that, in the Gaussian function used in our method for correcting the color of each target pixel, the color variance parameter setting (see Equation (6)) adaptively depends on the ratio of the number of reference misaligned stitching color differences to the number of all reference stitching color difference values;

Based on several tests of UAV stitched images under different misalignment and brightness situations, the comprehensive experimental results justify that, in terms of the three objective quality metrics, namely PSNR (peak signal-to-noise ratio), SSIM (structural similarity index) [27], and FSIM (feature similarity index) [28], the proposed AJBI-based color blending method achieves higher objective quality when compared with the state-of-the-art methods [18,19,21,23]. In addition, relative to these comparative methods, the proposed color blending method also achieves better perceptual effects, particularly near the misaligned stitching pixels.

The rest of this paper is organized as follows. In Section 2, the proposed split-and-merge approach to classify all stitching color difference values into one aligned class or two different classes is presented. In Section 3, the proposed AJBI-based color blending method is presented. In Section 4, thorough experiments are carried out to justify the better color consistency correction merit of the proposed method. In Section 5, the conclusions and future work are addressed.

2. The Classification of Stitching Color Difference Values

In this section, we first take one real stitched image example to define the stitching color difference values on the stitching line, and then the proposed split-and-merge approach is presented to partition all stitching color difference values into one aligned class or two classes, namely the aligned class and the misaligned class. Based on the same stitched image example, the partition result using the proposed split-and-merge approach is also provided. To help clarify the visual understanding of the proposed approach, a flowchart is also provided.

Given the source image and the target image in Figure 1a, after performing Yuan et al.’s superpixel- and graph cut-based method [10] on Figure 1a, Figure 1b shows the resultant stitched image, where the stitching line is marked in red and the overlapping area between the source image and the target image is enclosed by a green quadrilateral. On the stitching line with n pixel-pairs, namely ($p_i^s$, $p_i^t$) for $1 \le i \le n$, $p_i^s$ and $p_i^t$ denote the ith stitching source pixel and target pixel, respectively. The stitching color difference value between $p_i^s$ and $p_i^t$ is defined by

$$D(i) = C(p_i^s) - C(p_i^t)$$

where $C(p_i^s)$ and $C(p_i^t)$ denote the color values of $p_i^s$ and $p_i^t$, respectively.

On the stitching line, let $|D_c(i)|$ denote the absolute stitching c-color difference value between the ith stitching source pixel $p_i^s$ and target pixel $p_i^t$; it is defined by

$$|D_c(i)| = |C_c(p_i^s) - C_c(p_i^t)|$$

where $C_c(p_i^s)$ and $C_c(p_i^t)$ denote the c-color, c ∈ {R, G, B}, values of $p_i^s$ and $p_i^t$, respectively. For the stitching line in Figure 1b, the three distributions of $|D_R(i)|$, $|D_G(i)|$, and $|D_B(i)|$ are shown in Figure 2a, Figure 2b, and Figure 2c, respectively. From Figure 2a–c, we can observe that the three distributions of all absolute stitching c-color difference values tend to form two classes, namely the aligned class and the misaligned class.
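As a small illustration of these definitions, the sketch below computes the stitching color differences along a stitching line and their absolute per-channel values. It assumes the difference is taken as source minus target, so that adding it to a stitching target pixel reproduces the source color, and it represents each pixel as an (R, G, B) tuple; the function names are hypothetical.

```python
def stitching_color_differences(source_colors, target_colors):
    """Per-pair color difference (assumed here to be source minus target)."""
    if len(source_colors) != len(target_colors):
        raise ValueError("the stitching line needs one target pixel per source pixel")
    return [tuple(s - t for s, t in zip(sc, tc))
            for sc, tc in zip(source_colors, target_colors)]


def absolute_channel_differences(differences, channel):
    """Absolute c-color differences along the line; channel 0, 1, 2 = R, G, B."""
    return [abs(d[channel]) for d in differences]


def correct_stitching_target_pixels(target_colors, differences):
    """Update each stitching target pixel by adding its own color difference."""
    return [tuple(t + d for t, d in zip(tc, dc))
            for tc, dc in zip(target_colors, differences)]
```

With this sign convention, the corrected stitching target pixels coincide with the stitching source pixels, so the seam itself shows no color jump.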

We propose a split-and-merge approach to partition all stitching c-color, c ∈ {R, G, B}, difference values into two classes, namely the aligned class and the misaligned class, or into one aligned class. In most cases, the stitching color difference values on the stitching line are partitioned into two classes. In rare cases, however, the distribution of all absolute stitching c-color difference values forms only one aligned class. A flowchart of the proposed split-and-merge approach is depicted in Figure 3.

Setting K = 2, we first adopt Lloyd’s K-means clustering process [29] to split all absolute stitching color difference values, which constitute the feature space used in the clustering process, into two tentative classes. Initially, two randomly selected absolute stitching color difference values form the centers of the two tentative classes. Next, based on the minimal 2-norm distance criterion, every absolute color difference value is assigned to the nearest class center. Then, the center of each class is updated to the mean of all absolute color difference values in that class. This procedure is repeated until two stable classes are found. We then apply a merging cost-based process to examine whether the two tentative classes can be merged into one aligned class. If the two tentative classes cannot be merged, we report them as the partition result; otherwise, we report the single aligned class as the partition result. In short, our split-and-merge approach consists of one two-means splitting process and one merging process.
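The two-means splitting step can be sketched as below for one color channel. This is a minimal version of Lloyd's iteration on scalar values; for reproducibility it starts from the smallest and largest values rather than from two randomly selected ones, which is our assumption and not the initialization described in the text.

```python
def two_means(values, max_iterations=100):
    """Split scalar absolute color differences into two tentative classes."""
    # Assumed deterministic initialization: the extreme values.
    centers = [float(min(values)), float(max(values))]
    classes = ([], [])
    for _ in range(max_iterations):
        classes = ([], [])
        for v in values:
            # For scalars, the 2-norm distance is the absolute difference.
            nearest = 0 if abs(v - centers[0]) <= abs(v - centers[1]) else 1
            classes[nearest].append(v)
        new_centers = [sum(c) / len(c) if c else centers[k]
                       for k, c in enumerate(classes)]
        if new_centers == centers:  # stable classes found
            break
        centers = new_centers
    return classes[0], classes[1]
```

The first returned class has the smaller center, so it is the natural candidate for the aligned class; when all values are identical the second class is empty.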

Let $C_1$ and $C_2$ denote the two tentative classes and let $C = C_1 \cup C_2$. Let the variances of $C$, $C_1$, and $C_2$ be denoted by $\sigma^2$, $\sigma_1^2$, and $\sigma_2^2$, respectively; let n be the number of all stitching color difference values, and let $\mu_1$ and $\mu_2$ be the mean values of the absolute color difference values in $C_1$ and $C_2$, respectively. The three variances, $\sigma^2$, $\sigma_1^2$, and $\sigma_2^2$, have the relation in Theorem 1, and the merging cost term “$w_1 w_2 (\mu_1 - \mu_2)^2$” in Theorem 1 is used to determine whether the two different classes, $C_1$ and $C_2$, can be merged into one aligned class $C$ or not.

Theorem 1. $\sigma^2 = w_1 \sigma_1^2 + w_2 \sigma_2^2 + w_1 w_2 (\mu_1 - \mu_2)^2$, where $w_1 = |C_1|/n$, $w_2 = |C_2|/n$, and $1 = w_1 + w_2$.

In Theorem 1, if the merging cost term “$w_1 w_2 (\mu_1 - \mu_2)^2$” is larger than or equal to the specified threshold $T$, the two split classes, $C_1$ and $C_2$, cannot be merged into one aligned class $C$; otherwise, the class $C_1$ and the class $C_2$ should be merged into one class $C$. In our experience, after trying values in the interval [100, 1000], we recommend setting $T$ to 500.
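The merging decision can be illustrated with the sketch below. It assumes that the merging cost is the between-class term of the standard two-class variance decomposition (total variance equals the weighted within-class variances plus the product of the two class weights and the squared difference of the class means); the function names are hypothetical, and only the default threshold of 500 comes from the text.

```python
def mean(values):
    return sum(values) / len(values)


def variance(values):
    """Population variance (divides by the number of values)."""
    m = mean(values)
    return sum((v - m) ** 2 for v in values) / len(values)


def merging_cost(class_1, class_2):
    """Assumed merging cost: between-class term of the variance decomposition."""
    n = len(class_1) + len(class_2)
    w1, w2 = len(class_1) / n, len(class_2) / n
    return w1 * w2 * (mean(class_1) - mean(class_2)) ** 2


def split_or_merge(class_1, class_2, threshold=500.0):
    """Keep two classes if the cost reaches the threshold, else merge them."""
    if not class_1 or not class_2:
        return [class_1 + class_2]
    if merging_cost(class_1, class_2) >= threshold:
        return [class_1, class_2]
    return [class_1 + class_2]
```

For example, classes with means 2.4 and 65 and weights 5/8 and 3/8 give a cost of about 918, which exceeds 500, so they stay separate; two classes whose means differ by only a few gray levels are merged into one aligned class.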

After performing our split-and-merge approach on the three distributions in Figure 2a–c, Figure 4a–c illustrate the corresponding classification results, where the aligned absolute c-color difference class, c ∈ {R, G, B}, is depicted in c-color, and the misaligned absolute c-color difference class is depicted in black. Figure 5 depicts the partition result of Figure 1b, where on the stitching line, the aligned color difference pixels are marked in green and the misaligned color difference pixels are marked in red.

4. Experimental Results

Eight original testing UAV image-pairs are selected from the website https://www.sensefly.com/education/datasets (accessed on 2 September 2018), provided by the company “senseFly”, and the corresponding eight stitched images are produced by using Yuan et al.’s graph cut-based method [10]. The eight testing stitched images can be accessed from the website in Supplementary Material. For convenience, the resultant eight stitched images under different misalignment situations are referred to as the original testing set. For each stitched image in this set, the misalignment ratio of the stitching color difference values is within the interval [0%, 15%].

To produce more stitched images under different brightness situations, for each testing stitched image in the original testing set, the brightness of every pixel in the target image is updated by four different percentages of its own brightness, namely −15%, 15%, −25%, and 25%. If the updated brightness of a target pixel falls outside the range [0, 255], it is clipped to 0 or 255.
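The brightness update with clipping can be sketched as follows. The sketch treats brightness as a single 8-bit value per pixel and rounds to the nearest integer; both choices are our assumptions, since the text does not state the color space or the rounding rule.

```python
def update_brightness(value, percentage):
    """Change an 8-bit brightness value by a percentage of itself, then clip."""
    updated = round(value * (1 + percentage / 100))
    return max(0, min(255, updated))  # force out-of-range results to 0 or 255


def update_target_brightness(target_values, percentage):
    """Apply the same percentage to every pixel of the target image."""
    return [update_brightness(v, percentage) for v in target_values]
```

A value of 240 raised by 25% would be 300, so it is clipped to 255, whereas 100 lowered by 25% becomes 75.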

Figure 7a illustrates one stitched image taken from the original testing set, where the stitching line is depicted by a green line. Figure 7b illustrates the updated stitched image of Figure 7a, where the brightness of the target image in Figure 7b is obtained by increasing that in Figure 7a by 15%. In Figure 7b, we can observe that the target image, which is below the stitching line, is brighter than the corresponding target image in Figure 7a. In this way, four updated stitched images are produced for each original testing stitched image, giving 32 (4 × 8) newly generated stitched images overall. Among the 32 generated stitched images, which can be accessed from the website in Supplementary Material, the 16 stitched images under the ±15% brightness update form the ±15% testing set, and the 16 stitched images under the ±25% brightness update form the ±25% testing set. For each stitched image in the ±15% testing set, the misalignment ratio of the stitching color difference values is within the interval [0%, 37%]; for each stitched image in the ±25% testing set, the misalignment ratio of the stitching color difference values is within the interval [0%, 32%].

Next, the proposed split-and-merge approach is applied to partition the color difference values on the stitching line of each testing stitched image in the three testing sets into two classes. After performing the proposed split-and-merge approach on the stitching color difference values of Figure 7b, the misaligned part of the stitching line in Figure 7c is marked in red and the aligned part is marked in green. Based on the 40 testing stitched images in the three testing sets, comprehensive experimental results demonstrate the objective and subjective quality merits of the proposed AJBI-based color correction method relative to the four comparative methods, namely the MBB method [18], the HM-based method [19], the GP-based method [21], and the GJBI-based method [23]. Here, the three objective quality metrics, namely PSNR, SSIM, and FSIM, are used to justify the objective quality merit of the proposed color blending method. In addition, a visual demonstration is provided to justify the subjective quality merit of the proposed method. The execution time comparison is also reported.

Among the four comparative methods, the executable of the MBB method [18] can be accessed from the website http://matthewalunbrown.com/autostitch/autostitch.html (accessed on 23 October 2021). The C++ source code of the GP-based method [21] can be accessed from the website https://github.com/MenghanXia/ColorConsistency (accessed on 29 August 2021). We have tried our best to implement the HM-based method [19] and the GJBI-based method [23] in C++. The blending parameters of the GJBI-based method are fine-tuned to obtain the perceptually best color corrected results. The C++ source code of the proposed AJBI-based method can be accessed from the website in Supplementary Material. The program development environment is Visual Studio 2019. The Platform Toolset is set to LLVM (clang-cl), which is used to compile the C++ source code of each considered method into an executable. The C++ language standard is set to ISO C++17.

For a fair comparison, the executables of all considered methods are run on the same computer with an Intel Core i7-10700 CPU at 2.9 GHz and 32 GB RAM. The operating system is Microsoft Windows 10 (64-bit). Since the GJBI-based method [23] and the proposed AJBI-based method can be parallelized, we use the parallel processing functionality of the multi-core processor to accelerate the two methods.

4.1. Objective and Subjective Quality Merits of Our Method

This subsection demonstrates the objective and subjective quality merits of the proposed AJBI-based color correction method relative to the comparative methods.

4.1.1. Objective Quality Merit

As mentioned before, the three quality metrics, PSNR, SSIM, and FSIM, are used to report the objective quality merit of our method. Let $O^s$ denote the source subimage overlapping with the target image and let $O^{t'}$ denote the color corrected target subimage overlapping with the source image. PSNR is used to evaluate the average quality of $O^{t'}$ over a testing set $S$, and it is defined by

$$\mathrm{PSNR}(S) = \frac{1}{|S|} \sum_{j=1}^{|S|} 10 \log_{10} \frac{255^2}{\mathrm{MSE}_j}$$

where $S$ is one of the three testing sets defined before and $\mathrm{MSE}_j$ (mean square error) denotes the mean square error between $O^s$ and $O^{t'}$ for the jth stitched image in $S$. Overall, the values of $\mathrm{PSNR}(S)$ are reported for the three testing sets.
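A minimal sketch of the PSNR computation for 8-bit data is given below. It assumes that the two subimages are flattened into equal-length lists of pixel values and that the per-set score is the mean of the per-image PSNR values; the averaging rule and the function names are our assumptions.

```python
import math


def mean_square_error(reference, corrected):
    """Mean square error between two equal-length lists of pixel values."""
    if len(reference) != len(corrected):
        raise ValueError("subimages must have the same number of pixels")
    return sum((r - c) ** 2 for r, c in zip(reference, corrected)) / len(reference)


def psnr(reference, corrected, peak=255):
    """PSNR in decibels; infinite when the two subimages are identical."""
    mse = mean_square_error(reference, corrected)
    if mse == 0:
        return math.inf
    return 10 * math.log10(peak ** 2 / mse)


def average_psnr(pairs, peak=255):
    """Mean PSNR over (source subimage, corrected target subimage) pairs."""
    return sum(psnr(ref, cor, peak) for ref, cor in pairs) / len(pairs)
```

A higher value means that the color corrected target subimage is closer to the source subimage in the overlapping area.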

Because the formulas of SSIM and FSIM are somewhat complicated, we only outline their physical meaning; interested readers may refer to [27,28]. The quality metric SSIM is expressed as the product of the luminance mean similarity, the contrast similarity, and the structure similarity between the source subimage and the color corrected target subimage. The quality metric FSIM utilizes the phase congruency and the gradient magnitude to weight the local quality maps, obtaining a feature quality score of the color corrected target subimage.
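To make the three-factor structure of SSIM concrete, the sketch below evaluates the luminance, contrast, and structure terms once over a whole signal. This is a simplification: the metric in [27] is computed over local windows and averaged, and the constants used here follow the commonly used defaults, which we assume rather than take from the text.

```python
import math


def global_ssim(x, y, peak=255.0):
    """Single-window SSIM: product of luminance, contrast, and structure terms."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    var_x = sum((v - mean_x) ** 2 for v in x) / n
    var_y = sum((v - mean_y) ** 2 for v in y) / n
    cov_xy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y)) / n
    std_x, std_y = math.sqrt(var_x), math.sqrt(var_y)
    # Assumed stabilizing constants (commonly used defaults).
    c1, c2 = (0.01 * peak) ** 2, (0.03 * peak) ** 2
    c3 = c2 / 2
    luminance = (2 * mean_x * mean_y + c1) / (mean_x ** 2 + mean_y ** 2 + c1)
    contrast = (2 * std_x * std_y + c2) / (var_x + var_y + c2)
    structure = (cov_xy + c3) / (std_x * std_y + c3)
    return luminance * contrast * structure
```

Identical inputs score 1, while a signal with the same mean and contrast but reversed structure scores much lower, which shows that the structure term reacts to spatial arrangement rather than to overall brightness.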

Because only the executable of the MBB method [18] is available and its output stitched image has been warped, the original overlapping area of the source image and the target image cannot be exactly extracted. Therefore, Table 1 only tabulates the PSNR, SSIM, and FSIM performance of the three comparative methods, namely the HM-based method [19], the GP-based method [21], and the GJBI-based method [23], and the proposed method. From Table 1, we observe that for the three testing sets under different misalignment and brightness situations, the proposed AJBI-based method, abbreviated as “Ours”, always has the highest PSNR and FSIM, shown in boldface, among the considered methods. It is noticeable that the FSIM performance of the GJBI-based method [23] is ranked second.

Table 1 also indicates that for two of the three testing sets, the SSIM performance of our method is the best; for the remaining set, the HM-based method [19] is the best, but our method is ranked second.

In summary, Table 1 indicates that the overall quality performance of our method is the best among the considered methods. In the next subsection, the subjective quality superiority of our method is demonstrated.

4.1.2. Subjective Quality Merit

Five testing UAV stitched images under different misalignment and brightness situations are used to demonstrate the perceptual merit of the proposed AJBI-based method relative to the four comparative methods. For each testing stitched image, some amplified sub-images near the stitching line are adopted to justify the perceptual merit of our method.

The first testing UAV stitched image, which is taken from the original testing set, is illustrated in Figure 8a. After performing the five considered methods on Figure 8a, Figure 8b–f show the color correction results obtained by the MBB method, the HM-based method, the GP-based method, the GJBI-based method, and our method, respectively. It is notable that for the MBB method, the experimental demonstration is based on the tool downloaded from http://www.autostitch.net/ (accessed on 23 October 2021), so its output differs from those of the other color correction methods. From Figure 8b–f, we observe that our method achieves the best perceptual gradation effect near the misaligned stitching target pixels, whereas the four comparative methods produce unsmooth artifacts. Our method also preserves a good color correction effect in the other areas.

The second, third, fourth, and fifth testing stitched UAV images are illustrated in Figure 9a, Figure 10a, Figure 11a, and Figure 12a, respectively, which are taken from the ±15% testing set under the −15% and +15% brightness updates and from the ±25% testing set under the −25% and +25% brightness updates. After performing the five considered methods on the four testing stitched images, Figure 9b–f, Figure 10b–f, Figure 11b–f, and Figure 12b–f demonstrate the corresponding color correction results for Figure 9a, Figure 10a, Figure 11a, and Figure 12a, respectively.

Figure 9b–f indicate that inside the three amplified sub-images, our method still achieves the best perceptual gradation effect near the misaligned stitching target pixels, whereas the four comparative methods produce some unsmooth artifacts. In Figure 10b–f, we observe that inside the two amplified grassland sub-images, our method achieves a more natural effect. For Figure 11b–f and Figure 12b–f, we reach the same conclusion: inside the amplified sub-images, our method achieves a smoother effect near the misaligned stitching target pixels relative to the four comparative methods.

The main reasons for the quality superiority of the proposed color correction method are summarized as follows. Based on the two partitioned classes of the stitching color difference values, which are determined by the proposed split-and-merge approach, the suitable reference interval of the stitching color difference values is determined for each target pixel by the proposed wavefront approach. Using this reference interval information, the proposed AJBI-based color blending method can achieve more natural and smoother color blending effects near the misaligned stitching target pixels. After running the five considered methods on the 40 testing stitched images, the 200 (5 × 40) color corrected stitched images can be accessed from the website in Supplementary Material. Interested readers can refer to these color correction results for a more detailed perceptual quality comparison.

4.2. Computation of Time Cost

Based on the above-mentioned three testing sets under different alignment and brightness situations, the last column of Table 1 tabulates the average execution time required by each considered color blending method for one testing stitched image in each testing set. We have the following two observations: (1) among the five considered methods, the HM-based method [19] and the GP-based method [21] are the two fastest methods, and (2) the execution time improvement ratio of the proposed AJBI-based method over the GJBI-based method [23] equals 13.52%.

Although our method is not the fastest, it achieves the best perceptual quality performance, particularly near the misaligned stitching target pixels, and has the best objective quality performance among all considered methods.