1. Introduction

Infrared small target detection is an important component of infrared search and tracking systems and has been used extensively in space surveillance, remote sensing, missile tracking, and other domains [1,2,3]. Fast and reliable detection of infrared small targets is crucial in these applications. However, because targets are imaged at long range, they usually occupy only a few pixels and lack detailed shape and texture information [4,5,6]. Moreover, the imaging environment of ground–sky scenes is complex and changeable, and interference from grassland, poles, trees, buildings, and high-brightness ground clutter typically leaves targets with extremely low signal-to-noise ratios (SNRs). Such low SNRs make targets more susceptible to interference from nearby ground objects. Therefore, detecting infrared small targets in ground–sky scenes is challenging.

Many methods have been proposed for detecting infrared small targets in complex backgrounds, and they can typically be divided into single-frame and sequential detection methods [7]. Sequential detection methods exploit the temporal characteristics of multiple successive frames, eliminating background clutter on the basis of the motion consistency of the moving target and the high correlation of the background between adjacent frames [8]. In practice, however, jitter of the photoelectric tower itself easily causes background variation, which degrades the performance of sequential detection methods. Their high computational complexity and demanding hardware requirements also limit their applicability to practical infrared search systems. Single-frame detection methods, by comparison, can more easily achieve satisfactory real-time performance.

Traditional single-frame detection methods include Top-Hat [9,10], Max-mean and Max-median filtering [11], two-dimensional least mean square (TDLMS) filtering [12,13], and multi-scale directional filtering approaches [14]. These filtering-based methods are easy to implement and generally perform satisfactorily on infrared images with uniform backgrounds, but they usually produce high false alarm rates in complex backgrounds.

Methods based on the human visual system (HVS) have also been introduced into infrared small target detection. Within a local region, the background is usually uniform apart from some noise, whereas the target is concentrated and discontinuous with respect to its adjacent region. The region of interest can therefore be extracted from the feature difference between the target and the background in a local region, and the most appropriate response can be selected through multi-scale calculations [15]. Chen et al. [16] proposed the original local contrast method (LCM) for detecting infrared small targets, and a series of extended LCM methods were subsequently proposed, such as the improved LCM (ILCM) [17], the novel local contrast measure (NLCM) [18], the multi-scale relative local contrast measure (RLCM) [19], and the multi-scale patch-based contrast measure (MPCM) [20]. To address the computational inefficiency of LCM-based methods, Cui et al. [21] proposed a high-speed local contrast method that achieves fast detection by combining local contrast with a machine learning classifier. Xia et al. [22] designed a multi-scale local contrast measure based on a local energy factor (LEF), and Han et al. [23] subsequently proposed a weighted strengthened local contrast measure (WSLCM). In summary, these methods primarily use the gray-level difference between the target and the background in a local region to measure infrared small targets.
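To make the local contrast idea concrete, the following is a minimal single-scale sketch of the LCM computation of Chen et al. [16] as it is commonly described: a window of 3 × 3 cells slides over the image, $L_0$ is the maximum gray value of the central cell, $m_i$ is the mean of the $i$-th of the eight surrounding cells, and the contrast is $C = \min_i L_0^2 / m_i$. The function names, the default cell sizes, the reflect padding at image borders, and the small `eps` guard against division by zero are assumptions made for illustration, not details taken from [16].

```python
import numpy as np


def local_contrast_map(img: np.ndarray, cell: int = 3, eps: float = 1e-6) -> np.ndarray:
    """Single-scale LCM-style contrast map, C = min_i L0**2 / m_i.

    L0  : maximum gray value of the central cell
    m_i : mean gray value of the i-th of the 8 surrounding cells
    The sliding window is 3x3 cells, each cell being `cell` x `cell` pixels.
    """
    if cell % 2 == 0:
        raise ValueError("cell size must be odd so the window has a center pixel")
    img = img.astype(np.float64)
    h, w = img.shape
    r = (3 * cell) // 2                      # half-width of the 3x3-cell window
    padded = np.pad(img, r, mode="reflect")  # assumption: reflect padding at borders
    out = np.empty_like(img)
    for y in range(h):
        for x in range(w):
            # Window centered on original pixel (y, x).
            win = padded[y:y + 3 * cell, x:x + 3 * cell]
            # Split into 9 cells; row k = 3 * cell_row + cell_col, so index 4 is the center.
            cells = win.reshape(3, cell, 3, cell).transpose(0, 2, 1, 3).reshape(9, -1)
            l0 = cells[4].max()
            m = np.delete(cells, 4, axis=0).mean(axis=1)
            out[y, x] = np.min(l0 * l0 / (m + eps))
    return out


def multiscale_lcm(img: np.ndarray, cells=(3, 5, 7)) -> np.ndarray:
    """Pixel-wise maximum over several (hypothetical) cell sizes."""
    return np.max([local_contrast_map(img, c) for c in cells], axis=0)
```

On a flat background $L_0 = m_i$, so $C = L_0$, whereas a bright compact target gives $L_0^2/m_i \gg L_0$. This is why the measure enhances targets while leaving uniform regions at their original gray level. Taking the pixel-wise maximum over several cell sizes, as `multiscale_lcm` does, is one simple way to realize the multi-scale calculation described above.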

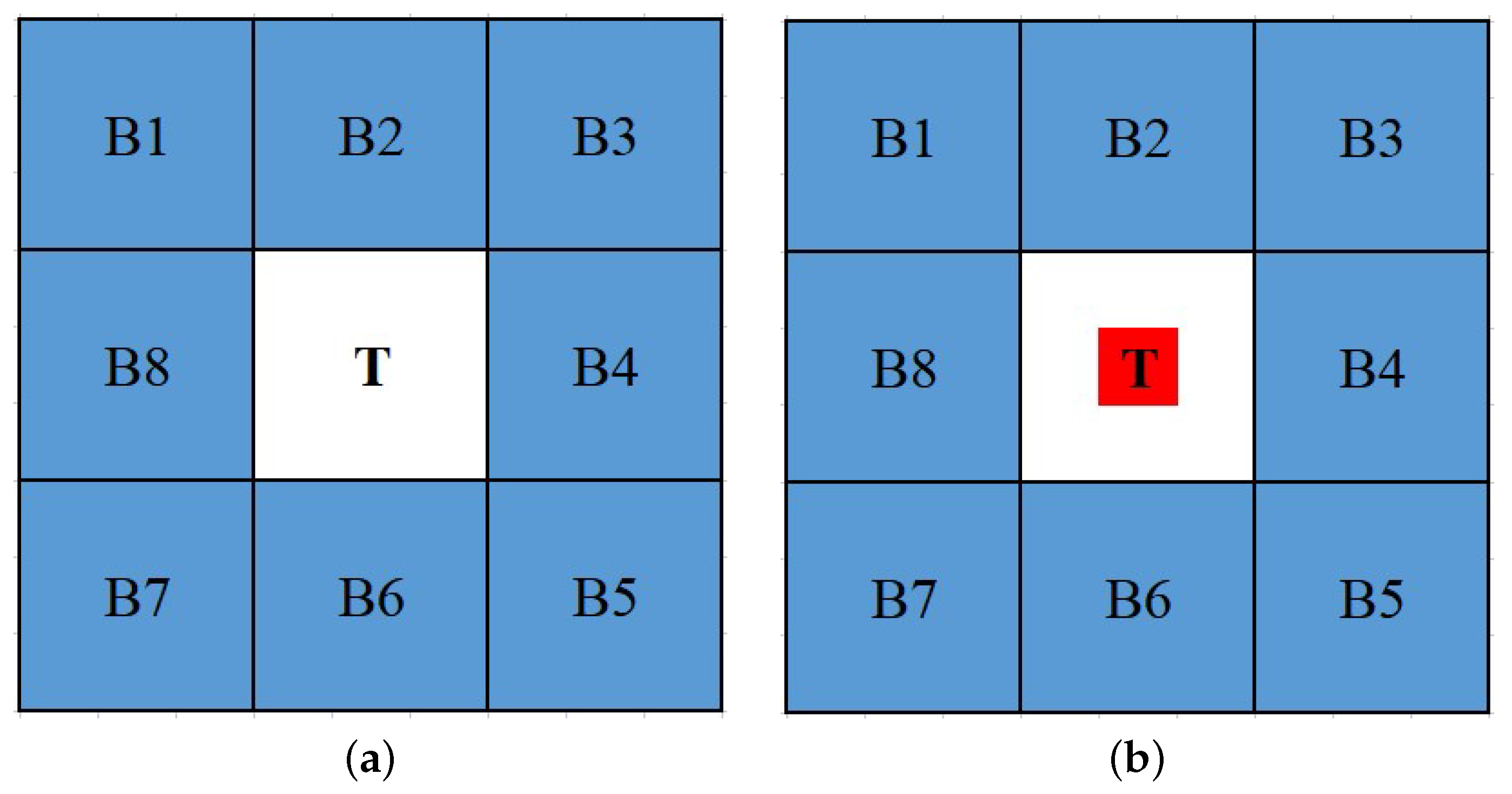

Some scholars have improved the window detection framework of LCM-based methods, as shown in Figure 1. For example, Han et al. [24] proposed a multi-scale triple-layer local contrast measure (TLLCM) that uses a new triple-layer window detection framework, shown in Figure 1b. Wu et al. [25] proposed a double-neighborhood gradient measure that detects infrared small targets of different sizes through a designed double-neighborhood window, effectively avoiding the “expansion effect” of traditional multi-scale LCM-based methods. The double-neighborhood window is presented in Figure 1c. Lu et al. [26] devised a weighted double local contrast measure with a new sliding window that further subdivides the central block of the window detection framework and fully considers the contrast information within it. This new sliding window is shown in Figure 1d. These improved window frameworks better capture the energy of the central region and its contrast with the adjacent background while avoiding multi-scale calculations, thereby improving computational efficiency.

Methods based on matrix decomposition can also improve the detection performance for infrared small targets in complex scenes. Their core idea is to transform small target detection into a low-rank and sparse optimization problem according to the sparse prior characteristics of targets. Gao et al. [27] established an infrared patch image (IPI) model, formulated small target detection as a low-rank and sparse matrix recovery problem, and solved it effectively using stable principal component pursuit. Wang et al. [28] then developed a stable multi-subspace learning (SMSL) method by analyzing the structure of the background data. Building on the IPI model, Dai and Wu [29] designed an infrared patch tensor (IPT) model that exploits the target sparse prior and the background non-local self-correlation prior, modeling target–background separation as a robust low-rank tensor recovery problem. Based on the IPT model, a non-convex low-rank constraint named the partial sum of the tensor nuclear norm (PSTNN) [30], jointly with a weighted $\ell_1$ norm, was employed to suppress the background and preserve the target efficiently. However, these methods perform poorly against complex backgrounds containing multiple targets or sparse structures and commonly produce high false alarm rates.
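The low-rank and sparse formulation behind the IPI model can be illustrated with a short sketch. Sliding-window patches are vectorized and stacked as columns to form a patch image $D$, which is then split as $D = B + T$, with $B$ low-rank (background) and $T$ sparse (target). Gao et al. [27] used their own optimization procedure; here we substitute the inexact augmented Lagrange multiplier (IALM) algorithm for principal component pursuit, a standard robust PCA solver. The patch size, the step, and the default $\lambda = 1/\sqrt{\max(m, n)}$ are assumptions chosen for illustration.

```python
import numpy as np


def build_patch_image(img: np.ndarray, patch: int = 8, step: int = 4) -> np.ndarray:
    """Stack vectorized sliding-window patches as columns (IPI-style patch image).

    `patch` and `step` are hypothetical defaults, not values from the IPI paper.
    """
    h, w = img.shape
    cols = [img[y:y + patch, x:x + patch].ravel()
            for y in range(0, h - patch + 1, step)
            for x in range(0, w - patch + 1, step)]
    return np.stack(cols, axis=1)


def rpca_ialm(D: np.ndarray, lam=None, tol: float = 1e-7, max_iter: int = 500):
    """Split D into low-rank A (background) + sparse E (target) by principal
    component pursuit, solved with the inexact ALM method."""
    m, n = D.shape
    if lam is None:
        lam = 1.0 / np.sqrt(max(m, n))
    norm_two = np.linalg.norm(D, 2)                   # largest singular value
    dual_norm = max(norm_two, np.abs(D).max() / lam)
    Y = D / dual_norm                                 # dual variable initialization
    mu, rho = 1.25 / norm_two, 1.5
    mu_bar = mu * 1e7
    A = np.zeros_like(D)
    E = np.zeros_like(D)
    d_norm = np.linalg.norm(D, "fro")
    for _ in range(max_iter):
        # Sparse step: element-wise soft thresholding.
        T = D - A + Y / mu
        E = np.sign(T) * np.maximum(np.abs(T) - lam / mu, 0.0)
        # Low-rank step: singular value thresholding.
        U, s, Vt = np.linalg.svd(D - E + Y / mu, full_matrices=False)
        A = (U * np.maximum(s - 1.0 / mu, 0.0)) @ Vt
        # Dual ascent on the constraint D = A + E.
        Z = D - A - E
        Y = Y + mu * Z
        mu = min(mu * rho, mu_bar)
        if np.linalg.norm(Z, "fro") / d_norm < tol:
            break
    return A, E
```

In a full detector, the patch image of an infrared frame would be passed to `rpca_ialm`, and each column of `E` would be reshaped back into a patch, with overlapping patches combined (for example, by a median or mean) to form the target image. That reconstruction step is omitted here for brevity.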

As computer performance has improved, many multi-frame sequential detection methods have been proposed to detect infrared small targets and achieve better detection results in complex backgrounds. For instance, Deng et al. [31] and Zhao et al. [32] detected moving point targets by fusing spatial and temporal local contrast information. Du et al. [33] proposed a new spatio-temporal local difference measure. Liu et al. [34] proposed an infrared video small target detection method based on a spatio-temporal tensor model. Pang et al. [35] established a novel spatio-temporal saliency method for detecting low-altitude, slow-moving infrared small targets in image sequences. These sequential methods use information from preceding and subsequent frames to improve background clutter suppression and target extraction. However, their outputs usually lag behind the current frame.

With the development of deep learning (DL), many DL-based methods have been proposed to detect infrared small targets. Wang et al. [36] designed a YOLO-based feature extraction backbone network for infrared small target detection. Subsequently, an infrared small target detection method based on a Generative Adversarial Network (GAN) was proposed in [37]. Dai et al. [38] incorporated a visual attention mechanism into a neural network to improve target detection performance. Kim et al. [39] used a GAN framework to synthesize training datasets, which effectively improved detection performance. Zuo et al. [40] designed an attention fusion feature pyramid network specifically for infrared small target detection. DL-based methods have achieved relatively good results in infrared small target detection. However, they usually require abundant infrared image data, and few publicly available datasets exist to support such research.

A weighted local ratio-difference contrast (WLRDC) method is proposed in this paper to detect infrared small targets and improve detection robustness in complex ground–sky backgrounds. Specifically, the main innovations of the proposed WLRDC method are as follows:

A local ratio-difference contrast (LRDC) method is proposed that combines local ratio information and difference information to simultaneously enhance the target and suppress complex background clutter and noise. LRDC uses the mean of the Z maximum pixel gray values in the central block, which addresses the poor target enhancement of traditional LCM-based methods at low contrast.

A simple and effective block difference product weighted (BDPW) mapping strategy is designed on the basis of the spatial dissimilarity of the target to improve the robustness of the WLRDC method. Because BDPW is also computed from the gray values of the central and adjacent blocks, it further suppresses residual background clutter without increasing computational complexity.

The rest of this paper is organized as follows. Section 2 presents related work. The proposed WLRDC method is described in Section 3. In Section 4, we conduct extensive experiments in various scenes to verify the effectiveness of the proposed method. Finally, the paper is discussed and summarized in Section 5 and Section 6, respectively.

2. Related Work

In recent years, infrared small target detection methods based on LCM have been extensively investigated. Existing local contrast calculations can usually be divided into three types: ratio, difference, and ratio-difference (RD) forms. Because the proposed method is based on the RD form, this section briefly reviews RD-based infrared small target detection methods.

RLCM [19] is a typical RD-form method. It uses the average gray values of the $K_1$ and $K_2$ largest pixels in the central and adjacent cell blocks, respectively, which effectively suppresses the interference of various types of noise. Guan et al. [41] combined a multi-scale Gaussian filter with RD contrast to propose an infrared small target detection method. Although RD-based contrast methods achieve better detection performance than traditional LCM-based methods, their detection capability remains weak in complex backgrounds. Weighting functions were therefore developed to further improve detection capability and robustness. For instance, Han et al. [42] proposed a multi-directional two-dimensional least mean square product weighted RD-LCM method for infrared small target detection, which performs better across different types of backgrounds and targets. Subsequently, Han et al. [43] proposed a weighted RD local feature contrast method with improved robustness to noise. Combining weighting functions with RD contrast effectively improved the detection performance of these methods.
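The three contrast types can be compared on a single 3 × 3-cell window. In the sketch below, $m_0$ is the mean of the $K_1$ largest pixels in the central cell and $m_i$ is the mean of the $K_2$ largest pixels in surrounding cell $i$, in the spirit of RLCM [19]. The ratio form is $\min_i m_0/m_i$, the difference form is $\min_i (m_0 - m_i)$, and the RD form is taken here as their product, $\min_i (m_0/m_i)(m_0 - m_i)$. That product is only one illustrative way to combine ratio and difference information and is an assumption; individual RD methods define the combination differently. The $K_1 = 2$, $K_2 = 4$ defaults and the function names are hypothetical.

```python
import numpy as np


def mean_of_k_largest(block: np.ndarray, k: int) -> float:
    """Mean gray value of the k largest pixels in a block."""
    flat = np.sort(block.ravel())
    return float(flat[-k:].mean())


def contrast_forms(window: np.ndarray, cell: int, k1: int = 2, k2: int = 4,
                   eps: float = 1e-6):
    """Ratio, difference, and (illustrative) ratio-difference contrast
    for one window made of 3x3 cells of size `cell` x `cell`."""
    # Split into 9 cells; index 4 is the central cell.
    cells = window.reshape(3, cell, 3, cell).transpose(0, 2, 1, 3).reshape(9, -1)
    m0 = mean_of_k_largest(cells[4], k1)
    mi = np.array([mean_of_k_largest(c, k2) for i, c in enumerate(cells) if i != 4])
    ratio = np.min(m0 / (mi + eps))
    diff = np.min(m0 - mi)
    # Assumed RD combination: ratio times difference, minimized over neighbors.
    rd = np.min((m0 / (mi + eps)) * (m0 - mi))
    return float(ratio), float(diff), float(rd)
```

For a flat window, every form reports no contrast (ratio ≈ 1, difference and RD = 0). A center of 220 on a background of 200 gives a ratio of only 1.1 but a difference of 20, whereas 30 on 10 gives a ratio of 3 and the same difference of 20. The product (22 versus 60) responds to both cues, which reflects the motivation for combining the two forms.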

The RD form of contrast calculation integrates the advantages of both the ratio and difference forms: it enhances the target and effectively suppresses background clutter. Although weighting functions are effective for improving detection performance, existing weighting functions are overly complex and increase computational cost. Therefore, rapidly and reliably detecting infrared dim and small targets in complex backgrounds remains an important challenge.