1. Introduction

Because it can acquire high-resolution images regardless of time of day and weather, synthetic aperture radar (SAR) has been widely applied in many fields, such as ocean observation, natural disaster prediction, and battlefield surveillance [1,2]. Automatic target detection in SAR images has been an active research topic for many years and has attracted extensive attention from scholars at home and abroad. The essence of object detection in SAR images is to separate the target from the background through differences in scattering characteristics and thereby locate potential objects. However, SAR images are difficult to interpret because of their coherent scattering and imaging mechanisms, so aircraft detection under complicated SAR imaging conditions remains a challenging task.

Traditional SAR target detection algorithms, including those for aircraft, extract targets based on the differences in electromagnetic backscattering properties between targets and the clutter background. The traditional methods applied to SAR images can be grouped into four categories: contrast-based, visual attention-based, complex data-based, and multi-feature-based. Contrast-based methods include CFAR [3], various CFAR-derived methods (CA-CFAR [4], SOCA-CFAR [5], GOCA-CFAR [6], OS-CFAR [7], VI-CFAR [8]), GLRT [9], and PR [10]. Their advantage is that they are easy to implement and perform well in simple scenarios. However, in practical applications it is difficult to select an appropriate statistical clutter model and to handle strong heterogeneous clutter. In addition, they produce many false alarms and missed targets under complex backgrounds. The visual attention-based method [11] suppresses strong heterogeneous clutter and enhances the target by introducing prior information, improving the signal-to-clutter ratio (SCR). Yet the prior information must be analyzed concretely for the specific conditions, and the algorithm may need to be redesigned for different detection tasks. Complex data-based methods [12] use the scattering characteristics of the target and clutter, as well as the imaging mechanism, to detect the target. They can reflect the difference between man-made targets and natural clutter in terms of the physical mechanism. However, acquiring the raw complex SAR image data is costly, and the capacity to distinguish artificial clutter interference from artificial targets of interest has yet to be verified. The multi-feature-based approach mainly uses structural features [13], peak features [4], variance features [14], and extended fractal features [15]. Its merit is that it can detect targets from multi-dimensional features. Nevertheless, because it generalizes poorly, it achieves good performance only on specific data. Among the above detection methods, the CFAR detector is one of the most widely studied and applied. The main components of aircraft are made of metallic materials with high backscattering coefficients, whereas the airport area has a low backscattering coefficient as it is more capable of absorbing electromagnetic waves. Under such conditions, the contrast-based detection algorithm can efficiently detect aircraft in the ROI area of the airport. In general, however, traditional methods are ineffective in the face of complex background scattering, owing to their poor robustness and limited capacity for feature representation.

With the enrichment of SAR images, the traditional algorithms, represented by CFAR, no longer meet the requirements. In recent years, with the development of DL and the improvement of the related hardware, convolutional neural networks have shown remarkable performance in the field of computer vision, yet these methods are mainly applied to optical images. In terms of detection steps, DL-based detection algorithms can be divided into two-stage and single-stage algorithms. Faster-RCNN [16] is a typical two-stage algorithm that adopts a trainable region proposal network (RPN) to improve detection efficiency while maintaining high accuracy. However, only high-level features are employed to predict objects, and small targets are difficult to detect because the low-level features are lost. The representative single-stage algorithm is YOLO [17], which transforms detection into a regression problem. The algorithm achieves a significant improvement in detection speed, but its precision becomes poor. The FPN structure [18] integrates the high-resolution information of shallow features with the high-level semantic information of deep features, and raises detection performance by predicting on the different feature layers separately. All the above methods are based on anchor boxes. First, the location of potential targets is inferred from the anchors generated in the RPN stage, and the final results are filtered by NMS (Non-Maximum Suppression). After 2019, DL-based algorithms without anchor boxes have been developed, such as CornerNet [19], ExtremeNet [20], FCOS [21], and CenterNet [22]. CornerNet is a one-stage detection method that treats the target as a set of key points. ExtremeNet evolves from CornerNet and detects four extreme points and one center point via standard key point estimation. The central heat map of each class is proposed to predict the target center. CenterNet derives the center point via key point estimation and obtains additional information about the target by regression. Many other methods have been proposed for object detection, e.g., [23,24].

With deep learning (DL) achieving remarkable performance in computer vision, DL has also gradually become a standard paradigm in SAR image analysis, including aircraft detection [25], which is detailed in Section 2.1.1. Although many approaches have achieved a certain effect in aircraft detection in SAR images, they still leave much room for improvement.

The imaging mechanisms of SAR and optical satellites display great differences, resulting in an entirely different appearance of the target. The represented features of aircraft in SAR images can be summarized via the following three aspects:

- (1) Discrete points. Due to the mechanism of SAR imaging, aircraft appearing in SAR images consist of a few strong scattering points, which form the faint outline of the aircraft. Coupled with the interference of background clutter, this easily leads to missed detections;

- (2) Multi-scale targets. In this study, the size of most aircraft in the GF3 dataset ranges from 20 to 110 pixels. However, as the CNN deepens, the spatial information of small-size aircraft with fewer pixels is easily lost, which poses a challenge to multi-scale aircraft detection;

- (3) Attitude sensitivity. With changes in azimuth angle, the appearance of the same aircraft differs between SAR images. It is difficult to model the geometric transformations of aircraft with changeable appearance from the limited training samples available.

There is no doubt that abstract features extracted by DL have better representation capacities than traditional manual features. However, should the traditionally established hand-crafted features be completely abandoned in favor of total reliance on abstract features? This is a meaningful question. Regrettably, most DL-based detection algorithms for SAR are established by fine-tuning a classical optical network and do not consider the differences in the imaging mechanism between SAR and optical satellites. Moreover, compared with optical data, SAR image data are relatively scarce. Because a large amount of image data is required to train the network model, transfer learning from optical networks alone does not work well. Besides this, many published methods are tested only under specific circumstances, e.g., aircraft with clear contours and a single background. Few detection networks take the multi-scale nature and attitude sensitivity of targets under the various and complicated conditions of SAR images into consideration.

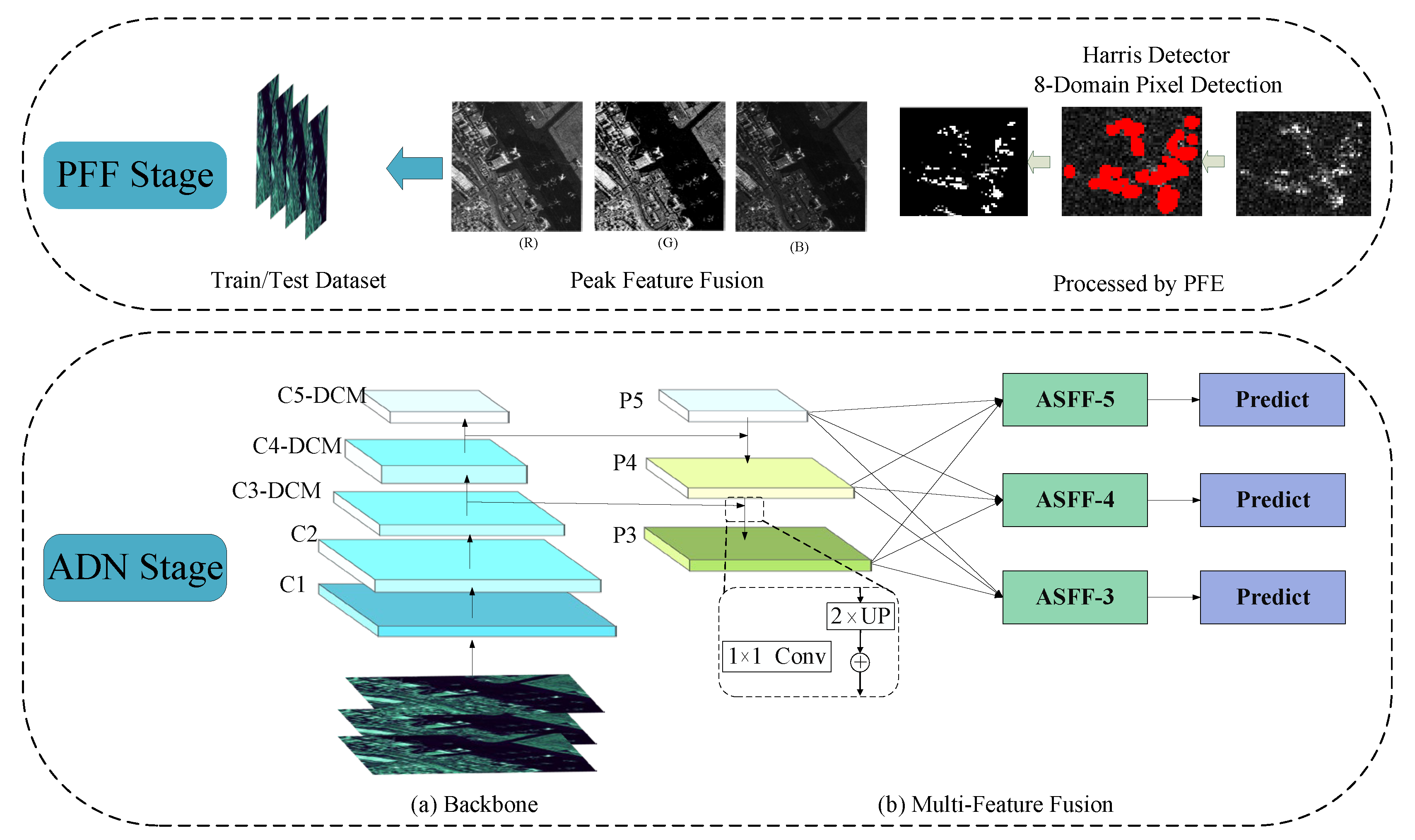

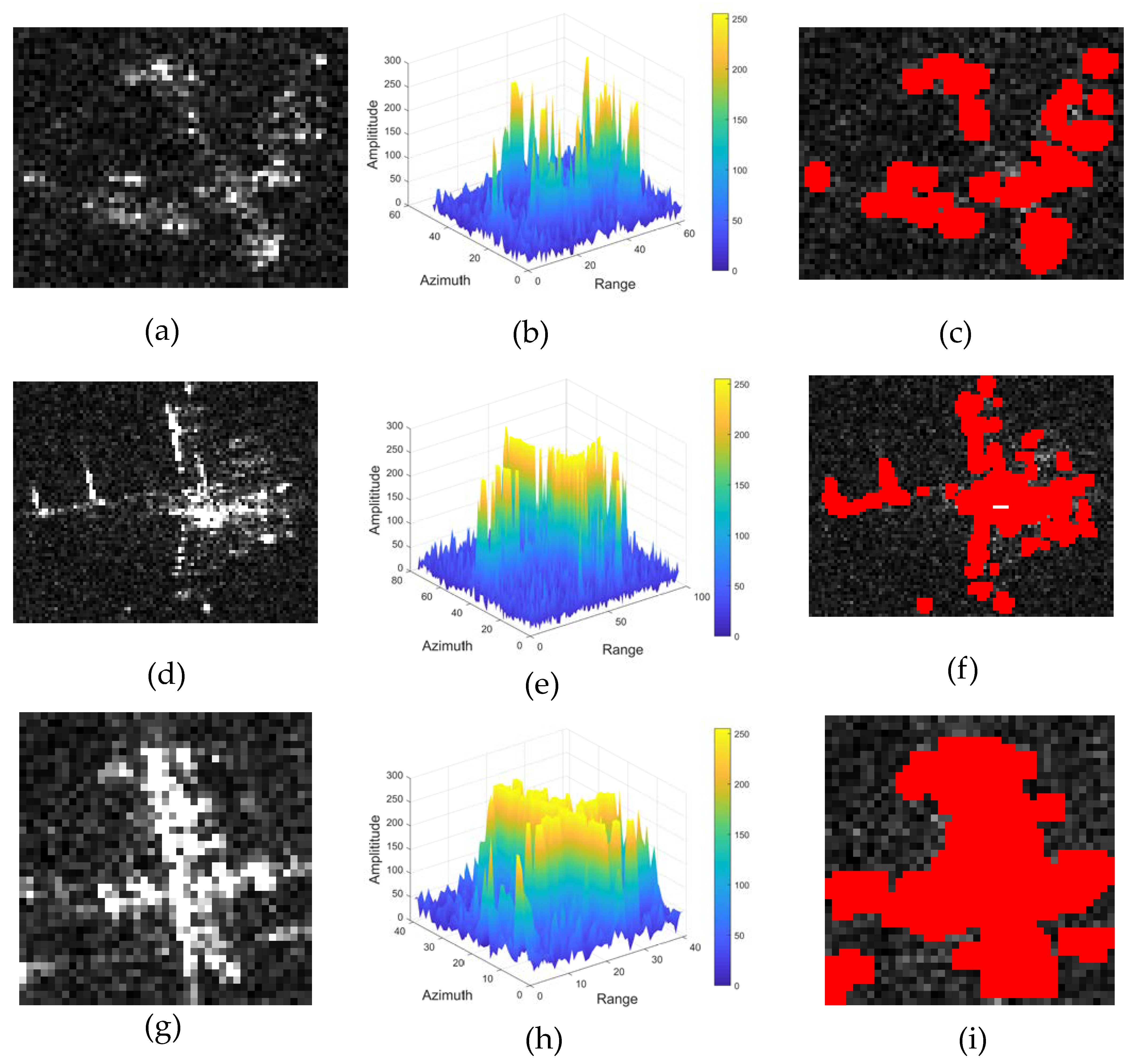

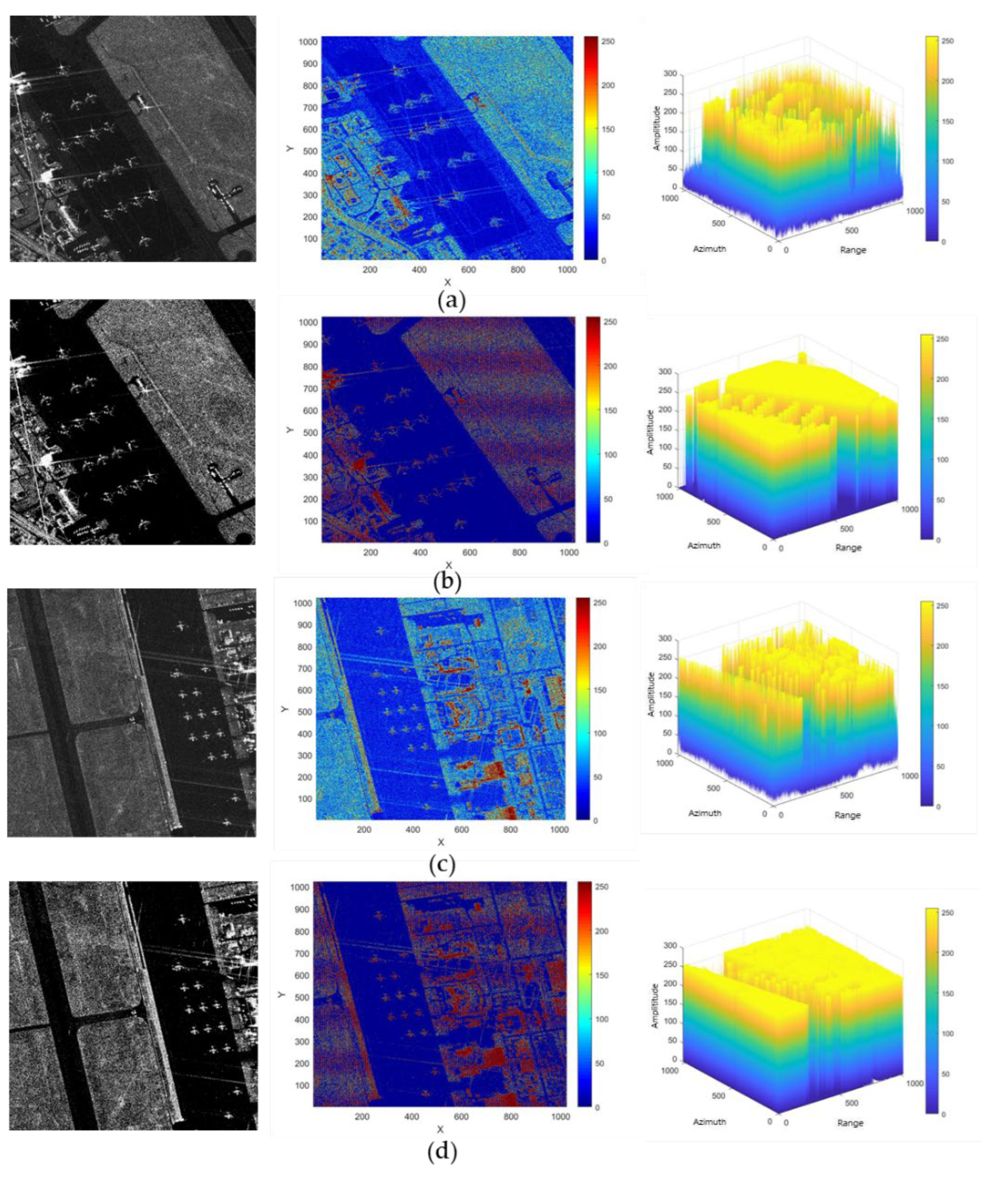

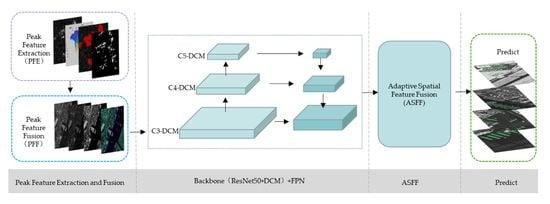

To address the above-mentioned problems, an adaptive pyramid deformable network combined with peak feature fusion (PFF-ADN) is proposed to improve the performance of aircraft detection under complex backgrounds and densely arranged targets. The strong scattering points represent the maximum pixel values of the local scattering regions in SAR images and have superior significance and stability [26]. The distribution of scattering centers is reflected by the peak features, which can also describe the geometric shape of the target in a complex environment. Therefore, combining the peak features is a feasible way to enhance the performance of aircraft detection. (1) In the peak feature extraction (PFE) stage, because the strong scattering points tend to be distributed on common components such as the nose, fuselage, tail, wings, and engines, the corner points extracted by the Harris detector [27], which is rotationally invariant, are selected as the basis for modeling the scattering information of aircraft. The peak features of the image are then acquired by detecting local maxima over the 8-neighborhood of each pixel, and are sent to the next stage for feature fusion. (2) In the peak feature fusion stage, the image data processed by PFE are integrated into the image as the G-channel data. This operation fuses the peak features of aircraft into the image, enhancing the saliency of aircraft under various imaging conditions. Meanwhile, it helps to mitigate variability and provides more effective information to the detection network. (3) In the deep feature extraction stage, an adaptive deformable network for aircraft detection is proposed by incorporating the ASFF and the DCM. The ASFF copes with the inconsistency of multi-scale features, which helps to fully exploit the representational power of the feature pyramid network and improves the ability to detect multi-scale aircraft, especially small-size aircraft. The DCM is adopted to cope with the attitude sensitivity of aircraft in SAR imaging and the various shapes of aircraft, allowing the detection network to accommodate geometric variations. Finally, experiments are conducted on the GF3 dataset to validate the effectiveness of each presented module and to demonstrate the superiority of the proposed method.
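The 8-neighborhood local-maximum test mentioned in the peak feature extraction stage can be illustrated with a minimal sketch. It is not the authors' implementation: the function name `local_peaks`, the `threshold` parameter, the strict "greater than" comparison, and the choice to skip border pixels are all assumptions made for illustration, and the Harris corner step is omitted.

```python
def local_peaks(image, threshold=0.0):
    """Return (row, col) positions whose value exceeds all 8 neighbours.

    `image` is a 2-D list of magnitudes. Border pixels are skipped and
    values at or below `threshold` are ignored (both are assumptions).
    """
    rows, cols = len(image), len(image[0])
    peaks = []
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            centre = image[r][c]
            if centre <= threshold:
                continue
            # Collect the eight surrounding pixel values.
            neighbours = [
                image[r + dr][c + dc]
                for dr in (-1, 0, 1)
                for dc in (-1, 0, 1)
                if (dr, dc) != (0, 0)
            ]
            if all(centre > n for n in neighbours):
                peaks.append((r, c))
    return peaks


demo = [
    [0, 0, 0, 0, 0],
    [0, 5, 1, 0, 0],
    [0, 1, 1, 0, 0],
    [0, 0, 0, 9, 0],
    [0, 0, 0, 0, 0],
]
print(local_peaks(demo))
```

In this toy image only the two isolated bright pixels pass the test; their weaker neighbours are rejected because at least one adjacent value is larger.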

The main contributions of our work can be summarized as follows:

- (1) A novel integrated framework named PFF-ADN is proposed for SAR aircraft detection, which enhances the scattering features of the target by fusing the peak features of images and improving the network structure. The presented method achieves state-of-the-art performance on the GF3 dataset.

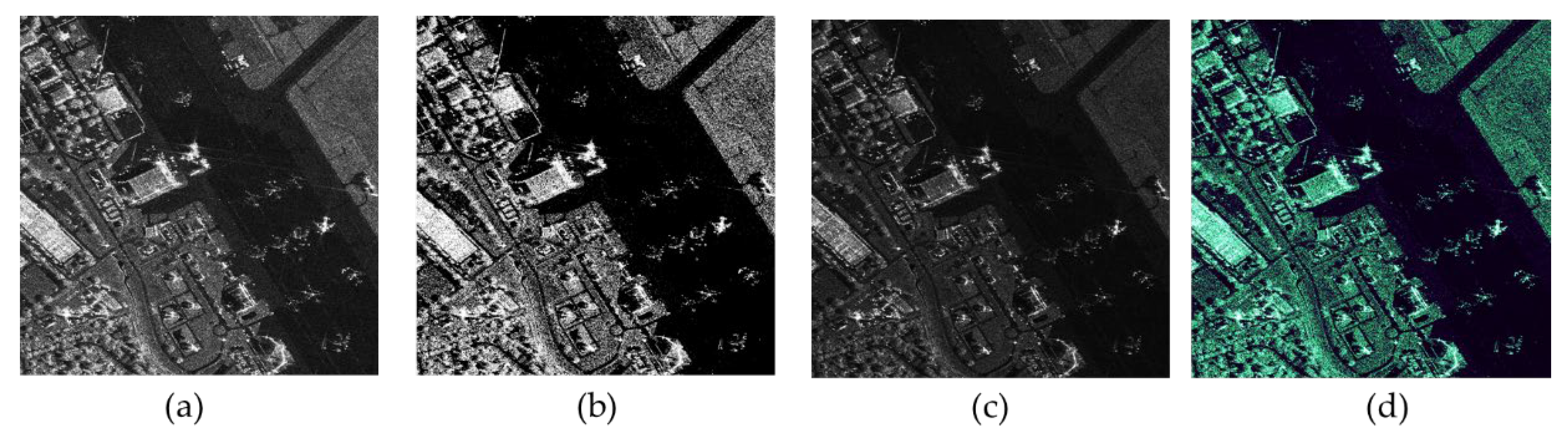

- (2) A peak feature fusion strategy is designed to enhance the brightness information of aircraft in SAR images by extracting and fusing the peak feature information, which is more robust to the variability and obscureness caused by the scattering mechanism of SAR. Compared to the raw images, the aircraft characteristics are highlighted in the enhanced images, providing effective information on aircraft for the subsequent network.

- (3) An adaptive deformable network for aircraft detection is designed, which is composed of a Feature Pyramid Network with the ASFF structure and a deformable convolution module (DCM). The ASFF is introduced to resolve the inconsistency of multi-scale features and retain more discrete information about small-size aircraft, which enhances detectability, especially for small-size aircraft. The DCM is adopted to cope with the attitude sensitivity of aircraft in SAR imaging and the various shapes of aircraft, allowing the detection network to accommodate geometric variations.

The remainder of this paper is organized as follows. Section 2.1 reviews the related work, covering both DL methods and traditional methods of aircraft detection in the SAR domain. Section 2.2 describes the proposed PFF-ADN algorithm in detail. In Section 3, the parameter configuration, assessment criteria, experimental results, and performance evaluation are presented. Finally, we summarize this article and discuss prospects for further research in Section 4.

3. Experiments and Analyses

In this section, experiments on the proposed PFF-ADN are conducted on the GF3 dataset for illustration and comparison. To confirm the effectiveness of each module, the detection results of ablation experiments are presented and the contributions of the different modules are discussed.

3.1. Data Set Description and Parameter Setting

The built datasets contain 69 GF3 SAR images with sufficient desired variations. The GF3 images show C-band HH polarization with 1 × 1 m resolution, and contain 1903 SAR aircraft. These magnitude images with expert annotation cover a variety of complex scenes in which it is difficult to distinguish targets from the background noise, such as scenes where small aircraft are densely arranged, and scenes where both large and small aircraft exist together. In addition, aircraft adjacent to buildings are common in this dataset. Therefore, GF3 is established to verify the performance of the proposed algorithm in a complex background. We randomly chose 49 images as the training set and the remaining 20 images as the test set. The large-scale images in GF3 are about 20,000 × 20,000 pixels, which need to be cut into slices of 1000 × 1000 pixels with an overlap of 200 pixels. The reason for the 200-pixel overlap is to avoid cutting off the aircraft. Detailed information on the experimental dataset is given in

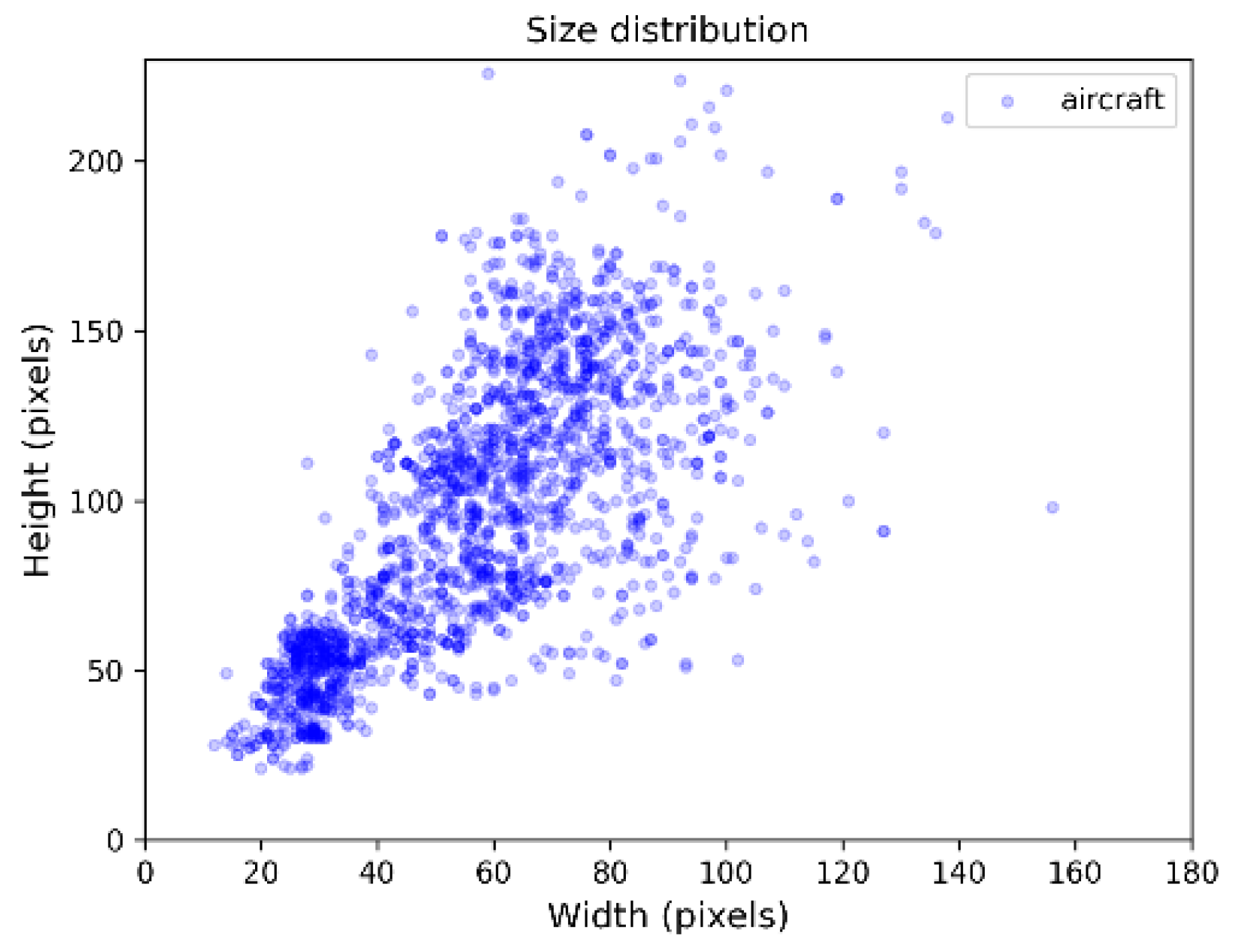

Table 1. Besides this, the size distribution of aircraft in this dataset also varies considerably, as shown in

Figure 7.
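The slicing step can be sketched as follows. This is an illustrative sketch rather than the procedure actually used: the helper names `tile_origins` and `slice_boxes` are hypothetical, and the handling of the last slice (shifting it back so that it ends exactly at the image edge) is an assumption, since the text does not say how the border is treated.

```python
def tile_origins(length, tile=1000, overlap=200):
    """Start offsets of tiles along one axis, stepping by tile - overlap."""
    stride = tile - overlap
    if length <= tile:
        return [0]
    origins = list(range(0, length - tile + 1, stride))
    # Assumption: shift one final tile back so the image edge is covered.
    if origins[-1] + tile < length:
        origins.append(length - tile)
    return origins


def slice_boxes(width, height, tile=1000, overlap=200):
    """Return (x1, y1, x2, y2) pixel boxes for every slice of the image."""
    boxes = []
    for y in tile_origins(height, tile, overlap):
        for x in tile_origins(width, tile, overlap):
            boxes.append((x, y, min(x + tile, width), min(y + tile, height)))
    return boxes


boxes = slice_boxes(20000, 20000)
print(len(boxes), boxes[0], boxes[-1])
```

With a stride of 800 pixels, any aircraft smaller than the 200-pixel overlap that is cut by one slice boundary appears whole in the neighbouring slice, which is consistent with the 20 to 110 pixel aircraft sizes reported for this dataset.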

Parameters in the PFF stage: In the PFF step, for the image containing varied brightness information, the mean values of R and B channels are set to three and six empirically when the image data are processed by KTN.

Parameters in the ADN stage: ResNet50 with DCM is taken as the backbone of the ADN and is pre-trained on COCO. The input size for the ADN is 1000 × 1000 pixels, and the basic anchor sizes for P3, P4, and P5 are set to 32, 64, and 128, respectively. Stochastic gradient descent (SGD) is applied to train the model with a batch size of eight images. The momentum and weight decay of the optimizer are fixed at 0.9 and 0.0001, respectively, and the number of training steps is set to 60,000. The initial learning rate is set to 0.001 and decays to 0.0001 after 60,000 steps for the convergence of the loss.

In addition, the experiment is performed on an Nvidia Titan 2080 Ti, and the configuration of the operating system environment includes Ubuntu16.04, CUDA10.1, Pytorch1.17, and Tensorflow 2.1.0.

3.2. Evaluation Metrics

To evaluate the performance of the detection task, a variety of evaluation metrics are employed in the experiment, namely, Precision (P), Recall (R), False Alarm Rate (FAR), F1-score, Average Precision (AP), and Running Time. These metrics are computed from four well-established values measured in the experiments: True Positive (TP), False Positive (FP), False Negative (FN), and running time for each scene. TP and FP represent the correctly detected objects and the false alarms, respectively. FN denotes a missing target. The intersection over union (IoU) indicates the extent of overlap between the predicted bounding box and the true bounding box in the image. The value of IoU is in the range of 0 to 1. When the two bounding boxes coincide completely, the value of IoU is 1. In this experiment, if the IoU is greater than 0.5, the detected bounding box is considered a TP.
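As a concrete illustration of these quantities, the sketch below computes the IoU of two axis-aligned boxes and derives precision, recall, and F1-score from TP, FP, and FN counts using the standard definitions. The function names and the `(x1, y1, x2, y2)` box layout are assumptions for illustration; the false alarm rate is left out because its exact definition is not given in this section.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def detection_scores(tp, fp, fn):
    """Precision, recall, and F1-score from raw detection counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


truth = (0, 0, 10, 10)
oversized = (0, 0, 20, 20)  # fully covers the target but is too large
print(iou(truth, oversized) > 0.5)
print(detection_scores(tp=90, fp=10, fn=10))
```

The oversized box contains the whole target yet has an IoU of only 0.25, so under the 0.5 threshold it would be counted as a false alarm rather than a TP.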

In addition, the F1-score is utilized to evaluate the comprehensive performance of the proposed approach, which is defined in Formula (16). Since different precision and recall values arise at different confidence thresholds, the Precision–Recall (PR) curve is introduced to balance the two metrics, where the recall rate is the horizontal coordinate and the precision rate is the vertical coordinate. The value of AP is the area under the PR curve, which can be used for evaluating the overall effectiveness of the algorithm. The method for calculating AP is shown in Formula (17).
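One common way to approximate the area under the PR curve is sketched below. It is an assumption-laden illustration, not a reproduction of Formula (17): detections are ranked by confidence, precision and recall are updated after each detection, and the area is accumulated as a sum of rectangles without any interpolation. The function name `average_precision` and the `(confidence, is_true_positive)` input layout are hypothetical.

```python
def average_precision(scored_hits, num_targets):
    """Rectangle-rule area under the PR curve.

    `scored_hits` is a list of (confidence, is_true_positive) pairs and
    `num_targets` is the number of ground-truth targets.
    """
    if num_targets == 0:
        return 0.0
    ranked = sorted(scored_hits, key=lambda d: d[0], reverse=True)
    tp = fp = 0
    ap = 0.0
    prev_recall = 0.0
    for _, is_hit in ranked:
        if is_hit:
            tp += 1
        else:
            fp += 1
        precision = tp / (tp + fp)
        recall = tp / num_targets
        # Area contributed by the recall gained at this detection.
        ap += (recall - prev_recall) * precision
        prev_recall = recall
    return ap


detections = [(0.9, True), (0.8, False), (0.7, True)]
print(average_precision(detections, num_targets=2))
```

Lowering the rank of a false alarm, or removing it, raises the precision at every later recall level and therefore increases the accumulated area.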

3.3. Ablation Experiments

3.3.1. Effect of PFF

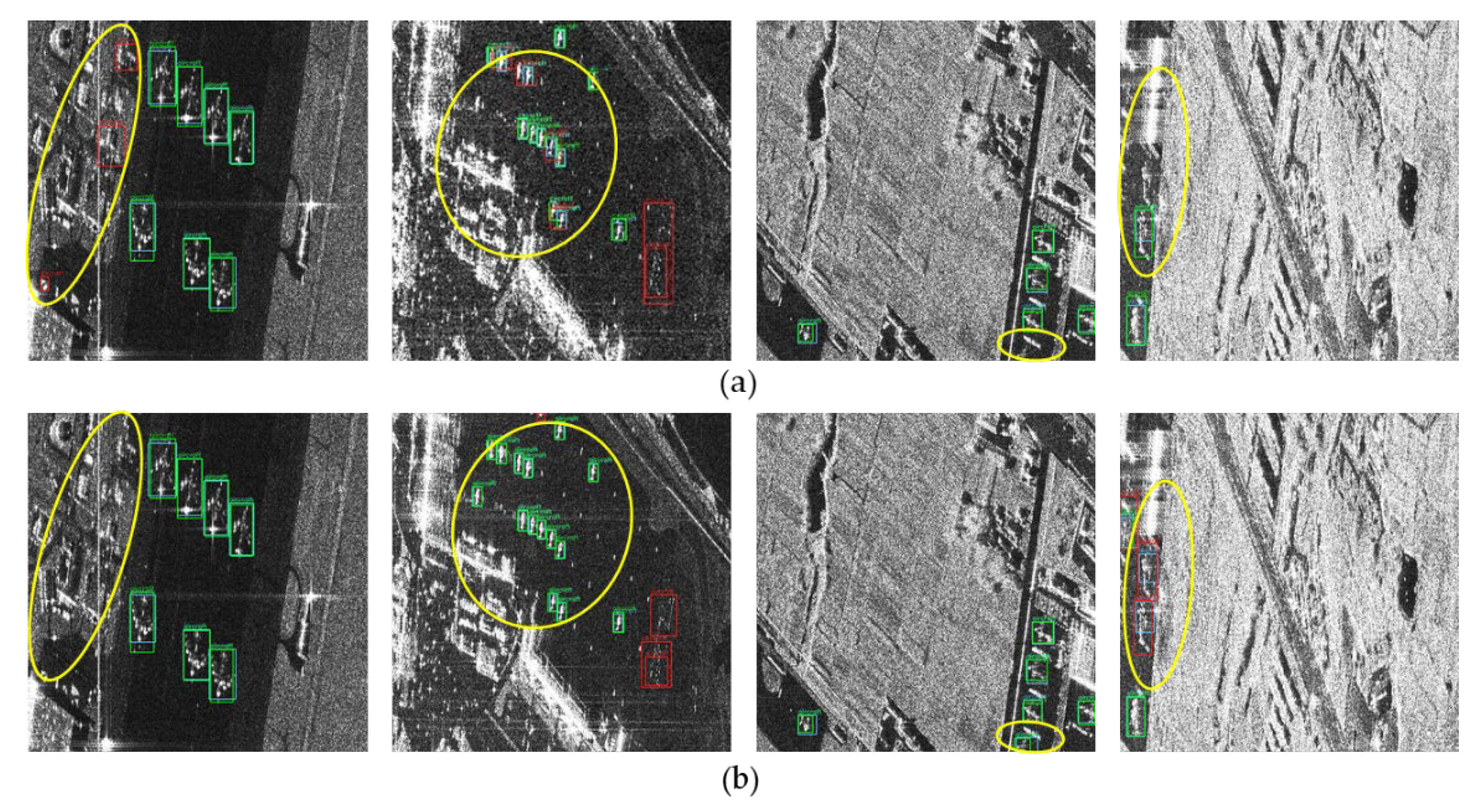

The effective pre-processing algorithm is beneficial to improving the performance of detection in SAR images, especially for the higher recall rate. The effectiveness of the PFF is given in

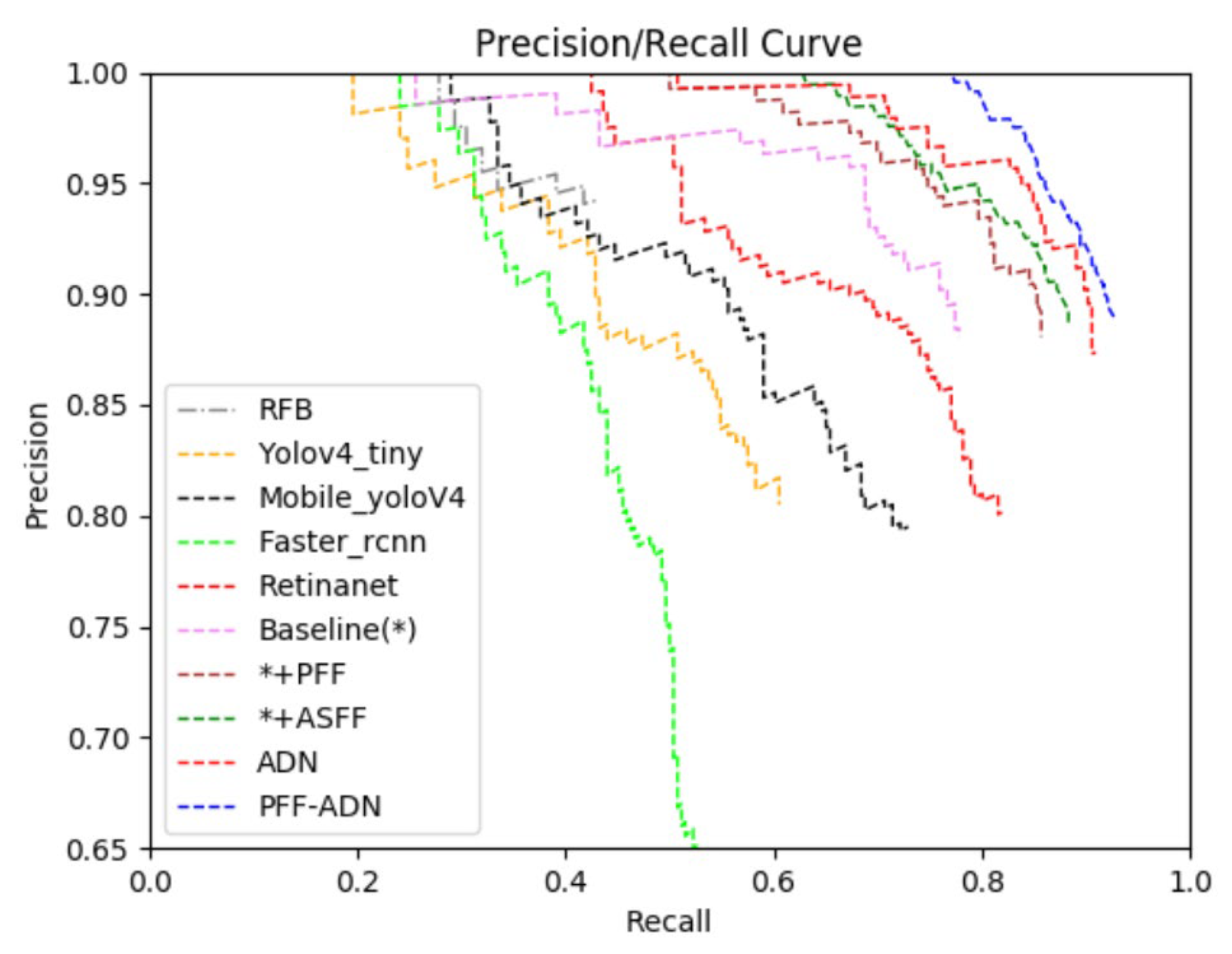

Table 2. As can be seen from the table, the recall rate decreases obviously, but the precision reduces only a little bit. Fortunately, when the PFF is added, the test AP and F1 increase by 5.64% and 3.39%. The two lines in

Figure 8 show the implementation results of adding PFF on the number of false alarms and detected targets through a before-and-after comparison. In the first (from left to right) column, two false alarms are suppressed. In the second column, not only are two small false alarm targets suppressed, but a small target in a densely arranged SAR scene is also detected. However, there are still two large-size false alarms that are mistaken for aircraft, even with the added PFF. The reason why the false alarms are not eliminated may be that the features of the background are enhanced along with the target. Another reason may be that the characterization ability of the detection network is insufficient. In the third column, the aircraft at the bottom of the image is also detected. In the fourth column, although the results show three more false alarms of small size, three more aircraft can be assumed to be detected. Since the bounding boxes of the detected aircraft are too large, the IoU between the detected bounding box and the true bounding box is less than 0.5, so these are considered false alarms. It can be inferred that the features from PFF are rich but redundant, leading to serious interference. In a word, the scale-invariance features of aircraft in SAR images are enhanced by adding the PFF, improving the detection performance in SAR images to a certain extent. As shown in

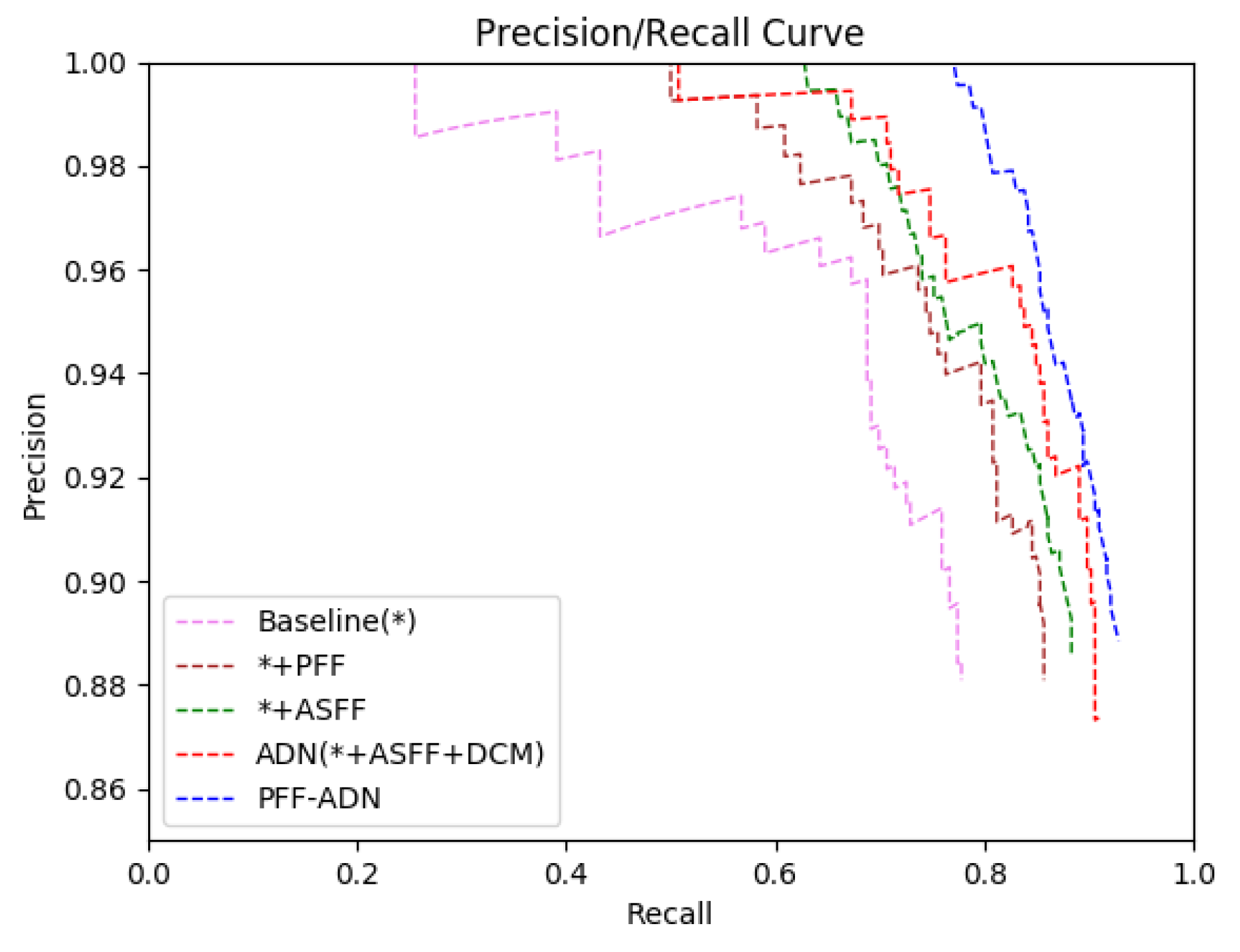

Figure 9, the PR curve indicates the superiority of the proposed model.

3.3.2. Effect of ADN

Experiments are designed to verify the effectiveness of ADN, which contains two aspects: the ASFF and the DCM. The quantitative evaluation results of ASFF are displayed in

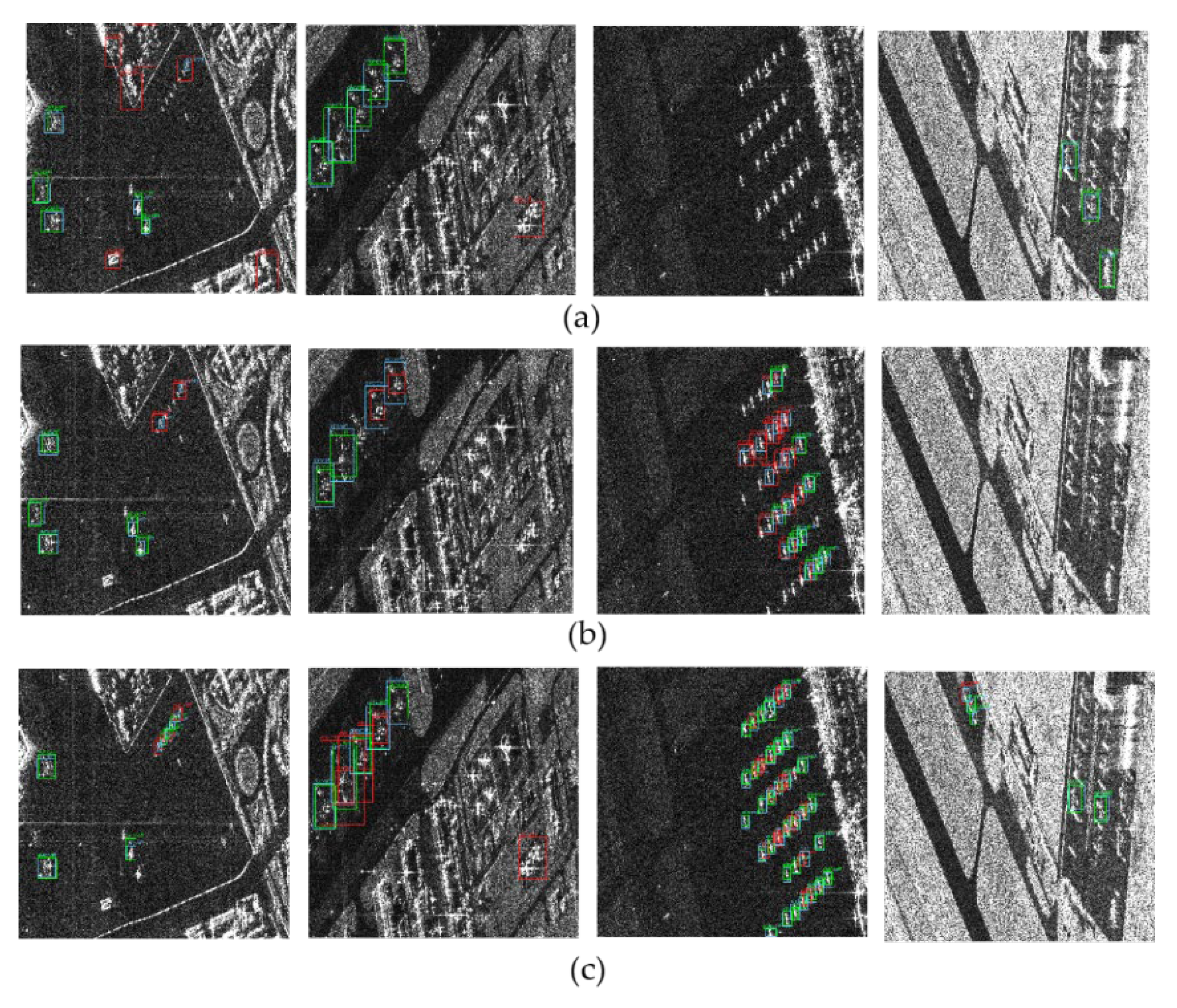

Table 3. The precision rate and recall rate of the baseline algorithm are all increased up to 88.60% and 88.34%, respectively, by adding ASFF. The first two lines in

Figure 10 show the results of adding ASFF on the number of false alarms and detected targets through a before-and-after comparison. In the first (from left to right) column, two false alarms are suppressed. In the second column, one more aircraft is detected in dense arrangements of SAR scenes. In the third column, two more aircraft are detected by adding the ASFF. However, one more false alarm appears, because the IoU is less than 0.5. The reason may be that the detection network cannot accurately extract the features of small targets. So, the DCM will be utilized to improve this condition in the next step.

In the fourth column, one more small-size aircraft and two large-size aircraft are detected. It might be inferred that the features of ASFF are rich, leading to more aircraft being detected. In a word, the detection algorithm gives full play to the characterization capabilities of the feature pyramid by adding ASFF, further improving the detection performance of multi-scale aircraft, especially for the small-size aircraft in SAR images.
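The core idea behind adaptive fusion of pyramid levels can be illustrated at a single spatial location. The sketch below is a simplified reading of ASFF and not the network used in this paper: it assumes the features from the different levels have already been resized to a common resolution, and it takes the per-level weight logits as given inputs, whereas in a real network they would be predicted by learned layers. The names `softmax` and `asff_fuse` are hypothetical.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of numbers."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def asff_fuse(level_features, level_logits):
    """Fuse one pixel's features from several pyramid levels.

    The fusion weights are non-negative and sum to 1, so the output is a
    convex combination of the per-level feature values.
    """
    weights = softmax(level_logits)
    fused = sum(w * f for w, f in zip(weights, level_features))
    return fused, weights


fused, weights = asff_fuse([3.0, 6.0, 9.0], [0.0, 0.0, 0.0])
print(fused, weights)
```

Because the weights are computed per location, a level that carries conflicting information at a given pixel can be down-weighted there while still contributing elsewhere, which is one way to read the "inconsistency of multi-scale features" that the ASFF is meant to resolve.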

Furthermore, another experiment is performed to reveal the effectiveness of the DCM. As shown in Table 3, the algorithm's performance is greatly enhanced by adding the DCM. Compared to the method without the DCM, namely, * + ASFF, the method with it (* + ASFF + DCM) performs better in terms of the quantitative indicators. The recall rate increases from 88.34% to 90.97%. As displayed in the last two rows of Figure 10, more aircraft against a complicated background are detected in the fourth column of SAR scenes. This confirms that the introduced deformable convolution is beneficial to the fine localization of SAR aircraft. However, in the fourth column, there is still a case in which noise is detected as a false alarm. Encouragingly, when the DCM is added, the AP value increases from 88.46% to 89.12%. The PR curve representing the AP value is shown in Figure 9. It can be concluded from the PR curve that a clear improvement in the AP value is achieved by adding the ASFF and the DCM, respectively, demonstrating the effectiveness of each proposed module.

3.3.3. Performance of PFF-ADN

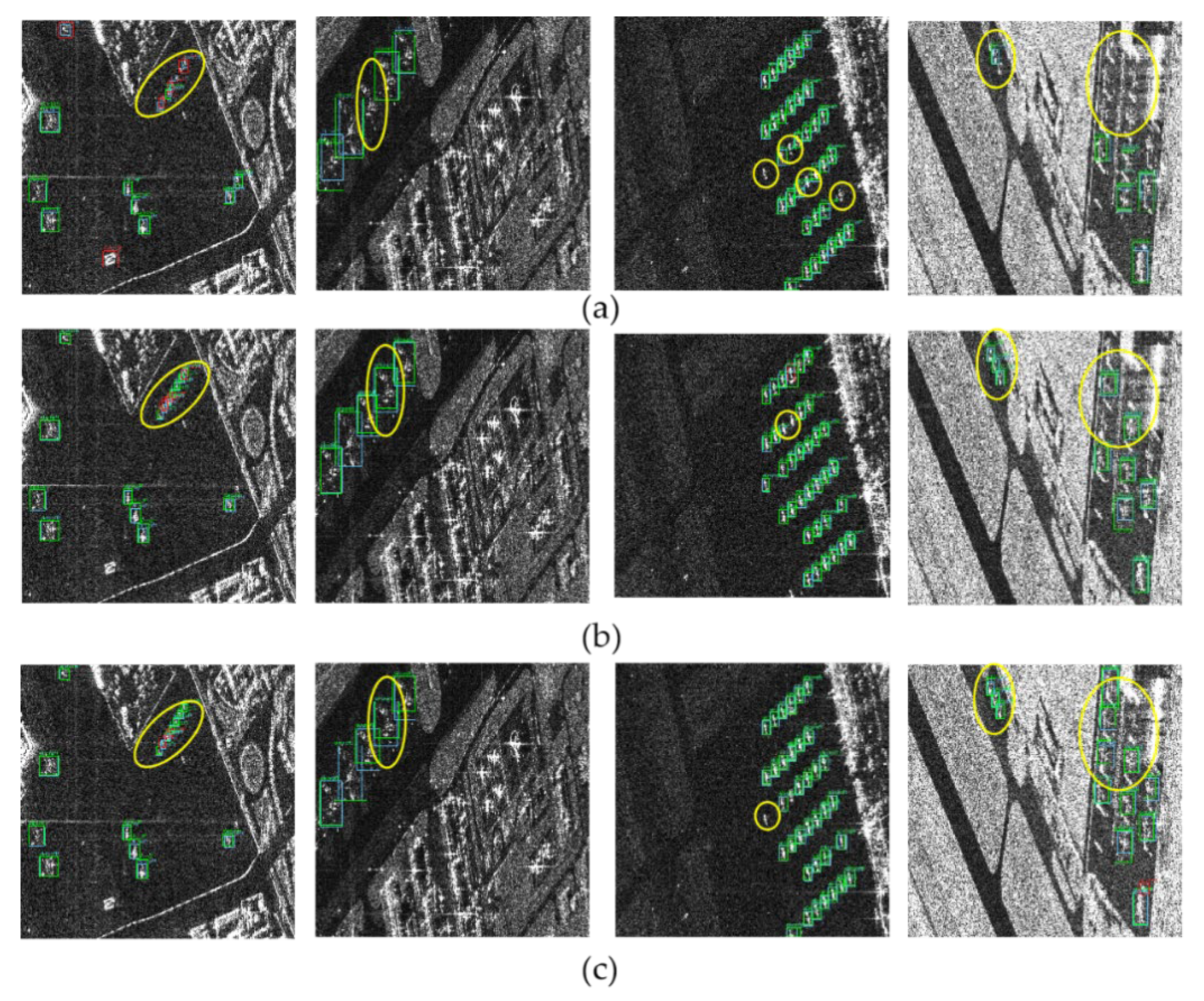

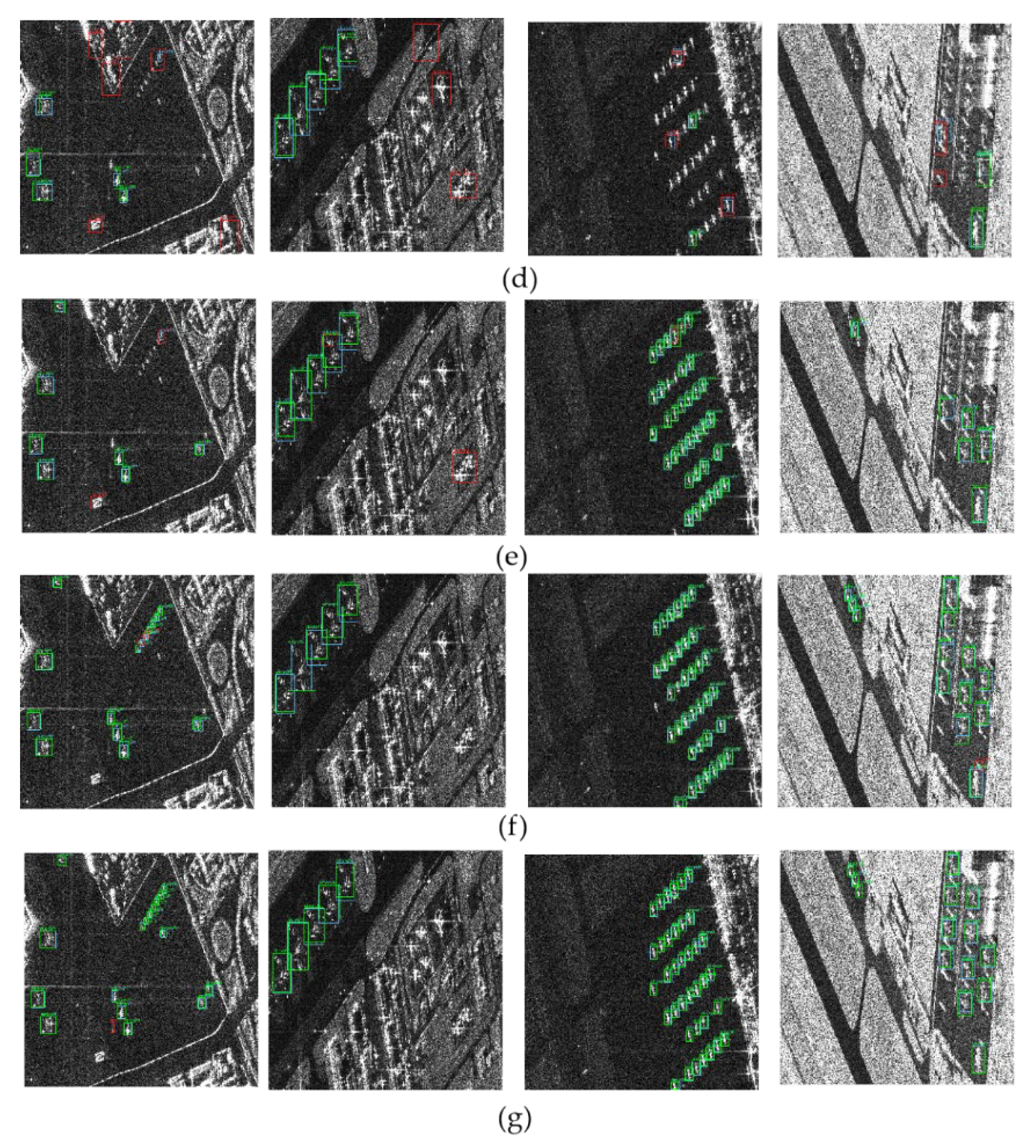

To further validate the superiority of the proposed PFF-ADN, the results are compared with those of other detectors. Table 4 lists the quantitative assessment results of the algorithms. All the metrics of the proposed approach are improved considerably. Meanwhile, to better illustrate the performance of each approach, four representative images are selected to visually show the detection results of aircraft in different conditions, as exemplified in Figure 11. A high false alarm rate and a low recall rate appear in the results of lightweight algorithms, such as Yolov4-tiny and Mobile-yolov4. Besides this, in the case of small-size aircraft, the detection results of Yolov4-tiny, RFB, and FastRcnn-resnet50 are poor. Small-size aircraft are missed because these algorithms only utilize the high-level semantic information of deeper layers to predict objects. RetinaNet adopts a feature pyramid structure that fuses the semantic information of deep features and the high-resolution information of shallow features, and then makes predictions in different feature layers, significantly improving the recall rate of small-size aircraft. However, as can be seen in the results of the other detectors, some aircraft are still missed. Therefore, in this paper, the PFF is proposed as a SAR image pre-processing step. The PFF is employed to enhance the scattering features of aircraft in SAR images and thus capture the non-obvious features of aircraft. The ADN structure, which incorporates the ASFF and the DCM, is proposed as the network structure to improve accuracy. The ASFF is introduced to resolve the inconsistency between multi-scale features, boosting the representational capacity of the feature pyramid structure. The DCM is exploited to cope with the geometric variations or attitude sensitivity of aircraft in SAR images. The last three rows of Figure 11 show that the detection results of PFF-ADN are better than those of RetinaNet in four typical SAR scenes. In the first column, all the small-size aircraft are detected by PFF-ADN, while small-size aircraft in dense arrangements are not detected by RetinaNet. In the third column, the small-size aircraft in a dense arrangement are all detected by PFF-ADN, while two aircraft are missed by RetinaNet. In the fourth column, all aircraft are detected by PFF-ADN under complex conditions in which both large and small aircraft exist.

To sum up, the validity of the two modules in PFF-ADN has been demonstrated by ablation experiments. All the results are presented in Table 2, Table 3, and Table 4. The proposed algorithm not only achieves a higher recall rate, but also suppresses false alarms in multiple complex conditions.

The Precision–Recall curve, which measures the detection performance of the network structure, is shown in Figure 12. The larger the area under the curve, the better the performance of the network structure. Therefore, in comparison with other detectors, the proposed approach achieves better detection performance in SAR images.