Aircraft Detection in SAR Images Based on Peak Feature Fusion and Adaptive Deformable Network

Abstract

1. Introduction

- (1) Discrete points. Owing to the SAR imaging mechanism, an aircraft in a SAR image appears as a few strong scattering points that form only a faint outline of the target. Combined with interference from background clutter, this easily leads to missed detections;

- (2)

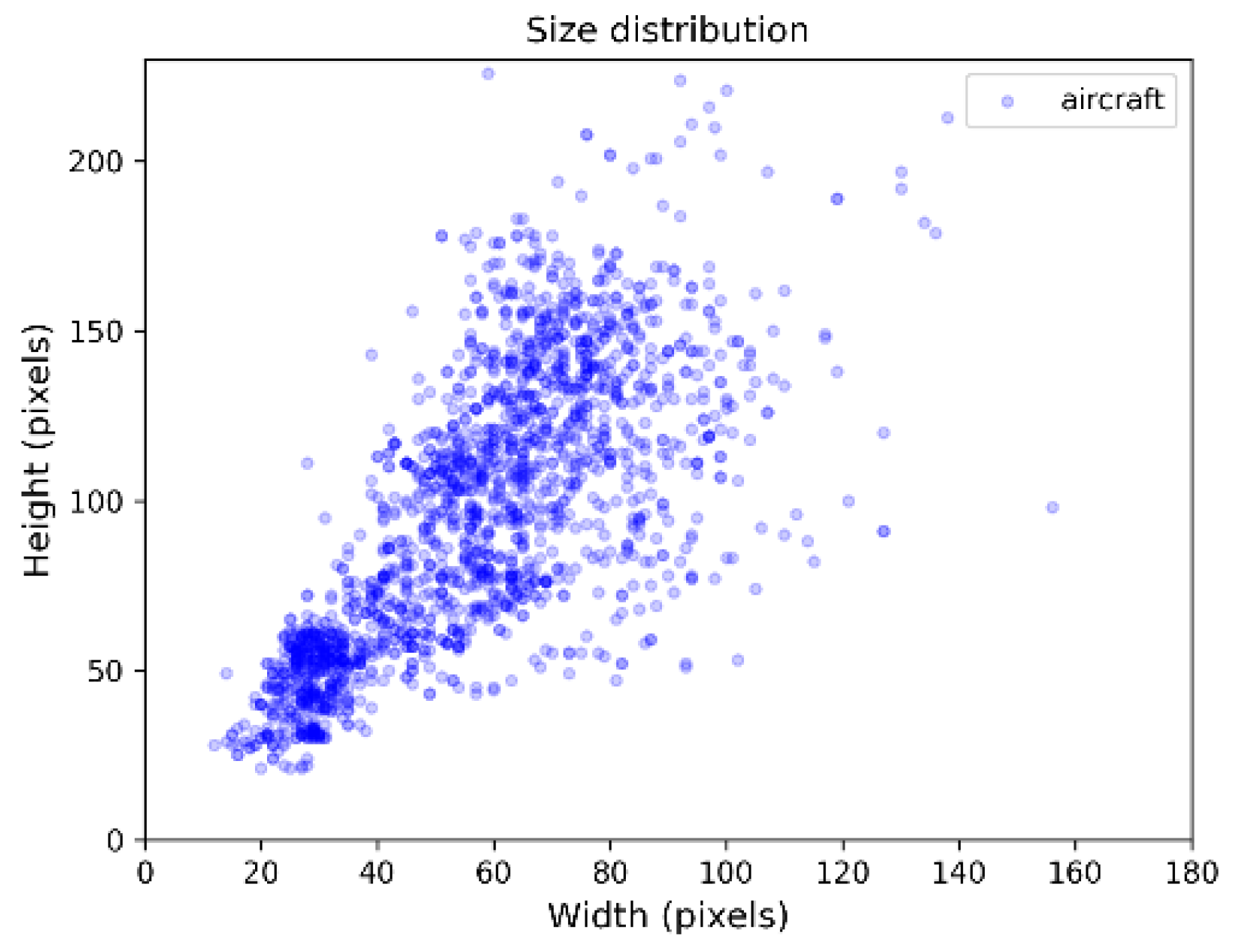

- Multi-scale targets. In this study, the size of most aircraft in the GF3 dataset ranges from 20 to 110 pixels. However, with the deepening of the CNN, the spatial information of small-size aircraft with fewer pixels is easy to lose, which poses a challenge to multi-scale aircraft detection;

- (3) Attitude sensitivity. As the azimuth angle changes, the same aircraft looks different from one SAR image to another. With only limited training samples available, it is difficult to model the geometric transformations of aircraft whose appearance varies in this way.

- (1)

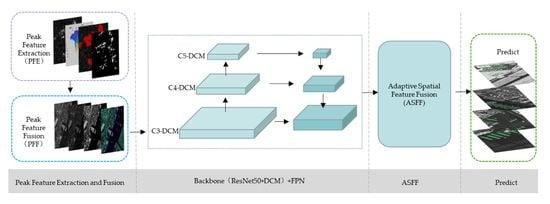

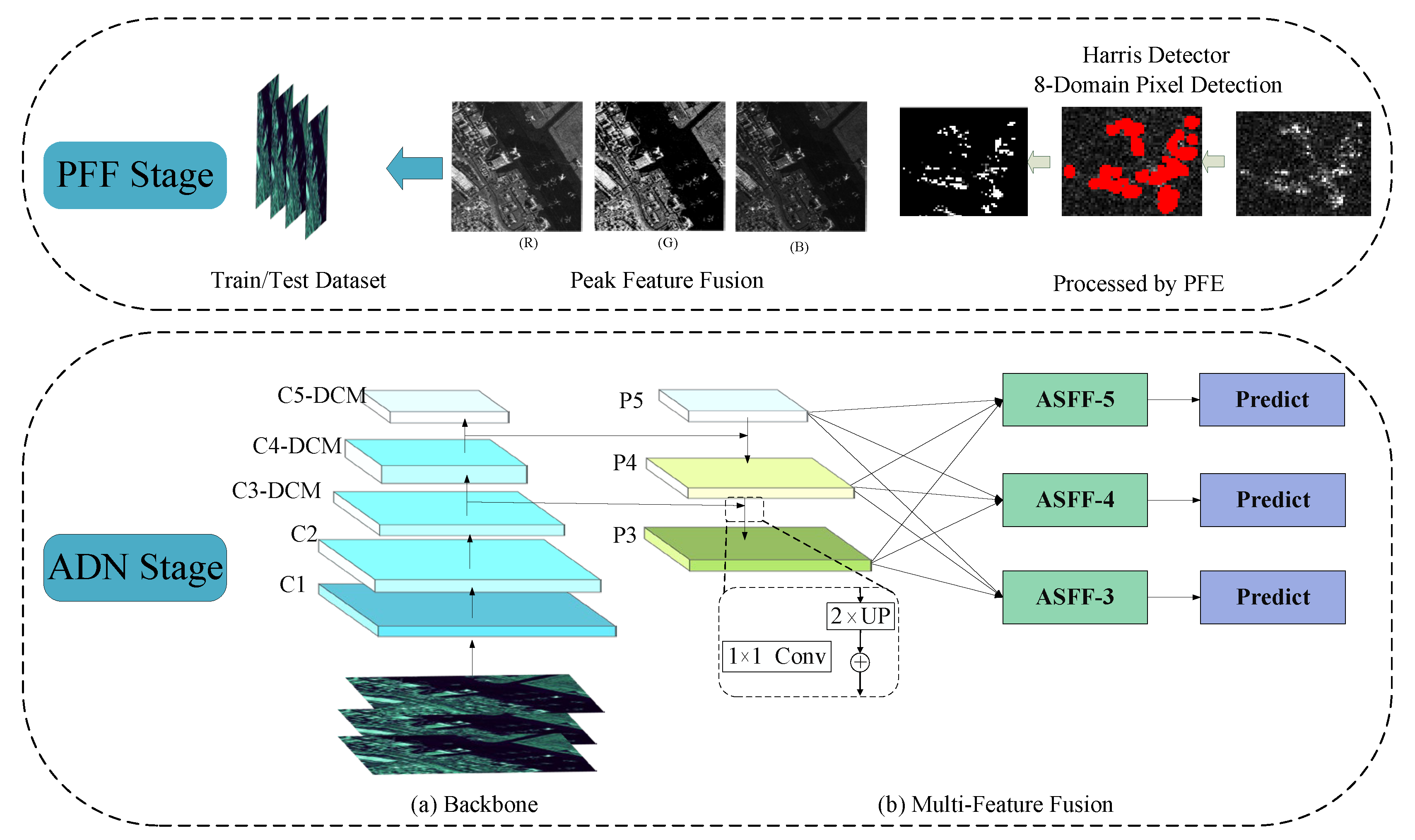

- A novel integrated framework named PFF-ADN is proposed for SAR aircraft detection, which enhances the scattering features of the target by fusing the peak features of images and improving the network structure. This presented method achieves state-of-the-art performance on the GF3 dataset.

- (2)

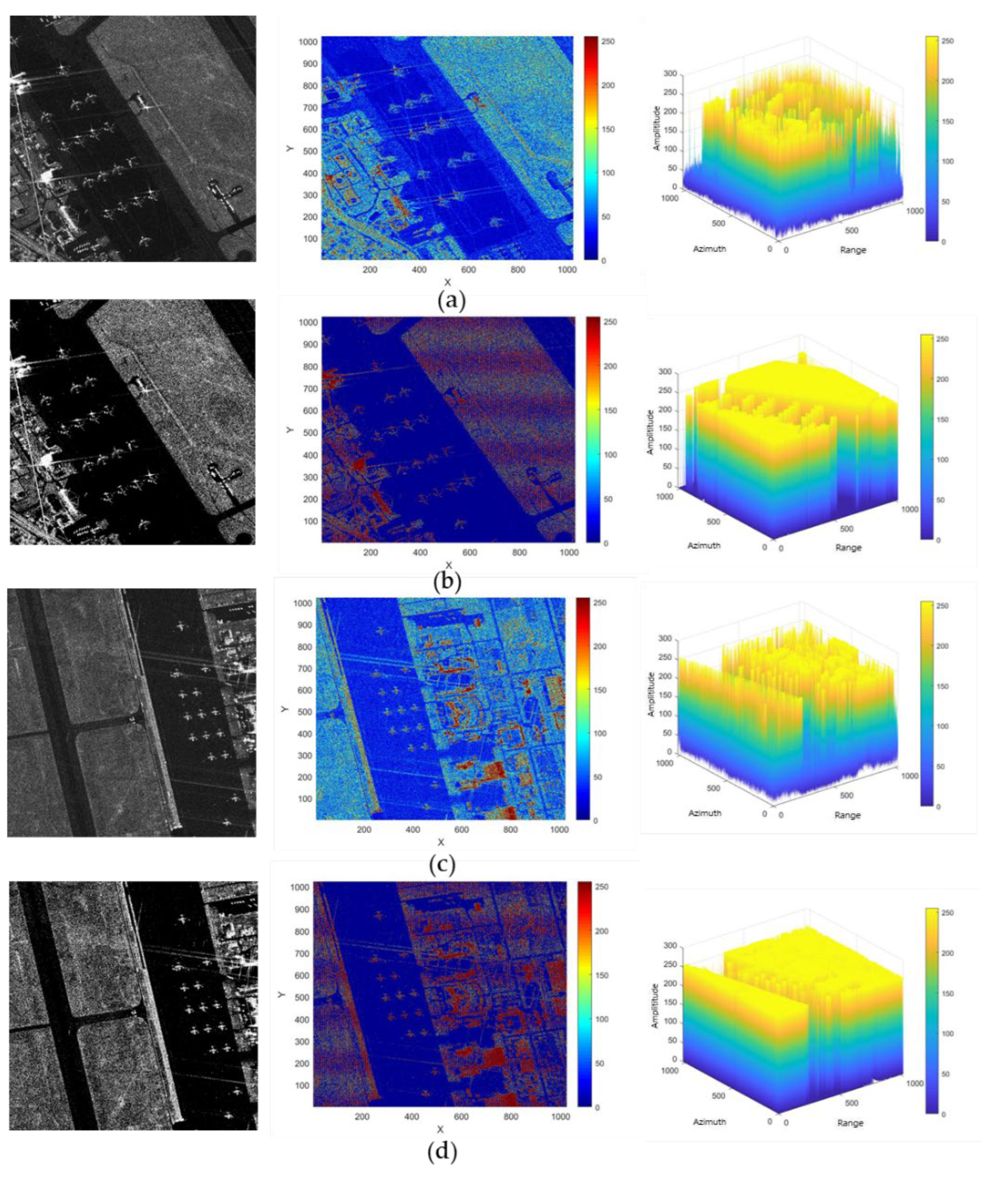

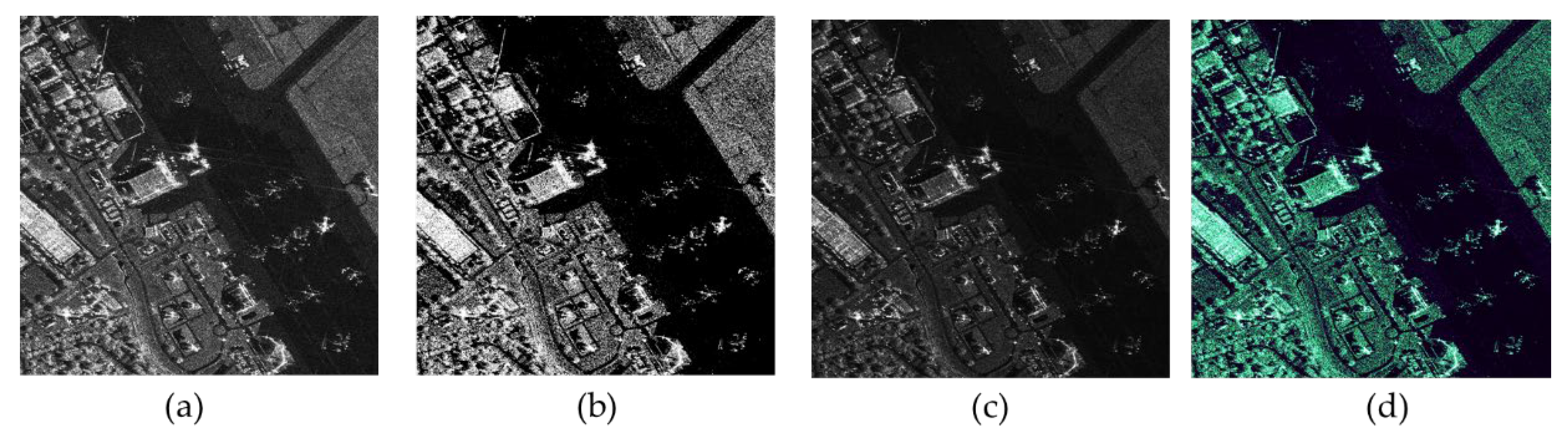

- A peak feature fusion strategy is designed for enhancing the brightness information of aircraft in the SAR images by extracting and fusing the peak feature information, which has stronger robustness to deal with the variability and obscureness caused by the scattering mechanism of SAR. Compared to the raw images, the aircraft characteristics are highlighted in the enhanced images, providing effective information on aircraft for the subsequent network.

- (3) An adaptive deformable network is designed for aircraft detection. It consists of a Feature Pyramid Network with an ASFF structure and a deformable convolution module (DCM). ASFF is introduced to resolve the inconsistency among multi-scale features and to retain more of the discrete information of small aircraft, which improves detectability, especially for small aircraft. The DCM is adopted to cope with the attitude sensitivity of aircraft in SAR imaging and with the variety of aircraft shapes, so that the detection network can accommodate geometric variations.

2. Materials and Methods

2.1. Related Work

2.1.1. Aircraft Detection Based on DL in SAR Images

2.1.2. Traditional Candidate Feature Methods in SAR Images

2.2. The Proposed Method

2.2.1. Overview of the Proposed Method

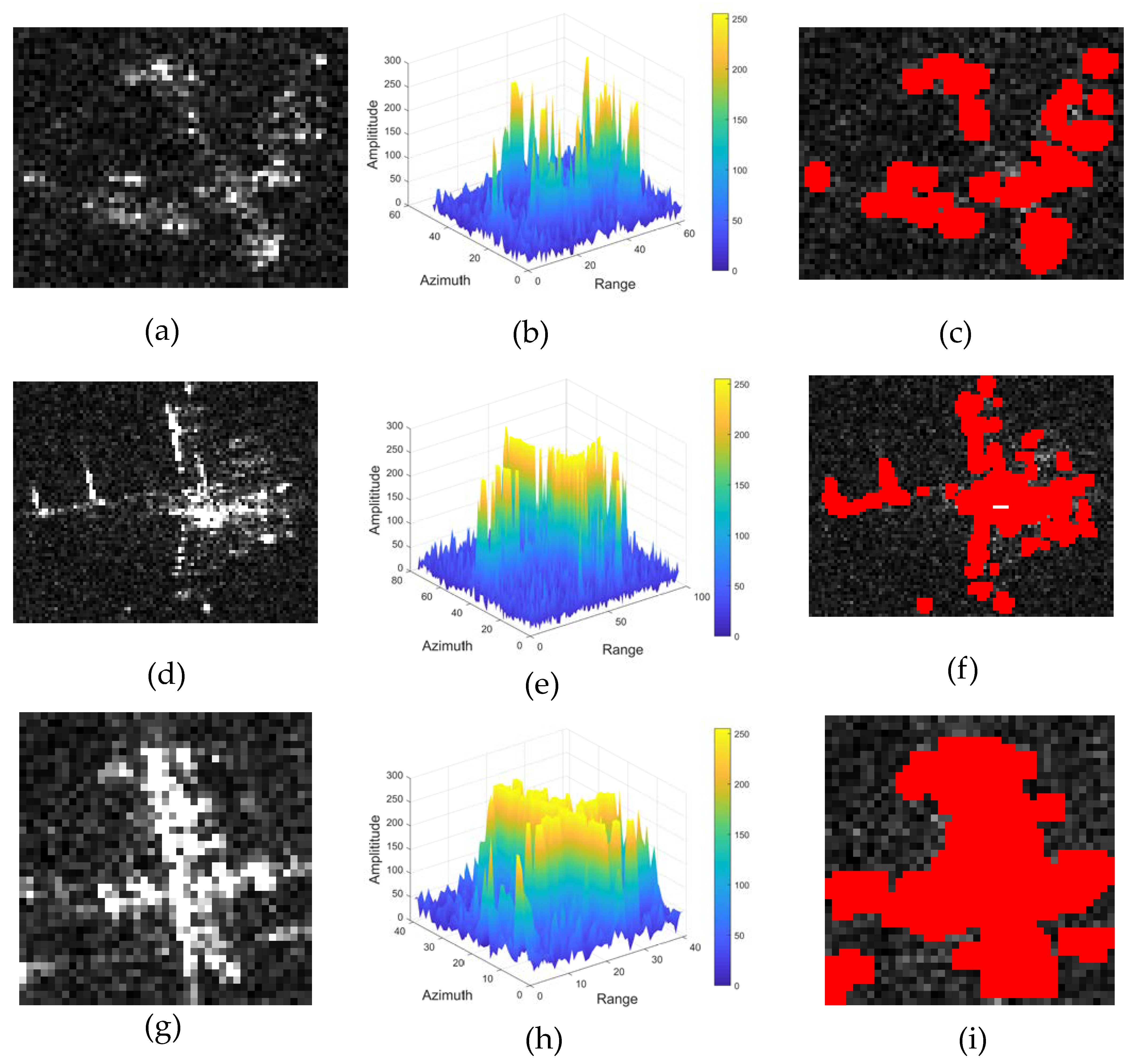

2.2.2. PFE

| Algorithm 1: Peak Feature Extraction | |
|---|---|
| Input: | |
| the mean and variance of the background region | |
| Hyper-parameter: = 0.4 | |
| Output: | |
| Main loop: | |
| 1: | Compute the gradients in the two directions: |
| 2: | |
| 3: | Calculate the product of the gradients of the two directions: |
| 4: | |
| 5: | A Gaussian weight is assigned to each gradient acquired: |
| 6: | , |
| 7: | |
| 8: | for corner-point do |
| 9: | |
| 10: | add |
| 11: | else |
| 12: | pass |
| 13: | for do |
| 14: | if |
| 15: | add |
| 16: | else |
| 17: | pass |
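The surviving steps of Algorithm 1 (directional gradients, gradient products, Gaussian weighting, a corner-point loop and a second selection loop) resemble a Harris-style corner response [27] followed by a background-statistics test. The sketch below is one possible reading under that assumption, not the authors' implementation. The names `harris_response` and `extract_peaks`, the parameters `rel_thresh` and `n_sigma`, and the value `k = 0.04` are all hypothetical; the hyper-parameter value 0.4 in the listing is not used because its symbol did not survive.

```python
import math


def harris_response(img, k=0.04, sigma=1.0, radius=1):
    """Harris-style corner response for a 2-D list of intensities (assumed reading)."""
    h, w = len(img), len(img[0])
    ix = [[0.0] * w for _ in range(h)]
    iy = [[0.0] * w for _ in range(h)]
    # Steps 1-2: central-difference gradients in the two directions (borders stay 0).
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            ix[r][c] = (img[r][c + 1] - img[r][c - 1]) / 2.0
            iy[r][c] = (img[r + 1][c] - img[r - 1][c]) / 2.0
    # Steps 5-7: Gaussian weights over a (2 * radius + 1)^2 window.
    span = range(-radius, radius + 1)
    gauss = {(dr, dc): math.exp(-(dr * dr + dc * dc) / (2.0 * sigma * sigma))
             for dr in span for dc in span}
    resp = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            sxx = syy = sxy = 0.0
            for (dr, dc), g in gauss.items():
                rr, cc = r + dr, c + dc
                if 0 <= rr < h and 0 <= cc < w:
                    # Steps 3-4: products of the gradients, Gaussian-weighted.
                    sxx += g * ix[rr][cc] * ix[rr][cc]
                    syy += g * iy[rr][cc] * iy[rr][cc]
                    sxy += g * ix[rr][cc] * iy[rr][cc]
            # Classic Harris measure: det(M) - k * trace(M)^2.
            resp[r][c] = sxx * syy - sxy * sxy - k * (sxx + syy) ** 2
    return resp


def extract_peaks(img, bg_mean, bg_var, k=0.04, rel_thresh=0.5, n_sigma=3.0):
    """Keep corner candidates that are also bright relative to the background."""
    resp = harris_response(img, k=k)
    max_resp = max(max(row) for row in resp)
    if max_resp <= 0:
        return []
    # Assumed second-stage test: intensity above mean + n_sigma * std of the background.
    level = bg_mean + n_sigma * math.sqrt(bg_var)
    peaks = []
    for r, row in enumerate(resp):
        for c, value in enumerate(row):
            if value > rel_thresh * max_resp and img[r][c] > level:
                peaks.append((r, c))
    return peaks


# A single strong scatterer on a dark 7 x 7 background.
image = [[0.0] * 7 for _ in range(7)]
image[3][3] = 10.0
print(extract_peaks(image, bg_mean=0.0, bg_var=1.0))
```

The two-stage structure is the point of the sketch: a purely geometric corner test would also fire on clutter texture, so the candidates are additionally compared with the background mean and variance that the algorithm takes as input.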

2.2.3. PFF

2.2.4. ADN

- (1) DCM: In standard convolution, the input feature map is sampled at fixed positions on a regular rectangular grid. As a result, all receptive fields in a layer have the same size and shape, so standard convolution cannot handle geometric transformations. Yet, because of the SAR scattering mechanism, the same target takes on various shapes in SAR images as the azimuth angle changes. In this paper, deformable convolution [49] is therefore adopted to accommodate geometric variations, namely the attitude sensitivity of aircraft and the deformation of aircraft parts in SAR images.

- (2) ASFF: To fully exploit the semantic information of deep features and the high-resolution information of shallow features, a feature pyramid network is often used for feature fusion. However, the representational ability of the feature pyramid is limited by the inconsistency among multi-scale features. This inconsistency shows up when aircraft of different sizes must be detected in the same SAR image. The high-resolution information in the shallow layers benefits the detection of small aircraft, while large aircraft can be detected with the semantic information in the deep layers. When large aircraft are detected as positives, small aircraft are easily treated as background and become false negatives, that is, missed detections. Since aircraft in SAR images consist of several discrete points and multi-scale aircraft detection is problematic, ASFF is introduced to filter conflicting information, which suppresses the inconsistency and improves the scale invariance of the features [52]. The essence of ASFF is to adaptively learn spatial fusion weights for the feature maps at each scale. First, for the features of a given level, the features from the other levels are resized to the same scale for fusion. Second, the best spatial fusion weights are learned during training. Finally, the features of all levels are adaptively aggregated at each level. In other words, features carrying contradictory information may be filtered out, while features with discriminative clues are retained.
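The two mechanisms described above can be illustrated on single-channel feature maps in plain Python. This is a minimal sketch under stated assumptions, not the network used in the paper. The function names `bilinear_sample`, `deformable_conv_at` and `asff_fuse` are hypothetical. In a real network the sampling offsets and the fusion logits are predicted by learned layers; here they are supplied by hand, and the feature maps passed to `asff_fuse` are assumed to have been resized to a common scale already.

```python
import math


def bilinear_sample(fmap, y, x):
    """Sample a 2-D feature map at a fractional position; zero outside the map."""
    h, w = len(fmap), len(fmap[0])
    y0, x0 = math.floor(y), math.floor(x)
    value = 0.0
    for yy in (y0, y0 + 1):
        for xx in (x0, x0 + 1):
            if 0 <= yy < h and 0 <= xx < w:
                weight = (1.0 - abs(y - yy)) * (1.0 - abs(x - xx))
                value += weight * fmap[yy][xx]
    return value


def deformable_conv_at(fmap, kernel, offsets, cy, cx):
    """Response of a 3 x 3 deformable convolution centred at (cy, cx).

    offsets[i][j] = (dy, dx) shifts kernel tap (i, j) away from the regular grid.
    With all offsets equal to (0, 0) this reduces to a standard convolution.
    """
    out = 0.0
    for i in range(3):
        for j in range(3):
            dy, dx = offsets[i][j]
            out += kernel[i][j] * bilinear_sample(
                fmap, cy + i - 1 + dy, cx + j - 1 + dx)
    return out


def asff_fuse(levels, logits):
    """Fuse same-sized feature maps with per-pixel softmax weights across levels."""
    h, w = len(levels[0]), len(levels[0][0])
    fused = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            exps = [math.exp(level_logits[y][x]) for level_logits in logits]
            total = sum(exps)
            # The weights at each position are non-negative and sum to one.
            fused[y][x] = sum(e / total * level[y][x]
                              for e, level in zip(exps, levels))
    return fused


fmap = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]]
ones = [[1.0] * 3 for _ in range(3)]
no_shift = [[(0.0, 0.0)] * 3 for _ in range(3)]
half_right = [[(0.0, 0.5)] * 3 for _ in range(3)]
print(deformable_conv_at(fmap, ones, no_shift, 1, 1))
print(deformable_conv_at(fmap, ones, half_right, 1, 1))
print(asff_fuse([[[2.0]], [[4.0]]], [[[0.0]], [[0.0]]]))
```

Because the offsets are real-valued, the sampling grid can stretch or rotate to follow a target whose shape changes with azimuth angle. In the fusion step, a level whose logit is much lower than the others at a given position contributes almost nothing there, which is how conflicting information is filtered.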

3. Experiments and Analyses

3.1. Data Set Description and Parameter Setting

3.2. Evaluation Metrics

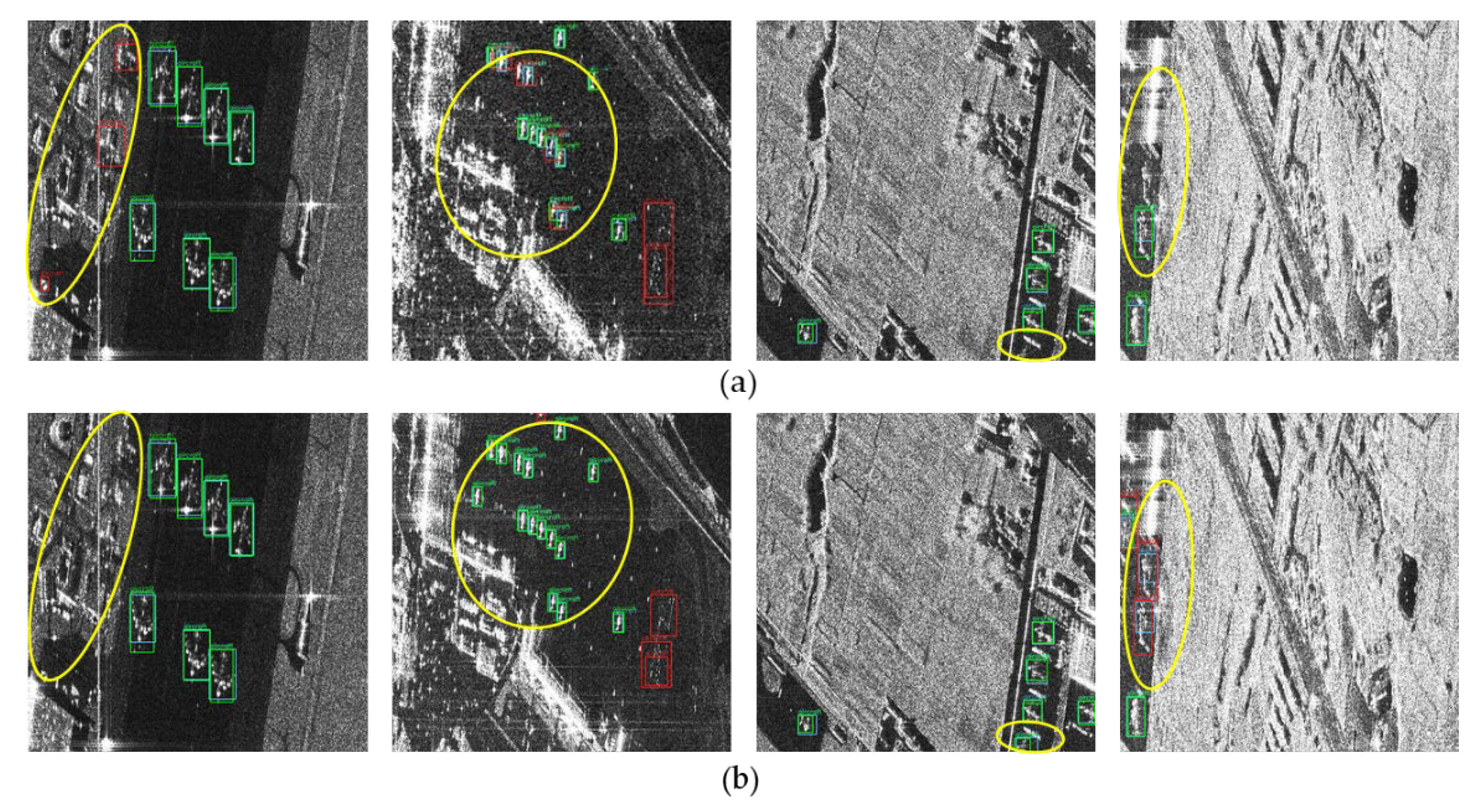

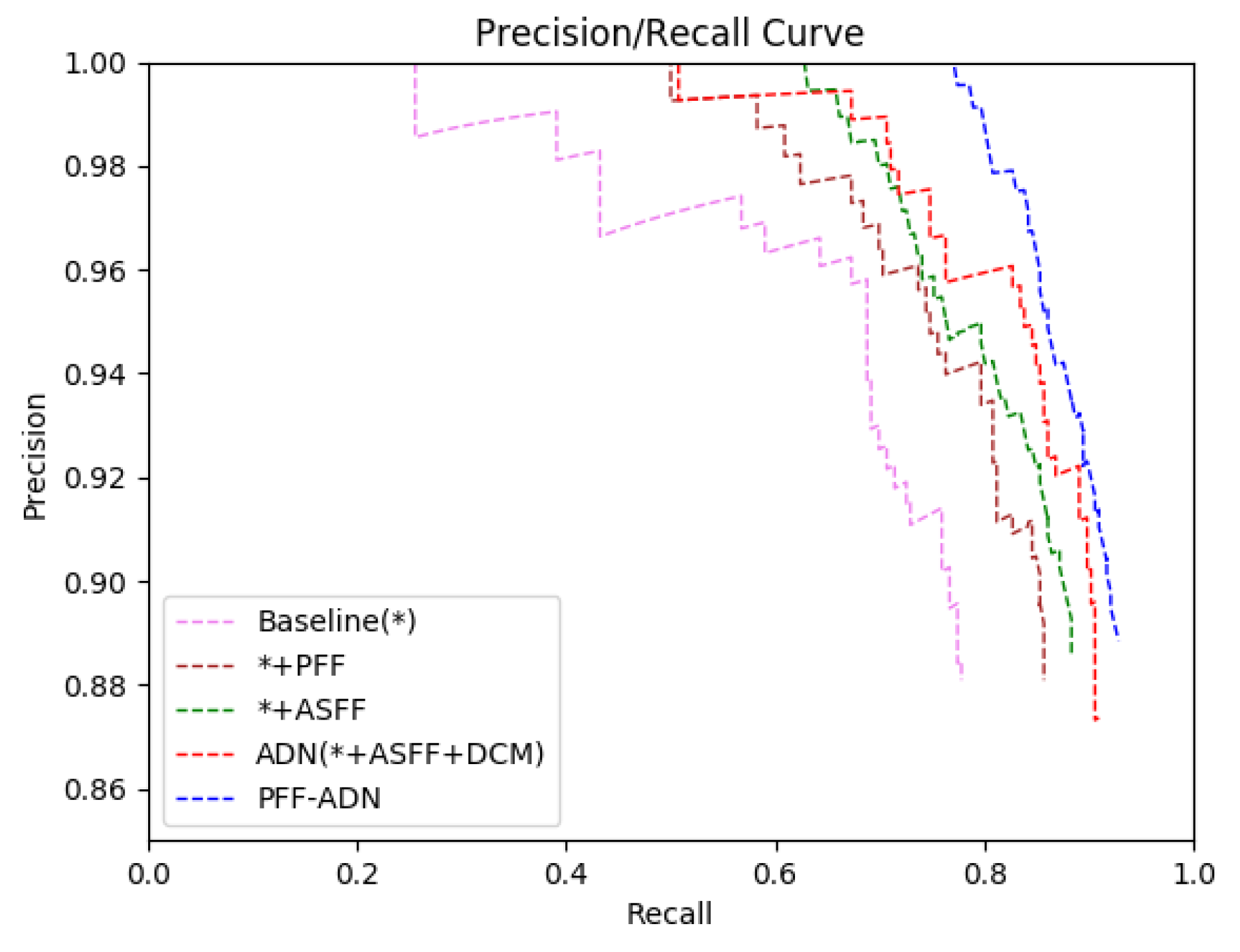

3.3. Ablation Experiments

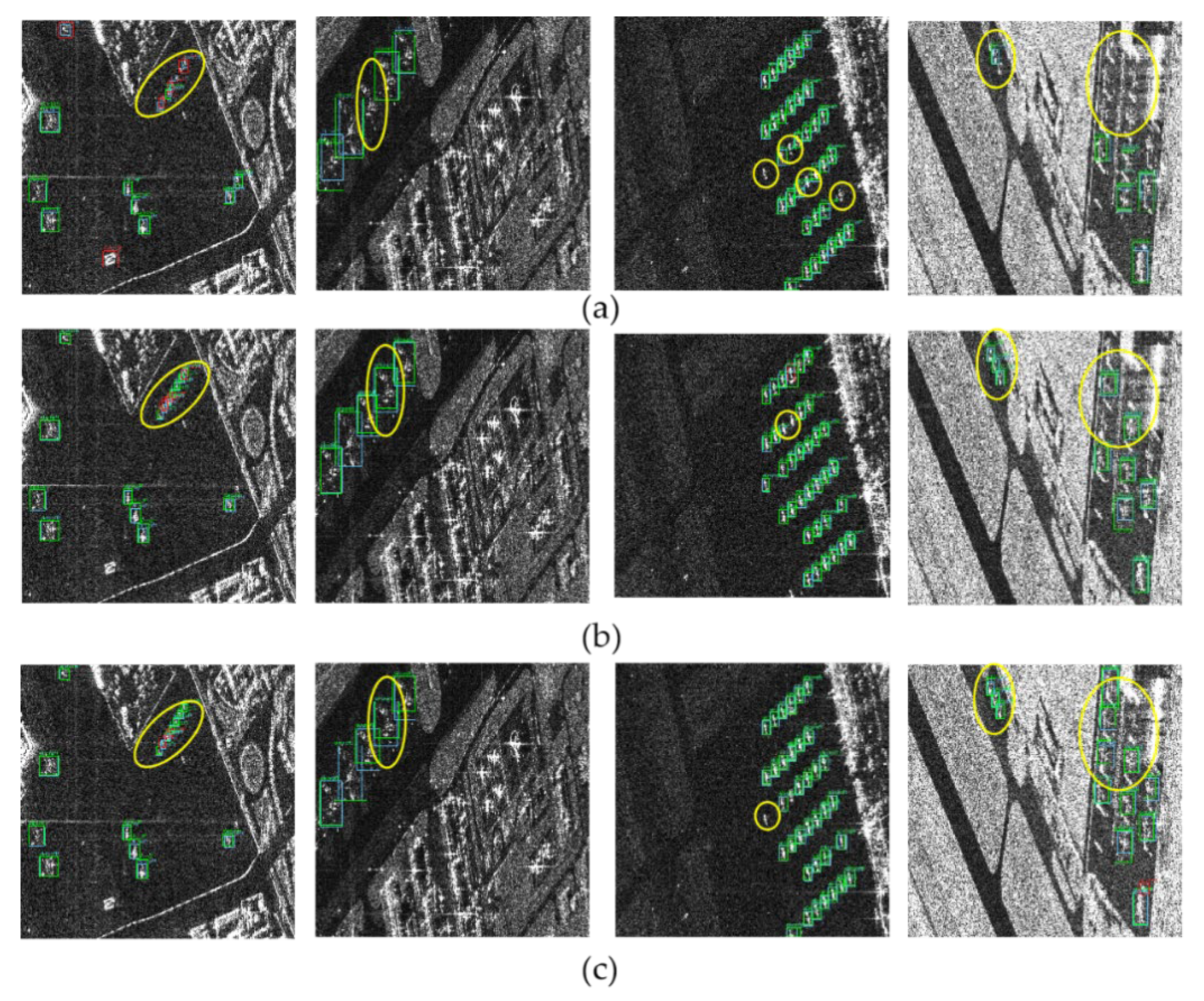

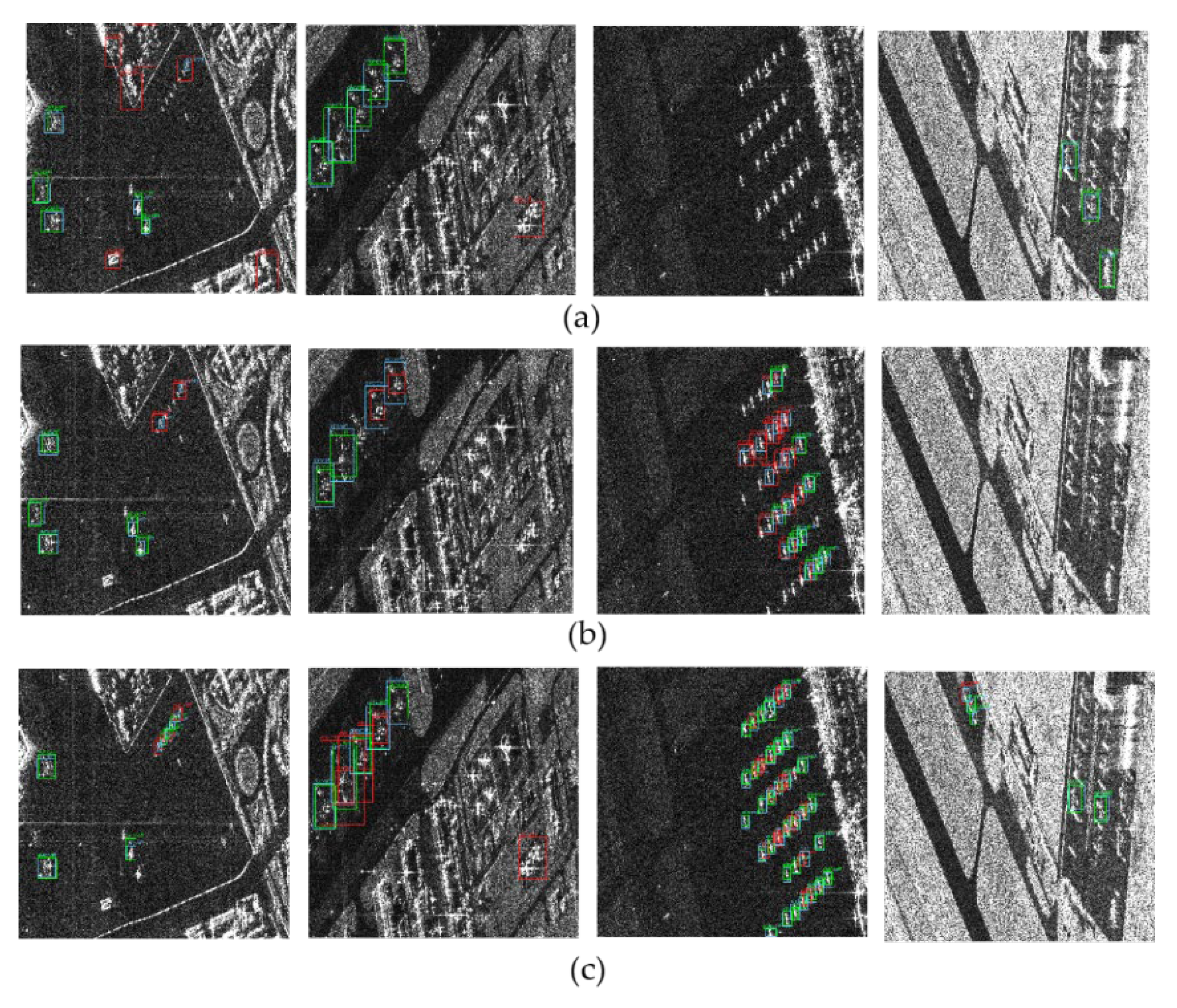

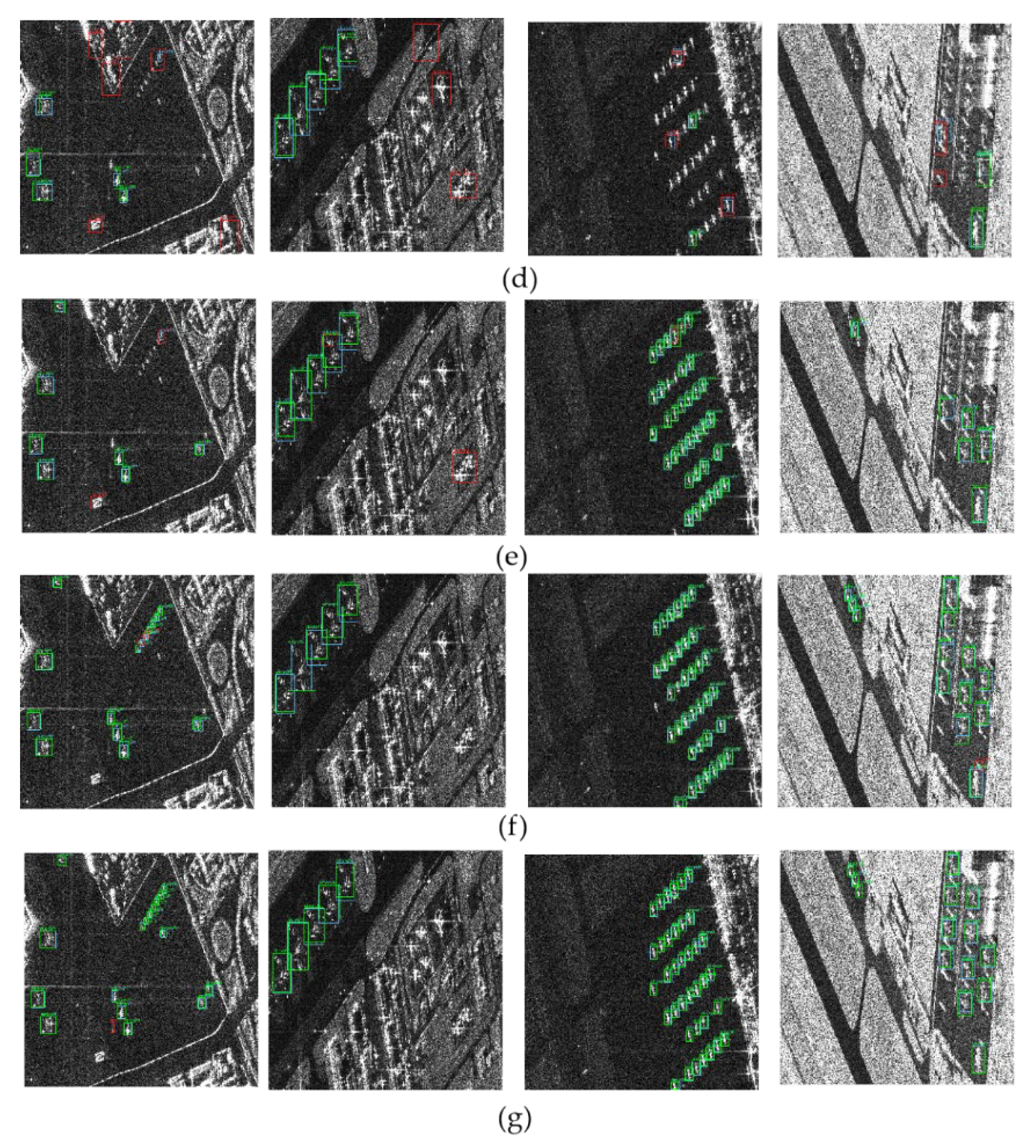

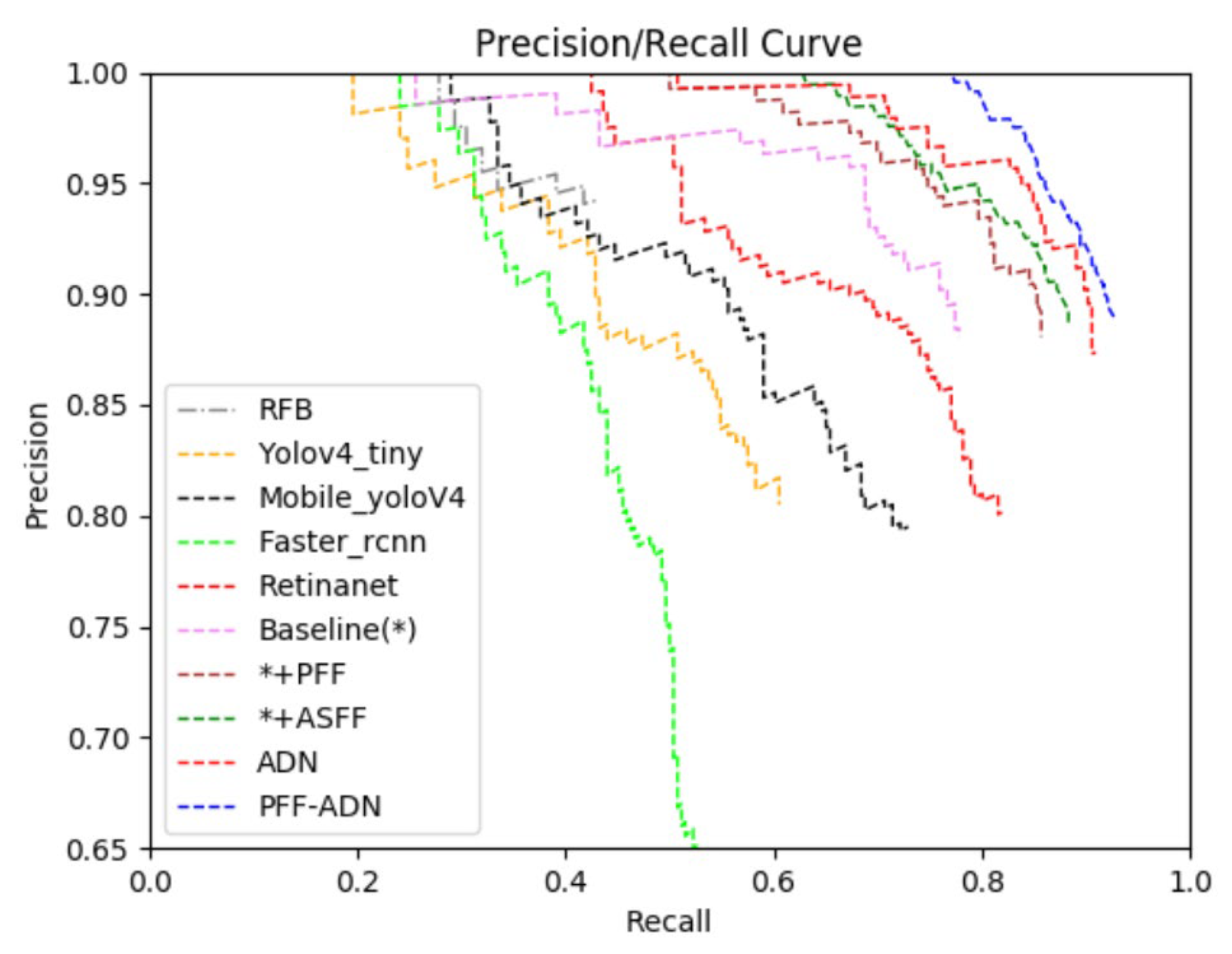

3.3.1. Effect of PFF

3.3.2. Effect of ADN

3.3.3. Performance of PFF-ADN

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Nomenclature

| Abbreviation | Definition |
|---|---|
| SAR | Synthetic Aperture Radar |
| CFAR | Constant False Alarm Rate |
| CA | Cell-Averaging |
| DL | Deep Learning |
| SCR | Signal to Clutter Ratio |
| SOCA | Smallest of Cell-Averaging |
| GOCA | Greatest of Cell-Averaging |
| OS | Ordered Statistic |
| VI | Variability Index |
| PFE | Peak Feature Extraction |
| PFF | Peak Feature Fusion |
| ADN | Adaptive Deformable Network |
| ASFF | Adaptive Spatial Feature Fusion |
| DCM | Deformable Convolution Module |
| GLRT | Generalized Likelihood Ratio Test |
| KTN | K-Times to Truncate and Normalize |
| FPN | Feature Pyramid Network |
| GF3 | GaoFen-3 |
| IoU | Intersection Over Union |
| PR | Power Ring |

References

1. Zhu, X.X.; Tuia, D.; Mou, L.C.; Xia, G.S.; Zhang, L.P.; Xu, F.; Fraundorfer, F. Deep Learning in Remote Sensing: A Comprehensive Review and List of Resources. IEEE Geosci. Remote Sens. Mag. 2017, 5, 8–36.

2. Xu, G.; Zhang, B.; Yu, H.W.; Chen, J.L.; Xing, M.D.; Hong, W. Sparse Synthetic Aperture Radar Imaging from Compressed Sensing and Machine Learning: Theories, Applications and Trends. IEEE Geosci. Remote Sens. Mag. 2022, 12, 1–26.

3. Steenson, B.O. Detection performance of a mean-level threshold. IEEE Trans. Aerosp. Electron. Syst. 1968, AES-4, 529–534.

4. Finn, H.M. Adaptive detection mode with threshold control as a function of spatially sampled clutter-level estimates. RCA Rev. 1968, 29, 414–465.

5. Hansen, V.G. Constant false alarm rate processing in search radars. In Proceedings of the IEEE Conference Publication No. 105, “Radar-Present and Future”, London, UK, 23–25 October 1973; pp. 325–332.

6. Trunk, G.V. Range resolution of targets using automatic detectors. IEEE Trans. Aerosp. Electron. Syst. 1978, AES-14, 750–755.

7. Kuttikkad, S.; Chellappa, R. Non-Gaussian CFAR techniques for target detection in high resolution SAR images. In Proceedings of the 1st International Conference on Image Processing, Austin, TX, USA, 13–16 November 1994; Volume 1, pp. 910–914.

8. Smith, M.E.; Varshney, P.K. VI-CFAR: A novel CFAR algorithm based on data variability. In Proceedings of the 1997 IEEE National Radar Conference, Syracuse, NY, USA, 13–15 May 1997; pp. 263–268.

9. Conte, E.; Lops, M.; Ricci, G. Radar detection in K-distributed clutter. IEE Proc. Radar Sonar Navig. 1994, 141, 116–118.

10. Lombardo, P.; Sciotti, M.; Kaplan, L.M. SAR prescreening using both target and shadow information. In Proceedings of the 2001 IEEE Radar Conference (Cat. No. 01CH37200), Atlanta, GA, USA, 3 May 2001; pp. 147–152.

11. Yu, Y.; Wang, B.; Zhang, L. Hebbian-based neural networks for bottom-up visual attention and its applications to ship detection in SAR images. Neurocomputing 2011, 74, 2008–2017.

12. El-Darymli, K.; Moloney, C.; Gill, E.; McGuire, P.; Power, D.; Deepakumara, J. Nonlinearity and the effect of detection on single-channel synthetic aperture radar imagery. In Proceedings of the OCEANS 2014-TAIPEI, Taipei, Taiwan, 7–10 April 2014; pp. 1–7.

13. Gu, D.; Xu, X. Multi-feature extraction of ships from SAR images. In Proceedings of the 2013 6th International Congress on Image and Signal Processing (CISP), Hangzhou, China, 16–18 December 2013; Volume 1, pp. 454–458.

14. Kaplan, L.M.; Murenzi, R.; Namuduri, K.R. Extended fractal feature for first-stage SAR target detection. In Proceedings of the Algorithms for Synthetic Aperture Radar Imagery VI, Orlando, FL, USA, 5–9 April 1999; SPIE: Bellingham, WA, USA, 1999; Volume 3721, pp. 35–46.

15. Kaplan, L.M. Improved SAR target detection via extended fractal features. IEEE Trans. Aerosp. Electron. Syst. 2001, 37, 436–451.

16. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

17. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

18. Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

19. Law, H.; Deng, J. CornerNet: Detecting objects as paired keypoints. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 734–750.

20. Zhou, X.Y.; Zhuo, J.C.; Krähenbühl, P. Bottom-up Object Detection by Grouping Extreme and Center Points. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 850–859.

21. Tian, Z.; Shen, C.; Chen, H.; He, T. FCOS: Fully Convolutional One-Stage Object Detection. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27–28 October 2019; pp. 9627–9636.

22. Zhou, X.Y.; Wang, D.Q.; Krähenbühl, P. Objects as points. arXiv 2019, arXiv:1904.07850.

23. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2980–2988.

24. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C. SSD: Single shot multibox detector. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 21–37.

25. Guo, Q.; Wang, H.; Xu, F. Scattering Enhanced Attention Pyramid Network for Aircraft Detection in SAR Images. IEEE Trans. Geosci. Remote Sens. 2020, 59, 7570–7587.

26. Fu, K.; Fu, J.; Wang, Z.; Sun, X. Scattering-keypoint-guided network for oriented ship detection in high-resolution and large-scale SAR images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 11162–11178.

27. Harris, C.; Stephens, M. A combined corner and edge detector. In Proceedings of the Alvey Vision Conference, Manchester, UK, 31 August–2 September 1988; pp. 147–151.

28. Wang, S.Y.; Gao, X.; Sun, H.; Zheng, X.W.; Sun, X. An aircraft detection method based on convolutional neural networks in high-resolution SAR images. J. Radars 2017, 6, 195–203.

29. Zhang, L.; Li, C.; Zhao, L.; Xiong, B.; Quan, S.; Kuang, G. A cascaded three-look network for aircraft detection in SAR images. Remote Sens. Lett. 2020, 11, 57–65.

30. Zhao, Y.; Zhao, L.; Li, C.; Kuang, G. Pyramid Attention Dilated Network for Aircraft Detection in SAR Images. IEEE Geosci. Remote Sens. Lett. 2020, 18, 662–666.

31. He, C.; Tu, M.; Xiong, D.; Tu, F.; Liao, M. Adaptive Component Selection-Based Discriminative Model for Object Detection in High-Resolution SAR Imagery. ISPRS Int. J. Geo-Inf. 2018, 7, 72.

32. Dou, F.; Diao, W.; Sun, X.; Zhang, Y.; Fu, K. Aircraft reconstruction in high-resolution SAR images using deep shape prior. ISPRS Int. J. Geo-Inf. 2017, 6, 330.

33. Imbert, J.; Dashyan, G.; Goupilleau, A.; Ceillier, T.; Corbineau, M.C. Improving performance of aircraft detection in satellite imagery while limiting the labelling effort: Hybrid active learning. In Proceedings of the 2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS, Brussels, Belgium, 11–16 July 2021; pp. 220–223.

34. Han, P.; Lu, B.; Zhou, B.; Han, B. Aircraft Target Detection in Polsar Image based on Region Segmentation and Multi-Feature Decision. In Proceedings of the IGARSS 2020 - 2020 IEEE International Geoscience and Remote Sensing Symposium, Waikoloa, HI, USA, 26 September–2 October 2020; pp. 2201–2204.

35. Jia, H.; Guo, Q.; Chen, J.; Wang, F.; Wang, H.; Xu, F. Adaptive Component Discrimination Network for Airplane Detection in Remote Sensing Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 7699–7713.

36. Kang, Y.; Wang, Z.; Fu, J.; Sun, X.; Fu, K. SFR-Net: Scattering Feature Relation Network for Aircraft Detection in Complex SAR Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5218317.

37. Zhao, Y.; Zhao, L.; Liu, Z.; Hu, D.; Kuang, G.; Liu, L. Attentional Feature Refinement and Alignment Network for Aircraft Detection in SAR Imagery. arXiv 2022, arXiv:2201.07124.

38. Bi, H.; Yao, J.; Wei, Z.; Hong, D.; Chanussot, J. PolSAR image classification based on robust low-rank feature extraction and Markov random field. IEEE Geosci. Remote Sens. Lett. 2020, 19, 1–5.

39. Margarit, G.; Mallorqui, J.J.; Rius, J.M.; Sanz-Marcos, J. On the usage of GRECOSAR, an orbital polarimetric SAR simulator of complex targets, to vessel classification studies. IEEE Trans. Geosci. Remote Sens. 2006, 44, 3517–3526.

40. Margarit, G.; Mallorqui, J. Assessment of polarimetric SAR interferometry for improving ship classification based on simulated data. Sensors 2008, 8, 7715–7735.

41. Margarit, G.; Tabasco, A. Ship classification in single-pol SAR images based on fuzzy logic. IEEE Trans. Geosci. Remote Sens. 2011, 49, 3129–3138.

42. Xu, G.; Zhang, B.J.; Chen, J.L.; Hong, W. Structured Low-rank and Sparse Method for ISAR Imaging with 2D Compressive Sampling. IEEE Trans. Geosci. Remote Sens. 2022; early access.

43. Gao, J.; Gao, X.; Sun, X. Geometrical Features-based Method for Aircraft Target Interpretation in High-resolution SAR Images. Foreign Electron. Meas. Technol. 2015, 34, 21–28.

44. Chen, J.; Zhang, B.; Wang, C. Backscattering feature analysis and recognition of civilian aircraft in TerraSAR-X images. IEEE Geosci. Remote Sens. Lett. 2014, 12, 796–800.

45. Zhu, J.W.; Qiu, X.L.; Pan, Z.X.; Zhang, Y.T.; Lei, B. An improved shape contexts based ship classification in SAR images. Remote Sens. 2017, 9, 145.

46. Jones, G., III; Bhanu, B. Recognizing articulated objects in SAR images. Pattern Recognit. 2001, 34, 469–485.

47. Guo, Q.; Wang, H.; Xu, F. Research progress on aircraft detection and recognition in SAR imagery. J. Radars 2020, 9, 497–513.

48. Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context; Springer International Publishing: Zurich, Switzerland, 2014.

49. Dai, J.; Qi, H.; Xiong, Y.; Li, Y.; Zhang, G.; Hu, H.; Wei, Y. Deformable convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 764–773.

50. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

51. Zhu, X.; Hu, H.; Lin, S.; Dai, J. Deformable ConvNets V2: More Deformable, Better Results. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 1–13.

52. Liu, S.; Huang, D.; Wang, Y. Learning Spatial Fusion for Single-Shot Object Detection. arXiv 2019, arXiv:1911.09516.

| Dataset | Training | Test | Total |
|---|---|---|---|
| GF-3 | 495 | 165 | 660 |

| Algorithm | AP (%) | Precision (%) | Recall (%) | F1 (%) |
|---|---|---|---|---|
| Baseline (*) | 77.08 | 88.08 | 77.81 | 82.63 |
| * + PFF | 82.72 | 86.34 | 85.71 | 86.02 |

| Algorithm | AP (%) | Precision (%) | Recall (%) | F1 (%) |
|---|---|---|---|---|
| Baseline (*) | 77.08 | 88.08 | 77.81 | 82.63 |
| * + ASFF | 84.65 | 88.60 | 88.34 | 88.46 |
| * + ASFF + DCM | 86.15 | 87.36 | 90.97 | 89.12 |

| Algorithm | AP (%) | Precision (%) | Recall (%) | F1 (%) | FPS (Slice) |
|---|---|---|---|---|---|
| RFB | 42.24 | 94.21 | 42.85 | 58.91 | 85 |
| YOLOv4-tiny | 58.28 | 81.46 | 61.27 | 69.93 | 341 |
| Mobile-YOLOv4 | 68.58 | 79.47 | 72.92 | 76.05 | 325 |
| Faster R-CNN | 49.87 | 65.76 | 53.75 | 58.84 | 13 |
| RetinaNet | 78.36 | 80.04 | 81.95 | 80.98 | 268 |
| ADN | 86.15 | 87.36 | 90.97 | 89.12 | 11 |
| PFF-ADN | 89.34 | 89.44 | 92.85 | 91.11 | 11 |
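In each results table, the fourth percentage column is consistent with the harmonic mean of the second and third, that is, F1 = 2PR / (P + R) with P the precision and R the recall. The short check below recomputes it for a few rows copied from the tables. The function name `f1_score` and the row labels are illustrative only, and the small differences (about 0.01 points) are what one would expect from rounding of the reported precision and recall.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (both in percent)."""
    if precision + recall == 0:
        return 0.0
    return 2.0 * precision * recall / (precision + recall)


# (precision %, recall %, reported F1 %) copied from the tables above.
reported = {
    "Baseline": (88.08, 77.81, 82.63),
    "Baseline + PFF": (86.34, 85.71, 86.02),
    "Baseline + ASFF": (88.60, 88.34, 88.46),
    "ADN": (87.36, 90.97, 89.12),
    "PFF-ADN": (89.44, 92.85, 91.11),
    "RetinaNet": (80.04, 81.95, 80.98),
}

for name, (p, r, f1) in reported.items():
    recomputed = f1_score(p, r)
    print(f"{name}: recomputed {recomputed:.2f}, reported {f1:.2f}")
```

Reading the columns this way also makes the trade-off visible: RFB reaches the highest precision in the comparison (94.21%) but a recall of only 42.85%, which the harmonic mean penalizes heavily.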

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Xiao, X.; Jia, H.; Xiao, P.; Wang, H. Aircraft Detection in SAR Images Based on Peak Feature Fusion and Adaptive Deformable Network. Remote Sens. 2022, 14, 6077. https://doi.org/10.3390/rs14236077