Cloudformer: A Cloud-Removal Network Combining Self-Attention Mechanism and Convolution

Abstract

1. Introduction

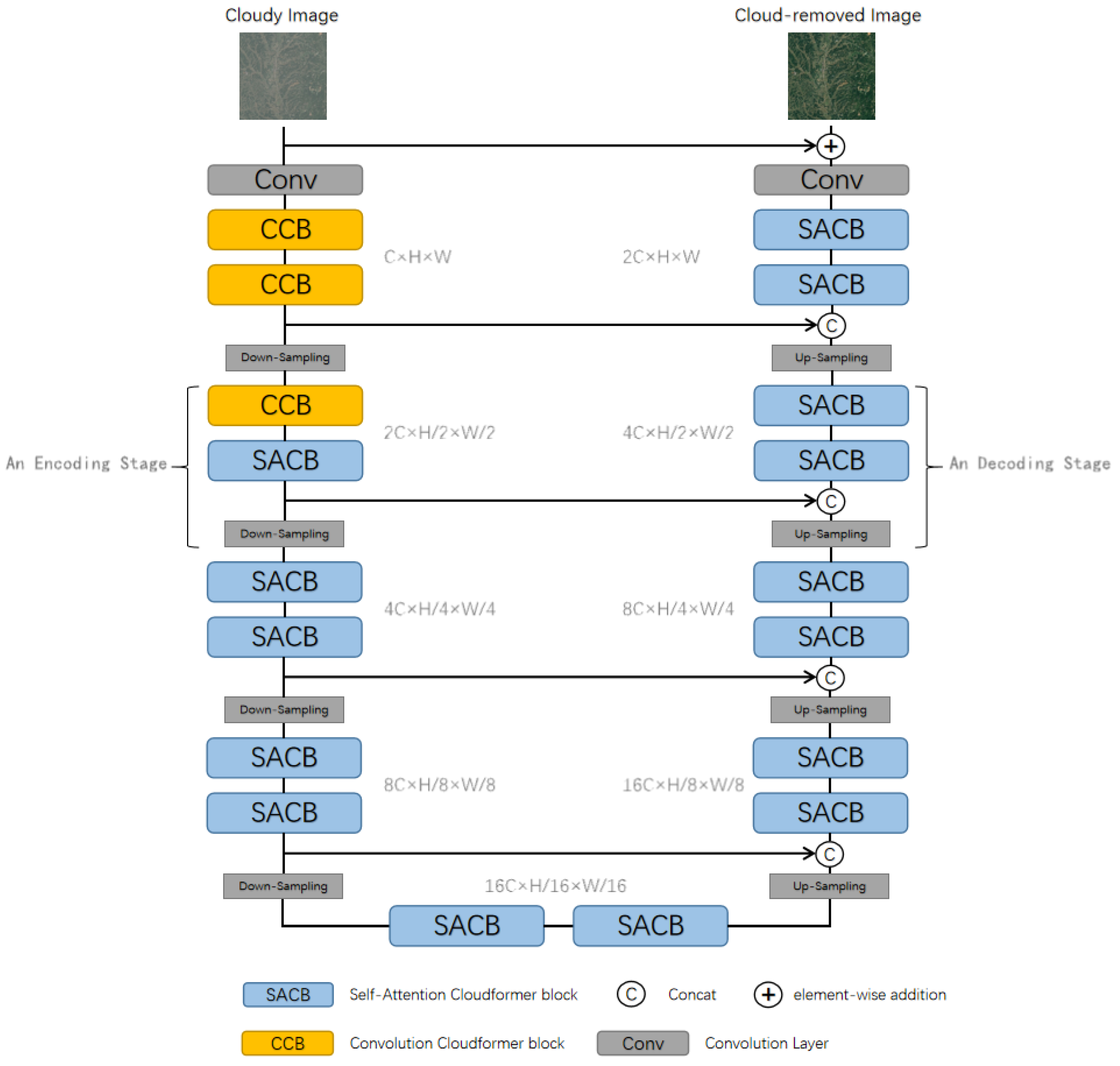

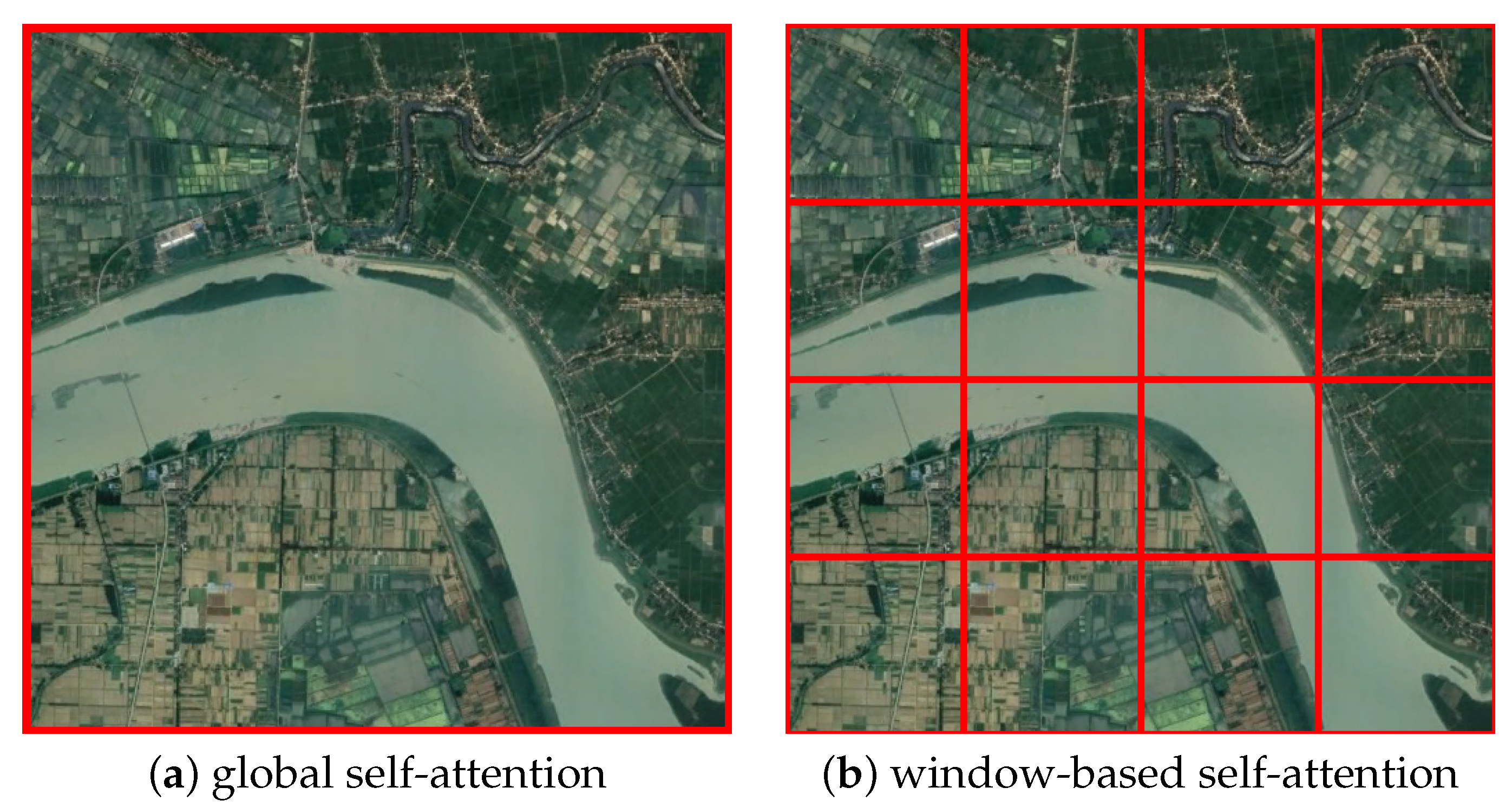

- We use the convolution layers to replace the self-attention mechanism in the shallow layer of the network to extract simple features over a small range. Window-based Multi-head Self-Attention (W-MSA) is used in the deep layers of the network to calculate dependencies on a larger scale. Thus, convolution and self-attention are combined well to improve network performance.

- A transformer-based U-shaped encoder–decoder residual symmetric network architecture is used to achieve end-to-end cloud removal, enhancing the network’s ability to remove thin and thick clouds.

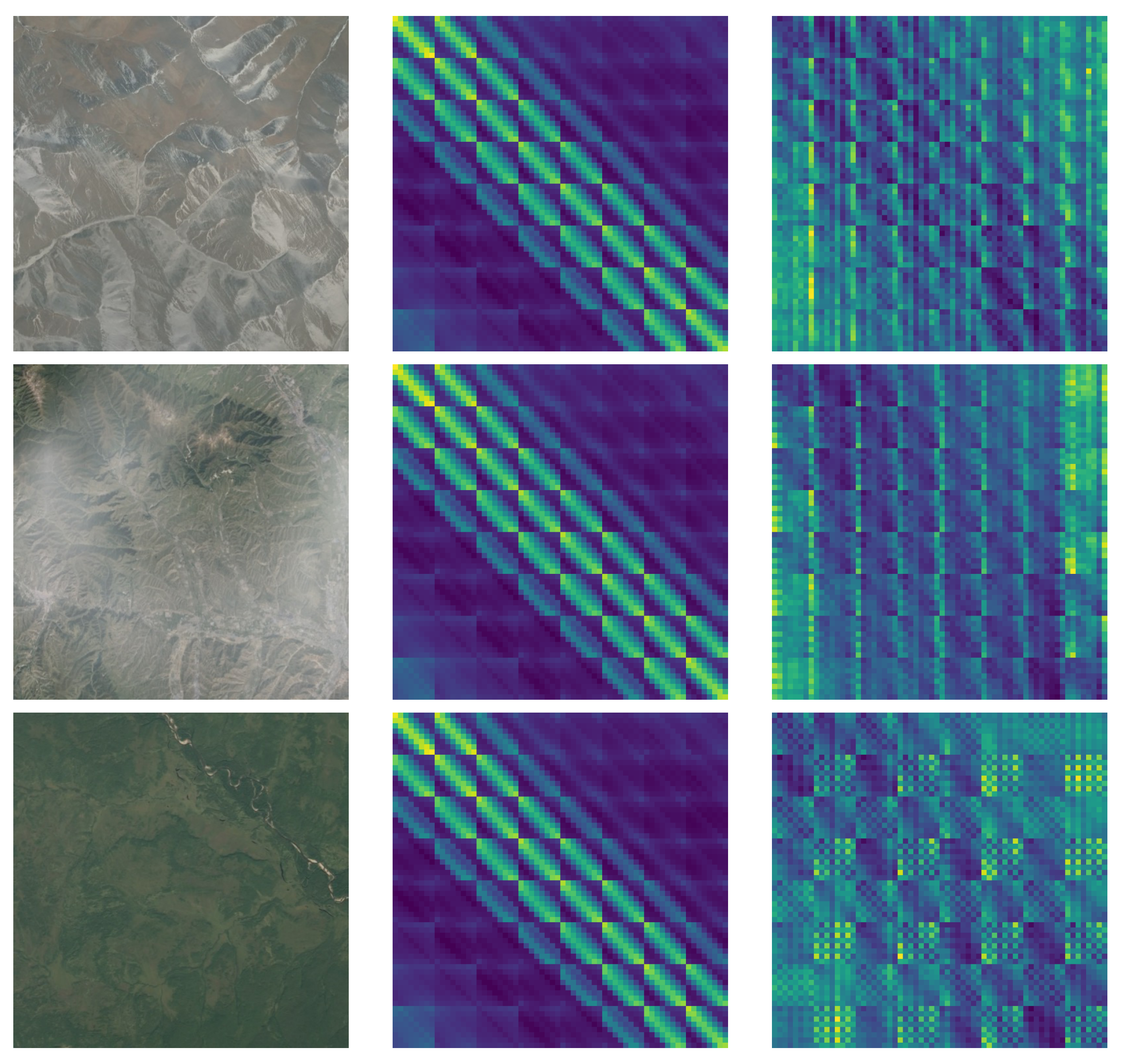

- Locally-enhanced Positional Encoding (LePE) [12] is introduced into W-MSA. Different from the commonly used relative position encoding that remains fixed for different inputs after training, LePE is based on depthwise convolution, which can generate different position encodings for different inputs.

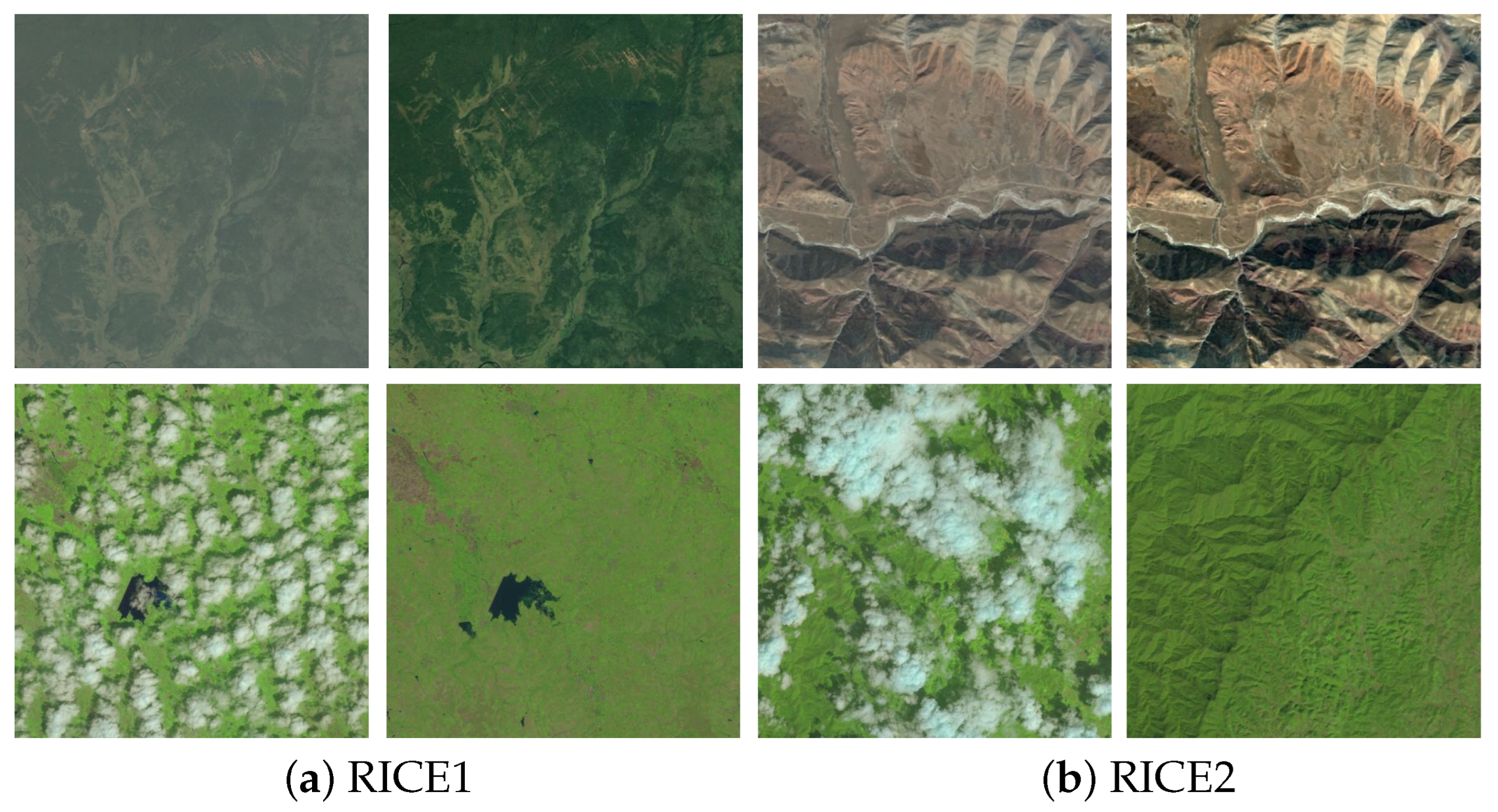

- Comparative experiments on the thin-cloud dataset RICE1, the thick-cloud dataset RICE2 and the synthetic-cloud Paris dataset demonstrate that our method outperforms the other four methods, and the effectiveness of the proposed method is verified by experiments and visual analysis.

2. Related Works

2.1. Cloud Removal Methods

2.1.1. Thick-Cloud Removal Methods

2.1.2. Thin-Cloud Removal Methods

2.2. Vision Transformers

3. Method

3.1. Architecture of Cloudformer

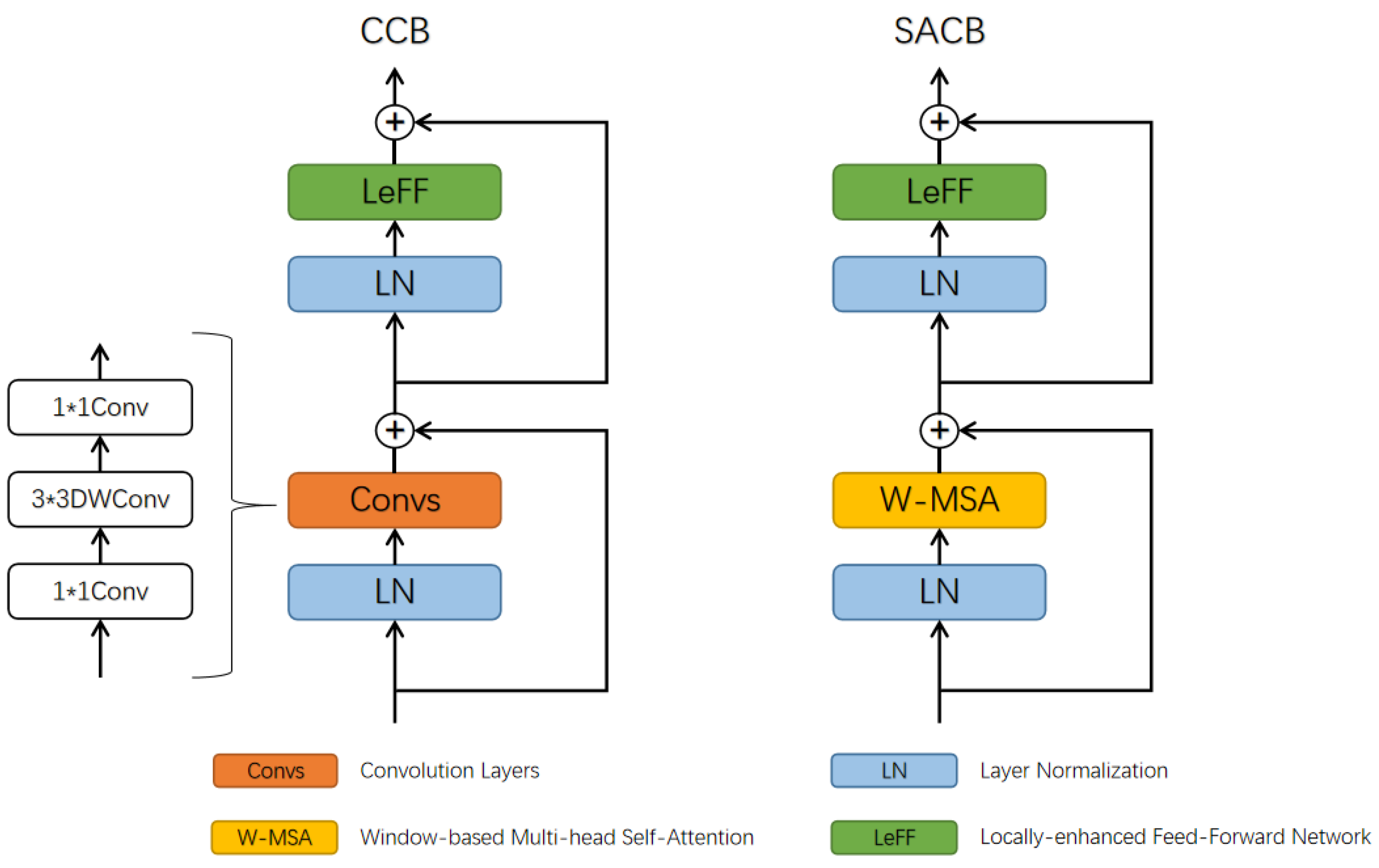

3.2. Cloudformer Block

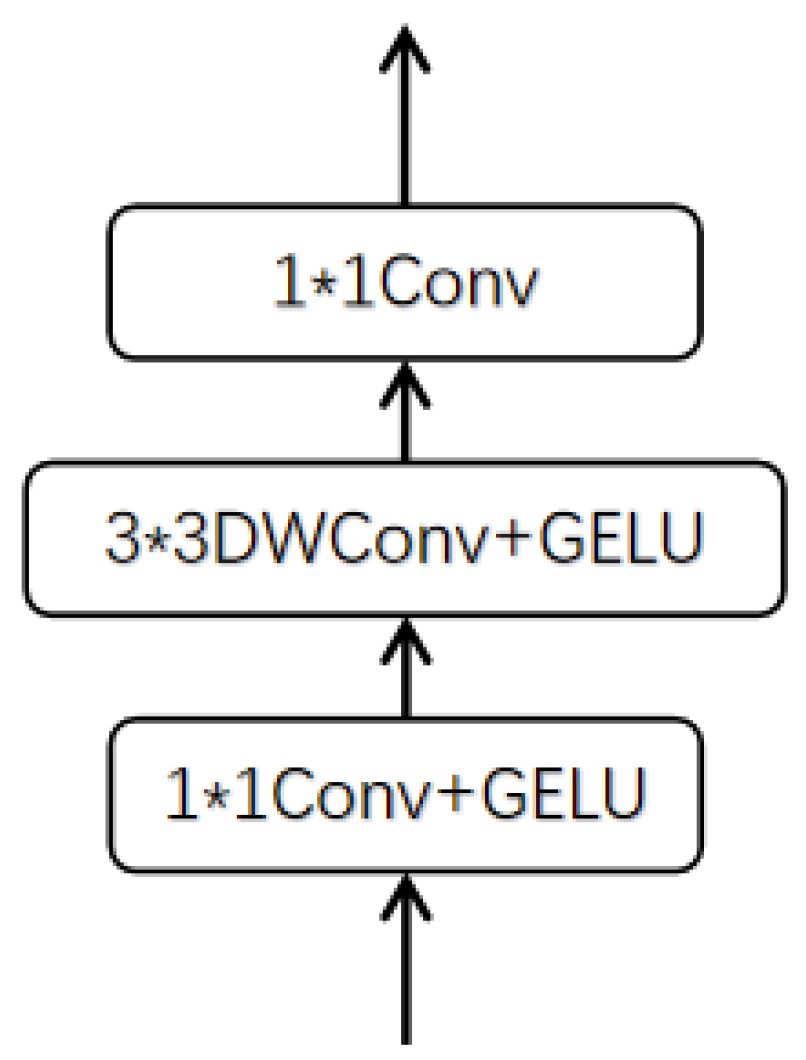

3.2.1. Self-Attention Cloudformer Block (SACB)

3.2.2. Convolution Cloudformer Block (CCB)

4. Experimental Results

4.1. Description of Dataset

4.2. Training Details and Evaluation Metrics

4.3. Network Architecture Analysis

4.3.1. Comparison of Transformer and CNN

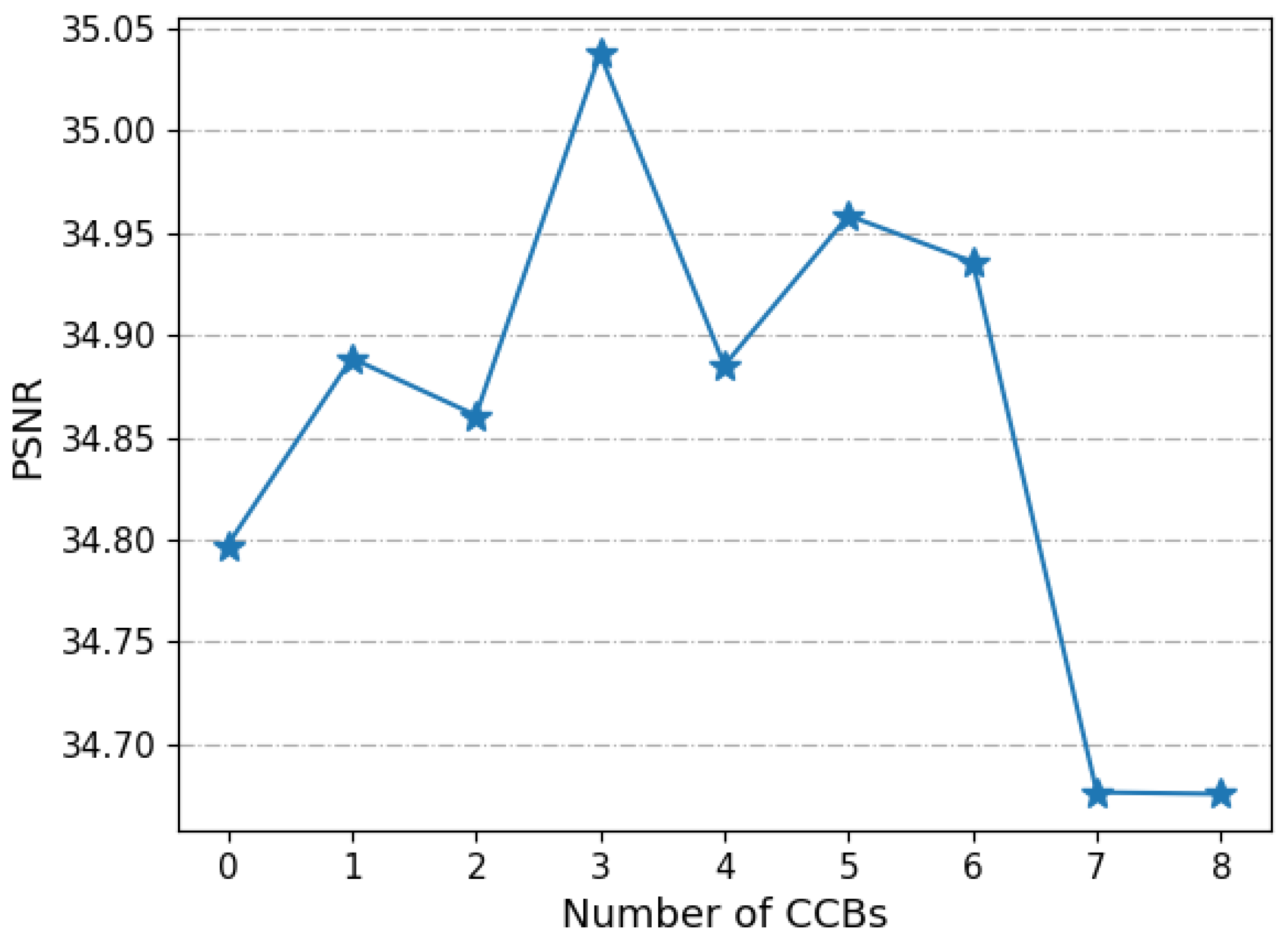

4.3.2. The Trade-Off between Convolution and Self-Attention

4.3.3. Positional Encoding

4.3.4. Window Shift

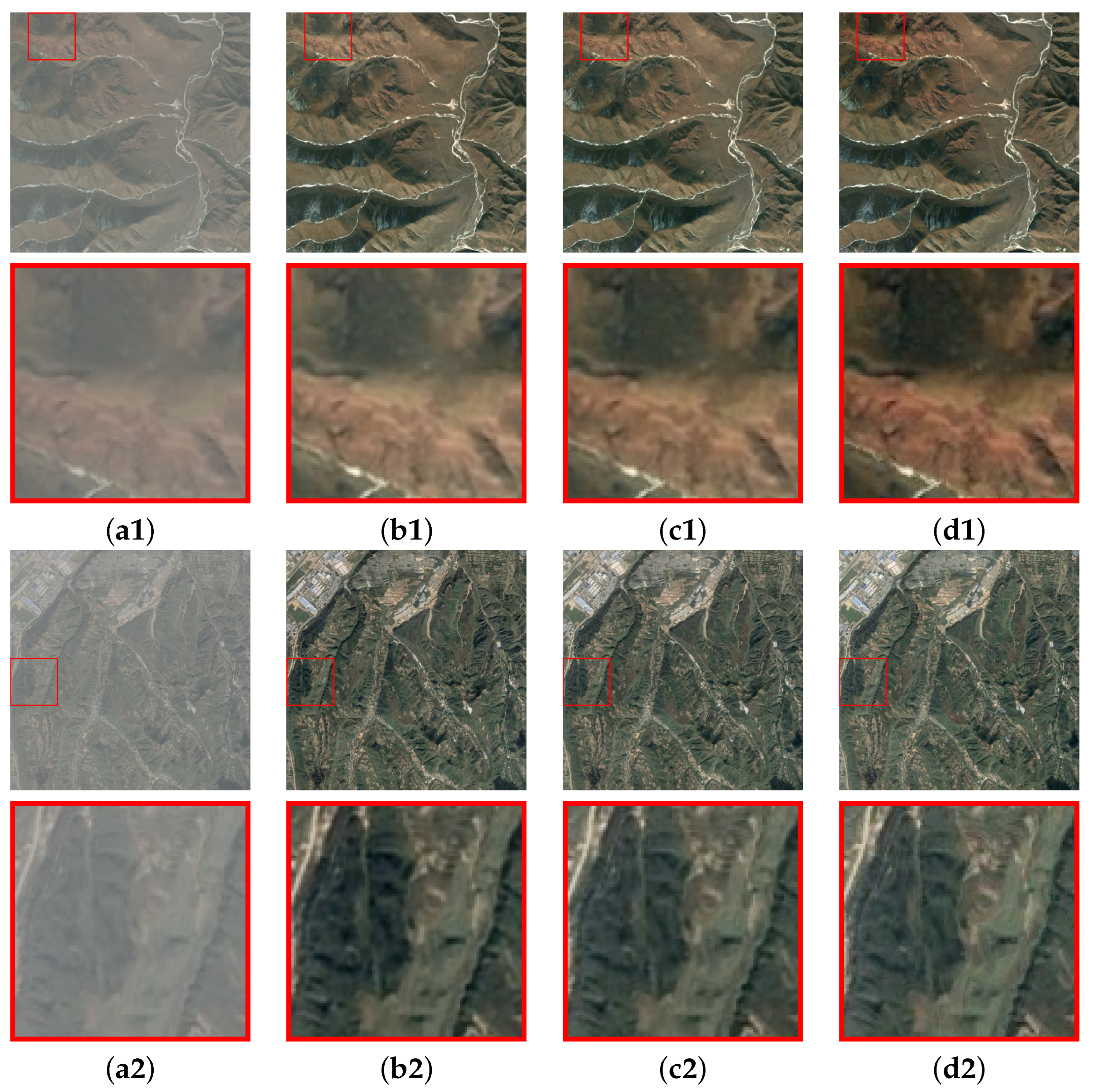

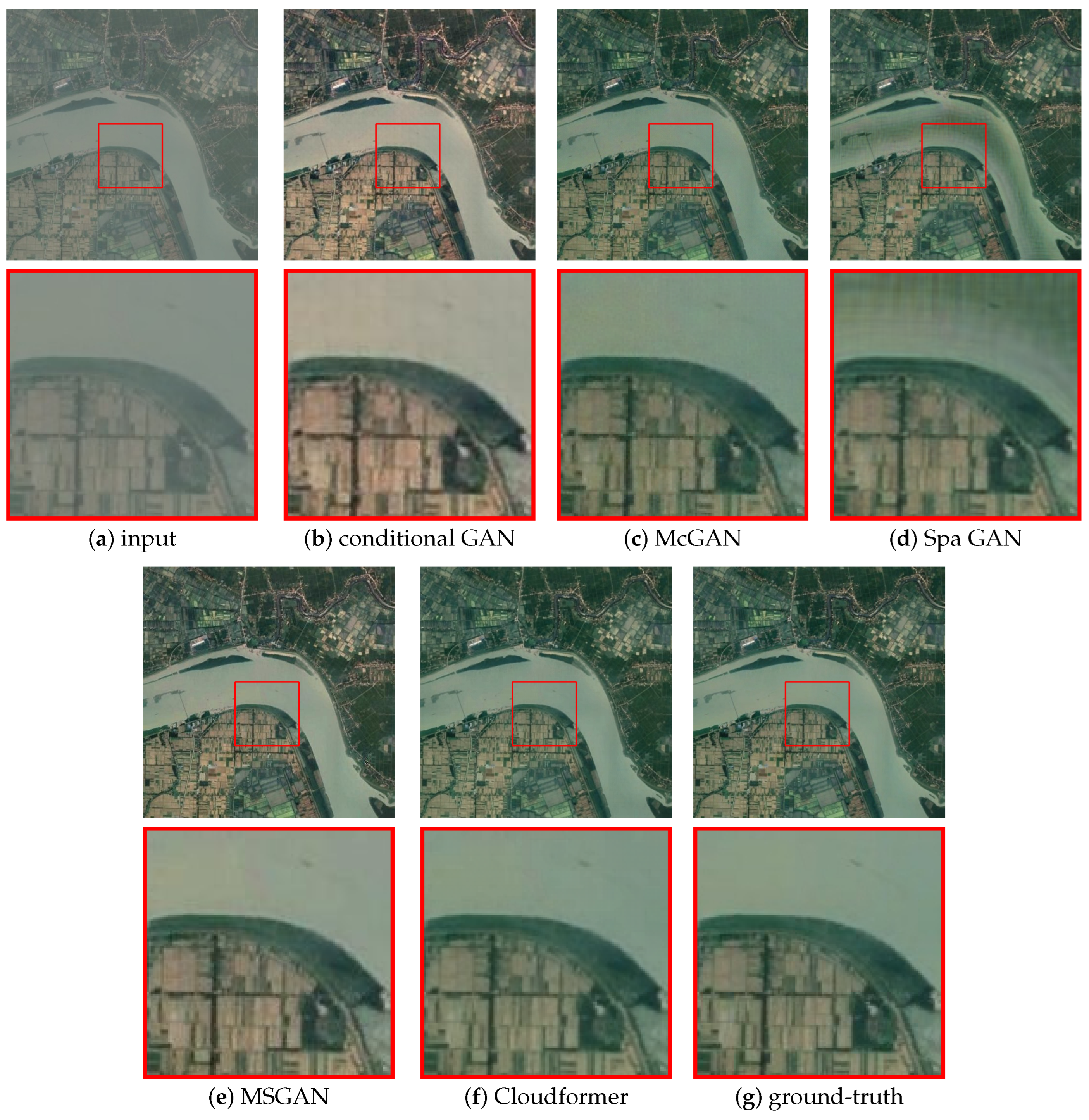

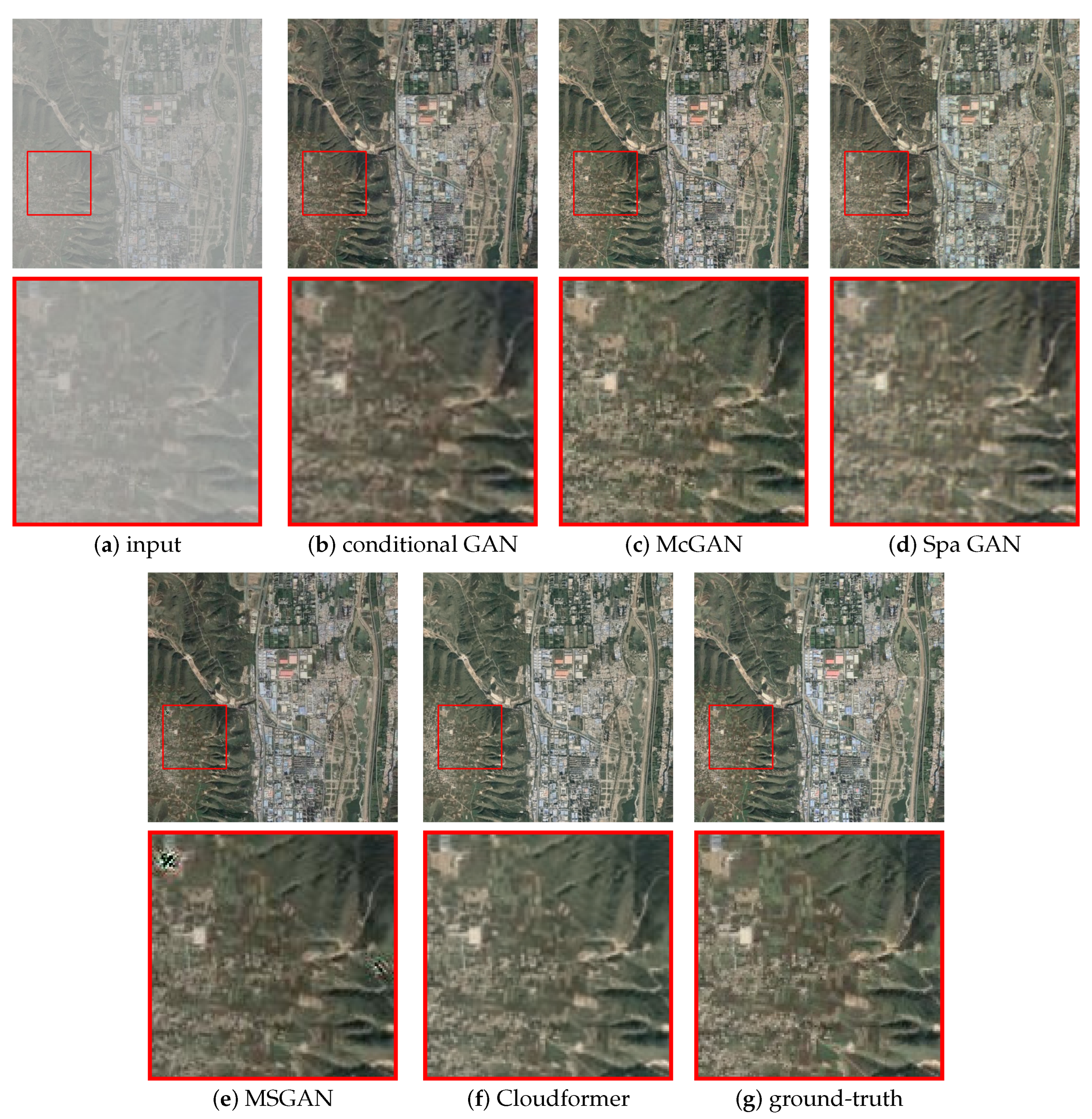

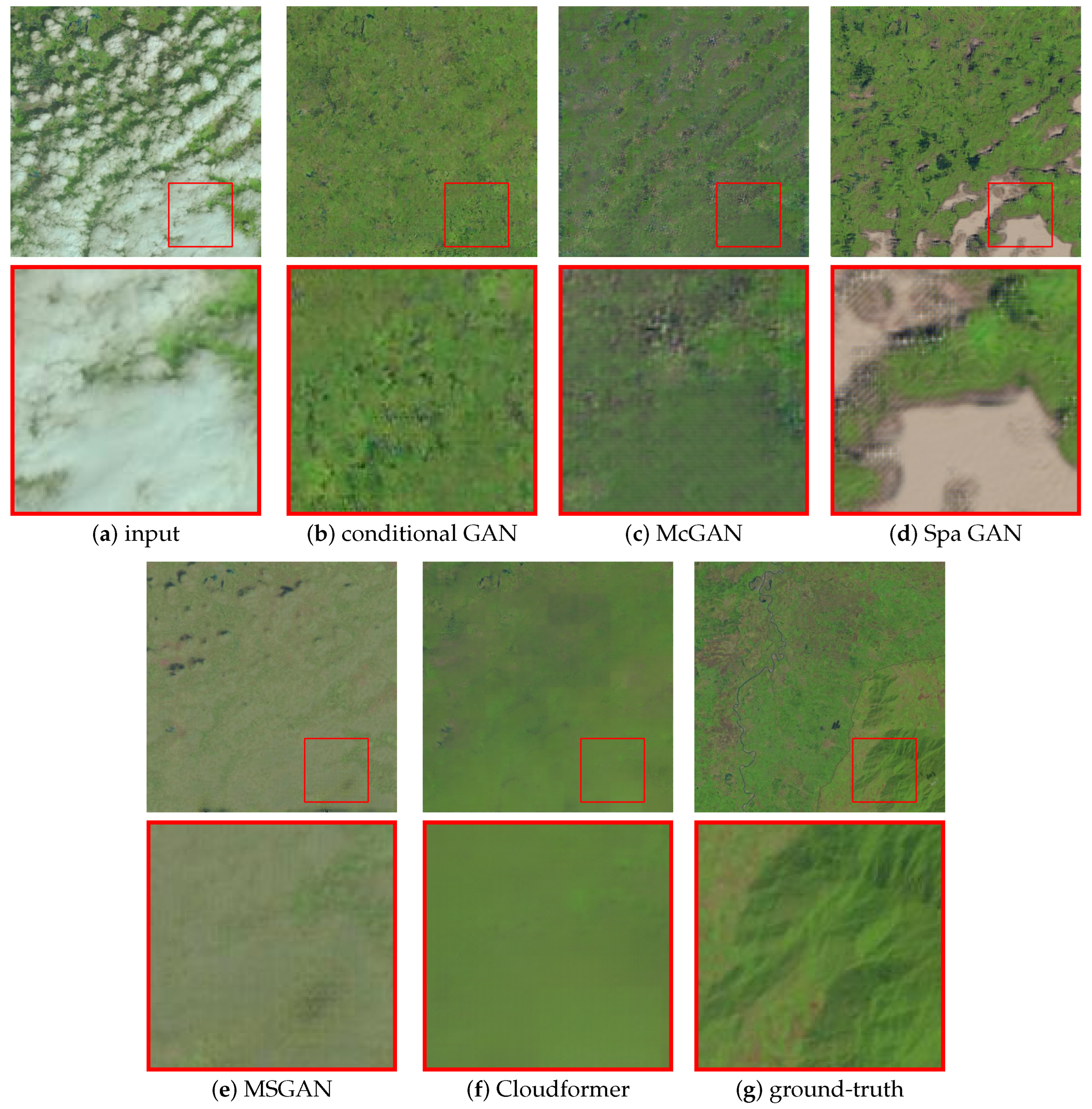

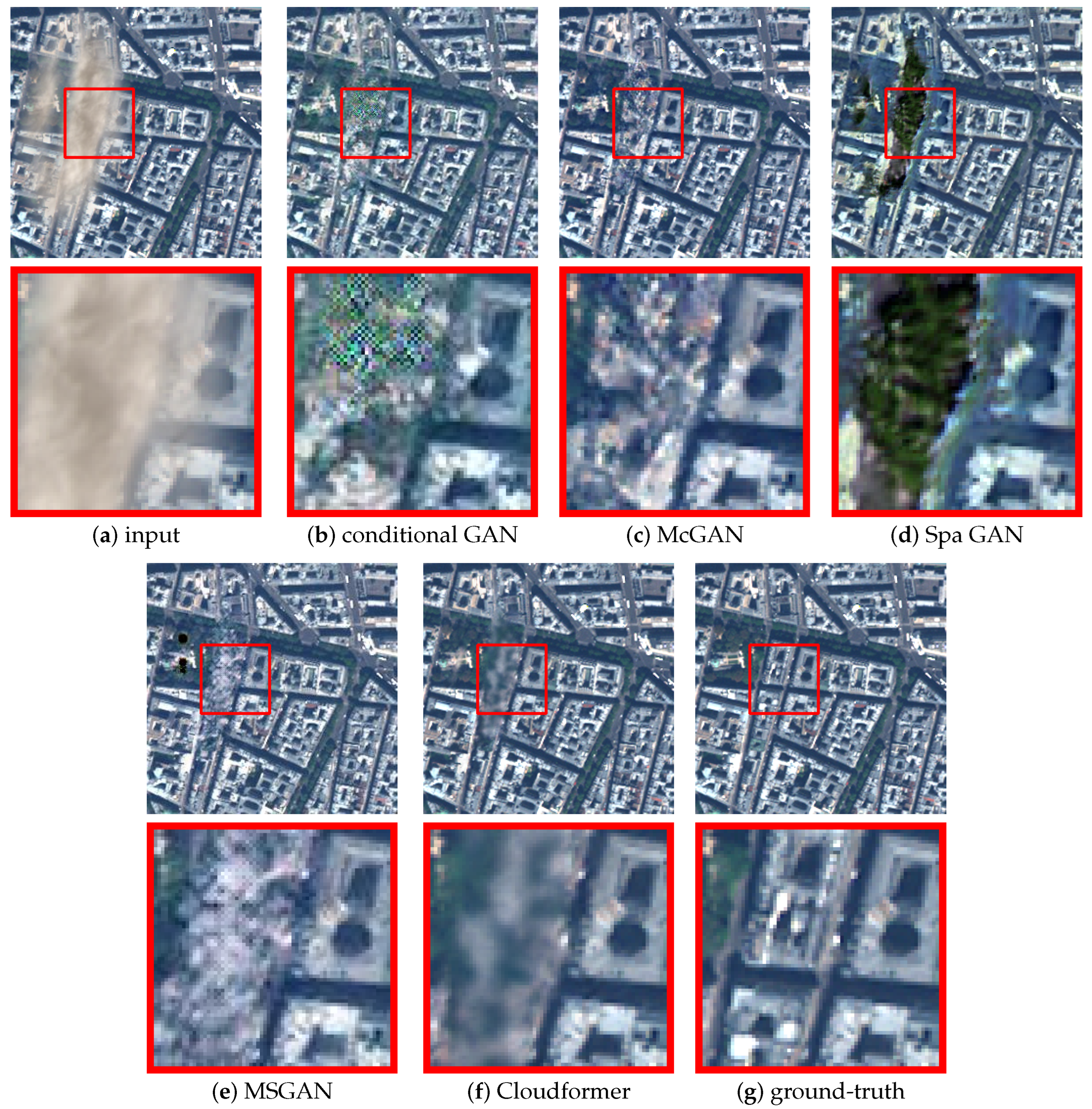

4.4. Results on RICE1

4.5. Results on RICE2

4.6. Inference Speed

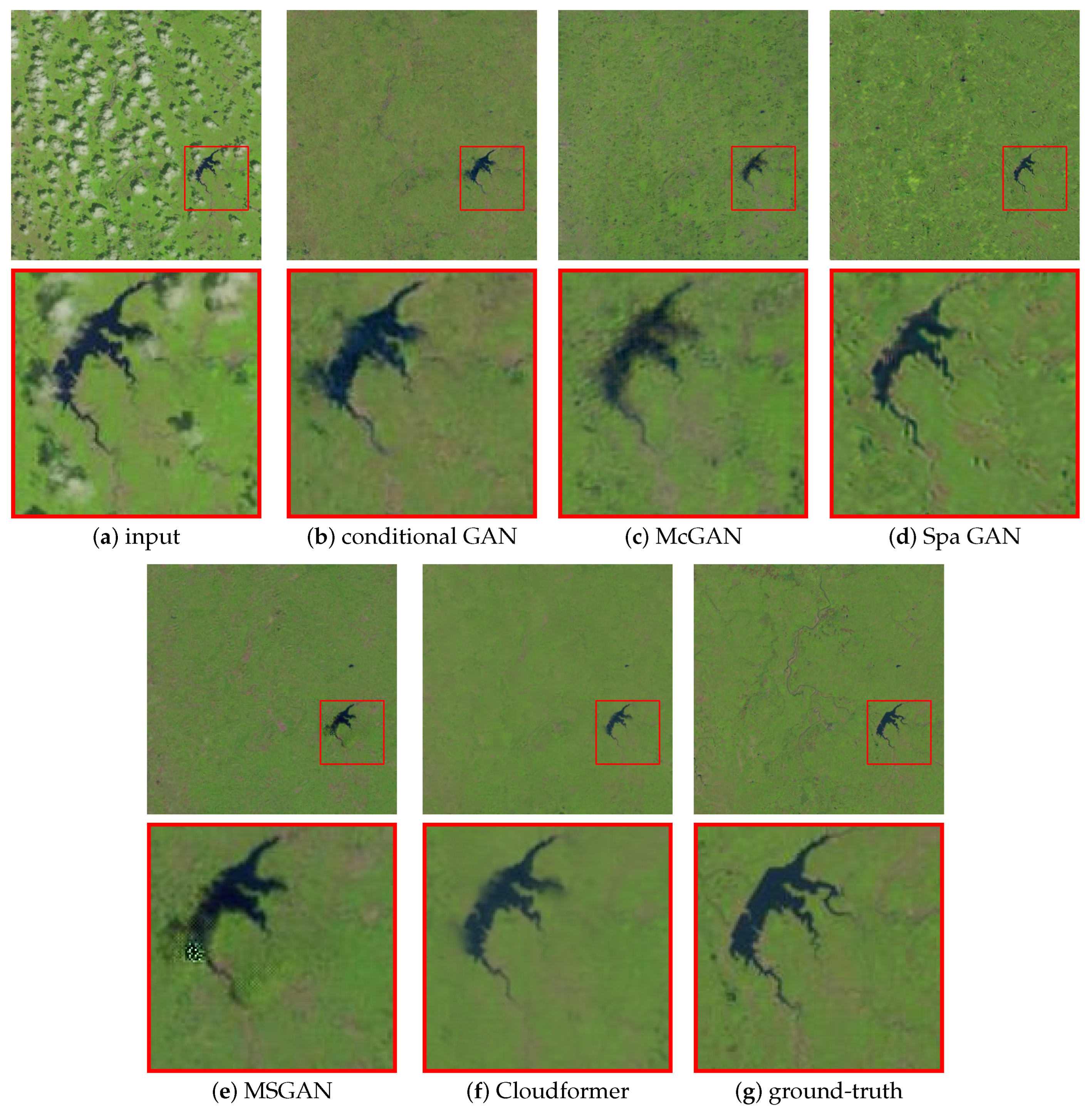

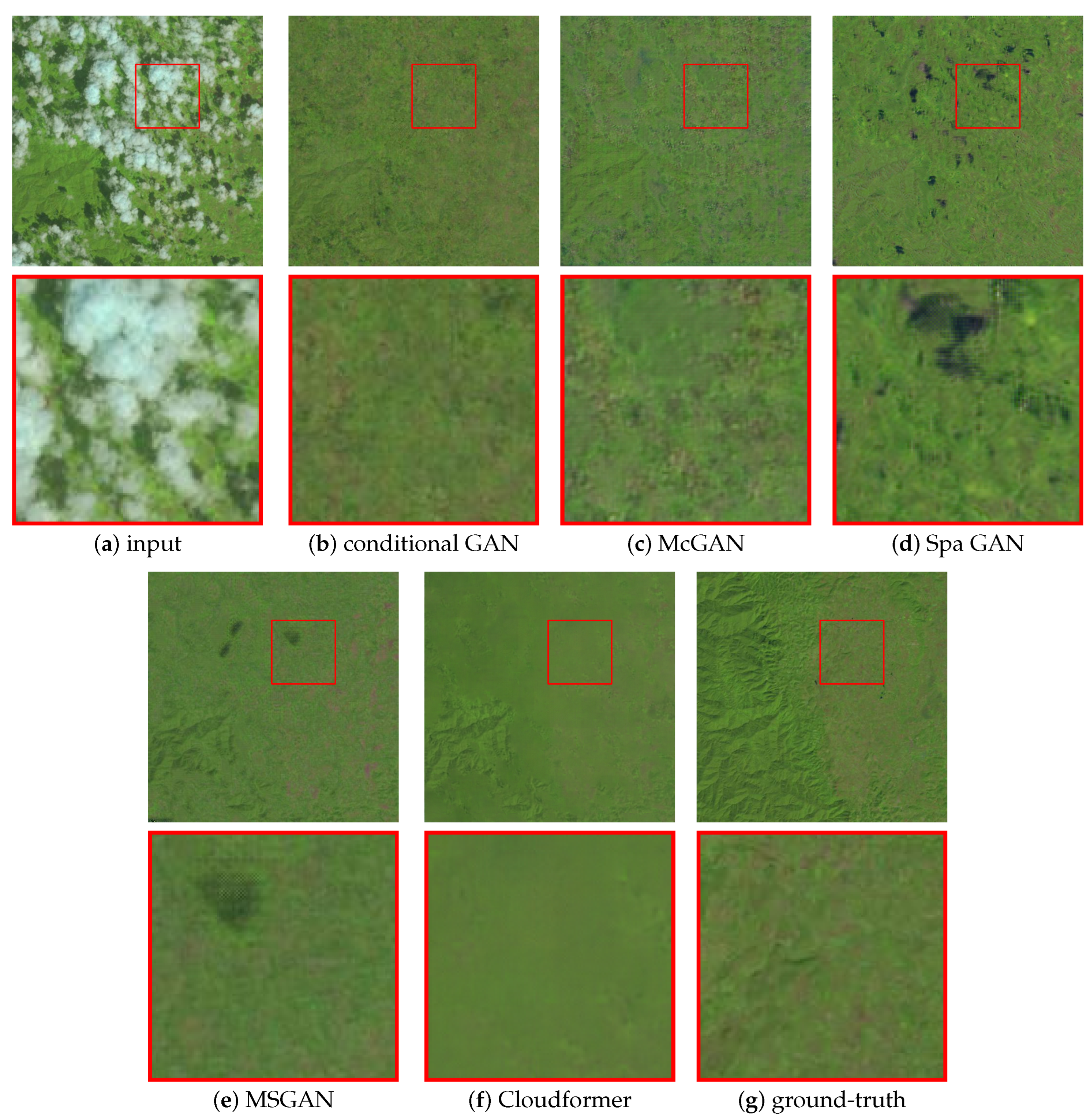

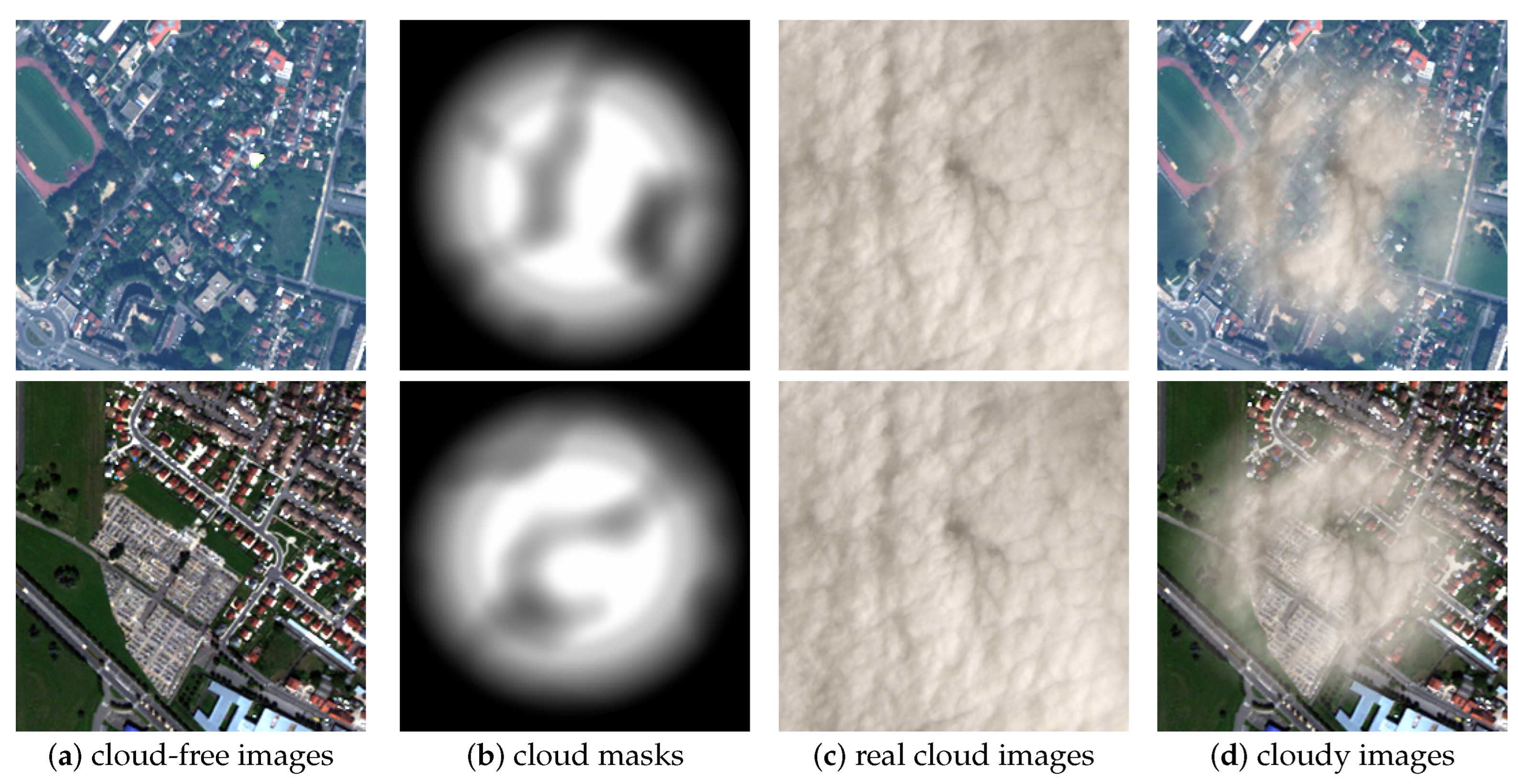

4.7. Additional Dataset

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Maggiori, E.; Tarabalka, Y.; Charpiat, G.; Alliez, P. Can semantic labeling methods generalize to any city? the inria aerial image labeling benchmark. In Proceedings of the 2017 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Worth, TX, USA, 23–28 July 2017; pp. 3226–3229. [Google Scholar]

- Vogelmann, J.E.; Tolk, B.; Zhu, Z. Monitoring forest changes in the southwestern United States using multitemporal Landsat data. Remote Sens. Environ. 2009, 113, 1739–1748. [Google Scholar] [CrossRef]

- King, M.D.; Platnick, S.; Menzel, W.P.; Ackerman, S.A.; Hubanks, P.A. Spatial and temporal distribution of clouds observed by MODIS onboard the Terra and Aqua satellites. IEEE Trans. Geosci. Remote Sens. 2013, 51, 3826–3852. [Google Scholar] [CrossRef]

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16×16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929. [Google Scholar]

- Chen, H.; Wang, Y.; Guo, T.; Xu, C.; Deng, Y.; Liu, Z.; Ma, S.; Xu, C.; Xu, C.; Gao, W. Pre-trained image processing transformer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 12299–12310. [Google Scholar]

- Zamir, S.W.; Arora, A.; Khan, S.; Hayat, M.; Khan, F.S.; Yang, M.H. Restormer: Efficient transformer for high-resolution image restoration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–20 June 2022; pp. 5728–5739. [Google Scholar]

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-end object detection with transformers. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 213–229. [Google Scholar]

- Zhu, X.; Su, W.; Lu, L.; Li, B.; Wang, X.; Dai, J. Deformable detr: Deformable transformers for end-to-end object detection. arXiv 2020, arXiv:2010.04159. [Google Scholar]

- Wang, Y.; Xu, Z.; Wang, X.; Shen, C.; Cheng, B.; Shen, H.; Xia, H. End-to-end video instance segmentation with transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 8741–8750. [Google Scholar]

- Zheng, S.; Lu, J.; Zhao, H.; Zhu, X.; Luo, Z.; Wang, Y.; Fu, Y.; Feng, J.; Xiang, T.; Torr, P.H.; et al. Rethinking semantic segmentation from a sequence-to-sequence perspective with transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 6881–6890. [Google Scholar]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Advances in Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 2017; Volume 30. [Google Scholar]

- Dong, X.; Bao, J.; Chen, D.; Zhang, W.; Yu, N.; Yuan, L.; Chen, D.; Guo, B. Cswin transformer: A general vision transformer backbone with cross-shaped windows. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–20 June 2022; pp. 12124–12134. [Google Scholar]

- Lin, C.H.; Tsai, P.H.; Lai, K.H.; Chen, J.Y. Cloud removal from multitemporal satellite images using information cloning. IEEE Trans. Geosci. Remote Sens. 2012, 51, 232–241. [Google Scholar] [CrossRef]

- Cheng, Q.; Shen, H.; Zhang, L.; Yuan, Q.; Zeng, C. Cloud removal for remotely sensed images by similar pixel replacement guided with a spatio-temporal MRF model. ISPRS J. Photogramm. Remote Sens. 2014, 92, 54–68. [Google Scholar] [CrossRef]

- Li, X.; Shen, H.; Zhang, L.; Zhang, H.; Yuan, Q.; Yang, G. Recovering quantitative remote sensing products contaminated by thick clouds and shadows using multitemporal dictionary learning. IEEE Trans. Geosci. Remote Sens. 2014, 52, 7086–7098. [Google Scholar]

- Chen, B.; Huang, B.; Chen, L.; Xu, B. Spatially and temporally weighted regression: A novel method to produce continuous cloud-free Landsat imagery. IEEE Trans. Geosci. Remote Sens. 2016, 55, 27–37. [Google Scholar] [CrossRef]

- Wen, F.; Zhang, Y.; Gao, Z.; Ling, X. Two-pass robust component analysis for cloud removal in satellite image sequence. IEEE Geosci. Remote Sens. Lett. 2018, 15, 1090–1094. [Google Scholar] [CrossRef]

- Ji, T.Y.; Chu, D.; Zhao, X.L.; Hong, D. A unified framework of cloud detection and removal based on low-rank and group sparse regularizations for multitemporal multispectral images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–15. [Google Scholar] [CrossRef]

- Eckardt, R.; Berger, C.; Thiel, C.; Schmullius, C. Removal of optically thick clouds from multi-spectral satellite images using multi-frequency SAR data. Remote Sens. 2013, 5, 2973–3006. [Google Scholar] [CrossRef]

- Zhu, C.; Zhao, Z.; Zhu, X.; Nie, Z.; Liu, Q.H. Cloud removal for optical images using SAR structure data. In Proceedings of the 2016 IEEE 13th International Conference on Signal Processing (ICSP), Chengdu, China, 6–10 November 2016; pp. 1872–1875. [Google Scholar]

- Li, Y.; Li, W.; Shen, C. Removal of optically thick clouds from high-resolution satellite imagery using dictionary group learning and interdictionary nonlocal joint sparse coding. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 1870–1882. [Google Scholar] [CrossRef]

- Li, W.; Li, Y.; Chan, J.C.W. Thick cloud removal with optical and SAR imagery via convolutional-mapping-deconvolutional network. IEEE Trans. Geosci. Remote Sens. 2019, 58, 2865–2879. [Google Scholar] [CrossRef]

- Meng, Q.; Borders, B.E.; Cieszewski, C.J.; Madden, M. Closest spectral fit for removing clouds and cloud shadows. Photogramm. Eng. Remote Sens. 2009, 75, 569–576. [Google Scholar] [CrossRef]

- Chavez Jr, P.S. An improved dark-object subtraction technique for atmospheric scattering correction of multispectral data. Remote Sens. Environ. 1988, 24, 459–479. [Google Scholar] [CrossRef]

- Zhang, Y.; Guindon, B.; Cihlar, J. An image transform to characterize and compensate for spatial variations in thin cloud contamination of Landsat images. Remote Sens. Environ. 2002, 82, 173–187. [Google Scholar] [CrossRef]

- He, X.Y.; Hu, J.B.; Chen, W.; Li, X.Y. Haze removal based on advanced haze-optimized transformation (AHOT) for multispectral imagery. Int. J. Remote Sens. 2010, 31, 5331–5348. [Google Scholar] [CrossRef]

- Du, Y.; Guindon, B.; Cihlar, J. Haze detection and removal in high resolution satellite image with wavelet analysis. IEEE Trans. Geosci. Remote Sens. 2002, 40, 210–217. [Google Scholar]

- Siravenha, A.C.; Sousa, D.; Bispo, A.; Pelaes, E. The use of high-pass filters and the inpainting method to clouds removal and their impact on satellite images classification. In Proceedings of the International Conference on Image Analysis and Processing, Ravenna, Italy, 14–16 September 2011; Springer: Berlin/Heidelberg, Germany, 2011; pp. 333–342. [Google Scholar]

- Shen, H.; Li, H.; Qian, Y.; Zhang, L.; Yuan, Q. An effective thin cloud removal procedure for visible remote sensing images. ISPRS J. Photogramm. Remote Sens. 2014, 96, 224–235. [Google Scholar] [CrossRef]

- Xu, M.; Jia, X.; Pickering, M. Automatic cloud removal for Landsat 8 OLI images using cirrus band. In Proceedings of the 2014 IEEE Geoscience and Remote Sensing Symposium, Quebec City, QC, Canada, 13–18 July 2014; pp. 2511–2514. [Google Scholar]

- Xu, M.; Pickering, M.; Plaza, A.J.; Jia, X. Thin cloud removal based on signal transmission principles and spectral mixture analysis. IEEE Trans. Geosci. Remote Sens. 2015, 54, 1659–1669. [Google Scholar] [CrossRef]

- He, K.; Sun, J.; Tang, X. Single image haze removal using dark channel prior. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 33, 2341–2353. [Google Scholar] [PubMed]

- Li, W.; Li, Y.; Chen, D.; Chan, J.C.W. Thin cloud removal with residual symmetrical concatenation network. ISPRS J. Photogramm. Remote Sens. 2019, 153, 137–150. [Google Scholar] [CrossRef]

- Wang, X.; Xu, G.; Wang, Y.; Lin, D.; Li, P.; Lin, X. Thin and thick cloud removal on remote sensing image by conditional generative adversarial network. In Proceedings of the IGARSS 2019-2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019; pp. 1426–1429. [Google Scholar]

- Isola, P.; Zhu, J.Y.; Zhou, T.; Efros, A.A. Image-to-image translation with conditional adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1125–1134. [Google Scholar]

- Enomoto, K.; Sakurada, K.; Wang, W.; Fukui, H.; Matsuoka, M.; Nakamura, R.; Kawaguchi, N. Filmy cloud removal on satellite imagery with multispectral conditional generative adversarial nets. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 48–56. [Google Scholar]

- Pan, H. Cloud removal for remote sensing imagery via spatial attention generative adversarial network. arXiv 2020, arXiv:2009.13015. [Google Scholar]

- Xu, M.; Deng, F.; Jia, S.; Jia, X.; Plaza, A.J. Attention mechanism-based generative adversarial networks for cloud removal in Landsat images. Remote Sens. Environ. 2022, 271, 112902. [Google Scholar] [CrossRef]

- Zhu, J.Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2223–2232. [Google Scholar]

- Singh, P.; Komodakis, N. Cloud-gan: Cloud removal for sentinel-2 imagery using a cyclic consistent generative adversarial networks. In Proceedings of the IGARSS 2018-2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 1772–1775. [Google Scholar]

- Li, J.; Wu, Z.; Hu, Z.; Zhang, J.; Li, M.; Mo, L.; Molinier, M. Thin cloud removal in optical remote sensing images based on generative adversarial networks and physical model of cloud distortion. ISPRS J. Photogramm. Remote Sens. 2020, 166, 373–389. [Google Scholar] [CrossRef]

- Liu, L.; Hu, S. SACTNet: Spatial Attention Context Transformation Network for Cloud Removal. Wirel. Commun. Mob. Comput. 2021, 2021, 8292612. [Google Scholar] [CrossRef]

- Christopoulos, D.; Ntouskos, V.; Karantzalos, K. Cloudtran: Cloud removal from multitemporal satellite images using axial transformer networks. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2022, 43, 1125–1132. [Google Scholar] [CrossRef]

- Wu, H.; Xiao, B.; Codella, N.; Liu, M.; Dai, X.; Yuan, L.; Zhang, L. Cvt: Introducing convolutions to vision transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 22–31. [Google Scholar]

- Li, Y.; Zhang, K.; Cao, J.; Timofte, R.; Van Gool, L. Localvit: Bringing locality to vision transformers. arXiv 2021, arXiv:2104.05707. [Google Scholar]

- Yuan, K.; Guo, S.; Liu, Z.; Zhou, A.; Yu, F.; Wu, W. Incorporating convolution designs into visual transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 579–588. [Google Scholar]

- Li, K.; Wang, Y.; Zhang, J.; Gao, P.; Song, G.; Liu, Y.; Li, H.; Qiao, Y. Uniformer: Unifying convolution and self-attention for visual recognition. arXiv 2022, arXiv:2201.09450. [Google Scholar]

- Huang, Z.; Ben, Y.; Luo, G.; Cheng, P.; Yu, G.; Fu, B. Shuffle transformer: Rethinking spatial shuffle for vision transformer. arXiv 2021, arXiv:2106.03650. [Google Scholar]

- Chu, X.; Tian, Z.; Wang, Y.; Zhang, B.; Ren, H.; Wei, X.; Xia, H.; Shen, C. Twins: Revisiting the design of spatial attention in vision transformers. Adv. Neural Inf. Process. Syst. 2021, 34, 9355–9366. [Google Scholar]

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 10012–10022. [Google Scholar]

- Vaswani, A.; Ramachandran, P.; Srinivas, A.; Parmar, N.; Hechtman, B.; Shlens, J. Scaling local self-attention for parameter efficient visual backbones. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 12894–12904. [Google Scholar]

- Gehring, J.; Auli, M.; Grangier, D.; Yarats, D.; Dauphin, Y.N. Convolutional sequence to sequence learning. In Proceedings of the International Conference on Machine Learning, PMLR, Sydney, Australia, 6–11 August 2017; pp. 1243–1252. [Google Scholar]

- Shaw, P.; Uszkoreit, J.; Vaswani, A. Self-attention with relative position representations. arXiv 2018, arXiv:1803.02155. [Google Scholar]

- Dai, Z.; Yang, Z.; Yang, Y.; Carbonell, J.; Le, Q.V.; Salakhutdinov, R. Transformer-xl: Attentive language models beyond a fixed-length context. arXiv 2019, arXiv:1901.02860. [Google Scholar]

- Raffel, C.; Shazeer, N.; Roberts, A.; Lee, K.; Narang, S.; Matena, M.; Zhou, Y.; Li, W.; Liu, P.J. Exploring the limits of transfer learning with a unified text-to-text transformer. J. Mach. Learn. Res. 2020, 21, 1–67. [Google Scholar]

- He, P.; Liu, X.; Gao, J.; Chen, W. Deberta: Decoding-enhanced bert with disentangled attention. arXiv 2020, arXiv:2006.03654. [Google Scholar]

- Chu, X.; Tian, Z.; Zhang, B.; Wang, X.; Wei, X.; Xia, H.; Shen, C. Conditional positional encodings for vision transformers. arXiv 2021, arXiv:2102.10882. [Google Scholar]

- Wang, Z.; Cun, X.; Bao, J.; Zhou, W.; Liu, J.; Li, H. Uformer: A general u-shaped transformer for image restoration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–20 June 2022; pp. 17683–17693. [Google Scholar]

- Charbonnier, P.; Blanc-Feraud, L.; Aubert, G.; Barlaud, M. Two deterministic half-quadratic regularization algorithms for computed imaging. In Proceedings of the 1st International Conference on Image Processing, Austin, TX, USA, 13–16 November 1994; Volume 2, pp. 168–172. [Google Scholar]

- Zamir, S.W.; Arora, A.; Khan, S.; Hayat, M.; Khan, F.S.; Yang, M.H.; Shao, L. Learning enriched features for real image restoration and enhancement. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 492–511. [Google Scholar]

- Lin, D.; Xu, G.; Wang, X.; Wang, Y.; Sun, X.; Fu, K. A remote sensing image dataset for cloud removal. arXiv 2019, arXiv:1901.00600. [Google Scholar]

- Loshchilov, I.; Hutter, F. Decoupled weight decay regularization. arXiv 2017, arXiv:1711.05101. [Google Scholar]

- Zhou, J.; Luo, X.; Rong, W.; Xu, H. Cloud Removal for Optical Remote Sensing Imagery Using Distortion Coding Network Combined with Compound Loss Functions. Remote Sens. 2022, 14, 3452. [Google Scholar] [CrossRef]

- Hasan, C.; Horne, R.; Mauw, S.; Mizera, A. Cloud removal from satellite imagery using multispectral edge-filtered conditional generative adversarial networks. Int. J. Remote Sens. 2022, 43, 1881–1893. [Google Scholar] [CrossRef]

| Method | SACB | CCB | LePE | PSNR | SSIM |

|---|---|---|---|---|---|

| 1 | 34.128 | 0.975 | |||

| 2 | √ | 34.797 | 0.977 | ||

| 3 | √ | √ | 35.037 | 0.978 | |

| 4 | √ | √ | √ | 35.243 | 0.978 |

| Method | PSNR | SSIM |

|---|---|---|

| Cloudformer | 35.243 | 0.978 |

| Cloudformer + Window Shift | 35.247 | 0.978 |

| Method | PSNR | SSIM |

|---|---|---|

| Conditional GAN [35] | 27.260 | 0.924 |

| McGAN [36] | 32.170 | 0.965 |

| Spa GAN [37] | 29.835 | 0.952 |

| MSGAN [63] | 29.907 | 0.960 |

| Cloudformer | 35.243 | 0.978 |

| Method | PSNR | SSIM |

|---|---|---|

| Conditional GAN [35] | 28.596 | 0.896 |

| McGAN [36] | 30.480 | 0.909 |

| Spa GAN [37] | 28.232 | 0.890 |

| MSGAN [63] | 29.628 | 0.918 |

| Cloudformer | 35.698 | 0.949 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wu, P.; Pan, Z.; Tang, H.; Hu, Y. Cloudformer: A Cloud-Removal Network Combining Self-Attention Mechanism and Convolution. Remote Sens. 2022, 14, 6132. https://doi.org/10.3390/rs14236132

Wu P, Pan Z, Tang H, Hu Y. Cloudformer: A Cloud-Removal Network Combining Self-Attention Mechanism and Convolution. Remote Sensing. 2022; 14(23):6132. https://doi.org/10.3390/rs14236132

Chicago/Turabian StyleWu, Peiyang, Zongxu Pan, Hairong Tang, and Yuxin Hu. 2022. "Cloudformer: A Cloud-Removal Network Combining Self-Attention Mechanism and Convolution" Remote Sensing 14, no. 23: 6132. https://doi.org/10.3390/rs14236132

APA StyleWu, P., Pan, Z., Tang, H., & Hu, Y. (2022). Cloudformer: A Cloud-Removal Network Combining Self-Attention Mechanism and Convolution. Remote Sensing, 14(23), 6132. https://doi.org/10.3390/rs14236132