Road Damage Detection Using the Hunger Games Search with Elman Neural Network on High-Resolution Remote Sensing Images

Abstract

1. Introduction

2. Related Works

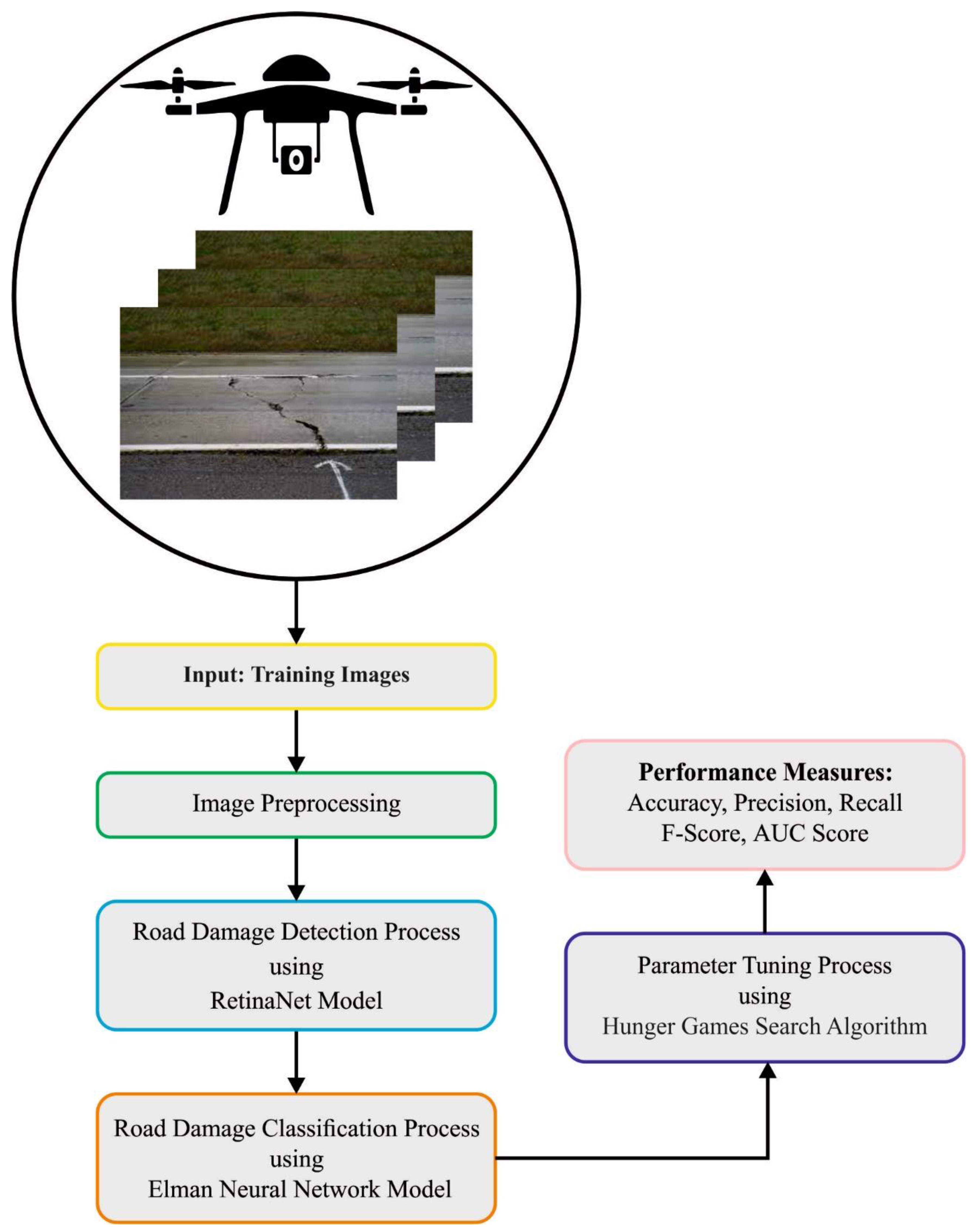

3. The Proposed Model

3.1. Road Damage Detection: The RetinaNet Model

3.2. Road Damage Classification: Optimal ENN Model

1. Population initialization: the population is initialized to determine the starting locations for the optimal search. The HGS approach represents every member of the population as a real-valued vector of fixed dimension. In the original HGS model, each population member is assumed to follow a probability distribution with a given mean.

2. Approach food: this phase governs how the search agents move toward food.

3. Hunger role: here, the hunger characteristics of the search agents are simulated mathematically. The hunger terms in Equation (8) characterize the degree of starvation in the population, which strongly controls how the search agents' positions are updated.
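The three phases listed above can be sketched in code. The equations themselves are not reproduced in this section, so the sketch below follows the commonly published form of the Hunger Games Search and should be read as an illustration under assumptions, not as the authors' implementation. The function name `hgs_minimize`, the parameters `l` and `lh`, the uniform initialization, and the `sphere` test objective are all assumptions introduced here; in the setting of this paper the objective would presumably be an ENN classification error.

```python
import math
import random


def hgs_minimize(objective, dim, lower, upper, pop_size=20, max_iter=200,
                 l=0.08, lh=100.0, seed=0):
    """Simplified Hunger Games Search for minimization (illustrative only)."""
    rng = random.Random(seed)

    # 1. Population initialization: uniform sampling inside the bounds
    #    (an assumption; the section's initialization formula is not shown).
    pop = [[rng.uniform(lower, upper) for _ in range(dim)]
           for _ in range(pop_size)]
    hunger = [0.0] * pop_size
    best_x, best_f = list(pop[0]), math.inf

    for t in range(max_iter):
        fitness = [objective(x) for x in pop]
        for x, f in zip(pop, fitness):
            if f < best_f:
                best_f, best_x = f, list(x)
        spread = max(max(fitness) - best_f, 1e-12)

        # 3. Hunger role: agents far from the best fitness grow hungrier,
        #    while an agent that matches the best fitness is reset to zero.
        for i, f in enumerate(fitness):
            if f <= best_f:
                hunger[i] = 0.0
            else:
                th = (f - best_f) / spread * rng.random() * 2.0 * (upper - lower)
                hunger[i] += lh * (1.0 + rng.random()) if th < lh else th
        total = max(sum(hunger), 1e-12)

        # 2. Approach food: move relative to the best location found so far,
        #    scaled by two hunger-derived weights (w1, w2).
        shrink = 2.0 * (1.0 - t / max_iter)
        for i in range(pop_size):
            w1 = (hunger[i] * pop_size / total * rng.random()
                  if rng.random() < l else 1.0)
            w2 = (1.0 - math.exp(-abs(hunger[i] - total))) * rng.random() * 2.0
            # sech(|F_i - best|); the argument is capped to avoid overflow.
            e = 1.0 / math.cosh(min(abs(fitness[i] - best_f), 700.0))
            r1, r2 = rng.random(), rng.random()
            new_x = []
            for xj, bj in zip(pop[i], best_x):
                r = 2.0 * shrink * rng.random() - shrink  # shrinking step range
                if r1 < l:
                    xj = xj * (1.0 + rng.gauss(0.0, 1.0))
                elif r2 > e:
                    xj = w1 * bj + r * w2 * abs(bj - xj)
                else:
                    xj = w1 * bj - r * w2 * abs(bj - xj)
                new_x.append(min(max(xj, lower), upper))  # keep inside bounds
            pop[i] = new_x

    # Returns the best position that was actually evaluated.
    return best_x, best_f


def sphere(x):
    """Hypothetical test objective with its minimum at the origin."""
    return sum(v * v for v in x)


if __name__ == "__main__":
    x, f = hgs_minimize(sphere, dim=3, lower=-5.0, upper=5.0)
    print("best position:", x, "best value:", f)
```

The best solution is tracked separately from the population, so the returned value never gets worse from one iteration to the next, even when individual agents move away from the best location.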

4. Experimental Validation

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Class | No. of Samples |
|---|---|
| Linear Cracks | 1000 |
| Peeling | 1000 |
| Alligator Cracks | 1000 |
| Potholes | 1000 |
| Total Number of Samples | 4000 |

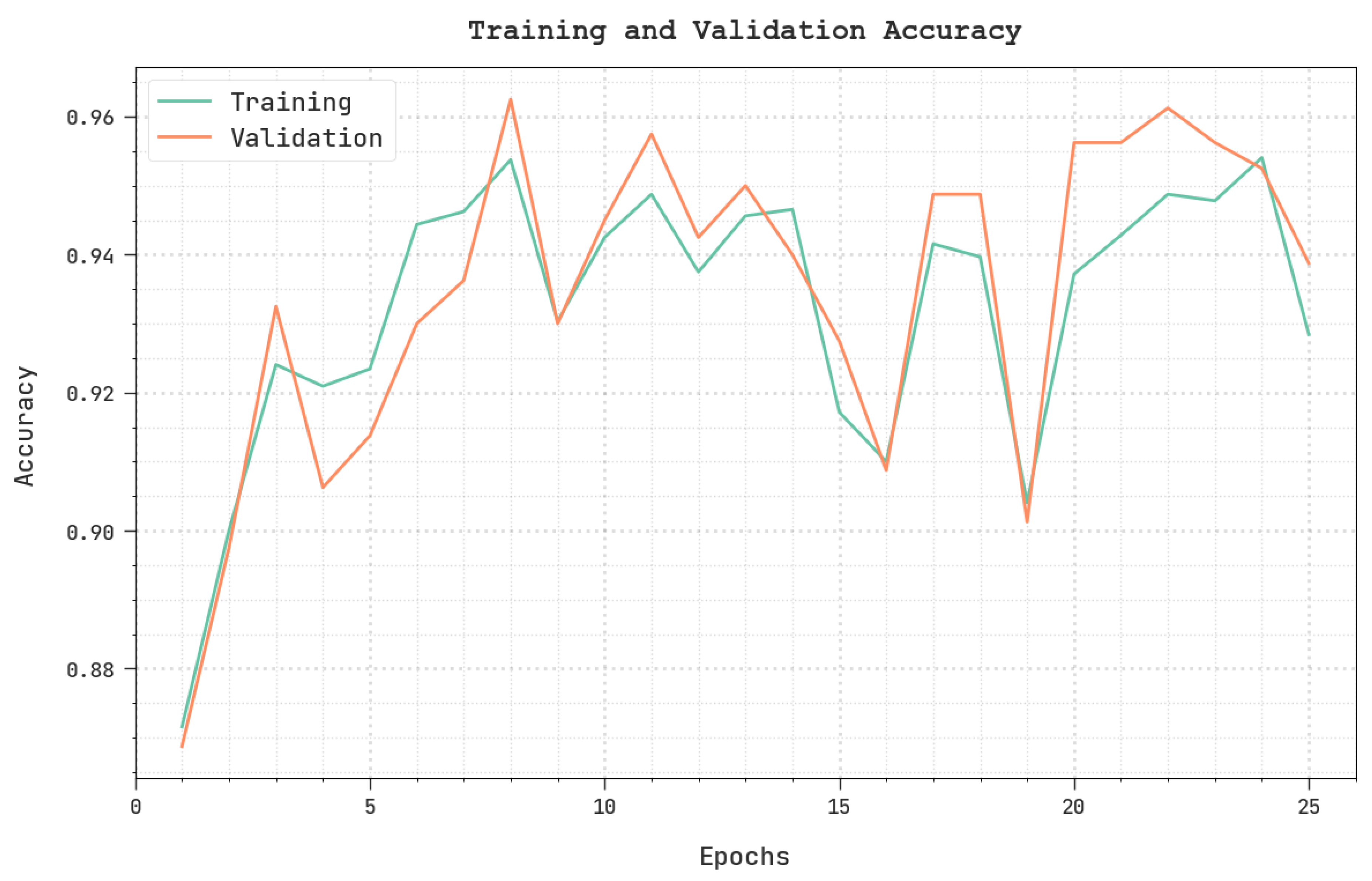

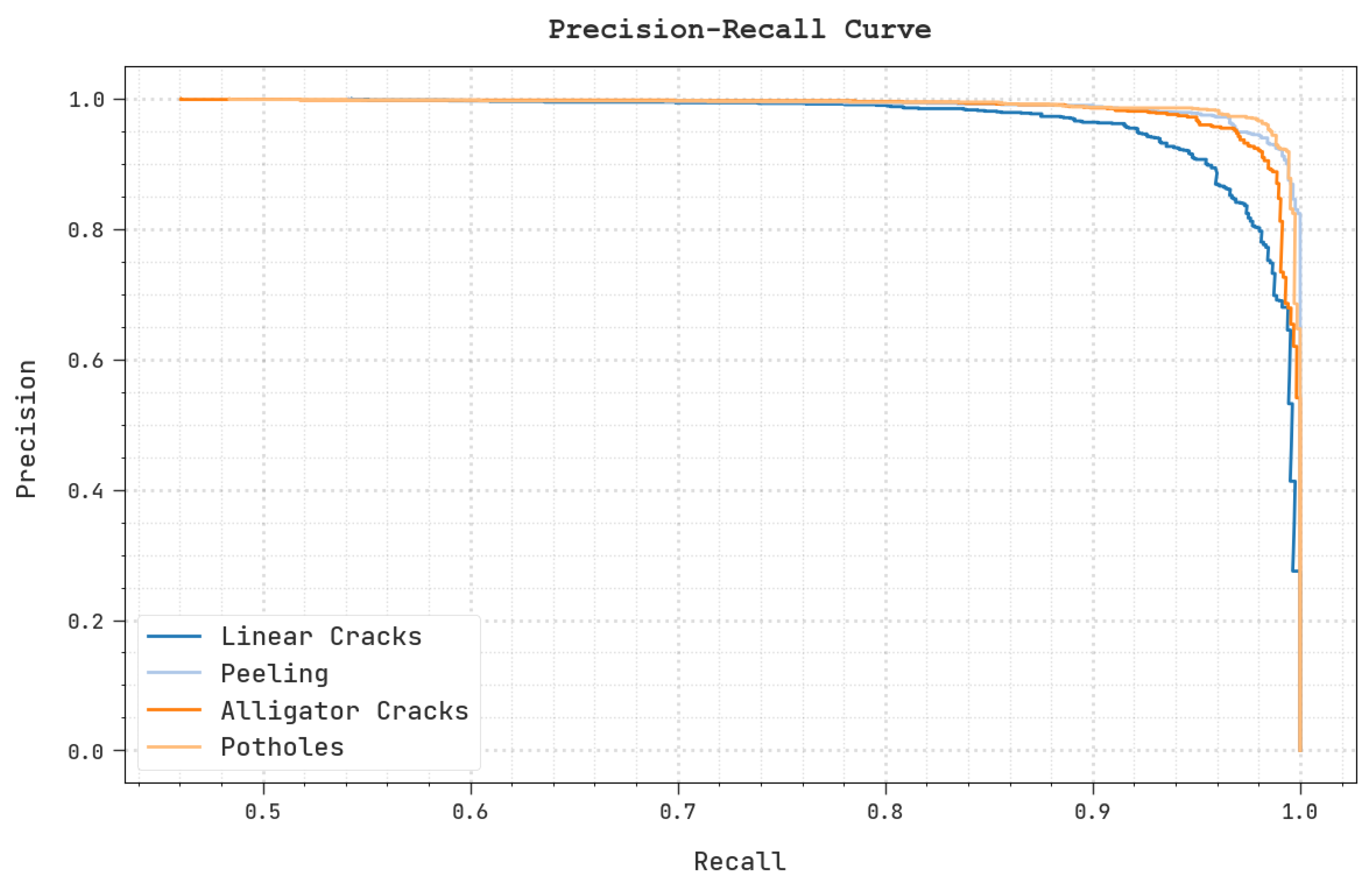

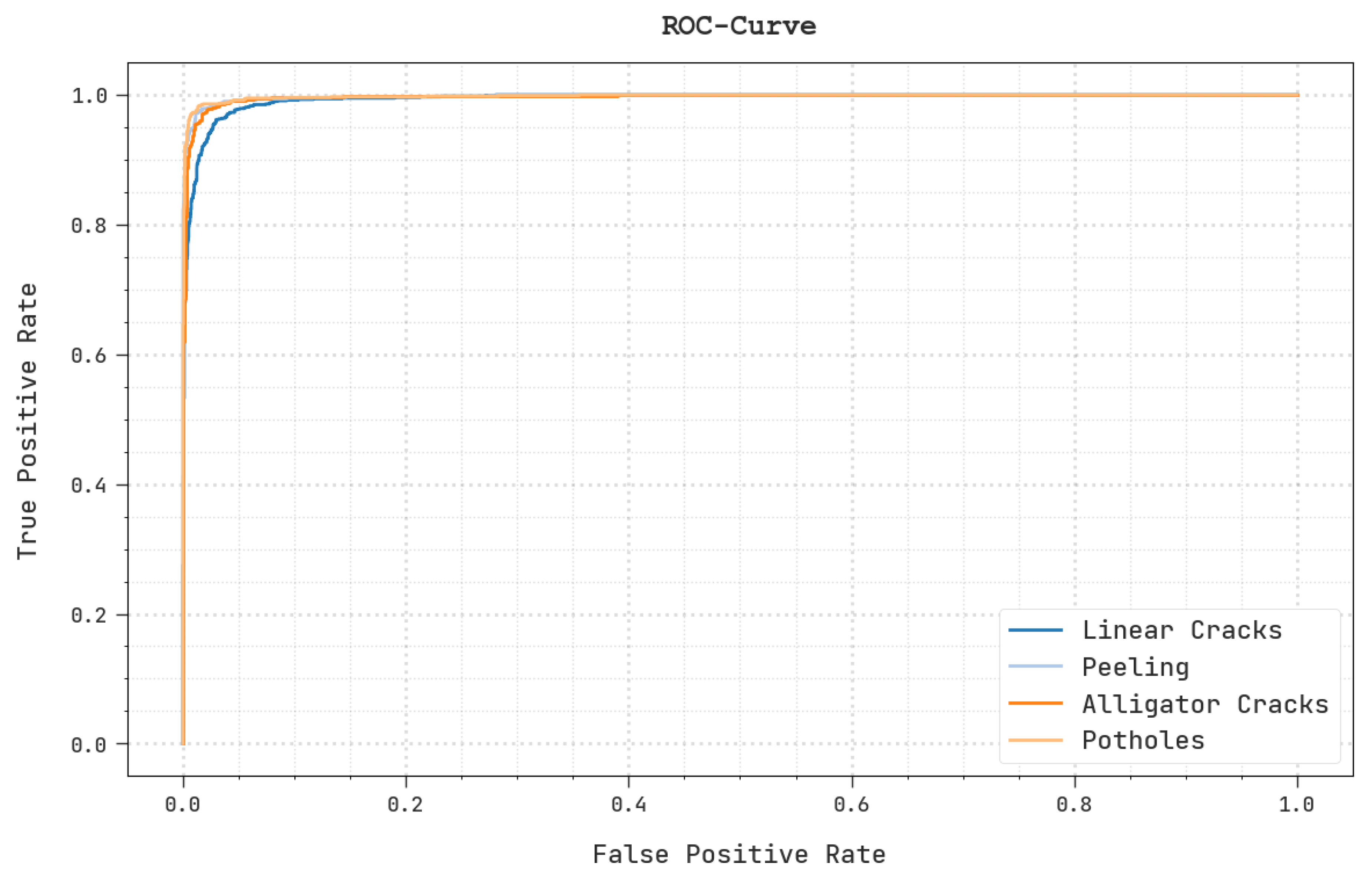

| Class | Accuracy | Precision | Recall | F-Score | AUC Score |

|---|---|---|---|---|---|

| Training Phase (80%) | |||||

| Linear Cracks | 96.59 | 94.53 | 91.55 | 93.02 | 94.90 |

| Peeling | 97.97 | 96.50 | 95.14 | 95.81 | 97.01 |

| Alligator Cracks | 97.72 | 94.13 | 97.04 | 95.56 | 97.49 |

| Potholes | 98.47 | 96.37 | 97.67 | 97.01 | 98.21 |

| Average | 97.69 | 95.38 | 95.35 | 95.35 | 96.90 |

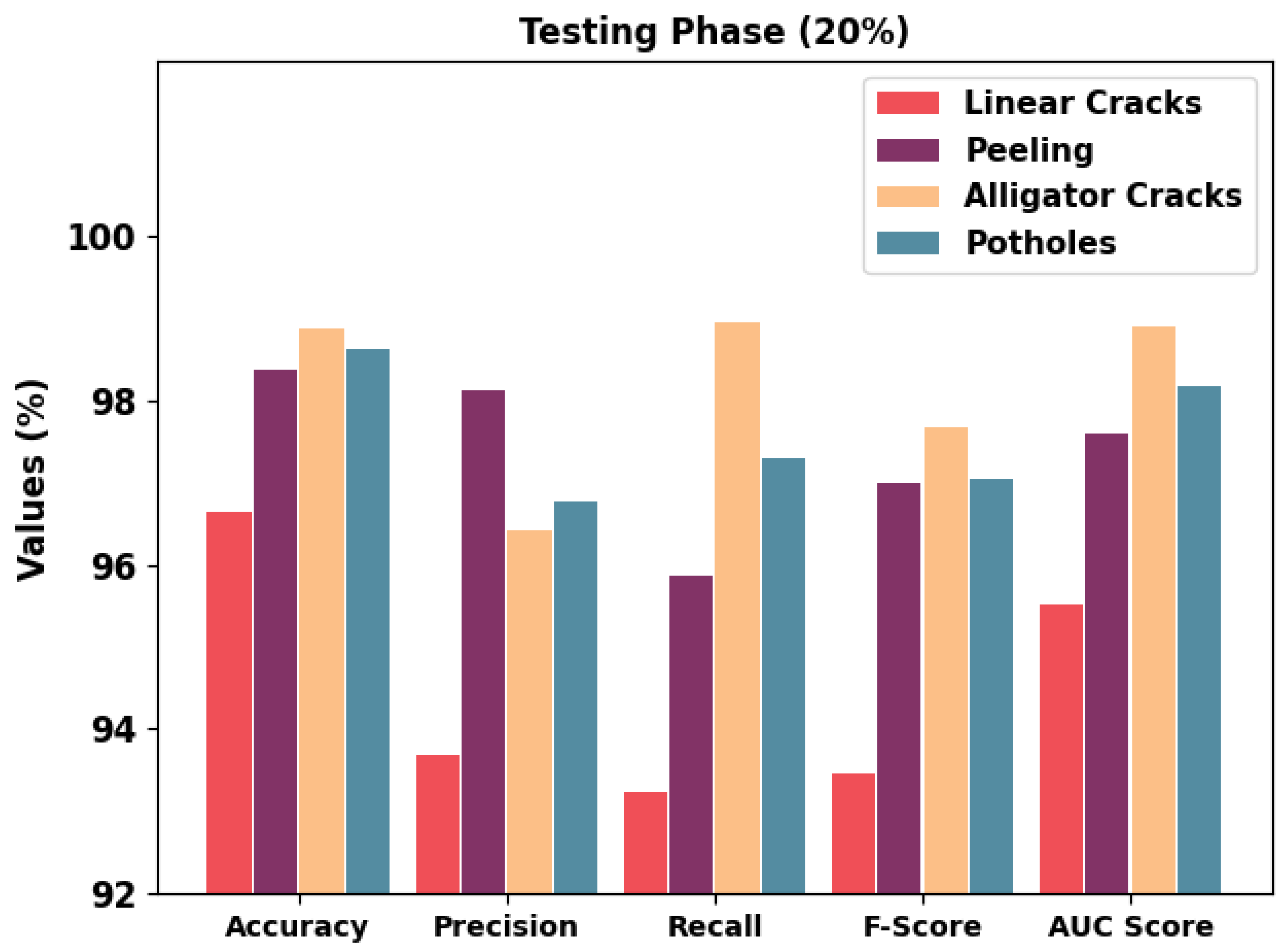

| Testing Phase (20%) | |||||

| Linear Cracks | 96.63 | 93.69 | 93.24 | 93.46 | 95.52 |

| Peeling | 98.38 | 98.12 | 95.87 | 96.98 | 97.59 |

| Alligator Cracks | 98.88 | 96.41 | 98.95 | 97.66 | 98.90 |

| Potholes | 98.62 | 96.77 | 97.30 | 97.04 | 98.16 |

| Average | 98.13 | 96.25 | 96.34 | 96.29 | 97.54 |
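Per-class scores such as those tabulated above are typically derived from a multi-class confusion matrix by treating each class as positive and all other classes as negative. The sketch below shows that computation for accuracy, precision, recall, and F-score. It assumes this one-vs-rest convention, which the section does not state explicitly, and the confusion-matrix counts are hypothetical values chosen for illustration, not results from the paper. AUC is omitted because it needs classifier scores rather than counts.

```python
def per_class_metrics(cm, labels):
    """One-vs-rest metrics (in percent) from a square confusion matrix.

    cm[i][j] counts samples whose true class is i and predicted class is j.
    """
    total = sum(sum(row) for row in cm)
    results = {}
    for k, name in enumerate(labels):
        tp = cm[k][k]                          # class k predicted as k
        fn = sum(cm[k]) - tp                   # class k predicted as another class
        fp = sum(row[k] for row in cm) - tp    # another class predicted as k
        tn = total - tp - fn - fp              # everything else
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f_score = (2 * precision * recall / (precision + recall)
                   if precision + recall else 0.0)
        results[name] = {
            "accuracy": 100.0 * (tp + tn) / total,
            "precision": 100.0 * precision,
            "recall": 100.0 * recall,
            "f_score": 100.0 * f_score,
        }
    return results


if __name__ == "__main__":
    labels = ["Linear Cracks", "Peeling", "Alligator Cracks", "Potholes"]
    # Hypothetical counts for illustration only (rows: true, columns: predicted).
    cm = [
        [186, 4, 8, 2],
        [5, 190, 3, 2],
        [6, 2, 191, 1],
        [1, 3, 2, 194],
    ]
    for name, scores in per_class_metrics(cm, labels).items():
        print(name, {key: round(value, 2) for key, value in scores.items()})
```

Under this convention the per-class accuracy counts true negatives as correct, which is why it is usually higher than the precision and recall for the same class.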

| Class | Accuracy | Precision | Recall | F-Score | AUC Score |

|---|---|---|---|---|---|

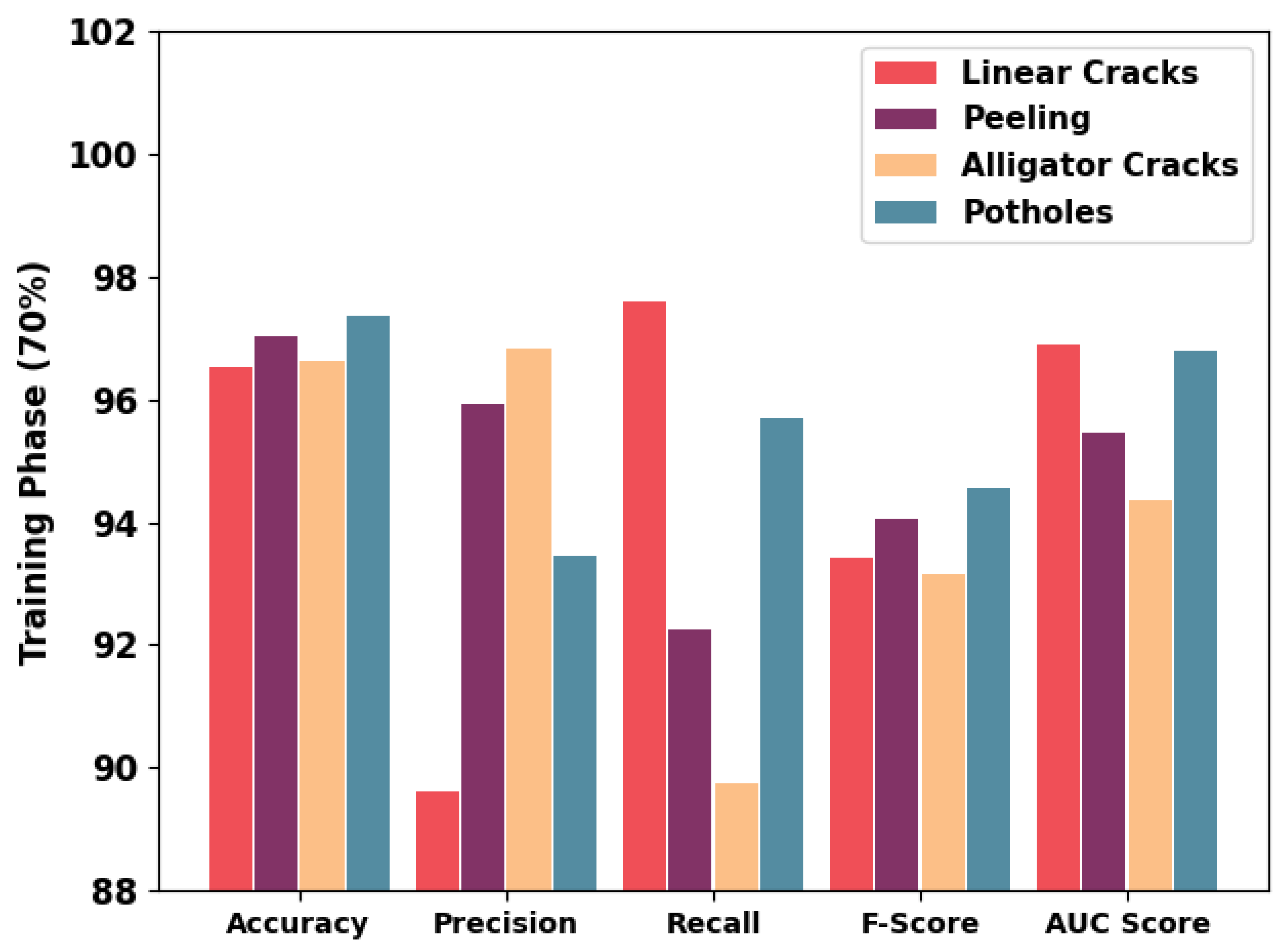

| Training Phase (70%) | |||||

| Linear Cracks | 96.54 | 89.61 | 97.60 | 93.43 | 96.89 |

| Peeling | 97.04 | 95.91 | 92.26 | 94.05 | 95.46 |

| Alligator Cracks | 96.64 | 96.81 | 89.73 | 93.14 | 94.36 |

| Potholes | 97.36 | 93.45 | 95.68 | 94.55 | 96.78 |

| Average | 96.89 | 93.94 | 93.82 | 93.79 | 95.87 |

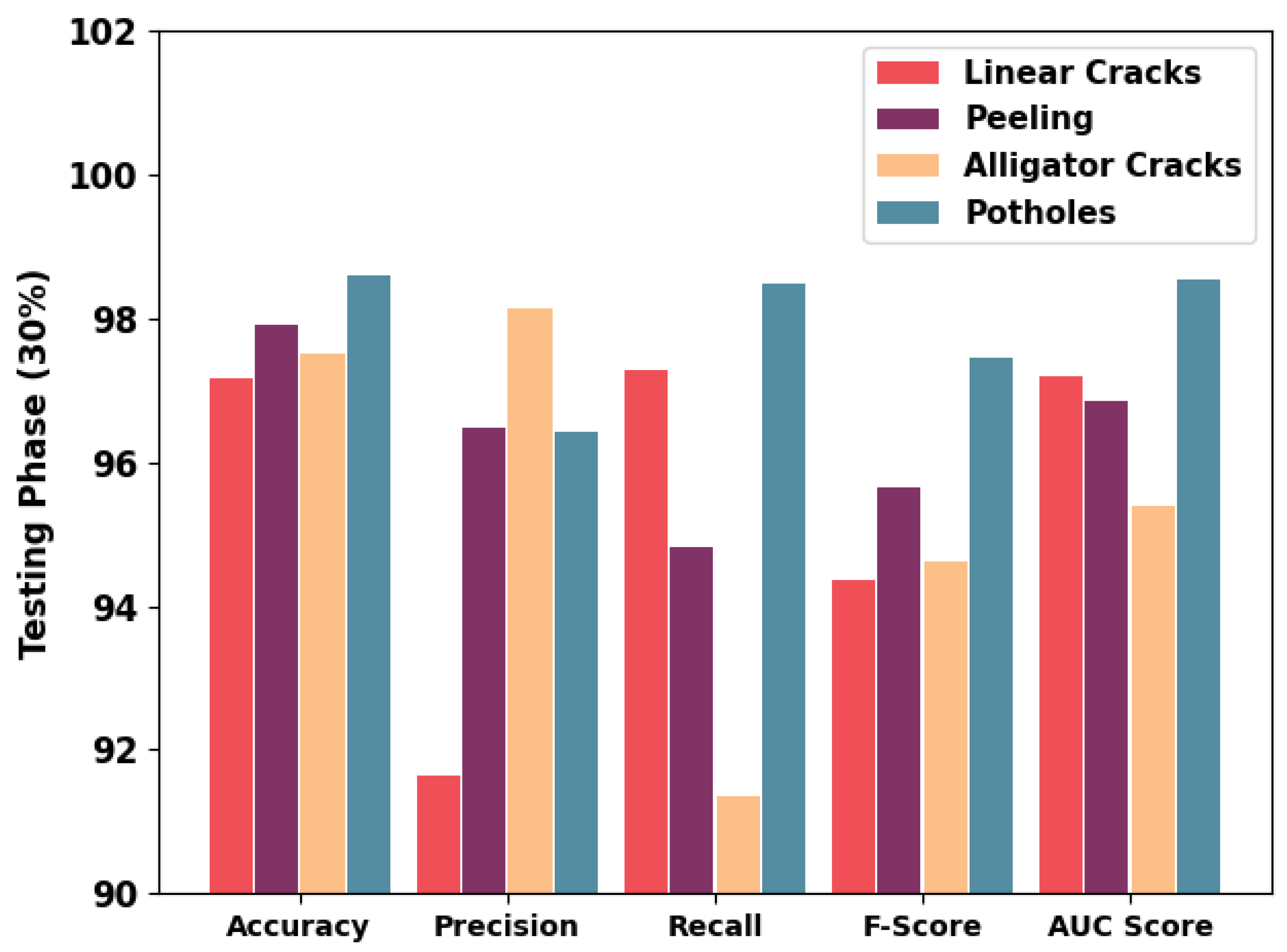

| Testing Phase (30%) | |||||

| Linear Cracks | 97.17 | 91.64 | 97.27 | 94.37 | 97.20 |

| Peeling | 97.92 | 96.48 | 94.81 | 95.64 | 96.86 |

| Alligator Cracks | 97.50 | 98.14 | 91.35 | 94.62 | 95.40 |

| Potholes | 98.58 | 96.43 | 98.48 | 97.44 | 98.55 |

| Average | 97.79 | 95.67 | 95.48 | 95.52 | 97.00 |
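The Average rows appear to be unweighted means of the four per-class rows. The short check below recomputes the column means for the 30% testing phase from the tabulated values and compares them with the reported averages. The tolerance of 0.011 is an assumption that allows for the two-decimal rounding of the published figures, and the helper name `column_means` is introduced here for illustration.

```python
# Per-class values copied from the 30% testing-phase rows of the table:
# accuracy, precision, recall, F-score, AUC score.
testing_30 = {
    "Linear Cracks":    [97.17, 91.64, 97.27, 94.37, 97.20],
    "Peeling":          [97.92, 96.48, 94.81, 95.64, 96.86],
    "Alligator Cracks": [97.50, 98.14, 91.35, 94.62, 95.40],
    "Potholes":         [98.58, 96.43, 98.48, 97.44, 98.55],
}
reported_average = [97.79, 95.67, 95.48, 95.52, 97.00]


def column_means(rows):
    """Unweighted mean of each metric column across the classes."""
    columns = zip(*rows.values())
    return [sum(column) / len(column) for column in columns]


def matches_reported(rows, reported, tolerance=0.011):
    """True if every column mean is within the rounding tolerance."""
    return all(abs(mean - value) <= tolerance
               for mean, value in zip(column_means(rows), reported))


if __name__ == "__main__":
    print([round(mean, 4) for mean in column_means(testing_30)])
    print("consistent with reported averages:",
          matches_reported(testing_30, reported_average))
```

Because each class has the same number of samples in the dataset, an unweighted mean is a natural choice here; with unbalanced splits a sample-weighted mean could differ.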

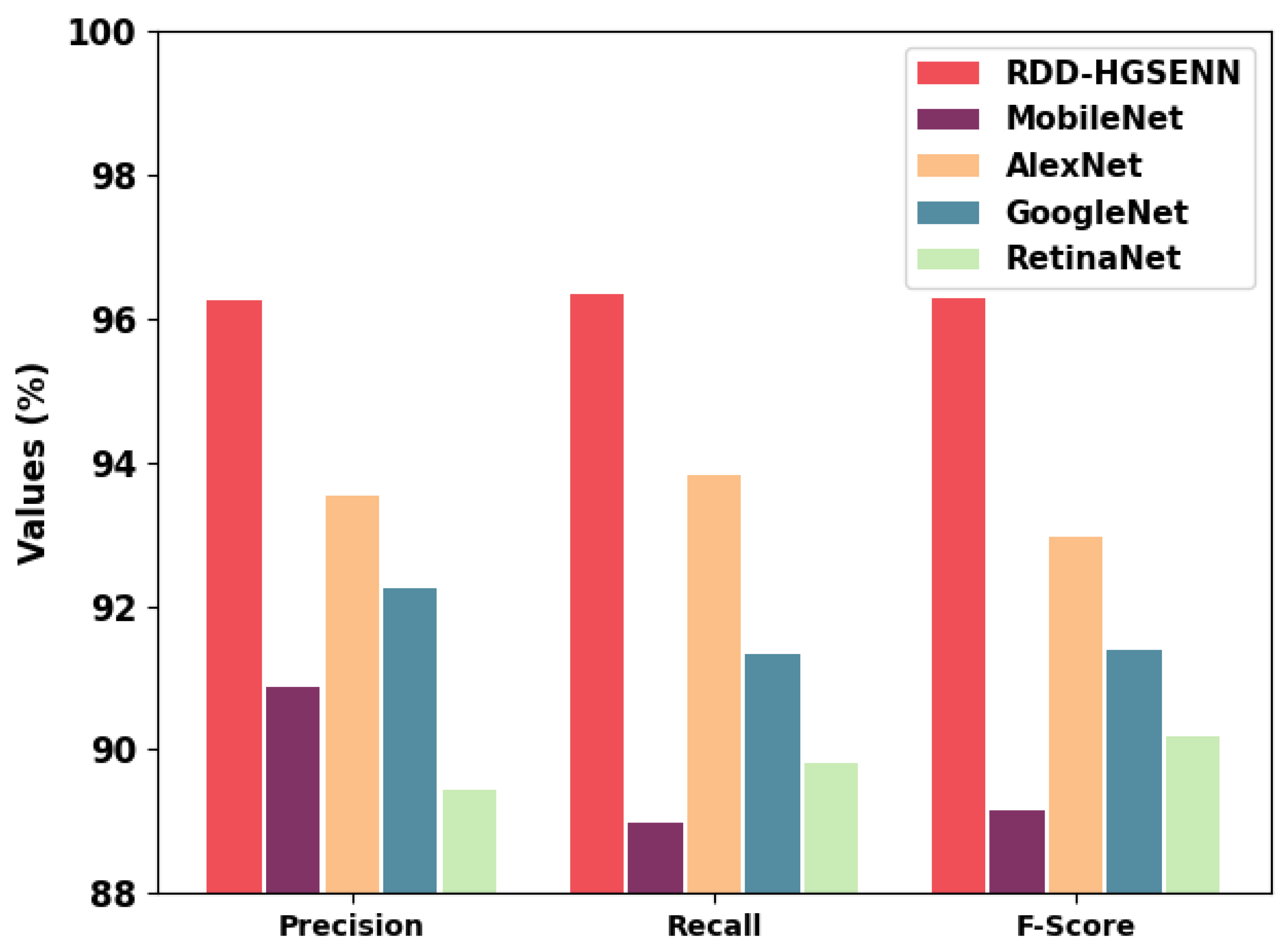

| Methods | Accuracy | Precision | Recall | F-Score |
|---|---|---|---|---|
| RDD-HGSENN | 98.13 | 96.25 | 96.34 | 96.29 |
| MobileNet | 90.03 | 90.88 | 88.97 | 89.15 |
| AlexNet | 92.84 | 93.52 | 93.83 | 92.95 |
| GoogleNet | 91.47 | 92.23 | 91.33 | 91.39 |
| RetinaNet | 90.70 | 89.45 | 89.80 | 90.17 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Al Duhayyim, M.; Malibari, A.A.; Alharbi, A.; Afef, K.; Yafoz, A.; Alsini, R.; Alghushairy, O.; Mohsen, H. Road Damage Detection Using the Hunger Games Search with Elman Neural Network on High-Resolution Remote Sensing Images. Remote Sens. 2022, 14, 6222. https://doi.org/10.3390/rs14246222
