Deep Encoder–Decoder Network-Based Wildfire Segmentation Using Drone Images in Real-Time

Abstract

1. Introduction

2. Related works

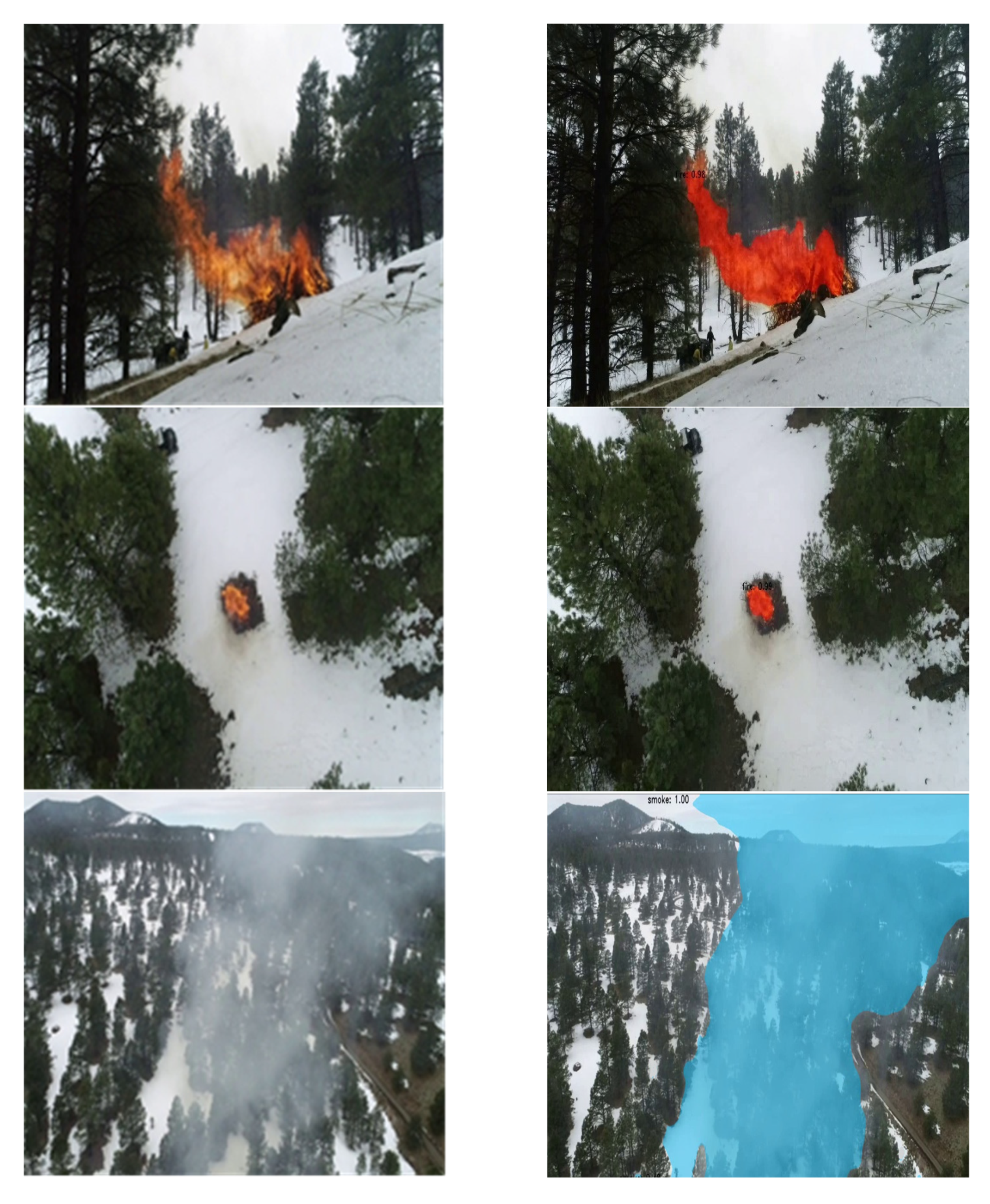

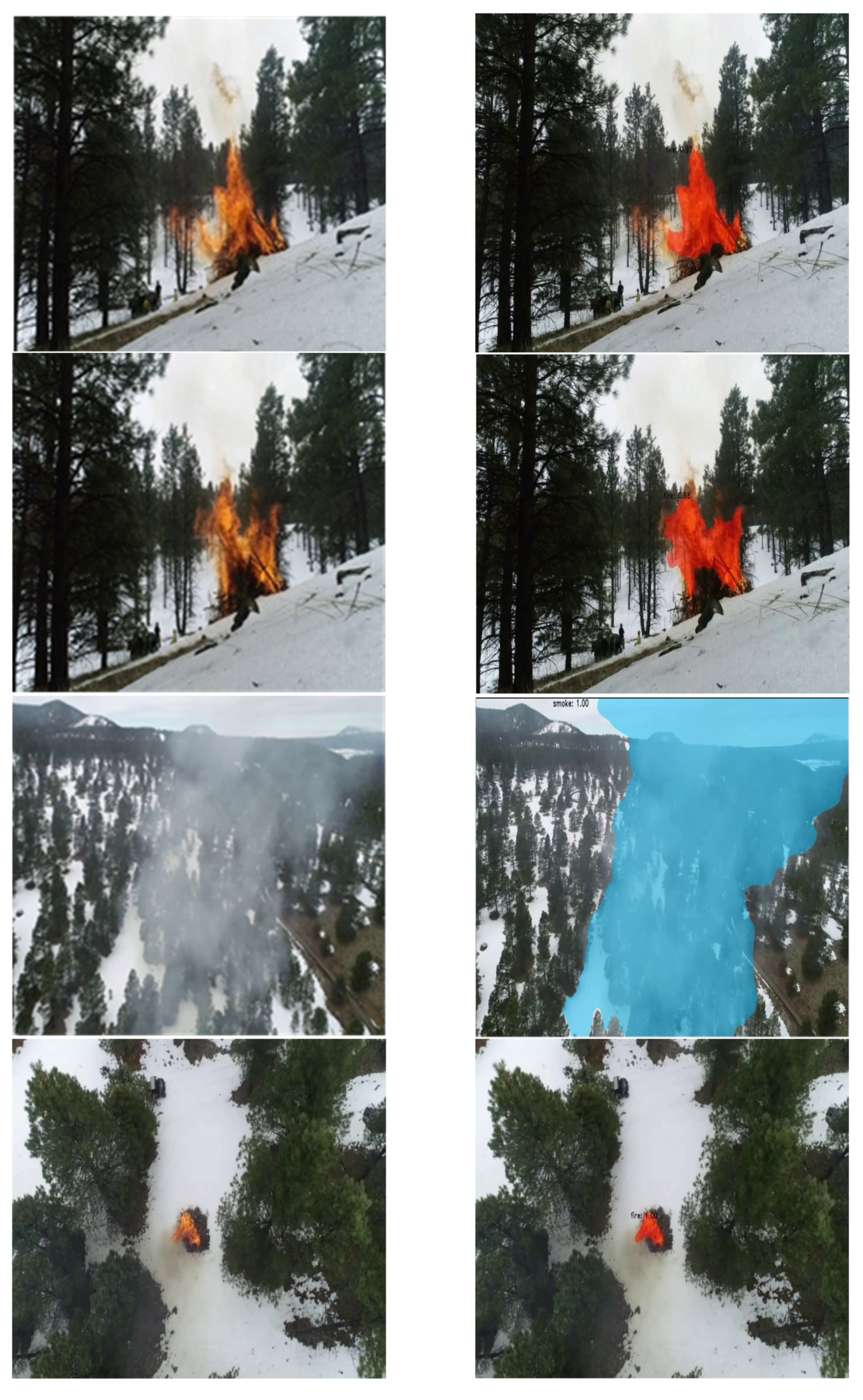

2.1. Traditional and Deep Learning Methods

2.2. UAV-Based Fire Segmentation Methods

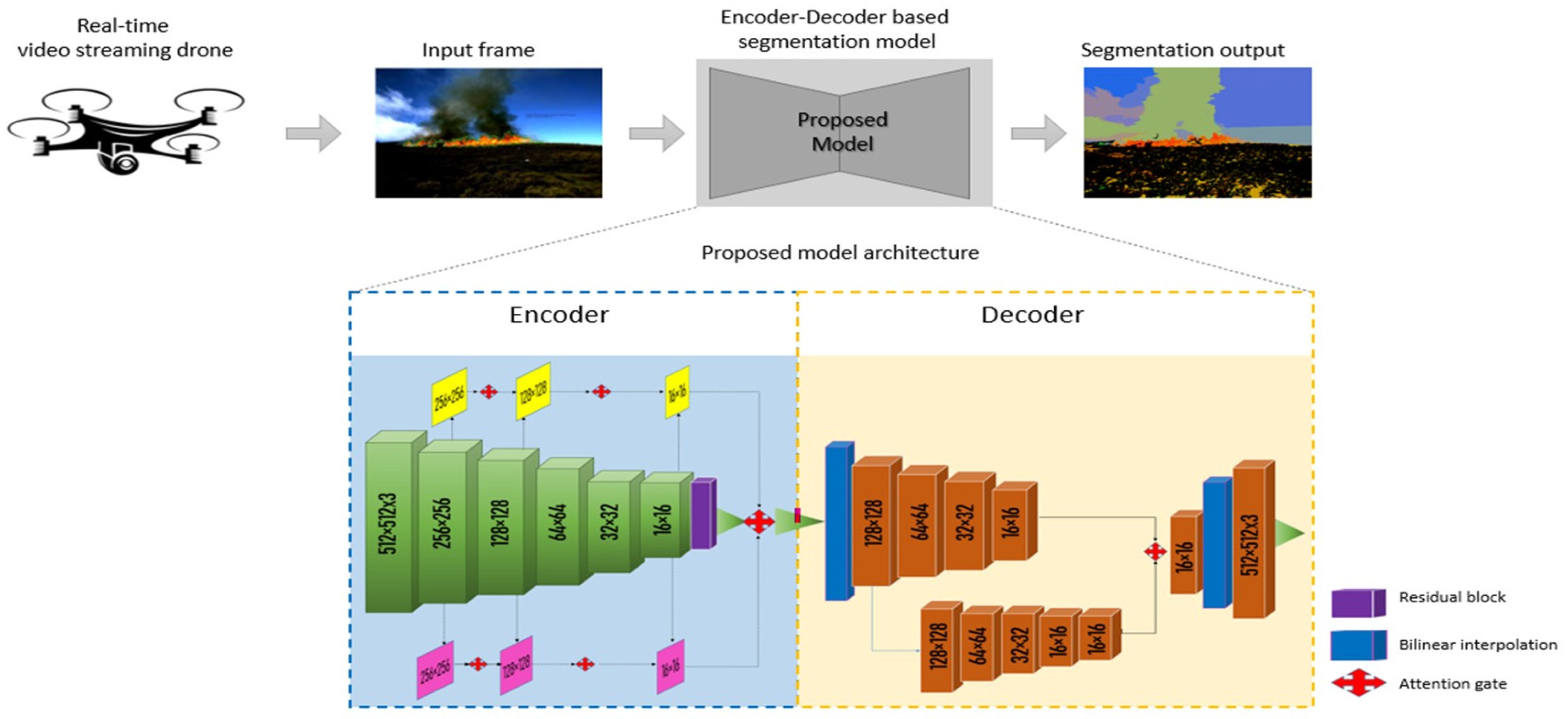

3. Proposed Method

3.1. Feature-Extraction-Network Backbone

3.2. Attention Gate

3.3. Parallel Branches

3.4. Segmentation Network

3.5. Drone

4. Experimental Results

4.1. Implementation Details

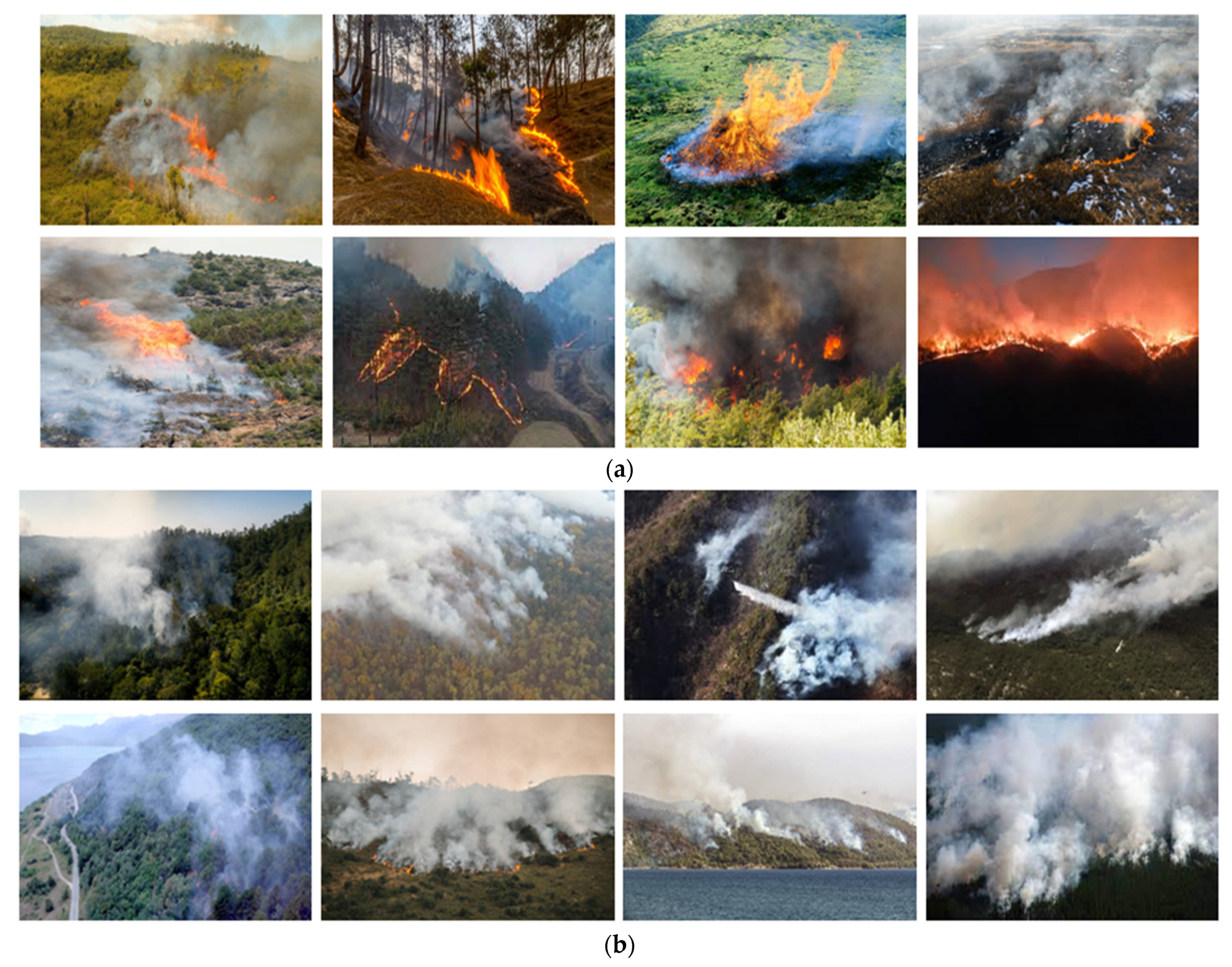

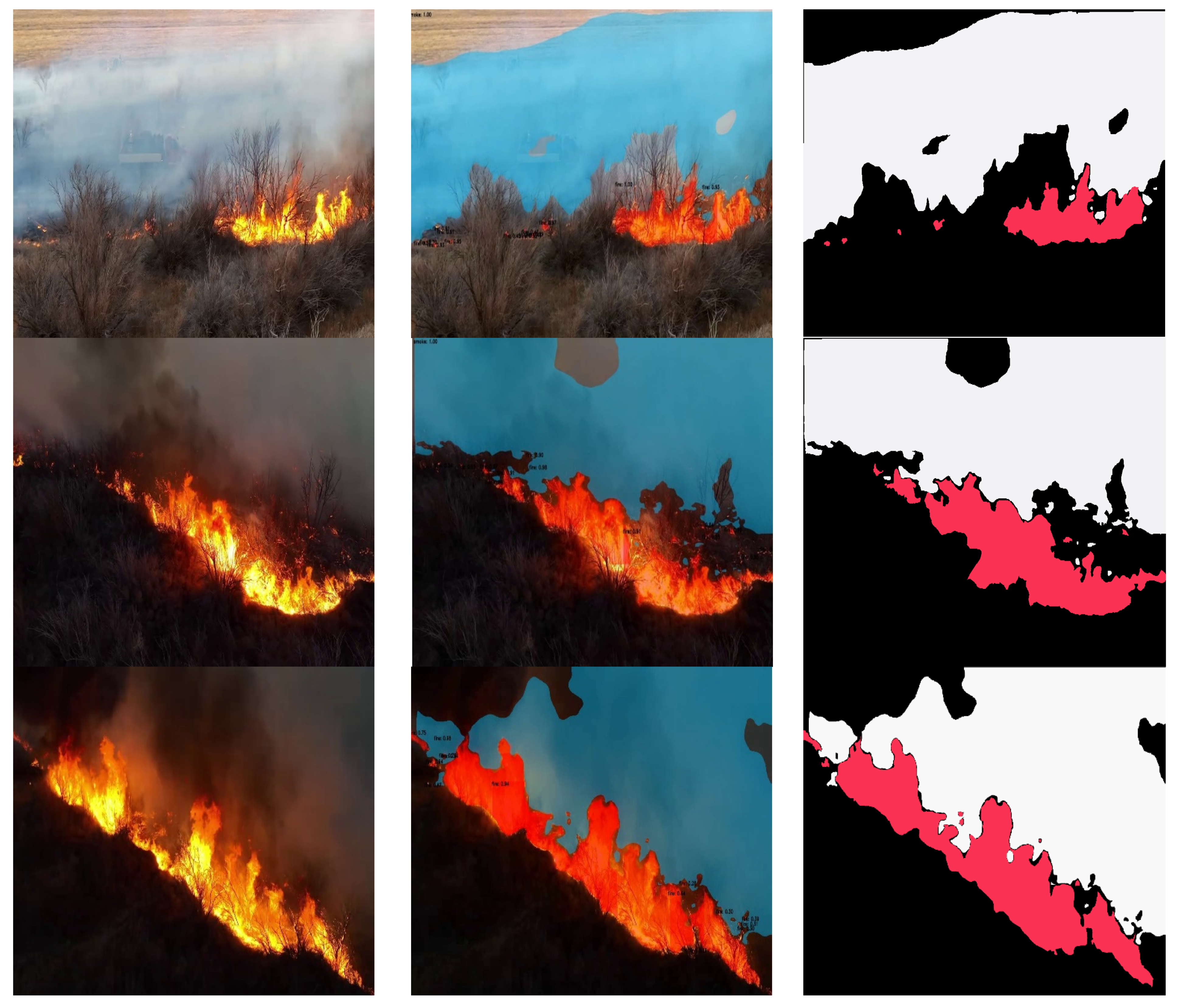

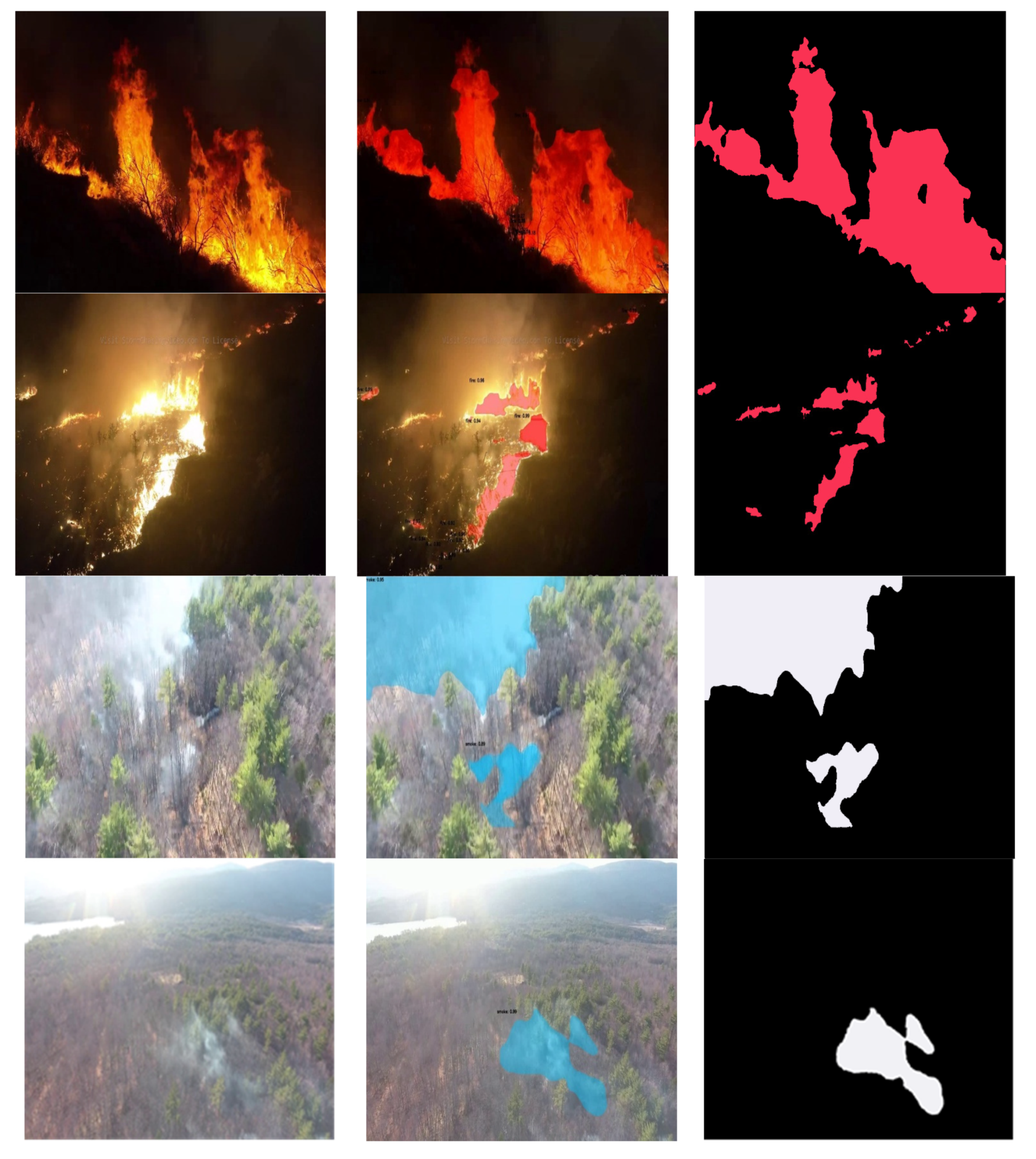

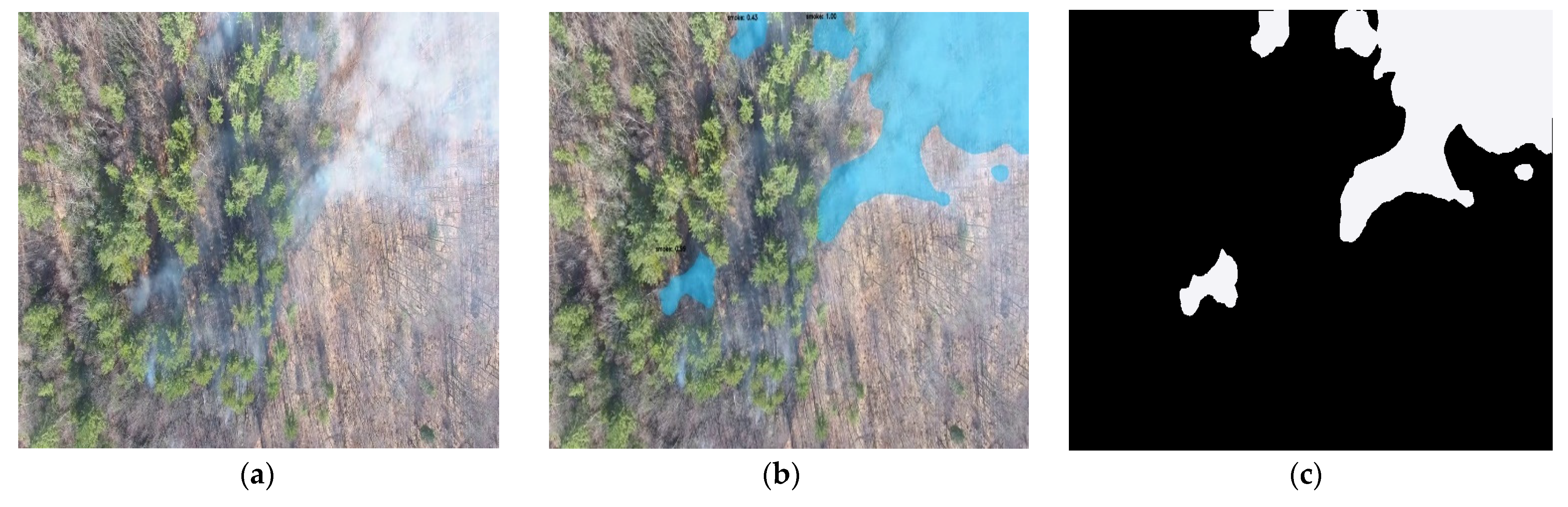

4.2. Datasets

4.3. Training Details

4.4. Process Speediness

4.5. Comparison with State-of-the-Art Methods

4.6. Proposed Model Stability

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AG | attention gate |
| AP | average precision |
| CNN | convolutional neural network |
| CCTV | closed-circuit television |
| DL | deep learning |
| DSC | standard deviation calculator |
| GPU | graphics processing unit |
| FPN | feature pyramid network |
| FPS | frames per second |
| iABN | in-place activated batch normalization |
| ML | machine learning |
| RGB | red, green, and blue |
| RPN | region proposal network |
| SOTA | state of the art |
| TFD | traditional fire detection |
| UAV | unmanned aerial vehicle |

Appendix A

| Image Size | 512 × 512 | 256 × 256 | 128 × 128 | 64 × 64 | 32 × 32 | 28 × 28 |
|---|---|---|---|---|---|---|
| Proposed Work: Average and Std. Dev. | 0.928 ± 0.072 | 0.948 ± 0.070 | 0.911 ± 0.069 | 0.902 ± 0.062 | 0.891 ± 0.087 | 0.890 ± 0.088 |
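Each cell in the Appendix A table pairs an average with a standard deviation for one input size. As a minimal sketch of how such a summary could be produced from per-image scores, the Python below uses the standard `statistics` module. The score values and the helper name `summarize` are hypothetical, and the source does not say whether the population or the sample standard deviation was used, so the population form here is an assumption.

```python
from statistics import mean, pstdev


def summarize(scores):
    """Return (average, population standard deviation) of per-image scores."""
    if not scores:
        raise ValueError("scores must not be empty")
    return mean(scores), pstdev(scores)


# Hypothetical per-image scores for one input size (not taken from the paper).
example_scores = [0.95, 0.90, 0.85, 1.00, 0.80]

avg, std = summarize(example_scores)
# Format in the same "average ± std. dev." style as the table.
print(f"{avg:.3f} ± {std:.3f}")
```

Swapping `pstdev` for `statistics.stdev` would give the sample standard deviation instead, which is slightly larger for small sets of images.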

References

1. Number of Fires, Fire Deaths Fall in 2019. Yonhap News Agency. Available online: https://en.yna.co.kr/view/AEN20200106008000315 (accessed on 6 January 2020).
2. National Interagency Coordination Center Wildland Fire Summary and Statistics Annual Report 2021. Available online: https://www.predictiveservices.nifc.gov/intelligence/2021_statssumm/annual_report_2021.pdf (accessed on 10 July 2022).
3. Zheng, Z.; Hu, Y.; Qiao, Y.; Hu, X.; Huang, Y. Real-Time Detection of Winter Jujubes Based on Improved YOLOX-Nano Network. Remote Sens. 2022, 14, 4833.
4. Umirzakova, S.; Whangbo, T.K. Detailed feature extraction network-based fine-grained face segmentation. Knowl.-Based Syst. 2022, 250, 109036.
5. Unmanned Aerial Vehicles (UAV). Available online: https://www.kari.re.kr/eng/sub03_02.do (accessed on 25 June 2021).
6. Frizzi, S.; Kaabi, R.; Bouchouicha, M.; Ginoux, J.-M.; Moreau, E.; Fnaiech, F. Convolutional neural network for video fire and smoke detection. In Proceedings of the 42nd Annual Conference of the IEEE Industrial Electronics Society (IECON 2016), Florence, Italy, 23–26 October 2016; pp. 877–882.
7. Dzigal, D.; Akagic, A.; Buza, E.; Brdjanin, A.; Dardagan, N. Forest Fire Detection based on Color Spaces Combination. In Proceedings of the 2019 11th International Conference on Electrical and Electronics Engineering (ELECO), Bursa, Turkey, 28–30 November 2019; pp. 595–599.
8. Pan, J.; Ou, X.; Xu, L. A Collaborative Region Detection and Grading Framework for Forest Fire Smoke Using Weakly Supervised Fine Segmentation and Lightweight Faster-RCNN. Forests 2021, 12, 768.
9. Tan, M.; Le, Q.V. EfficientNetV2: Smaller Models and Faster Training. arXiv 2021, arXiv:2104.00298.
10. Applications for Fire Alarms and Fire Safety. Available online: http://www.vent.co.uk/fire-alarms/fire-alarm-applications.php (accessed on 5 January 2019).
11. Wu, Q.; Cao, J.; Zhou, C.; Huang, J.; Li, Z.; Cheng, S.; Cheng, J.; Pan, G. Intelligent Smoke Alarm System with Wireless Sensor Network Using ZigBee. Wirel. Commun. Mob. Comput. 2018, 2018, 8235127.
12. Yadav, R.; Rani, P. Sensor-Based Smart Fire Detection and Fire Alarm System. In Proceedings of the International Conference on Advances in Chemical Engineering (AdChE) 2020, Dehradun, India, 5–7 February 2020.
13. Jobert, G.; Fournier, M.; Barritault, P.; Boutami, S.; Auger, J.; Maillard, A.; Michelot, J.; Lienhard, P.; Nicoletti, S.; Duraffourg, L. A Miniaturized Optical Sensor for Fire Smoke Detection. In Proceedings of the 2019 20th International Conference on Solid-State Sensors, Actuators and Microsystems & Eurosensors XXXIII (TRANSDUCERS & EUROSENSORS XXXIII), Berlin, Germany, 23–27 June 2019; pp. 1144–1149.
14. Chowdhury, N.; Mushfiq, D.R.; Chowdhury, A.E. Computer Vision and Smoke Sensor Based Fire Detection System. In Proceedings of the 2019 1st International Conference on Advances in Science, Engineering and Robotics Technology (ICASERT), Dhaka, Bangladesh, 3–5 May 2019; pp. 1–5.
15. Xu, G.; Zhang, Y.; Zhang, Q.; Lin, G.; Wang, Z.; Jia, Y.; Wang, J. Video smoke detection based on deep saliency network. Fire Saf. J. 2019, 105, 277–285.
16. Muksimova, S.; Umirzakova, S.; Mardieva, S.; Cho, Y.I. Novel Video Surveillance-Based Fire and Smoke Classification Using Attentional Feature Map in Capsule Networks. Sensors 2022, 22, 98.
17. Liu, R.; Tao, F.; Liu, X.; Na, J.; Leng, H.; Wu, J.; Zhou, T. RAANet: A Residual ASPP with Attention Framework for Semantic Segmentation of High-Resolution Remote Sensing Images. Remote Sens. 2022, 14, 3109.
18. Zhang, X.; Li, L.; Di, D.; Wang, J.; Chen, G.; Jing, W.; Emam, M. SERNet: Squeeze and Excitation Residual Network for Semantic Segmentation of High-Resolution Remote Sensing Images. Remote Sens. 2022, 14, 4770.
19. Xu, Y.; Luo, W.; Hu, A.; Xie, Z.; Xie, X.; Tao, L. TE-SAGAN: An Improved Generative Adversarial Network for Remote Sensing Super-Resolution Images. Remote Sens. 2022, 14, 2425.
20. Benjdira, B.; Bazi, Y.; Koubaa, A.; Ouni, K. Unsupervised domain adaptation using generative adversarial networks for semantic segmentation of aerial images. Remote Sens. 2019, 11, 1369.
21. Zhang, Q.; Zhang, J.; Liu, W.; Tao, D. Category anchor-guided unsupervised domain adaptation for semantic segmentation. Adv. Neural Inf. Process. Syst. 2019, 32.
22. Stan, S.; Rostami, M. Unsupervised model adaptation for continual semantic segmentation. In Proceedings of the AAAI Conference on Artificial Intelligence 2021, Virtually, 2–9 February 2021; Volume 35, pp. 2593–2601.
23. Pan, F.; Shin, I.; Rameau, F.; Lee, S.; Kweon, I.S. Unsupervised intra-domain adaptation for semantic segmentation through self-supervision. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 3764–3773.
24. Cai, Y.; Yang, Y.; Zheng, Q.; Shen, Z.; Shang, Y.; Yin, J.; Shi, Z. BiFDANet: Unsupervised Bidirectional Domain Adaptation for Semantic Segmentation of Remote Sensing Images. Remote Sens. 2022, 14, 190.
25. Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; Springer International Publishing: Cham, Switzerland, 2015; pp. 234–241.
26. Shamsoshoara, A.; Afghah, F.; Razi, A.; Zheng, L.; Fulé, P.Z.; Blasch, E. Aerial imagery pile burn detection using deep learning: FLAME Dataset. Comput. Netw. 2021, 193, 108001.
27. Frizzi, S.; Bouchouicha, M.; Ginoux, J.M.; Moreau, E.; Sayadi, M. Convolutional neural network for smoke and fire semantic segmentation. IET Image Process. 2021, 15, 634–647.
28. Barmpoutis, P.; Stathaki, T.; Dimitropoulos, K.; Grammalidis, N. Early Fire Detection Based on Aerial 360-Degree Sensors, Deep Convolution Neural Networks and Exploitation of Fire Dynamic Textures. Remote Sens. 2020, 12, 3177.
29. Chen, L.C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 801–818.
30. Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018.
31. Rota Bulò, S.; Porzi, L.; Kontschieder, P. In-Place Activated BatchNorm for Memory-Optimized Training of DNNs. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018.
32. Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.
33. Luong, M.-T.; Pham, H.; Manning, C.D. Effective approaches to attention-based neural machine translation. arXiv 2015, arXiv:1508.04025.
34. Bahdanau, D.; Cho, K.; Bengio, Y. Neural machine translation by jointly learning to align and translate. arXiv 2014, arXiv:1409.0473.
35. Oktay, O.; Schlemper, J.; Folgoc, L.L.; Lee, M.; Heinrich, M.; Misawa, K.; Mori, K.; McDonagh, S.; Hammerla, N.Y.; Kainz, B.; et al. Attention U-Net: Learning where to look for the pancreas. arXiv 2018, arXiv:1804.03999.
36. He, K.; Zhang, X.; Ren, S.; Sun, J. Identity mappings in deep residual networks. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 630–645.
37. Maas, A.L.; Hannun, A.Y.; Ng, A.Y. Rectifier nonlinearities improve neural network acoustic models. In Proceedings of the ICML, Atlanta, GA, USA, 16–21 June 2013; p. 3.
38. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.
39. Liu, W.; Rabinovich, A.; Berg, A.C. ParseNet: Looking Wider to See Better. arXiv 2015, arXiv:1506.04579.
40. DJI Mavic 3. Available online: https://www.dji.com/kr/mavic-3 (accessed on 28 June 2022).
41. Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; et al. PyTorch: An Imperative Style, High-Performance Deep Learning Library. In Proceedings of the 33rd Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019.
42. Kingma, D.P.; Ba, J.L. Adam: A Method for Stochastic Optimization. arXiv 2015, arXiv:1412.6980.
43. Wu, D.; Zhang, C.J.; Ji, L.; Ran, R.; Wu, H.Y.; Xu, Y.M. Forest fire recognition based on feature extraction from multi-view images. Traitement Du Signal 2021, 38, 775–783.
44. Xavier-Initialization. Available online: https://mnsgrg.com/2017/12/21/xavier-initialization/ (accessed on 21 December 2017).
45. Wang, Y.; Luo, B.; Shen, J.; Pantic, M. Face mask extraction in video sequence. Int. J. Comput. Vis. 2019, 127, 625–641.
46. Bolya, D.; Zhou, C.; Xiao, F.; Lee, Y.J. YOLACT: Real-time instance segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9157–9166.
47. Wang, X.; Kong, T.; Shen, C.; Jiang, Y.; Li, L. SOLO: Segmenting Objects by Locations. 2019. Available online: https://link.springer.com/chapter/10.1007/978-3-030-58523-5_38 (accessed on 4 December 2020).
48. Wang, X.; Zhang, R.; Kong, T.; Li, L.; Shen, C. SOLOv2: Dynamic, Faster and Stronger. 2020. Available online: https://deepai.org/publication/solov2-dynamic-faster-and-stronger (accessed on 23 March 2020).
49. Chen, H.; Sun, K.; Tian, Z.; Shen, C.; Huang, Y.; Yan, Y. BlendMask: Top-down meets bottom-up for instance segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 8573–8581.
50. Fu, C.-Y.; Shvets, M.; Berg, A.C. RetinaMask: Learning to predict masks improves state-of-the-art single-shot detection for free. arXiv 2019, arXiv:1901.03353.
51. Li, Y.; Qi, H.; Dai, J.; Ji, X.; Wei, Y. Fully convolutional instance-aware semantic segmentation. In Proceedings of the CVPR 2017, Honolulu, HI, USA, 21–26 July 2017.
52. Huang, Z.; Huang, L.; Gong, Y.; Huang, C.; Wang, X. Mask scoring R-CNN. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 6409–6418.
53. Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path aggregation network for instance segmentation. In Proceedings of the CVPR, Salt Lake City, UT, USA, 18–22 June 2018.
54. Bolya, D.; Zhou, C.; Xiao, F.; Lee, Y.J. YOLACT++: Better real-time instance segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2020.

| UAV platform parameter | Specification |
|---|---|
| Max. flight time | 46 min |
| Takeoff weight | 895 g |
| Battery | Lithium-ion polymer (LiPo) battery: 5000 mAh/77 Wh |
| Camera | Hasselblad L2D-20C |
| Sensor | 4/3″ CMOS sensor |
| Image size | 5280 × 3956, 20 MP |
| Focal length | 24 mm |
| f-number | f/2.8 to f/11 |
| Video format | 5120 × 2700 p |
| Image format | JPEG/DNG |

| Method | Backbone | AP (mask) | FPS | Time (ms) |
|---|---|---|---|---|
| FCIS w/o mask voting | EfficientNet | 27.8 | 9.5 | 105.3 |
| Mask R-CNN (550 × 550) | EfficientNet | 32.2 | 13.5 | 73.9 |
| FC-mask [45] | EfficientNet | 20.7 | 25.7 | 38.9 |
| YOLACT-550 [46] | EfficientNet | 29.9 | 33.0 | 42.1 |
| SOLOv2 | EfficientNet | 38.8 | 31.3 | 42.1 |
| Proposed method | EfficientNet | 40.01 | 33.9 | 24.0 |

| Method | Backbone | Time (ms) | FPS | AP (mask) | AP50 (mask) | AP75 (mask) | AP_S (mask) | AP_M (mask) | AP_L (mask) |
|---|---|---|---|---|---|---|---|---|---|
| SOLOv1 [47] | Res-101-FPN | 43.2 | 10.4 | 37.8 | 59.5 | 40.4 | 16.4 | 40.6 | 54.2 |
| SOLOv2 [48] | Res-101-FPN | 42.1 | 31.3 | 38.8 | 59.9 | 41.7 | 16.5 | 41.7 | 56.2 |
| BlendMask [49] | Res-101-FPN | 72.5 | 25 | 38.4 | 60.7 | 41.3 | 18.2 | 41.2 | 53.3 |
| RetinaMask [50] | Res-101-FPN | 166.7 | 6.0 | 34.7 | 55.4 | 36.9 | 14.3 | 36.7 | 50.5 |
| FCIS [51] | Res-101-C5 | 151.5 | 6.7 | 29.5 | 51.5 | 30.2 | 8.0 | 31.0 | 49.7 |
| MS R-CNN [52] | Res-101-FPN | 116.3 | 8.6 | 38.3 | 58.8 | 41.5 | 17.8 | 40.4 | 54.4 |
| YOLACT-550 [46] | Res-101-FPN | 29.8 | 33.5 | 29.8 | 48.5 | 31.2 | 9.9 | 31.3 | 47.7 |
| Mask R-CNN [38] | Res-101-FPN | 116.3 | 8.6 | 35.7 | 58.0 | 37.8 | 15.5 | 38.1 | 52.4 |
| PA-Net [53] | Res-101-FPN | 212.8 | 4.7 | 36.6 | 58.0 | 39.3 | 16.3 | 38.1 | 53.1 |
| YOLACT++ [54] | Res-101-FPN | 36.7 | 27.3 | 34.6 | 53.8 | 36.9 | 11.9 | 36.8 | 55.1 |
| Proposed method | Res-101-FPN | 26.2 | 33.9 | 39.4 | 63.2 | 40.5 | 16.3 | 42.8 | 56.1 |
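The AP50 and AP75 columns in the comparison above are usually read, in the COCO-style convention, as average precision when a predicted mask counts as correct only if its intersection over union (IoU) with the ground-truth mask is at least 0.50 or 0.75. The source does not state its evaluation protocol, so that reading is an assumption. The sketch below shows only the IoU test for one pair of binary masks; the function names `mask_iou` and `is_match` and the toy 4 × 4 masks are hypothetical, and a full AP computation would also rank predictions by confidence and average precision over recall levels.

```python
def mask_iou(pred, truth):
    """Intersection over union of two equally sized binary masks (lists of rows)."""
    inter = 0
    union = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                inter += 1
            if p or t:
                union += 1
    # Two empty masks have no union; treat their IoU as 0 by convention here.
    return inter / union if union else 0.0


def is_match(pred, truth, threshold):
    """True when the predicted mask overlaps the ground truth at or above threshold."""
    return mask_iou(pred, truth) >= threshold


# Hypothetical toy masks: the ground truth covers 6 pixels, the prediction 4 of them.
truth = [
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
pred = [
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
    [0, 0, 0, 0],
]

iou = mask_iou(pred, truth)          # 4 shared pixels / 6 pixels in the union
match_at_50 = is_match(pred, truth, 0.50)
match_at_75 = is_match(pred, truth, 0.75)
print(f"IoU={iou:.3f} AP50 match={match_at_50} AP75 match={match_at_75}")
```

In this toy case the prediction would count toward AP50 but not toward AP75, which is why the stricter column is lower for every method in the table.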

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Muksimova, S.; Mardieva, S.; Cho, Y.-I. Deep Encoder–Decoder Network-Based Wildfire Segmentation Using Drone Images in Real-Time. Remote Sens. 2022, 14, 6302. https://doi.org/10.3390/rs14246302
