Using Very-High-Resolution Multispectral Classification to Estimate Savanna Fractional Vegetation Components

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Area and Permissions

2.2. Field Data and UAS-Derived Canopy Height Model

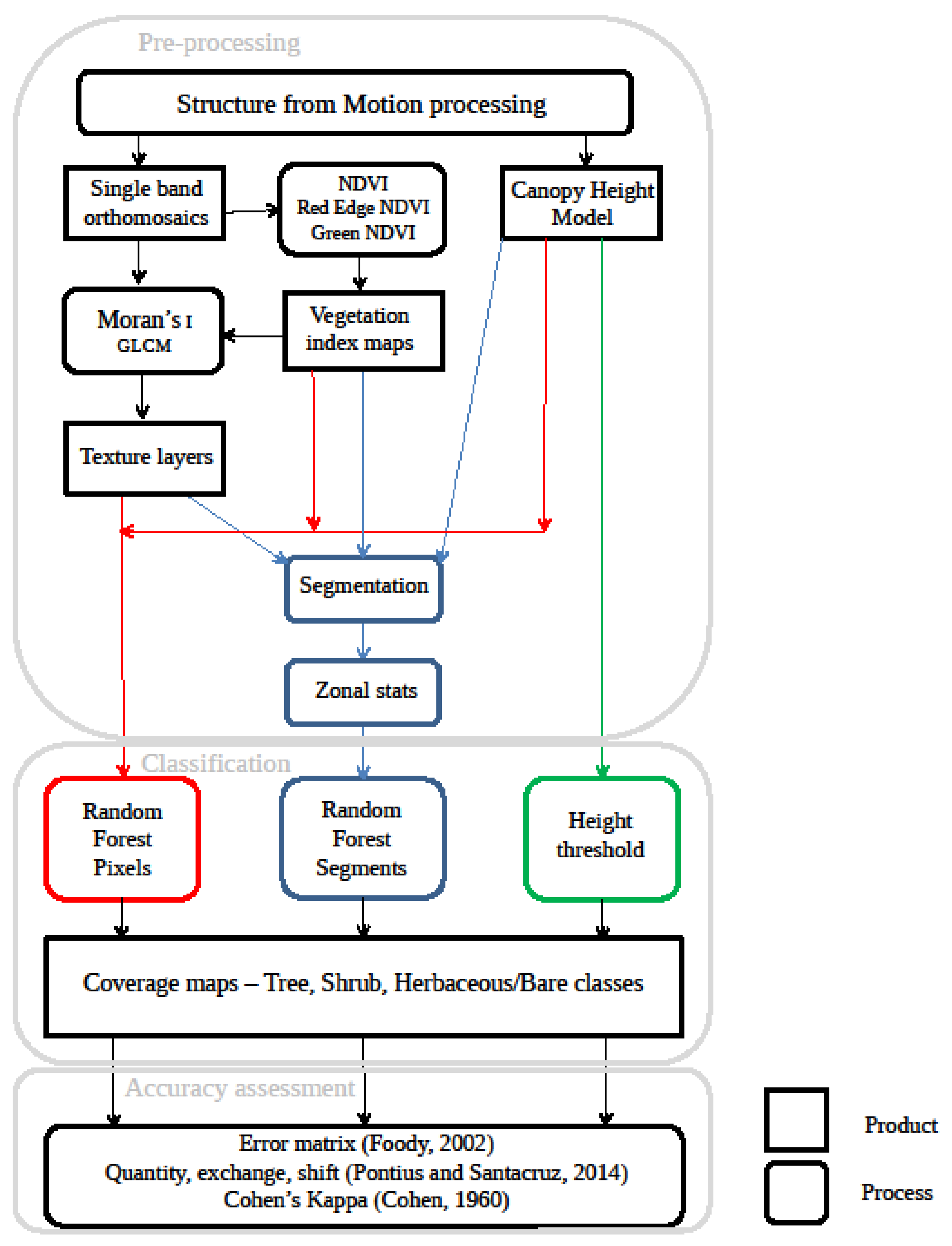

2.3. Data Processing Overview

2.4. Segmentation Units/Parameters

2.5. Analytical Framework

2.6. Technique Agreement Measures

3. Results

3.1. Random Forest Models

3.2. Classification Approach—Accuracy Assessment

3.3. Site Type Characterization

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Cherlet, M.; Hutchinson, C.; Reynolds, J.; Hill, J.; Sommer, S.; von Maltitz, G. World Atlas of Desertification: Rethinking Land Degradation and Sustainable Land Management; Publications Office of the European Commission: Luxembourg, 2018.

- Feng, S.; Fu, Q. Expansion of global drylands under a warming climate. Atmos. Chem. Phys. 2013, 13, 10081–10094.

- Holdridge, L.R. Determination of world plant formations from simple climatic data. Science 1947, 105, 367–368.

- Touboul, J.D.; Staver, A.C.; Levin, S.A. On the complex dynamics of savanna landscapes. Proc. Natl. Acad. Sci. USA 2018, 115, E1336–E1345.

- Archer, S.R.; Andersen, E.M.; Predick, K.I.; Schwinning, S.; Steidl, R.J.; Woods, S.R. Woody plant encroachment: Causes and consequences. In Rangeland Systems: Processes, Management and Challenges; Briske, D.D., Ed.; Springer Series on Environmental Management; Springer International Publishing: Cham, Switzerland, 2017; pp. 25–84. ISBN 978-3-319-46709-2.

- Liu, J.; Dietz, T.; Carpenter, S.R.; Alberti, M.; Folke, C.; Moran, E.; Pell, A.N.; Deadman, P.; Kratz, T.; Lubchenco, J.; et al. Complexity of coupled human and natural systems. Science 2007, 317, 1513–1516.

- Sala, O.E.; Maestre, F.T. Grass–woodland transitions: Determinants and consequences for ecosystem functioning and provisioning of services. J. Ecol. 2014, 102, 1357–1362.

- Gamon, J.A.; Qiu, H.-L.; Sanchez-Azofeifa, A. Ecological applications of remote sensing at multiple scales. In Functional Plant Ecology; CRC Press: Boca Raton, FL, USA, 2007; ISBN 978-0-429-12247-7.

- Whiteside, T.G.; Boggs, G.S.; Maier, S.W. Comparing object-based and pixel-based classifications for mapping savannas. Int. J. Appl. Earth Obs. Geoinf. 2011, 13, 884–893.

- Mishra, N.B.; Crews, K.A.; Okin, G.S. Relating spatial patterns of fractional land cover to savanna vegetation morphology using multi-scale remote sensing in the central Kalahari. Int. J. Remote Sens. 2014, 35, 2082–2104.

- Belgiu, M.; Drăguţ, L. Random forest in remote sensing: A review of applications and future directions. ISPRS J. Photogramm. Remote Sens. 2016, 114, 24–31.

- Brandt, M.; Tucker, C.J.; Kariryaa, A.; Rasmussen, K.; Abel, C.; Small, J.; Chave, J.; Rasmussen, L.V.; Hiernaux, P.; Diouf, A.A.; et al. An unexpectedly large count of trees in the West African Sahara and Sahel. Nature 2020, 587, 78–82.

- Riva, F.; Nielsen, S.E. A functional perspective on the analysis of land use and land cover data in ecology. Ambio 2021, 50, 1089–1100.

- Venter, Z.S.; Cramer, M.D.; Hawkins, H.-J. Drivers of woody plant encroachment over Africa. Nat. Commun. 2018, 9, 2272.

- Asner, G.; Levick, S.; Smit, I. Remote sensing of fractional cover and biochemistry in savannas. In Ecosystem Function in Savannas; Hill, M.J., Hanan, N.P., Eds.; CRC Press: Boca Raton, FL, USA, 2010; pp. 195–218. ISBN 978-1-4398-0470-4.

- Chadwick, K.D.; Asner, G.P. Organismic-scale remote sensing of canopy foliar traits in lowland tropical forests. Remote Sens. 2016, 8, 87.

- Staver, A.C.; Archibald, S.; Levin, S. Tree cover in Sub-Saharan Africa: Rainfall and fire constrain forest and savanna as alternative stable states. Ecology 2011, 92, 1063–1072.

- Scholes, R.J.; Dowty, P.R.; Caylor, K.; Parsons, D.A.B.; Frost, P.G.H.; Shugart, H.H. Trends in savanna structure and composition along an aridity gradient in the Kalahari. J. Veg. Sci. 2002, 13, 419–428.

- Chadwick, K.D.; Brodrick, P.G.; Grant, K.; Goulden, T.; Henderson, A.; Falco, N.; Wainwright, H.; Williams, K.H.; Bill, M.; Breckheimer, I.; et al. Integrating airborne remote sensing and field campaigns for ecology and earth system science. Methods Ecol. Evol. 2020, 11, 1492–1508.

- Munyati, C.; Economon, E.B.; Malahlela, O.E. Effect of canopy cover and canopy background variables on spectral profiles of savanna rangeland bush encroachment species based on selected Acacia species (mellifera, tortilis, karroo) and Dichrostachys cinerea at Mokopane, South Africa. J. Arid Environ. 2013, 94, 121–126.

- Anderson, K.; Gaston, K. Lightweight unoccupied aerial vehicles will revolutionize spatial ecology. Front. Ecol. Environ. 2013, 11, 138–146.

- Hardin, P.J.; Lulla, V.; Jensen, R.R.; Jensen, J.R. Small unoccupied aerial systems (SUAS) for environmental remote sensing: Challenges and opportunities revisited. GISci. Remote Sens. 2019, 56, 309–322.

- Melville, B.; Lucieer, A.; Aryal, J. Classification of lowland native grassland communities using hyperspectral unoccupied aircraft system (UAS) imagery in the Tasmanian Midlands. Drones 2019, 3, 5.

- Räsänen, A.; Virtanen, T. Data and resolution requirements in mapping vegetation in spatially heterogeneous landscapes. Remote Sens. Environ. 2019, 230, 111207.

- Smith, M.W.; Carrivick, J.L.; Quincey, D.J. Structure from motion photogrammetry in physical geography. Prog. Phys. Geogr. Earth Environ. 2016, 40, 247–275.

- Kedia, A.C.; Kapos, B.; Liao, S.; Draper, J.; Eddinger, J.; Updike, C.; Frazier, A.E. An integrated spectral–structural workflow for invasive vegetation mapping in an arid region using drones. Drones 2021, 5, 19.

- Gränzig, T.; Fassnacht, F.E.; Kleinschmit, B.; Förster, M. Mapping the fractional coverage of the invasive shrub Ulex europaeus with multi-temporal Sentinel-2 imagery utilizing UAV orthoimages and a new spatial optimization approach. Int. J. Appl. Earth Obs. Geoinf. 2021, 96, 102281.

- Xu, Z.; Shen, X.; Cao, L.; Coops, N.C.; Goodbody, T.R.H.; Zhong, T.; Zhao, W.; Sun, Q.; Ba, S.; Zhang, Z.; et al. Tree species classification using UAS-based digital aerial photogrammetry point clouds and multispectral imageries in subtropical natural forests. Int. J. Appl. Earth Obs. Geoinf. 2020, 92, 102173.

- Stehman, S.V.; Wickham, J.D. Pixels, blocks of pixels, and polygons: Choosing a spatial unit for thematic accuracy assessment. Remote Sens. Environ. 2011, 115, 3044–3055.

- Ye, S.; Pontius, R.G.; Rakshit, R. A review of accuracy assessment for object-based image analysis: From per-pixel to per-polygon approaches. ISPRS J. Photogramm. Remote Sens. 2018, 141, 137–147.

- Bruzzone, L.; Demir, B. A review of modern approaches to classification of remote sensing data. In Land Use and Land Cover Mapping in Europe: Practices & Trends; Manakos, I., Braun, M., Eds.; Remote Sensing and Digital Image Processing; Springer: Dordrecht, The Netherlands, 2014; pp. 127–143. ISBN 978-94-007-7969-3.

- Maxwell, A.E.; Warner, T.A.; Fang, F. Implementation of machine-learning classification in remote sensing: An applied review. Int. J. Remote Sens. 2018, 39, 2784–2817.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Jawak, S.D.; Devliyal, P.; Luis, A.J. A comprehensive review on pixel oriented and object oriented methods for information extraction from remotely sensed satellite images with a special emphasis on cryospheric applications. Adv. Remote Sens. 2015, 4, 177–195.

- Chenari, A.; Erfanifard, Y.; Dehghani, M.; Pourghasemi, H.R. Woodland mapping and single-tree levels using object-oriented classification of unoccupied aerial vehicle (UAV) images. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, 42, 43–49.

- Blaschke, T. Object based image analysis for remote sensing. ISPRS J. Photogramm. Remote Sens. 2010, 65, 2–16.

- Kolarik, N.E.; Gaughan, A.E.; Stevens, F.R.; Pricope, N.G.; Woodward, K.; Cassidy, L.; Salerno, J.; Hartter, J. A multi-plot assessment of vegetation structure using a micro-unoccupied aerial system (UAS) in a semi-arid savanna environment. ISPRS J. Photogramm. Remote Sens. 2020, 164, 84–96.

- Salerno, J.; Cassidy, L.; Drake, M.; Hartter, J. Living in an Elephant Landscape: The local communities most affected by wildlife conservation often have little say in how it is carried out, even when policy incentives are intended to encourage their support. Am. Sci. 2018, 106, 34–41.

- Gaughan, A.; Waylen, P. Spatial and temporal precipitation variability in the Okavango-Kwando-Zambezi catchment, Southern Africa. J. Arid Environ. 2012, 82, 19–30.

- Pricope, N.G.; Gaughan, A.E.; All, J.D.; Binford, M.W.; Rutina, L.P. Spatio-temporal analysis of vegetation dynamics in relation to shifting inundation and fire regimes: Disentangling environmental variability from land management decisions in a Southern African transboundary watershed. Land 2015, 4, 627–655.

- Elliott, K.C.; Montgomery, R.; Resnik, D.B.; Goodwin, R.; Mudumba, T.; Booth, J.; Whyte, K. Drone use for environmental research [Perspectives]. IEEE Geosci. Remote Sens. Mag. 2019, 7, 106–111.

- Pix4D. Pix4Dmapper 4.1 User Manual. Available online: https://support.pix4d.com/hc/en-us/articles/204272989-Offline-Getting-Started-and-Manual-pdf (accessed on 7 July 2021).

- Pricope, N.G.; Mapes, K.L.; Woodward, K.D.; Olsen, S.F.; Baxley, J.B. Multi-sensor assessment of the effects of varying processing parameters on UAS product accuracy and quality. Drones 2019, 3, 63.

- R Core Team. R: A Language and Environment for Statistical Computing; R Foundation for Statistical Computing: Vienna, Austria, 2020.

- Su, W.; Li, J.; Chen, Y.; Liu, Z.; Zhang, J.; Low, T.M.; Suppiah, I.; Hashim, S.A.M. Textural and local spatial statistics for the object-oriented classification of urban areas using high resolution imagery. Int. J. Remote Sens. 2008, 29, 3105–3117.

- Farwell, L.S.; Gudex-Cross, D.; Anise, I.E.; Bosch, M.J.; Olah, A.M.; Radeloff, V.C.; Razenkova, E.; Rogova, N.; Silveira, E.M.O.; Smith, M.M.; et al. Satellite image texture captures vegetation heterogeneity and explains patterns of bird richness. Remote Sens. Environ. 2021, 253, 112175.

- Liu, D.; Xia, F. Assessing object-based classification: Advantages and limitations. Remote Sens. Lett. 2010, 1, 187–194.

- Breiman, L. Bagging predictors. Mach. Learn. 1996, 24, 123–140.

- Liaw, A.; Wiener, M. Classification and regression by randomForest. R News 2002, 2, 5.

- Fisher, J.T. Savanna woody vegetation classification—Now in 3-D. Appl. Veg. Sci. 2014, 17, 172–184.

- Pontius, R.; Santacruz, A. Quantity, exchange, and shift components of difference in a square contingency table. Int. J. Remote Sens. 2014, 35, 7543–7554.

- Foody, G.M. Status of land cover classification accuracy assessment. Remote Sens. Environ. 2002, 80, 185–201.

- Cohen, J. A coefficient of agreement for nominal scales. Educ. Psychol. Meas. 1960, 20, 37–46.

- Story, M.; Congalton, R.G. Accuracy assessment: A user’s perspective. Photogramm. Eng. Remote Sens. 1986, 52, 397–399.

- Smith, W.K.; Dannenberg, M.P.; Yan, D.; Herrmann, S.; Barnes, M.L.; Barron-Gafford, G.A.; Biederman, J.A.; Ferrenberg, S.; Fox, A.M.; Hudson, A.; et al. Remote sensing of dryland ecosystem structure and function: Progress, challenges, and opportunities. Remote Sens. Environ. 2019, 233, 111401.

- Lu, B.; He, Y. Species classification using unoccupied aerial vehicle (UAV)-acquired high spatial resolution imagery in a heterogeneous grassland. ISPRS J. Photogramm. Remote Sens. 2017, 128, 73–85.

- Melville, B.; Fisher, A.; Lucieer, A. Ultra-high spatial resolution fractional vegetation cover from unoccupied aerial multispectral imagery. Int. J. Appl. Earth Obs. Geoinf. 2019, 78, 14–24.

- Pádua, L.; Guimarães, N.; Adão, T.; Marques, P.; Peres, E.; Sousa, A.; Sousa, J.J. Classification of an agrosilvopastoral system using RGB imagery from an unoccupied aerial vehicle. In Progress in Artificial Intelligence; Moura Oliveira, P., Novais, P., Reis, L.P., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2019; Volume 11804, pp. 248–257. ISBN 978-3-030-30240-5.

- Weiss, G.M.; Provost, F. The effect of class distribution on classifier learning. In Technical Report ML-TR-43; Department of Computer Science, Rutgers University, 2001; Available online: https://storm.cis.fordham.edu/~gweiss/papers/ml-tr-44.pdf (accessed on 22 December 2021).

- Baldi, P.; Brunak, S.; Chauvin, Y.; Andersen, C.A.F.; Nielsen, H. Assessing the accuracy of prediction algorithms for classification: An overview. Bioinformatics 2000, 16, 412–424.

- Scholtz, R.; Kiker, G.A.; Smit, I.P.J.; Venter, F.J. Identifying drivers that influence the spatial distribution of woody vegetation in Kruger National Park, South Africa. Ecosphere 2014, 5, 1–12.

| Type | Description | References |
|---|---|---|
| Error matrix | Cross tabulation of land-cover classes in an n × n array. Columns represent the reference data; rows represent the mapped land-cover class. | [51] |
| Omission | Number of reference points of a given class that are missing from that class in the mapped output (mapped to a different class). | [54] |
| Commission | Number of reference points mapped to a given land-cover class that belong to a different class in the reference data. | [54] |
| Agreement | Total number of correctly classified reference points in the final mapped output. | [54] |
| Quantity | Amount of absolute difference between the reference map and a comparison map due to a less-than-perfect match in the proportions of the categories. | [51] |
| Exchange | Difference that occurs as a one-to-one swap between two categories. It does not reflect quantity differences between the classes, but rather a spatial mismatch. | [51] |
| Shift | Disagreement left over after subtracting quantity and exchange differences from the total. It arises from exchanges occurring among more than two map classes. | [51] |
| Cohen’s kappa (unweighted) | Measure of agreement between a land-cover map and a set of reference points, corrected for chance agreement. | [53] |
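The measures defined above can be illustrated with a short calculation. The following is a minimal sketch, not the authors' code: it assumes rows are mapped classes and columns are reference classes (as stated for the error matrix), uses the textbook formula for unweighted Cohen's kappa, and takes the P-RF error matrix reported in this article as input. The function name `agreement_measures` is hypothetical.

```python
import numpy as np


def agreement_measures(error_matrix):
    """Per-class omission, agreement, commission and unweighted Cohen's kappa.

    Assumption: rows are mapped classes, columns are reference classes.
    """
    m = np.asarray(error_matrix, dtype=float)
    total = m.sum()
    agreement = np.diag(m)                    # correctly classified points
    omission = m.sum(axis=0) - agreement      # reference points missed by the map
    commission = m.sum(axis=1) - agreement    # points wrongly mapped to the class
    p_observed = agreement.sum() / total
    # Chance agreement from the products of the marginal proportions.
    p_expected = (m.sum(axis=0) * m.sum(axis=1)).sum() / total**2
    kappa = (p_observed - p_expected) / (1.0 - p_expected)
    return {
        "omission": omission,
        "agreement": agreement,
        "commission": commission,
        "kappa": kappa,
    }


# P-RF error matrix as reported in this article (classes: Other, Shrub, Tree).
p_rf = [[42, 6, 0], [2, 35, 5], [0, 3, 39]]
result = agreement_measures(p_rf)
print(result["omission"], result["commission"], round(float(result["kappa"]), 4))
```

For the P-RF matrix this reproduces the omission counts (2, 9, 5), the commission counts (6, 7, 3) and the kappa value of 0.8182 listed in the results tables.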

(a) Pixel-Based RF (P-RF)

Error Matrix Unweighted

| | Other | Shrub | Tree |
|---|---|---|---|
| Other | 42 | 6 | 0 |
| Shrub | 2 | 35 | 5 |
| Tree | 0 | 3 | 39 |

Kappa Unweighted

| Value | ASE | z | Pr(>\|z\|) |
|---|---|---|---|
| 0.8182 | 0.04253 | 19.24 | 1.79E-82 |

Difference Table

| Category | Omission | Agreement | Commission | Quantity | Exchange | Shift |
|---|---|---|---|---|---|---|
| Other | 2 | 42 | 6 | 4 | 4 | 0 |
| Shrub | 9 | 35 | 7 | 2 | 10 | 4 |
| Tree | 5 | 39 | 3 | 2 | 6 | 0 |
| Overall | 16 | 116 | 16 | 4 | 10 | 2 |

(b) Segment-Based RF (S-RF)

Error Matrix Unweighted

| | Other | Shrub | Tree |
|---|---|---|---|
| Other | 36 | 3 | 2 |
| Shrub | 8 | 37 | 10 |
| Tree | 0 | 4 | 32 |

Kappa Unweighted

| Value | ASE | z | Pr(>\|z\|) |
|---|---|---|---|
| 0.6932 | 0.05247 | 13.21 | 7.69E-40 |

Difference Table

| Category | Omission | Agreement | Commission | Quantity | Exchange | Shift |
|---|---|---|---|---|---|---|
| Other | 8 | 36 | 5 | 3 | 6 | 4 |
| Shrub | 7 | 37 | 18 | 11 | 14 | 0 |
| Tree | 12 | 32 | 4 | 8 | 8 | 0 |
| Overall | 27 | 105 | 27 | 11 | 14 | 2 |

(c) Canopy Height Threshold

Error Matrix Unweighted

| | Other | Shrub | Tree |
|---|---|---|---|
| Other | 43 | 15 | 0 |
| Shrub | 1 | 27 | 6 |
| Tree | 0 | 2 | 38 |

Kappa Unweighted

| Value | ASE | z | Pr(>\|z\|) |
|---|---|---|---|
| 0.7273 | 0.04947 | 14.7 | 6.30E-49 |

Difference Table

| Category | Omission | Agreement | Commission | Quantity | Exchange | Shift |
|---|---|---|---|---|---|---|
| Other | 1 | 43 | 15 | 14 | 2 | 0 |
| Shrub | 17 | 27 | 7 | 10 | 6 | 8 |
| Tree | 6 | 38 | 2 | 4 | 4 | 0 |
| Overall | 24 | 108 | 24 | 14 | 6 | 4 |
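The quantity, exchange and shift columns of the difference tables can be derived directly from each error matrix. The sketch below is an illustration under stated assumptions rather than the authors' workflow: it follows the decomposition cited as [51] as commonly formulated (per-class quantity is the absolute difference between row and column totals, exchange is twice the pairwise minimum of symmetric off-diagonal cells, shift is the remainder, and overall values are half the per-class sums). The function name `difference_components` is hypothetical; the matrices are the three reported above.

```python
import numpy as np


def difference_components(error_matrix):
    """Per-class and overall quantity, exchange and shift for a square matrix.

    Assumption: rows are mapped classes, columns are reference classes.
    Returns (per_class, overall); columns are quantity, exchange, shift.
    """
    m = np.asarray(error_matrix, dtype=int)
    diag = np.diag(m)
    rows = m.sum(axis=1)
    cols = m.sum(axis=0)
    difference = rows + cols - 2 * diag            # omission + commission
    quantity = np.abs(rows - cols)
    # Pairwise swaps: min(m[i, j], m[j, i]) over all other classes, counted twice.
    exchange = 2 * (np.minimum(m, m.T).sum(axis=1) - diag)
    shift = difference - quantity - exchange       # remaining disagreement
    per_class = np.column_stack([quantity, exchange, shift])
    overall = per_class.sum(axis=0) // 2           # each error touches two classes
    return per_class, overall


# Error matrices as reported in this article (classes: Other, Shrub, Tree).
matrices = {
    "P-RF": [[42, 6, 0], [2, 35, 5], [0, 3, 39]],
    "S-RF": [[36, 3, 2], [8, 37, 10], [0, 4, 32]],
    "CHM threshold": [[43, 15, 0], [1, 27, 6], [0, 2, 38]],
}

for name, matrix in matrices.items():
    per_class, overall = difference_components(matrix)
    print(name, "overall quantity/exchange/shift:", overall.tolist())
```

Run on the three matrices, this yields overall quantity, exchange and shift of 4, 10, 2 for P-RF; 11, 14, 2 for S-RF; and 14, 6, 4 for the canopy height threshold, matching the "Overall" rows of the difference tables.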

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Gaughan, A.E.; Kolarik, N.E.; Stevens, F.R.; Pricope, N.G.; Cassidy, L.; Salerno, J.; Bailey, K.M.; Drake, M.; Woodward, K.; Hartter, J. Using Very-High-Resolution Multispectral Classification to Estimate Savanna Fractional Vegetation Components. Remote Sens. 2022, 14, 551. https://doi.org/10.3390/rs14030551
