Building Change Detection Based on 3D Co-Segmentation Using Satellite Stereo Imagery

Abstract

1. Introduction

- A novel energy function is proposed that fully exploits the information contained in stereo image pairs by considering height information, spectral information, and spatial neighborhood information;

- A sigmoid function is used to map the change indicator to the energy value, which makes the method more robust to gross errors in the change features;

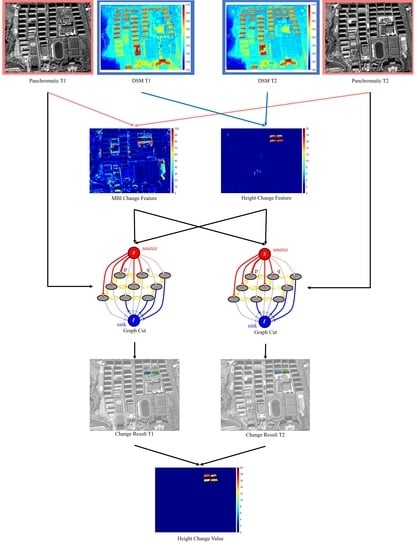

- Based on this energy function, a 3D co-segmentation algorithm for building change detection is proposed. The algorithm considers height change, spectral change, and spatial neighborhood information, and obtains the change detection result directly from the segmentation;

- The algorithm quantifies the height change of each changed building.
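The robustness argument behind the sigmoid mapping can be illustrated with a small sketch. The logistic form, the hypothetical parameters `center` and `slope`, and the use of a height difference in metres as the change indicator are assumptions for illustration only; the exact mapping used in the paper is not given in this section.

```python
import math


def sigmoid_energy(indicator: float, center: float, slope: float) -> float:
    """Logistic mapping of a change indicator to a bounded energy in [0, 1].

    `center` (indicator value mapped to 0.5) and `slope` (steepness) are
    hypothetical tuning parameters, not values taken from the paper.
    """
    z = slope * (indicator - center)
    # Evaluate in a numerically stable way so a large |z| cannot overflow exp().
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def linear_energy(indicator: float, scale: float) -> float:
    """Unbounded linear mapping, included only for comparison."""
    return indicator / scale


if __name__ == "__main__":
    # Height differences in metres; 60.0 stands in for a gross matching error.
    for dh in (0.5, 3.0, 6.0, 60.0):
        print(f"dh={dh:5.1f}  sigmoid={sigmoid_energy(dh, 3.0, 2.0):.4f}  "
              f"linear={linear_energy(dh, 3.0):.2f}")
```

Because the logistic curve saturates, a 60 m height difference caused by a matching blunder receives almost the same energy as a genuine 6 m change, whereas the linear mapping weights it ten times as heavily.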

2. Materials and Methods

2.1. Stereo Reconstruction

2.2. 3D Change Feature

2.2.1. Spectral Change Feature

2.2.2. Height Change Feature

2.3. 3D Co-Segmentation

2.3.1. Energy Function

2.3.2. Graph Construction

2.3.3. Edge Weights

2.3.4. Energy Minimization

2.4. Spatial Correspondence

2.5. Height Change Determination

3. Results

3.1. Study Area and Datasets

3.2. Evaluation Method

3.2.1. Pixel-Based Accuracy

3.2.2. Object-Based Accuracy

- True detected number (TDN): The number of changed buildings detected correctly;

- True detected rate (TDR): The ratio of the number of correctly detected changed buildings to the total number of changed buildings in the reference map, $N_{ref}$, i.e., $\mathrm{TDR} = \mathrm{TDN}/N_{ref}$;

- False detected number (FDN): The number of unchanged buildings incorrectly detected as changed;

- False detected rate (FDR): The ratio of the number of buildings incorrectly detected as changed to the total number of buildings detected in the result, $N_{det}$, i.e., $\mathrm{FDR} = \mathrm{FDN}/N_{det}$.
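The four object-based indicators reduce to two ratios of counts. The sketch below, with the hypothetical class name `ObjectCounts` and the symbols `n_ref` (changed buildings in the reference map) and `n_det` (buildings detected in the result), reproduces the TDR and FDR reported for the proposed method in Test area 1 (33/35 and 1/34). How detected objects are matched to reference buildings is not described in this section and is left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ObjectCounts:
    """Object-level counts for one test area (names follow Section 3.2.2)."""
    tdn: int    # changed buildings detected correctly
    n_ref: int  # changed buildings in the reference map
    fdn: int    # unchanged buildings detected as changed
    n_det: int  # buildings detected in the result

    @property
    def tdr(self) -> float:
        """True detected rate, TDN / n_ref."""
        return self.tdn / self.n_ref

    @property
    def fdr(self) -> float:
        """False detected rate, FDN / n_det (0 when nothing was detected)."""
        return self.fdn / self.n_det if self.n_det else 0.0


if __name__ == "__main__":
    # Counts reported for the proposed method in Test area 1.
    proposed_area1 = ObjectCounts(tdn=33, n_ref=35, fdn=1, n_det=34)
    print(f"TDR = {proposed_area1.tdr:.2%}, FDR = {proposed_area1.fdr:.2%}")
```

Running it prints `TDR = 94.29%, FDR = 2.94%`, the values listed for that row of the accuracy table.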

3.2.3. Height Change Accuracy

3.3. Parameter Setting

3.4. Experiment Result

3.5. Accuracy Evaluation

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Edge Type | Value | Condition |
|---|---|---|
| n-link | | |
| t-link | | |
| t-link | | |
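The n-link/t-link structure in the table above corresponds to the standard s-t graph construction for binary labelling by minimum cut. The sketch below builds such a graph on a small pixel grid and labels it with one cut. Because the table's edge values did not survive extraction, the t-link capacities (a per-pixel change likelihood `p` and its complement), the constant Potts n-link weight `lam`, and the function names `min_cut_source_side` and `segment_changes` are all assumptions, not the paper's weights. A plain Edmonds-Karp max-flow keeps the example dependency-free, but it is far slower than the specialised max-flow solvers normally used on image graphs.

```python
from collections import deque


def min_cut_source_side(capacity, source, sink):
    """Edmonds-Karp max-flow; returns the nodes on the source side of a min cut.

    `capacity` maps node -> {neighbour: capacity} for directed edges.
    """
    residual = {u: dict(nbrs) for u, nbrs in capacity.items()}
    for u in list(residual):
        for v in list(residual[u]):
            # Ensure every edge has a reverse residual entry.
            residual.setdefault(v, {}).setdefault(u, 0.0)
    while True:
        # Breadth-first search for a shortest augmenting path.
        parent = {source: None}
        queue = deque([source])
        while queue and sink not in parent:
            u = queue.popleft()
            for v, cap in residual[u].items():
                if cap > 1e-12 and v not in parent:
                    parent[v] = u
                    queue.append(v)
        if sink not in parent:
            # No augmenting path left: the reachable set is the source side.
            return set(parent)
        # Collect the path, then push its bottleneck capacity along it.
        path, v = [], sink
        while parent[v] is not None:
            path.append((parent[v], v))
            v = parent[v]
        flow = min(residual[u][v] for u, v in path)
        for u, v in path:
            residual[u][v] -= flow
            residual[v][u] += flow


def segment_changes(change_prob, lam):
    """Binary change labelling of a 2D grid by a single s-t minimum cut.

    change_prob[r][c] in [0, 1] is a hypothetical per-pixel change likelihood
    (for example a sigmoid-mapped indicator); lam is a constant Potts weight.
    Returns a grid of booleans, True meaning "changed".
    """
    rows, cols = len(change_prob), len(change_prob[0])
    src, snk = "changed", "unchanged"
    cap = {src: {}, snk: {}}
    for r in range(rows):
        for c in range(cols):
            p, node = change_prob[r][c], (r, c)
            cap.setdefault(node, {})
            # t-links: source->pixel holds the cost of "unchanged" (cut when the
            # pixel lands on the sink side); pixel->sink holds the cost of "changed".
            cap[src][node] = p
            cap[node][snk] = 1.0 - p
            # n-links to the right and lower 4-neighbours, in both directions.
            for rr, cc in ((r, c + 1), (r + 1, c)):
                if rr < rows and cc < cols:
                    cap[node][(rr, cc)] = lam
                    cap.setdefault((rr, cc), {})[node] = lam
    source_side = min_cut_source_side(cap, src, snk)
    return [[(r, c) in source_side for c in range(cols)] for r in range(rows)]


if __name__ == "__main__":
    grid = [[0.1, 0.1, 0.1],
            [0.1, 0.9, 0.1],
            [0.1, 0.1, 0.1]]
    for lam in (0.1, 1.0):
        print(lam, segment_changes(grid, lam))
```

With `lam=0.1` only the isolated high-likelihood centre pixel is labelled changed; with `lam=1.0` its boundary becomes too expensive and the whole grid is labelled unchanged, which is how a smoothness term suppresses isolated false alarms.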

| DataSet | View | T1 Azimuth (°) | T1 Zenith (°) | T2 Azimuth (°) | T2 Zenith (°) |
|---|---|---|---|---|---|
| DataSet 1 | Backward | 191.475671 | 5.528352 | 241.092863 | 9.128378 |
| | Forward | 12.051169 | 28.249459 | 359.066534 | 28.711070 |
| DataSet 2 | Backward | 290.6 | 17.1 | 348.3 | 15.3 |
| | Forward | 221.2 | 32.9 | 208.2 | 23.4 |

| | | Test Area 1 | Test Area 2 | Test Area 3 | Test Area 4 | Test Area 5 | Test Area 6 |
|---|---|---|---|---|---|---|---|
| T1 | | 0.5 | 0.5 | 0.4 | 0.3 | 0.3 | 0.2 |
| | | 0.0 | 0.0 | 0.3 | 0.2 | 0.2 | 0.15 |
| T2 | | 0.4 | 0.3 | 0.2 | 0.2 | 0.2 | 0.2 |
| | | 0.0 | 0.0 | 0.1 | 0.1 | 0.1 | 0.15 |

| DataSet | Method | Pixel-Based | | | Object-Based | | | |
|---|---|---|---|---|---|---|---|---|
| | | Precision | Recall | F1 | TDN/Reference | TDR | FDN/Detected | FDR |
| Test area 1 | dnDSM-T | 0.75353 | 0.85181 | 0.79966 | / | / | / | / |
| | DS-Fusion | 0.77981 | 0.83344 | 0.80573 | 33/35 | 94.29% | 0/35 | 0% |
| | DSM-GC | 0.79883 | 0.83449 | 0.81627 | 33/35 | 94.29% | 1/35 | 2.86% |
| | Proposed | 0.78533 | 0.83475 | 0.80929 | 33/35 | 94.29% | 1/34 | 2.94% |
| Test area 2 | dnDSM-T | 0.74105 | 0.83150 | 0.78367 | / | / | / | / |
| | DS-Fusion | 0.76292 | 0.80187 | 0.78191 | 54/60 | 90% | 3/57 | 5.26% |
| | DSM-GC | 0.77557 | 0.82755 | 0.80072 | 57/60 | 95% | 4/61 | 6.56% |
| | Proposed | 0.81698 | 0.79966 | 0.80823 | 54/60 | 90% | 1/57 | 1.75% |
| Test area 3 | dnDSM-T | 0.75395 | 0.74629 | 0.75010 | / | / | / | / |
| | DS-Fusion | 0.82399 | 0.73545 | 0.77720 | 4/4 | 100% | 1/5 | 20% |
| | DSM-GC | 0.75901 | 0.69289 | 0.72445 | 4/4 | 100% | 3/7 | 42.86% |
| | Proposed | 0.88972 | 0.89174 | 0.89073 | 4/4 | 100% | 0/4 | 0% |
| Test area 4 | dnDSM-T | 0.68805 | 0.90771 | 0.78276 | / | / | / | / |
| | DS-Fusion | 0.65317 | 0.91346 | 0.76169 | 17/17 | 100% | 1/17 | 5.88% |
| | DSM-GC | 0.67037 | 0.88906 | 0.76438 | 17/17 | 100% | 3/19 | 15.79% |
| | Proposed | 0.73124 | 0.88523 | 0.80090 | 17/17 | 100% | 1/18 | 5.56% |
| Test area 5 | dnDSM-T | 0.53095 | 0.95516 | 0.68251 | / | / | / | / |
| | DS-Fusion | 0.60928 | 0.92995 | 0.73621 | 19/19 | 100% | 1/20 | 5% |
| | DSM-GC | 0.56258 | 0.96028 | 0.70950 | 19/19 | 100% | 8/27 | 29.63% |
| | Proposed | 0.72754 | 0.87491 | 0.79445 | 19/19 | 100% | 0/19 | 0% |
| Test area 6 | dnDSM-T | 0.55767 | 0.92747 | 0.69653 | / | / | / | / |
| | DS-Fusion | 0.67196 | 0.88452 | 0.76373 | 7/7 | 100% | 3/9 | 33.33% |
| | DSM-GC | 0.63604 | 0.91741 | 0.75124 | 7/7 | 100% | 6/12 | 50% |
| | Proposed | 0.86920 | 0.87839 | 0.87377 | 7/7 | 100% | 0/7 | 0% |
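The third pixel-based column is consistent with the F1-score, the harmonic mean of precision and recall: for dnDSM-T in Test area 1, 2 × 0.75353 × 0.85181 / (0.75353 + 0.85181) ≈ 0.79966. The sketch below computes all three pixel-based scores from binary change masks; representing the masks as flat Python sequences and the function names are simplifications assumed for illustration.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0 when both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def pixel_scores(predicted, reference):
    """Precision, recall and F1 for flattened binary change masks (truthy = changed)."""
    pairs = list(zip(predicted, reference))
    tp = sum(1 for p, r in pairs if p and r)      # changed in both masks
    fp = sum(1 for p, r in pairs if p and not r)  # false alarms
    fn = sum(1 for p, r in pairs if not p and r)  # missed changes
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall, f1_score(precision, recall)


if __name__ == "__main__":
    # Precision and recall reported for dnDSM-T in Test area 1.
    print(round(f1_score(0.75353, 0.85181), 5))  # 0.79966, matching the table
```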

| Building ID | Detected T2 (m) | Reference (m) | Bias (m) | Building ID | Detected T2 (m) | Reference (m) | Bias (m) |
|---|---|---|---|---|---|---|---|
| Xin1 | 98.87 | 99.9 | −1.03 | Hong1# | 70.8 | 71.4 | −0.6 |
| Xin2 | 99.33 | 99.9 | −0.57 | Hong2# | 71.46 | 71.4 | 0.06 |
| Xin3 | 100.1 | 99.9 | 0.2 | Hong3# | 71.62 | 71.4 | 0.22 |
| Xin4 | 98.95 | 99.9 | −0.95 | Hong5# | 72.38 | 74.3 | −1.92 |
| Xin5 | 100.5 | 99.9 | 0.6 | Hong6# | 81.12 | 79.5 | 1.62 |
| Xin6 | 100.5 | 99.9 | 0.6 | Hong7# | 80.71 | 79.5 | 1.21 |
| Han1# | 100.3 | 99.8 | 0.5 | Hong8# | 80.89 | 79.5 | 1.39 |
| Han2# | 97.43 | 97.5 | −0.07 | Hong9# | 80.01 | 79.95 | 0.06 |
| Han3# | 96.72 | 97.5 | −0.78 | Hong10# | 80.44 | 79.95 | 0.49 |
| Han5# | 95.45 | 97.5 | −2.05 | Hong11# | 79.86 | 79.5 | 0.36 |
| Han6# | 96.21 | 97.5 | −1.29 | Hong12# | 79.2 | 79.5 | −0.3 |
| Han7# | 98.07 | 99.8 | −1.73 | Ze1# | 99.65 | 97.6 | 2.05 |
| HanS1# | 13.94 | 20.4 | −6.46 | Ze2# | 96.88 | 97.6 | −0.72 |
| HanS2# | 12.77 | 14.7 | −1.93 | Ze3# | 96.53 | 97.6 | −1.07 |
| Shao1# | 100.4 | 99.8 | 0.6 | Ze5# | 11.02 | 12.1 | −1.08 |
| Shao2# | 99.34 | 99.8 | −0.46 | Ze6# | 77.68 | 77.3 | 0.38 |
| Shao3# | 99.76 | 99.8 | −0.04 | Ze7# | 96.74 | 97.6 | −0.86 |
| Shao5# | 95.98 | 97.5 | −1.52 | Ze8# | 74.17 | 74.4 | −0.23 |
| Shao6# | 93.04 | 94.6 | −1.56 | Ze9# | 96.85 | 97.6 | −0.75 |
| Shao7# | 93.34 | 94.6 | −1.26 | Ze10# | 8.925 | 8.65 | 0.275 |
| ShaoS1# | 11.81 | 13.35 | −1.54 | Ze11# | 99.2 | 97.6 | 1.6 |
| ShaoS2# | 12.19 | 13.35 | −1.16 | Ze12# | 60.16 | 59.9 | 0.26 |
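The Bias column in the table above is the detected T2 height minus the reference height (for example, 98.87 − 99.9 = −1.03 m for Xin1). The sketch below shows that arithmetic together with one possible way to derive a per-building height and height change from nDSMs. Summarising each building by the median of its nDSM pixels, and the function names `object_height`, `height_change`, and `height_bias`, are assumptions for illustration rather than the procedure of Section 2.5.

```python
from statistics import median


def object_height(ndsm_values):
    """Hypothetical building height: median nDSM value (m) over the object's pixels.

    The median is assumed here for robustness to isolated matching outliers.
    """
    return median(ndsm_values)


def height_change(ndsm_t1, ndsm_t2):
    """Height change of one building between T1 and T2 (positive = taller at T2)."""
    return object_height(ndsm_t2) - object_height(ndsm_t1)


def height_bias(detected, reference):
    """Bias as tabulated: detected height minus reference height (m)."""
    return detected - reference


if __name__ == "__main__":
    # (detected T2 height, reference height) for three rows of the table.
    rows = {"Xin1": (98.87, 99.9), "HanS1#": (13.94, 20.4), "Ze10#": (8.925, 8.65)}
    for name, (det, ref) in rows.items():
        print(name, round(height_bias(det, ref), 3))
```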

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, H.; Lv, X.; Zhang, K.; Guo, B. Building Change Detection Based on 3D Co-Segmentation Using Satellite Stereo Imagery. Remote Sens. 2022, 14, 628. https://doi.org/10.3390/rs14030628


Wang, Hao, Xiaolei Lv, Kaiyu Zhang, and Bin Guo. 2022. "Building Change Detection Based on 3D Co-Segmentation Using Satellite Stereo Imagery" Remote Sensing 14, no. 3: 628. https://doi.org/10.3390/rs14030628