A High-Precision Motion Errors Compensation Method Based on Sub-Image Reconstruction for HRWS SAR Imaging

Abstract

1. Introduction

- The proposed method compensates for the spatially varying phase errors in the range direction by estimating and correcting the equivalent phase centers (EPCs). This improves the accuracy of the phase-error compensation at each pixel and, in turn, the imaging quality.

- The proposed algorithm compensates for the motion errors of each channel before reconstruction, which improves the imaging quality in the azimuth direction. Moreover, the compensation is applied while the distance history is calculated during sub-image formation, so no compensation phase has to be computed separately for each pixel, which simplifies the processing.
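The range dependence named in the first contribution can be illustrated with a short calculation. This is a minimal sketch, not the paper's signal model: it assumes a flat-earth, broadside geometry, takes the carrier frequency (9.6 GHz) and platform height (20 km) from the simulation parameters, and uses a hypothetical EPC displacement `(dy, dz)` and illustrative ground ranges. It evaluates the two-way phase error 4π/λ·(R_actual − R_nominal) produced by the same displacement for targets at different ranges.

```python
import numpy as np

C = 299_792_458.0   # speed of light (m/s)
LAM = C / 9.6e9     # wavelength for the 9.6 GHz carrier (m)
H = 20e3            # platform height (m)


def two_way_phase_error(ground_range, dy, dz):
    """Two-way phase error (rad) caused by an EPC displaced by (dy, dz).

    Assumed geometry: nominal EPC at (0, H), actual EPC at (dy, H + dz),
    target on flat ground at (ground_range, 0); dy points toward the target.
    """
    r_nominal = np.hypot(ground_range, H)
    r_actual = np.hypot(ground_range - dy, H + dz)
    return 4 * np.pi * (r_actual - r_nominal) / LAM


ground = np.array([50e3, 60e3, 70e3])               # illustrative ground ranges (m)
phi = two_way_phase_error(ground, dy=0.5, dz=0.2)   # hypothetical displacement (m)
print(np.round(phi, 1))
```

Because one and the same displacement produces phase errors that differ by several radians across these ranges, a single compensation phase referenced to one range cannot correct every pixel; correcting the EPC positions themselves avoids that limitation.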

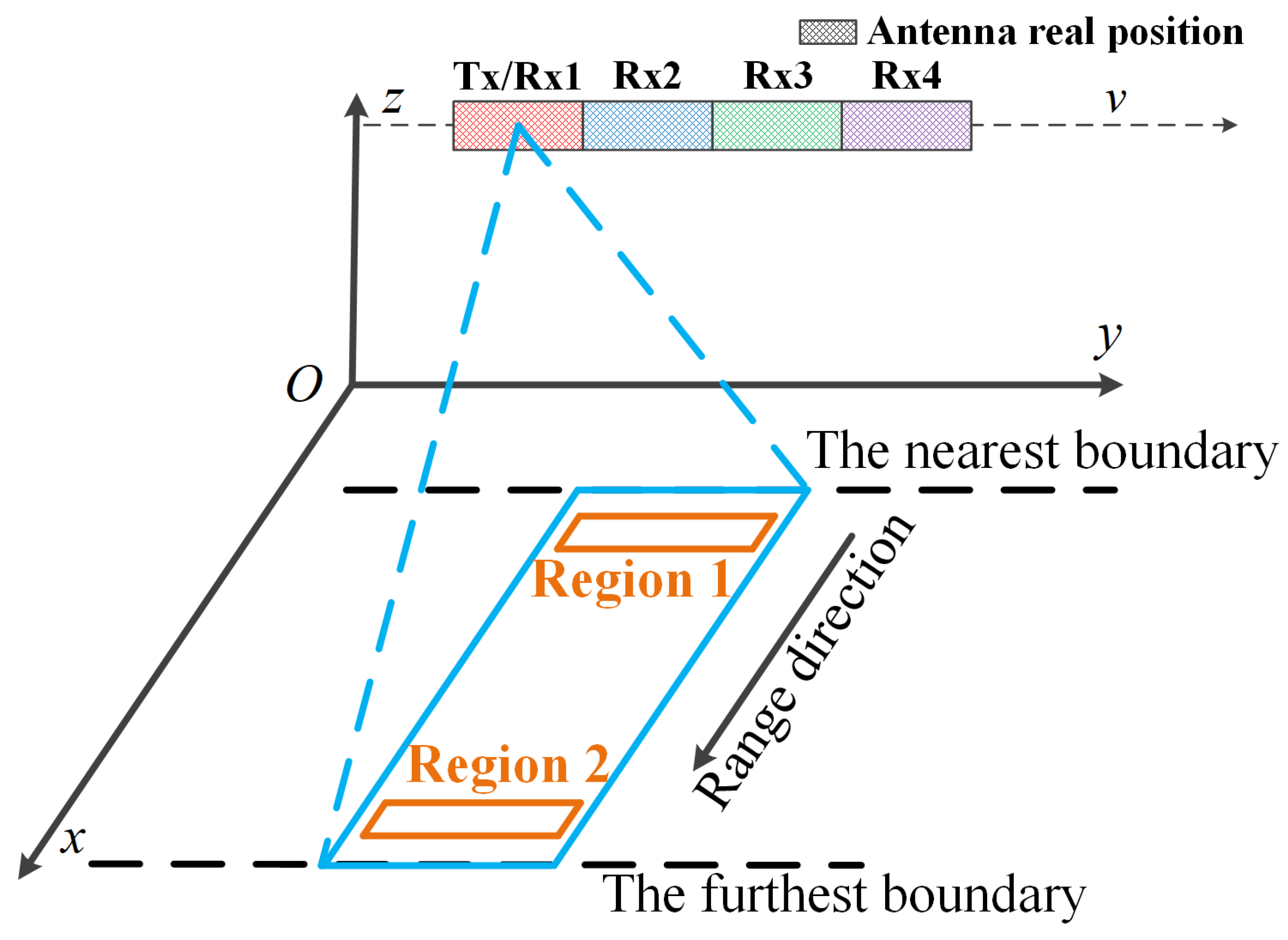

2. Signal Model and Problem Analysis

2.1. Signal Model of HRWS SAR with Motion Errors

2.2. Problem Analysis

3. HRWS SAR High-Precision Motion Compensation Method

3.1. Motion Errors Estimation Based on the Maximum Intensity of Strong Points in Multi-Region
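The estimation principle named in this subsection's heading can be illustrated with a toy one-parameter version. This sketch is not the paper's Equation (10): it assumes the residual motion error is a quadratic a·η² over normalized slow time η, that each region contributes one strong point whose phase history is scaled by a hypothetical line-of-sight factor, and it finds the error amplitude by a plain grid search that maximizes the summed intensity of the strong points.

```python
import numpy as np

LAM = 299_792_458.0 / 9.6e9   # wavelength for the 9.6 GHz carrier (m)


def simulate_histories(a_true, eta, gains):
    """Phase history at each strong-point pixel after imaging with measured EPCs.

    Assumed model: the uncompensated motion error is a_true * eta**2 (m),
    projected onto each point's line of sight by a factor g.
    """
    return [np.exp(1j * 4 * np.pi / LAM * a_true * eta**2 * g) for g in gains]


def total_intensity(a_hat, histories, eta, gains):
    """Summed intensity of all strong points after removing a trial error a_hat."""
    total = 0.0
    for h, g in zip(histories, gains):
        corrected = h * np.exp(-1j * 4 * np.pi / LAM * a_hat * eta**2 * g)
        total += np.abs(corrected.sum()) ** 2
    return total


def estimate_error(histories, eta, gains, candidates):
    """Grid search for the error amplitude that maximizes the summed intensity."""
    scores = [total_intensity(a, histories, eta, gains) for a in candidates]
    return candidates[int(np.argmax(scores))]


eta = np.linspace(-1.0, 1.0, 512)            # normalized slow time
gains = [0.93, 0.95, 0.96]                   # hypothetical: one strong point per region
histories = simulate_histories(0.012, eta, gains)
candidates = np.linspace(-0.05, 0.05, 201)   # hypothetical search range (m)
a_hat = estimate_error(histories, eta, gains, candidates)
print(a_hat)
```

A point's intensity peaks when its compensated phase history is flat, so summing the intensities of points from several regions yields one objective that all regions share.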

3.2. Sub-Image EPCs Correction

3.3. Reconstruction

| Algorithm 1. High-precision Motion Errors Compensation Method Based on Sub-image Reconstruction for HRWS SAR Imaging |
|---|
| Inputs: The non-uniformly sampled echo and the measured EPCs. |
| Step 1: Obtain the SAR image with the measured EPCs by Equation (6). |
| Step 2: Select strong points and in multiple regions of the SAR image, and calculate . |
| Step 3: Estimate the motion errors by maximizing the image intensity by Equation (10). |
| Step 4: Correct the EPC errors of each sub-image, and obtain the sub-image by Equation (15). |
| Step 5: Reconstruct the sub-images by Equation (16), and obtain the well-focused SAR imaging result . |
| Outputs: High-precision image with motion errors compensation. |
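Steps 1, 4 and 5 can be sketched with a small back-projection (BP) example in which the motion compensation lives entirely in the distance history: each channel's sub-image is formed from a set of EPC positions, and corrected positions simply replace the measured ones. This is a minimal sketch under several assumptions: a 2-D slant-plane geometry, a single point target, a hypothetical aperture, channel offsets and sinusoidal cross-track errors, the true error standing in for the Step 3 estimate, and a plain coherent sum of sub-images standing in for the reconstruction of Equation (16), which is not implemented here.

```python
import numpy as np

C = 3e8                    # speed of light (m/s)
LAM = C / 9.6e9            # wavelength for the 9.6 GHz carrier (m)
RHO_R = C / (2 * 150e6)    # slant-range resolution for 150 MHz bandwidth (1 m)


def simulate_channel(epc_true, target, r_axis):
    """Range-compressed echo of one point target, one row per pulse.

    epc_true: (N, 2) actual EPC positions (along-track x, slant-plane y).
    """
    r = np.linalg.norm(epc_true - target, axis=1)
    envelope = np.sinc((r_axis[None, :] - r[:, None]) / RHO_R)
    return envelope * np.exp(-1j * 4 * np.pi * r[:, None] / LAM)


def bp_subimage(data, epc_used, r_axis, grid_x, grid_y):
    """Back-project one channel; the EPCs in epc_used define the distance history."""
    gx, gy = np.meshgrid(grid_x, grid_y, indexing="ij")
    img = np.zeros(gx.shape, dtype=complex)
    for n in range(data.shape[0]):
        r = np.hypot(gx - epc_used[n, 0], gy - epc_used[n, 1])
        # linear interpolation of the range-compressed pulse at each pixel's range
        sample = (np.interp(r, r_axis, data[n].real)
                  + 1j * np.interp(r, r_axis, data[n].imag))
        img += sample * np.exp(1j * 4 * np.pi * r / LAM)  # phase matched to r
    return img


def demo():
    n_pulses = 256
    x_ref = np.linspace(-100.0, 100.0, n_pulses)        # hypothetical aperture (m)
    offsets = np.array([-0.375, -0.125, 0.125, 0.375])   # hypothetical EPC offsets (m)
    target = np.array([0.0, 50e3])
    r_axis = np.arange(50e3 - 200.0, 50e3 + 200.0, 0.25)
    grid_x = np.linspace(-5.0, 5.0, 21)
    grid_y = np.linspace(50e3 - 5.0, 50e3 + 5.0, 21)

    img_measured = np.zeros((21, 21), dtype=complex)
    img_corrected = np.zeros((21, 21), dtype=complex)
    for m, d in enumerate(offsets):
        epc_meas = np.column_stack([x_ref + d, np.zeros(n_pulses)])
        # hypothetical cross-track motion error of channel m (m)
        err = 0.03 * np.sin(2 * np.pi * x_ref / 150.0 + m)
        epc_true = epc_meas + np.column_stack([np.zeros(n_pulses), err])
        data = simulate_channel(epc_true, target, r_axis)
        # sub-image with the measured EPCs vs. with corrected EPCs; the true
        # error stands in for the Step 3 estimate
        img_measured += bp_subimage(data, epc_meas, r_axis, grid_x, grid_y)
        img_corrected += bp_subimage(data, epc_true, r_axis, grid_x, grid_y)
    return np.abs(img_measured).max(), np.abs(img_corrected).max()


peak_measured, peak_corrected = demo()
print(peak_measured, peak_corrected)
```

With the corrected EPCs every pulse of every channel adds in phase at the target pixel, so the second peak approaches the number of channels times the number of pulses (1024), whereas the measured EPCs leave a strongly defocused response. No per-pixel compensation phase is computed; only the positions fed to `bp_subimage` change.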

4. Experimental Results

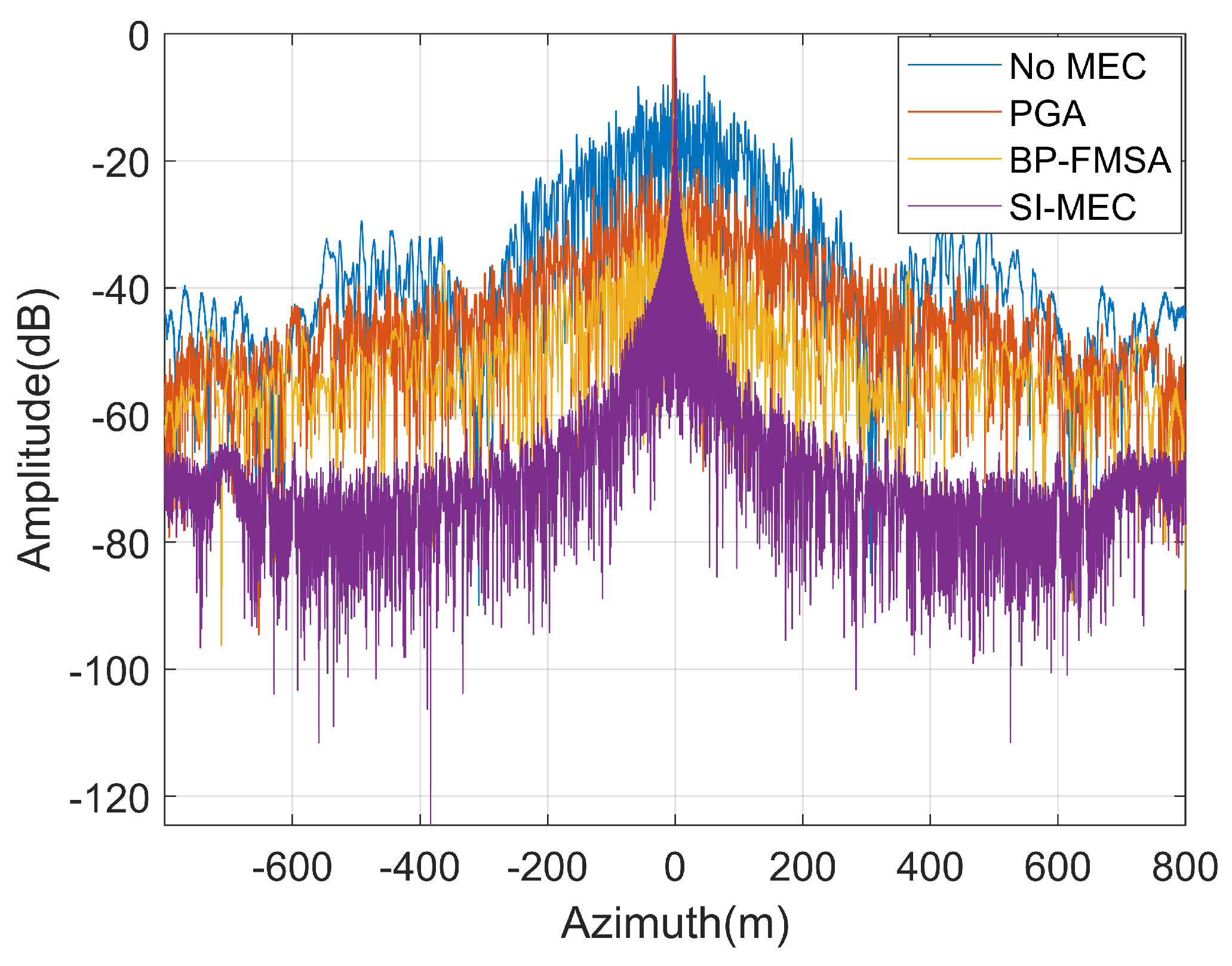

4.1. Point Target Simulation

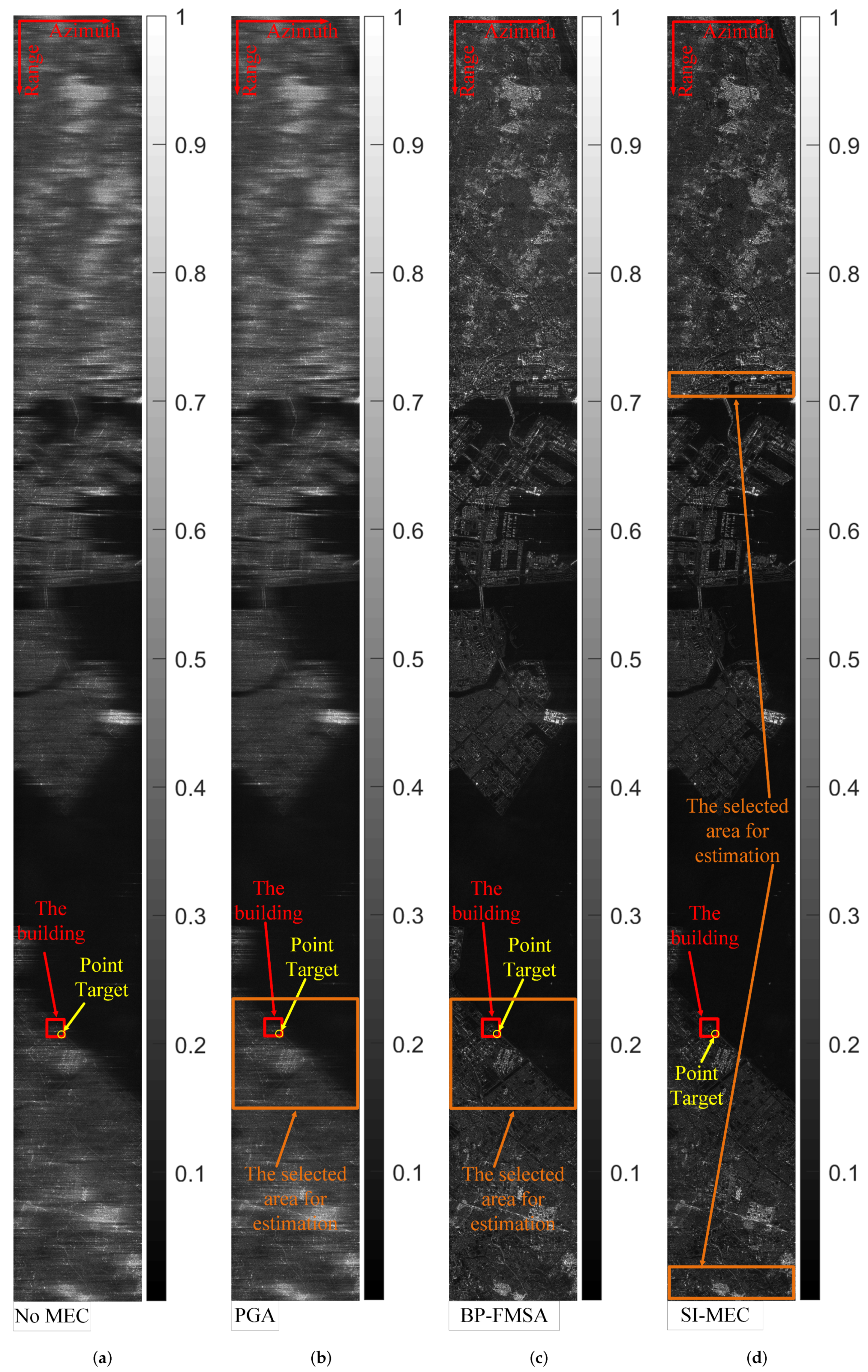

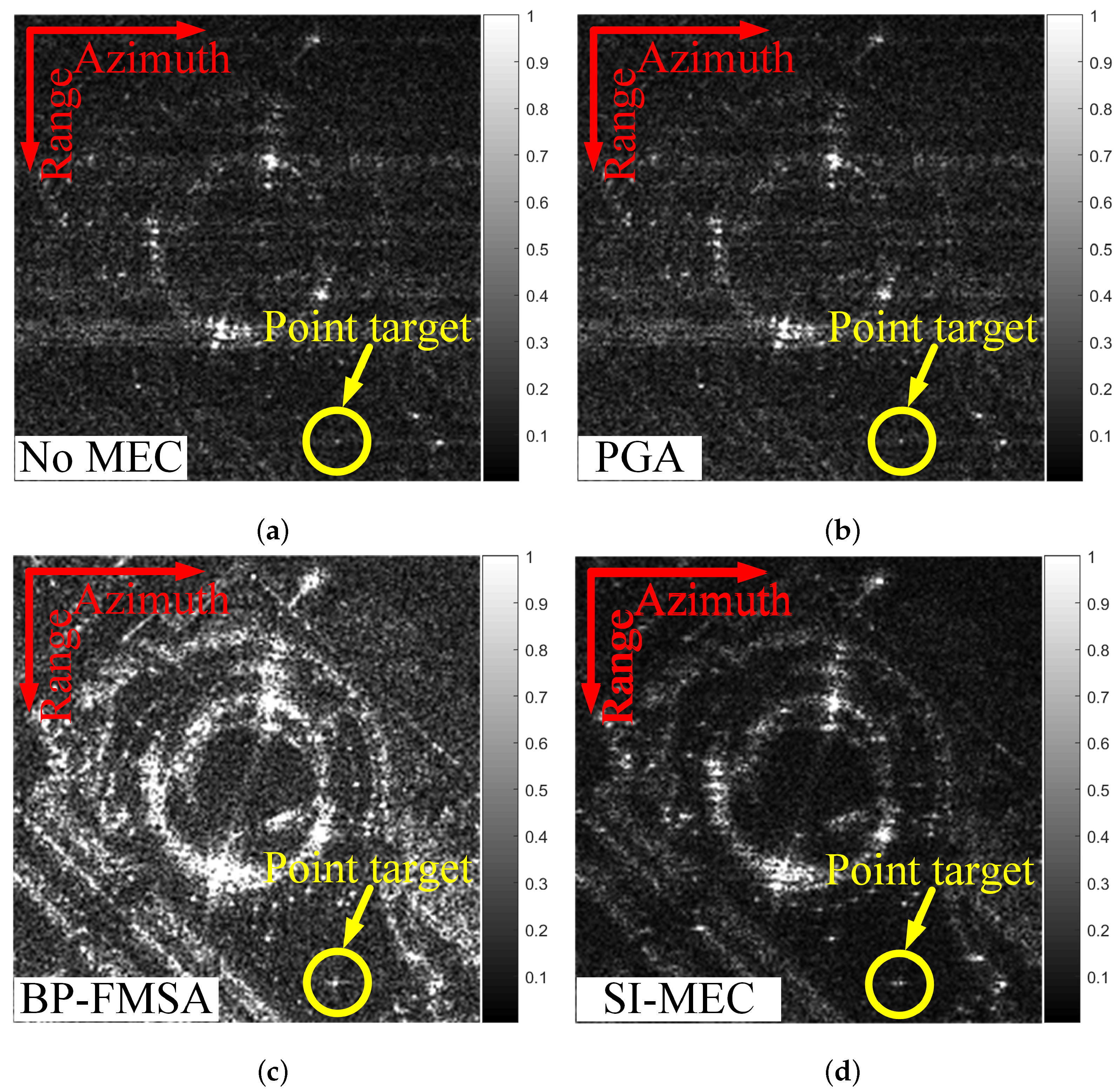

4.2. Complex Scene Simulation

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Zhang, S.X.; Xing, M.D.; Xia, X.G.; Liu, Y.Y.; Guo, R.; Bao, Z. A Robust Channel-Calibration Algorithm for Multi-Channel in Azimuth HRWS SAR Imaging Based on Local Maximum-Likelihood Weighted Minimum Entropy. IEEE Trans. Image Process. 2013, 22, 5294–5305.

2. Yang, J.; Qiu, X.; Zhong, L.; Shang, M.; Ding, C. A Simultaneous Imaging Scheme of Stationary Clutter and Moving Targets for Maritime Scenarios with the First Chinese Dual-Channel Spaceborne SAR Sensor. Remote Sens. 2019, 11, 2275.

3. Xu, W.; Yu, Q.; Fang, C.; Huang, P.; Tan, W.; Qi, Y. Onboard Digital Beamformer with Multi-Frequency and Multi-Group Time Delays for High-Resolution Wide-Swath SAR. Remote Sens. 2021, 13, 4354.

4. Mittermayer, J.; Krieger, G.; Bojarski, A.; Zonno, M.; Villano, M.; Pinheiro, M.; Bachmann, M.; Buckreuss, S.; Moreira, A. MirrorSAR: An HRWS Add-On for Single-Pass Multi-Baseline SAR Interferometry. IEEE Trans. Geosci. Remote Sens. 2021.

5. Muhuri, A.; Manickam, S.; Bhattacharya, A.; Snehmani. Snow Cover Mapping Using Polarization Fraction Variation With Temporal RADARSAT-2 C-Band Full-Polarimetric SAR Data Over the Indian Himalayas. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 2192–2209.

6. Touzi, R. Target Scattering Decomposition in Terms of Roll-Invariant Target Parameters. IEEE Trans. Geosci. Remote Sens. 2007, 45, 73–84.

7. Van Zyl, J.J.; Zebker, H.A.; Elachi, C. Imaging radar polarization signatures: Theory and observation. Radio Sci. 1987, 22, 529–543.

8. Zhang, T.; Zhang, X.; Shi, J.; Wei, S. Depthwise Separable Convolution Neural Network for High-Speed SAR Ship Detection. Remote Sens. 2019, 11, 2483.

9. Krieger, G.; Gebert, N.; Moreira, A. Unambiguous SAR signal reconstruction from nonuniform displaced phase center sampling. IEEE Geosci. Remote Sens. Lett. 2004, 1, 260–264.

10. Yang, T.; Li, Z.; Suo, Z.; Liu, Y.; Bao, Z. Performance analysis for multichannel HRWS SAR systems based on STAP approach. IEEE Geosci. Remote Sens. Lett. 2013, 10, 1409–1413.

11. Gebert, N.; Krieger, G.; Moreira, A. Digital Beamforming on Receive: Techniques and Optimization Strategies for High-Resolution Wide-Swath SAR Imaging. IEEE Trans. Aerosp. Electron. Syst. 2009, 45, 564–592.

12. Zhou, L.; Zhang, X.; Zhan, X.; Pu, L.; Zhang, T.; Shi, J.; Wei, S. A Novel Sub-Image Local Area Minimum Entropy Reconstruction Method for HRWS SAR Adaptive Unambiguous Imaging. Remote Sens. 2021, 13, 3115.

13. Guo, J.; Chen, J.; Liu, W.; Li, C.; Yang, W. An Improved Airborne Multichannel SAR Imaging Method With Motion Compensation and Range-Variant Channel Mismatch Correction. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 5414–5423.

14. Zhao, S.; Wang, R.; Deng, Y.; Zhang, Z.; Li, N.; Guo, L.; Wang, W. Modifications on Multichannel Reconstruction Algorithm for SAR Processing Based on Periodic Nonuniform Sampling Theory and Nonuniform Fast Fourier Transform. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 4998–5006.

15. Li, N.; Zhang, H.; Zhao, J.; Wu, L.; Guo, Z. An Azimuth Signal-Reconstruction Method Based on Two-Step Projection Technology for Spaceborne Azimuth Multi-Channel High-Resolution and Wide-Swath SAR. Remote Sens. 2021, 13, 4988.

16. Huang, H.; Huang, P.; Liu, X.; Xia, X.G.; Deng, Y.; Fan, H.; Liao, G. A Novel Channel Errors Calibration Algorithm for Multichannel High-Resolution and Wide-Swath SAR Imaging. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5201619.

17. Rui, Z.; Sun, J.; Hu, Y.; Qi, Y. Multichannel High Resolution Wide Swath SAR Imaging for Hypersonic Air Vehicle with Curved Trajectory. Sensors 2018, 18, 411.

18. Chen, Z.; Zhang, Z.; Qiu, J.; Zhou, Y.; Wang, R. A Novel Motion Compensation Scheme for 2-D Multichannel SAR Systems With Quaternion Posture Calculation. IEEE Trans. Geosci. Remote Sens. 2020, 59, 9350–9360.

19. Ding, Z.; Liu, L.; Zeng, T.; Yang, W.; Long, T. Improved Motion Compensation Approach for Squint Airborne SAR. IEEE Trans. Geosci. Remote Sens. 2013, 51, 4378–4387.

20. Bhattacharya, A.; Muhuri, A.; De, S.; Manickam, S.; Frery, A.C. Modifying the Yamaguchi Four-Component Decomposition Scattering Powers Using a Stochastic Distance. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 3497–3506.

21. Kennedy, T. Strapdown inertial measurement units for motion compensation for synthetic aperture radars. IEEE Aerosp. Electron. Syst. Mag. 1988, 3, 32–35.

22. Hu, K.; Zhang, X.; He, S.; Zhao, H.; Shi, J. A Less-Memory and High-Efficiency Autofocus Back Projection Algorithm for SAR Imaging. IEEE Geosci. Remote Sens. Lett. 2015, 12, 890–894.

23. Eichel, P.H.; Jakowatz, C.V. Phase-gradient algorithm as an optimal estimator of the phase derivative. Opt. Lett. 1989, 14, 1101–1103.

24. Ash, J.N. An Autofocus Method for Backprojection Imagery in Synthetic Aperture Radar. IEEE Geosci. Remote Sens. Lett. 2012, 9, 104–108.

25. Fletcher, I.; Watts, C.; Miller, E.; Rabinkin, D. Minimum entropy autofocus for 3D SAR images from a UAV platform. In Proceedings of the 2016 IEEE Radar Conference (RadarConf), Philadelphia, PA, USA, 2–6 May 2016; pp. 1–5.

26. Zhou, L.; Zhang, X.; Wang, Y.; Wang, C.; Wei, S. Precise Autofocus for SAR Imaging Based on Joint Multi-Region Optimization. In Proceedings of the IGARSS 2019 - 2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019.

27. Wahl, D.; Eichel, P.; Ghiglia, D.; Jakowatz, C. Phase gradient autofocus-a robust tool for high resolution SAR phase correction. IEEE Trans. Aerosp. Electron. Syst. 1994, 30, 827–835.

28. Wei, S.; Zhou, L.; Zhang, X.; Shi, J. Fast back-projection autofocus for linear array SAR 3-D imaging via maximum sharpness. In Proceedings of the 2018 IEEE Radar Conference (RadarConf18), Oklahoma City, OK, USA, 23–27 April 2018; pp. 525–530.

29. Yen, J. On nonuniform sampling of bandwidth-limited signals. IRE Trans. Circuit Theory 1956, 3, 251–257.

30. Shi, J.; Zhang, X.; Yang, J.; Wen, C. APC Trajectory Design for One-Active Linear-Array Three-Dimensional Imaging SAR. IEEE Trans. Geosci. Remote Sens. 2010, 48, 1470–1486.

31. Pu, W. SAE-Net: A Deep Neural Network for SAR Autofocus. IEEE Trans. Geosci. Remote Sens. 2022.

| Parameters | Value |
|---|---|
| Carrier frequency | 9.6 GHz |
| Signal bandwidth | 150 MHz |
| Range sampling rate | 500 MHz |
| PRF | 700 Hz |
| Azimuth bandwidth | 1900 Hz |
| Platform height | 20 km |
| Platform velocity | 1900 m/s |
| Number of channels | 4 |
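Several quantities implied by the parameters above can be checked directly. This sketch assumes the standard relations λ = c/f_c, slant-range resolution ρ_r = c/(2B), and an effective multichannel azimuth sampling rate of N·PRF. The channel spacing d = 2v/(N·PRF) that would make the displaced-phase-center samples uniform is a derived illustration only, because the actual channel spacing is not listed in the table.

```python
C = 299_792_458.0  # speed of light (m/s)


def derived_quantities(fc, bandwidth, prf, azimuth_bw, velocity, n_channels):
    """Quantities derived from the simulation parameters (standard relations)."""
    wavelength = C / fc
    range_resolution = C / (2 * bandwidth)
    effective_prf = n_channels * prf
    # channel spacing that would give uniform displaced-phase-center sampling
    uniform_spacing = 2 * velocity / (n_channels * prf)
    return {
        "wavelength_m": wavelength,
        "range_resolution_m": range_resolution,
        "effective_prf_hz": effective_prf,
        "single_channel_undersampled": prf < azimuth_bw,
        "effective_prf_exceeds_bandwidth": effective_prf > azimuth_bw,
        "uniform_spacing_m": uniform_spacing,
    }


q = derived_quantities(fc=9.6e9, bandwidth=150e6, prf=700.0,
                       azimuth_bw=1900.0, velocity=1900.0, n_channels=4)
for name, value in q.items():
    print(name, value)
```

With these values a single channel samples the 1900 Hz azimuth band at only 700 Hz, while the four channels together provide 2800 Hz, which is why the azimuth signal has to be reconstructed from the non-uniform multichannel samples.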

| Method | PSLR (50 km) | ISLR (50 km) | PSLR (60 km) | ISLR (60 km) | PSLR (70 km) | ISLR (70 km) |
|---|---|---|---|---|---|---|
| No MEC | −6.49 dB | 8.74 dB | −9.35 dB | 4.74 dB | −10.60 dB | 2.33 dB |
| PGA [27] | −16.38 dB | −3.47 dB | −1.04 dB | 14.61 dB | −2.32 dB | 14.08 dB |
| BP-FMSA [28] | −12.64 dB | −8.13 dB | −12.64 dB | −8.13 dB | −12.47 dB | −6.51 dB |
| SI-MEC (Ours) | −13.26 dB | −9.83 dB | −13.27 dB | −9.83 dB | −13.27 dB | −9.83 dB |
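The PSLR and ISLR values in the table can be computed from a 1-D cut through a point-target response. This is a minimal sketch under an assumed convention: the mainlobe extends from the peak to the first local minimum on each side, PSLR is the ratio of the highest sidelobe power to the peak power, and ISLR is the ratio of sidelobe energy to mainlobe energy. The integration window used for the table is not stated here, so absolute ISLR values may differ from this convention.

```python
import numpy as np


def pslr_islr(profile):
    """Return (PSLR_dB, ISLR_dB) of a 1-D impulse-response cut.

    Mainlobe = samples between the first local minima on either side of
    the peak (assumed convention).
    """
    power = np.abs(np.asarray(profile)) ** 2
    k = int(np.argmax(power))
    left = k
    while left > 0 and power[left - 1] < power[left]:
        left -= 1                      # walk down the left flank to the first minimum
    right = k
    while right < power.size - 1 and power[right + 1] < power[right]:
        right += 1                     # walk down the right flank to the first minimum
    main = power[left:right + 1]
    side = np.concatenate([power[:left], power[right + 1:]])
    pslr = 10 * np.log10(side.max() / power[k])
    islr = 10 * np.log10(side.sum() / main.sum())
    return pslr, islr


x = np.linspace(-20.0, 20.0, 40001)    # oversampled cut, in resolution cells
pslr, islr = pslr_islr(np.sinc(x))     # ideal, uniformly weighted response
print(round(pslr, 2), round(islr, 2))
```

For an ideal, uniformly weighted sinc response the PSLR is about −13.26 dB, which is the level the SI-MEC row reaches at all three ranges.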

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhou, L.; Zhang, X.; Pu, L.; Zhang, T.; Shi, J.; Wei, S. A High-Precision Motion Errors Compensation Method Based on Sub-Image Reconstruction for HRWS SAR Imaging. Remote Sens. 2022, 14, 1033. https://doi.org/10.3390/rs14041033
