1. Introduction

Coastal wetlands are transitional areas between terrestrial and marine ecosystems, with complex environments and many elements to monitor [1]. Accurate biodiversity monitoring of coastal wetlands is of great significance for water conservation [2], biodiversity conservation [3], and blue carbon sink development [4]. Recently, natural factors and human activities have degraded both biotopes and biodiversity.

Traditional on-site monitoring collects data at stations and along sections, which is time-consuming and laborious. In contrast, remote sensing offers large-area coverage, spatio-temporal synchronization, and high spatial-spectral resolution [5], providing highly relevant information for a wide range of wetland monitoring applications. Biodiversity estimation based on remote sensing therefore enables economical, real-time data collection, and many such methods have been developed in recent years.

The limitations of remote sensing, including resolution and sensor constraints, mean that biodiversity estimation is applied at a coarse scale [6]. Biodiversity is mainly divided into animal diversity and plant diversity. Land-cover mapping is one of the most widely used applications of optical remote sensing, and it directly serves plant diversity estimation. In addition, because animal diversity is difficult to monitor directly by remote sensing, land-cover mapping can be used to estimate animal diversity indirectly [7]. Therefore, biodiversity estimation based on remote sensing relies on the interpretation of land-cover, for which hyperspectral images (HSI) have attracted significant attention [8]. Su et al. [9] designed an elastic network based on low-rank representation to classify HSI collected by the GaoFen-5 satellite, thereby achieving plant diversity estimation for a coastal wetland. Hong et al. [10] combined a convolutional neural network (CNN) and a graph convolutional network (GCN) to extract different types of features for urban land-cover classification. Zhang et al. [11] developed a transferred 3D-CNN for HSI classification, which alleviated the overfitting problem caused by insufficient labeled samples. Wang et al. [12] proposed a generative adversarial network (GAN) for land-cover recognition and achieved promising results with imbalanced samples. Zhu et al. [13] designed a spatial-temporal semantic segmentation model to harness temporal dependency for land-use and land-cover (LULC) classification. Zhang et al. [14] proposed a parcel-level ensemble method for land-cover classification based on Sentinel-1 synthetic aperture radar (SAR) time series and segmentation generated from GaoFen-6 images. In [15], land-cover types in a coastal wetland were classified using an object-oriented random forest algorithm. In [16], a hierarchical classification framework (HCF) was developed for wetland classification. The HCF classifies land-cover from coarse classes to their subtypes based on spectral, texture, and geometric features.

Generally speaking, HSIs offer great advantages in land-cover classification because they carry abundant spectral information [17]. However, the spatial resolution of HSI is usually lower because of the signal-to-noise ratio requirements in long exposure [18]. In addition, the “different body with same spectrum” and “same body with different spectrum” phenomena in HSI degrade interpretation. Therefore, combining the complementary merits of multisource data further improves the accuracy of land-cover classification [19]. In the past decade, a wide range of classification techniques has been successfully applied to multisource data [20,21,22]. Some of the machine learning methods rely on the support vector machine (SVM), extreme learning machine (ELM), and random forest (RF) [23,24,25]. More recently, deep learning has boosted classification performance in the remote sensing community. In [26], adaptive differential evolution was utilized to optimize the classification decision from different data sources. Rezaee et al. [27] employed a deep CNN to classify wetland land-cover on a large scale. In [28], a 3D-CNN was designed for multispectral image (MSI) classification to serve wetland feature monitoring. Zhao et al. [29] developed a hierarchical random walk network (HRWN) to exploit the spatial consistency of land-cover over HSI and light detection and ranging (LiDAR) data. In [30], a three-stream CNN was designed to fuse HSI and LiDAR data, in which the multi-sensor composite kernels (MCK) scheme was employed for feature integration. Xu et al. [31] developed a dual-tunnel CNN and a cascaded network, named two-branch CNN, for feature extraction, and the multisource features were stacked for fusion. Liu et al. [32] improved the two-branch CNN through interclass sparsity-based discriminative least square regression (CS_DLSR), which encouraged feature discrimination among different land-cover classes. Zhang et al. [33] designed an encoder–decoder to construct the latent representation between multisource data, and then fused them for classification. In [34], a depth feature interaction network (DFINet) was developed for HSI and MSI classification in the Yellow River estuary wetland. In [35], a hierarchy-based classifier for urban vegetation classification was designed to incorporate canopy height features into spectral and textural data.

Despite the intense interest in multisource data classification, it remains a highly challenging problem. The primary challenges fall into two aspects: (1) Data quality needs to be improved. Higher spatial and spectral resolution is conducive to the extraction of texture and spectral features, which improves the final classification results. (2) Sequential features need to be exploited. The continuity of the spatial distribution and of the spectral curves enhances feature discrimination and classification performance.

To address the aforementioned challenges, a systematic framework for multisource remote sensing image processing is constructed. A typical CNN is used for data fusion, and then a spatial-spectral vision transformer (SSViT) is employed for land-cover mapping, which promotes biodiversity estimation. In stage 1, a CNN-based method is utilized to fuse the HSI and MSI over the Yellow River estuary wetland, so that both the spatial and the spectral resolution of the fused image are improved. In stage 2, the land-cover map of the fused image is generated by the SSViT, which exploits the sequential relationships of the spatial and spectral information separately. After that, an external attention module is adopted for feature integration. Finally, biodiversity estimation is achieved by combining land-cover mapping with benthic collection in the study area. Extensive experiments on the Yellow River estuary dataset, with comparisons against several related methods, reveal that the proposed framework provides competitive advantages in terms of data quality and classification accuracy.

The main contributions of the proposed method are summarized as follows:

A systematic framework including stage 1 (data fusion) and stage 2 (classification) is constructed for land-cover mapping, which serves as the precondition of biodiversity estimation. In fact, the relationship between land-cover and biomass is of utmost importance for remote sensing monitoring of biodiversity. In this paper, the coarse-scale biodiversity estimation of the Yellow River estuary dataset is indirectly achieved based on land-cover mapping.

The classification stage is crucial for information interpretation. To explore the spatial-spectral sequential features of the wetland, a spatial transformer and a spectral transformer, both with position embedding, are utilized to extract neighborhood correlation, which encourages discrimination between different classes. In addition, an external attention module is employed to enhance the spatial-spectral features. Unlike the self-attention module, the external attention module is optimized over the entire training set. Finally, pixel-wise prediction is achieved for land-cover mapping.
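To make the contrast with self-attention concrete, the following is a minimal sketch of the generic external attention formulation, in which each token attends to two small memory matrices shared across all samples instead of attending to the other tokens of the same sample. It is an illustration under stated assumptions, not the configuration used in this paper: the function name `external_attention`, the memory matrices `mem_k` and `mem_v`, the toy sizes, and the double normalization (softmax over tokens followed by an L1 normalization over memory slots) are all assumptions chosen for the example.

```python
import math


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m):
    """Return the transpose of a matrix given as a list of rows."""
    return [list(r) for r in zip(*m)]


def external_attention(features, mem_k, mem_v, eps=1e-9):
    """Sketch of external attention.

    features: N x d token features (e.g. spatial or spectral tokens).
    mem_k, mem_v: S x d memory matrices. In a trained model these would be
    learnable parameters shared by every sample (assumption of this sketch).
    Returns an N x d matrix of refined features.
    """
    scores = matmul(features, transpose(mem_k))  # N x S similarity to memory

    # Step 1: softmax over the token axis, i.e. within each memory column.
    soft_cols = []
    for col in transpose(scores):
        peak = max(col)
        exps = [math.exp(v - peak) for v in col]
        total = sum(exps)
        soft_cols.append([e / total for e in exps])
    attn = transpose(soft_cols)  # back to N x S

    # Step 2: L1-normalise each token's weights over the memory slots.
    normed = []
    for row in attn:
        row_sum = sum(row) + eps
        normed.append([v / row_sum for v in row])

    return matmul(normed, mem_v)  # N x d


# Toy example: 3 tokens, feature size 2, 2 memory slots (hypothetical values).
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
mem_k = [[1.0, 0.0], [0.0, 1.0]]
mem_v = [[1.0, 0.0], [0.0, 1.0]]
out = external_attention(features, mem_k, mem_v)
```

Because `mem_k` and `mem_v` do not depend on the input sample, gradient updates to them would accumulate information from every training sample, which is the property the text contrasts with self-attention.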

The rest of this paper is organized as follows: In Section 2, the study area and considered data are described. Section 3 illustrates the proposed method in detail. Section 4 presents the experimental results on the Yellow River estuary dataset to validate the proposed method, and then the biodiversity estimation is achieved. Finally, the conclusions are presented in Section 5.

2. Study Area and Data Description

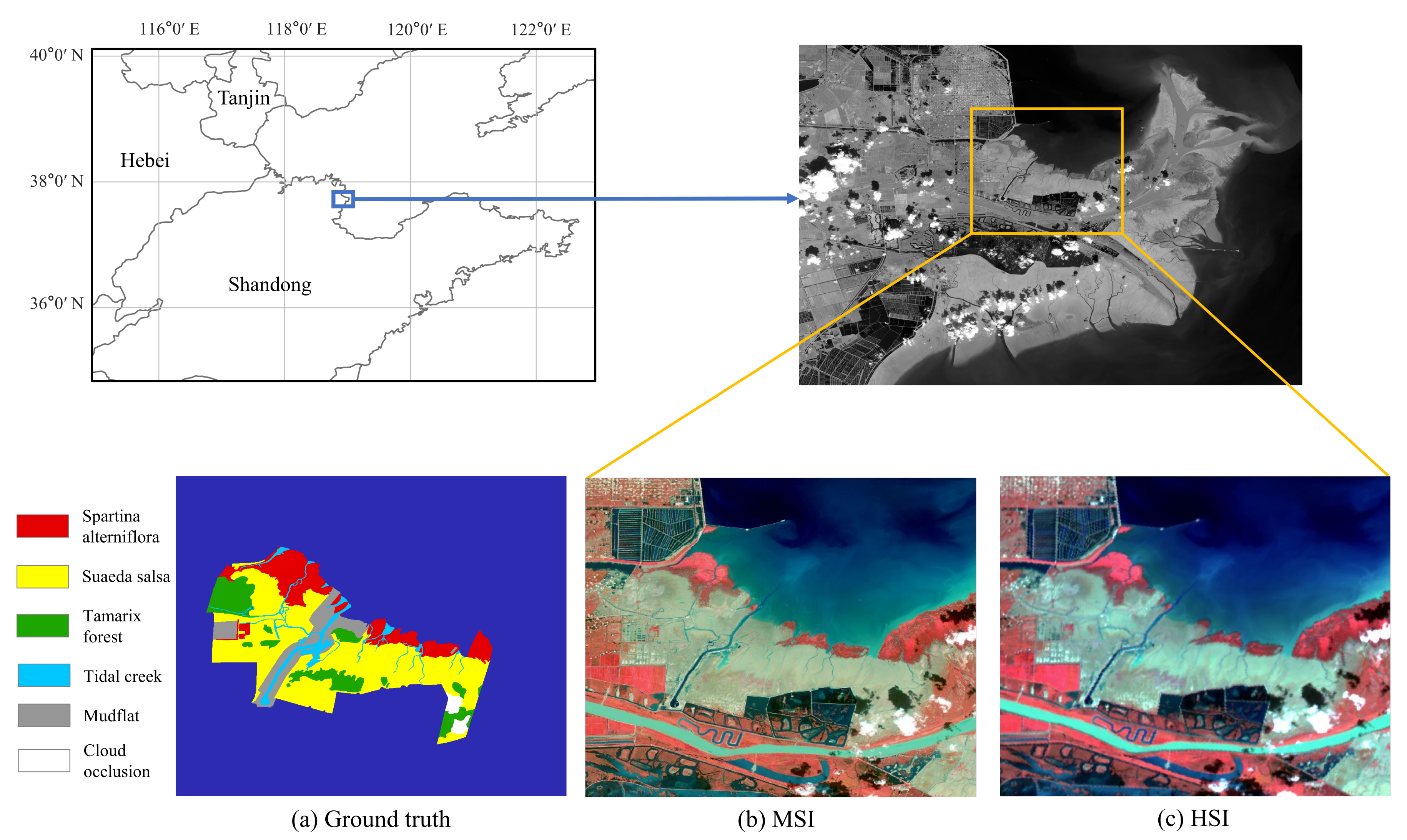

The Yellow River delta wetland is located in Shandong Province, China (– E, – N). It is an important ecological functional area in the Bohai Sea, and the estuarine wetland is a typical part of it [36]. In particular, the intertidal zone of the Yellow River estuary wetland supports rich salt marsh vegetation and benthos, and it plays an important role in biodiversity protection and ecological restoration. However, the Yellow River delta wetland faces reductions in natural area, biodiversity, and ecosystem service function.

Therefore, monitoring species composition and spatio-temporal distribution in the study area promotes biodiversity estimation and protection. As shown in Figure 1, the intertidal zone in the north of the Yellow River is selected as the study area, and 11 sites are arranged for benthos collection. The coordinates and actual landscape of the field sites are listed in Table 1.

In this paper, a mudflat quantitative sampler with a size of 0.25 m × 0.25 m × 0.3 m is utilized to collect intertidal biological samples, according to the sites and number of quadrats listed in Table 1. The size of a quadrat is 0.0625 m². Note that Bullacta exarata and Mactra veneriformis are usually located on the surface of the intertidal zone and are recorded directly through field observation. Some samples at Sites A2 and A3 are scoured by the tide during sampling, thus the remaining samples are recorded at the proportion of . All samples retained after elutriation are transferred to sample bottles. To obtain species composition and species density, the retained samples are further analyzed quantitatively under a stereomicroscope.
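The quadrat area follows directly from the sampler footprint (0.25 m × 0.25 m = 0.0625 m²), and counts can be converted to densities by dividing by the total sampled area. The sketch below illustrates that arithmetic only; the function name `species_density` and the counts are hypothetical and are not data from this study.

```python
# Quadrat footprint of the sampler described in the text: 0.25 m x 0.25 m.
QUADRAT_SIDE_M = 0.25
QUADRAT_AREA_M2 = QUADRAT_SIDE_M * QUADRAT_SIDE_M  # 0.0625 m^2


def species_density(counts, n_quadrats, quadrat_area_m2=QUADRAT_AREA_M2):
    """Convert pooled counts at one site into individuals per square metre."""
    if n_quadrats <= 0:
        raise ValueError("n_quadrats must be positive")
    sampled_area_m2 = n_quadrats * quadrat_area_m2
    return {species: count / sampled_area_m2 for species, count in counts.items()}


# Hypothetical counts pooled over 4 quadrats at one site (not measured data).
example_counts = {"Mactra veneriformis": 12, "Bullacta exarata": 3}
densities = species_density(example_counts, n_quadrats=4)
```

With four quadrats the sampled area is 0.25 m², so a pooled count of 12 individuals corresponds to 48 individuals per square metre.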

In addition, land-cover mapping is generated from multisource data including HSI and MSI, which were captured by the ZiYuan1-02D (ZY1-02D) satellite on 26 September 2020. The MSI includes eight MSS (multi-spectral scanner) bands with a 10 m ground sample distance (GSD). The HSI is obtained by an AHSI sensor with a spectral resolution of 10–20 nm. The parameters of the ZiYuan1-02D satellite are listed in Table 2. The multisource data are preprocessed by image registration, atmospheric correction, and radiometric calibration. To be specific, the HSI and MSI are transformed into the WGS 84 geographic coordinate system, and then the considered images are registered by the automatic registration tool in ENVI. The Fast Line-of-Sight Atmospheric Analysis of Spectral Hypercubes (FLAASH) module of ENVI is employed for atmospheric correction, and the radiometric calibration is carried out by using the gain and offset coefficients. The classification system is established based on the actual landscape listed in Table 1. Here, five classes are selected for land-cover classification, and the cloud occlusion (white area in Figure 1a) is eliminated. The benthic data collected at different sites are reported in Figure 2 and Table 3. The regions of interest are selected as the training set, in which the ground truth is annotated by experts with extensive field experience, as listed in Table 4. Note that the Tamarix forest class is mainly the distribution area of Tamarix chinensis, mixed with other vegetation such as Suaeda salsa and Phragmites australis.
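The radiometric calibration step mentioned above is commonly a per-band linear mapping from digital numbers (DN) to radiance, L = gain × DN + offset. The following is a minimal sketch of that mapping, assuming one gain and one offset per band; the function names and the coefficient values are hypothetical, and in practice the coefficients would be taken from the sensor metadata rather than from this example.

```python
def dn_to_radiance(dn_band, gain, offset):
    """Apply the linear calibration L = gain * DN + offset to one band."""
    return [[gain * dn + offset for dn in row] for row in dn_band]


def calibrate_cube(dn_cube, gains, offsets):
    """Calibrate a cube stored as a list of bands, each a list of pixel rows."""
    if not (len(dn_cube) == len(gains) == len(offsets)):
        raise ValueError("need exactly one gain and one offset per band")
    return [
        dn_to_radiance(band, gain, offset)
        for band, gain, offset in zip(dn_cube, gains, offsets)
    ]


# Hypothetical 2-band, 2 x 2 pixel cube and coefficients (not sensor values).
dn_cube = [
    [[10, 20], [30, 40]],
    [[4, 8], [12, 16]],
]
radiance = calibrate_cube(dn_cube, gains=[0.5, 0.25], offsets=[1.0, 0.0])
```

Keeping the coefficients as explicit per-band arguments makes it straightforward to apply the same routine to the MSI and the HSI, which have different numbers of bands.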